Comprehensive Data Integration and Web Services Project Report

Added on 2021/04/17

AI Summary

This project delves into the multifaceted realm of data integration, encompassing system and data integration processes, validation, mapping, and transformation of data from disparate sources. It explores RESTful web services, detailing their architecture, HTTP operations, and message formats. The project also covers mashups, explaining their role in integrating existing web components, along with data merging and cleaning techniques. Furthermore, it introduces the Python package PETL for ETL pipelines, providing code examples and running instructions for merging data and locating services using web services and Google Maps integration. The project concludes with a discussion of the system's scalability and the integration of information.

1. Introduction

System and data integration is a process that combines technical and business processes. It is used to validate, map, transform, and consolidate data from disparate sources into meaningful information. Integration also makes it easier to identify the systems involved and to carry out field mapping and gap analysis. To achieve an effective result, data must flow smoothly into the data warehouse, so validation, cleansing, and transformation are performed as part of the integration.

RESTful services

The REST architectural style is normally implemented over the HTTP protocol. A RESTful design is based on the following concepts.

Resources are identified by uniform resource identifiers (URIs), which usually follow a hierarchical structure. Every resource must have at least one URI.

Resources are read and manipulated through a uniform interface. The four basic HTTP operations are GET, POST, PUT, and DELETE. Other operations such as HEAD and OPTIONS are also supported and make it easy to retrieve metadata.

Messages are self-descriptive and can be represented in different formats such as HTML, JSON, or XML.

Interaction is stateless: the server does not maintain session data between requests. This means that each HTTP message must carry all the information needed to process it, for instance the resource name and the message itself.
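The uniform interface and stateless interaction described above can be sketched without any network code. The following is a minimal illustration only: the `handle` function, the in-memory `store`, and the `/clinics` URIs are hypothetical names chosen for this example, and the status codes follow common HTTP practice rather than anything specified in this report.

```python
def handle(store, method, uri, body=None):
    """Apply one self-contained request to the resource store.

    Every call carries the method, the URI and the body, so no session
    data has to be remembered between calls (stateless interaction).
    Returns a (status_code, payload) tuple.
    """
    if method == "GET":
        return (200, store[uri]) if uri in store else (404, None)
    if method == "HEAD":
        # Like GET, but only reports whether the resource exists.
        return (200 if uri in store else 404, None)
    if method == "PUT":
        # Create or replace the resource at exactly this URI.
        status = 200 if uri in store else 201
        store[uri] = body
        return status, body
    if method == "POST":
        # Create a new resource under a collection URI.
        next_id = 1
        while "{}/{}".format(uri, next_id) in store:
            next_id += 1
        new_uri = "{}/{}".format(uri, next_id)
        store[new_uri] = body
        return 201, new_uri
    if method == "DELETE":
        if uri in store:
            del store[uri]
            return 204, None
        return 404, None
    if method == "OPTIONS":
        return 200, "GET, HEAD, POST, PUT, DELETE, OPTIONS"
    return 405, None  # method not allowed


store = {}  # hypothetical in-memory resources, keyed by URI
created = handle(store, "POST", "/clinics", {"name": "North Clinic"})
fetched = handle(store, "GET", "/clinics/1")
updated = handle(store, "PUT", "/clinics/1", {"name": "North Clinic", "open": True})
deleted = handle(store, "DELETE", "/clinics/1")
missing = handle(store, "GET", "/clinics/1")
```

The same small set of operations works for every resource, which is the point of the uniform interface: a client only needs to know the URI and the HTTP operation.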

Mashups and tools

A mashup application integrates two or more existing components that are available on the web. The components can take different forms, such as data, application logic, or user interfaces. Each of these individual building blocks is known as a mashup component.


The mechanism that ties the components together is known as the mashup logic. The mashup logic describes how the mashup operates and how its components are composed, that is, which operators are applied between the different components. Control flow, data flow, and data mediation are the types of operations performed by mashup tools.

Several mashup tools are available:

Node-Red

Glue.things

WoTKit

T/WoT

2. Data merging and cleaning

Data merging is the merging of multiple databases of information. Large commercial and government organizations frequently hold information about common entities in several databases, so the merged data contains duplicate records for the same entity. This is known as the merge/purge problem. The data is complex, and the matching process is domain dependent.

Solving the merge/purge problem accomplishes the data cleaning task. Customers apply it directly in marketing-type applications. The final result provides statistical details about the data, so it needs to be accurate and effective.

The results of the individual, independent runs are combined using transitive closure, which produces a more accurate result at lower cost. The system provides a rule programming module that makes it easy to find and locate duplicates. The application works on large amounts of data, and real-world databases are merged in this way.
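Combining independent results by transitive closure can be sketched as follows. This is an illustration under stated assumptions, not the project's code: each matching pass is assumed to return pairs of record IDs that it considers duplicates, the record IDs and pass names are hypothetical, and a union-find structure is used here as one common way to compute the closure.

```python
def transitive_closure(pairs):
    """Group record IDs into clusters of duplicates.

    If one pass matched (1, 2) and another matched (2, 3), the closure
    places records 1, 2 and 3 in the same cluster.
    """
    parent = {}

    def find(record):
        # Follow parent links to the cluster representative,
        # shortening the path on the way.
        parent.setdefault(record, record)
        while parent[record] != record:
            parent[record] = parent[parent[record]]
            record = parent[record]
        return record

    for left, right in pairs:
        root_left, root_right = find(left), find(right)
        if root_left != root_right:
            parent[root_right] = root_left  # merge the two clusters

    clusters = {}
    for record in parent:
        clusters.setdefault(find(record), set()).add(record)
    return sorted(sorted(cluster) for cluster in clusters.values())


# Hypothetical output of two independent matching passes.
pass_by_name = [(1, 2), (4, 5)]
pass_by_address = [(2, 3)]
clusters = transitive_closure(pass_by_name + pass_by_address)
```

Neither pass alone links record 1 to record 3, but the combined result does, which is why merging independent passes can find more duplicates than any single pass.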

petl Python package

petl is available from the Python Package Index and can be installed with pip:

$ pip install petl

Alternatively, download the package manually, extract it, and run the following command:


$ python setup.py install

To verify the installation, run the test suite with nose:

$ pip install nose

$ nosetests -v petl

We used Python versions 2.7 and 3.4, on UNIX and Windows operating systems.

ETL pipelines

This package makes extensive use of lazy evaluation and iterators. As a result, a pipeline is not actually executed until its data is required.

For instance:

>>> # Write a small CSV file to use as input for a pipeline
>>> example_data = """foo,bar,baz
... a,1,3.4
... b,2,7.4
... c,6,2.2
... d,9,8.1
... """
>>> with open('example.csv', 'w') as f:
...     f.write(example_data)
...

The pipeline runs only when a function such as petl.util.vis.look() is called, or when the data is written to a file or a database. Examples of functions that write the data are:

petl.io.csv.tocsv()

petl.io.db.todb()


Data extraction and transformation work on table containers and table iterators. No actual transformation is done until data is requested from a table; once it is requested, all the transformations run as the rows pass through the pipeline.
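The lazy behaviour described above can be illustrated with plain Python generators. This sketch deliberately does not use petl, so that it runs without third-party packages; the stage names `extract`, `convert`, and `select` and the `log` list are hypothetical and only show when each step actually executes.

```python
import csv
import io

log = []  # records the moment each stage really processes a row


def extract(text):
    """Yield rows from CSV text one at a time."""
    for row in csv.DictReader(io.StringIO(text)):
        log.append("extract " + row["foo"])
        yield row


def convert(rows):
    """Convert the numeric columns from strings to numbers."""
    for row in rows:
        log.append("convert " + row["foo"])
        yield {"foo": row["foo"], "bar": int(row["bar"]), "baz": float(row["baz"])}


def select(rows, minimum):
    """Keep only rows whose 'bar' value is at least the minimum."""
    for row in rows:
        if row["bar"] >= minimum:
            yield row


example_data = "foo,bar,baz\na,1,3.4\nb,2,7.4\nc,6,2.2\nd,9,8.1\n"

# Building the pipeline does no work yet.
pipeline = select(convert(extract(example_data)), minimum=2)
steps_before = list(log)

# Requesting one row pulls just enough input through every stage.
first = next(pipeline)
steps_after_first = list(log)

# Requesting the remaining rows finishes the work.
rest = list(pipeline)
```

Here `steps_before` is empty, and after the first request only rows `a` and `b` have passed through the stages. petl applies the same idea to its table containers, although its internal implementation is not shown here.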

3. RESTful web services

REST stands for representational state transfer. It is an architectural style defined by constraints such as a uniform interface, and it favours scalability, which is why it is so widely used for web services. REST builds on web standards and is used for data communication.

In this architecture, a REST server provides access to resources. Each resource is identified by a uniform resource locator (URL), and the server returns a representation of it, for example as text, HTML, or XML. The HTTP methods used most often are GET, PUT, POST, and DELETE. A web service in general is a set of protocols and standards used for data communication; a RESTful web service is a web service based on the REST architecture.

The content that the service makes available to clients over the web is called a resource, and resources are comparable to objects in object-oriented programming. A resource representation should have three properties. Understandability: both the client and the server can understand the representation and work out what kind of resource it describes. Completeness: the representation describes the resource completely and can be accessed by others. Linkability: a resource can link to other resources. Whatever the resource, the service finally delivers it in a format such as XML.

RESTful web services use HTTP for data communication, so every exchange is an HTTP request followed by an HTTP response. A request needs a verb, a URL, a header, and a body. A response needs the HTTP version, a status, a header, and a body.

RESTful web services can be used by web applications written in different programming languages and running on different platforms, such as Windows and Linux, which gives these applications the flexibility to communicate with each other easily.

Addresses in RESTful web services should use plural nouns, use lowercase letters, avoid spaces, and maintain backward compatibility.
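The parts of an HTTP request and response, and two of the addressing conventions, can be sketched as below. This is a simplified illustration rather than a full HTTP parser: the function names, the `/clinics/1` address, and the sample messages are assumptions made for the example, and the convention check covers only lowercase letters and spaces, since whether a noun is plural cannot be decided by a pattern.

```python
import re


def split_message(raw):
    """Split a raw HTTP message into start line, headers and body."""
    head, _, body = raw.partition("\r\n\r\n")
    lines = head.split("\r\n")
    headers = dict(line.split(": ", 1) for line in lines[1:])
    return lines[0], headers, body


def parse_request(raw):
    start_line, headers, body = split_message(raw)
    verb, uri, version = start_line.split(" ", 2)
    return {"verb": verb, "uri": uri, "version": version,
            "headers": headers, "body": body}


def parse_response(raw):
    start_line, headers, body = split_message(raw)
    version, status, reason = start_line.split(" ", 2)
    return {"version": version, "status": int(status), "reason": reason,
            "headers": headers, "body": body}


def follows_uri_conventions(path):
    """Check for lowercase path segments without spaces."""
    return re.fullmatch(r"(/[a-z0-9-]+)+", path) is not None


payload = "<clinic><name>North Clinic</name></clinic>"
request = parse_request(
    "PUT /clinics/1 HTTP/1.1\r\n"
    "Host: example.org\r\n"
    "Content-Type: application/xml\r\n"
    "\r\n" + payload
)
response = parse_response(
    "HTTP/1.1 200 OK\r\n"
    "Content-Type: application/xml\r\n"
    "\r\n" + payload
)
```

In practice an HTTP library does this parsing; the sketch only makes the message parts listed above visible.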


4. Mashups

A mashup is an application development approach that combines multiple existing services so that users can create a new kind of application. To develop a mashup, the user needs to know how to use the various web services rather than how to write code in a programming language. This requires tools that support building mashup applications, and in data integration the main aim is to recognize the strengths and weaknesses of each mashup tool.

Presentation mashups use technologies such as gadgets, widgets, and JavaScript, supporting languages such as CSS and HTML, and interfaces such as ActiveX controls and Java applets.

Data mashups combine data from several web sites and pages, using formats such as XML and JSON. Data-oriented services of this kind are also called data as a service, and they provide application programming interfaces and components. Data mashups are either in-process or out-of-process. In-process data mashups use technologies such as JavaScript and Ajax, and cover both retrieving data from servers and configuring the user interface. Out-of-process data mashups use technologies such as Java and Python, and are used to create new data models.

Process mashups combine functions written in programming languages such as Python and Java. The functions exchange data through inter-process communication, and the result differs for each data-oriented process.
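An out-of-process data mashup of the kind described above can be sketched in a few lines of Python. The two inputs stand in for responses from two separate web services; the field names, clinic names, and coordinates are invented for this example and are not taken from the project's data.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical response of a first service, in JSON.
clinics_json = (
    '[{"id": "c1", "name": "North Clinic"},'
    ' {"id": "c2", "name": "South Clinic"}]'
)

# Hypothetical response of a second service, in XML.
locations_xml = """<locations>
  <location clinic="c1"><lat>-37.80</lat><lon>144.96</lon></location>
  <location clinic="c2"><lat>-37.85</lat><lon>145.00</lon></location>
</locations>"""


def mash_up(json_text, xml_text):
    """Combine both sources into one new data model, keyed by clinic ID."""
    positions = {}
    for location in ET.fromstring(xml_text).findall("location"):
        positions[location.get("clinic")] = (
            float(location.findtext("lat")),
            float(location.findtext("lon")),
        )

    combined = []
    for clinic in json.loads(json_text):
        # Clinics without a known position keep empty coordinates.
        lat, lon = positions.get(clinic["id"], (None, None))
        combined.append({"name": clinic["name"], "lat": lat, "lon": lon})
    return combined


merged = mash_up(clinics_json, locations_xml)
```

The result is a list of records that neither service offers on its own, which is the new data model that a data mashup is meant to produce.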

5. Demo Running Instruction:

Combine the two files.

Code explanation:

The program saved as “dataMerger.py” merges the given content. The modules it needs are brought in with the “import” keyword, and every attribute in the data is used to build the tree. On the web service side, the Python program “clinics_locator.py” is used to search for and locate an address in the nearest tab.


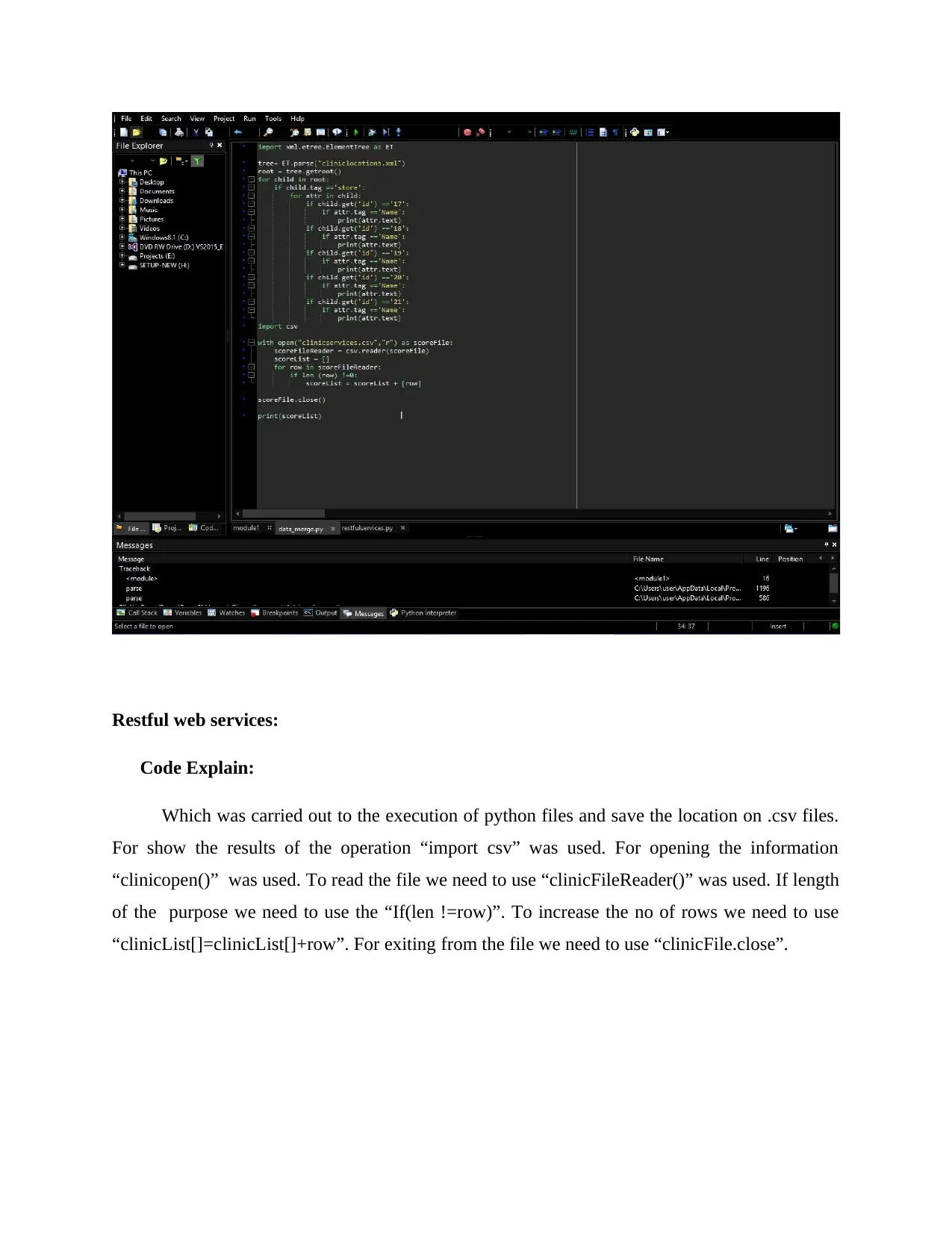

RESTful web services:

Code explanation:

This code is run as a Python file and saves the locations in .csv files. The csv module is loaded with “import csv” so that the results can be handled. The file is opened with “clinicopen()” and read with “clinicFileReader()”. The length of each row is checked with “If(len !=row)”, each row is added to the list with “clinicList[]=clinicList[]+row”, and the file is closed with “clinicFile.close”.
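The steps listed above (open the file, read it with a CSV reader, check the length of each row, add the row to a list, close the file) can be sketched as follows. This is not the project's actual code, which is only shown as a screenshot; the function name, the column names, and the sample rows are assumptions made for illustration.

```python
import csv
import io


def load_clinics(csv_file, expected_columns):
    """Read clinic rows from an open CSV file into a list of dicts.

    Rows whose length differs from the expected column count are skipped.
    """
    reader = csv.reader(csv_file)
    header = next(reader, None)
    if header is None:
        return []  # empty file

    clinic_list = []
    for row in reader:
        if len(row) != expected_columns:
            continue  # malformed row
        clinic_list.append(dict(zip(header, row)))
    return clinic_list


# In-memory sample standing in for a clinics .csv file.
sample = io.StringIO(
    "name,address\n"
    "North Clinic,1 High St\n"
    "broken row\n"
    "South Clinic,9 Low Rd\n"
)
clinics = load_clinics(sample, expected_columns=2)
```

With a real file, opening it in a `with open(...)` block closes it automatically once the rows have been read, so no explicit close call is needed.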

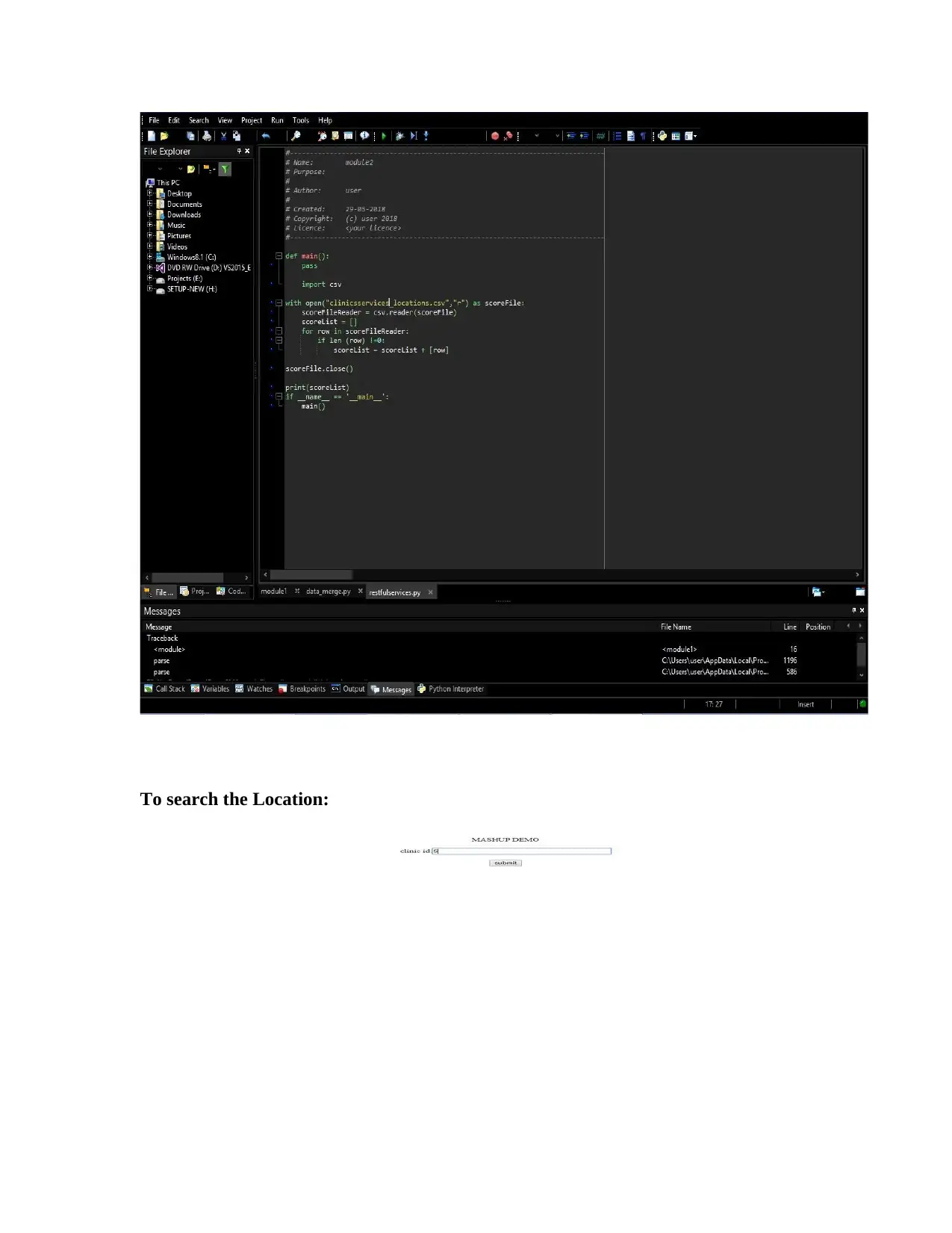


To search the Location:


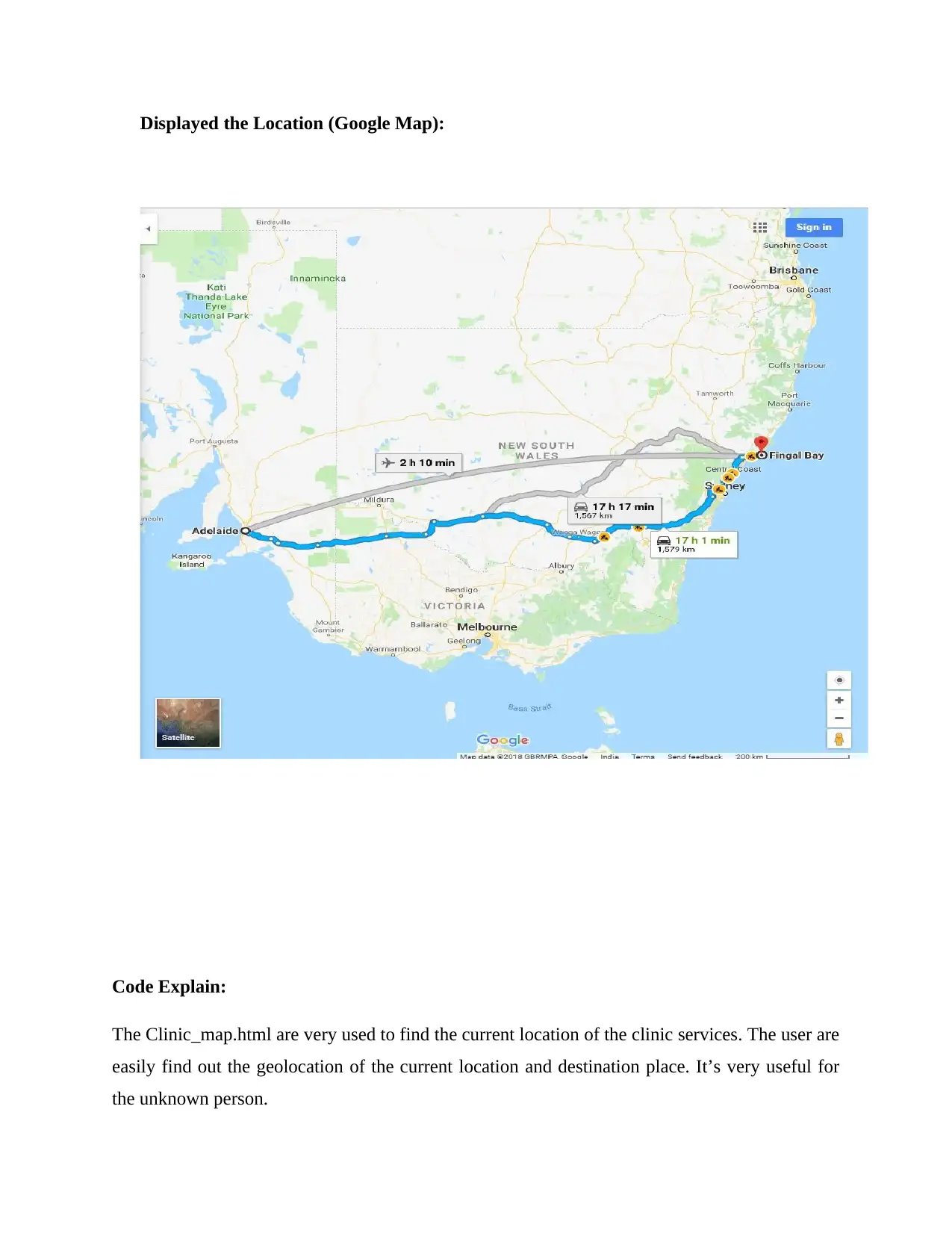

Displayed the Location (Google Map):

Code explanation:

The “Clinic_map.html” page is used to find the location of the clinic services. Users can easily find the geolocation of both their current location and the destination, which is very useful for someone who does not know the area.


Conclusion:

The positions of the stores on the map were identified. The IT structure is mainly used to access the data centers, and its functionality depends on the type of infrastructure. Growth of the process is slow and non-dynamic. Techniques were used to compute the responsibilities of the system. By integrating the various data sources, the required final data was recovered. Scalability of the system was ensured through virtualization techniques. Finally, the information was integrated and the demonstrations were performed.



