
11/27/2018

Automated User Testing Using Machine Learning

Thesis Paper


ABSTRACT

Testing procedures, originally manual, have evolved over time. At first, all test cases were written by hand and executed sequentially, and there was little scope to modify them when the program under test changed. Tools and procedures then emerged that let developers test their programs more efficiently and precisely. These tools recorded procedures, captured test cases and their results, and maintained logs and summaries, including every point of failure and exit.

As software advanced worldwide, fuzzy logic entered day-to-day operations, and critical programs grew more complex, a need arose for dynamic test procedures that could adjust to changing procedures and adapt to new logic. Once this need for dynamic change was recognized, such test cases were programmed effectively. Applying machine learning to automated test cases gives highly innovative technology products an edge, allowing them to be tested effectively while reducing floor time.

The methodology uses simple test cases to compare how manually adapted test cases and automated user tests cope with a changing programming environment. The results indicate that adaptive test cases are exceptionally accurate and help reduce the effort of handling multiple scenarios and changing parameters and variables in software. The adaptive functionality of machine learning is a boon in today's software development.


TABLE OF CONTENTS

ABSTRACT

INTRODUCTION

PROCEDURE

RESULTS

DISCUSSION

CONCLUSION

RECOMMENDATION

ACKNOWLEDGEMENTS


INTRODUCTION

The purpose of this thesis is to test the adaptability of machine learning algorithms that claim to make test cases flexible when performing user testing in complex software scenarios. Hard-bound, inflexible and brittle manual test cases perform very poorly, and the manual cost of writing and modifying them is very high. Such manual testing is limited in scope to cases where the code changes needed to achieve a functionality do not require dynamic input, which in turn may not be form specific.

Most user test cases are drawn up by hand and are brittle. If something goes wrong in a procedural test framework, whether a tool or a manual test case, the remaining segment of the test has to be dropped or certain testing steps skipped, which ultimately leaves the accuracy of the testing questionable. Because manual testing offers no way to check whether a test is performing effectively until it has been executed completely, manual test procedures cannot provide intermediate results.
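To make the contrast concrete, here is a minimal Python sketch of a sequential test run that either aborts at the first failure or logs every step's intermediate result and carries on. It is not the framework used in this thesis: `run_steps`, the `Step` type and the example checks are hypothetical, and the continue-on-failure mode is the generic soft-assertion pattern.

```python
from typing import Callable, List, Tuple

# A test step is a (name, check) pair; check() returns True on success.
Step = Tuple[str, Callable[[], bool]]


def run_steps(steps: List[Step], stop_on_failure: bool) -> List[Tuple[str, str]]:
    """Run test steps in order and log an intermediate result for each.

    With stop_on_failure=True the run behaves like a brittle sequential
    script: the first failure drops every remaining step. With False, each
    failure is logged and the run continues (the soft-assertion pattern).
    """
    log: List[Tuple[str, str]] = []
    for name, check in steps:
        try:
            ok = check()
        except Exception as exc:  # an unexpected error counts as a failure
            ok = False
            name = f"{name} ({type(exc).__name__})"
        log.append((name, "pass" if ok else "fail"))
        if not ok and stop_on_failure:
            # Record the dropped steps so the gap in coverage stays visible.
            log.extend((n, "skipped") for n, _ in steps[len(log):])
            break
    return log


# Hypothetical steps checking a scalene input against an isosceles test.
sides = (3, 4, 5)
steps: List[Step] = [
    ("sides are positive", lambda: all(s > 0 for s in sides)),
    ("two sides equal", lambda: len(set(sides)) <= 2),  # fails for (3, 4, 5)
    ("result logged", lambda: True),
]
print(run_steps(steps, stop_on_failure=True))
# → [('sides are positive', 'pass'), ('two sides equal', 'fail'), ('result logged', 'skipped')]
print(run_steps(steps, stop_on_failure=False))
# → [('sides are positive', 'pass'), ('two sides equal', 'fail'), ('result logged', 'pass')]
```

The aborting mode reproduces the problem described above (later steps never run), while the logging mode still yields a result for every step.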

Introducing machine learning and artificial intelligence systems into automated testing has been a breakthrough, enabling adaptable, dynamically changing, fuzzy-logic-driven algorithmic approaches. This case study tests the effectiveness of automated, machine-learning-driven algorithms against manually driven test procedures. The acceptance criterion is whether the algorithms adjust to the test case through intermediate, log-based modifications when the required input parameters do not match exactly.

PROCEDURE

The problem is a simple one: determine whether the three sides given as input form an isosceles triangle. This can be done through black box testing, in which the internal code is not known to the tester.
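A black box test of this problem only feeds inputs to the program and compares its outputs with expected values. The sketch below assumes a hypothetical Python function `is_isosceles(a, b, c)` as the program under test, and assumes a specification in which all sides must be positive, must satisfy the triangle inequality, and at least two must be equal (so an equilateral triangle counts as isosceles). The thesis does not spell out these rules, so the expected values in `CASES` are illustrative.

```python
from typing import Callable, List, Tuple

Sides = Tuple[float, float, float]


def is_isosceles(a: float, b: float, c: float) -> bool:
    """Hypothetical program under test; the tester never reads this body."""
    if min(a, b, c) <= 0:
        return False
    x, y, z = sorted((a, b, c))
    if x + y <= z:  # degenerate or impossible triangle
        return False
    return a == b or b == c or a == c


# Black box cases: (input sides, expected output), derived from the assumed
# specification rather than from the code.
CASES: List[Tuple[Sides, bool]] = [
    ((3, 3, 5), True),     # two equal sides
    ((5, 3, 3), True),     # same triangle, different order
    ((4, 4, 4), True),     # equilateral counts as isosceles
    ((3, 4, 5), False),    # scalene
    ((1, 1, 3), False),    # violates the triangle inequality
    ((0, 0, 1), False),    # zero-length sides
    ((-2, -2, 1), False),  # negative sides
]


def run_black_box(program: Callable[[float, float, float], bool]) -> List[Sides]:
    """Return the inputs whose observed output differs from the expected one."""
    return [sides for sides, expected in CASES if program(*sides) != expected]


print(run_black_box(is_isosceles))  # → []
```

Because `run_black_box` accepts any callable, the same table can be pointed at a different implementation without changing a single test case.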


Manual Test Procedure:

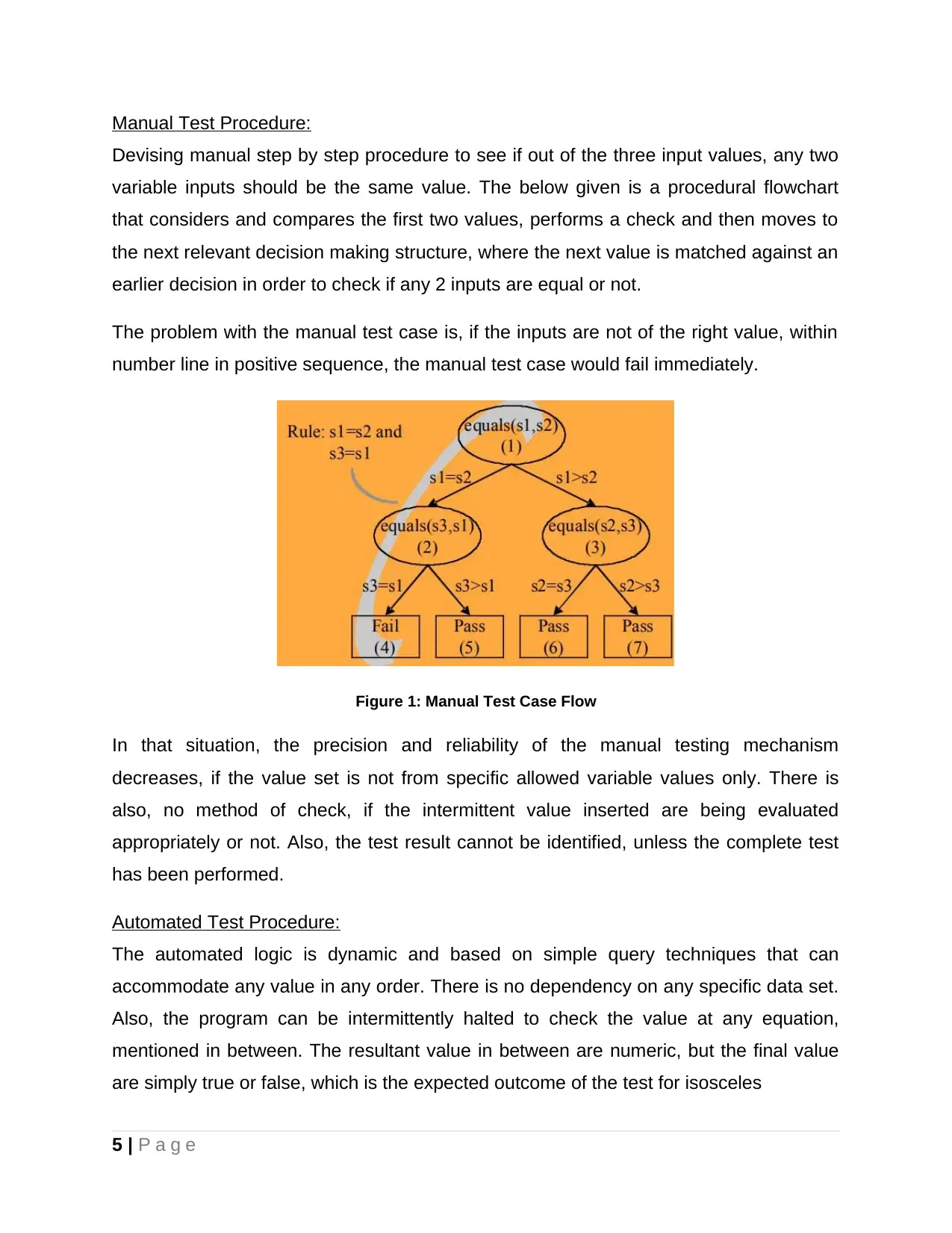

Devising manual step by step procedure to see if out of the three input values, any two

variable inputs should be the same value. The below given is a procedural flowchart

that considers and compares the first two values, performs a check and then moves to

the next relevant decision making structure, where the next value is matched against an

earlier decision in order to check if any 2 inputs are equal or not.

The problem with the manual test case is, if the inputs are not of the right value, within

number line in positive sequence, the manual test case would fail immediately.

Figure 1: Manual Test Case Flow

In that situation, the precision and reliability of the manual testing mechanism decrease whenever the value set is not restricted to specific allowed values. There is also no way to check whether the intermediate values entered are being evaluated correctly, and the test result cannot be determined until the complete test has been performed.
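The decision sequence described for Figure 1 can be read as three chained comparisons. The function below is a hypothetical transcription of that reading, not the thesis's own artifact; like the described flow, it performs no input validation, which illustrates how the check loses precision once inputs fall outside the allowed values.

```python
def manual_flow_is_isosceles(a: float, b: float, c: float) -> bool:
    """One possible reading of the manual flowchart, one `if` per decision box.

    No input validation is done, mirroring the flow as described in the text.
    """
    # Decision 1: compare the first two values.
    if a == b:
        return True
    # Decision 2: match the third value against the first.
    if c == a:
        return True
    # Decision 3: match the third value against the second.
    if c == b:
        return True
    return False


print(manual_flow_is_isosceles(3, 3, 5))  # → True
print(manual_flow_is_isosceles(3, 4, 5))  # → False
# Invalid input the flow does not reject: zero-length sides still "pass".
print(manual_flow_is_isosceles(0, 0, 1))  # → True
```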

Automated Test Procedure:

The automated logic is dynamic and based on simple query techniques that can accommodate any value in any order, with no dependency on a specific data set. The program can also be halted at intermediate points to check the value of any of the equations along the way. The intermediate values are numeric, but the final value is simply true or false, which is the expected outcome of the isosceles test.


Figure 2: Automated Code For Testing
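Since the code in Figure 2 is not reproduced in the text, the sketch below only illustrates the properties claimed for it: it accepts the sides in any order, exposes numeric intermediate values that can be inspected at any point, and returns a single boolean. It is a plain rule-based check, not a machine learning model; the function name `isosceles_with_trace`, the trace keys, and the positivity and triangle-inequality rules are assumptions.

```python
from itertools import permutations
from typing import Dict, Tuple


def isosceles_with_trace(a: float, b: float, c: float) -> Tuple[bool, Dict[str, float]]:
    """Order-independent isosceles check that records its intermediate values.

    Every intermediate value is numeric; only the final result is a boolean.
    """
    x, y, z = sorted((a, b, c))  # sorting removes any dependence on input order
    trace: Dict[str, float] = {
        "min_side": x,
        "triangle_slack": x + y - z,       # must be > 0 for a real triangle
        "distinct_sides": len({a, b, c}),  # 1 or 2 means at least two sides match
    }
    # Assumed rules (not stated in the thesis): positive sides, strict
    # triangle inequality, and at least two equal sides.
    result = (
        trace["min_side"] > 0
        and trace["triangle_slack"] > 0
        and trace["distinct_sides"] <= 2
    )
    return result, trace


# Every ordering of the same three sides gives the same verdict.
verdicts = {isosceles_with_trace(*p)[0] for p in permutations((5, 3, 3))}
print(verdicts)  # → {True}

# The trace can be inspected mid-way, e.g. for a scalene triangle.
ok, trace = isosceles_with_trace(3, 4, 5)
print(ok, trace["distinct_sides"], trace["triangle_slack"])  # → False 3 2
```

Sorting once up front is what makes the check independent of input order; the trace dictionary plays the role of the intermediate values that, per the text, can be examined before the final true/false result.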

RESULTS

The input values and output responses were compared and analyzed. The capability statistics and performance statistics obtained show that the automated tests driven by machine learning perform far better, reaching the scores achieved by the manual test procedures in just one or two iterations. The quality of the outcome is significantly better for the machine-learning-driven tests than for the manual test procedures.

DISCUSSION

Capability statistics measure the potential of a process to achieve a desired target and so meet the stated specifications. Performance statistics demonstrate the overall performance actually achieved when executing a test run, whether manual or automated. Together, the capability index (Cp) and the performance index (Pp) of the results show how closely the outcomes follow the expected logic and how far they deviate from the expected outcome.
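The thesis does not state how its Cp and Pp values were computed. The sketch below uses the conventional process-capability definitions, Cp = (USL - LSL) / (6 * sigma_within) and Pp = (USL - LSL) / (6 * sigma_overall), estimating sigma_within as the pooled subgroup standard deviation (one of several common estimators). The specification limits and measurements are invented solely to exercise the functions; they are not results from this study.

```python
import statistics
from typing import Sequence


def pooled_within_sd(subgroups: Sequence[Sequence[float]]) -> float:
    """Pooled within-subgroup standard deviation (short-term variation)."""
    numerator = sum((len(g) - 1) * statistics.variance(g) for g in subgroups)
    dof = sum(len(g) - 1 for g in subgroups)
    return (numerator / dof) ** 0.5


def cp(subgroups: Sequence[Sequence[float]], lsl: float, usl: float) -> float:
    """Capability index: specification width over six within-subgroup SDs."""
    return (usl - lsl) / (6 * pooled_within_sd(subgroups))


def pp(subgroups: Sequence[Sequence[float]], lsl: float, usl: float) -> float:
    """Performance index: specification width over six overall SDs."""
    all_values = [x for g in subgroups for x in g]
    return (usl - lsl) / (6 * statistics.stdev(all_values))


# Illustrative numbers only: two subgroups of three runs, limits 9 to 11.
runs = [[9.8, 10.0, 10.2], [10.1, 10.3, 10.5]]
print(round(cp(runs, 9, 11), 3), round(pp(runs, 9, 11), 3))  # → 1.667 1.372
```

Here Pp comes out lower than Cp because the shift between the two subgroups adds to the overall variation but not to the within-subgroup variation, which is exactly the distinction between potential and observed performance drawn above.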

CONCLUSION

The results clearly indicate that machine-driven test cases are adaptable and reliable, and that they save the manual effort of changing test procedures. Dynamic test cases created from modified fuzzy logic help perform black box testing with precision and accuracy.

RECOMMENDATION

It is important to understand the logic and complexities involved before deploying a machine learning concept. These machine learning upgrades come with certain costs, yet they have a significant impact on standardized testing, because a close resemblance to the expected input, rather than an exact hit, is enough to test a specific program. They are independent of input value sets and enable a better understanding. Yet manual intervention remains a basic necessity, even in these highly logical domains.


ACKNOWLEDGEMENTS




TABLE OF FIGURES

Figure 1: Manual Test Case Flow

Figure 2: Automated Code For Testing
