IRIS Dataset Classification Using KNN Algorithm: A Practical Approach

VerifiedAdded on 2023/04/06

|3

|500

|376

Homework Assignment

AI Summary

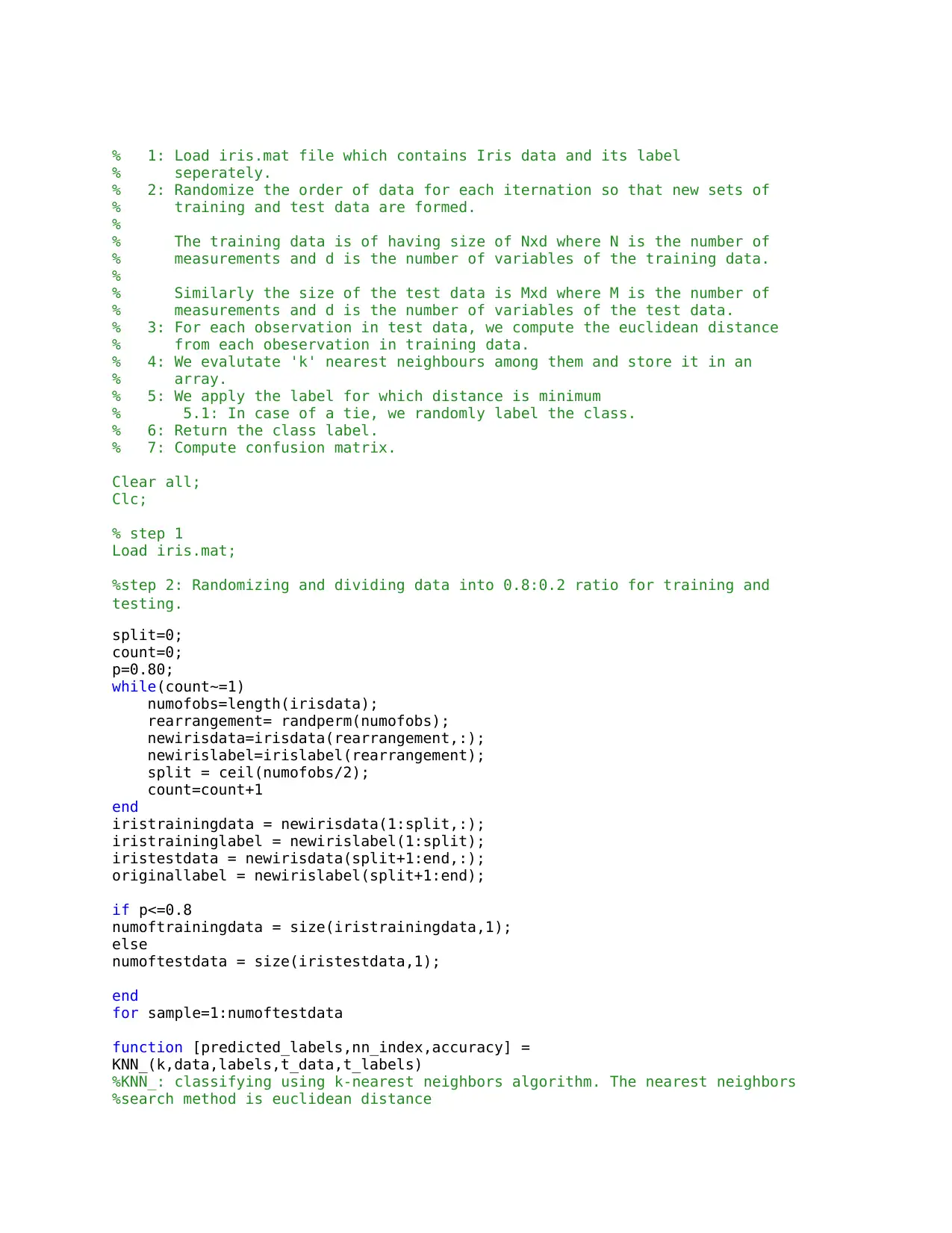

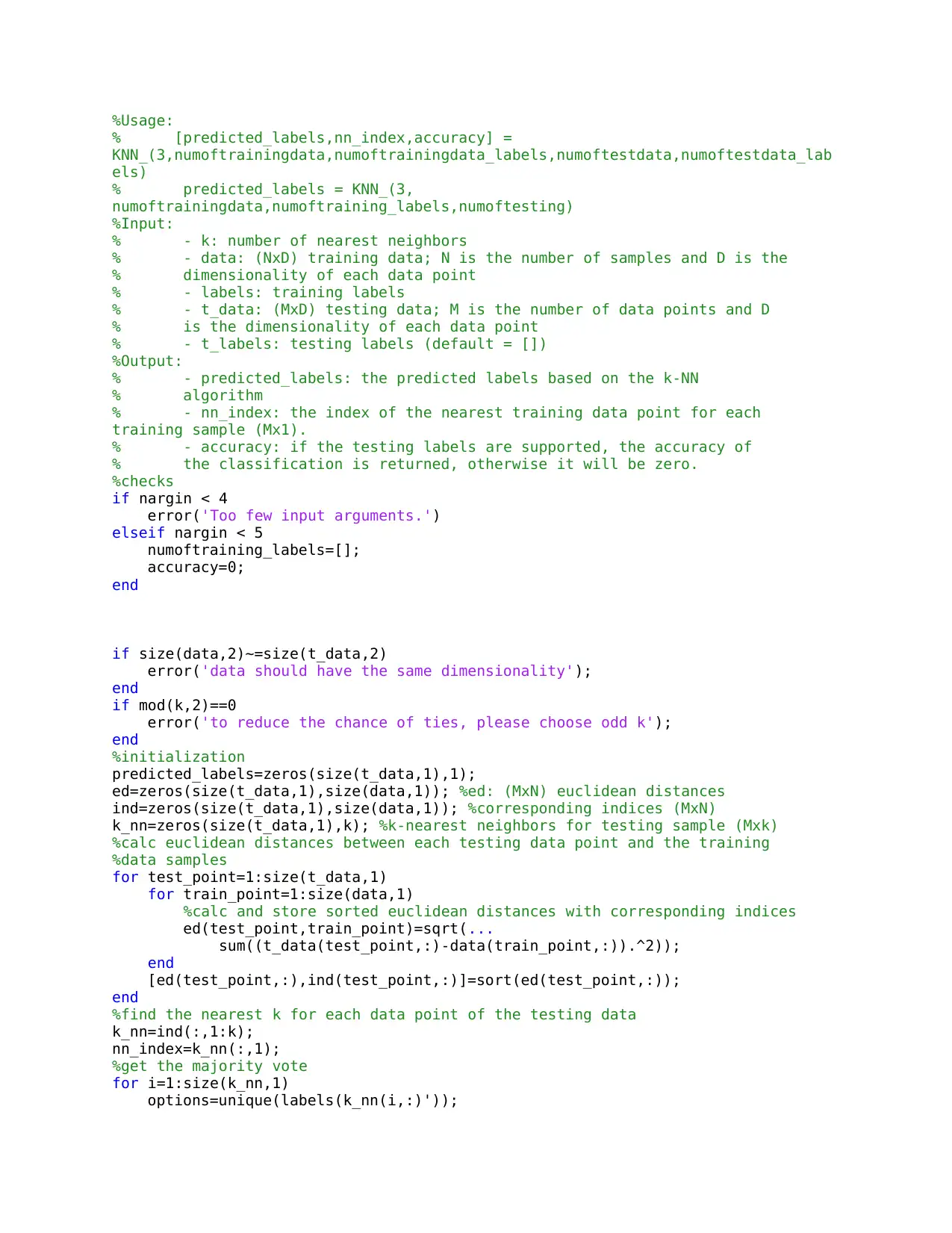

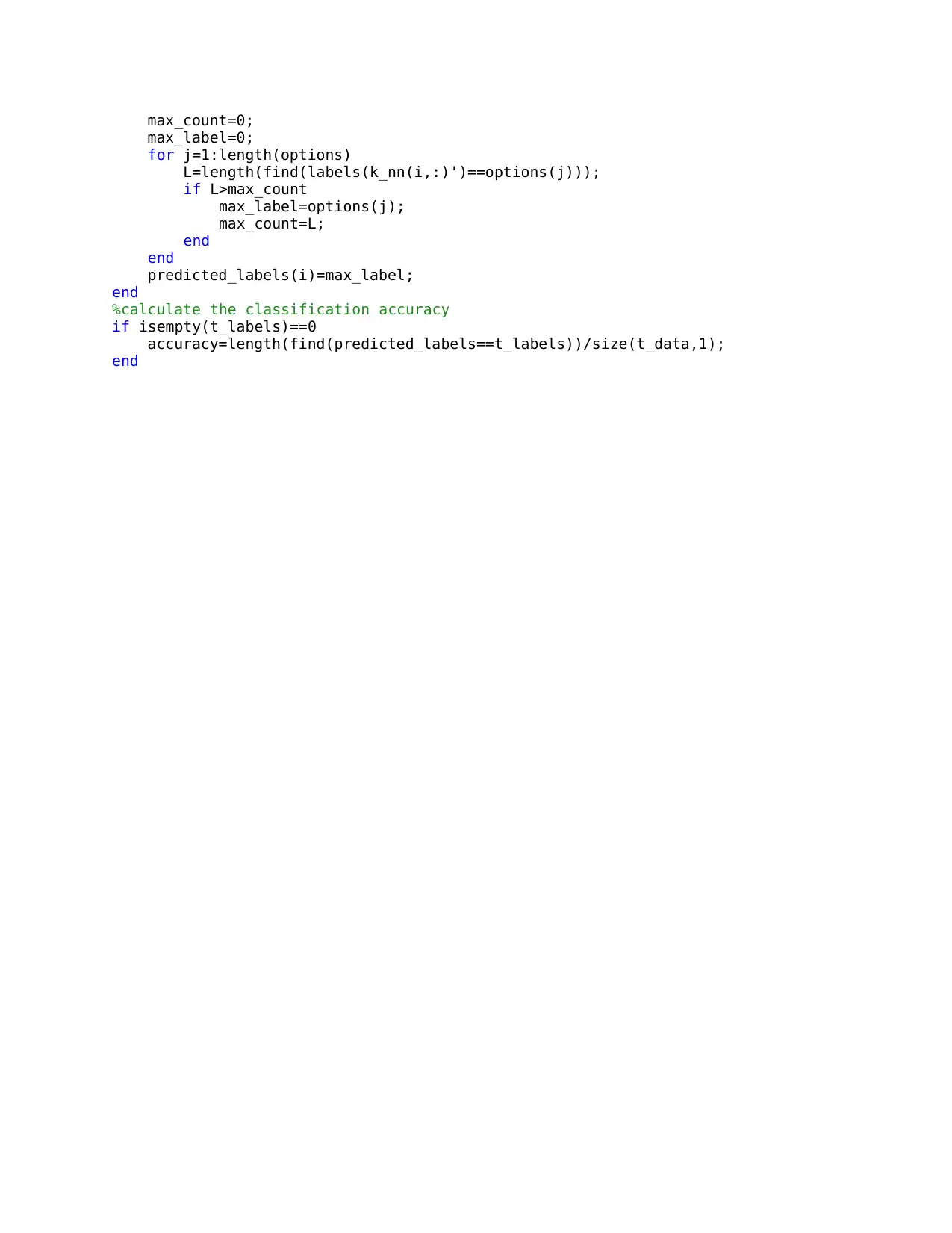

This assignment focuses on implementing a K-Nearest Neighbors (KNN) classifier for the IRIS dataset. The solution involves loading the IRIS dataset, randomizing the data order, and splitting it into training and testing sets. The Euclidean distance is computed between each test data point and all training data points. The 'k' nearest neighbors are identified, and a class label is assigned based on the majority vote among these neighbors, with ties resolved randomly. The code provides a function to perform KNN classification, calculate classification accuracy, and generate a confusion matrix. The assignment includes a detailed explanation of the KNN algorithm, the code implementation, and the steps involved in data preparation, distance calculation, and label prediction. The aim is to classify the IRIS dataset using KNN with different parameter settings and evaluate the performance of the classifier.

1 out of 3

![[object Object]](/_next/static/media/star-bottom.7253800d.svg)