International Young Scientists Forum

VerifiedAdded on 2022/08/31

|7

|4872

|16

AI Summary

Contribute Materials

Your contribution can guide someone’s learning journey. Share your

documents today.

HDFS

Secure Best Marks with AI Grader

Need help grading? Try our AI Grader for instant feedback on your assignments.

Abstract: Data is considered as an asset in most of the

commercial business organizations all around the world. It can

be said that the growth and progress of a commercial business

organization depends hugely on the management of the huge

volumes of data. There are different categories of concepts

which are directly related with management of data such as

reporting of the data, analytics of data, and managing the

insights of the data. Different aspects of HDFS shall be

discussed, critically analyzed and elaborated in this report

from different perspectives. The data which was considered in

this report will be from secondary sources and only latest peer

reviewed journals will be selected to gather information about

HDFS.

Keywords: Hadoop Distributed File System, POSIX style, and

Traffic Management

I. INTRODUCTION

There are diverse categories of data threats which can have a

huge impact on the net profitability and business sales of

corporate establishments such as the social engineering

attacks, software attacks, identity threat, theft of intellectual

property and extortion of information.

The address these diverse categories of threats there are

diverse categories of data storage systems which are created

and deployed by corporate establishments such as the

secondary and primary data storage systems [1]. The diverse

categories of secondary data storage systems are Blu-ray

discs, and USB memory sticks. The categories of primary

data storage systems are register and the diverse categories of

distributed systems.

This report shall focus on Hadoop Distributed File System

(HDFS) which is one of the most significant primary data

storage systems which are increasingly used in most of the

global organizations all around the world. Implementation of

this distributed file system is a huge challenge for the

strategic planners and the IT experts of the business

organizations. The concept of HDFS was introduced in the

year 2006, and it was used in the Apache software

foundation project [2]. This primary storage systems is

widely used in most of the big data analytics projects all

around the world. In the year 2012, Hadoop and HDFS was

available in a unified version of 1.0. The following version

of the software was there in the year 2013 with the

This report shall be having numerous sections and each of

the section will be very much significant to enhance the

originality and effectiveness of the report. The following unit

of this report shall be introducing how HDFS works in most

of the corporate establishments all over the world.

II. DETAILS OF HDFS

A. Working principles of HDFS

There are huge volumes of data which are transferred

between each business unit to another in a corporate

environment and managing the security of those data is a

huge challenge for most of these organization as a result this

distributed file became popular.

HDFS is very much useful to transfer huge volumes of data

between the compute nodes of a business [3]. There are

diverse categories of frameworks which can be closely

aligned with this file storage systems such as the Map

Reduce programmatic framework which are very much

useful to process bulk volumes of data with a seconds.

HDFS is very much useful to manage the integrity as well as

the originality of the data as it breaks down the longer and

complex data into smaller segments. Each of these divided

segments are distributed equally to different nodes in a

cluster. This primary storage system is very well aligned

with the concept of parallel processing [4]. Usually there are

diverse categories of faults when huge volumes of data are

managed however, the efficiency of this distributed file

system is very much on the higher side as a result there are

no real fault tolerant issues related with this system.

Recovery of the data can be done in a more organized

manner with the help of HDFS. Server tracking is one of the

other significant functionality of HDFS. Even if data is at

fault, HDFS is very much beneficial as it ensures processing

of the data.

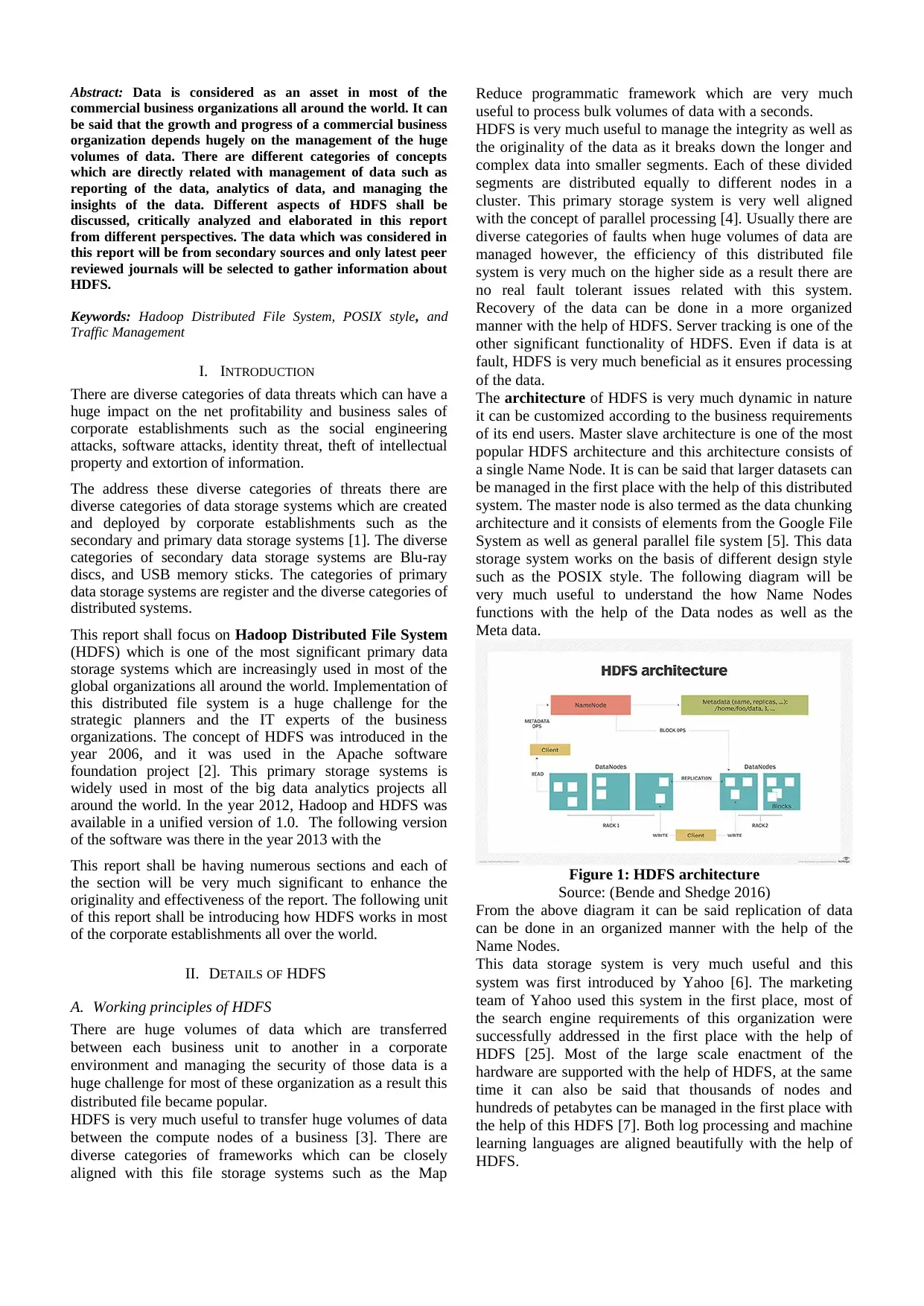

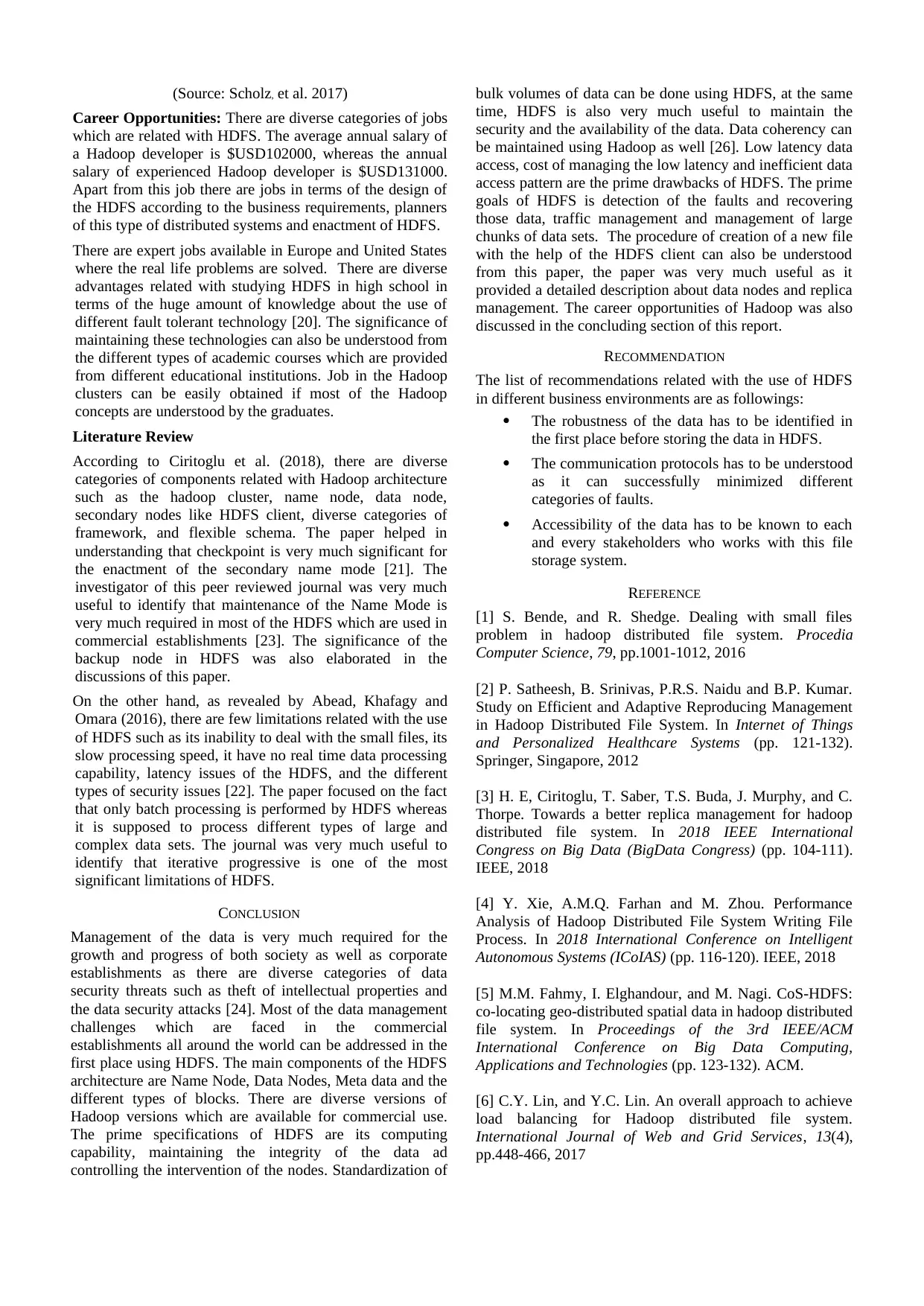

The architecture of HDFS is very much dynamic in nature

it can be customized according to the business requirements

of its end users. Master slave architecture is one of the most

popular HDFS architecture and this architecture consists of

a single Name Node. It is can be said that larger datasets can

be managed in the first place with the help of this distributed

system. The master node is also termed as the data chunking

architecture and it consists of elements from the Google File

System as well as general parallel file system [5]. This data

storage system works on the basis of different design style

such as the POSIX style. The following diagram will be

very much useful to understand the how Name Nodes

functions with the help of the Data nodes as well as the

Meta data.

Figure 1: HDFS architecture

Source: (Bende and Shedge 2016)

From the above diagram it can be said replication of data

can be done in an organized manner with the help of the

Name Nodes.

This data storage system is very much useful and this

system was first introduced by Yahoo [6]. The marketing

team of Yahoo used this system in the first place, most of

the search engine requirements of this organization were

successfully addressed in the first place with the help of

HDFS [25]. Most of the large scale enactment of the

hardware are supported with the help of HDFS, at the same

time it can also be said that thousands of nodes and

hundreds of petabytes can be managed in the first place with

the help of this HDFS [7]. Both log processing and machine

learning languages are aligned beautifully with the help of

HDFS.

commercial business organizations all around the world. It can

be said that the growth and progress of a commercial business

organization depends hugely on the management of the huge

volumes of data. There are different categories of concepts

which are directly related with management of data such as

reporting of the data, analytics of data, and managing the

insights of the data. Different aspects of HDFS shall be

discussed, critically analyzed and elaborated in this report

from different perspectives. The data which was considered in

this report will be from secondary sources and only latest peer

reviewed journals will be selected to gather information about

HDFS.

Keywords: Hadoop Distributed File System, POSIX style, and

Traffic Management

I. INTRODUCTION

There are diverse categories of data threats which can have a

huge impact on the net profitability and business sales of

corporate establishments such as the social engineering

attacks, software attacks, identity threat, theft of intellectual

property and extortion of information.

The address these diverse categories of threats there are

diverse categories of data storage systems which are created

and deployed by corporate establishments such as the

secondary and primary data storage systems [1]. The diverse

categories of secondary data storage systems are Blu-ray

discs, and USB memory sticks. The categories of primary

data storage systems are register and the diverse categories of

distributed systems.

This report shall focus on Hadoop Distributed File System

(HDFS) which is one of the most significant primary data

storage systems which are increasingly used in most of the

global organizations all around the world. Implementation of

this distributed file system is a huge challenge for the

strategic planners and the IT experts of the business

organizations. The concept of HDFS was introduced in the

year 2006, and it was used in the Apache software

foundation project [2]. This primary storage systems is

widely used in most of the big data analytics projects all

around the world. In the year 2012, Hadoop and HDFS was

available in a unified version of 1.0. The following version

of the software was there in the year 2013 with the

This report shall be having numerous sections and each of

the section will be very much significant to enhance the

originality and effectiveness of the report. The following unit

of this report shall be introducing how HDFS works in most

of the corporate establishments all over the world.

II. DETAILS OF HDFS

A. Working principles of HDFS

There are huge volumes of data which are transferred

between each business unit to another in a corporate

environment and managing the security of those data is a

huge challenge for most of these organization as a result this

distributed file became popular.

HDFS is very much useful to transfer huge volumes of data

between the compute nodes of a business [3]. There are

diverse categories of frameworks which can be closely

aligned with this file storage systems such as the Map

Reduce programmatic framework which are very much

useful to process bulk volumes of data with a seconds.

HDFS is very much useful to manage the integrity as well as

the originality of the data as it breaks down the longer and

complex data into smaller segments. Each of these divided

segments are distributed equally to different nodes in a

cluster. This primary storage system is very well aligned

with the concept of parallel processing [4]. Usually there are

diverse categories of faults when huge volumes of data are

managed however, the efficiency of this distributed file

system is very much on the higher side as a result there are

no real fault tolerant issues related with this system.

Recovery of the data can be done in a more organized

manner with the help of HDFS. Server tracking is one of the

other significant functionality of HDFS. Even if data is at

fault, HDFS is very much beneficial as it ensures processing

of the data.

The architecture of HDFS is very much dynamic in nature

it can be customized according to the business requirements

of its end users. Master slave architecture is one of the most

popular HDFS architecture and this architecture consists of

a single Name Node. It is can be said that larger datasets can

be managed in the first place with the help of this distributed

system. The master node is also termed as the data chunking

architecture and it consists of elements from the Google File

System as well as general parallel file system [5]. This data

storage system works on the basis of different design style

such as the POSIX style. The following diagram will be

very much useful to understand the how Name Nodes

functions with the help of the Data nodes as well as the

Meta data.

Figure 1: HDFS architecture

Source: (Bende and Shedge 2016)

From the above diagram it can be said replication of data

can be done in an organized manner with the help of the

Name Nodes.

This data storage system is very much useful and this

system was first introduced by Yahoo [6]. The marketing

team of Yahoo used this system in the first place, most of

the search engine requirements of this organization were

successfully addressed in the first place with the help of

HDFS [25]. Most of the large scale enactment of the

hardware are supported with the help of HDFS, at the same

time it can also be said that thousands of nodes and

hundreds of petabytes can be managed in the first place with

the help of this HDFS [7]. Both log processing and machine

learning languages are aligned beautifully with the help of

HDFS.

The version of this system kept n changing over the years,

Apache Hadoop 3.0.0 was available for business use by the

end of the year 2017. Additional Name Nodes was

intrioduced in each of the versions [8]. There are other

technologies which were widely incorporated with this

distributed file system such as the dynamometer and the

diverse categories of performance testing tools [26]. There

are complexities related with the incorporation procedure of

the different technologies There are diverse categories of

features which are directly related with use of the HDFS

features which can be understood from the below table.

Features Description

Minimum intervention Most of the nodes which do

not have their operator can

be intervened with the help

coming from the HDFS.

Integrity of the data Most of the corrupted data

are replicated on numerous

occasions.

Rollback Returning to the previous

versions is one of the prime

functionality of HDFS.

Scaling out Scaling up is also one of the

key features of scaling of the

data.

Computing Both parallel as well as

distributed commuting is

supported with the help of

HDFS.

Table 1: Description of the key features of HDFS

III. ADVANTAGES AND LIMITATIONS

A. Advantages

The data storage capability of the commercial

organizations using the HDFS. Similar data which are

stored in multiple sets can be managed in the first

place with the help coming from HDFS.

The data seek time of the organizations can be

enhanced in the first place using HDFS. Aggregation

of the data bandwidth is also considered as one of the

prime benefits of HDFS [9]. Standardization of the

data sets can be enhanced with the help of HDFS as

well.

The data availability is also enhanced in the first

place with the help of the HDFS [10]. The data loads

of the streaming format can also be managed with the

help coming from HDFS.

Data processing can be done is a smaller amount of

time with the help of HDFS.

Checking the status of the clusters is the other

benefit of this file storage system.

The data security can also be enhanced in the first

place with the help of the HDFS. Most of the

commodity hardware which are increasingly used in

our city are significantly benefitted if HDFS is

incorporated [11]. HDFS usually do not have any sort

of compatibility issues with the other file storage

systems or software.

Inspite of the malfunction of numerous nodes HDFS

is very much useful and reliable. Data coherency is

also one of the most significant contributions of this

file distributed system.

The higher fault tolerance is the other major

advantage of using HDFS.

B. Disdvantages

Low latency data access is one of the prime

limitations of HDFS.

The cost of managing the latency is very much on

the higher side which is one of the most significant

drawback of this storage system.

This storage system is very much useful to store

and manage larger chunks or volumes of data

rather than smaller data, thus it must not be used in

smaller organizations and it is also one of the

limitations of this storage system.

Inefficient data access pattern is the other

limitation related with HDFS.

IV. ASPECTS OF HDFS

A. Goals of HDFS

There are diverse categories of objectives which are related

with the use of HDFS such as the followings:

Recovery and fault detection: There are diverse categories

of hardware as well as software which are directly related

with HDFS, as a result fault occurs sometimes, failure of

components tends to occur sometimes, and however the

incorporation of HDFS is very much useful as it can

successfully deal with these kinds of faults [12]. Most of the

data security issues which occurs due to the presence of

thousands of servers can be restricted or managed in the first

place with the assistance of HDFS.

Traffic Management: Managing the outgoing as well as the

incoming traffic is one of the most significant success

factors in global establishments and HDFS can be very

much useful to monitor these outgoing as well as the

incoming traffic [13]. It can also be said that management of

the throughput of the data is also one of the notable

objectives of HDFS. Most of the streaming data can be

accessed in the first place with the help of HDFS. At the

same time, it can also be said that

Huge datasets: The bigger datasets which are managed by

the commercial organizations can be managed with the help

of HDFS as well. Most of the business applications which

are used in our society have different categories of data sets

and these data sets are very much vulnerable to different

data security issues which can be sorted in the first place

with the help of HDFS [14]. Scaling of thousands can be

done in an organized manner with the help of HDFS as well.

The other goal of this storage system is to facilitate adoption

procedure. Multiple hardware platforms can be accessed

with the help of HDFS. HDFS do not have any sort of issues

with any of the operating systems which are used both in

corporate environments and in our society.

Apache Hadoop 3.0.0 was available for business use by the

end of the year 2017. Additional Name Nodes was

intrioduced in each of the versions [8]. There are other

technologies which were widely incorporated with this

distributed file system such as the dynamometer and the

diverse categories of performance testing tools [26]. There

are complexities related with the incorporation procedure of

the different technologies There are diverse categories of

features which are directly related with use of the HDFS

features which can be understood from the below table.

Features Description

Minimum intervention Most of the nodes which do

not have their operator can

be intervened with the help

coming from the HDFS.

Integrity of the data Most of the corrupted data

are replicated on numerous

occasions.

Rollback Returning to the previous

versions is one of the prime

functionality of HDFS.

Scaling out Scaling up is also one of the

key features of scaling of the

data.

Computing Both parallel as well as

distributed commuting is

supported with the help of

HDFS.

Table 1: Description of the key features of HDFS

III. ADVANTAGES AND LIMITATIONS

A. Advantages

The data storage capability of the commercial

organizations using the HDFS. Similar data which are

stored in multiple sets can be managed in the first

place with the help coming from HDFS.

The data seek time of the organizations can be

enhanced in the first place using HDFS. Aggregation

of the data bandwidth is also considered as one of the

prime benefits of HDFS [9]. Standardization of the

data sets can be enhanced with the help of HDFS as

well.

The data availability is also enhanced in the first

place with the help of the HDFS [10]. The data loads

of the streaming format can also be managed with the

help coming from HDFS.

Data processing can be done is a smaller amount of

time with the help of HDFS.

Checking the status of the clusters is the other

benefit of this file storage system.

The data security can also be enhanced in the first

place with the help of the HDFS. Most of the

commodity hardware which are increasingly used in

our city are significantly benefitted if HDFS is

incorporated [11]. HDFS usually do not have any sort

of compatibility issues with the other file storage

systems or software.

Inspite of the malfunction of numerous nodes HDFS

is very much useful and reliable. Data coherency is

also one of the most significant contributions of this

file distributed system.

The higher fault tolerance is the other major

advantage of using HDFS.

B. Disdvantages

Low latency data access is one of the prime

limitations of HDFS.

The cost of managing the latency is very much on

the higher side which is one of the most significant

drawback of this storage system.

This storage system is very much useful to store

and manage larger chunks or volumes of data

rather than smaller data, thus it must not be used in

smaller organizations and it is also one of the

limitations of this storage system.

Inefficient data access pattern is the other

limitation related with HDFS.

IV. ASPECTS OF HDFS

A. Goals of HDFS

There are diverse categories of objectives which are related

with the use of HDFS such as the followings:

Recovery and fault detection: There are diverse categories

of hardware as well as software which are directly related

with HDFS, as a result fault occurs sometimes, failure of

components tends to occur sometimes, and however the

incorporation of HDFS is very much useful as it can

successfully deal with these kinds of faults [12]. Most of the

data security issues which occurs due to the presence of

thousands of servers can be restricted or managed in the first

place with the assistance of HDFS.

Traffic Management: Managing the outgoing as well as the

incoming traffic is one of the most significant success

factors in global establishments and HDFS can be very

much useful to monitor these outgoing as well as the

incoming traffic [13]. It can also be said that management of

the throughput of the data is also one of the notable

objectives of HDFS. Most of the streaming data can be

accessed in the first place with the help of HDFS. At the

same time, it can also be said that

Huge datasets: The bigger datasets which are managed by

the commercial organizations can be managed with the help

of HDFS as well. Most of the business applications which

are used in our society have different categories of data sets

and these data sets are very much vulnerable to different

data security issues which can be sorted in the first place

with the help of HDFS [14]. Scaling of thousands can be

done in an organized manner with the help of HDFS as well.

The other goal of this storage system is to facilitate adoption

procedure. Multiple hardware platforms can be accessed

with the help of HDFS. HDFS do not have any sort of issues

with any of the operating systems which are used both in

corporate environments and in our society.

Hardware at data: There are diverse categories of data

computations which are conducted in most of the business

organizations all over the world, however, it can be seen that

there are human errors in most of those computations. The

introduction of HDFS is very much useful to manage those

computations.

B. HDFS Clients

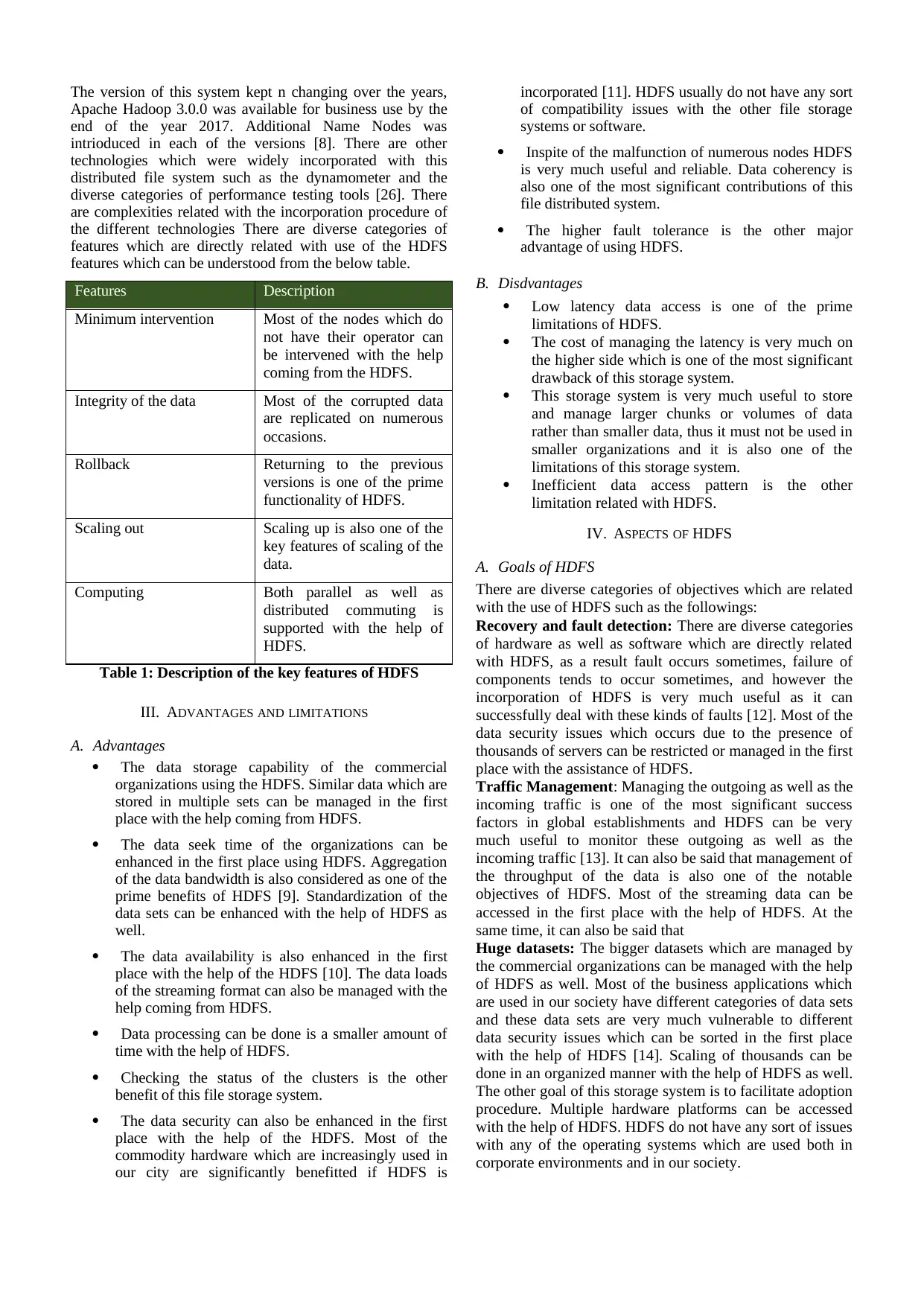

This section of the paper shall illustrating how HDFS clients

creates a new file. In most of the cases, a library is used

which exports the HDFS file system interface. This is very

much beneficial as it supports delete, write, read operations

which are generally conducted on the data which are stored

and managed. The necessity of the multiple replicas can be

identified in the first place with the help of the HDFS.

In the initial stages, the HDFS client asks for the Name

Node to get the list of the Data nodes, the network topology

is very much useful sort the network topology [15]. The

client then contacts the node directly, after that a new

pipeline is organized from one to another then sends data

accordingly. When the first block is filed a new block is

requested, as a result a new block gets created. The

procedure which are used to create a new file can be

understood from the below diagram.

Figure 2: Creation of a new file using HDFS client

(Source: Mahmoud, Hegazy and Khafagy 2018)

C. Data Nodes

Each block which are there in the data node is there due to

the presence of two files of the file system. The initial or the

first file contains the data which is accessed by HDFS. The

metadata of that particular file is in their in the second file.

The actual length of the block is directly proportional to the

size of the data file. Each Name Node gets associated with

the data node in the initial stages, after that a handshaking

protocol is practiced [16]. Verification of the namespace ID

is the main objective behind the handshaking protocol. The

interiority of the file system has to be maintained under

every circumstances. After the handshake procedure the data

node registers themselves with the Name node. The data

node is very much useful as it successfully stores the data

and there is a unique identified related with the data which

is stored. The storage ID is them assigned with the Data

node [17]. Most of the block replicas are managed in the

first place with the help of the Data nodes and after that a

block report is sent which contains a general stamp and a

block ID. The block report is very much useful to block

different types of replica [19]. In the following step

heartbeats are send to the Name node by the Data node. The

name node is the responsible to send new replicas to the

other blocks of the Data nodes. Heartbeats is considered as

the integral party of a data node. The load balancing

decisions as well as the space allocation of the Name node

are the other major operations of the data nodes. The role of

the Name node is very much significant in the entire

procedure as is replies heartbeats to the instructions which

are send to the data nodes. The diverse categories

commands which are directly related with the Name nodes

are as followings:

Sending of immediate bock report.

Removing of the local block replicas.

Replication of blocks to the other nodes.

Shutting down of a node.

Re-registering of the node.

a) I/O operationsd and Replica Management

Write, Read of the file: After the creation of new file with

the help of HDFS, and after the file is closed, the bytes

cannot be altered in the first place. HDFS is very much

useful regarding the enactment of multiple reader model and

single writer model [18]. When one new file is opened, no

other file cannot be opened unless it is altered or removed.

A heart best is said to the Name node, at the same time, it

can be said that the when the file will be closed the lease

will be revoked. The duration of the lease is bounded by the

soft limit as well as the hard limit. If the soft limit expires or

the consumers of thus storage devices fails to close the file

another client can preempt the deal. In less than one hour the

hard limit expires and if the client has failed to renew the

lease the HDFS shall automatically close on behave of the

writer.

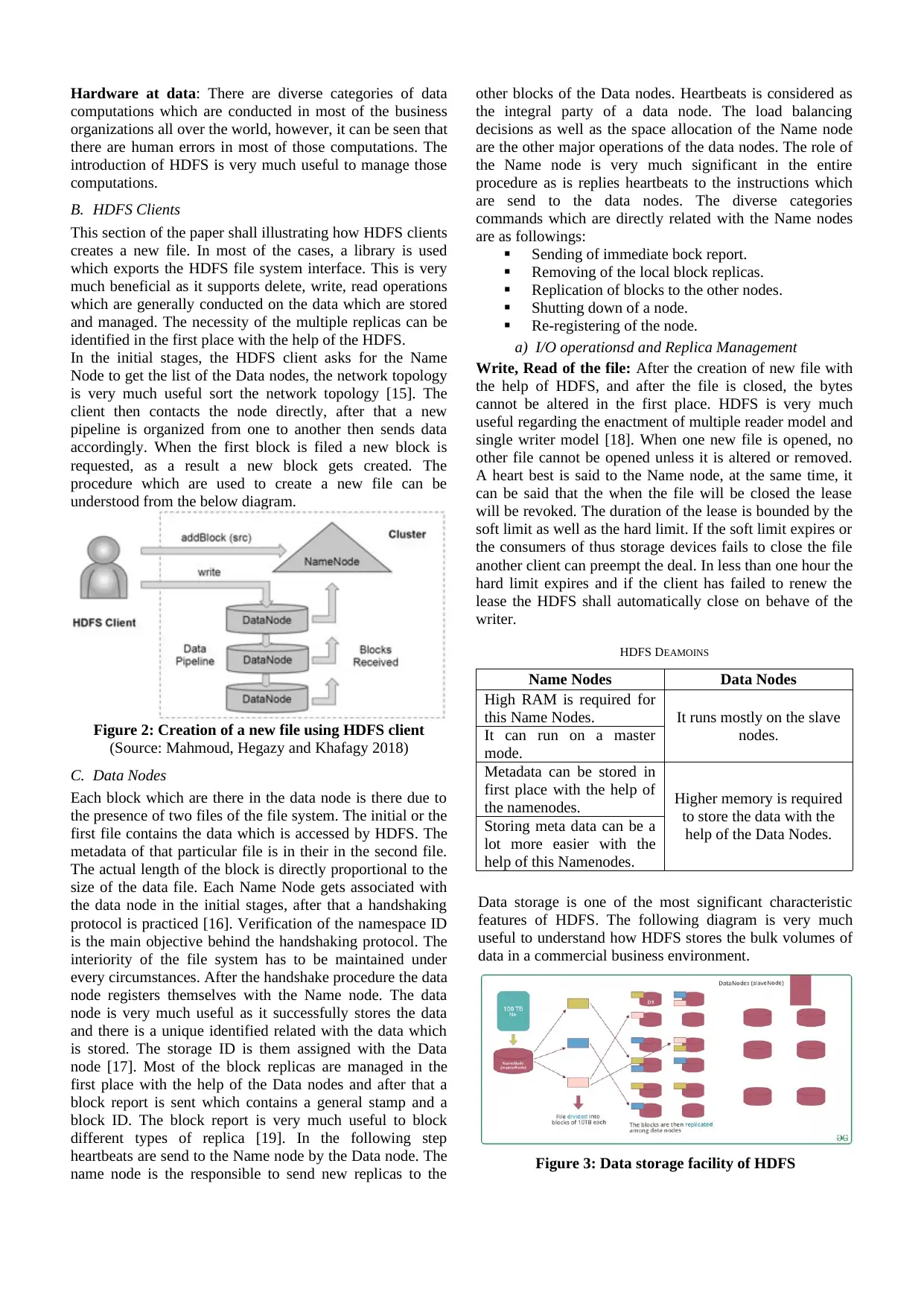

HDFS DEAMOINS

Name Nodes Data Nodes

High RAM is required for

this Name Nodes. It runs mostly on the slave

nodes.It can run on a master

mode.

Metadata can be stored in

first place with the help of

the namenodes. Higher memory is required

to store the data with the

help of the Data Nodes.

Storing meta data can be a

lot more easier with the

help of this Namenodes.

Data storage is one of the most significant characteristic

features of HDFS. The following diagram is very much

useful to understand how HDFS stores the bulk volumes of

data in a commercial business environment.

Figure 3: Data storage facility of HDFS

computations which are conducted in most of the business

organizations all over the world, however, it can be seen that

there are human errors in most of those computations. The

introduction of HDFS is very much useful to manage those

computations.

B. HDFS Clients

This section of the paper shall illustrating how HDFS clients

creates a new file. In most of the cases, a library is used

which exports the HDFS file system interface. This is very

much beneficial as it supports delete, write, read operations

which are generally conducted on the data which are stored

and managed. The necessity of the multiple replicas can be

identified in the first place with the help of the HDFS.

In the initial stages, the HDFS client asks for the Name

Node to get the list of the Data nodes, the network topology

is very much useful sort the network topology [15]. The

client then contacts the node directly, after that a new

pipeline is organized from one to another then sends data

accordingly. When the first block is filed a new block is

requested, as a result a new block gets created. The

procedure which are used to create a new file can be

understood from the below diagram.

Figure 2: Creation of a new file using HDFS client

(Source: Mahmoud, Hegazy and Khafagy 2018)

C. Data Nodes

Each block which are there in the data node is there due to

the presence of two files of the file system. The initial or the

first file contains the data which is accessed by HDFS. The

metadata of that particular file is in their in the second file.

The actual length of the block is directly proportional to the

size of the data file. Each Name Node gets associated with

the data node in the initial stages, after that a handshaking

protocol is practiced [16]. Verification of the namespace ID

is the main objective behind the handshaking protocol. The

interiority of the file system has to be maintained under

every circumstances. After the handshake procedure the data

node registers themselves with the Name node. The data

node is very much useful as it successfully stores the data

and there is a unique identified related with the data which

is stored. The storage ID is them assigned with the Data

node [17]. Most of the block replicas are managed in the

first place with the help of the Data nodes and after that a

block report is sent which contains a general stamp and a

block ID. The block report is very much useful to block

different types of replica [19]. In the following step

heartbeats are send to the Name node by the Data node. The

name node is the responsible to send new replicas to the

other blocks of the Data nodes. Heartbeats is considered as

the integral party of a data node. The load balancing

decisions as well as the space allocation of the Name node

are the other major operations of the data nodes. The role of

the Name node is very much significant in the entire

procedure as is replies heartbeats to the instructions which

are send to the data nodes. The diverse categories

commands which are directly related with the Name nodes

are as followings:

Sending of immediate bock report.

Removing of the local block replicas.

Replication of blocks to the other nodes.

Shutting down of a node.

Re-registering of the node.

a) I/O operationsd and Replica Management

Write, Read of the file: After the creation of new file with

the help of HDFS, and after the file is closed, the bytes

cannot be altered in the first place. HDFS is very much

useful regarding the enactment of multiple reader model and

single writer model [18]. When one new file is opened, no

other file cannot be opened unless it is altered or removed.

A heart best is said to the Name node, at the same time, it

can be said that the when the file will be closed the lease

will be revoked. The duration of the lease is bounded by the

soft limit as well as the hard limit. If the soft limit expires or

the consumers of thus storage devices fails to close the file

another client can preempt the deal. In less than one hour the

hard limit expires and if the client has failed to renew the

lease the HDFS shall automatically close on behave of the

writer.

HDFS DEAMOINS

Name Nodes Data Nodes

High RAM is required for

this Name Nodes. It runs mostly on the slave

nodes.It can run on a master

mode.

Metadata can be stored in

first place with the help of

the namenodes. Higher memory is required

to store the data with the

help of the Data Nodes.

Storing meta data can be a

lot more easier with the

help of this Namenodes.

Data storage is one of the most significant characteristic

features of HDFS. The following diagram is very much

useful to understand how HDFS stores the bulk volumes of

data in a commercial business environment.

Figure 3: Data storage facility of HDFS

Secure Best Marks with AI Grader

Need help grading? Try our AI Grader for instant feedback on your assignments.

(Source: Scholz, et al. 2017)

Career Opportunities: There are diverse categories of jobs

which are related with HDFS. The average annual salary of

a Hadoop developer is $USD102000, whereas the annual

salary of experienced Hadoop developer is $USD131000.

Apart from this job there are jobs in terms of the design of

the HDFS according to the business requirements, planners

of this type of distributed systems and enactment of HDFS.

There are expert jobs available in Europe and United States

where the real life problems are solved. There are diverse

advantages related with studying HDFS in high school in

terms of the huge amount of knowledge about the use of

different fault tolerant technology [20]. The significance of

maintaining these technologies can also be understood from

the different types of academic courses which are provided

from different educational institutions. Job in the Hadoop

clusters can be easily obtained if most of the Hadoop

concepts are understood by the graduates.

Literature Review

According to Ciritoglu et al. (2018), there are diverse

categories of components related with Hadoop architecture

such as the hadoop cluster, name node, data node,

secondary nodes like HDFS client, diverse categories of

framework, and flexible schema. The paper helped in

understanding that checkpoint is very much significant for

the enactment of the secondary name mode [21]. The

investigator of this peer reviewed journal was very much

useful to identify that maintenance of the Name Mode is

very much required in most of the HDFS which are used in

commercial establishments [23]. The significance of the

backup node in HDFS was also elaborated in the

discussions of this paper.

On the other hand, as revealed by Abead, Khafagy and

Omara (2016), there are few limitations related with the use

of HDFS such as its inability to deal with the small files, its

slow processing speed, it have no real time data processing

capability, latency issues of the HDFS, and the different

types of security issues [22]. The paper focused on the fact

that only batch processing is performed by HDFS whereas

it is supposed to process different types of large and

complex data sets. The journal was very much useful to

identify that iterative progressive is one of the most

significant limitations of HDFS.

CONCLUSION

Management of the data is very much required for the

growth and progress of both society as well as corporate

establishments as there are diverse categories of data

security threats such as theft of intellectual properties and

the data security attacks [24]. Most of the data management

challenges which are faced in the commercial

establishments all around the world can be addressed in the

first place using HDFS. The main components of the HDFS

architecture are Name Node, Data Nodes, Meta data and the

different types of blocks. There are diverse versions of

Hadoop versions which are available for commercial use.

The prime specifications of HDFS are its computing

capability, maintaining the integrity of the data ad

controlling the intervention of the nodes. Standardization of

bulk volumes of data can be done using HDFS, at the same

time, HDFS is also very much useful to maintain the

security and the availability of the data. Data coherency can

be maintained using Hadoop as well [26]. Low latency data

access, cost of managing the low latency and inefficient data

access pattern are the prime drawbacks of HDFS. The prime

goals of HDFS is detection of the faults and recovering

those data, traffic management and management of large

chunks of data sets. The procedure of creation of a new file

with the help of the HDFS client can also be understood

from this paper, the paper was very much useful as it

provided a detailed description about data nodes and replica

management. The career opportunities of Hadoop was also

discussed in the concluding section of this report.

RECOMMENDATION

The list of recommendations related with the use of HDFS

in different business environments are as followings:

The robustness of the data has to be identified in

the first place before storing the data in HDFS.

The communication protocols has to be understood

as it can successfully minimized different

categories of faults.

Accessibility of the data has to be known to each

and every stakeholders who works with this file

storage system.

REFERENCE

[1] S. Bende, and R. Shedge. Dealing with small files

problem in hadoop distributed file system. Procedia

Computer Science, 79, pp.1001-1012, 2016

[2] P. Satheesh, B. Srinivas, P.R.S. Naidu and B.P. Kumar.

Study on Efficient and Adaptive Reproducing Management

in Hadoop Distributed File System. In Internet of Things

and Personalized Healthcare Systems (pp. 121-132).

Springer, Singapore, 2012

[3] H. E, Ciritoglu, T. Saber, T.S. Buda, J. Murphy, and C.

Thorpe. Towards a better replica management for hadoop

distributed file system. In 2018 IEEE International

Congress on Big Data (BigData Congress) (pp. 104-111).

IEEE, 2018

[4] Y. Xie, A.M.Q. Farhan and M. Zhou. Performance

Analysis of Hadoop Distributed File System Writing File

Process. In 2018 International Conference on Intelligent

Autonomous Systems (ICoIAS) (pp. 116-120). IEEE, 2018

[5] M.M. Fahmy, I. Elghandour, and M. Nagi. CoS-HDFS:

co-locating geo-distributed spatial data in hadoop distributed

file system. In Proceedings of the 3rd IEEE/ACM

International Conference on Big Data Computing,

Applications and Technologies (pp. 123-132). ACM.

[6] C.Y. Lin, and Y.C. Lin. An overall approach to achieve

load balancing for Hadoop distributed file system.

International Journal of Web and Grid Services, 13(4),

pp.448-466, 2017

Career Opportunities: There are diverse categories of jobs

which are related with HDFS. The average annual salary of

a Hadoop developer is $USD102000, whereas the annual

salary of experienced Hadoop developer is $USD131000.

Apart from this job there are jobs in terms of the design of

the HDFS according to the business requirements, planners

of this type of distributed systems and enactment of HDFS.

There are expert jobs available in Europe and United States

where the real life problems are solved. There are diverse

advantages related with studying HDFS in high school in

terms of the huge amount of knowledge about the use of

different fault tolerant technology [20]. The significance of

maintaining these technologies can also be understood from

the different types of academic courses which are provided

from different educational institutions. Job in the Hadoop

clusters can be easily obtained if most of the Hadoop

concepts are understood by the graduates.

Literature Review

According to Ciritoglu et al. (2018), there are diverse

categories of components related with Hadoop architecture

such as the hadoop cluster, name node, data node,

secondary nodes like HDFS client, diverse categories of

framework, and flexible schema. The paper helped in

understanding that checkpoint is very much significant for

the enactment of the secondary name mode [21]. The

investigator of this peer reviewed journal was very much

useful to identify that maintenance of the Name Mode is

very much required in most of the HDFS which are used in

commercial establishments [23]. The significance of the

backup node in HDFS was also elaborated in the

discussions of this paper.

On the other hand, as revealed by Abead, Khafagy and

Omara (2016), there are few limitations related with the use

of HDFS such as its inability to deal with the small files, its

slow processing speed, it have no real time data processing

capability, latency issues of the HDFS, and the different

types of security issues [22]. The paper focused on the fact

that only batch processing is performed by HDFS whereas

it is supposed to process different types of large and

complex data sets. The journal was very much useful to

identify that iterative progressive is one of the most

significant limitations of HDFS.

CONCLUSION

Management of the data is very much required for the

growth and progress of both society as well as corporate

establishments as there are diverse categories of data

security threats such as theft of intellectual properties and

the data security attacks [24]. Most of the data management

challenges which are faced in the commercial

establishments all around the world can be addressed in the

first place using HDFS. The main components of the HDFS

architecture are Name Node, Data Nodes, Meta data and the

different types of blocks. There are diverse versions of

Hadoop versions which are available for commercial use.

The prime specifications of HDFS are its computing

capability, maintaining the integrity of the data ad

controlling the intervention of the nodes. Standardization of

bulk volumes of data can be done using HDFS, at the same

time, HDFS is also very much useful to maintain the

security and the availability of the data. Data coherency can

be maintained using Hadoop as well [26]. Low latency data

access, cost of managing the low latency and inefficient data

access pattern are the prime drawbacks of HDFS. The prime

goals of HDFS is detection of the faults and recovering

those data, traffic management and management of large

chunks of data sets. The procedure of creation of a new file

with the help of the HDFS client can also be understood

from this paper, the paper was very much useful as it

provided a detailed description about data nodes and replica

management. The career opportunities of Hadoop was also

discussed in the concluding section of this report.

RECOMMENDATION

The list of recommendations related with the use of HDFS

in different business environments are as followings:

The robustness of the data has to be identified in

the first place before storing the data in HDFS.

The communication protocols has to be understood

as it can successfully minimized different

categories of faults.

Accessibility of the data has to be known to each

and every stakeholders who works with this file

storage system.

REFERENCE

[1] S. Bende, and R. Shedge. Dealing with small files

problem in hadoop distributed file system. Procedia

Computer Science, 79, pp.1001-1012, 2016

[2] P. Satheesh, B. Srinivas, P.R.S. Naidu and B.P. Kumar.

Study on Efficient and Adaptive Reproducing Management

in Hadoop Distributed File System. In Internet of Things

and Personalized Healthcare Systems (pp. 121-132).

Springer, Singapore, 2012

[3] H. E, Ciritoglu, T. Saber, T.S. Buda, J. Murphy, and C.

Thorpe. Towards a better replica management for hadoop

distributed file system. In 2018 IEEE International

Congress on Big Data (BigData Congress) (pp. 104-111).

IEEE, 2018

[4] Y. Xie, A.M.Q. Farhan and M. Zhou. Performance

Analysis of Hadoop Distributed File System Writing File

Process. In 2018 International Conference on Intelligent

Autonomous Systems (ICoIAS) (pp. 116-120). IEEE, 2018

[5] M.M. Fahmy, I. Elghandour, and M. Nagi. CoS-HDFS:

co-locating geo-distributed spatial data in hadoop distributed

file system. In Proceedings of the 3rd IEEE/ACM

International Conference on Big Data Computing,

Applications and Technologies (pp. 123-132). ACM.

[6] C.Y. Lin, and Y.C. Lin. An overall approach to achieve

load balancing for Hadoop distributed file system.

International Journal of Web and Grid Services, 13(4),

pp.448-466, 2017

[7] C. Liao, A. Squicciarini, and D. Lin. Last-hdfs:

Location-aware storage technique for hadoop distributed file

system. In 2016 IEEE 9th International Conference on

Cloud Computing (CLOUD) (pp. 662-669). IEEE, 2016

[8] N. Jyoti. The role of Hadoop Distributed Files System

(HDFS) in Technological era, 2017

[9] P.P. Deshpande. Hadoop Distributed FileSystem:

Metadata Management, 2017

[10] V.B. Bobade. Survey paper on big data and Hadoop.

International Research Journal of Engineering and

Technology, 3(1), pp.2395-0056, 2016

[11] H. Mahmoud, A, Hegazy, and M.H. Khafagy. An

approach for big data security based on Hadoop distributed

file system. In 2018 International Conference on Innovative

Trends in Computer Engineering (ITCE) (pp. 109-114).

IEEE, 2018

[12] N. Bhojwani and A. P.V. Shah. A survey on hadoop

hbase system. Development, 3(1), 2016

[13] B. Tian, Y. Tian, B. Huebert, Y. Sun, T. Hurt, W. Ho,

Y. Zhang, D. Chen, and H. Lee. SecHDFS: a secure data

allocation scheme for heterogenous Hadoop systems. In

2016 IEEE International Conference on Networking,

Architecture and Storage (NAS) (pp. 1-2). IEEE, 2016

[14] S. Maneas, and B. Schroeder. The evolution of the

hadoop distributed file system. In 2018 32nd International

Conference on Advanced Information Networking and

Applications Workshops (WAINA) (pp. 67-74). IEEE, 2018

[15] R. Scholz, N. Tcholtchev, P. Lämmel and I.

Schieferdecker. A CKAN Plugin for Data Harvesting to the

Hadoop Distributed File System. In CLOSER (pp. 19-28),

2017

[16] G. Manogaran, C. Thota, D. Lopez, V. Vijayakumar,

K.M. Abbas and R. Sundarsekar. Big data knowledge

system in healthcare. In Internet of things and big data

technologies for next generation healthcare (pp. 133-157).

Springer, Cham, 2017

[17] T.L.S.R. Krishna, T. Ragunathan, and S. K. Battula.

Customized web user interface for hadoop distributed file

system. In Proceedings of the Second International

Conference on Computer and Communication Technologies

(pp. 567-576). Springer, New Delhi, 2016

[18] N. V. Patil. Apache Hadoop Distributed File System.

Asian Journal For Convergence In Technology (AJCT), 3,

2017

[19] B. Rangaswamy, N. Geethanjali, T. Ragunathan and

B.S. Kumar. Performance Improvement of Read Operations

in Distributed File System Through Anticipated Parallel

Processing. In Proceedings of the Second International

Conference on Computer and Communication Technologies

(pp. 275-287). Springer, New Delhi, 2016

[20] E.S. Abead, M.H. Khafagy and F.A. Omara. An

efficient replication technique for hadoop distributed file

system. Int. J. Sci. Eng. Res, 7(1), pp.254-261, 2016

[21] W.G. Choi and S. Park. A write-friendly approach to

manage namespace of Hadoop distributed file system by

utilizing nonvolatile memory. The Journal of

Supercomputing, pp.1-31, 2019

[22] K. Arnepalli and K.S. Rao. Application of Hadoop

Distributed File System in Digital Libraries.

Basha, S.J., Kumar, P.A. and Babu, S.G., 2016. Storage and

processing speed for knowledge from enhanced cloud

computing with Hadoop frame work: A survey. IJSRSET,

2(2), pp.126-132, 2016

[23] W. Ryu. Flexible Management of Data Nodes for

Hadoop Distributed File system. ALLDATA 2017, p.10,

2017

[24] S.Y. Inamdar,A.H. Jadhav, R.B. Desai, P.S. Shinde, I.

M. Ghadage and A.A. Gaikwad. Data Security in Hadoop

Distributed File System, 2016

[25] S. Niazi, M. Ismail, S. Haridi, J. Dowling, S.

Grohsschmiedt and M. Ronström. Hopsfs: Scaling

hierarchical file system metadata using newsql databases. In

15th {USENIX} Conference on File and Storage

Technologies ({FAST} 17) (pp. 89-104), 2017

[26] V. Taran, O. Alienin, S. Stirenko, Y. Gordienko and A.

Rojbi. Performance evaluation of distributed computing

environments with Hadoop and Spark frameworks. In 2017

IEEE International Young Scientists Forum on Applied

Physics and Engineering (YSF) (pp. 80-83). IEEE, 2017

Location-aware storage technique for hadoop distributed file

system. In 2016 IEEE 9th International Conference on

Cloud Computing (CLOUD) (pp. 662-669). IEEE, 2016

[8] N. Jyoti. The role of Hadoop Distributed Files System

(HDFS) in Technological era, 2017

[9] P.P. Deshpande. Hadoop Distributed FileSystem:

Metadata Management, 2017

[10] V.B. Bobade. Survey paper on big data and Hadoop.

International Research Journal of Engineering and

Technology, 3(1), pp.2395-0056, 2016

[11] H. Mahmoud, A, Hegazy, and M.H. Khafagy. An

approach for big data security based on Hadoop distributed

file system. In 2018 International Conference on Innovative

Trends in Computer Engineering (ITCE) (pp. 109-114).

IEEE, 2018

[12] N. Bhojwani and A. P.V. Shah. A survey on hadoop

hbase system. Development, 3(1), 2016

[13] B. Tian, Y. Tian, B. Huebert, Y. Sun, T. Hurt, W. Ho,

Y. Zhang, D. Chen, and H. Lee. SecHDFS: a secure data

allocation scheme for heterogenous Hadoop systems. In

2016 IEEE International Conference on Networking,

Architecture and Storage (NAS) (pp. 1-2). IEEE, 2016

[14] S. Maneas, and B. Schroeder. The evolution of the

hadoop distributed file system. In 2018 32nd International

Conference on Advanced Information Networking and

Applications Workshops (WAINA) (pp. 67-74). IEEE, 2018

[15] R. Scholz, N. Tcholtchev, P. Lämmel and I.

Schieferdecker. A CKAN Plugin for Data Harvesting to the

Hadoop Distributed File System. In CLOSER (pp. 19-28),

2017

[16] G. Manogaran, C. Thota, D. Lopez, V. Vijayakumar,

K.M. Abbas and R. Sundarsekar. Big data knowledge

system in healthcare. In Internet of things and big data

technologies for next generation healthcare (pp. 133-157).

Springer, Cham, 2017

[17] T.L.S.R. Krishna, T. Ragunathan, and S. K. Battula.

Customized web user interface for hadoop distributed file

system. In Proceedings of the Second International

Conference on Computer and Communication Technologies

(pp. 567-576). Springer, New Delhi, 2016

[18] N. V. Patil. Apache Hadoop Distributed File System.

Asian Journal For Convergence In Technology (AJCT), 3,

2017

[19] B. Rangaswamy, N. Geethanjali, T. Ragunathan and

B.S. Kumar. Performance Improvement of Read Operations

in Distributed File System Through Anticipated Parallel

Processing. In Proceedings of the Second International

Conference on Computer and Communication Technologies

(pp. 275-287). Springer, New Delhi, 2016

[20] E.S. Abead, M.H. Khafagy and F.A. Omara. An

efficient replication technique for hadoop distributed file

system. Int. J. Sci. Eng. Res, 7(1), pp.254-261, 2016

[21] W.G. Choi and S. Park. A write-friendly approach to

manage namespace of Hadoop distributed file system by

utilizing nonvolatile memory. The Journal of

Supercomputing, pp.1-31, 2019

[22] K. Arnepalli and K.S. Rao. Application of Hadoop

Distributed File System in Digital Libraries.

Basha, S.J., Kumar, P.A. and Babu, S.G., 2016. Storage and

processing speed for knowledge from enhanced cloud

computing with Hadoop frame work: A survey. IJSRSET,

2(2), pp.126-132, 2016

[23] W. Ryu. Flexible Management of Data Nodes for

Hadoop Distributed File system. ALLDATA 2017, p.10,

2017

[24] S.Y. Inamdar,A.H. Jadhav, R.B. Desai, P.S. Shinde, I.

M. Ghadage and A.A. Gaikwad. Data Security in Hadoop

Distributed File System, 2016

[25] S. Niazi, M. Ismail, S. Haridi, J. Dowling, S.

Grohsschmiedt and M. Ronström. Hopsfs: Scaling

hierarchical file system metadata using newsql databases. In

15th {USENIX} Conference on File and Storage

Technologies ({FAST} 17) (pp. 89-104), 2017

[26] V. Taran, O. Alienin, S. Stirenko, Y. Gordienko and A.

Rojbi. Performance evaluation of distributed computing

environments with Hadoop and Spark frameworks. In 2017

IEEE International Young Scientists Forum on Applied

Physics and Engineering (YSF) (pp. 80-83). IEEE, 2017

1 out of 7

Related Documents

Your All-in-One AI-Powered Toolkit for Academic Success.

+13062052269

info@desklib.com

Available 24*7 on WhatsApp / Email

![[object Object]](/_next/static/media/star-bottom.7253800d.svg)

Unlock your academic potential

© 2024 | Zucol Services PVT LTD | All rights reserved.