Bit Error Probability, ML Decoding Rule and Specific Codeword Decoding

Verified. Added on 2023/04/26


AI Summary

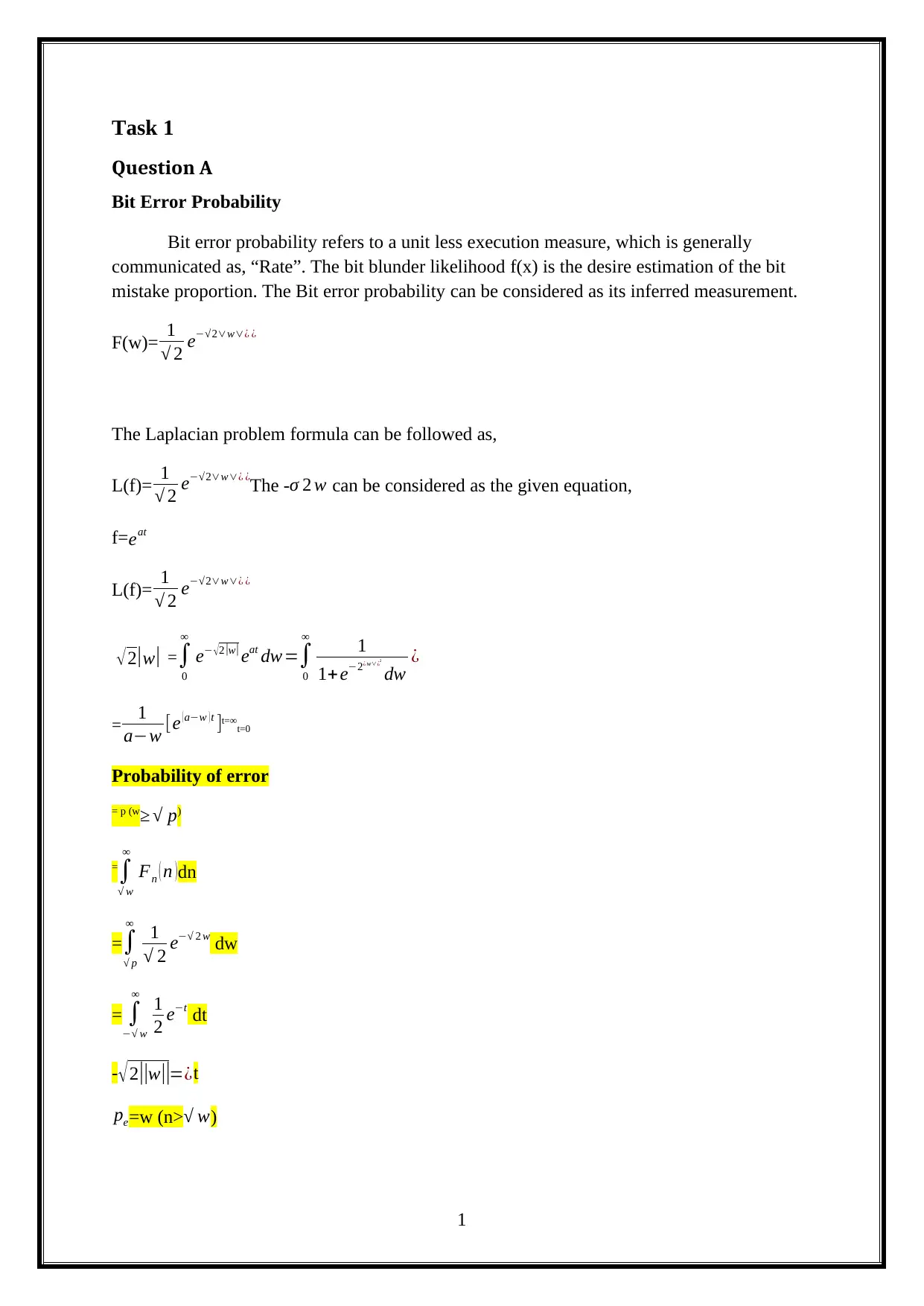

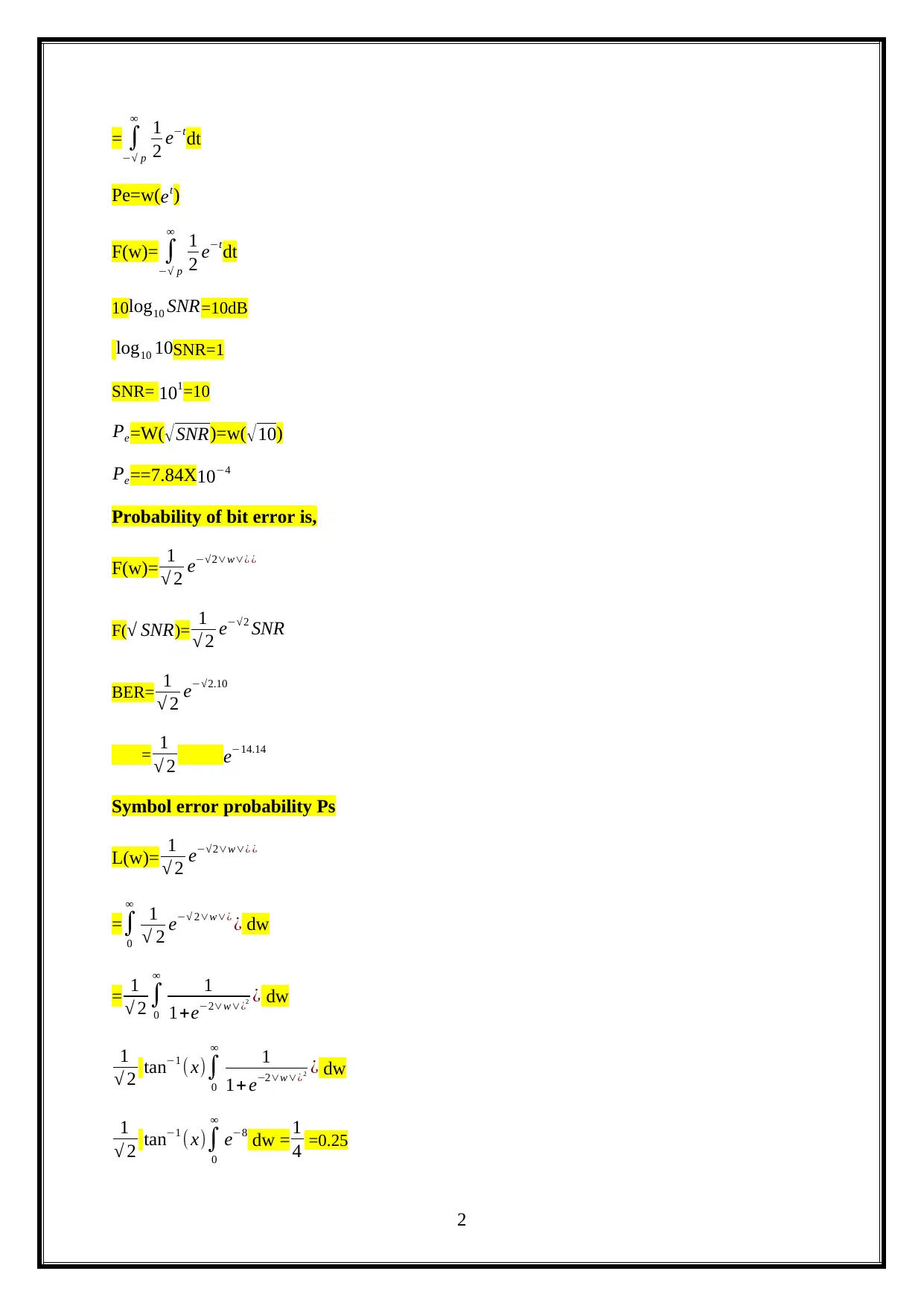

This document covers bit error probability, the maximum-likelihood (ML) decoding rule, and the decoding of a specific codeword, in the context of electronics. It explains the formulas and calculations used for each of these topics. The document also lists its subject, course code, and college/university.
