Implementation of Cloud Computing Technology using Data Mining Algorithms

Added on 2023/06/12


A Study on Implementation of Cloud Computing Technology Using Data Mining Algorithms

Abstract - A standard data mining architecture runs into implementation bottlenecks and flexibility issues when it is used as part of cloud computing. In this paper, we present a data mining platform based on Cloud Computing technology. Compared with a standard data mining architecture, this platform is highly scalable, has massive data processing capabilities, is service-oriented, and has low hardware cost. It can support the design and application of a wide variety of distributed data mining systems. This paper studies big data mining and how the powerful computing and data processing capabilities of cloud computing provide strong support for it. Based on an analysis of data mining and cloud computing technology, the paper introduces a new approach to designing the association rule mining algorithm on the MapReduce parallel processing architecture, and reports an experimental investigation of this approach. Finally, the Aneka Cloud Strategy, which has recently come into wide use worldwide, is discussed.

Keywords - Cloud, Cloud Computing, Data Mining, Association Rule Mining, FP algorithm, Cloud Strategy, Distributed Computing

I. INTRODUCTION

With the rapid development of the mobile Internet and the Internet of Things, huge amounts of data are being generated continuously. Data now permeates every industry and business function. In the era of big data, we must improve the efficiency of data mining algorithms if we want to extract truly valuable information from incomplete, massive, noisy, and random data. Cloud computing provides a dynamically scalable, virtualized, and highly reliable computing platform. Applying it to data mining can therefore address the efficiency problems of big data mining. High reliability means that cloud computing can tolerate node errors: even the failure of a large number of nodes will not stop a program from running correctly [1]. Massive data storage and distributed computing offer a new approach to mass data mining. Together they have become an effective solution for the distributed storage and efficient computation that mass data mining requires. The cloud computing model brings many advantages, such as low cost, fault tolerance, fast processing speed, and better compatibility between machines. Many experts expect cloud data mining to be the inevitable future direction of web data mining [2].

II. CLOUD COMPUTING AND DATA MINING

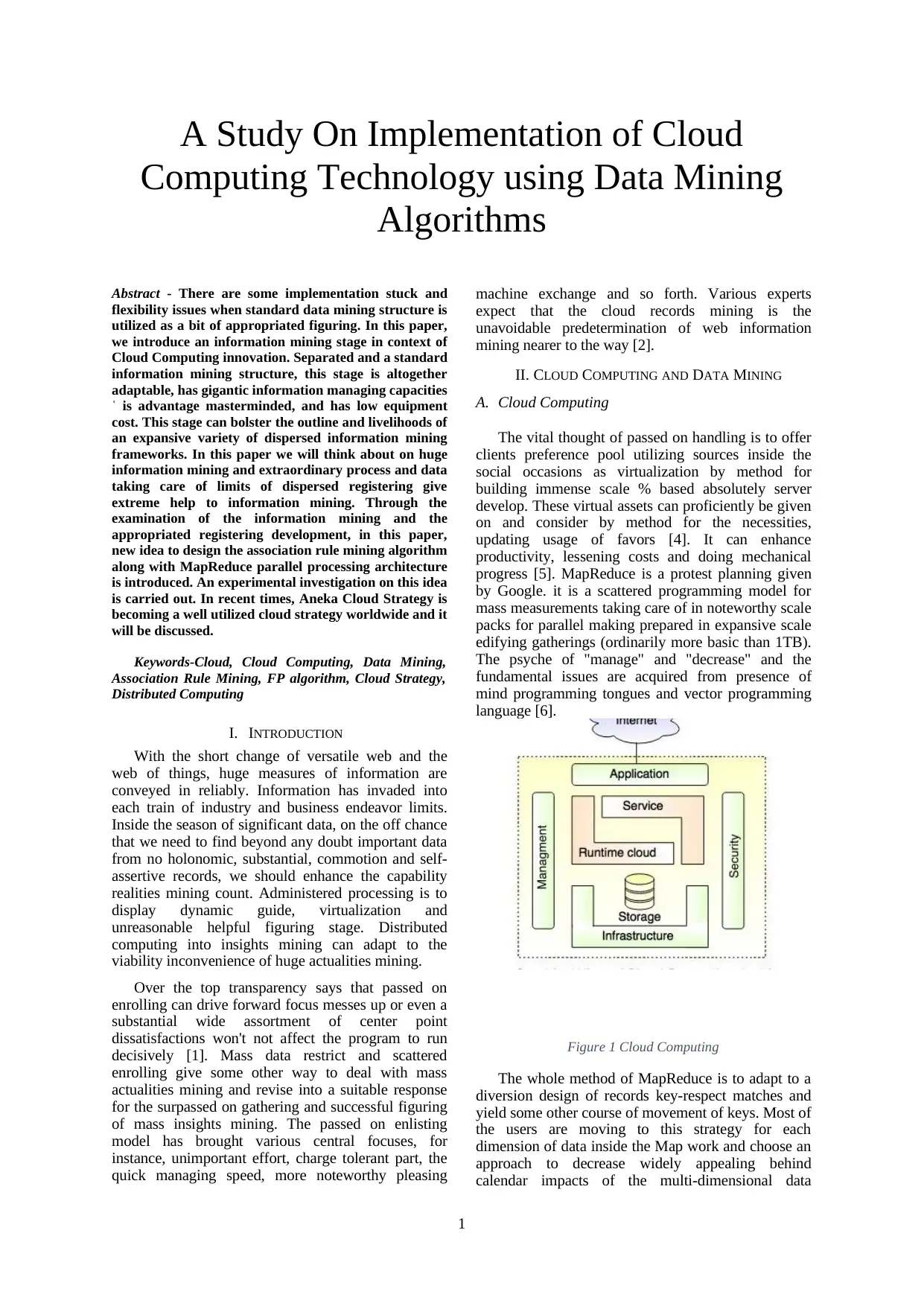

A. Cloud Computing

The key idea of cloud computing is to offer users a pool of resources. It does this by virtualizing the resources within clusters and building large-scale, PC-based server clusters. These virtual resources can be provisioned efficiently on demand, which improves resource utilization [4]. Cloud computing can also improve productivity, reduce costs, and drive technological progress [5]. MapReduce is a programming model proposed by Google. It is a distributed model for processing massive data on large-scale clusters, enabling parallel processing of large data sets (typically larger than 1 TB). The concepts of "Map" and "Reduce," and their main ideas, are borrowed from functional programming languages and vector programming languages [6].
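As a rough illustration of the Map and Reduce concepts, the single-process Python sketch below simulates the map, shuffle, and reduce phases of a word-count job over key-value pairs. It is a toy under stated assumptions, not Google's or Hadoop's API. `map_fn`, `reduce_fn`, and `run_mapreduce` are hypothetical names. On a real cluster, the framework itself performs the grouping, partitioning, and scheduling.

```python
from collections import defaultdict


def map_fn(doc_id, text):
    """Map: emit an intermediate (word, 1) pair for every word of one document."""
    for word in text.lower().split():
        yield word, 1


def reduce_fn(word, counts):
    """Reduce: merge all intermediate values that share one key."""
    return word, sum(counts)


def run_mapreduce(inputs, mapper, reducer):
    """Hypothetical single-process stand-in for a MapReduce job."""
    groups = defaultdict(list)
    for key, value in inputs:                      # map phase
        for out_key, out_value in mapper(key, value):
            groups[out_key].append(out_value)      # shuffle: group values by key
    # Reduce phase: one reducer call per distinct intermediate key.
    return dict(reducer(k, vs) for k, vs in sorted(groups.items()))


docs = [("d1", "cloud data mining"), ("d2", "data mining on the cloud")]
print(run_mapreduce(docs, map_fn, reduce_fn))
# {'cloud': 2, 'data': 2, 'mining': 2, 'on': 1, 'the': 1}
```

The user only writes the two small functions. The grouping loop in `run_mapreduce` is the part a real framework distributes across machines.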

Figure 1 Cloud Computing

The MapReduce process takes a set of input key-value pairs and produces another set of output key-value pairs. Users process each dimension of the data in the Map function and choose a suitable way to combine the intermediate results of the multi-dimensional data


processing in the Reduce function. The user only needs to specify the Map and Reduce functions to build distributed parallel programs. When MapReduce programs run on a cluster, users do not have to worry about how to partition the data, assign tasks, or schedule them.

The contraption will in like way administer center

bombs inside the package and the between center

correspondence association and differing matters. The

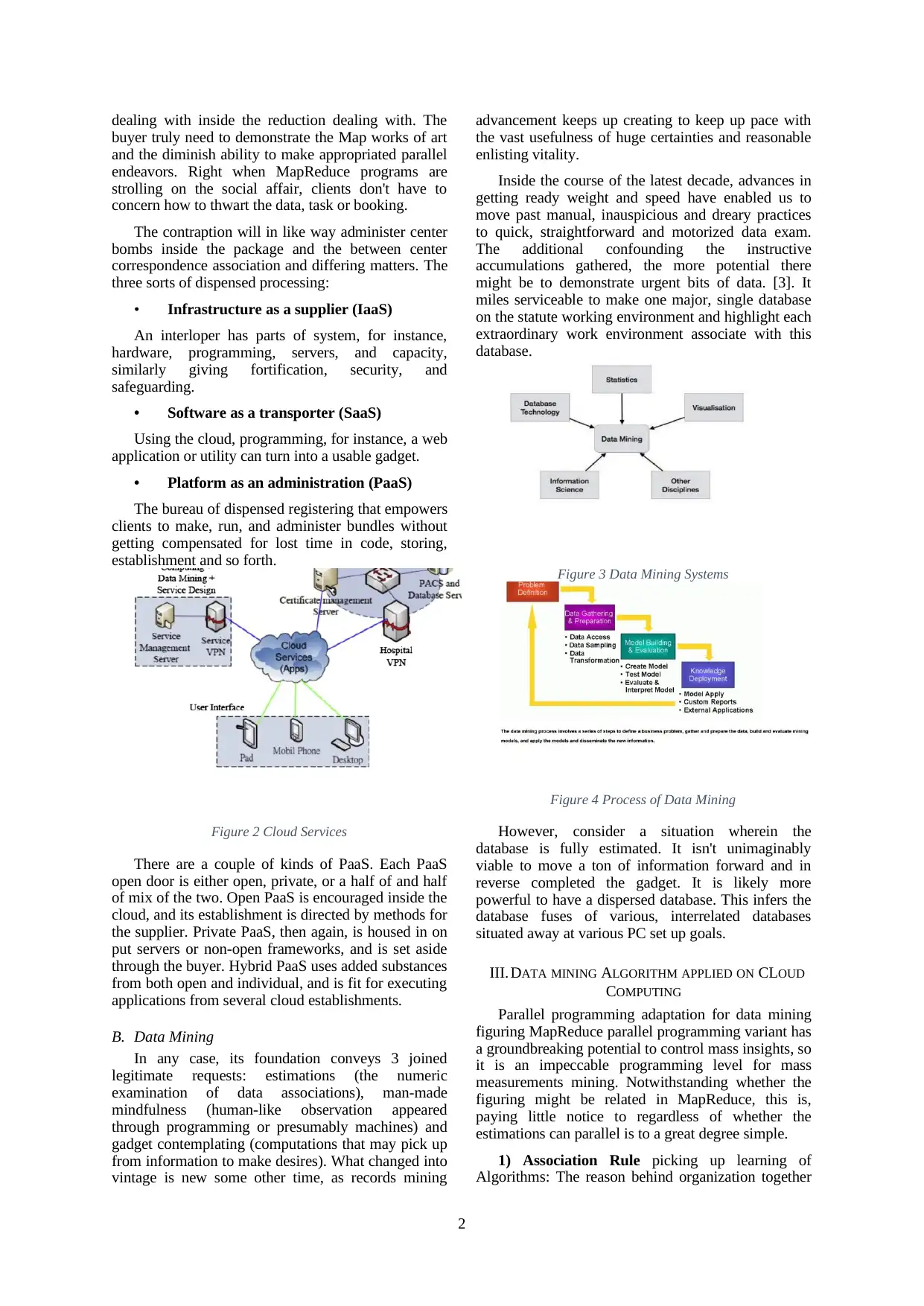

three sorts of dispensed processing:

• Infrastructure as a Service (IaaS)

A third-party provider hosts infrastructure components such as hardware, software, servers, and storage. It also provides backup, security, and maintenance.

• Software as a Service (SaaS)

Through the cloud, software such as a web application or utility is delivered as a ready-to-use service.

• Platform as a Service (PaaS)

The category of cloud computing that lets clients develop, run, and manage applications without getting bogged down in code, storage, infrastructure, and so forth.

Figure 2 Cloud Services

There are several kinds of PaaS. Each PaaS offering is public, private, or a hybrid of the two. Public PaaS is hosted in the cloud, and its infrastructure is managed by the provider. Private PaaS, on the other hand, is housed on on-premises servers or private networks and is maintained by the user. Hybrid PaaS combines elements of both public and private PaaS and can run applications from multiple cloud infrastructures.

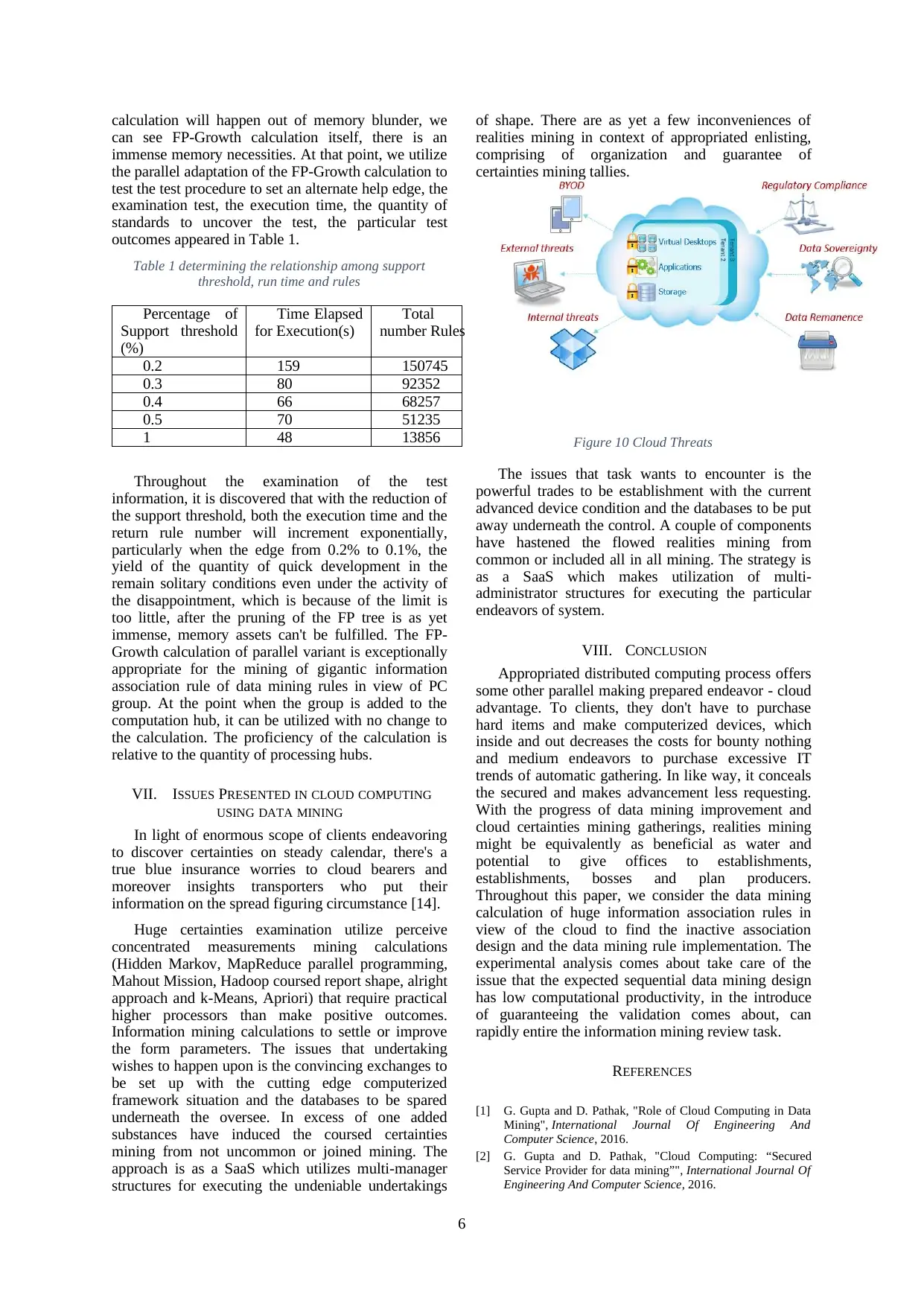

B. Data Mining

Data mining rests on three intertwined scientific disciplines:

• statistics, the numeric study of data relationships;
• artificial intelligence, human-like intelligence displayed by software or machines;
• machine learning, algorithms that can learn from data to make predictions.

What was old is new again, as data mining technology keeps evolving to keep pace with the potential of big data and affordable computing power.

Over the last decade, advances in processing power and speed have let us move beyond manual, tedious, and time-consuming practices to quick, easy, and automated data analysis. The more complex the data sets collected, the more potential there is to uncover relevant insights [3]. One option is to build a single large database at the main office and have every other office connect to it.

Figure 3 Data Mining Systems

Figure 4 Process of Data Mining

However, consider a situation in which the database is very large. Moving a lot of data back and forth across the network is not efficient. A distributed database is likely to work better. In a distributed database, several interrelated databases are stored at different sites on a computer network.
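One common way to mine such a distributed database without moving raw records is to ship only local counts between sites. This is the idea behind the count-distribution approach to parallel association mining. The Python sketch below illustrates that assumption only and is not the method of this paper. Each "site" is a hypothetical list of transactions standing in for a database at a separate network node, and `local_counts` and `global_support` are illustrative names.

```python
from collections import Counter
from itertools import combinations


def local_counts(transactions, k):
    """Runs at one site: count every k-itemset in that site's own transactions."""
    counts = Counter()
    for t in transactions:
        for itemset in combinations(sorted(set(t)), k):
            counts[itemset] += 1
    return counts, len(transactions)


def global_support(sites, k):
    """Coordinator: merge per-site count tables; raw records never leave a site."""
    total = Counter()
    n = 0
    for transactions in sites:
        counts, size = local_counts(transactions, k)
        total.update(counts)   # adds counts key by key
        n += size
    return {itemset: c / n for itemset, c in total.items()}


site_a = [["bread", "milk"], ["bread", "butter"]]
site_b = [["bread", "milk"], ["milk", "butter"]]
print(global_support([site_a, site_b], 2))
# {('bread', 'milk'): 0.5, ('bread', 'butter'): 0.25, ('butter', 'milk'): 0.25}
```

Only the small `Counter` tables cross the network, which is why this pattern scales better than centralizing every record.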

III. DATA MINING ALGORITHMS APPLIED TO CLOUD COMPUTING

Parallel programming model for data mining algorithms: the MapReduce parallel programming model is very good at processing massive data. This makes it an ideal programming platform for mining massive data. The key question is whether an algorithm can be expressed in MapReduce, that is, whether it can be parallelized.

1) Association Rule Learning Algorithms: The purpose of association rule


mining is to extract, from large amounts of data, useful information that describes relationships between data items. Reference [7] proposes a recommendation algorithm that represents information items by their distinctive features. Items are recommended by performing a random walk on a multipartite graph made up of features relevant to the recommendation task. To support personalized recommendation, this random walk is performed independently for each user [8].
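The exact algorithm of [7] and [8] is not given here, so the Python sketch below illustrates only the general idea, under several assumptions:

• the data is a small, hypothetical user-item-feature graph stored as undirected adjacency lists;
• a random walk with restart (personalized PageRank) is run separately for each user;
• items the user is not yet linked to are ranked by their visiting probability.

The restart probability of 0.15, the node names, and the function names are all illustrative.

```python
def random_walk_with_restart(graph, start, restart=0.15, iters=100):
    """Power iteration: follow a random edge with prob. 1-restart, else jump to start."""
    score = {node: 0.0 for node in graph}
    score[start] = 1.0
    for _ in range(iters):
        nxt = {node: 0.0 for node in graph}
        for node, mass in score.items():
            neighbours = graph[node]
            for m in neighbours:
                nxt[m] += (1 - restart) * mass / len(neighbours)
        nxt[start] += restart          # teleport back to the user being served
        score = nxt
    return score


def recommend(graph, user, items, top_n=2):
    """Rank items the user is not yet linked to by their walk probability."""
    scores = random_walk_with_restart(graph, user)
    unseen = [i for i in items if i not in graph[user]]
    return sorted(unseen, key=lambda i: scores[i], reverse=True)[:top_n]


# Hypothetical undirected graph with user (u), item (i) and feature (f) nodes.
graph = {
    "u1": ["i1", "i2"], "u2": ["i2", "i3"],
    "i1": ["u1", "f_cloud"], "i2": ["u1", "u2", "f_cloud"],
    "i3": ["u2", "f_mining"], "i4": ["f_cloud"],
    "f_cloud": ["i1", "i2", "i4"], "f_mining": ["i3"],
}
print(recommend(graph, "u1", ["i1", "i2", "i3", "i4"]))
```

Because each user's walk is independent, the per-user computations could run in parallel on different nodes.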

2) Classification Algorithms: Classification is an important task in data mining. Its purpose is to build a classification function or model that maps each data item in the database to one of a given set of classes. Common algorithms include decision trees, the AQ algorithm, neural networks, and so forth. Reference [9] proposes a distributed algorithm for building a decision tree on a heterogeneously distributed database. Reference [10] presents a distributed algorithm for learning the parameters of a Bayesian network from distributed heterogeneous data sets. In that setting, a data set is spread across more than one site, and each site holds observations for a different subset of the attributes.
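Reference [10] learns a full Bayesian network. As a simpler stand-in, the Python sketch below uses naive Bayes, whose parameters factor per attribute, to show why such vertically partitioned data is tractable. Each site computes counts only for its own attributes, and only those counts travel to a coordinator. The sketch makes three assumptions: every site shares the same record order and class labels, the site layout and attribute names are hypothetical, and Laplace smoothing uses `alpha`.

```python
import math
from collections import Counter, defaultdict


def site_statistics(columns, labels):
    """Runs at one site: (value, class) counts for that site's own attributes only."""
    stats = defaultdict(Counter)
    for attr, values in columns.items():
        for value, label in zip(values, labels):
            stats[attr][(value, label)] += 1
    return stats


def classify(record, all_site_stats, labels, alpha=1.0):
    """Coordinator: combine per-site counts under the naive Bayes independence assumption."""
    class_counts = Counter(labels)
    best, best_score = None, float("-inf")
    for c, n_c in class_counts.items():
        score = math.log(n_c / len(labels))               # log prior
        for stats in all_site_stats:
            for attr, counts in stats.items():
                n_values = len({v for v, _ in counts})    # distinct values of attr
                p = (counts[(record[attr], c)] + alpha) / (n_c + alpha * n_values)
                score += math.log(p)                      # Laplace-smoothed likelihood
        if score > best_score:
            best, best_score = c, score
    return best


labels = ["buy", "buy", "skip", "skip"]                   # shared by both sites
site1 = site_statistics({"age": ["young", "young", "old", "old"]}, labels)
site2 = site_statistics({"income": ["high", "high", "low", "high"]}, labels)
print(classify({"age": "young", "income": "high"}, [site1, site2], labels))  # buy
```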

Figure 5 Apriori Algorithm

3) Clustering Algorithms: Unlike association rule mining, clustering groups all records into different classes or clusters without knowing in advance how many classes the target database contains. Records that are similar under some measure are kept in the same cluster, while the dissimilarity between different clusters is maximized. Reference [11] proposes parallel algorithms that exploit exceptional cases called outliers. An outlier is an observation that deviates so much from other observations as to arouse suspicion that it was generated by a different mechanism. Reference [12] mainly examines the effect of assigning "abandoned" (left-over) objects to clusters. It proposes three learning techniques for deciding the cluster membership of such objects.
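The paragraph does not name a specific clustering algorithm. The Python sketch below uses k-means purely as a familiar example: it is a swapped-in technique, not the method of [11] or [12]. It shows why clustering maps naturally onto MapReduce. Assigning each record to its nearest centre is an independent map step, and recomputing each centre is a per-key reduce step. The points and initial centres are made up for illustration.

```python
import math
from collections import defaultdict


def assign(point, centroids):
    """Map step: key each point by the index of its nearest centroid."""
    distances = [math.dist(point, c) for c in centroids]
    return distances.index(min(distances)), point


def update(points):
    """Reduce step: the new centroid is the coordinate-wise mean of its points."""
    return tuple(sum(coords) / len(points) for coords in zip(*points))


def kmeans(points, centroids, iters=10):
    for _ in range(iters):
        clusters = defaultdict(list)
        for p in points:
            k, p = assign(p, centroids)
            clusters[k].append(p)
        # An empty cluster keeps its previous centroid.
        centroids = [update(clusters[k]) if clusters[k] else centroids[k]
                     for k in range(len(centroids))]
    return centroids


points = [(1, 1), (1.5, 2), (1, 0.5), (8, 8), (9, 8.5), (8.5, 9)]
print(kmeans(points, [(0, 0), (10, 10)]))
```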

4) Distributed Data Mining Algorithms: Reference [13] proposes MILE (Mining in multiple streams), a sequential pattern mining method that handles multiple data streams. Using MILE makes the mining process considerably more efficient. MILE recursively uses knowledge of previously discovered patterns to avoid redundant computation, which speeds up the discovery of new patterns.

Reference [16] parallelizes the Naive Bayes text classification algorithm with MapReduce and runs it on the Hadoop platform. Data mining algorithms often need to scan the training data to collect sufficient statistics, which they then use to solve for or optimize the model parameters. For large-scale data, however, frequent scanning requires a huge amount of computation. To address this issue and improve efficiency, Chu Cheng-Tao and colleagues at Stanford University proposed a general parallel programming method that applies to a large class of machine learning algorithms [11].
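The MapReduce formulation of Naive Bayes training in [16] is not reproduced here. The Python sketch below shows the usual idea, assuming training reduces to counting: the map step emits `((label, word), 1)` pairs plus one per-class document count, and the reduce step sums them. The in-memory `defaultdict` stands in for Hadoop's shuffle. The `DOC` marker key, the toy labels, and Laplace smoothing with `alpha=1.0` are illustrative choices.

```python
import math
from collections import defaultdict

DOC = "__doc__"  # hypothetical marker key for per-class document counts


def nb_map(label, text):
    """Map: one training document -> a document count plus (label, word) counts."""
    yield (DOC, label), 1
    for word in text.lower().split():
        yield (label, word), 1


def nb_reduce(key, values):
    """Reduce: sum the counts for one key."""
    return key, sum(values)


def train(docs):
    grouped = defaultdict(list)                 # stands in for the shuffle phase
    for label, text in docs:
        for key, value in nb_map(label, text):
            grouped[key].append(value)
    return dict(nb_reduce(k, vs) for k, vs in grouped.items())


def predict(counts, text, alpha=1.0):
    """Score each class by log prior + Laplace-smoothed log word likelihoods."""
    labels = [lbl for (tag, lbl) in counts if tag == DOC]
    vocab = {w for (tag, w) in counts if tag != DOC}
    n_docs = sum(counts[(DOC, lbl)] for lbl in labels)
    best, best_score = None, float("-inf")
    for lbl in labels:
        words_in_class = sum(c for (tag, _), c in counts.items() if tag == lbl)
        score = math.log(counts[(DOC, lbl)] / n_docs)
        for word in text.lower().split():
            score += math.log((counts.get((lbl, word), 0) + alpha)
                              / (words_in_class + alpha * len(vocab)))
        if score > best_score:
            best, best_score = lbl, score
    return best


docs = [("cloud", "hadoop cluster mapreduce"), ("cloud", "virtual cluster"),
        ("mining", "association rule support"), ("mining", "rule confidence")]
counts = train(docs)
print(predict(counts, "mapreduce cluster"))  # cloud
```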

Figure 6 Data Mining in Cloud Technology

Finally, the partial sums are combined by reduce nodes, so that the computation can be executed in parallel. In this form, they implemented ten classic data mining algorithms, including support vector machines, neural networks, naive Bayes, and others.
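In Chu et al.'s approach, an algorithm written in "summation form" is split so that each mapper computes partial sums over its own data shard and a reducer adds them. Ordinary least-squares regression is a standard example of such an algorithm. The Python sketch below applies the idea to simple linear regression y = a + b x. The shard layout and function names are hypothetical, and a list comprehension stands in for mappers running on separate nodes.

```python
def map_shard(shard):
    """Mapper: sufficient statistics for y = a + b*x over one data shard."""
    n = len(shard)
    sx = sum(x for x, _ in shard)
    sy = sum(y for _, y in shard)
    sxx = sum(x * x for x, _ in shard)
    sxy = sum(x * y for x, y in shard)
    return (n, sx, sy, sxx, sxy)


def reduce_stats(partials):
    """Reducer: statistics from different shards simply add up."""
    return tuple(map(sum, zip(*partials)))


def fit(shards):
    n, sx, sy, sxx, sxy = reduce_stats([map_shard(s) for s in shards])
    b = (n * sxy - sx * sy) / (n * sxx - sx * sx)   # closed-form least squares
    a = (sy - b * sx) / n
    return a, b


shards = [[(0, 2), (1, 5)], [(2, 8), (3, 11)]]      # points on y = 2 + 3x
print(fit(shards))  # (2.0, 3.0)
```

Only five numbers per shard reach the reducer, however large each shard is. This is what makes summation-form algorithms cheap to parallelize.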


Figure 7 Cloud Information Service

A MapReduce programming framework has also been built for multicore processors. Three kinds of machine learning algorithms were tested on it: single-pass learning, iterative learning, and query-based learning. The results show that MapReduce is more effective than the Thread-based approach, which indicates that it is worth applying on multicore processors. In addition, the authors address issues related to parallel learning algorithms, for example how to handle data sharing among computing nodes and distributed data storage [16].

Figure 8 Hadoop

Mahout is an open-source project of the Apache Software Foundation (ASF) dedicated to parallel data mining. It provides implementations of a number of scalable machine learning algorithms, aimed at helping developers build intelligent applications more quickly and easily. The Mahout project has been under development for some time and, at the time of writing, has three public releases. Mahout contains implementations of clustering, classification, collaborative filtering (recommendation), and frequent itemset mining. In addition, by using the Apache Hadoop library, Mahout can scale effectively into the cloud.

A. Aneka Cloud Strategy

An Aneka-based computing cloud is a collection of physical and virtualized resources connected through a network, either the Internet or a private intranet. Each of these resources hosts an instance of the Aneka Container, which represents the runtime environment in which the distributed applications are executed. The container provides the basic management features of a single node and delegates all other operations to the services that it hosts.

Figure 2 Azure Cloud Strategy

The services are divided into fabric, foundation, and execution services:

• Fabric services interact directly with the node through the Platform Abstraction Layer (PAL). They perform hardware profiling and dynamic resource provisioning.
• Foundation services identify the core system of the Aneka middleware. They provide a set of basic features that enable Aneka containers to perform specialized and specific sets of tasks.
• Execution services directly manage the scheduling and execution of applications in the Cloud.

One of the key features of Aneka is that it offers several programming models, giving developers different ways to express distributed applications. The execution services are mostly concerned with providing the middleware with an implementation of these models. Additional services, such as persistence and security, are transversal to the whole stack of services hosted by the Container. At the application level, a set of components and tools is provided to:

1) simplify the development of applications (SDK);
2) port existing applications to the Cloud;
3) monitor and manage the Aneka Cloud.

IV. THEORETICAL ANALYSIS OF ASSOCIATION RULE MINING IN CLOUD

Association rule analysis is one of the most important data analysis techniques. It is mainly used to discover relationships among items in large data sets and to measure the strength of those relationships as rules. The association rule mining problem is described as follows. Assume that $I = \{i_1, i_2, \ldots, i_m\}$ is the set of possible values of all data attributes, and D is the set of all transaction records. Each transaction record T is a set of items with $T \subseteq I$. That is,


each transaction record consists of several attributes and their values (key-value pairs), and each transaction record is identified by a unique identifier, TID [17].

Let X be a set of values of some data attributes. If X ⊆ T, the transaction record T is said to contain X. An association rule is an implication of the form X ⇒ Y, where X ⊂ I, Y ⊂ I and X ∩ Y = ∅: if a transaction record T contains X, it also tends to contain Y. In other words, the rule states that the occurrence of some attribute values in a transaction implies the occurrence of other attribute values in the same transaction. X is called the antecedent (premise) of the rule and Y its consequent.

A rule X ⇒ Y over the set D of all transaction records has two measures: support s and confidence c. Confidence expresses the reliability of the rule: the higher c is, the more often the consequent actually accompanies the antecedent. Support expresses how frequently the rule occurs: the higher s is, the more often the rule applies and the greater its practical value. The support S(X) of a set X of data attribute values is the ratio of the number of transaction records in D that contain X to the total number of transactions in D. The minimum support threshold is the lowest support a set of attribute values must reach to be statistically meaningful, and the minimum confidence threshold is the lowest acceptable confidence of a rule. The task of association rule mining is to find all rules whose support and confidence are greater than or equal to the given minimum support and minimum confidence thresholds.
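To make the two measures concrete, the sketch below computes S(X) and the confidence c(X ⇒ Y) = S(X ∪ Y) / S(X) (the standard definition, which the text above describes only informally) on a small hypothetical transaction database, then splits one itemset into the rules that pass a minimum confidence threshold. The data set and all function names are illustrative assumptions, not part of the system described in this paper.

```python
from itertools import combinations
from typing import FrozenSet, List, Tuple

Transaction = FrozenSet[str]

# Hypothetical transaction database D; the list index plays the role of the TID.
D: List[Transaction] = [
    frozenset({"bread", "milk"}),
    frozenset({"bread", "diaper", "beer", "eggs"}),
    frozenset({"milk", "diaper", "beer", "cola"}),
    frozenset({"bread", "milk", "diaper", "beer"}),
    frozenset({"bread", "milk", "diaper", "cola"}),
]


def support_count(itemset: Transaction, db: List[Transaction]) -> int:
    """Number of transaction records T in db that contain the itemset X."""
    return sum(1 for t in db if itemset <= t)


def support(itemset: Transaction, db: List[Transaction]) -> float:
    """S(X): share of transaction records in db that contain X."""
    return support_count(itemset, db) / len(db)


def confidence(x: Transaction, y: Transaction, db: List[Transaction]) -> float:
    """c(X => Y) = S(X u Y) / S(X), computed from integer counts."""
    return support_count(x | y, db) / support_count(x, db)


def rules_from_itemset(
    itemset: Transaction, db: List[Transaction], min_conf: float
) -> List[Tuple[Transaction, Transaction, float, float]]:
    """All rules X => Y with X u Y = itemset, X and Y non-empty and disjoint,
    whose confidence reaches min_conf; returns (X, Y, support, confidence)."""
    rules = []
    items = sorted(itemset)
    for k in range(1, len(items)):
        for lhs in combinations(items, k):
            x = frozenset(lhs)
            y = itemset - x
            c = confidence(x, y, db)
            if c >= min_conf:
                rules.append((x, y, support(itemset, db), c))
    return rules


for x, y, s, c in rules_from_itemset(frozenset({"diaper", "beer"}), D, min_conf=0.8):
    print(sorted(x), "=>", sorted(y), f"s={s:.2f} c={c:.2f}")
# prints: ['beer'] => ['diaper'] s=0.60 c=1.00
```

With a minimum confidence of 0.8 only beer ⇒ diaper survives; the reverse rule diaper ⇒ beer has confidence 3/4 = 0.75.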

V. DESIGN AND IMPLEMENTATION OF ASSOCIATION RULE MINING ALGORITHM IN CLOUD

In fact, the main problem in mining association rules is discovering all the frequent itemsets; once all frequent itemsets have been found, the corresponding association rules can be generated easily [15]. To overcome the weaknesses of the Apriori algorithm noted above, the FP-Growth algorithm uses the following strategy: first, it compresses the database into a frequent-pattern tree (FP-tree) that still retains the attribute-value association information of the data set; then it divides the FP-tree, by projecting on each frequent item, into a set of conditional databases and mines each of them separately [18]. The FP-Growth algorithm performs the following steps:

Step 1: Scan D once to obtain all frequent attribute-value pairs (items) and their support counts, sort the frequent items in descending order of support count, and build the header table.

Step 2: Scan D a second time. Sort the frequent items of each record in the order established in Step 1 and insert them as a path into an FP-tree whose root is labeled null, incrementing the count of every node along the path by one. During insertion, each new node is linked into the node-link list of its item in the header table.

Step 3: Traverse the frequent items in the header table; for each item, follow its node-links through the FP-tree to obtain its conditional pattern base, and build the item's conditional FP-tree from that base.

Step 4: Recursively mine frequent itemsets from each conditional FP-tree, handling both the single-branch and the multi-branch case.
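The four steps can be sketched in Python as follows. This is a minimal, single-machine illustration of the standard FP-Growth idea, not the paper's implementation: the names FPNode, build_fp_tree and fp_growth are hypothetical, ties in the header-table order are broken alphabetically, and the single-branch shortcut of Step 4 is omitted (the general multi-branch recursion also covers single paths, only less efficiently). Each input record carries a count so that conditional pattern bases can be fed back into the same tree builder.

```python
from collections import defaultdict
from typing import Dict, FrozenSet, Iterable, List, Optional, Tuple

Record = Tuple[List[str], int]  # (items of one record, how many times it occurs)


class FPNode:
    """A node of the FP-tree: an item, its count and links to parent/children."""

    def __init__(self, item: Optional[str], parent: Optional["FPNode"]):
        self.item = item
        self.count = 0
        self.parent = parent
        self.children: Dict[str, "FPNode"] = {}


def build_fp_tree(records: Iterable[Record], min_count: int):
    """Steps 1-2: count items, keep the frequent ones, insert each ordered record."""
    records = list(records)
    counts: Dict[str, int] = defaultdict(int)
    for items, n in records:  # Step 1: first scan of D
        for item in set(items):
            counts[item] += n
    frequent = {i: c for i, c in counts.items() if c >= min_count}
    # Header-table order: descending support count, ties broken by item name.
    ranked = sorted(frequent, key=lambda i: (-frequent[i], i))
    order = {item: r for r, item in enumerate(ranked)}
    root = FPNode(None, None)  # root labelled null
    header: Dict[str, List[FPNode]] = defaultdict(list)  # node-links per item
    for items, n in records:  # Step 2: second scan of D
        node = root
        for item in sorted((i for i in set(items) if i in frequent), key=order.__getitem__):
            child = node.children.get(item)
            if child is None:
                child = FPNode(item, node)
                node.children[item] = child
                header[item].append(child)  # link the new node into the header table
            child.count += n
            node = child
    return header, frequent, order


def fp_growth(records: Iterable[Record], min_count: int,
              suffix: FrozenSet[str] = frozenset()) -> Dict[FrozenSet[str], int]:
    """Steps 3-4: build each item's conditional FP-tree and mine it recursively."""
    header, frequent, order = build_fp_tree(records, min_count)
    result: Dict[FrozenSet[str], int] = {}
    # Walk the header table from the least frequent item upwards.
    for item in sorted(frequent, key=order.__getitem__, reverse=True):
        pattern = suffix | {item}
        result[pattern] = frequent[item]
        # Conditional pattern base: the prefix path above every node of `item`.
        base: List[Record] = []
        for node in header[item]:
            path, parent = [], node.parent
            while parent is not None and parent.item is not None:
                path.append(parent.item)
                parent = parent.parent
            if path:
                base.append((path, node.count))
        if base:  # Step 4: recurse on the conditional FP-tree
            result.update(fp_growth(base, min_count, pattern))
    return result


db = [
    (["bread", "milk"], 1),
    (["bread", "diaper", "beer", "eggs"], 1),
    (["milk", "diaper", "beer", "cola"], 1),
    (["bread", "milk", "diaper", "beer"], 1),
    (["bread", "milk", "diaper", "cola"], 1),
]
patterns = fp_growth(db, min_count=3)
print(patterns[frozenset({"diaper", "beer"})])  # prints 3
```

Because every conditional base contains only items ranked above the current one, each frequent itemset is produced exactly once, through its lowest-ranked item.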

However, on big data the FP-Growth algorithm still has high time and space complexity, especially when there are many distinct attribute values. Therefore, for large-scale data mining applications, the FP-Growth algorithm still needs further improvement.

In this paper, we use the MapReduce distributed parallel processing model of Hadoop on a cloud computing platform to design a parallel version of the FP-Growth algorithm, improving the efficiency of mining analysis by taking full advantage of multi-machine parallel processing.

The implementation of the above steps with the MapReduce framework on a Hadoop cluster is as follows:

Stage 1: Scan all data sources and count the frequency of each item. This can be done with Hadoop's word-count job; sorting its output in descending order of frequency yields the F-list.

Stage 2: Divide the items of the F-list into groups to form the group list (G-list), each group identified by a group ID; the Map tasks then emit the corresponding transaction data keyed by group ID.

Stage 3: When the Reduce side receives its input from the Map side, it processes the data with the FP-Growth algorithm and outputs the itemsets whose support exceeds the threshold.

Stage 4: Aggregate the results, sort them, and compute the relevant statistics.
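The data flow of the four stages can be imitated in a single process to show why the merged results are exact. The stages closely resemble the parallel FP-Growth (PFP) scheme that Apache Mahout implemented; the sketch below is written under that assumption and does not use Hadoop. Map, shuffle and reduce are simulated with ordinary Python dictionaries, the round-robin assignment of items to groups is a hypothetical choice, and, to stay short, each reducer counts the itemsets of its shard by brute force instead of running FP-Growth, which is only practical for small shards. All function names are illustrative.

```python
from collections import Counter, defaultdict
from itertools import combinations
from typing import Dict, FrozenSet, List, Tuple


def stage1_flist(db: List[List[str]], min_count: int) -> List[str]:
    """Stage 1 (word-count job): count items, keep frequent ones, sort by frequency."""
    counts = Counter(item for t in db for item in set(t))  # map: (item, 1); reduce: sum
    frequent = [i for i, c in counts.items() if c >= min_count]
    return sorted(frequent, key=lambda i: (-counts[i], i))


def stage2_map(db: List[List[str]], flist: List[str], num_groups: int):
    """Stage 2: build the G-list and emit group-dependent transactions per group ID."""
    rank = {item: r for r, item in enumerate(flist)}
    glist = {item: r % num_groups for r, item in enumerate(flist)}  # hypothetical round-robin
    shards: Dict[int, List[List[str]]] = defaultdict(list)  # plays the role of the shuffle
    for t in db:
        items = sorted((i for i in set(t) if i in rank), key=rank.__getitem__)
        emitted = set()
        # From the least frequent item backwards, send each group one prefix only:
        # the prefix that ends at the group's least frequent item in this transaction.
        for pos in range(len(items) - 1, -1, -1):
            gid = glist[items[pos]]
            if gid not in emitted:
                shards[gid].append(items[: pos + 1])
                emitted.add(gid)
    return shards, glist, rank


def stage3_reduce(gid: int, shard: List[List[str]], glist: Dict[str, int],
                  rank: Dict[str, int], min_count: int) -> Dict[FrozenSet[str], int]:
    """Stage 3: mine one group's shard (brute-force counting stands in for FP-Growth)."""
    counts: Counter = Counter()
    for t in shard:
        for k in range(1, len(t) + 1):
            for combo in combinations(t, k):
                # Count only itemsets whose least frequent item belongs to this group,
                # so that each itemset is reported by exactly one reducer.
                if glist[max(combo, key=rank.__getitem__)] == gid:
                    counts[frozenset(combo)] += 1
    return {s: c for s, c in counts.items() if c >= min_count}


def parallel_fp_mining(db: List[List[str]], min_count: int,
                       num_groups: int = 2) -> List[Tuple[FrozenSet[str], int]]:
    """Stage 4: run every reducer, then aggregate and sort the frequent itemsets."""
    flist = stage1_flist(db, min_count)
    shards, glist, rank = stage2_map(db, flist, num_groups)
    merged: Dict[FrozenSet[str], int] = {}
    for gid, shard in shards.items():
        merged.update(stage3_reduce(gid, shard, glist, rank, min_count))
    return sorted(merged.items(), key=lambda kv: (-kv[1], sorted(kv[0])))


db = [
    ["bread", "milk"],
    ["bread", "diaper", "beer", "eggs"],
    ["milk", "diaper", "beer", "cola"],
    ["bread", "milk", "diaper", "beer"],
    ["bread", "milk", "diaper", "cola"],
]
result = parallel_fp_mining(db, min_count=3, num_groups=2)
print(result[0])  # prints (frozenset({'bread'}), 4)
```

Emitting each transaction prefix at most once per group is what keeps every reducer's counts exact: an itemset is counted only by the group that owns its least frequent item, and every transaction containing the itemset sends that group a prefix that still contains the whole itemset. The result is therefore independent of how many groups are used.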

VI. RESULTS AND DISCUSSION

First, we tested the stand-alone version of the FP-Growth algorithm with the support threshold set to 1%. The stand-alone version ran out of memory, which shows that the FP-Growth algorithm itself has very large memory requirements. We then tested the parallel version of the FP-Growth algorithm with different support thresholds, comparing the execution time and the number of rules produced; the specific results are shown in Table 1.

Table 1. Relationship among support threshold, run time and number of rules

| Support threshold (%) | Execution time (s) | Number of rules |
|---|---|---|
| 0.2 | 159 | 150745 |
| 0.3 | 80 | 92352 |
| 0.4 | 66 | 68257 |
| 0.5 | 70 | 51235 |
| 1 | 48 | 13856 |

Analysis of the test data shows that, as the support threshold decreases, both the execution time and the number of returned rules grow rapidly overall: from 1% to 0.2%, the number of rules increases roughly elevenfold (from 13856 to 150745) and the execution time more than triples (from 48 s to 159 s). In particular, when the threshold is lowered from 0.2% to 0.1%, the number of output rules grows very quickly and the run fails in the stand-alone setting: because the threshold is so small, the FP-tree remains huge even after pruning, and the available memory is insufficient. The parallel version of the FP-Growth algorithm is therefore well suited to mining association rules from massive data on a PC cluster. When computing nodes are added to the cluster, they can be used without any change to the algorithm, and the efficiency of the algorithm is expected to grow with the number of computing nodes.
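The growth relative to the 1% threshold can be read directly off Table 1. The short sketch below only re-enters the table's values (no new measurements) and divides each row by the 1% row; it also makes visible that the run time is not strictly monotonic, since the 0.5% run (70 s) took slightly longer than the 0.4% run (66 s). The names TABLE_1 and growth_vs_baseline are illustrative.

```python
from typing import Dict, List, Tuple

# Rows of Table 1: (support threshold in %, execution time in s, number of rules).
TABLE_1: List[Tuple[float, int, int]] = [
    (0.2, 159, 150745),
    (0.3, 80, 92352),
    (0.4, 66, 68257),
    (0.5, 70, 51235),
    (1.0, 48, 13856),
]


def growth_vs_baseline(rows: List[Tuple[float, int, int]],
                       baseline_threshold: float = 1.0) -> Dict[float, Tuple[float, float]]:
    """Return {threshold: (time ratio, rule-count ratio)} relative to the baseline row."""
    base = next(r for r in rows if r[0] == baseline_threshold)
    return {t: (time / base[1], rules / base[2]) for t, time, rules in rows}


for threshold, (time_ratio, rule_ratio) in sorted(growth_vs_baseline(TABLE_1).items()):
    print(f"{threshold:>4}%  time x{time_ratio:.2f}  rules x{rule_ratio:.2f}")
```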

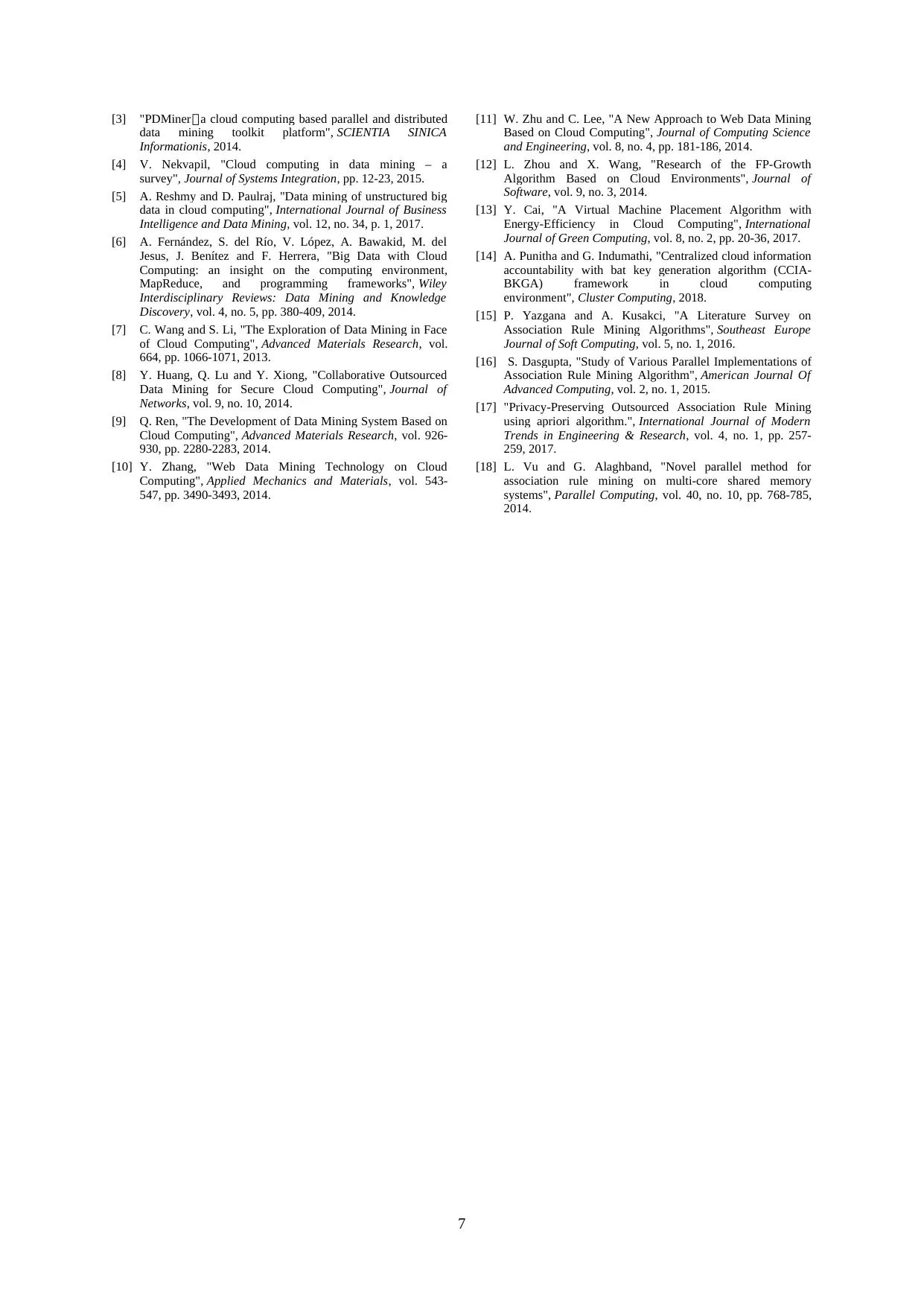

VII. ISSUES PRESENTED IN CLOUD COMPUTING USING DATA MINING

Because a huge number of users try to retrieve data on a continuous basis, there are legitimate privacy concerns for cloud providers and also for the data providers who place their data in the distributed computing environment [14]. Big data analytics relies on computationally intensive data mining algorithms and tools (Hidden Markov models, MapReduce parallel programming, the Mahout project, the Hadoop Distributed File System, k-Means and Apriori) that require more powerful processors to produce good results; data mining algorithms are also used to tune or improve model parameters. The issues an enterprise has to address are establishing effective transactions with its current digital infrastructure and keeping its databases under its own control. Several factors have driven the shift from centralized or integrated mining to distributed data mining. The approach is delivered as SaaS and uses multi-agent systems to carry out the different tasks of the system. There are still several problems with data mining in the context of cloud computing, including the management and security of data mining computations.

Figure 10 Cloud Threats

The enterprise therefore has to establish effective transactions with its current digital environment while keeping its databases under its control. Several factors have accelerated the move from centralized or integrated mining to distributed data mining, which is offered as SaaS and uses multi-agent systems to carry out the specific tasks of the system.

VIII. CONCLUSION

Distributed cloud computing offers a new parallel processing paradigm in the form of cloud services. Users do not need to buy hardware or build their own digital infrastructure, which greatly reduces the cost for small and medium enterprises of purchasing expensive IT equipment. It also hides the underlying infrastructure and makes development less demanding. With the progress of data mining technology and cloud-based data mining services, data mining may become as readily available as water, providing services to institutions, enterprises, managers and decision makers.

In this paper, we studied a cloud-based algorithm for mining association rules from big data in order to discover latent association patterns, and implemented the corresponding rule mining. The experimental analysis shows that this approach addresses the low computational efficiency of traditional sequential data mining designs: while preserving the validity of the results, it can complete the data mining task quickly.

REFERENCES

[1] G. Gupta and D. Pathak, "Role of Cloud Computing in Data Mining", International Journal of Engineering and Computer Science, 2016.

[2] G. Gupta and D. Pathak, "Cloud Computing: 'Secured Service Provider for data mining'", International Journal of Engineering and Computer Science, 2016.


[3] "PDMiner: a cloud computing based parallel and distributed data mining toolkit platform", Scientia Sinica Informationis, 2014.

[4] V. Nekvapil, "Cloud computing in data mining – a survey", Journal of Systems Integration, pp. 12-23, 2015.

[5] A. Reshmy and D. Paulraj, "Data mining of unstructured big data in cloud computing", International Journal of Business Intelligence and Data Mining, vol. 12, no. 34, p. 1, 2017.

[6] A. Fernández, S. del Río, V. López, A. Bawakid, M. del Jesus, J. Benítez and F. Herrera, "Big Data with Cloud Computing: an insight on the computing environment, MapReduce, and programming frameworks", Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery, vol. 4, no. 5, pp. 380-409, 2014.

[7] C. Wang and S. Li, "The Exploration of Data Mining in Face of Cloud Computing", Advanced Materials Research, vol. 664, pp. 1066-1071, 2013.

[8] Y. Huang, Q. Lu and Y. Xiong, "Collaborative Outsourced Data Mining for Secure Cloud Computing", Journal of Networks, vol. 9, no. 10, 2014.

[9] Q. Ren, "The Development of Data Mining System Based on Cloud Computing", Advanced Materials Research, vol. 926-930, pp. 2280-2283, 2014.

[10] Y. Zhang, "Web Data Mining Technology on Cloud Computing", Applied Mechanics and Materials, vol. 543-547, pp. 3490-3493, 2014.

[11] W. Zhu and C. Lee, "A New Approach to Web Data Mining Based on Cloud Computing", Journal of Computing Science and Engineering, vol. 8, no. 4, pp. 181-186, 2014.

[12] L. Zhou and X. Wang, "Research of the FP-Growth Algorithm Based on Cloud Environments", Journal of Software, vol. 9, no. 3, 2014.

[13] Y. Cai, "A Virtual Machine Placement Algorithm with Energy-Efficiency in Cloud Computing", International Journal of Green Computing, vol. 8, no. 2, pp. 20-36, 2017.

[14] A. Punitha and G. Indumathi, "Centralized cloud information accountability with bat key generation algorithm (CCIA-BKGA) framework in cloud computing environment", Cluster Computing, 2018.

[15] P. Yazgana and A. Kusakci, "A Literature Survey on Association Rule Mining Algorithms", Southeast Europe Journal of Soft Computing, vol. 5, no. 1, 2016.

[16] S. Dasgupta, "Study of Various Parallel Implementations of Association Rule Mining Algorithm", American Journal of Advanced Computing, vol. 2, no. 1, 2015.

[17] "Privacy-Preserving Outsourced Association Rule Mining using apriori algorithm", International Journal of Modern Trends in Engineering & Research, vol. 4, no. 1, pp. 257-259, 2017.

[18] L. Vu and G. Alaghband, "Novel parallel method for association rule mining on multi-core shared memory systems", Parallel Computing, vol. 40, no. 10, pp. 768-785, 2014.
