Data Analysis and Mining Assignment

Added on 2020/07/22




Task 1 Data Management Architectures

Task 1.1

A data warehouse

Data warehousing is the process of constructing and using a data warehouse. A data warehouse is built by integrating data from multiple heterogeneous sources, and it supports analytical reporting, structured and/or ad hoc queries, and decision making. Data warehousing involves data cleaning, data integration, and data consolidation.

Using Data Warehouse Information

Decision support technologies help users make use of the data available in a data warehouse. These technologies help executives use the warehouse quickly and effectively: they can gather data, analyze it, and make decisions based on the information in the warehouse. The information collected in a warehouse can be used in any of the following domains:

o Tuning Product Strategies - Product strategies can be fine-tuned by repositioning products and managing product portfolios based on quarterly or yearly sales comparisons.

o Customer Analysis - Customer analysis is done by analyzing customers' buying preferences, buying times, budget cycles, etc.

o Operations Analysis - Data warehousing also helps in customer relationship management and in making environmental corrections. The information also allows us to analyze business operations.

Integrating Heterogeneous Databases

To integrate heterogeneous databases, there are two approaches:

o Query-driven Approach

o Update-driven Approach

Query-Driven Approach

This is the traditional approach to integrating heterogeneous databases. It builds wrappers and integrators on top of multiple heterogeneous databases. These integrators are also known as mediators.

Procedure for Query-Driven Approach

o When a query is issued on the client side, a metadata dictionary translates the query into an appropriate form for each of the heterogeneous sites involved.

o These queries are then mapped and sent to the local query processors.


o The results from the heterogeneous sites are integrated into a global answer set.
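The three steps of the query-driven approach can be sketched as a small mediator. This is a minimal sketch under stated assumptions: the two in-memory "sites", their field names, and the translation rules in the hypothetical `METADATA` dictionary are stand-ins for real heterogeneous databases and a real metadata dictionary.

```python
from typing import Dict, List

Record = Dict[str, object]

# Two hypothetical heterogeneous sites that store the same facts differently.
SITE_A = [{"cust_name": "Ann", "amt": 120}, {"cust_name": "Bob", "amt": 40}]
SITE_B = [{"client": "Cho", "total_cents": 9900}]

# Hypothetical metadata dictionary: for each site, how to express the global
# query "customers with amount >= x" locally, and how to map a local row back
# to the global schema {"customer", "amount"}.
METADATA = {
    "A": {
        "rows": SITE_A,
        "predicate": lambda row, x: row["amt"] >= x,
        "to_global": lambda row: {"customer": row["cust_name"], "amount": row["amt"]},
    },
    "B": {
        "rows": SITE_B,
        "predicate": lambda row, x: row["total_cents"] >= x * 100,
        "to_global": lambda row: {"customer": row["client"], "amount": row["total_cents"] / 100},
    },
}


def local_query_processor(rows, predicate, x):
    """Run the translated query at one site and return the matching local rows."""
    return [row for row in rows if predicate(row, x)]


def mediator(min_amount: float) -> List[Record]:
    """Translate the global query per site, run it locally, merge the results."""
    answer: List[Record] = []
    for site in METADATA.values():
        matches = local_query_processor(site["rows"], site["predicate"], min_amount)
        answer.extend(site["to_global"](row) for row in matches)
    return answer


print(mediator(50))
# [{'customer': 'Ann', 'amount': 120}, {'customer': 'Cho', 'amount': 99.0}]
```

Every query pays the translation and remote-execution cost at query time, which is the main contrast with the update-driven approach.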

Update-Driven Approach

This is an alternative to the traditional approach. Today's data warehouse systems follow the update-driven approach rather than the traditional approach discussed earlier. In the update-driven approach, the information from multiple heterogeneous sources is integrated in advance and stored in a warehouse. This information is then available for direct querying and analysis.

Benefits:

o This approach provides high performance.

o The data is copied, processed, integrated, annotated, summarized and restructured in a semantic data store in advance.

o Query processing does not require an interface to process data at the local sources.

Functions of Data Warehouse Tools and Utilities

The following are the functions of data warehouse tools and utilities:

o Data Extraction - Involves gathering data from multiple heterogeneous sources.

o Data Cleaning - Involves finding and correcting errors in the data.

o Data Transformation - Involves converting the data from legacy format to warehouse format.

o Data Loading - Involves sorting, summarizing, consolidating, checking integrity, and building indices and partitions.

o Refreshing - Involves updating the warehouse from the data sources.
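In the update-driven approach, these functions run before any query arrives. The sketch below chains extraction, cleaning, transformation, loading and refreshing in Python under stated assumptions: two hypothetical sources with string-typed amounts, illustrative cleaning and transformation rules, and a plain dictionary standing in for the warehouse.

```python
from collections import defaultdict

# Hypothetical legacy sources: amounts stored as strings, some rows dirty.
LEGACY_SALES = [
    {"region": " north ", "amount": "100.0"},
    {"region": "South", "amount": "n/a"},  # unparseable amount
    {"region": "south", "amount": "250.5"},
]
WEB_SALES = [{"region": "NORTH", "amount": "49.5"}]


def extract(*sources):
    """Extraction: gather (copies of) rows from several heterogeneous sources."""
    return [dict(row) for source in sources for row in source]


def clean(rows):
    """Cleaning: drop rows whose amount cannot be parsed as a number."""
    cleaned = []
    for row in rows:
        try:
            row["amount"] = float(row["amount"])
        except ValueError:
            continue
        cleaned.append(row)
    return cleaned


def transform(rows):
    """Transformation: normalise legacy region labels to the warehouse format."""
    return [{**row, "region": row["region"].strip().title()} for row in rows]


def load(rows, warehouse):
    """Loading: check integrity, then summarise amounts by region."""
    totals = defaultdict(float)
    for row in rows:
        if row["amount"] < 0:
            raise ValueError(f"integrity check failed: {row}")
        totals[row["region"]] += row["amount"]
    warehouse.update(totals)
    return warehouse


def refresh(warehouse, *sources):
    """Refreshing: rebuild the warehouse from the current source data."""
    warehouse.clear()
    return load(transform(clean(extract(*sources))), warehouse)


warehouse = {}
refresh(warehouse, LEGACY_SALES, WEB_SALES)
print(warehouse)  # {'North': 149.5, 'South': 250.5}
```

Queries then read the pre-integrated `warehouse` directly, without touching the sources.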

A data lake

A data lake is a new and increasingly popular way to store and analyze data that addresses many of the limitations of traditional data stores. A data lake allows an organization to store all of its data, structured and unstructured, in one centralized repository. Because data can be stored as-is, there is no need to convert it to a predefined schema, and you no longer need to know in advance exactly what questions you want to ask of the data.

A data lake should support the following capabilities:

o Collecting and storing any type of data, at any scale and at low cost

o Securing and protecting all of the data stored in the central repository

o Searching for and finding the relevant data in the central repository

o Quickly and easily performing new types of data analysis on datasets

o Querying the data by defining its structure at the time of use (schema on read)
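Schema on read can be illustrated in a few lines: raw records are stored as-is, and a structure is imposed only when a query runs. This is a minimal sketch, assuming JSON-lines records and a hypothetical `read_with_schema` helper; it is not how any particular data lake product implements the idea.

```python
import json

# Raw data stored as-is in the lake: records need not share one schema.
RAW_LINES = [
    '{"sensor": "t1", "temp": "21.5", "ts": "2020-01-01"}',
    '{"sensor": "t2", "temp": "19.0"}',
    '{"user": "ann", "post": "hello"}',
]


def read_with_schema(lines, schema):
    """Apply a schema at query time: keep matching records and cast fields.

    `schema` maps field name -> type. Records missing any field are skipped,
    so the same raw data can later be read with a different schema.
    """
    for line in lines:
        record = json.loads(line)
        if all(field in record for field in schema):
            yield {field: cast(record[field]) for field, cast in schema.items()}


# One query's view of the data: sensor readings with numeric temperatures.
readings = list(read_with_schema(RAW_LINES, {"sensor": str, "temp": float}))
print(readings)
# [{'sensor': 't1', 'temp': 21.5}, {'sensor': 't2', 'temp': 19.0}]
```

A different query could read the same `RAW_LINES` with `{"user": str, "post": str}` without any change to what is stored.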

Furthermore, a data lake is not intended to replace your existing data warehouses, but rather to complement them. If you are already using a data warehouse, or are looking to implement one, a data lake can be used as a source for both structured and


unstructured data, which can easily be converted into a well-defined schema before it is ingested into your data warehouse.

Task 1.2 How a data warehouse, a data lake and a data mart would be used in an organization

The benefits of a data warehouse for an organization include:

• Potential high returns on investment

Implementing a data warehouse requires a large investment, typically from Rs 10 lakh to Rs 50 lakh. However, a 1996 study by International Data Corporation (IDC) reported that the average three-year return on investment (ROI) in data warehousing reached 401%.

• Competitive advantage

The huge returns on investment for companies that have successfully implemented a data warehouse are evidence of the enormous competitive advantage that accompanies this technology. The competitive advantage is gained by giving decision-makers access to data that can reveal previously unavailable, unknown, and untapped information about, for example, customers, trends, and demands.

• Increased efficiency of corporate decision-makers

Data warehousing improves the efficiency of corporate decision-makers by creating an integrated database of consistent, subject-oriented, historical data. It integrates data from multiple incompatible systems into a form that provides one consistent view of the organization. By transforming data into meaningful information, a data warehouse allows business managers to perform more substantive, accurate, and consistent analysis.

• More cost-effective decision-making

Data warehousing helps to reduce the overall cost of the product by reducing the number of channels.

• Better enterprise intelligence

• Enhanced customer service

A data mart

A data mart is a subset of the data in an enterprise data warehouse whose scope is limited to a specific business unit or group of users.

Data marts provide a long-range view of data within a given subject area, such as sales or finance. Data marts offer the same benefits as a data warehouse, but with limited scope and size.


Data marts are used by a variety of business users. A data mart is generally justified because it would be too time-consuming to collect the information users require directly from the source database.

A data mart gives users direct access to specific information about the performance of their business unit. It is a cost-effective alternative to a data warehouse, which could take many months to build. Because a data mart is designed specifically for the needs of its users, it is simple to use and can therefore speed up business processes.

A data lake

With a data lake, by contrast, you can put all kinds of data into a single repository without worrying about schemas that define the integration points between different data sets.

Ability to handle streaming data

Today's data world is a streaming world. Streaming has evolved from rare use cases, such as sensor data from the IoT and stock market data, to common everyday data, such as social media.

Fitting the task to the tool

When you store data in an enterprise data warehouse (EDW), it works well for certain kinds of analytics. But when you are using Spark, MapReduce, or other new models, preparing data for analysis in an EDW can take more time than performing the actual analytics. In a data lake, data can be processed efficiently by these new-paradigm tools without excessive preparation work.

Integrating data involves fewer steps because data lakes do not impose a rigid metadata schema. Schema-on-read allows users to build a custom schema into their queries at query execution time.

Easier accessibility

Data lakes also solve the challenge of data accessibility and integration that plagues EDWs. Using big data Hadoop infrastructures, you can bring together ever-larger data volumes for analytics, or simply store them for some as-yet-undetermined future use. Rather than imposing a monolithic view of a single enterprise-wide data model, the data lake allows you to defer modeling until you actually use the data, which creates opportunities for better operational insights and data discovery. This advantage only grows as data volume, variety, and metadata richness increase.

Reduced costs

Because of economies of scale, some Hadoop users claim they pay less than $1,000 per terabyte for a Hadoop cluster. Although numbers can vary, business users understand that


because it is no longer excessively costly to store all their data, they can keep copies of everything by simply dumping it into Hadoop, to be discovered and analyzed later.

Scalability

Big data is typically defined as the intersection of volume, variety, and velocity. EDWs are notorious for not being able to scale beyond a certain volume because of architectural limitations. Data processing takes so long that organizations are prevented from exploiting all their data to its fullest extent. Using Hadoop, petabyte-scale data lakes are cost-efficient and relatively simple to build and maintain at whatever scale is desired.

A data mart is used for the following purposes:

o To partition data in order to impose access control strategies.

o To speed up queries by reducing the volume of data to be scanned.

o To segment data across different hardware platforms.

o To structure data in a form suitable for a user access tool.

Cost-effective Data Marting

Follow the steps given below to make data marting cost-effective:

o Identify the functional splits

o Identify user access tool requirements

o Identify access control issues

Consider a retail organization in which each merchant is responsible for maximizing the sales of a group of products. For this, the following information is valuable:

o sales transactions on a daily basis

o sales forecasts on a weekly basis

o stock position on a daily basis

o stock movements on a daily basis

As a merchant is not interested in products they are not dealing with, the data mart is the subset of the data dealing with the product group of interest. The following diagram shows data marting for different users.


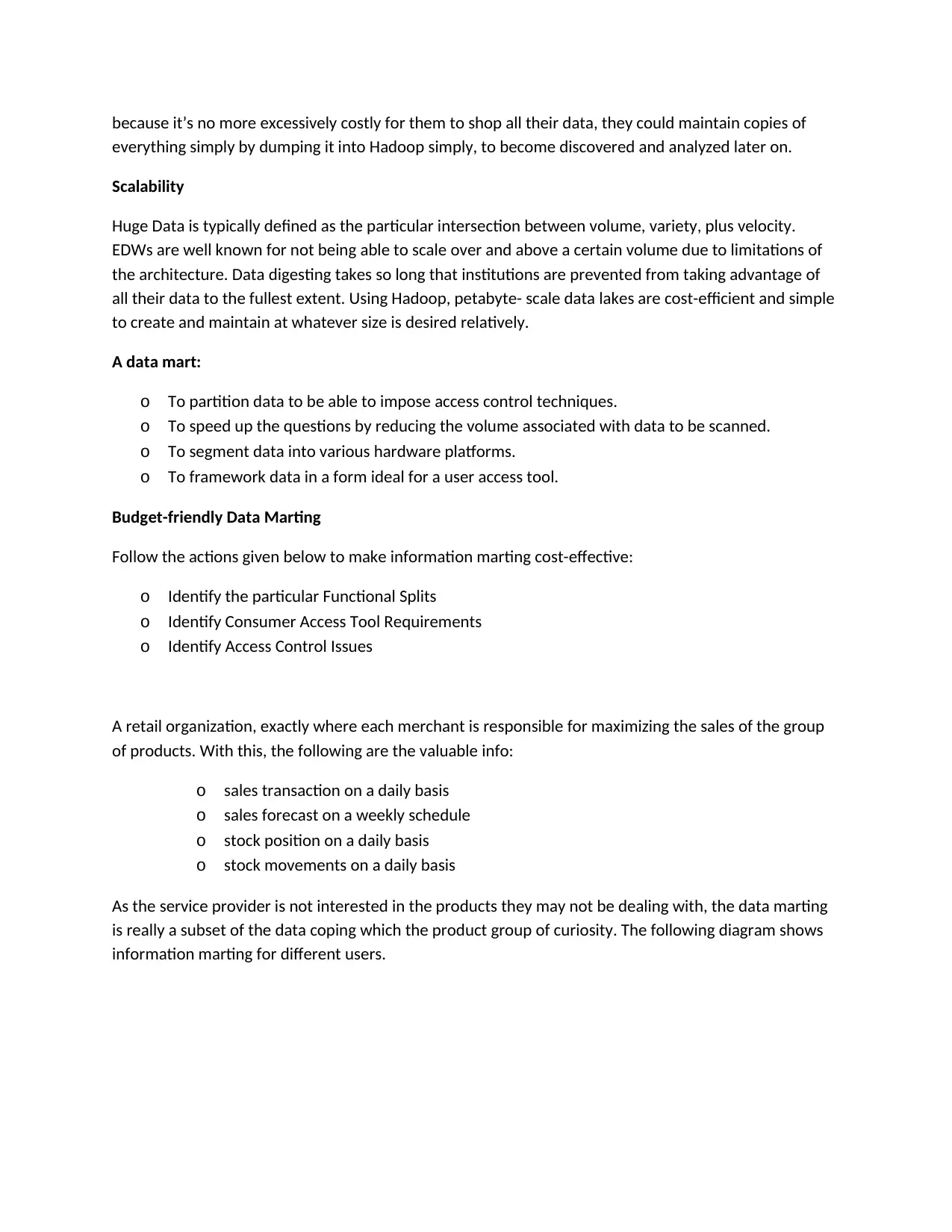

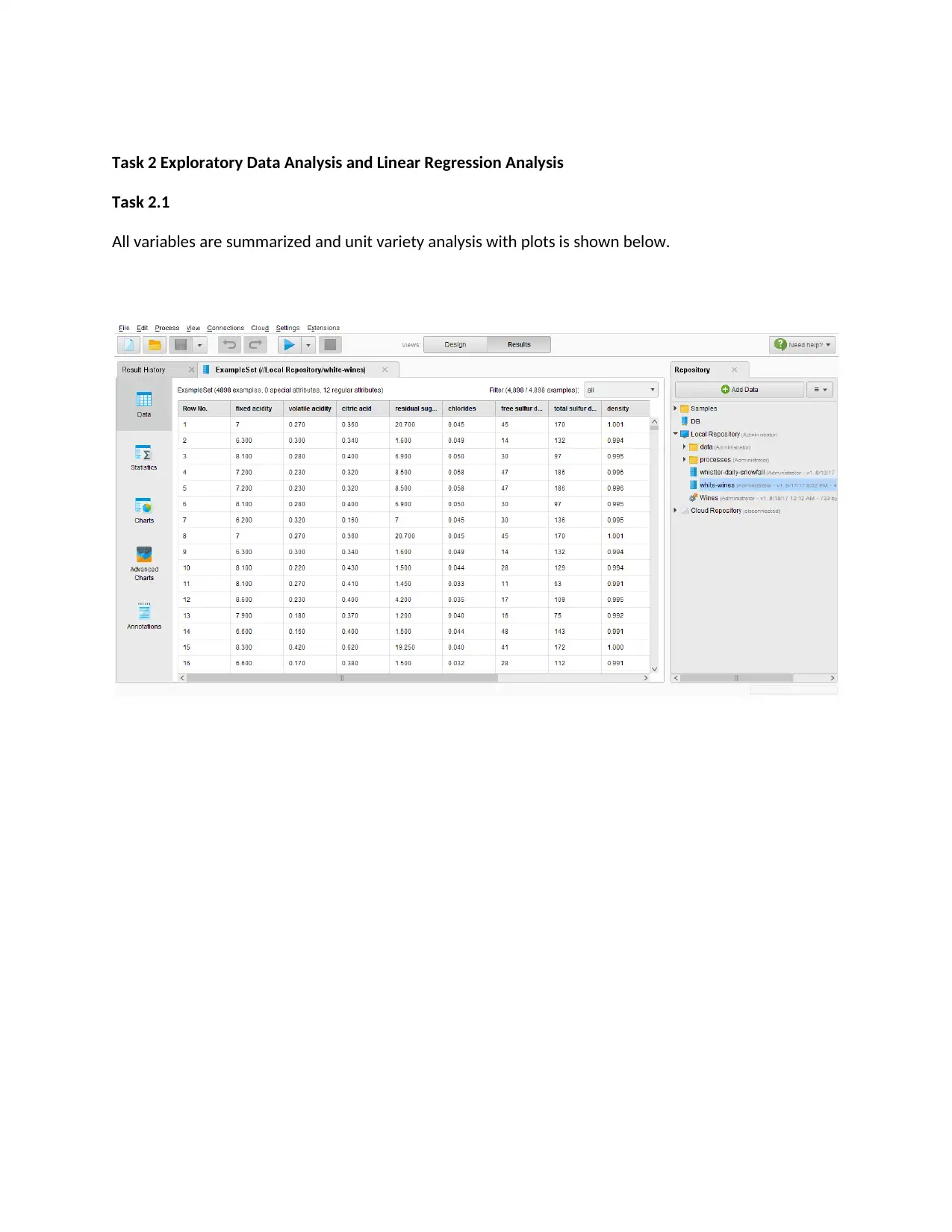

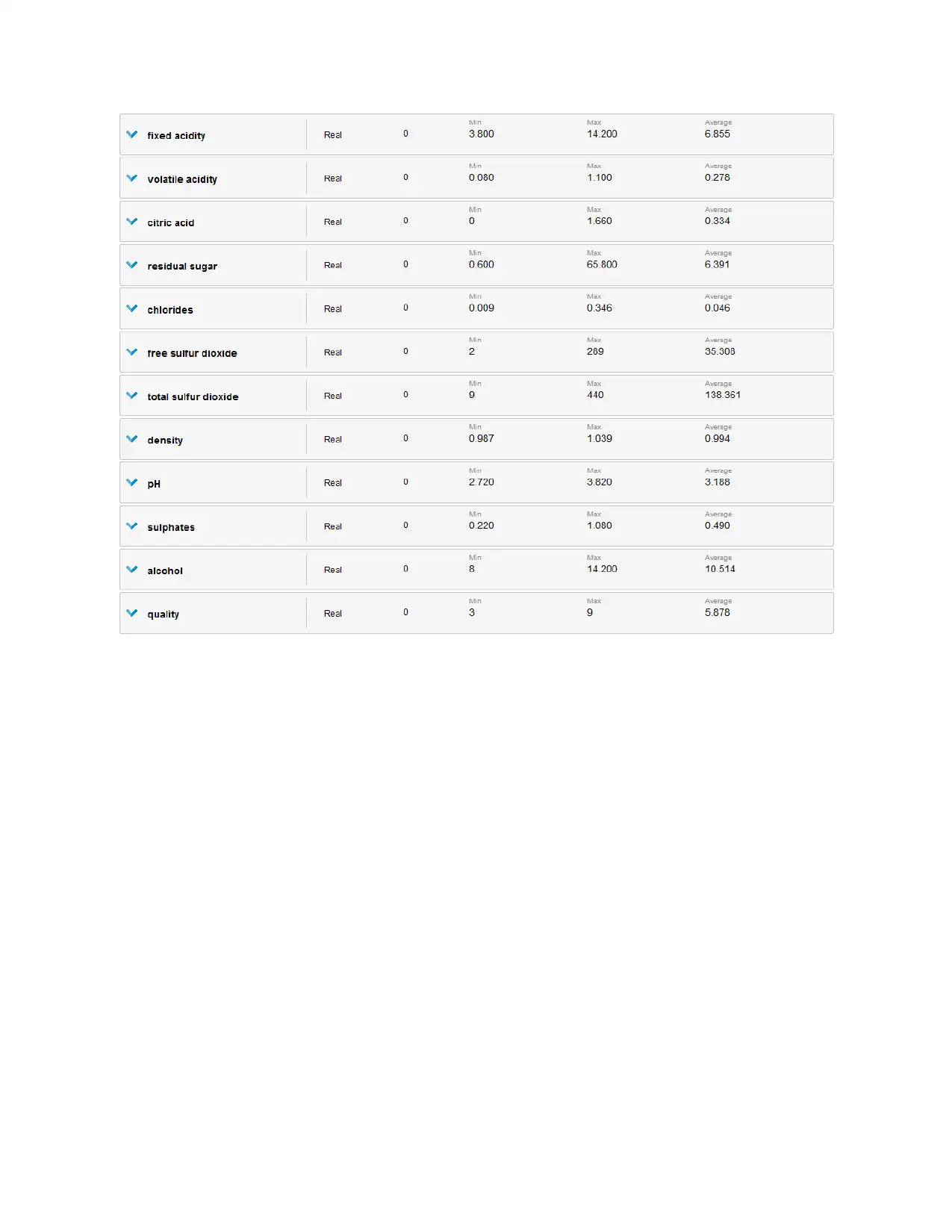

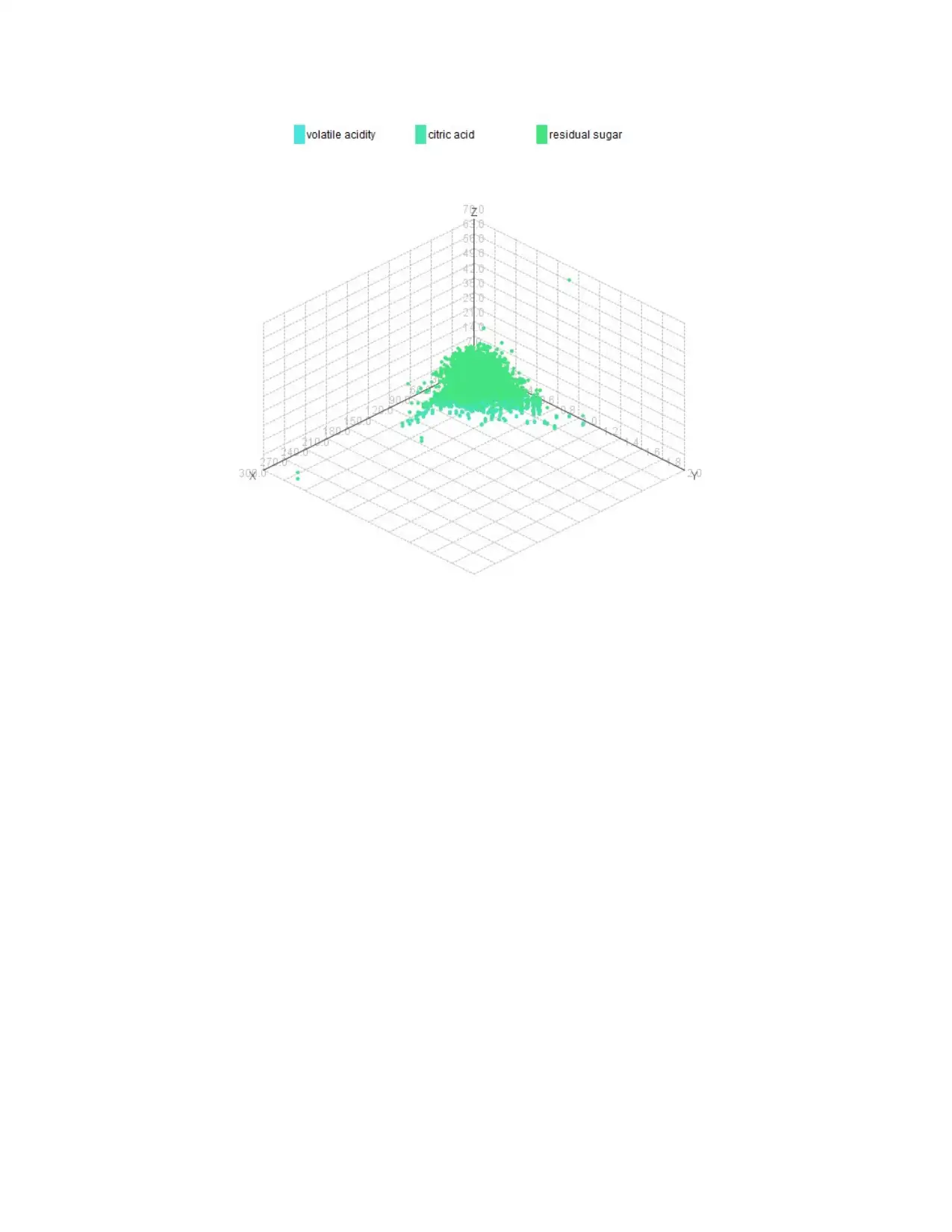

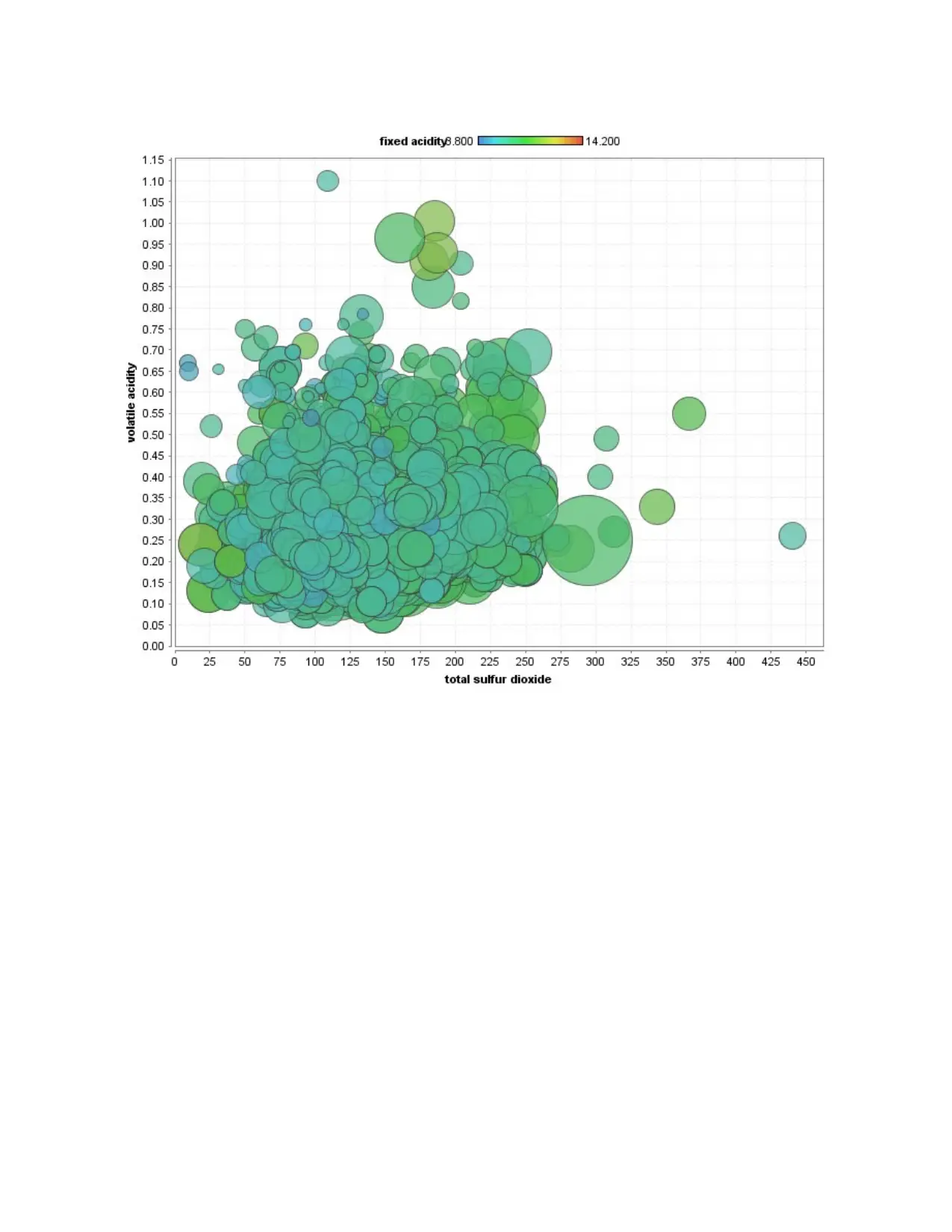

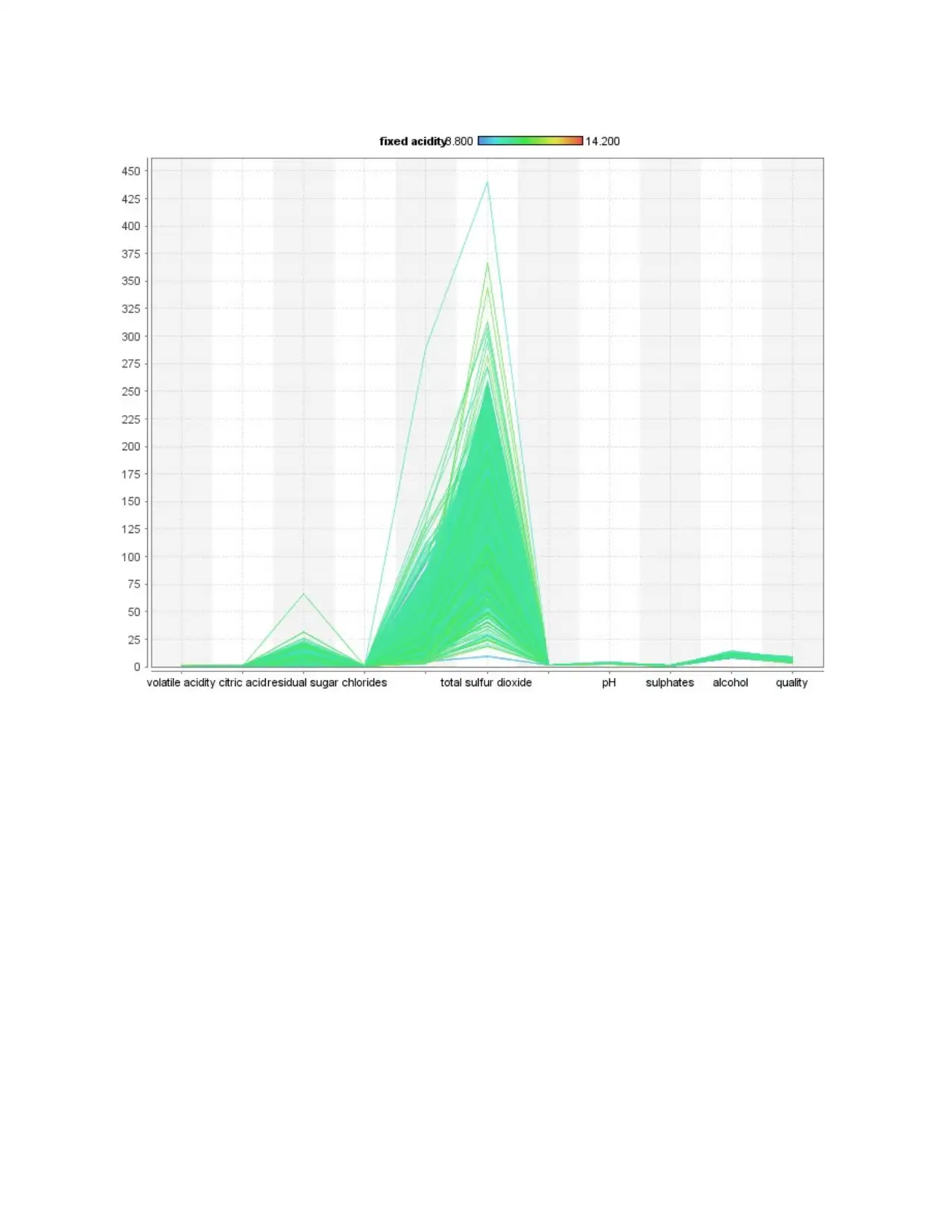

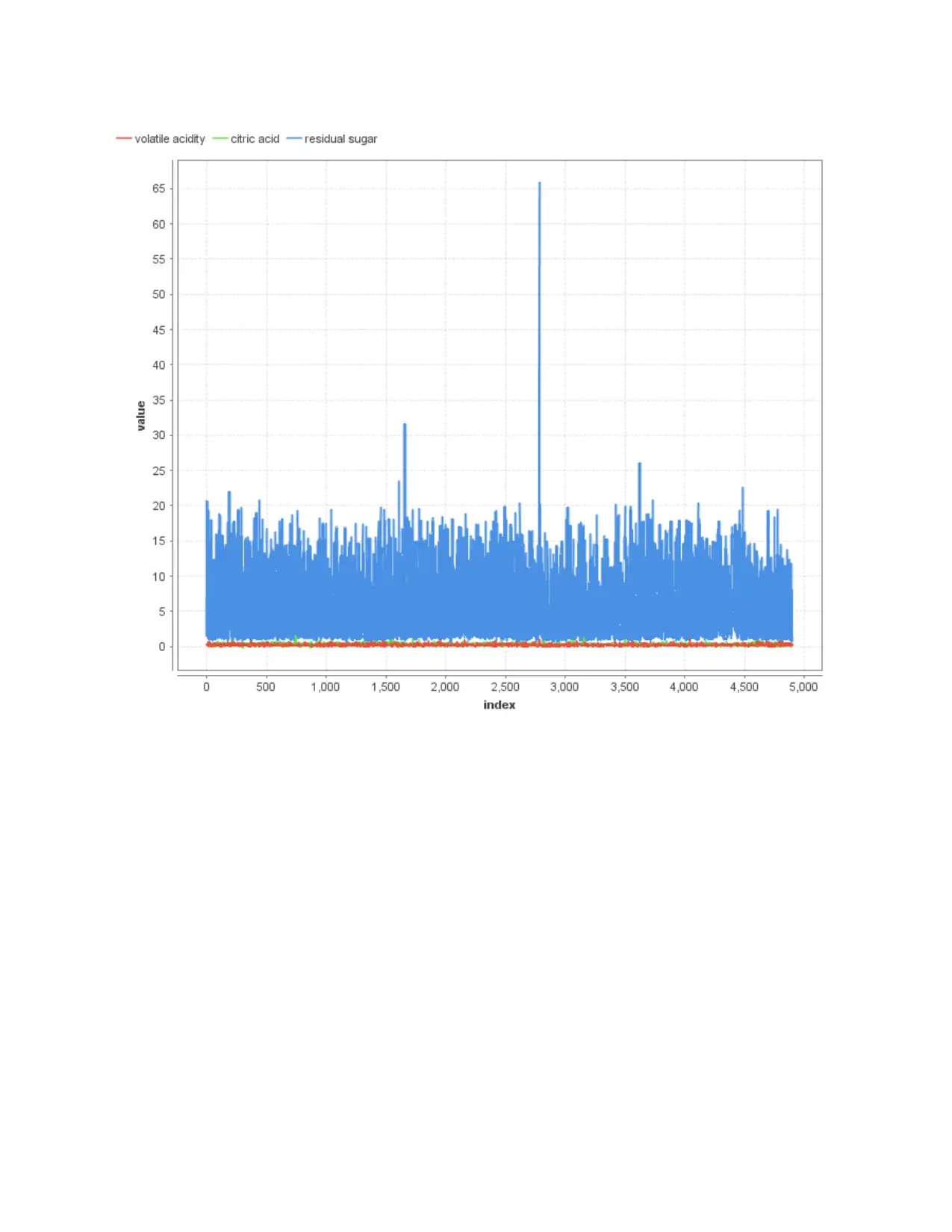
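The retail example in Task 1.2, where each merchant sees only their own product group, can be sketched as a filter over warehouse rows. This is a minimal sketch, assuming hypothetical `DailySale` rows and a hypothetical `build_data_mart` helper; in a real warehouse the same subset would more likely be defined as a view or extract in the warehouse's own query language.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Set


@dataclass(frozen=True)
class DailySale:
    day: date
    product_group: str
    units: int


# Hypothetical enterprise warehouse rows covering every product group.
WAREHOUSE: List[DailySale] = [
    DailySale(date(2020, 7, 1), "toys", 12),
    DailySale(date(2020, 7, 1), "garden", 5),
    DailySale(date(2020, 7, 2), "toys", 8),
]


def build_data_mart(rows: List[DailySale], groups: Set[str]) -> List[DailySale]:
    """Keep only rows for the product groups a merchant is responsible for.

    Users of the mart never see other groups (access control), and their
    queries scan fewer rows than the full warehouse.
    """
    return [row for row in rows if row.product_group in groups]


toys_mart = build_data_mart(WAREHOUSE, {"toys"})
print(sum(row.units for row in toys_mart))  # 20
```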

Task 2 Exploratory Data Analysis and Linear Regression Analysis

Task 2.1

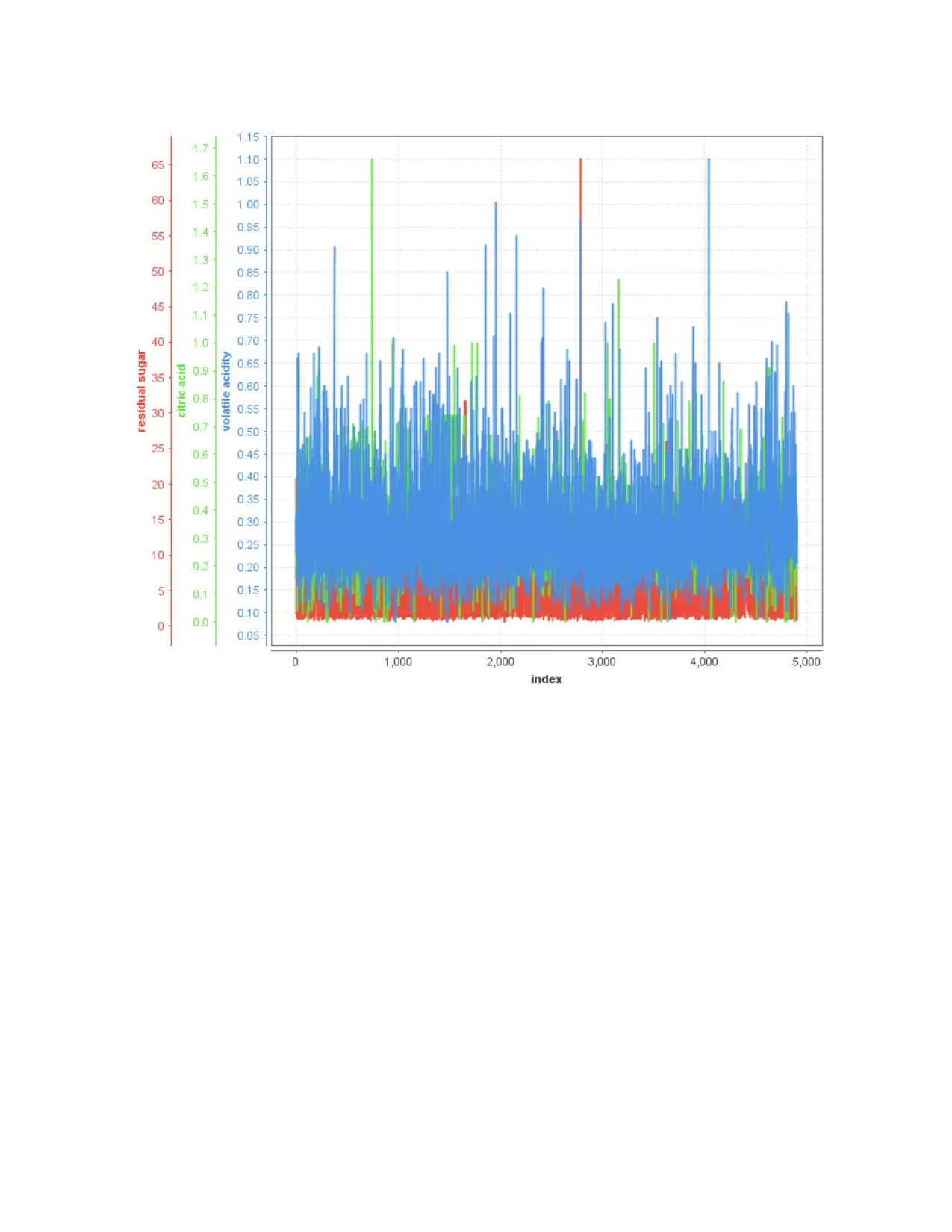

All variables are summarized, and univariate analysis with plots is shown below.


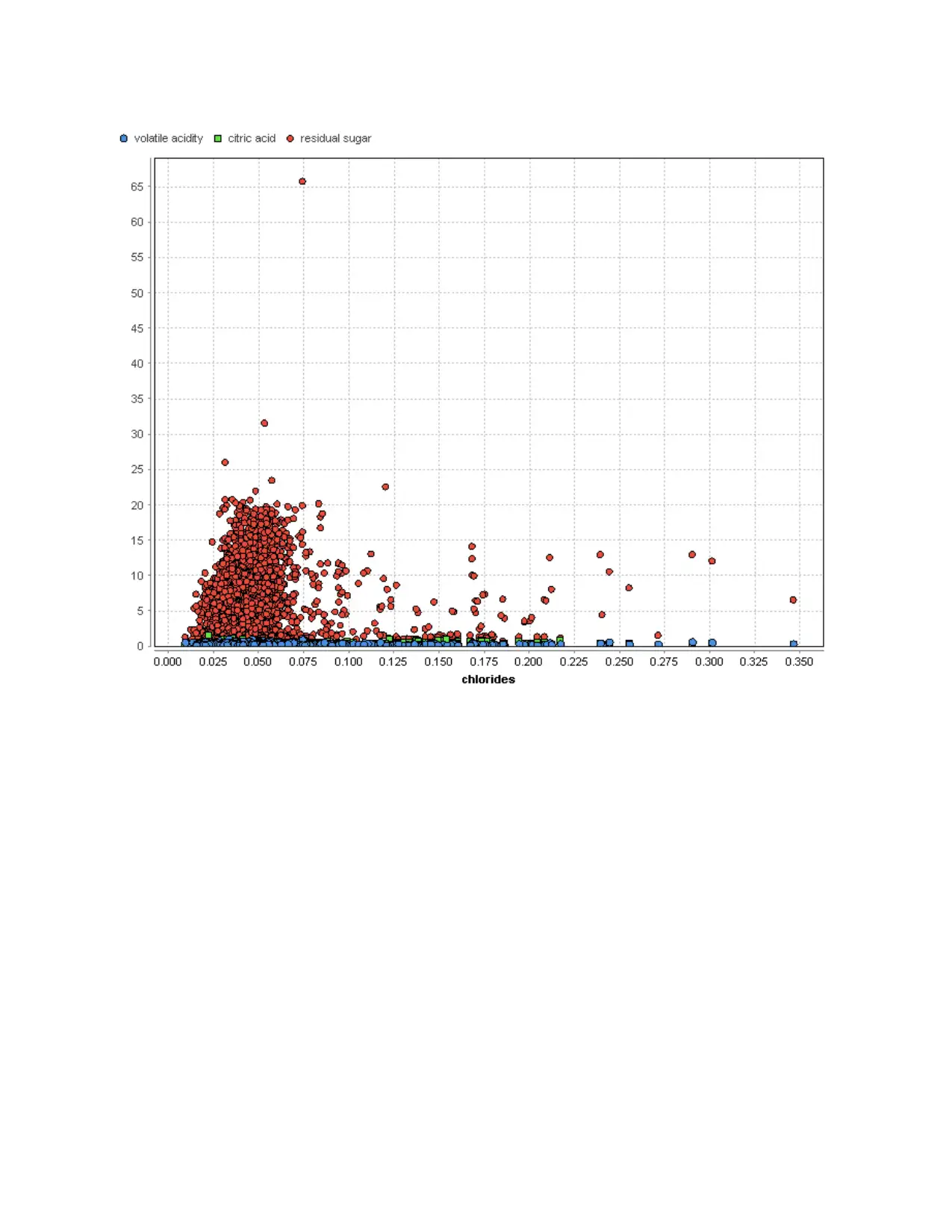

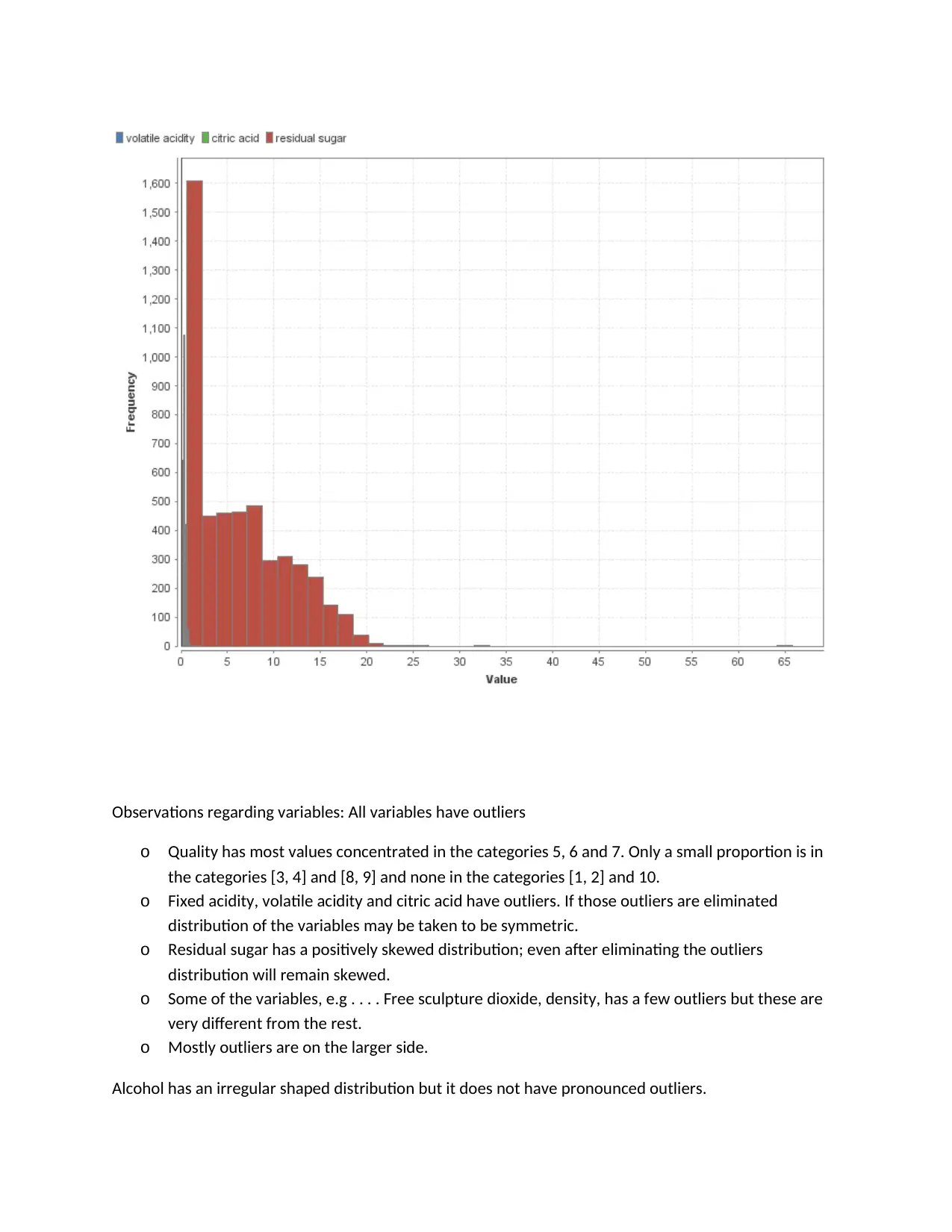

Observations regarding the variables: all variables have outliers.

o Quality has most values concentrated in categories 5, 6 and 7. Only a small proportion is in categories 3, 4, 8 and 9, and none in categories 1, 2 and 10.

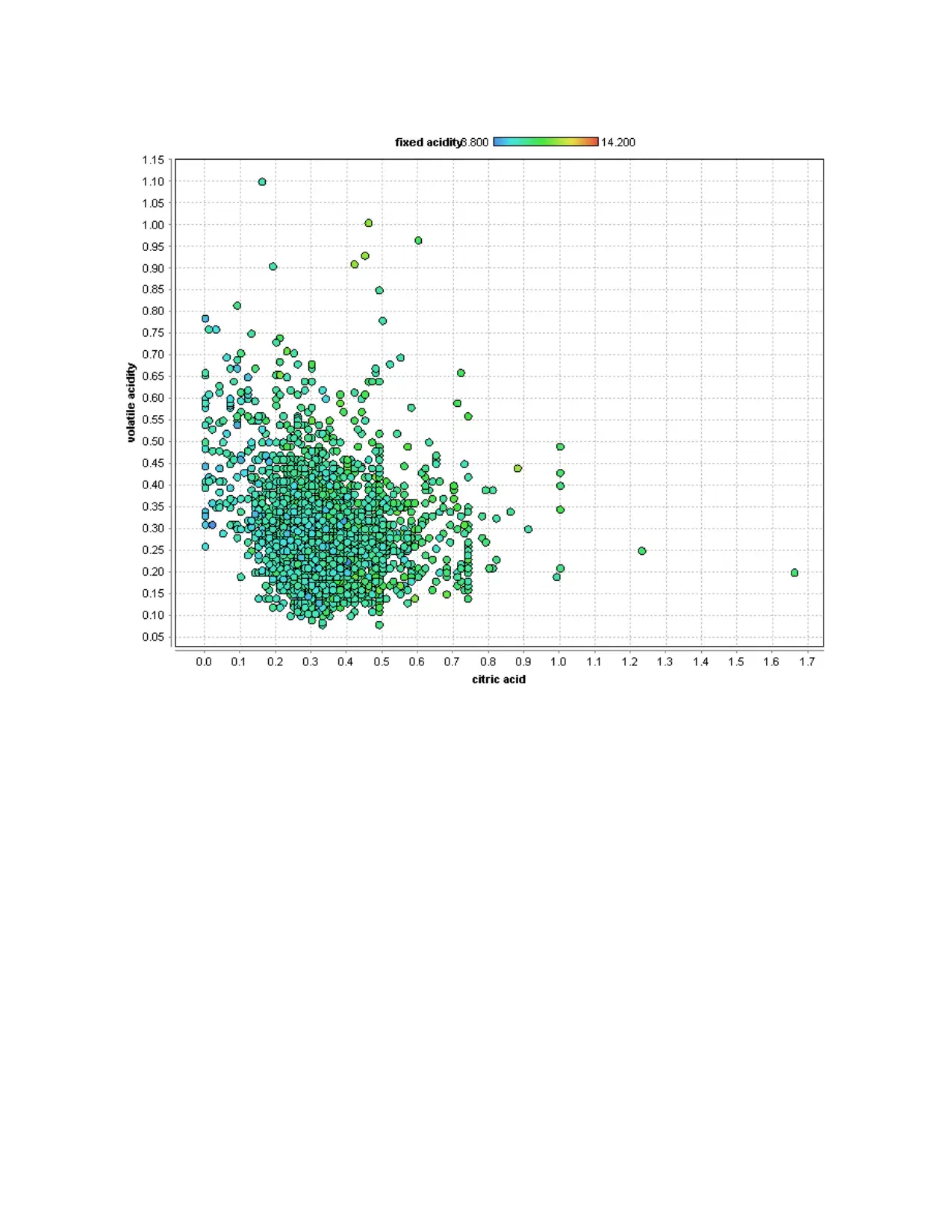

o Fixed acidity, volatile acidity and citric acid have outliers. If those outliers are eliminated, the distributions of these variables may be taken to be symmetric.

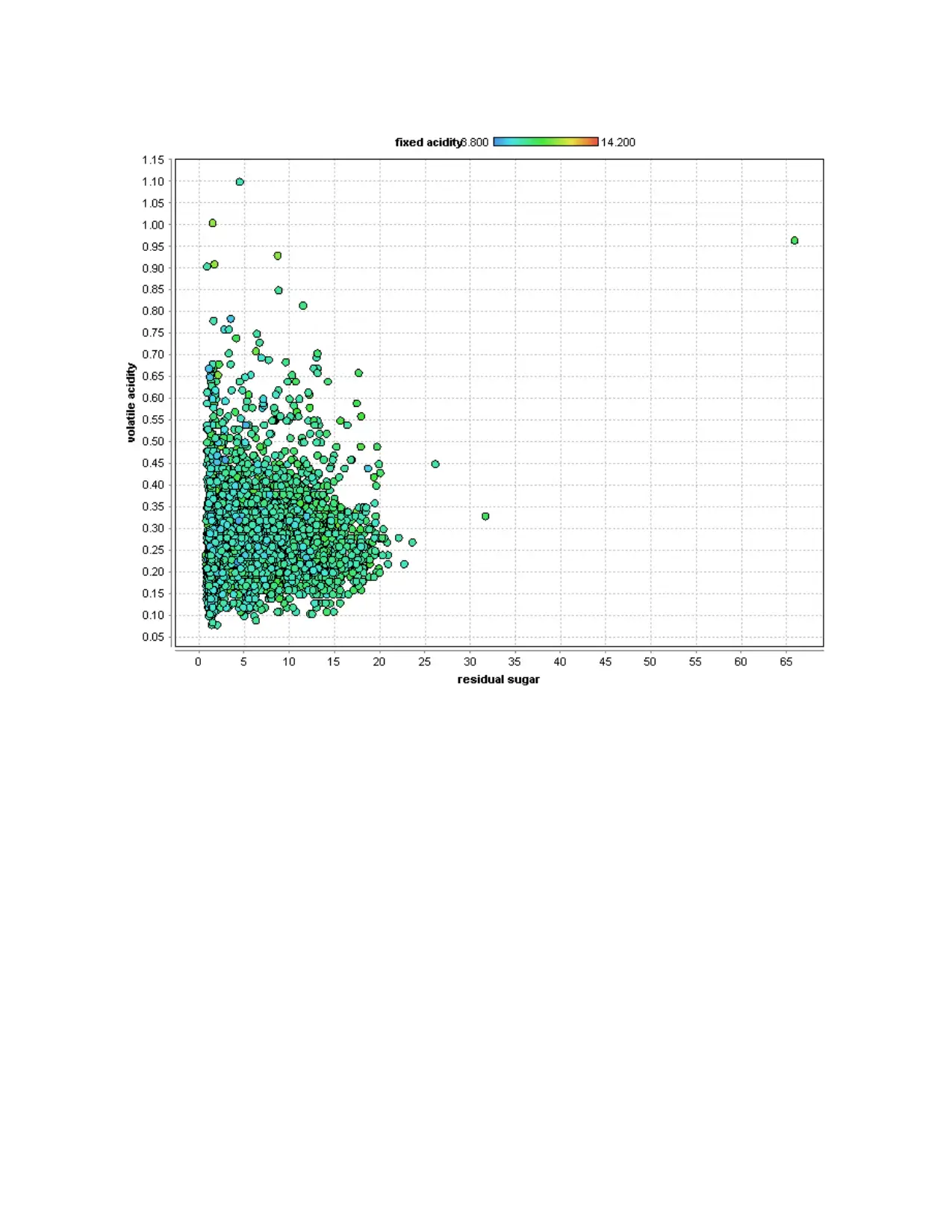

o Residual sugar has a positively skewed distribution; even after eliminating the outliers, the distribution will remain skewed.

o Some of the variables, e.g. free sulfur dioxide and density, have a few outliers, but these are very different from the rest.

o Most outliers are on the high side.

o Alcohol has an irregularly shaped distribution, but it does not have pronounced outliers.


The range is much larger than the IQR, and the mean is usually greater than the median. These observations indicate that there are outliers in the data set, and they must be dealt with before any analysis is performed.
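Both diagnostics are easy to compute. A minimal sketch using the standard library's `statistics` module on a small made-up, positively skewed sample (not the assignment's data); quartiles use `method="inclusive"`, i.e. linear interpolation between order statistics, which is one of several common quartile definitions.

```python
import statistics

# Made-up, positively skewed sample with one large value (illustration only).
sample = [1, 2, 2, 3, 3, 3, 4, 4, 5, 20]


def skew_diagnostics(values):
    """Return the range, IQR, mean and median of a list of numbers."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return {
        "range": max(values) - min(values),
        "iqr": q3 - q1,
        "mean": statistics.mean(values),
        "median": statistics.median(values),
    }


d = skew_diagnostics(sample)
print(d)  # {'range': 19, 'iqr': 1.75, 'mean': 4.7, 'median': 3.0}
# A range far above the IQR, and a mean above the median, both point to a
# long upper tail, i.e. large outliers.
```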

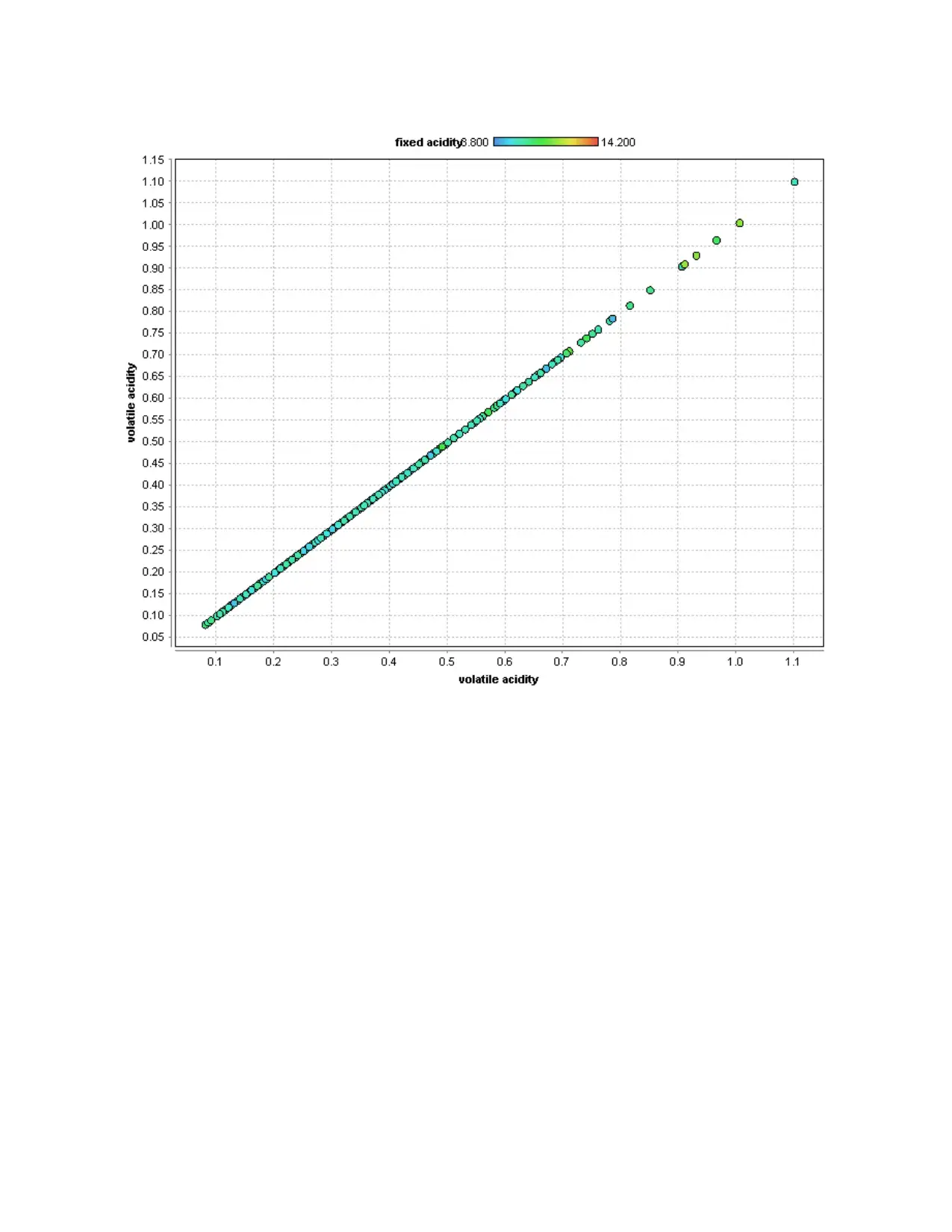

Next we look at the bivariate analysis, including all pairwise scatterplots and correlation coefficients. Since the variables have non-normal distributions, we have considered both Pearson and Spearman rank correlations.

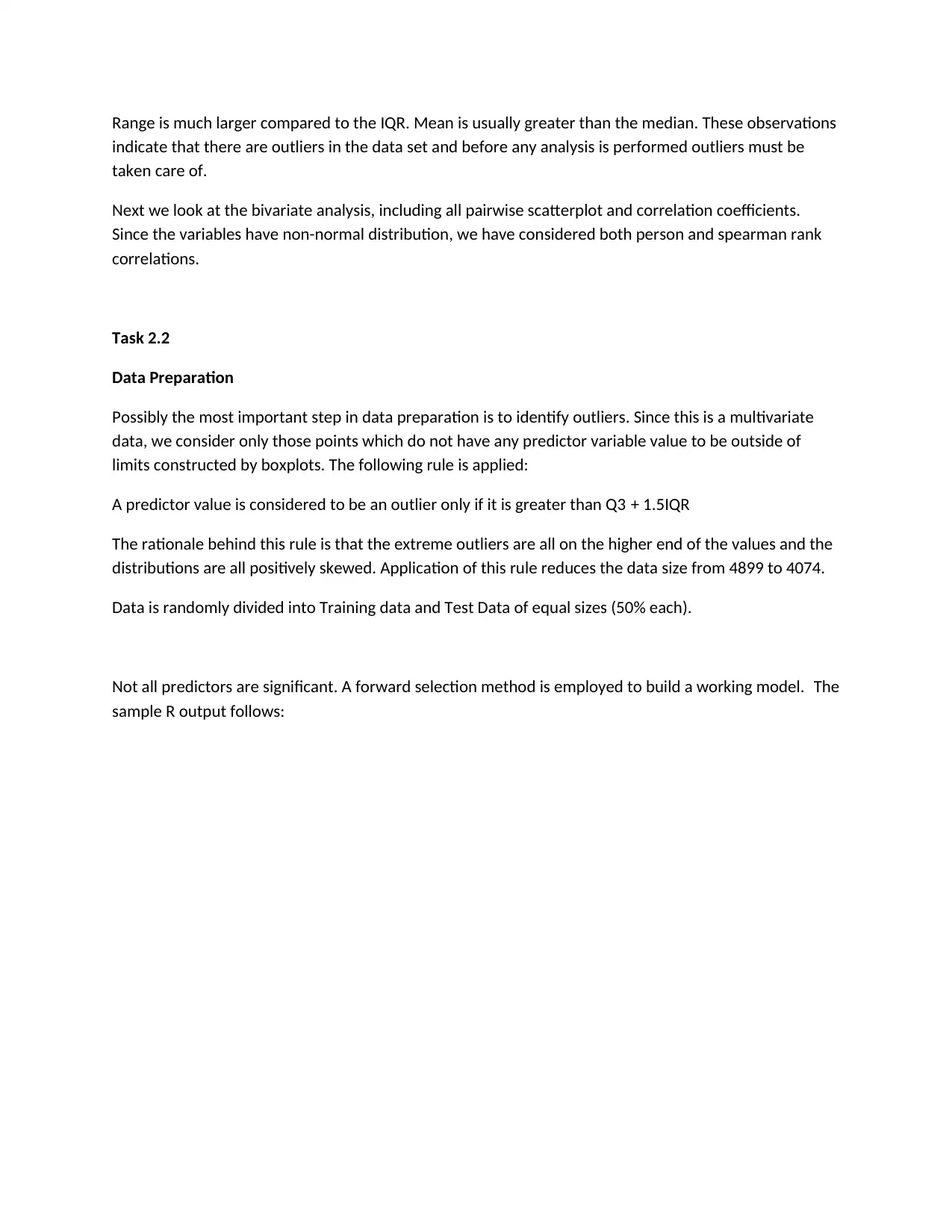

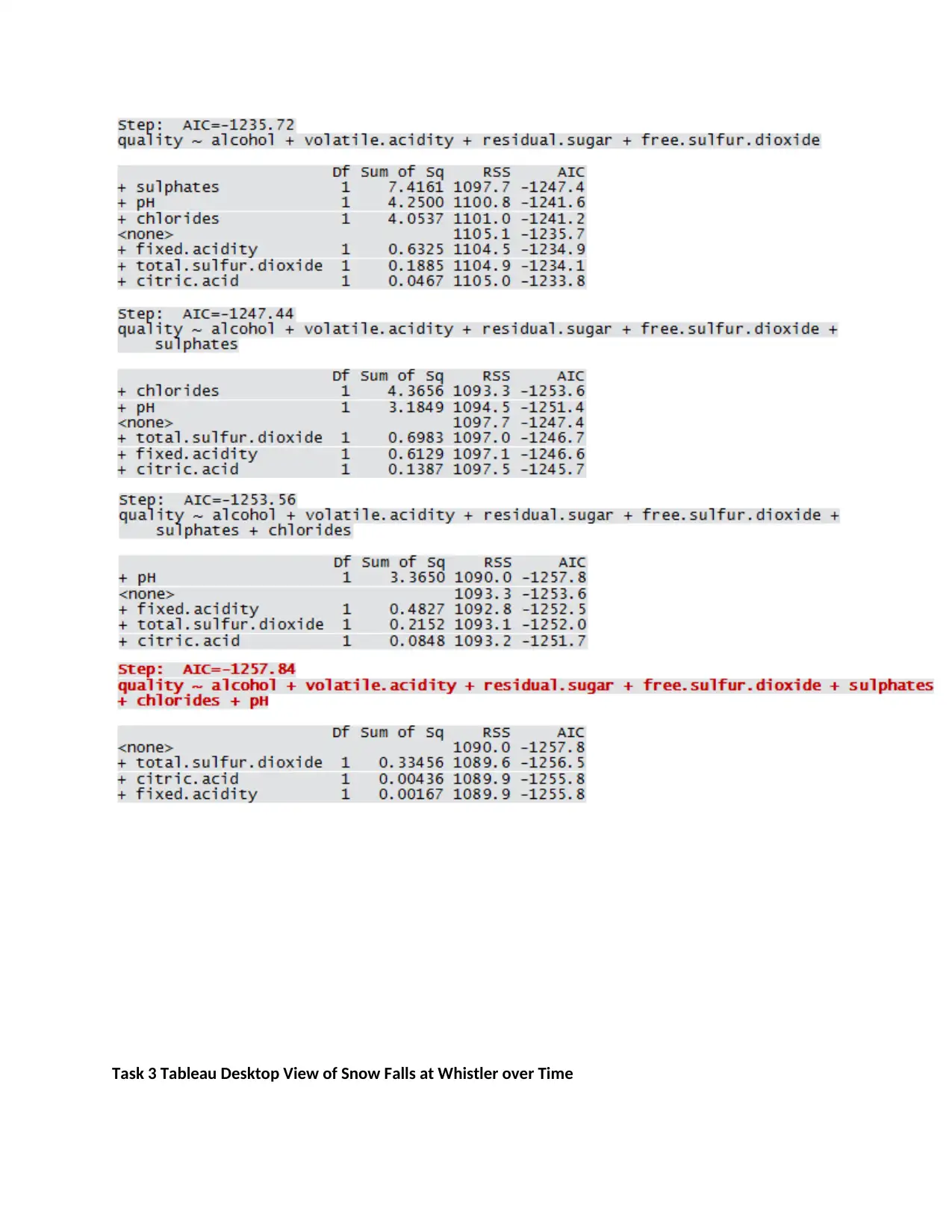
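Pearson correlation measures linear association, while Spearman correlation is the Pearson correlation of the ranks, so it only assumes a monotone relationship and is less sensitive to outliers. A minimal standard-library sketch, with tied values given average ranks; the toy data are made up for illustration.

```python
import math


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def ranks(values):
    """1-based ranks; tied values receive the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of positions i..j, made 1-based
        for k in range(i, j + 1):
            result[order[k]] = avg
        i = j + 1
    return result


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


x = [1, 2, 3, 4, 5]
y = [1, 4, 9, 16, 100]  # monotone, but far from linear (one large value)
print(round(pearson(x, y), 3), spearman(x, y))  # Spearman is exactly 1.0 here
```

The gap between the two coefficients on such data is what makes reporting both worthwhile for skewed variables.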

Task 2.2

Data Preparation

Possibly the most important step in data preparation is to identify outliers. Since the data are multivariate, we keep only those points for which no predictor variable value lies outside the limits constructed by the boxplots. The following rule is applied:

A predictor value is considered an outlier only if it is greater than Q3 + 1.5 × IQR.

The rationale behind this rule is that the extreme outliers all lie at the higher end of the values and the distributions are all positively skewed. Applying this rule reduces the data size from 4899 to 4074.
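The filtering rule can be sketched as follows. This is a minimal sketch on a made-up table with two hypothetical predictor columns: it computes each predictor's upper fence Q3 + 1.5 × IQR and keeps only rows in which no predictor exceeds its fence. The quartile definition (`method="inclusive"`) is an assumption, since the document does not state which one its boxplots used, and boxplot hinges can differ slightly from it.

```python
import statistics

# Hypothetical rows: two predictors plus the response "quality".
rows = [
    {"sugar": 1.0, "density": 0.990, "quality": 6},
    {"sugar": 2.0, "density": 0.991, "quality": 5},
    {"sugar": 2.5, "density": 0.992, "quality": 6},
    {"sugar": 3.0, "density": 0.993, "quality": 7},
    {"sugar": 3.5, "density": 0.994, "quality": 6},
    {"sugar": 30.0, "density": 0.995, "quality": 5},  # extreme sugar value
]
PREDICTORS = ["sugar", "density"]


def upper_fence(values):
    """Q3 + 1.5 * IQR, the upper whisker limit of a standard boxplot."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return q3 + 1.5 * (q3 - q1)


def drop_high_outliers(data, predictors):
    """Keep rows in which no predictor value exceeds its upper fence."""
    fences = {p: upper_fence([row[p] for row in data]) for p in predictors}
    return [row for row in data if all(row[p] <= fences[p] for p in predictors)]


kept = drop_high_outliers(rows, PREDICTORS)
print(len(rows), "->", len(kept))  # 6 -> 5
```

Only the upper fence is checked, matching the one-sided rule above; the response column is not filtered.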

The data are randomly divided into training and test sets of equal size (50% each).
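A reproducible 50/50 random split can be sketched as below. The function name and seed value are hypothetical choices for illustration; 4074 is the cleaned row count reported above.

```python
import random


def train_test_split(rows, train_fraction=0.5, seed=2020):
    """Shuffle a copy of `rows` and cut it into training and test parts.

    A fixed seed makes the split reproducible; its value here is arbitrary.
    """
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


train, test = train_test_split(range(4074))
print(len(train), len(test))  # 2037 2037
```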

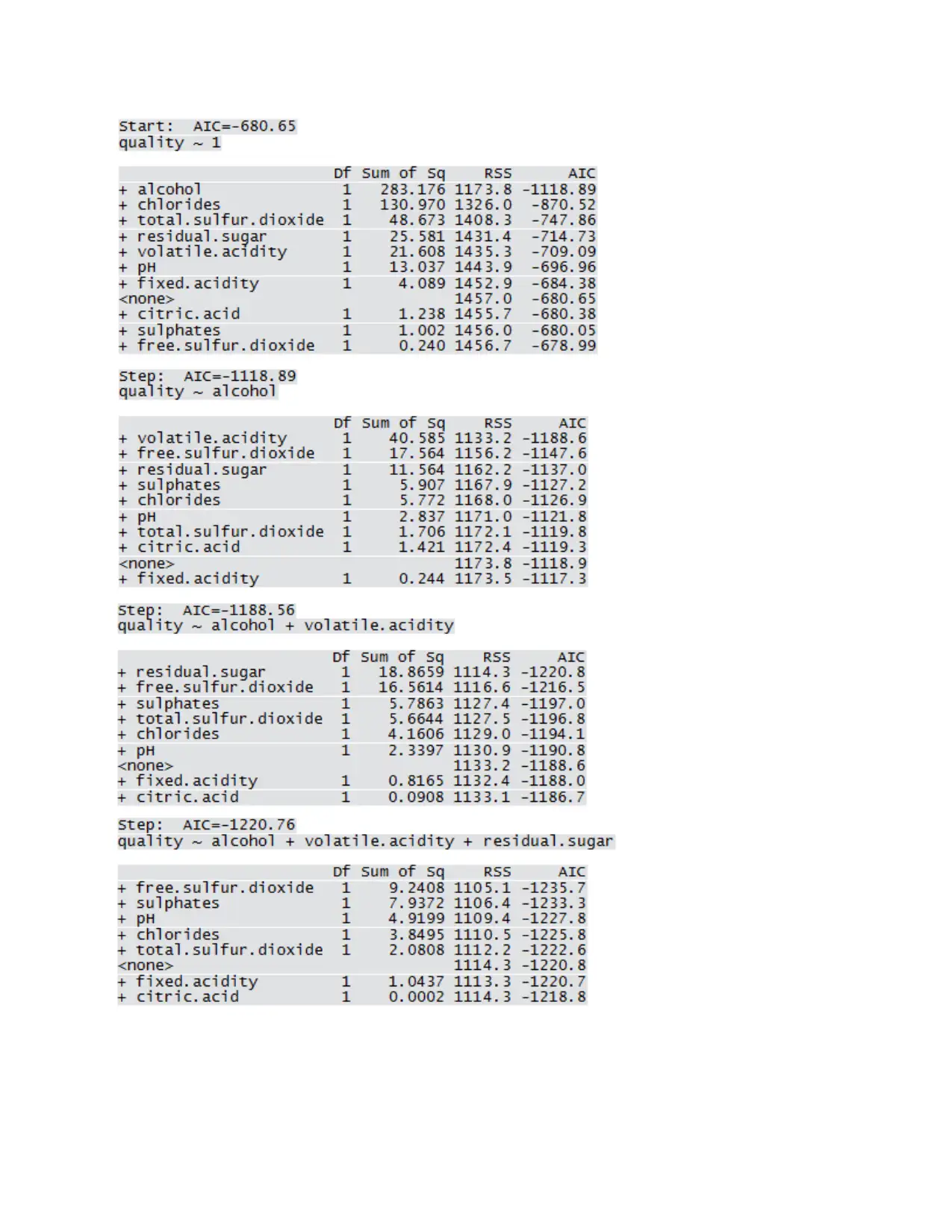

Not all predictors are significant, so a forward selection method is employed to build a working model. Sample R output follows:


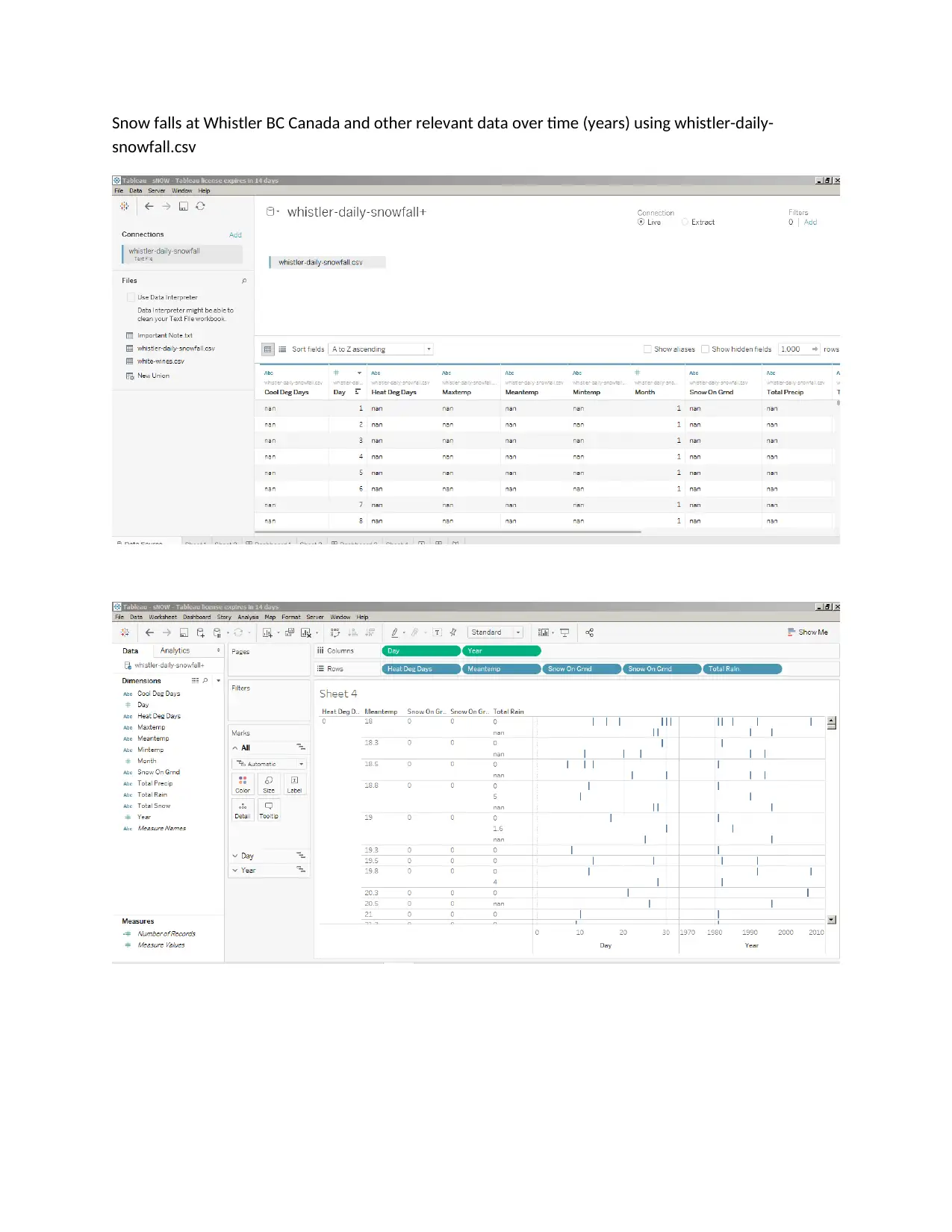

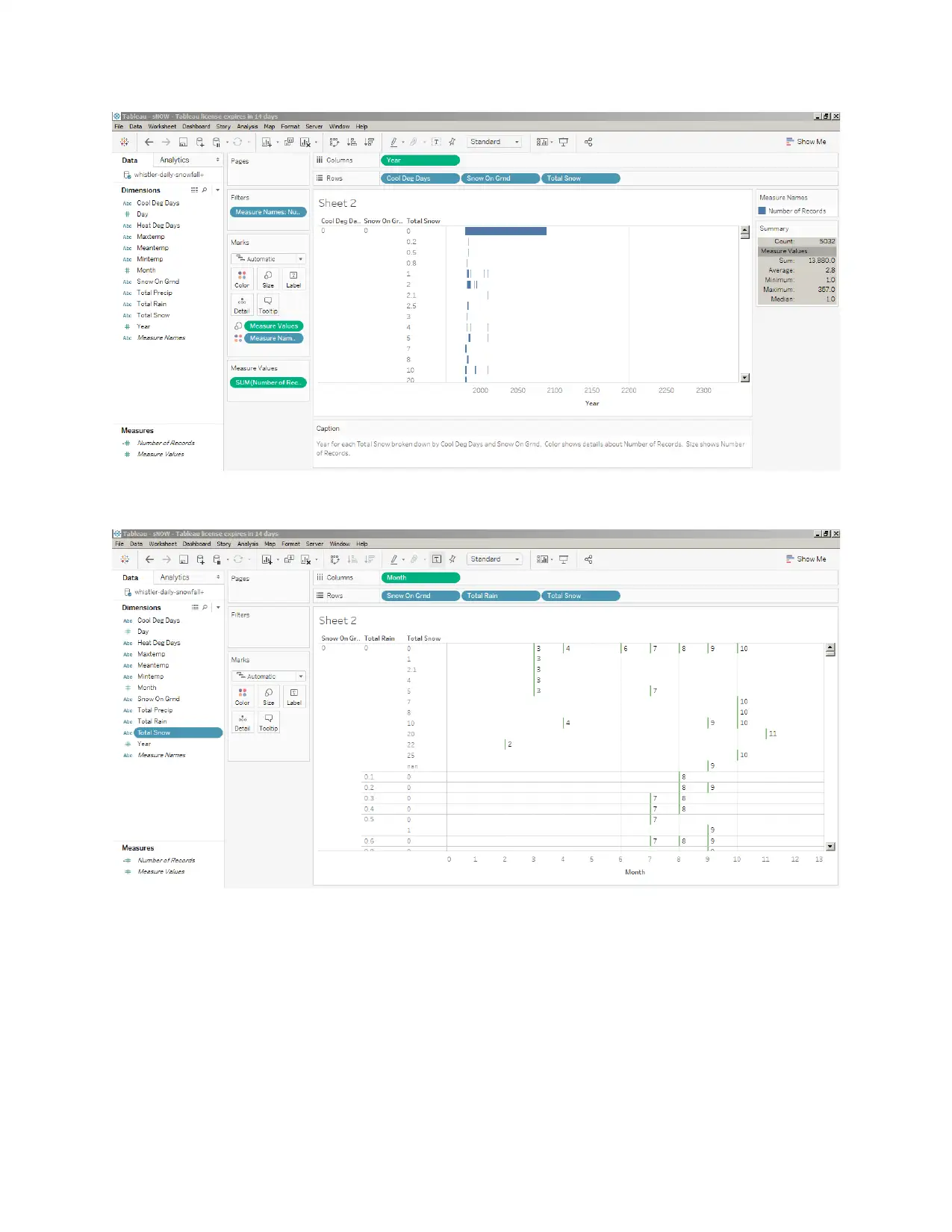

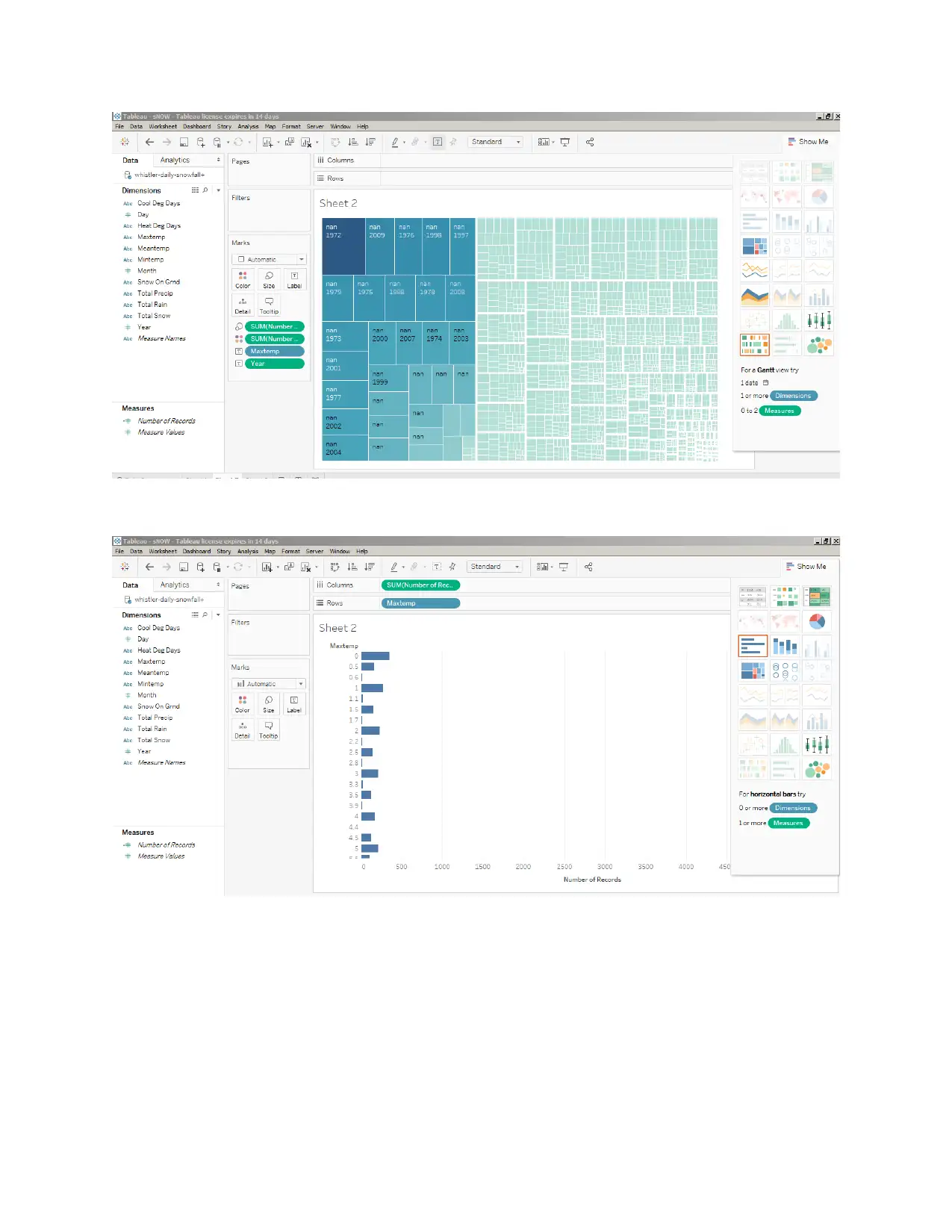

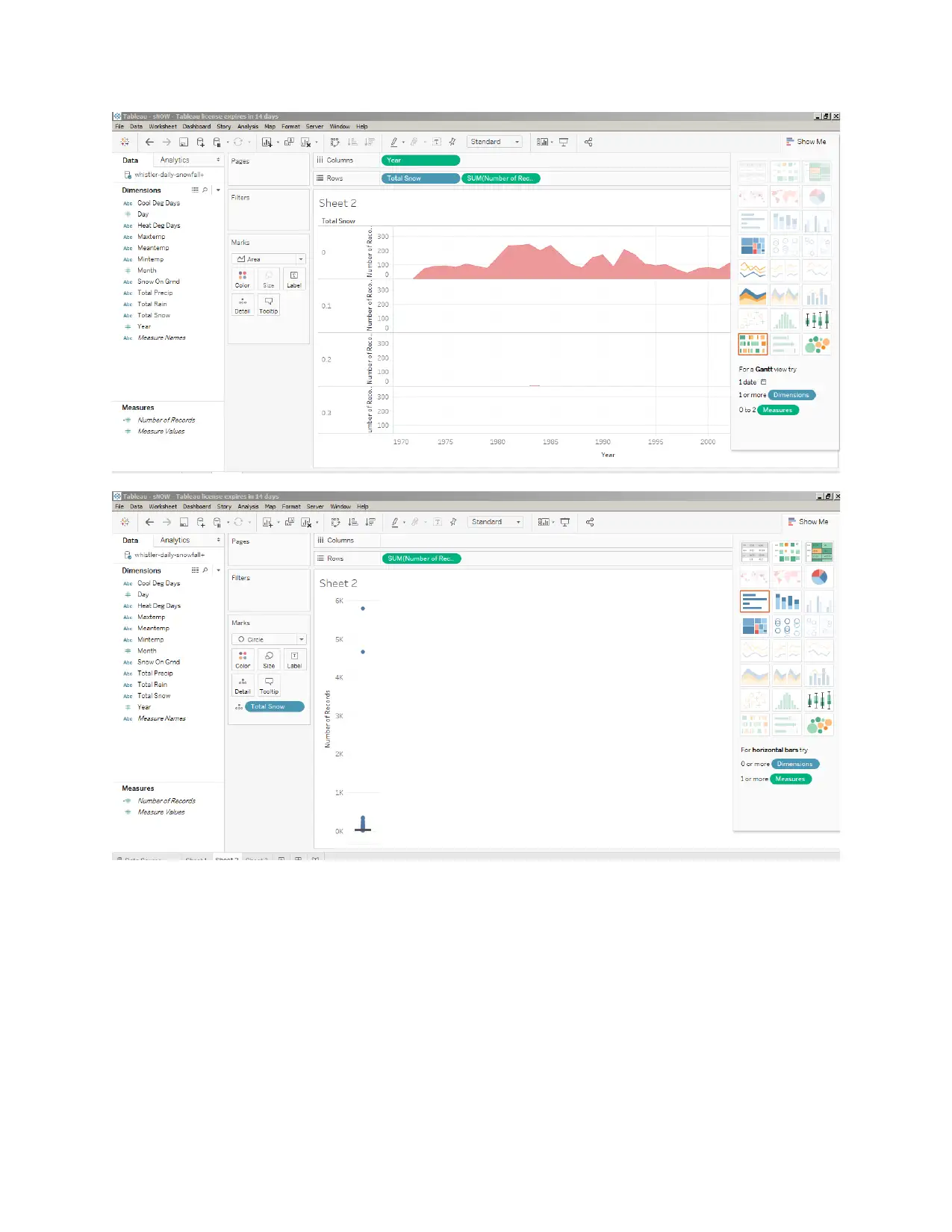

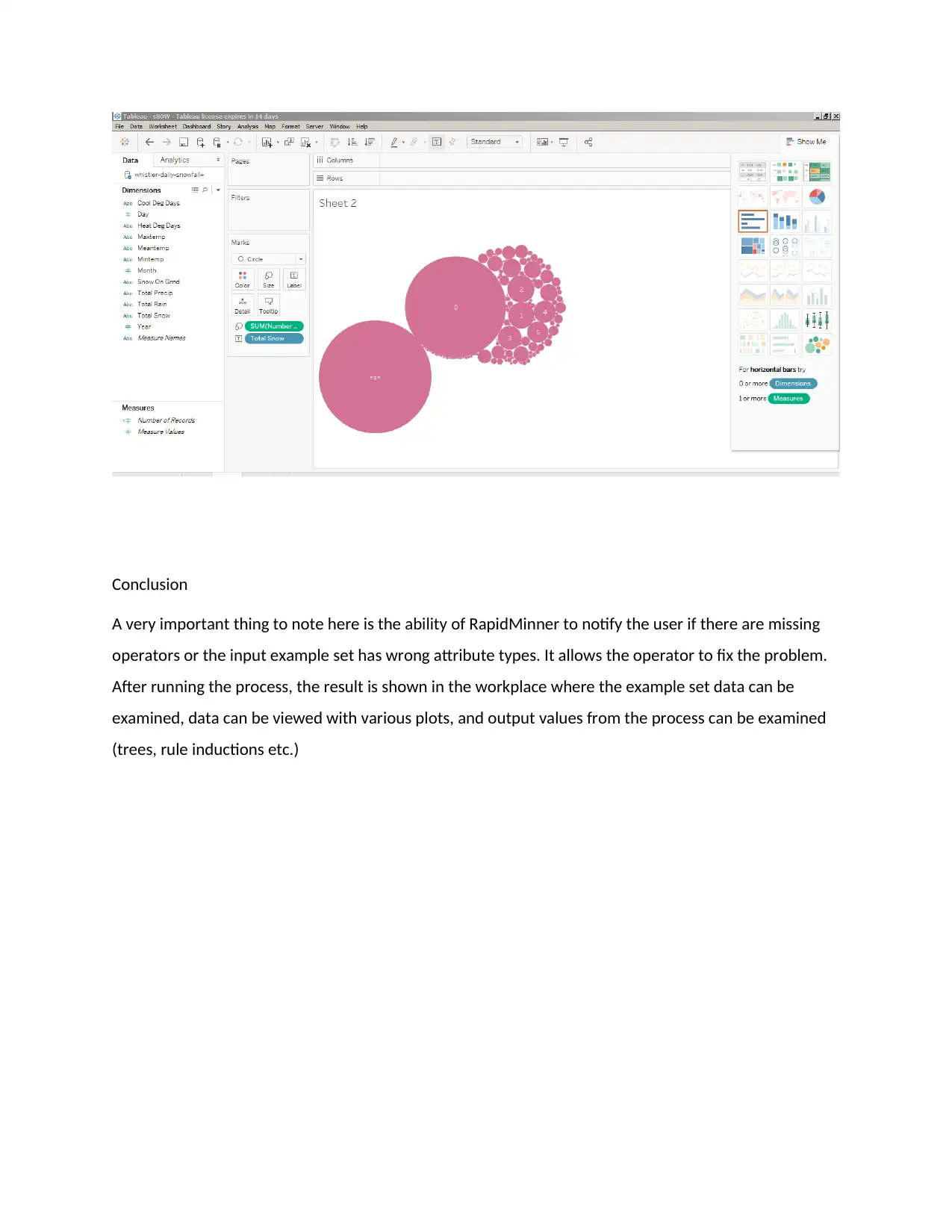
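The model above was built in R; as a language-neutral illustration, forward selection can be sketched in plain Python. This is a minimal sketch under stated assumptions: ordinary least squares is fitted via the normal equations, and the selection criterion is AIC in the form n·ln(RSS/n) + 2k (the form R's `step()` reports for `lm` fits), although the document does not state which criterion was used. The data are made up, not the wine data.

```python
import math


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def rss(columns, y):
    """Residual sum of squares of an OLS fit of y on an intercept plus columns."""
    design = [[1.0] + [col[i] for col in columns] for i in range(len(y))]
    p = len(design[0])
    xtx = [[sum(row[i] * row[j] for row in design) for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * yi for row, yi in zip(design, y)) for i in range(p)]
    beta = solve(xtx, xty)
    return sum((yi - sum(b * v for b, v in zip(beta, row))) ** 2
               for row, yi in zip(design, y))


def aic(columns, y):
    """AIC as n*log(RSS/n) + 2k, where k counts the intercept too."""
    n = len(y)
    return n * math.log(rss(columns, y) / n) + 2 * (len(columns) + 1)


def forward_select(predictors, y):
    """Greedily add the predictor that lowers AIC most; stop when none does."""
    chosen, best = [], aic([], y)
    while True:
        scores = {name: aic([predictors[c] for c in chosen + [name]], y)
                  for name in predictors if name not in chosen}
        if not scores:
            return chosen
        name = min(scores, key=scores.get)
        if scores[name] >= best:
            return chosen
        chosen.append(name)
        best = scores[name]


x1 = [1, 2, 3, 4, 5, 6, 7, 8]
x2 = [3, 1, 4, 1, 5, 9, 2, 6]  # unrelated to y by construction
noise = [0.1, -0.2, 0.15, -0.05, 0.2, -0.1, -0.15, 0.05]
y = [2 * a + e for a, e in zip(x1, noise)]
print(forward_select({"x1": x1, "x2": x2}, y))  # ['x1']
```

Starting from the intercept-only model, `x1` is added because it lowers AIC sharply; adding `x2` would raise AIC, so selection stops there.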

Task 3 Tableau Desktop View of Snowfall at Whistler over Time

Snowfall at Whistler, BC, Canada, and other relevant data over time (years), using whistler-daily-snowfall.csv
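The yearly picture shown in the Tableau view can be cross-checked by aggregating the daily file directly. This is a minimal sketch, assuming hypothetical column names `Date` (ISO format) and `Snowfall_cm`; the real headers in whistler-daily-snowfall.csv may differ, and a few inline rows stand in for the file. Grouping is by calendar year, not by ski season.

```python
import csv
import io
from collections import defaultdict

# Stand-in for whistler-daily-snowfall.csv; column names are hypothetical.
SAMPLE = """Date,Snowfall_cm
2015-12-01,10.0
2015-12-02,
2016-01-15,25.5
2016-02-01,4.5
"""


def yearly_totals(text):
    """Sum daily snowfall by calendar year, skipping blank readings."""
    totals = defaultdict(float)
    for row in csv.DictReader(io.StringIO(text)):
        value = row["Snowfall_cm"].strip()
        if value:
            totals[int(row["Date"][:4])] += float(value)
    return dict(sorted(totals.items()))


print(yearly_totals(SAMPLE))  # {2015: 10.0, 2016: 30.0}
```

To read the real file, pass its contents (e.g. from `open(...).read()`) after adjusting the column names.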


Conclusion

An important point to note here is RapidMiner's ability to notify the user if there are missing operators or if the input example set has wrong attribute types, which allows the user to fix the problem. After the process is run, the result is shown in the workspace, where the example set data can be examined and viewed with various plots, and the output values from the process (trees, rule inductions, etc.) can be inspected.


