Data Mining and Visualization for Business Intelligence Report

Added on 2023/06/08




Table of Contents

1. Part – B Practical Part

1.1 Classifier Algorithms

1.2 Practical Work on Decision Tree Algorithm

1.3 Practical Work on K-Nearest Neighbour Algorithm

1.4 Practical Work on Naïve Bayes Algorithm

1.5 Discussion and Consequences

References


1. Part – B Practical Part

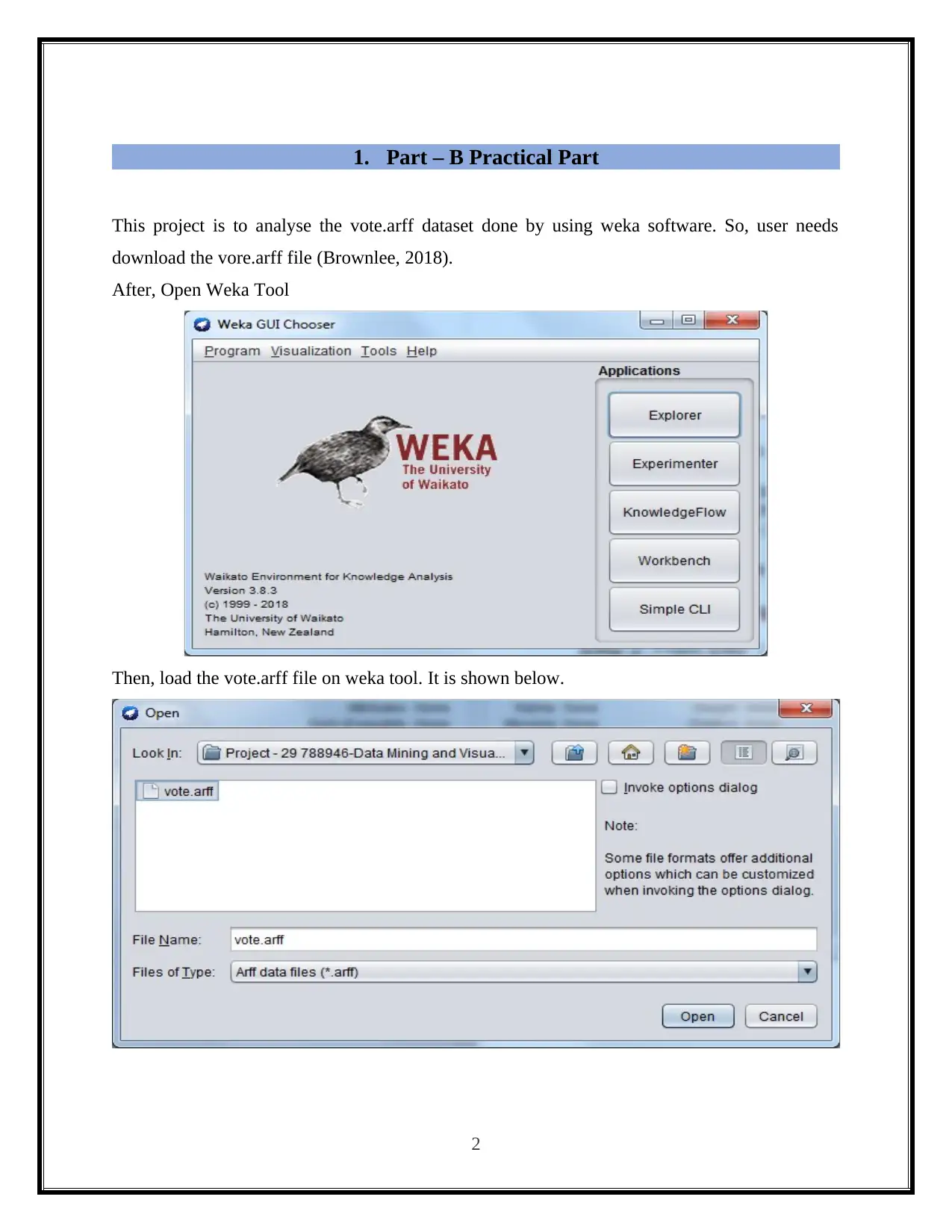

This project analyses the vote.arff dataset using the Weka software. First, download the vote.arff file (Brownlee, 2018).

Next, open the Weka tool.

Then load the vote.arff file into Weka, as shown below.


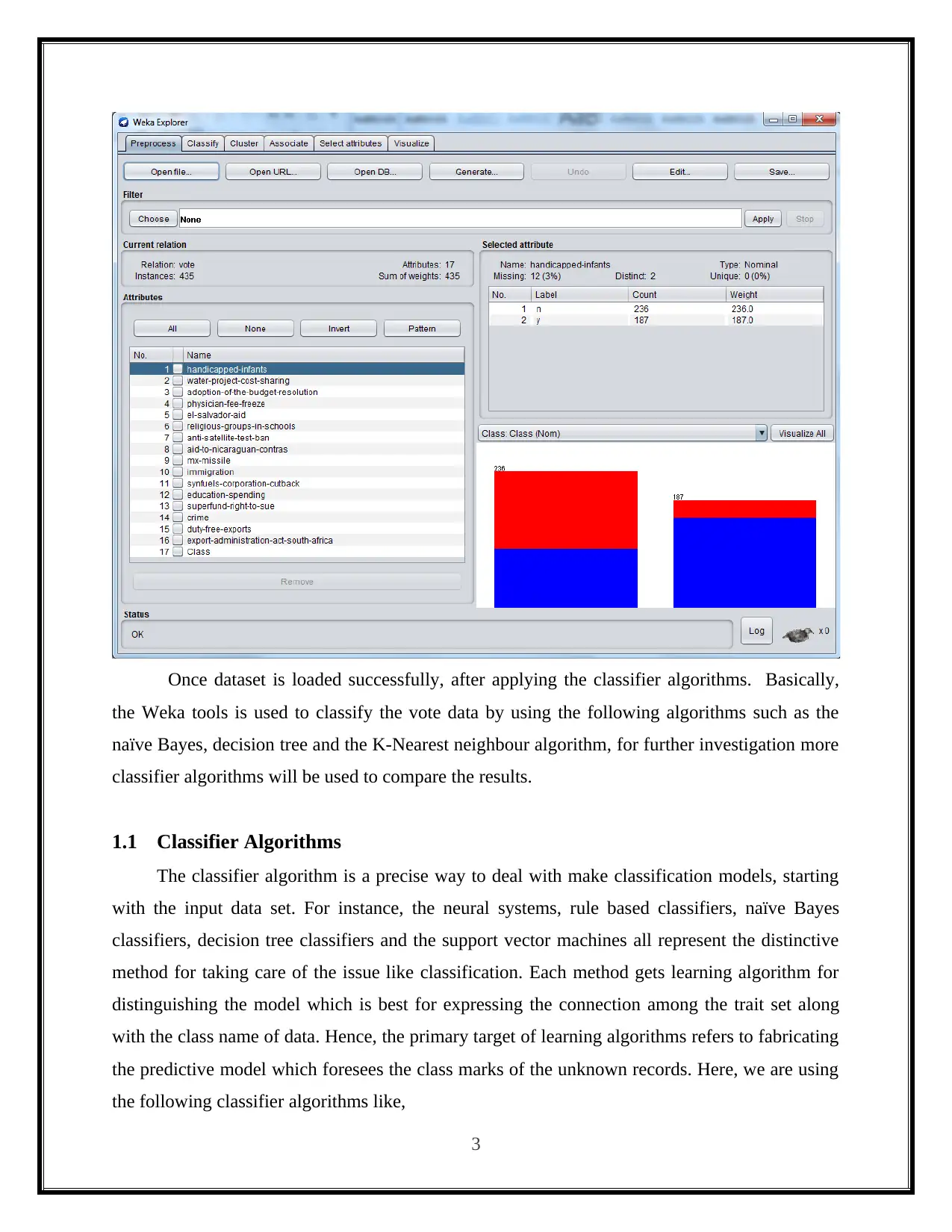
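Weka parses the ARFF format internally when the file is opened. To illustrate what that step involves, here is a minimal standard-library Python sketch for nominal-only ARFF files such as vote.arff, whose attributes take yes/no values and where `?` marks a missing value. The function name `load_nominal_arff` and the small `SAMPLE` text are hypothetical and illustrative; they are not Weka's API or the real contents of vote.arff, and the sketch ignores numeric, string, date and sparse data as well as quoted values containing commas.

```python
def load_nominal_arff(text):
    """Parse a small, nominal-only ARFF document into (attributes, rows).

    attributes: list of (name, allowed_values); rows: list of dicts mapping
    attribute name -> value, with None standing in for a missing '?' value.
    """
    attributes = []
    rows = []
    in_data = False
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("%"):
            continue  # skip blank lines and comments
        lower = line.lower()
        if lower.startswith("@relation"):
            continue  # the relation name is not needed here
        if lower.startswith("@attribute"):
            head, sep, values = line.partition("{")
            if not sep:
                raise ValueError("only nominal {...} attributes are supported")
            name = head.split(None, 1)[1].strip().strip("'")
            allowed = [v.strip().strip("'") for v in values.rstrip("}").split(",")]
            attributes.append((name, allowed))
        elif lower.startswith("@data"):
            in_data = True
        elif in_data:
            values = [v.strip().strip("'") for v in line.split(",")]
            if len(values) != len(attributes):
                raise ValueError(f"expected {len(attributes)} values, got {len(values)}")
            row = {}
            for (name, allowed), value in zip(attributes, values):
                if value == "?":
                    row[name] = None  # missing value
                elif value in allowed:
                    row[name] = value
                else:
                    raise ValueError(f"{value!r} is not a declared value of {name}")
            rows.append(row)
    return attributes, rows


# Illustrative excerpt in the style of vote.arff (not the real file contents).
SAMPLE = """% toy example
@relation vote-sample
@attribute 'issue-1' {'n','y'}
@attribute 'issue-2' {'n','y'}
@attribute 'Class' {'democrat','republican'}
@data
'y','n','democrat'
?,'y','republican'
'n','n','democrat'
"""

attributes, rows = load_nominal_arff(SAMPLE)
```

Representing missing votes as `None` keeps them distinct from real `y`/`n` answers, which matters because each classifier has to decide how to treat them.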

Once the dataset has loaded successfully, the classifier algorithms can be applied. Weka is used to classify the vote data with three algorithms: naïve Bayes, decision tree and K-nearest neighbour. In further investigation, more classifier algorithms will be used to compare the results.

1.1 Classifier Algorithms

A classifier algorithm is a systematic approach to building classification models from an input data set. For instance, neural networks, rule-based classifiers, naïve Bayes classifiers, decision tree classifiers and support vector machines each represent a distinct technique for solving a classification problem. Each technique employs a learning algorithm to identify the model that best fits the relationship between the attribute set and the class label of the input data. Hence, the primary objective of a learning algorithm is to build a predictive model that predicts the class labels of previously unknown records. Here, we use the following classifier algorithms:


Decision Tree

Naïve Bayes

K-Nearest neighbour
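To make the idea of a learned predictive model concrete, here is a hypothetical Python sketch of the shape shared by the classifiers listed above: `fit` builds a model from labelled training records and `predict` assigns a class label to an unseen record. The `Classifier` interface, the `MajorityClass` baseline (similar in spirit to Weka's ZeroR rule, which always predicts the most frequent class) and the `accuracy` helper are illustrative names, not Weka's Java API.

```python
from abc import ABC, abstractmethod
from collections import Counter


class Classifier(ABC):
    """Hypothetical minimal interface: learn from labelled records, then predict."""

    @abstractmethod
    def fit(self, records, labels):
        """Build a model relating each record's attribute set to its class label."""

    @abstractmethod
    def predict(self, record):
        """Return the predicted class label for an unseen record."""


class MajorityClass(Classifier):
    """Trivial baseline: ignores the attributes and always predicts the
    most frequent class seen during training."""

    def fit(self, records, labels):
        self.label_ = Counter(labels).most_common(1)[0][0]
        return self

    def predict(self, record):
        return self.label_


def accuracy(model, records, labels):
    """Fraction of records whose predicted label matches the true label."""
    hits = sum(model.predict(r) == y for r, y in zip(records, labels))
    return hits / len(labels)


# Usage with made-up records (illustrative only).
train = [{"issue-1": "y"}, {"issue-1": "n"}, {"issue-1": "n"}]
train_labels = ["democrat", "republican", "democrat"]
baseline = MajorityClass().fit(train, train_labels)
```

A baseline like this is a useful yardstick: a real classifier on the vote data should beat the accuracy obtained by always predicting the majority class.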

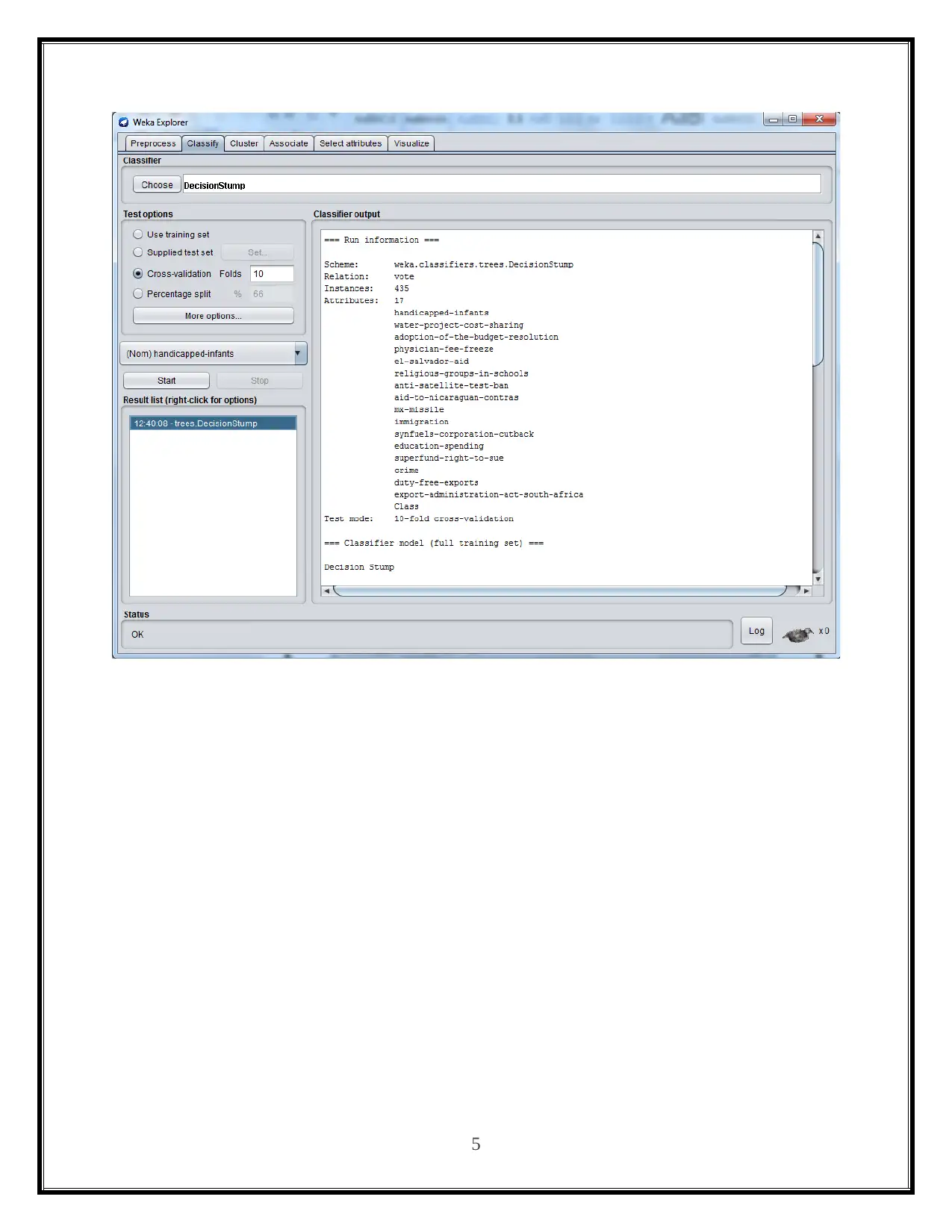

1.2 Practical Work on Decision Tree Algorithm

The decision tree classifier is a simple and widely used classification technique that applies a straightforward idea to solve the classification problem. It poses a series of carefully crafted questions about the attributes of the test record. Each time it receives an answer, a follow-up question is asked until a conclusion about the class label of the record is reached. As a rule, many decision trees can be constructed from a given set of attributes. While some trees are more accurate than others, finding the optimal tree is computationally infeasible because of the exponential size of the search space. However, various algorithms have been developed to construct a reasonably accurate, albeit suboptimal, decision tree in a reasonable amount of time. Such algorithms usually employ a greedy strategy that grows the decision tree by making a series of locally optimal decisions about which attribute to use for partitioning the data ("Chapter 3 : Decision Tree Classifier — Theory – Machine Learning 101 – Medium", 2018).
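The greedy, locally optimal split choice described above can be sketched with information gain, the entropy-based criterion used by the ID3 algorithm. This is an assumption for illustration: the report does not state which split criterion was used, and C4.5 (implemented in Weka as J48) uses a normalised variant called gain ratio. The sketch shows only one greedy step with hypothetical function names; a full learner would recurse on each partition.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total) for n in Counter(labels).values())


def information_gain(records, labels, attribute):
    """Reduction in entropy obtained by splitting on one nominal attribute."""
    partitions = {}
    for record, label in zip(records, labels):
        partitions.setdefault(record[attribute], []).append(label)
    remainder = sum(len(part) / len(labels) * entropy(part)
                    for part in partitions.values())
    return entropy(labels) - remainder


def best_split(records, labels, attributes):
    """Greedy step: pick the attribute with the highest information gain."""
    return max(attributes, key=lambda a: information_gain(records, labels, a))


# Toy data: attribute "a" separates the classes perfectly, "b" not at all.
records = [{"a": "y", "b": "y"}, {"a": "y", "b": "n"},
           {"a": "n", "b": "y"}, {"a": "n", "b": "n"}]
labels = ["D", "D", "R", "R"]
```

Because each step only looks at the immediate gain, the resulting tree is usually good but not guaranteed to be the optimal one, which is exactly the trade-off described in the paragraph above.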


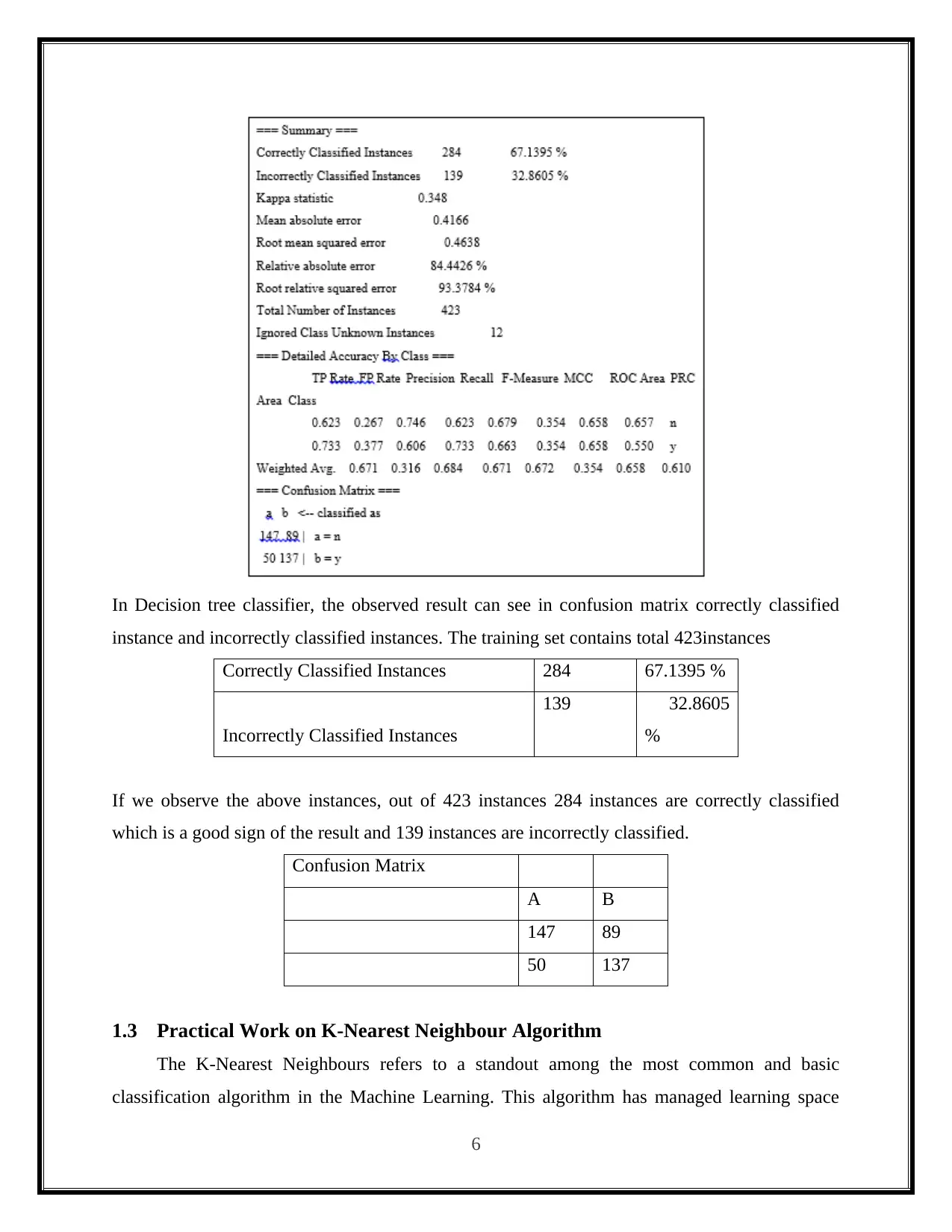

For the decision tree classifier, the confusion matrix shows the correctly and incorrectly classified instances. The training set contains 423 instances in total.

| | Instances | Percentage |
|---|---|---|
| Correctly Classified Instances | 284 | 67.1395 % |
| Incorrectly Classified Instances | 139 | 32.8605 % |

Of the 423 instances, 284 are correctly classified, which is a good sign, and 139 are incorrectly classified.

Confusion Matrix

| A | B |
|---|---|
| 147 | 89 |
| 50 | 137 |
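The percentages in the decision tree results follow directly from the confusion matrix above: correct predictions lie on the diagonal (147 + 137 = 284) and every off-diagonal cell is an error (89 + 50 = 139), out of 423 instances. A small Python sketch of that arithmetic (the function name is hypothetical):

```python
def accuracy_from_confusion(matrix):
    """Return (accuracy, error rate) for a square confusion matrix.

    Correct predictions lie on the diagonal; every other cell is an error.
    """
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total, (total - correct) / total


# Decision tree confusion matrix, copied from the table above.
dt_matrix = [[147, 89],
             [50, 137]]
acc, err = accuracy_from_confusion(dt_matrix)
print(f"accuracy = {acc:.4%}, error = {err:.4%}")
```

Running it prints an accuracy of 67.1395% and an error rate of 32.8605%, matching the figures reported above. Because only the diagonal matters, the result does not depend on whether rows or columns hold the actual classes.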

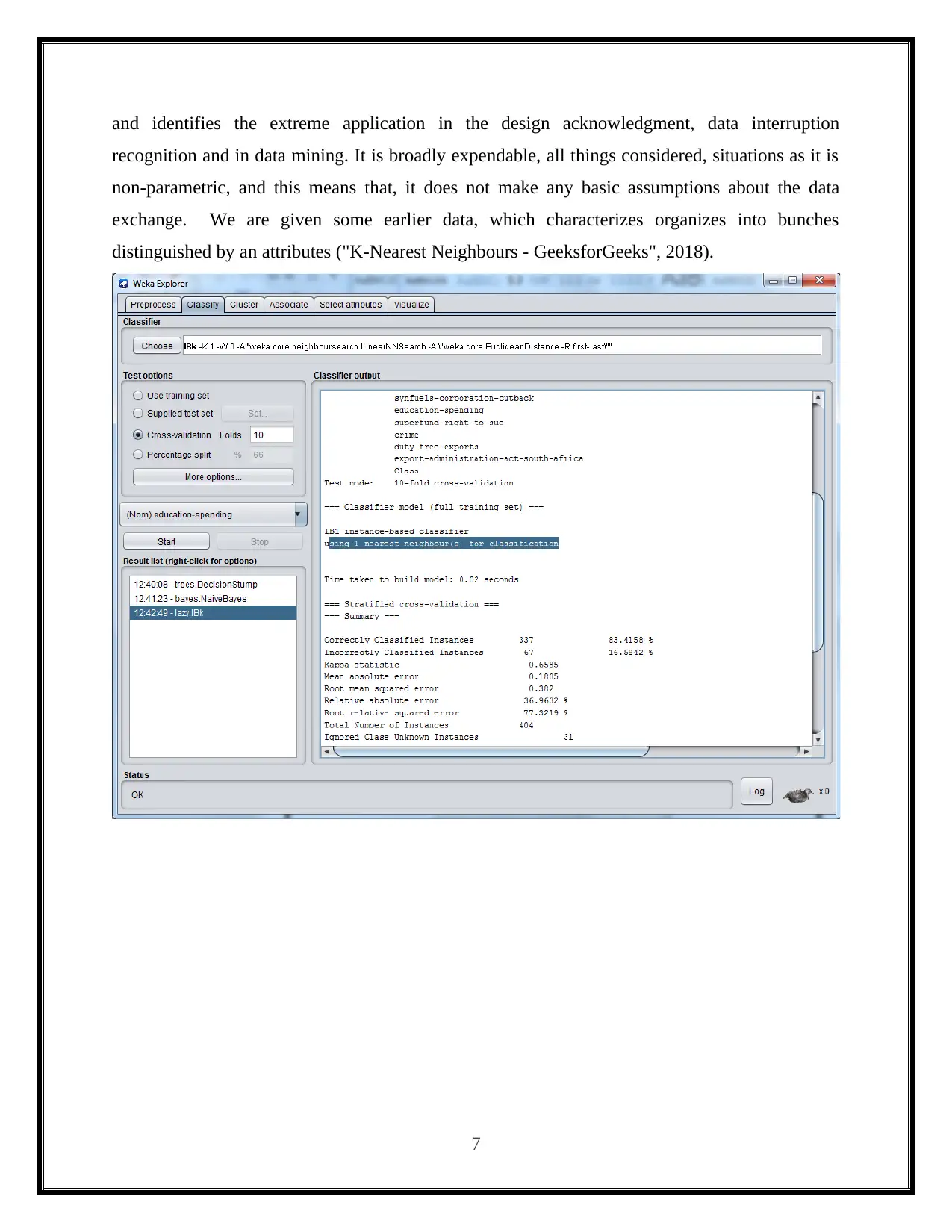

1.3 Practical Work on K-Nearest Neighbour Algorithm

K-Nearest Neighbours is one of the most common and basic classification algorithms in machine learning. It belongs to the supervised learning domain.


It is widely applied in pattern recognition, intrusion detection and data mining. It is broadly usable in real-life scenarios because it is non-parametric, which means that it makes no underlying assumptions about the distribution of the data. We are given some prior (training) data, which classifies points into groups identified by an attribute ("K-Nearest Neighbours - GeeksforGeeks", 2018).
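The prior data described above is all that kNN keeps; a new record is classified by comparing it against that data. The following minimal Python sketch assumes nominal yes/no attributes compared with Hamming distance (the number of attributes on which two records differ) and a majority vote among the k nearest records. The distance choice, the value of k and the function names are illustrative assumptions, not necessarily what Weka's IBk implementation does; ties in distance are broken by the order of the training data, and missing values are not handled.

```python
from collections import Counter


def hamming(a, b):
    """Number of attributes on which two nominal records disagree."""
    return sum(x != y for x, y in zip(a, b))


def knn_predict(train_records, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training records.

    There is no training step: the stored training set is the model,
    and all the work happens at prediction time.
    """
    ranked = sorted(zip(train_records, train_labels),
                    key=lambda pair: hamming(pair[0], query))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Hypothetical voting records (three yes/no issues) with made-up party labels.
train = [("y", "y", "n"), ("y", "y", "y"), ("n", "n", "y"),
         ("n", "n", "n"), ("y", "n", "n")]
labels = ["D", "D", "R", "R", "D"]
```

An odd k avoids tied votes when there are two classes, which is why k = 3 is used in this example.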


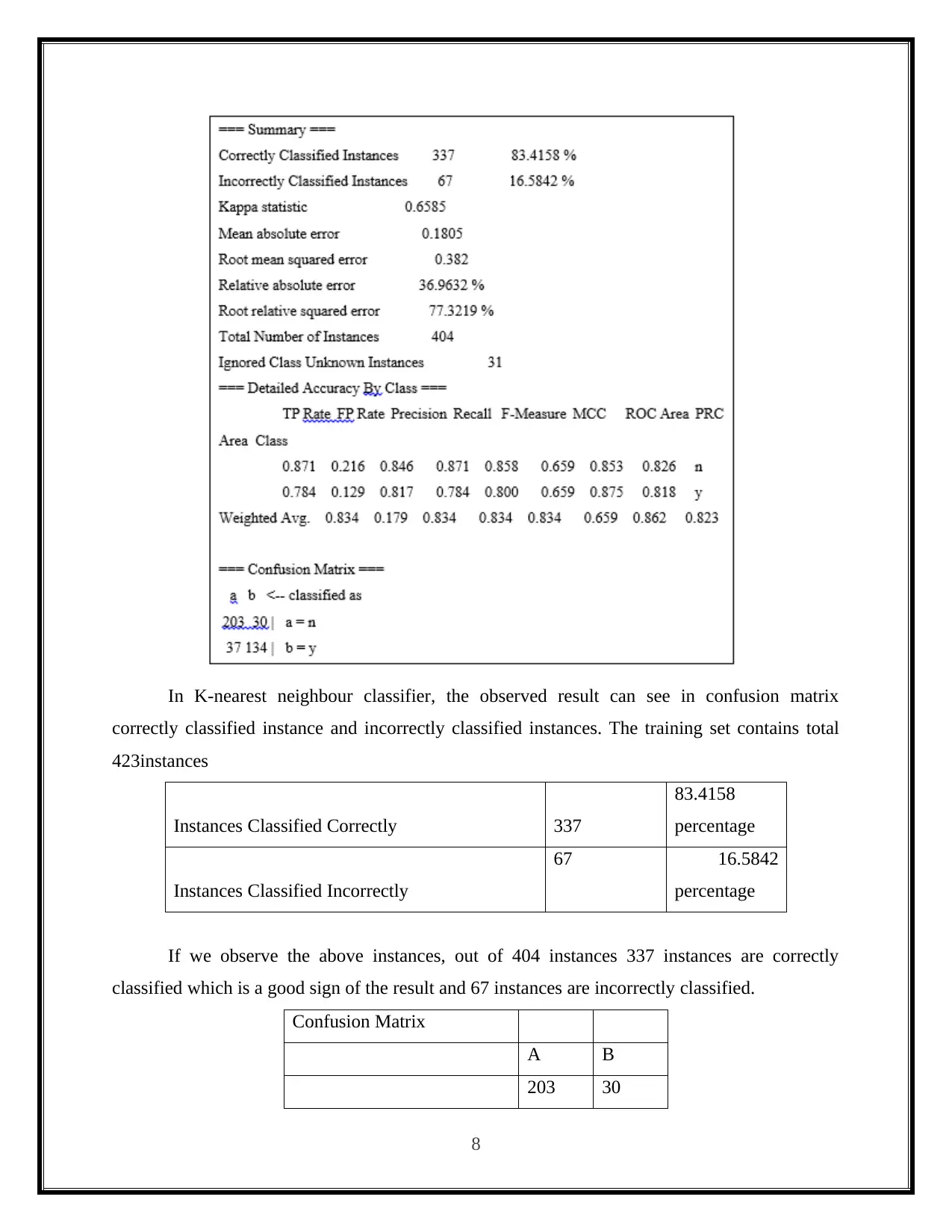

For the K-nearest neighbour classifier, the confusion matrix shows the correctly and incorrectly classified instances. The evaluation covers 404 instances in total.

| | Instances | Percentage |
|---|---|---|
| Instances Classified Correctly | 337 | 83.4158 % |
| Instances Classified Incorrectly | 67 | 16.5842 % |

Of the 404 instances, 337 are correctly classified, which is a good sign, and 67 are incorrectly classified.

Confusion Matrix

| A | B |
|---|---|
| 203 | 30 |
| 37 | 134 |
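Beyond overall accuracy, the K-nearest neighbour confusion matrix also yields per-class precision and recall. The sketch below assumes Weka's usual layout, where rows are the actual classes and columns are the predicted classes; the report does not label the rows, so the roles should be swapped if the matrix was transcribed the other way. Function and variable names are hypothetical.

```python
def per_class_metrics(matrix, labels):
    """Precision, recall and F1 for each class.

    Assumes rows are actual classes and columns are predicted classes.
    """
    results = {}
    for i, label in enumerate(labels):
        tp = matrix[i][i]
        predicted = sum(row[i] for row in matrix)  # column total
        actual = sum(matrix[i])                    # row total
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        results[label] = (precision, recall, f1)
    return results


# K-nearest neighbour confusion matrix, copied from the table above.
knn_matrix = [[203, 30],
              [37, 134]]
for label, (p, r, f) in per_class_metrics(knn_matrix, ["A", "B"]).items():
    print(f"{label}: precision={p:.3f} recall={r:.3f} F1={f:.3f}")
```

Under that assumption, class A has precision 203/240 and recall 203/233, while class B has precision 134/164 and recall 134/171.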

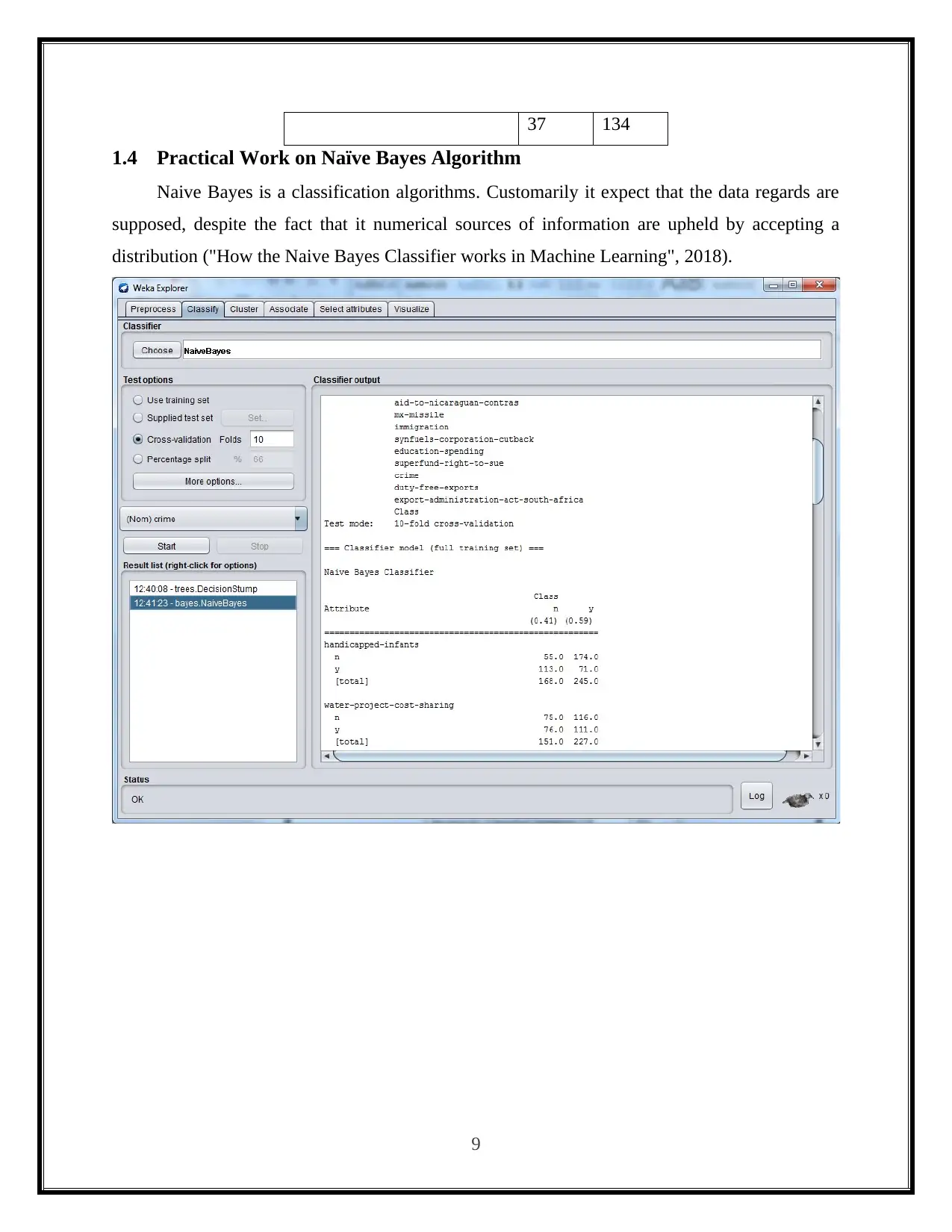

1.4 Practical Work on Naïve Bayes Algorithm

Naive Bayes is a classification algorithm. Traditionally it assumes that the input values are nominal (categorical), although numerical inputs are supported by assuming a distribution ("How the Naive Bayes Classifier works in Machine Learning", 2018).
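For nominal inputs like the yes/no votes, the naive Bayes model reduces to counting. The following Python sketch estimates the class priors and the per-class value frequencies from training records, then predicts the class with the highest product of probabilities. The add-one (Laplace) smoothing, the function names and the tuple record format are illustrative assumptions, not a description of Weka's NaiveBayes internals; missing values are not handled, and a production version would usually sum log-probabilities to avoid numerical underflow.

```python
from collections import Counter, defaultdict


def train_naive_bayes(records, labels):
    """Count class frequencies and per-class attribute-value frequencies."""
    class_counts = Counter(labels)
    value_counts = defaultdict(Counter)  # (class, attribute index) -> value counts
    domains = defaultdict(set)           # attribute index -> values seen
    for record, label in zip(records, labels):
        for i, value in enumerate(record):
            value_counts[(label, i)][value] += 1
            domains[i].add(value)
    return class_counts, value_counts, domains


def predict_naive_bayes(model, record):
    """Return the class with the highest (unnormalised) posterior probability."""
    class_counts, value_counts, domains = model
    total = sum(class_counts.values())
    best_label, best_score = None, -1.0
    for label, n_c in class_counts.items():
        score = n_c / total  # prior P(c)
        for i, value in enumerate(record):
            # Laplace (add-one) smoothing so an unseen value does not zero the product.
            score *= (value_counts[(label, i)][value] + 1) / (n_c + len(domains[i]))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical two-issue voting records with made-up party labels.
records = [("y", "n"), ("y", "y"), ("n", "y"), ("n", "n")]
labels = ["D", "D", "R", "R"]
model = train_naive_bayes(records, labels)
```

Each attribute contributes its own factor independently of the others, which is the "naive" conditional-independence assumption discussed in section 1.5.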


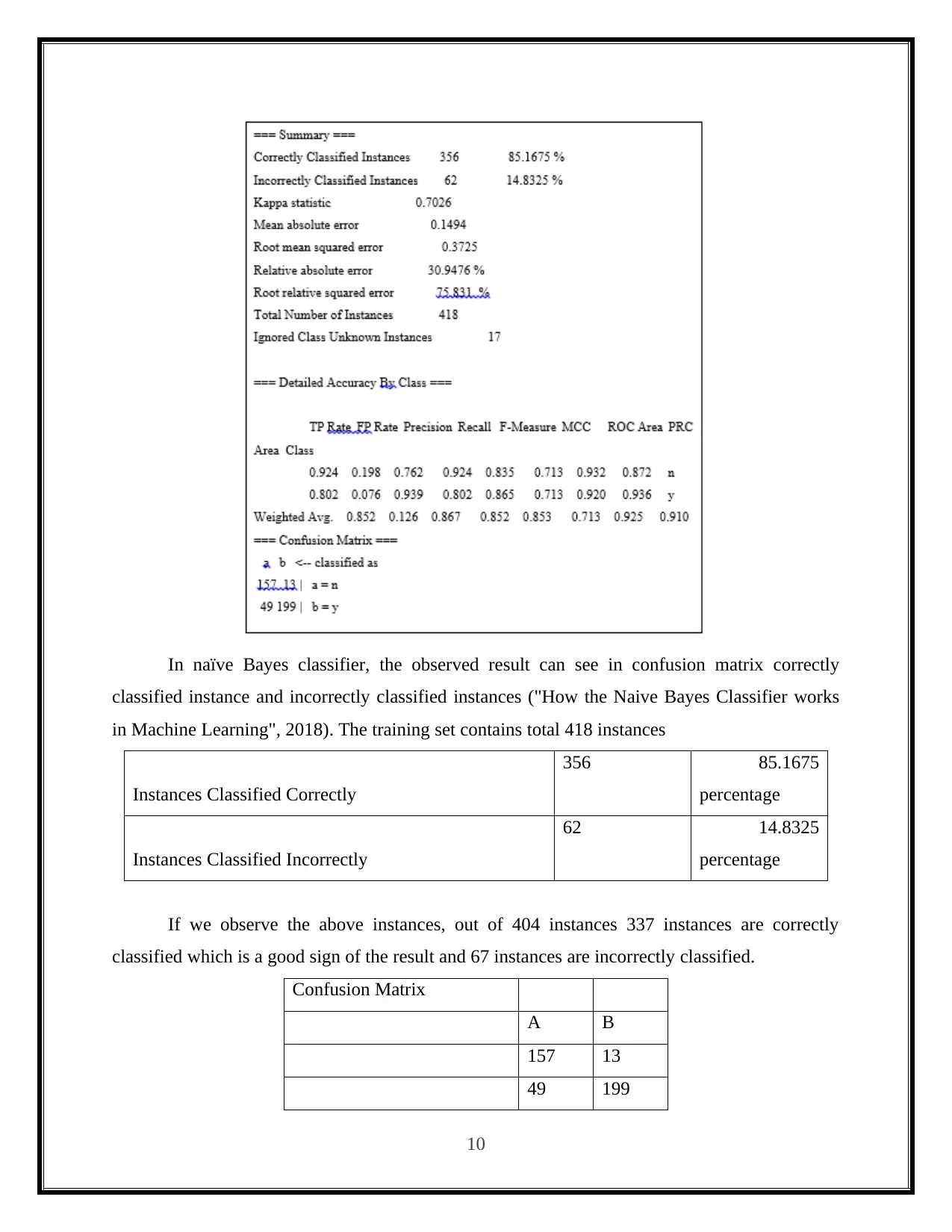

For the naïve Bayes classifier, the confusion matrix shows the correctly and incorrectly classified instances ("How the Naive Bayes Classifier works in Machine Learning", 2018). The training set contains 418 instances in total.

| | Instances | Percentage |
|---|---|---|
| Instances Classified Correctly | 356 | 85.1675 % |
| Instances Classified Incorrectly | 62 | 14.8325 % |

Of the 418 instances, 356 are correctly classified, which is a good sign, and 62 are incorrectly classified.

Confusion Matrix

| A | B |
|---|---|
| 157 | 13 |
| 49 | 199 |
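Weka's evaluation output also reports a Kappa statistic, which measures agreement between actual and predicted classes beyond what chance alone would produce: kappa = (observed agreement − chance agreement) / (1 − chance agreement). A sketch of Cohen's kappa computed from the naïve Bayes confusion matrix above follows; the function name is hypothetical. Kappa is unchanged if the matrix is transposed, so the row/column convention does not matter here.

```python
def cohen_kappa(matrix):
    """Cohen's kappa for a square confusion matrix."""
    n = sum(sum(row) for row in matrix)
    k = len(matrix)
    observed = sum(matrix[i][i] for i in range(k)) / n
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[r][c] for r in range(k)) for c in range(k)]
    # Agreement expected if predictions were independent of the actual classes.
    expected = sum(row_totals[i] * col_totals[i] for i in range(k)) / (n * n)
    return (observed - expected) / (1 - expected)


# Naive Bayes confusion matrix, copied from the table above.
nb_matrix = [[157, 13],
             [49, 199]]
print(f"kappa = {cohen_kappa(nb_matrix):.4f}")
```

Here the observed agreement is 356/418 and the chance agreement is (170 × 206 + 248 × 212)/418², so kappa comes out at roughly 0.70.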


1.5 Discussion and Consequences

Of the three classifiers, naïve Bayes uses a simple application of Bayes' theorem: the prior probability of each class and the probability of each attribute value given the class are estimated from the training data, and the attributes are assumed to be conditionally independent of one another given the class. This is an unrealistic assumption, since we expect the variables to interact and be dependent, but it makes the probabilities quick and simple to compute. Even under this unrealistic assumption, naive Bayes has proven to be a very effective classification algorithm. Naive Bayes computes the posterior probability of each class and predicts the class with the highest probability. As such, it supports both binary and multi-class classification problems.
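Written as formulas, with $x_1, \dots, x_n$ the attribute values of a record and $c$ a class, the posterior described above and the resulting prediction are:

$$
P(c \mid x_1, \dots, x_n) = \frac{P(c) \prod_{i=1}^{n} P(x_i \mid c)}{P(x_1, \dots, x_n)}
$$

$$
\hat{c} = \arg\max_{c} \; P(c) \prod_{i=1}^{n} P(x_i \mid c)
$$

The denominator is the same for every class, so it can be dropped when choosing the most probable class.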

Decision trees support both classification and regression problems, and are more recently referred to as Classification and Regression Trees (CART). A decision tree evaluates a data instance by starting at the root of the tree and moving down towards the leaves until a prediction can be made. The tree is built by greedily selecting the best split point for making predictions and repeating the process until the tree reaches a fixed depth ("Decision Tree Classifier", 2018).
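Once a tree has been built, prediction is just the root-to-leaf walk described above. A minimal Python sketch, assuming nominal attributes where each internal node tests one attribute and has one child per value; the `Node` structure, the attribute names and the example tree are hypothetical, and real learners handle unseen or missing values instead of raising an error.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Node:
    """Internal nodes test one attribute; leaves hold a class label."""
    attribute: Optional[str] = None                      # None for a leaf
    children: Dict[str, "Node"] = field(default_factory=dict)
    label: Optional[str] = None                          # set only on leaves


def predict(node, record):
    """Start at the root and follow the matching branch until a leaf is reached."""
    while node.attribute is not None:
        value = record[node.attribute]
        if value not in node.children:
            raise KeyError(f"no branch for {node.attribute}={value!r}")
        node = node.children[value]
    return node.label


# Hypothetical two-level tree over made-up voting attributes.
tree = Node(attribute="issue-1", children={
    "y": Node(label="democrat"),
    "n": Node(attribute="issue-2", children={
        "y": Node(label="republican"),
        "n": Node(label="democrat"),
    }),
})
```

Prediction cost depends only on the depth of the tree, not on the size of the training set, which contrasts with the kNN approach described next.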

The k-nearest neighbours algorithm supports both classification and regression and is also called kNN for short. It works by storing the entire training dataset and querying it to locate the k most similar training patterns when making a prediction. As such, there is no model other than the raw training dataset, and the only computation performed is the querying of the training dataset when a prediction is requested (Srivastava, 2018). It is a simple algorithm, but one that assumes very little about the problem other than that the distance between data instances is meaningful for making predictions. As a result, it often achieves good performance.

Finally, the naïve Bayes classifier gave the most effective data analysis of the three classifiers, with the highest accuracy in this study (85.1675 %, compared with 83.4158 % for K-nearest neighbour and 67.1395 % for the decision tree). It provides simple and fast data analysis and an efficient framework in which the available evidence, including housing sales data, can be gathered and used effectively. In Bayesian classification, practical learning algorithms, observed data and prior knowledge are combined. So we gain a valuable perspective on data mining that helps us understand learning more fully.
