Efficient and Robust Driver Assistance System Project Proposal

Added on 2023/06/13

Project

AI Summary

This project proposal outlines the development of an efficient and robust Advanced Driver Assistance System (ADAS) aimed at improving road safety. The proposal identifies the limitations of current ADAS technologies, such as traffic sign recognition and drowsiness detection systems, which suffer from issues like poor performance under varying environmental conditions, camera viewpoint changes, and slow processing speeds. The project aims to address these challenges by utilizing distributed representation learning techniques to create a system that is more robust, efficient, and applicable in real-world scenarios. The methodology involves data collection, dataset processing, data augmentation, and the definition of a Convolutional Neural Network (CNN) model. The experimental setup will utilize tools like Torch, Theano, Caffe, Tensorflow, and MATLAB, and the performance will be evaluated using standard metrics like recall, precision, F1-score, and accuracy. The project plan includes a detailed Gantt chart outlining the various activities and timelines for completion.

[Please fill your name]

Efficient and Robust Driver Assistance System

By [Please fill your name]

Affiliation (MSc Profile or Track) & Study no.

Table of Contents

Efficient and Robust Driver Assistance System

1. Executive Summary

2. Introduction

3. State-of-the-art/Literature Review

4. Research Question, Aim/Objectives and Sub-goals

Research questions

Goals

5. Theoretical Content/Methodology

6. Experimental Setting (Hardware or Software or Both)

7. Risk Analysis, Results, Outcome and Relevance

8. Project Planning and Gantt Chart

List of Activities

Gantt Chart

9. Conclusions

10. References

1. Executive Summary

Advanced Driver Assistance Systems (ADAS) have become an integral part of the automobile industry, driven by growing consumer interest and by government regulation of road safety. ADAS offers many benefits: monitoring the environment outside the vehicle, generating warnings when danger arises, and providing information about traffic signals so that drivers can anticipate what lies ahead. Other benefits include applying the brakes when a collision is possible and monitoring driver behavior while driving, including aggressiveness, panic, and sleepiness. However, ADAS has several drawbacks that reduce the efficiency and robustness of the system, including fading of traffic signs, changes in the camera viewpoint, natural conditions, and image blurring. The aim of this project is to mitigate these challenges by using “distributed representation learning techniques”, which can make the system more efficient, robust, and applicable in real-world scenarios.

The methodology proposed in this report is intended to improve ADAS technologies by making the system more efficient and robust, and thereby to enhance its performance. The scope of the project is to enhance driver safety on the road, with a focus on long-term administration and road safety for major automobile manufacturers, policy makers, and humanity at large.

2. Introduction

Artificial Intelligence (AI) has been contributing to improvements in everyday life by enabling automation and through its many real-world applications. AI is applicable in most sectors, including the automobile sector, where advancing technology has produced many beneficial developments. Most road accidents occur because of driver negligence, and ADAS can anticipate such scenarios and present solutions to these challenges through real-time data collection and execution. Many automated cars use LiDAR (Light Detection and Ranging), RADAR (Radio Detection and Ranging), and IR (infrared) sensors, cameras, and ultrasonic sensors. However, the performance of ADAS technology has not been very reliable to date; this report aims to highlight that perspective and to propose solutions that could eliminate the problems. It can be stated that the automobile industry’s future lies in the option of presenting driverless cars with zero error and 100% efficiency. The objective of this project is to eliminate the identified issues and to propose effective solutions that could enhance the performance of ADAS technology and make it more robust, efficient, and accurate.

3. State-of-the-art/Literature Review

ADAS can be described as an encapsulation of various systems, comprising IoT (Internet of Things) and V2V (vehicle-to-vehicle) wireless communication systems, vision camera systems, and sensor technologies (Kale and Mahajan 2015). The ADAS domain comprises many broad

sections that cannot all be covered in a single report; hence, this report focuses on two important areas: the drowsiness alert system and the traffic sign recognition system. In both scenarios, the input data is recorded as images and/or video and passed to the processing unit for real-time execution (Villalon Torres & Flores 2017). The processor performs its computation using the algorithms embedded within it, and the output decision is made accordingly. The five major stages of the computer vision pipeline are “data processing, objects segmentation and detection, feature extraction, classification and evaluation” (Zeng et al. 2015). Each stage has a distinct role, from collecting the data through processing it to extracting features. The extracted features are then passed to different classifiers for training (Choi, Song & Lee 2018). “k-Nearest Neighbor, Naïve Bayes, Linear classifier, Decision Trees, Random Forests, Multi-Layer Perceptron (MLP), Support Vector Machines (SVM), AdaBoost and ensemble of various classifiers” are the main classifiers used in computer vision research (Nguyenn, Ryong and Kyu 2014). Jung et al. (2018) noted that conventional computer vision approaches have not performed very well for ADAS technology. Previously, traffic sign recognition was delivered through conventional computer vision; however, the current approaches can identify colors and edges and extract smaller objects (Sun et al. 2015). To boost performance, many attempts combined different classifiers. SVR (Support Vector Regression) and AdaBoost contributed to improved performance; however, according to Li and Yand (2016), this approach has been very subjective in capturing and detecting features such as texture, shape, color, and spatial location, and it was limited under ambient conditions (Gudigar et al. 2017). Experimental results showed how natural conditions such as fog, smog, rain, and wind make the system vulnerable and thus affect its efficiency.
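To make the five pipeline stages concrete, the following is a minimal sketch in Python, not the implementation used in any of the cited works. It assumes tiny grayscale images stored as nested lists of 0-255 values, two hand-picked features, and a k-Nearest Neighbor vote. All function names and the threshold value are hypothetical and chosen only for illustration.

```python
import math
from collections import Counter


def preprocess(image):
    """Stage 1 (data processing): scale 0-255 pixel values to [0, 1]."""
    return [[px / 255.0 for px in row] for row in image]


def segment(image, threshold=0.5):
    """Stage 2 (segmentation): mark pixels brighter than the threshold as object (1)."""
    return [[1 if px > threshold else 0 for px in row] for row in image]


def extract_features(image, mask):
    """Stage 3 (feature extraction): mean brightness and fraction of object pixels."""
    pixels = [px for row in image for px in row]
    flags = [m for row in mask for m in row]
    return (sum(pixels) / len(pixels), sum(flags) / len(flags))


def knn_classify(features, training_set, k=3):
    """Stage 4 (classification): majority vote among the k nearest training samples."""
    nearest = sorted(training_set, key=lambda item: math.dist(features, item[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


def evaluate(predictions, labels):
    """Stage 5 (evaluation): fraction of predictions that match the true labels."""
    return sum(p == t for p, t in zip(predictions, labels)) / len(labels)


def run_pipeline(image, training_set, k=3):
    """Chain stages 1 to 4 for a single image and return the predicted label."""
    clean = preprocess(image)
    mask = segment(clean)
    return knn_classify(extract_features(clean, mask), training_set, k)
```

Hand-crafted features such as these are exactly what makes a conventional pipeline sensitive to lighting and weather, which is the limitation the reviewed studies report.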

This project focuses on the drowsiness alert system and traffic sign recognition, as these are vital factors in road accidents (Liu et al. 2016). Many researchers have contributed to identifying these major concerns and have tried to eliminate the problem in order to enhance the efficiency of ADAS technology. Keser, Kramar and Nozica (2016) proposed a classification based on three approaches, namely psychological, vehicle-based, and behavior-based approaches, in order to identify the factors affecting the overall output of ADAS technology and driverless vehicles.

4. Research Question, Aim/Objectives and Sub-goals

4. a Research questions

ADAS covers a vast range of technologies; hence, as explained earlier, this paper considers two major areas. It focuses on the drowsiness alert system and the “Traffic Sign Recognition System”, and the following research questions will be addressed in this report:

Question 1: Identification of the factors that have been limiting the application of ADAS technologies within the automobile sector.

Question 2: Identification of the factors and aspects that could be applied to enhance ADAS performance.

Question 3: Identification of the factors that could be applied to enhance the efficiency of the ADAS system and make it robust to ambient natural conditions.

Question 4: Identification of the aspects that could be applied in ADAS to improve computation for real-time decision-making and for deployment of the technology in the real world.

4. b Goals

The goal of the project is to make the system more efficient, faster in processing, and highly robust so that it is applicable in the real world. The research questions will be answered by dividing the whole project into sub-activities, which will also help in auditing and monitoring the progress of the work. The list of activities and sub-activities, along with their start dates, finish dates, and durations, is presented in section 8, and the Gantt chart has been prepared from it.

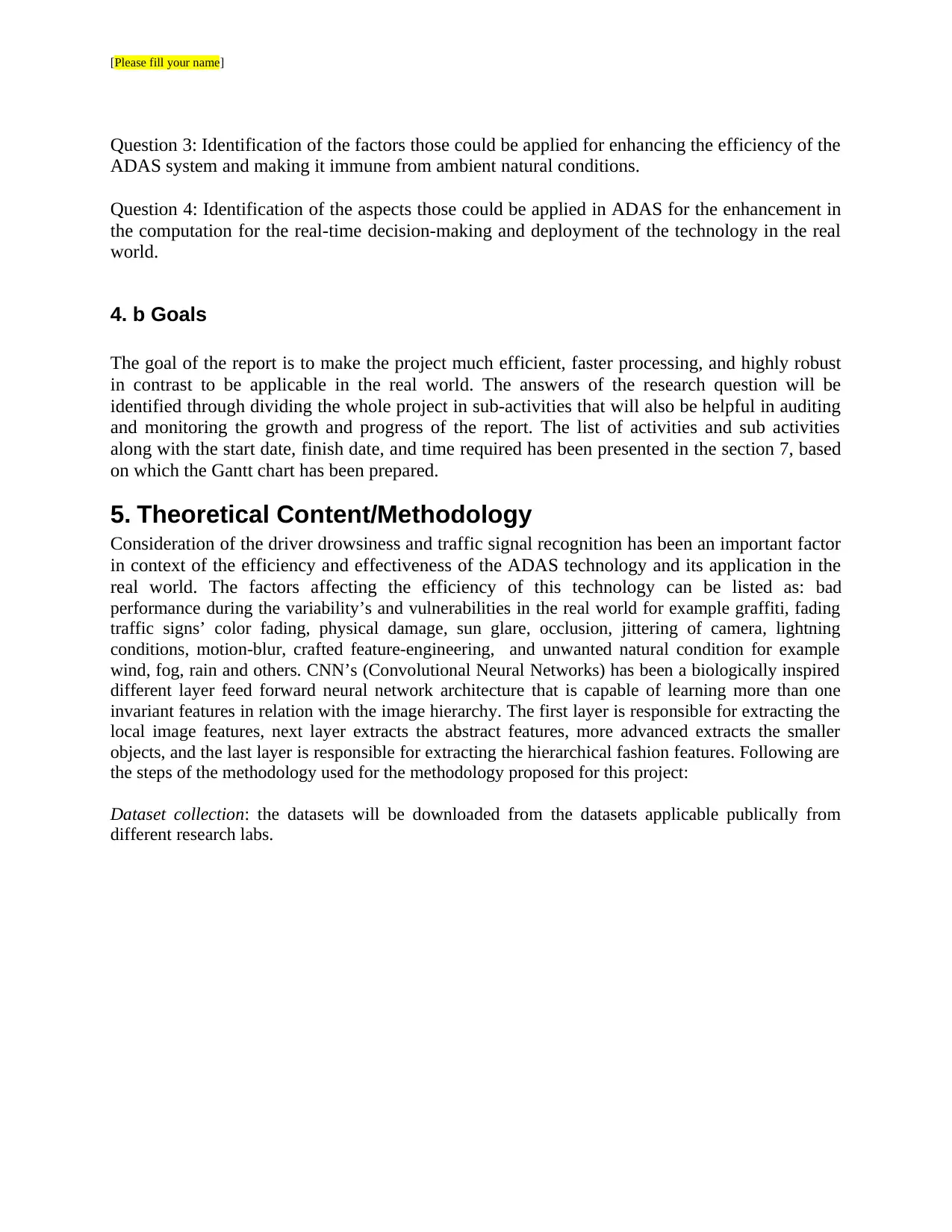

5. Theoretical Content/Methodology

Driver drowsiness and traffic sign recognition are important factors for the efficiency and effectiveness of ADAS technology and its application in the real world. The factors affecting the efficiency of this technology include poor performance under real-world variability and vulnerabilities, for example graffiti, fading of traffic sign colors, physical damage, sun glare, occlusion, camera jitter, lighting conditions, motion blur, hand-crafted feature engineering, and unwanted natural conditions such as wind, fog, and rain. A CNN (Convolutional Neural Network) is a biologically inspired, multi-layer feed-forward neural network architecture that is capable of learning multiple invariant features across the image hierarchy. The first layer extracts local image features, the next layer extracts more abstract features, deeper layers extract smaller objects, and the last layer extracts features in a hierarchical fashion. The steps of the methodology proposed for this project are as follows:

Dataset collection: the datasets will be downloaded from publicly available collections released by different research labs.

Figure 1: Hierarchy of Visual features by CNN

(Source: Charalampous & Gasteratos 2013)

Processing the explored dataset: the dataset will be explored prior to processing in order to save time and to support future tasks. Once the dataset has been explored, it will be filtered.

Data augmentation: this step plays a vital role by creating modified replicas of the original dataset, in order to increase the size of the training data and to equalize the number of samples in each class.
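As an illustration of how augmentation can both enlarge the training set and equalize class sizes, the sketch below oversamples the smaller classes with brightness-jittered copies. It is a minimal example under stated assumptions, not the proposal's actual augmentation scheme. Images are assumed to be nested lists of 0-255 grayscale values, every class is assumed to hold at least one image, and the names `jitter_brightness` and `balance_classes` are hypothetical.

```python
import random


def jitter_brightness(image, rng, max_delta=40):
    """Return a copy of the image with one random brightness offset, clipped to 0-255."""
    delta = rng.randint(-max_delta, max_delta)
    return [[min(255, max(0, px + delta)) for px in row] for row in image]


def balance_classes(samples_by_class, rng):
    """Pad every class with augmented copies until all classes match the largest one.

    samples_by_class maps a label to a list of images; the input is not modified.
    """
    target = max(len(images) for images in samples_by_class.values())
    balanced = {}
    for label, images in samples_by_class.items():
        originals = list(images)
        augmented = list(images)
        while len(augmented) < target:
            # Augment a randomly chosen original rather than an already augmented copy.
            augmented.append(jitter_brightness(rng.choice(originals), rng))
        balanced[label] = augmented
    return balanced
```

Brightness jitter is used here because it preserves the label of a traffic sign. A transform such as horizontal flipping would not be safe for direction-dependent signs.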

Defining the CNN model: considering their functionality and relevance to the CNN architecture, the following layers and training techniques can be recommended as the best approach: convolution layers, max-pooling layers, dropout, regularization, optimizers, and early stopping.
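To show what three of these building blocks compute, here is a dependency-free Python sketch of a convolution layer (implemented, as in most CNN libraries, as a cross-correlation), a ReLU activation, non-overlapping max-pooling, and inverted dropout. The ReLU activation is an addition for illustration and is not named in the proposal. This is a teaching sketch, not the model definition the project will use. In practice a framework such as Keras or TensorFlow would supply these layers, and the function names below are hypothetical.

```python
import random


def conv2d(image, kernel):
    """Valid 2-D cross-correlation of a grayscale image with a single kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def relu(feature_map):
    """Replace negative activations with zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Non-overlapping max-pooling; rows or columns that do not fill a window are dropped."""
    return [[max(feature_map[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(feature_map[0]) - size + 1, size)]
            for i in range(0, len(feature_map) - size + 1, size)]


def dropout(values, rate, rng):
    """Inverted dropout on a flat list: zero each value with probability `rate`
    and rescale the survivors by 1 / (1 - rate)."""
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]
```

For example, applying a 2x2 vertical-edge kernel `[[-1, 1], [-1, 1]]` to an image whose left two columns are 0 and right three columns are 1 produces a strong response only at the column where the intensity changes. This is the kind of local feature the first layer is described as extracting.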

Hyperparameter tuning, visualization of the model performance, and identification of the results form the last phase of the methodology.
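One simple way to carry out the tuning phase is an exhaustive grid search over candidate hyperparameter values. The sketch below is a generic illustration under assumptions: the proposal does not name a search strategy, `score_fn` is a hypothetical callback standing in for "train the model with these settings and return a validation score", and the parameter names in the usage example are placeholders.

```python
from itertools import product


def grid_search(param_grid, score_fn):
    """Evaluate every combination in param_grid and return (best_params, best_score).

    param_grid maps a hyperparameter name to a list of candidate values.
    score_fn takes a dict of hyperparameters and returns a score (higher is better).
    """
    names = sorted(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Usage with a toy scoring function that peaks at learning_rate=0.01, dropout=0.5.
def toy_score(params):
    return -(params["learning_rate"] - 0.01) ** 2 - (params["dropout"] - 0.5) ** 2


best, score = grid_search(
    {"learning_rate": [0.1, 0.01, 0.001], "dropout": [0.25, 0.5]}, toy_score
)
```

The cost grows with the product of the list lengths, so a random search or a coarse-to-fine grid may be preferable when each training run is expensive.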

6. Experimental Setting (Hardware or Software or Both)

To evaluate the performance of the presented methodology on the driver drowsiness alert system and on traffic sign recognition, the experimental phase will be carried out using Torch, Theano, Caffe, TensorFlow, MATLAB, and various libraries and wrappers such as Scikit-Learn, Lasagne, and Keras. The toolboxes required for the MATLAB (2017a) experimental setup include “Optimization Toolbox, Image Processing Toolbox, Image Acquisition Toolbox, Computer Vision Systems Toolbox, Statistics and Machine Learning Toolbox, Neural Network Toolbox, and Automated Driving System Toolbox.”

7. Risk Analysis, Results, Outcome and Relevance

Many standard evaluation techniques can be used to compare results on large datasets that contain all of the unwanted factors; however, general evaluation metrics for classification can be used, consisting of recall, precision, F1-score, and accuracy. The formulas for calculating these metrics are as follows:

Recall (R): $R = \frac{TP}{TP + FN}$

Precision (P): $P = \frac{TP}{TP + FP}$

F-Score (F): $F_\beta = \frac{(1 + \beta^2) \cdot P \cdot R}{\beta^2 \cdot P + R}$

Accuracy (A): $A = \frac{TP + TN}{TP + FP + TN + FN}$

Here TP, FP, TN, and FN denote the numbers of true positives, false positives, true negatives, and false negatives, and $\beta = 1$ gives the F1-score.
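The metrics can be computed directly from the four confusion-matrix counts. The sketch below uses the standard definitions, in which recall divides by true positives plus false negatives and precision divides by true positives plus false positives. The function name and the convention of returning 0.0 for an undefined ratio are assumptions made for this example.

```python
def classification_metrics(tp, fp, tn, fn, beta=1.0):
    """Return recall, precision, F-beta score, and accuracy from confusion counts.

    Ratios with a zero denominator are reported as 0.0 (a convention chosen here).
    """
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    denominator = beta ** 2 * precision + recall
    f_score = (1 + beta ** 2) * precision * recall / denominator if denominator else 0.0
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    return {
        "recall": recall,
        "precision": precision,
        "f_score": f_score,
        "accuracy": accuracy,
    }


# Example: 8 true positives, 2 false positives, 85 true negatives, 5 false negatives.
metrics = classification_metrics(tp=8, fp=2, tn=85, fn=5)
```

In this example accuracy is 0.93 while recall is only about 0.62. Traffic sign and drowsiness datasets are often imbalanced, which is why the F-score is worth reporting alongside accuracy.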

8. Project Planning and Gantt Chart

8. a List of Activities:

| WBS | Task Name | Duration | Start | Finish |
| --- | --- | --- | --- | --- |
| 1 | Estimated Plan for Engineering Graduate Project | 89 days | Mon 4/23/18 | Thu 8/23/18 |
| 1.1 | Project Preparation | 14 days | Mon 4/23/18 | Thu 5/10/18 |
| 1.1.1 | Collection of dataset | 4 days | Mon 4/23/18 | Thu 4/26/18 |
| 1.1.2 | Understanding the dataset | 3 days | Fri 4/27/18 | Tue 5/1/18 |
| 1.1.3 | Dataset visualization | 4 days | Wed 5/2/18 | Mon 5/7/18 |
| 1.1.4 | kick-off review | 3 days | Tue 5/8/18 | Thu 5/10/18 |
| 1.1.5 | MS 1: Project preparation review and closing | 0 days | Thu 5/10/18 | Thu 5/10/18 |
| 1.2 | Dataset processing | 14 days | Fri 5/11/18 | Wed 5/30/18 |
| 1.2.1 | Dataset filtering | 7 days | Fri 5/11/18 | Mon 5/21/18 |
| 1.2.2 | Dataset segmentation | 7 days | Tue 5/22/18 | Wed 5/30/18 |
| 1.2.3 | MS 2: Dataset Processing closing | 0 days | Wed 5/30/18 | Wed 5/30/18 |
| 1.3 | Project implementation | 21 days | Thu 5/31/18 | Thu 6/28/18 |
| 1.3.1 | CNN model selection | 2 days | Thu 5/31/18 | Fri 6/1/18 |
| 1.3.2 | Defining layers of model | 3 days | Mon 6/4/18 | Wed 6/6/18 |
| 1.3.3 | Max-pooling | 3 days | Thu 6/7/18 | Mon 6/11/18 |
| 1.3.4 | Dropout | 2 days | Tue 6/12/18 | Wed 6/13/18 |
| 1.3.5 | L2 regularization | 3 days | Thu 6/14/18 | Mon 6/18/18 |
| 1.3.6 | Optimization | 1 day | Tue 6/19/18 | Tue 6/19/18 |
| 1.3.7 | Early Stopping | 2 days | Wed 6/20/18 | Thu 6/21/18 |
| 1.3.8 | Implementation | 2 days | Fri 6/22/18 | Mon 6/25/18 |

1.3.9 Mid term review 3 days Tue 6/26/18 Thu 6/28/18

1.3.10 MS 3: Project Implementation

review and closing 0 days Thu 6/28/18 Thu 6/28/18

1.4 Hyper parameter tuning 12 days Fri 6/29/18 Mon 7/16/18

1.4.1 Tuning model hyper parameter 6 days Fri 6/29/18 Fri 7/6/18

1.4.2 Training model 4 days Mon 7/9/18 Thu 7/12/18

1.4.3 visualization of model 2 days Fri 7/13/18 Mon 7/16/18

1.4.4 Milestone 4: Hyper parameter

tuning closing 0 days Mon 7/16/18 Mon 7/16/18

1.5 Evaluation based n test and

best selection 7 days Tue 7/17/18 Wed 7/25/18

1.5.1 Selection of best model 3 days Tue 7/17/18 Thu 7/19/18

1.5.2 Final test results 2 days Fri 7/20/18 Mon 7/23/18

1.5.3 green light review 2 days Tue 7/24/18 Wed 7/25/18

1.5.4 MS 5: Evaluation Review and

Closing 0 days Wed 7/25/18 Wed 7/25/18

1.6 project report delivery 21 days Thu 7/26/18 Thu 8/23/18

1.6.1 Report Writing 14 days Thu 7/26/18 Tue 8/14/18

1.6.2 Final review 4 days Wed 8/15/18 Mon 8/20/18

1.6.3 Final Submission 2 days Tue 8/21/18 Wed 8/22/18

1.6.4 Submission of report 1 day Thu 8/23/18 Thu 8/23/18

1.6.5 MS 6: Closing Project 0 days Thu 8/23/18 Thu 8/23/18

1.6.6 Closing Project 0 days Thu 8/23/18 Thu 8/23/18

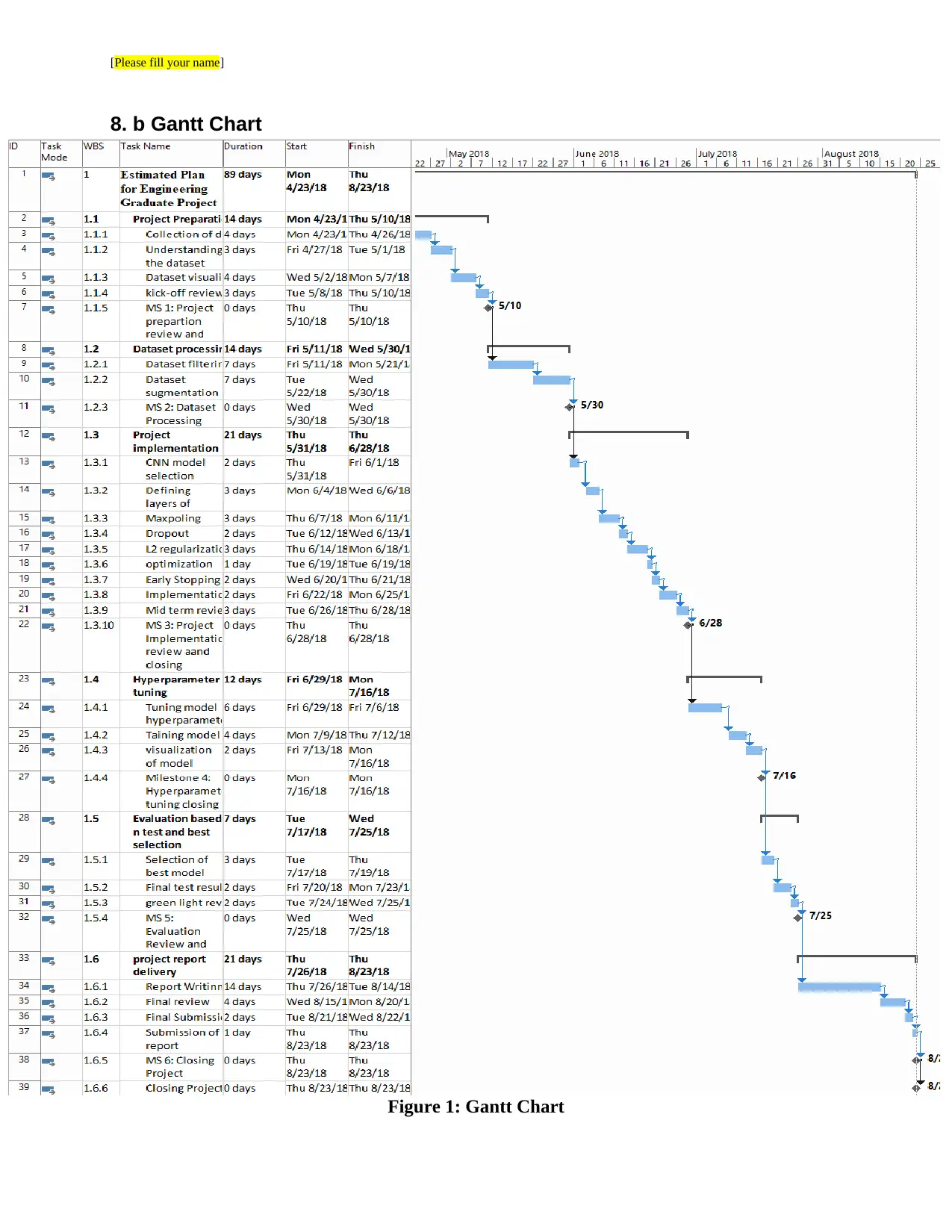

8. b Gantt Chart

Figure 1: Gantt Chart

(Source: Created by Author Using MS Project)

9. Conclusions

ADAS assists drivers with safer, partly automated driving, and the technology can be applied in real-world practice. It offers many benefits; however, it has not yet been efficient or effective enough in the real world because of many natural phenomena. Under varying conditions its performance degrades because of factors such as rotation, scaling, shearing, blur, shifting, motion, and occlusion. This project aims to eliminate the challenges identified in the application of ADAS and to improve its efficiency, robustness, and performance, so that the technology becomes more reliable for everyone who uses it. The report presents a single integrated model that works on two different modalities by combining additional functionalities within one system, which reduces economic cost. The project also adds to the existing literature on driverless cars.
