Designing Emotion-Aware Music Recommendation Systems

Added on 2019/10/01


Emotion detection media player using 3 different algorithms


Table of Contents

Chapter 1: Introduction....................................................................................................................3

1.1 Background of the paper........................................................................................................3

1.2 Aim of the research................................................................................................................4

1.3 Objectives of the research......................................................................................................4

1.4 Research questions.................................................................................................................4

1.5 Significance of the paper.......................................................................................................5

1.6 Problem statement.................................................................................................................5

1.7 Research limitation................................................................................................................6

1.8 summary................................................................................................................................6

1.7 structure of the research project.............................................................................................7

Chapter 2: Literature review............................................................................................................8

2.1 Emotion detection of human..................................................................................................8

2.2 Facial expression based music player....................................................................................8

2.3 Edge detection music player................................................................................................10

2.4 Support Vector Machine or SVM........................................................................................12

Chapter 3: Research Methodology................................................................................................16

3.1 Introduction..........................................................................................................................16

3.2 Research Philosophy............................................................................................................16

3.3 Research Approach..............................................................................................................17

3.4 Research design...................................................................................................................17

3.5 Data collection process........................................................................................................18

3.6 Data analysis modes............................................................................................................19

Chapter 4: Data analysis................................................................................................................20

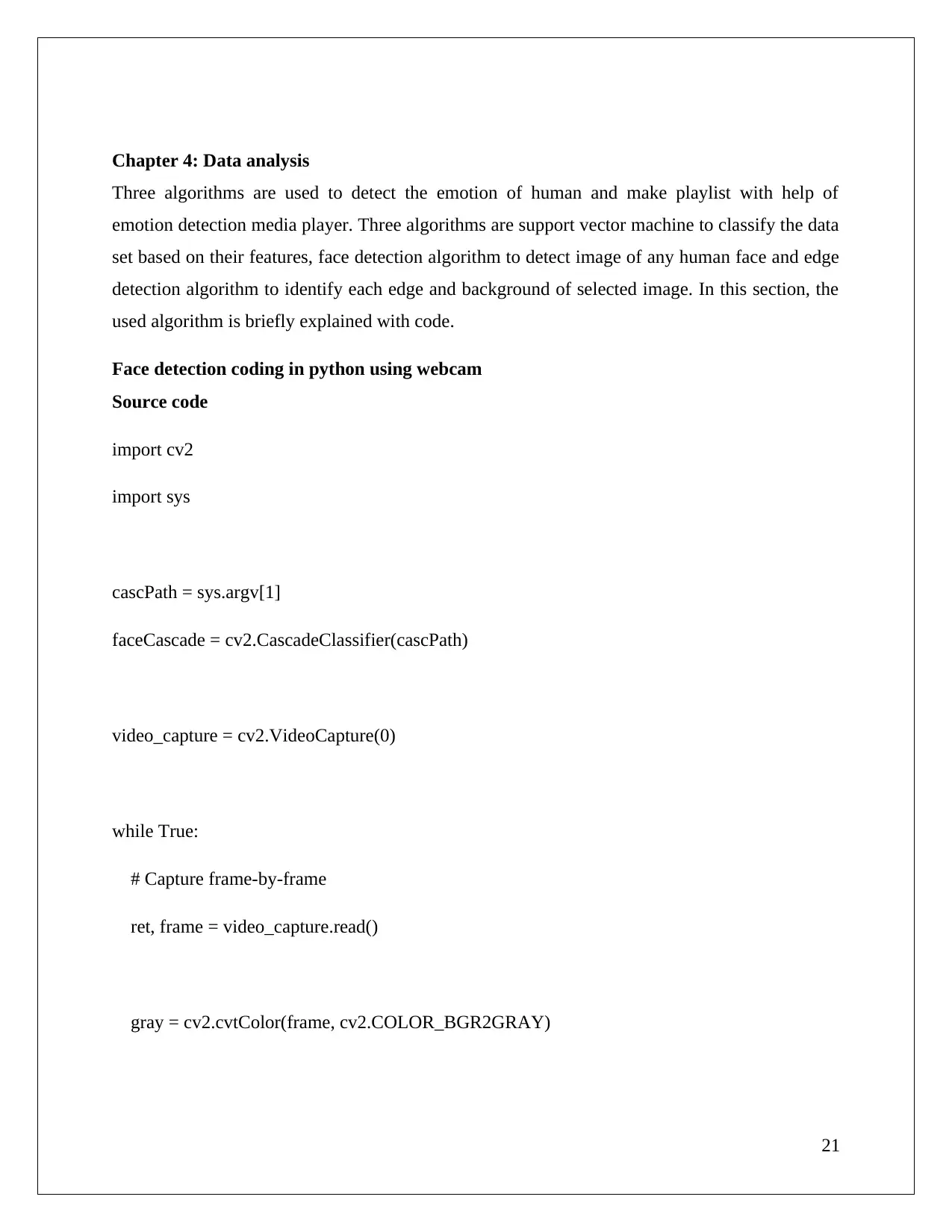

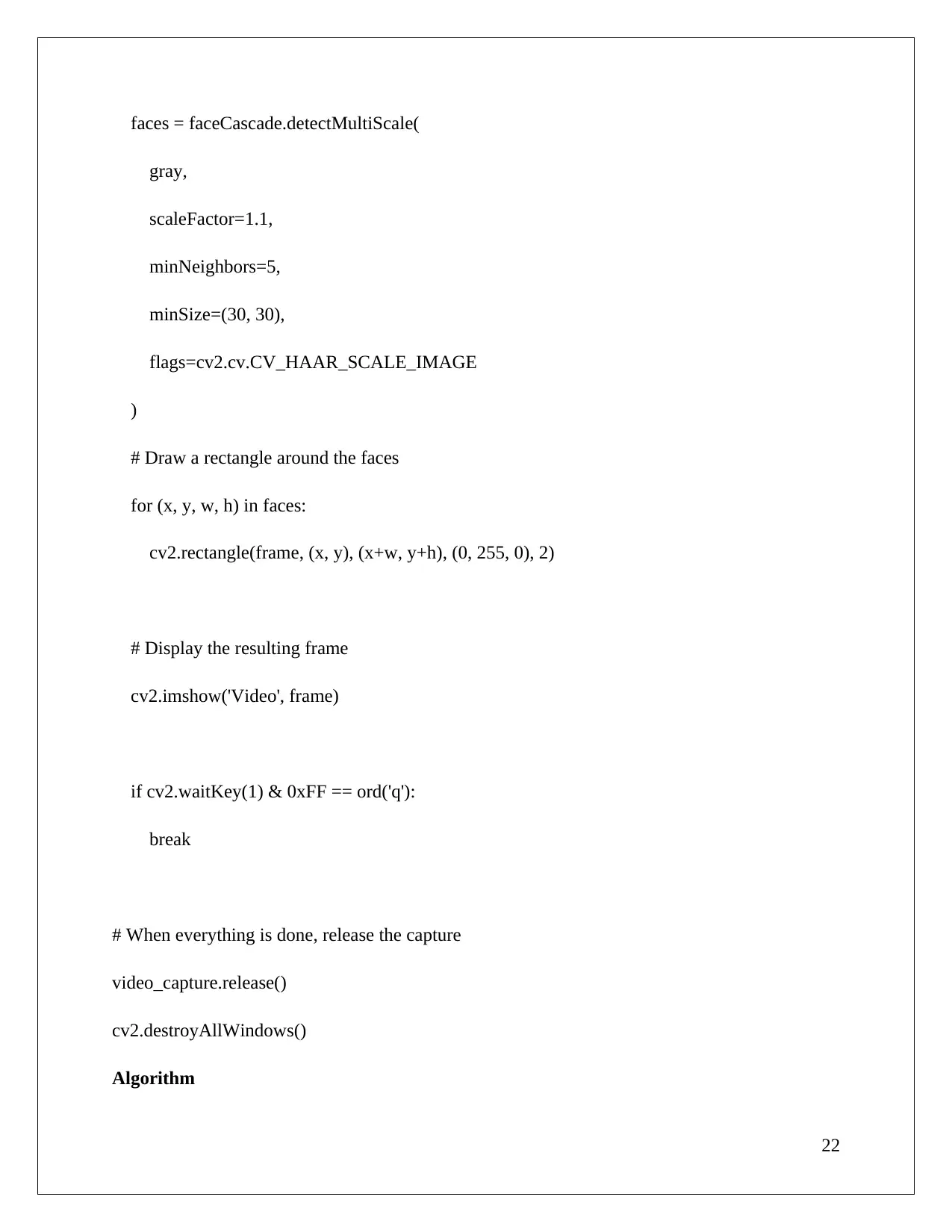

Face detection coding in python using webcam........................................................................20

2


Edge detection algorithm

Chapter 5: Conclusion and Recommendation

References


Chapter 1: Introduction

1.1 Background of the paper

Music plays an important role in people's lives because it is a significant medium of relaxation for listeners. Music technology has improved considerably, and listeners now use features such as local playback, multicast streams, reverse and other facilities. Listeners are satisfied with these features and play music according to their mood and behaviour. Audio feeling recognition provides a list of music suited to the listener's various moods and emotions; it classifies the received audio signal into categories of emotion. The AN signal is used to explore features of the audio, and MR is used to extract important data from the AN signal. Listeners try to arrange their playlists according to their mood, but doing so is time-consuming. Various music players provide additional features, such as lyrics shown with the singer's name. In the proposed system, the playlist is arranged according to the listener's emotions, which saves the time otherwise spent building playlists manually.

Facial expressions and physical gestures are the most direct ways in which a person expresses emotion and mood. The system extracts the facial expression and, based on it, automatically generates a playlist that matches the listener's mood and behaviour. It takes less time, reduces hardware cost and removes memory overhead. Facial expressions are classified into five categories: joy, surprise, excitement, anger and sadness. High-accuracy audio extraction technology is used to extract the relevant data from an audio signal in less time. An emotion model classifies songs into seven types, such as unhappy, anger, excitement with joy, sad-anger, joy and surprise. The emotion-audio module combines the feeling extraction module and the audio feature extraction module. This mechanism offers greater potential and better real-time performance than recent methodologies. Various approaches are used to process edge detection of an image, such as mouse input, AI-based speech recognition and others. In this case, three algorithms are used: edge detection, face detection and SVM. The edge detection algorithm reduces the computation needed to process a large image. The face detection algorithm detects objects and images with high accuracy, and it is very fast.

The system can extract a person's emotion and mood and begin to communicate with them. It senses the facial expression and arranges the playlist accordingly, so listeners do not need to select a playlist themselves based on their mood or emotion. A computer can communicate with human beings by talking, reacting and extracting emotion, and it can automatically infer their feelings. In recent years, emotion detection has driven new technology for image processing, human-machine interaction and machine learning. Emotion detection now plays a vital role in neuroscience, computer science, medicine and other fields. It is also important for rational decision-making and for interaction on social media. The system can understand what the listener wants to hear according to their current state of mind.
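
The idea of arranging a playlist from a detected emotion can be sketched in a few lines of Python. This is only an illustration, not the report's implementation: the function names `build_playlists` and `playlist_for` and the song titles are hypothetical, and the emotion label is assumed to come from a separate facial-expression detector. The five categories are the ones named in this section.

```python
from collections import defaultdict

# The five facial-expression categories named in the report.
EMOTIONS = ("joy", "surprise", "excitement", "anger", "sadness")


def build_playlists(library):
    """Group (title, emotion) pairs into one playlist per emotion."""
    playlists = defaultdict(list)
    for title, emotion in library:
        if emotion not in EMOTIONS:
            raise ValueError(f"unknown emotion tag: {emotion!r}")
        playlists[emotion].append(title)
    return dict(playlists)


def playlist_for(detected_emotion, playlists):
    """Return the songs tagged with the detected emotion, or an empty list."""
    return playlists.get(detected_emotion, [])


# Hypothetical library: titles and tags are placeholders.
library = [
    ("Track A", "joy"),
    ("Track B", "sadness"),
    ("Track C", "joy"),
    ("Track D", "anger"),
]
playlists = build_playlists(library)
print(playlist_for("joy", playlists))
```

With the lookup kept separate from detection, the detector can be replaced without touching the playlist logic.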

1.2 Aim of the research

The aim of the research is to understand the functioning of the three algorithms used to develop the emotion detection media player.

1.3 Objectives of the research

The objectives of the research are listed below:

To play songs automatically based on human emotions and feelings.

To discuss three algorithms for detecting human emotion.

To communicate with people and extract their gestures and state of mind.

To discuss the impact of the three algorithms on the emotion detection media player.

To develop a proper algorithm for recognizing human emotion.

To develop an appropriate emotion-based system that identifies human gestures using suitable technology.

1.4 Research questions

How can a song be played automatically according to the listener's mood?

What is the main purpose of the three algorithms in detecting human emotion?

How can people's emotions and gestures be extracted using the media player?

What is the impact of the three algorithms on the emotion detection media player?

How can an appropriate algorithm for detecting emotion be developed?


How can an appropriate media player be developed to extract human emotion?

1.5 Significance of the paper

The significance of the emotion detection media player lies in its attention to the user's emotion, which it is designed to detect. The system plays music automatically by understanding the listener's emotion, which reduces both computation time and the time the listener spends searching. It also reduces memory overhead. The system gives accurate and efficient results when detecting human gestures. It recognizes a person's facial expression and arranges a suitable playlist for them, focusing on the features of the detected emotion rather than on the actual image. The listener does not need to choose a song from the playlist manually, and no pre-built playlists are required. Music lovers do not need to categorize songs according to their gestures, mental state and feelings.

The SVM algorithm is used for classification and regression analysis, and it uses a hyperplane to do so. It is used to access images with the help of computer devices such as a mouse and various computer networks, and it mainly detects digital images from video. It classifies data according to behaviour and features. The technique transforms the data and, based on the collected data set, sets boundaries between the possible outcomes.

The second algorithm is edge detection. Edges are a basic element of any image, and an edge is a set of several pixels. Edge detection is one of the best techniques for extracting the edges of an image, and its output is expressed as a two-dimensional function.

The third algorithm used for emotion detection is face recognition. Its main advantage is that it identifies a person's actual emotion, such as joy, sadness or anger, from their facial expression. The algorithm is mainly responsible for reducing the computation time needed to detect a person's facial expression.
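
To make the description of edge detection concrete, the following minimal sketch computes a Sobel gradient magnitude over a small grayscale image stored as a list of rows. The report does not say which edge operator it uses, so the choice of Sobel is an assumption, as are the function names `sobel_magnitude` and `edge_map`, the toy image and the threshold. The result is again a two-dimensional grid, matching the statement that the output is a two-dimensional function.

```python
import math

# Standard 3x3 Sobel kernels for horizontal and vertical gradients.
SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def sobel_magnitude(image):
    """Return the gradient magnitude per interior pixel; border pixels stay 0."""
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    pixel = image[y + dy][x + dx]
                    gx += SOBEL_X[dy + 1][dx + 1] * pixel
                    gy += SOBEL_Y[dy + 1][dx + 1] * pixel
            out[y][x] = math.hypot(gx, gy)
    return out


def edge_map(image, threshold):
    """Mark pixels whose gradient magnitude reaches the threshold as edges (1)."""
    return [[1 if m >= threshold else 0 for m in row]
            for row in sobel_magnitude(image)]


# Toy 5x6 image (assumed data): dark left half, bright right half.
image = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
edges = edge_map(image, threshold=255)
for row in edges:
    print(row)
```

Only the two columns on either side of the dark-to-bright boundary are marked, which illustrates how an edge map discards most of the image and leaves less data for later stages to process.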

1.6 Problem statement

Creating and sorting playlists manually from a huge number of songs is a difficult task for music lovers. It is almost impossible to keep track of a large number of songs in a playlist. Songs that have not been played for a long time waste a lot of space, and the listener has to delete them manually. Listeners also have difficulty identifying a suitable song and playing songs to match their changing moods. At present, there is no easy way to change or update a playlist automatically, so the listener has to update it manually. The playlist a listener wants is not the same at all times; it changes frequently. At present, no system addresses these problems.

1.7 Research limitation

The methodologies used in this research have some limitations. Three algorithms are used to design the system. Using two or more algorithms together can make the newly developed system more efficient; however, doing so increases the cost. It is also very time-consuming, because each algorithm has to be processed efficiently. This is a major limitation of the research. With more time and budget, the research would be more effective and the improved system would produce more accurate output.

1.8 Summary

Chapter 1 presents the background of the emotion detection media player. The aim of the research is to understand the functioning of the three algorithms used to develop the emotion detection media player. The main objective is to develop a new system that detects human emotion and automatically generates song lists based on it. To do this, the system uses three algorithms: the edge detection algorithm detects the edges of the digital image, the face detection algorithm detects the person's facial expression, and SVM classifies the detected image according to its features and behaviour.
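
The role of SVM as a classifier that separates feature vectors with a hyperplane can be illustrated with a small linear model. This is a sketch under stated assumptions, not the report's implementation: it trains a linear SVM by subgradient descent on the regularised hinge loss, the two-dimensional feature vectors and the labels (+1 and -1, standing for two emotions) are invented toy data, and the hyperparameters are arbitrary. A real system would more likely rely on an established library.

```python
def train_linear_svm(samples, labels, epochs=20, lr=0.1, lam=0.01):
    """Fit weights w and bias b by subgradient descent on the hinge loss."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:
                # Inside the margin or misclassified: move the hyperplane.
                w = [wi + lr * (y * xi - lam * wi) for wi, xi in zip(w, x)]
                b += lr * y
            else:
                # Correct with enough margin: apply only the regularisation shrink.
                w = [wi - lr * lam * wi for wi in w]
    return w, b


def predict(w, b, x):
    """Return +1 or -1 depending on the side of the hyperplane w.x + b = 0."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1


# Toy, linearly separable features (assumed): +1 and -1 stand for two emotions.
samples = [(2.0, 2.0), (3.0, 3.0), (2.0, 3.0),
           (-2.0, -2.0), (-3.0, -3.0), (-2.0, -3.0)]
labels = [1, 1, 1, -1, -1, -1]

w, b = train_linear_svm(samples, labels)
print([predict(w, b, x) for x in samples])
```

The learned pair `(w, b)` is the hyperplane; classifying a new feature vector only requires checking which side of it the vector falls on.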


1.7 Structure of the research project

The research project is structured as follows: Introduction; Literature Review; Methodology; Data Analysis and Findings; Conclusion and Recommendations.


Chapter 2: Literature review

2.1 Emotion detection of human

According to Sarda et al. (2019), a new and improved technology for music generation has been introduced. A person's mood can be detected from different sources, such as audio, video, images and sensors, and it is identified from facial expressions as well as tone of speech. A person's physical activity can be recognized by the mobile phone they carry. Given a large amount of data, computation is sufficient to determine the person's actions. Machine learning uses the training data to classify and predict outcomes. Such technologies make it easier to identify a person's mood and gestures. The media player continuously follows the user's listening habits and provides a playlist that matches the recognized mood. It is also called a personalized playlist generator.

According to Rahman and Mohamed (2016), music plays an important role in human life. People listen to different kinds of music to lift their mood and divert their state of mind. Facial expression is the best way to capture human emotion, and facial expression recognition detects a person's emotion from their face. Face recognition techniques help the system identify the emotion for a particular function. Some well-known music services, such as Spotify, offer mood-based or emotion-based song lists, but the listener cannot modify them. In the proposed system, the playlist is based on the listener's facial expression, from which the current emotion is detected, and listeners then play songs from this list according to their mood. The proposed system was built using Rapid Application Development and Android Studio, and it uses the Affdex SDK to recognize facial expressions. The system provides a list of songs relevant to the listener's current emotion.

2.2 Facial expression based music player

Kamble and Kulkarni (2016) describe a method of listening to music based on a person's current emotion. An automated system is introduced to perform this task, using an improved algorithm to detect the current emotion and play suitable music. Compared with manually searching for songs and updating the playlist according to the current emotion, it is cost-effective and saves time. The PCA algorithm and a Euclidean distance classifier are used to detect the facial expression. An inbuilt camera captures the facial expression, which reduces both the time and the cost of system design. The accuracy of this system is about 84.82 percent.
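
The Euclidean-distance step of such a system can be sketched as follows. The sketch assumes that each face has already been reduced to a short feature vector (for example, by projecting it onto principal components); the PCA step itself is not shown. The function name `nearest_expression`, the template vectors and their labels are hypothetical and are not taken from Kamble and Kulkarni.

```python
import math


def nearest_expression(features, templates):
    """Return the label whose template is closest in Euclidean distance."""
    return min(templates,
               key=lambda label: math.dist(features, templates[label]))


# Hypothetical three-component feature vectors, one template per expression.
templates = {
    "joy": (0.9, 0.1, 0.3),
    "sadness": (-0.7, 0.4, 0.0),
    "anger": (0.1, -0.8, 0.5),
}

print(nearest_expression((0.8, 0.0, 0.2), templates))
```

Because the comparison runs on a few coefficients rather than on raw pixels, matching a new face against the stored templates stays cheap.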

According to Noblejas et al. (2017), a new model built from Filipino songs is used to identify human emotion. The songs are classified according to their characteristics, and the resulting classification is presented to listeners. Classifiers such as Naive Bayes, SVM and K-nearest neighbour are used to classify the songs. The researchers' main aim is to find the most suitable classifier for Filipino songs. To check its accuracy, the model is tested again with a different data set.
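
Choosing between classifiers by testing them on a separate data set can be sketched as below. This is an illustration only: the two candidates shown, a k-nearest-neighbour classifier and a nearest-centroid classifier, are stand-ins chosen for brevity (Naive Bayes and SVM are not implemented here), and the two-number song descriptors and mood labels are invented toy data.

```python
import math
from collections import Counter


def knn_classifier(train, k=3):
    """Label a point by majority vote among its k nearest training points."""
    def classify(point):
        nearest = sorted(train, key=lambda item: math.dist(point, item[0]))[:k]
        return Counter(label for _, label in nearest).most_common(1)[0][0]
    return classify


def centroid_classifier(train):
    """Label a point by the nearest class mean."""
    grouped = {}
    for features, label in train:
        grouped.setdefault(label, []).append(features)
    centroids = {
        label: tuple(sum(col) / len(rows) for col in zip(*rows))
        for label, rows in grouped.items()
    }

    def classify(point):
        return min(centroids,
                   key=lambda label: math.dist(point, centroids[label]))
    return classify


def accuracy(classify, test):
    """Fraction of held-out examples that the classifier labels correctly."""
    return sum(classify(x) == y for x, y in test) / len(test)


# Hypothetical two-feature song descriptors scaled to the range 0..1.
train = [((0.9, 0.8), "happy"), ((0.8, 0.9), "happy"), ((0.7, 0.7), "happy"),
         ((0.2, 0.1), "sad"), ((0.1, 0.3), "sad"), ((0.3, 0.2), "sad")]
test = [((0.85, 0.75), "happy"), ((0.15, 0.2), "sad"),
        ((0.6, 0.8), "happy"), ((0.25, 0.35), "sad")]

scores = {
    "k-NN (k=3)": accuracy(knn_classifier(train), test),
    "nearest centroid": accuracy(centroid_classifier(train), test),
}
print(scores)
```

Keeping the test examples out of training is what makes the comparison meaningful; on toy data this clean, both candidates are expected to do equally well.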

According to Iyer et al. (2017), music plays an important role in people's lives by changing and
diverting their state of mind, and it has a particularly strong effect on emotion. Music is
considered more effective in this respect than other media such as video or images. When people
feel low they tend to listen to sad songs, and when they are happy they listen to happy songs, so
they have to choose songs according to their current mood. However, this selection is done
manually and takes a lot of time. The researchers introduce a system called "EmoPlayer" that
removes this problem by arranging songs automatically according to the listener's current
emotion. The system captures an image of the user and detects the face using a face detection
method. It then creates a suitable playlist intended to improve the listener's mood. It uses the
Viola-Jones algorithm to detect the face and the Fisherfaces classifier to classify the emotion.
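
The Viola-Jones detector mentioned above relies on Haar-like rectangle features that are computed
quickly from an integral image (summed-area table). The following minimal sketch shows only that
building block, not the full cascade or the EmoPlayer implementation. The 4x4 patch and the
function names are hypothetical.

```python
def integral_image(img):
    """Summed-area table with one extra row and column of zeros."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii

def rect_sum(ii, top, left, height, width):
    """Sum of the pixels in a rectangle, using four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]

def two_rect_feature(ii, top, left, height, width):
    """Left half minus right half: a horizontal two-rectangle Haar-like feature."""
    half = width // 2
    return (rect_sum(ii, top, left, height, half)
            - rect_sum(ii, top, left + half, height, half))

# Hypothetical 4x4 grayscale patch: bright on the left, dark on the right.
PATCH = [[9, 9, 1, 1],
         [9, 9, 1, 1],
         [9, 9, 1, 1],
         [9, 9, 1, 1]]
ii = integral_image(PATCH)
print(rect_sum(ii, 0, 0, 4, 4), two_rect_feature(ii, 0, 0, 4, 4))
```

Because every rectangle sum costs four lookups regardless of its size, many such features can be
evaluated per window, which is what makes the detector fast enough for camera input.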

According to Altieri et al. (2019), a new system has been developed that responds to human
emotion in the current situation. The system manages multimedia content on the basis of the
user's emotions, which are detected mainly from facial expressions using a dedicated algorithm.
The collected information is stored in a 2D matrix and then mapped to lighting colours. The
system is able to take account of both the current environment and the user's current emotion. An
edge detection algorithm separates the background of the captured image from the actual edges of
the face in order to recognise the emotion, and the system provides a playlist based on that
emotion. The playlist is updated automatically when the user's mood changes. The system is
responsible for interacting with people and reacting to human emotion. It reduces the cost of
system design as well as the time needed to detect emotion, and it can provide a useful service
to people within a short period of time.
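
The source does not name the edge detection algorithm. As an illustration only, the sketch below
assumes a Sobel operator, a common choice, applied to an image stored as a 2D matrix of grey
values. The image, the threshold, and the function names are hypothetical.

```python
import math

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def sobel_magnitude(img):
    """Gradient magnitude for interior pixels; border pixels stay 0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = sum(SOBEL_X[j][i] * img[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            gy = sum(SOBEL_Y[j][i] * img[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            out[y][x] = math.hypot(gx, gy)
    return out

def edge_mask(img, threshold):
    """1 where the gradient magnitude reaches the threshold, otherwise 0."""
    return [[1 if v >= threshold else 0 for v in row] for row in sobel_magnitude(img)]

# Hypothetical image with a vertical boundary between a dark and a bright region.
IMAGE = [[0, 0, 10, 10],
         [0, 0, 10, 10],
         [0, 0, 10, 10],
         [0, 0, 10, 10]]
for row in edge_mask(IMAGE, threshold=20):
    print(row)
```

Pixels marked 1 lie on the boundary between regions, while flat background areas produce a zero
gradient and are discarded.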

2.3 Edge detection music player

According to Gilda et al. (2017), songs are a popular medium for expressing and understanding
human emotions. Several studies have addressed the classification of music according to human
emotion, but they have not produced optimal results in practice. The authors propose a
cross-platform music player, referred to as EMP, that recommends music on the basis of the user's
present mood. The EMP provides smart mood-based recommendations by integrating emotion reasoning
into an adaptive music player. The player consists of three modules: an emotion module, a
classification module, and a recommendation module. The emotion module takes a picture of the
user's face as input and uses deep learning algorithms to identify the mood with 90.23% accuracy
(Aljanaki et al., 2016). The classification module then classifies songs into four mood classes
using audio features, with an accuracy of 97%. Finally, the recommendation module suggests songs
by mapping the user's emotion to the mood class of a song, taking the user's preferences into
account.
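
The recommendation step can be sketched as a simple lookup followed by a preference ranking. The
sketch assumes that the emotion module and the classification module have already produced their
outputs. The mood class names, the emotion-to-mood mapping, the rating field, and the song
library are all hypothetical, since the source does not list them.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Song:
    title: str
    mood_class: str     # assumed output of the classification module
    user_rating: float  # stand-in for the user's preference, 0..1

# Hypothetical mapping from a detected emotion to one of four song mood classes.
EMOTION_TO_MOOD = {"happy": "energetic", "sad": "calm",
                   "angry": "relaxed", "neutral": "cheerful"}

def recommend(emotion, library, limit=3):
    """Songs of the mapped mood class, highest-rated first."""
    mood = EMOTION_TO_MOOD[emotion]
    matches = [s for s in library if s.mood_class == mood]
    return sorted(matches, key=lambda s: s.user_rating, reverse=True)[:limit]

LIBRARY = [Song("Track A", "calm", 0.4), Song("Track B", "energetic", 0.9),
           Song("Track C", "calm", 0.8), Song("Track D", "cheerful", 0.6)]
print([s.title for s in recommend("sad", LIBRARY)])
```

Keeping the mapping in a separate table means the three modules stay independent: either
classifier can be retrained without touching the recommendation logic.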

However, Sen et al. (2018) state that different ways of relieving stress can be adopted depending
on the kind of work an individual does. A person's mood is reflected in the emotional changes
they show in the workplace, and their present psychological state can be analysed from their
facial expressions. The authors propose an intuitive smart music player that detects the facial
expressions of a user working at a computer and identifies the user's present emotional
condition. This player can be used to relax users in their workplace. It can also analyse the
processes that the user is running on the computer or smartphone. Since different types of music
can boost an individual's enthusiasm, the smart music player generates a playlist for the user by
analysing the tasks performed on the computer together with the user's present emotions. The user
can also modify the suggested playlist, which adds flexibility to the emotion detection and music
recommendation. With this music player, working professionals therefore have a good way to relax
while doing stressful work (Sánchez-Moreno et al., 2016).

According to Kabani et al. (2015), different algorithms have been designed to generate a playlist
automatically within a music player according to the emotional state of the user. The authors
also note that the face is the most important part of the human body for inferring a person's
current emotions and behaviour. Selecting music manually to suit the user's mood and emotions is
a time-consuming and tedious task, so the algorithms are designed to generate the playlist
automatically from the user's current mood or emotion. Existing algorithms used in music players
are slow in practice or less accurate, and they may require additional external sensors. The
system proposed by the authors instead generates the playlist automatically from the detection of
facial expressions, reducing the effort and time needed to build a playlist manually. The
algorithms used in this system minimise the processing time needed to obtain results as well as
the overall cost of the system. According to the authors, the smart music player also achieves
higher accuracy than existing music players available on the market.

Bhardwaj et al. (2015) state that the system was tested on both user-dependent and
user-independent datasets, in other words on dynamic and static datasets. The device's inbuilt
camera captures the user's facial expressions, and the emotion detection algorithm achieves an
accuracy of approximately 85-90% on real-time images. On static images, the accuracy is
approximately 98-100%. In addition, the algorithm generates a music playlist from the detected
emotion in about 0.95-1.05 seconds on average. The algorithms proposed by the authors therefore
offer improved accuracy and operating time, at a lower design cost than other existing
algorithms.
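
Accuracy and average response time of the kind reported above can be measured with a small
evaluation harness. The sketch below is only an illustration: `toy_detector` is a placeholder
rule and not the authors' algorithm, and the two small data sets are hypothetical, so the printed
figures have no relation to the reported results.

```python
import time

def evaluate(detect, samples):
    """Return (accuracy, mean seconds per sample) for a detector callable."""
    correct = 0
    start = time.perf_counter()
    for image, expected in samples:
        if detect(image) == expected:
            correct += 1
    elapsed = time.perf_counter() - start
    return correct / len(samples), elapsed / len(samples)

def toy_detector(image):
    """Placeholder rule: a bright mean pixel value counts as 'happy'."""
    pixels = [p for row in image for p in row]
    return "happy" if sum(pixels) / len(pixels) > 128 else "sad"

# Hypothetical labelled images (2x2 grey values) for the two test conditions.
STATIC_SET = [([[200, 210], [190, 220]], "happy"), ([[40, 50], [30, 60]], "sad")]
REALTIME_SET = [([[140, 120], [135, 125]], "happy"), ([[130, 135], [128, 131]], "sad"),
                ([[20, 30], [25, 35]], "sad"), ([[250, 240], [245, 235]], "happy")]

for name, dataset in (("static", STATIC_SET), ("real-time", REALTIME_SET)):
    acc, seconds = evaluate(toy_detector, dataset)
    print(f"{name}: accuracy={acc:.2%}, mean latency={seconds:.6f}s")
```

Reporting the two conditions separately, as the authors do, makes it visible when a detector
that works on clean static images degrades on live camera frames.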

Different approaches have been developed for classifying human emotions and behaviour. These
approaches are generally based on a set of basic emotions, and for feature recognition, facial
expression features are divided into two forms: “Appearance based” and “Geometric based”
extraction. Geometric-based extraction considers the shapes of prominent areas of the face, such
as the eyes and mouth (Schedl et al., 2015). Around 58 prominent points were chosen when
designing the ASM, while the appearance-based features rely on texture, which was also considered
in developing the algorithm. In this system, effective techniques have been used for designing,
coding, and implementing facial expression recognition across sets with multiple orientations.
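
Geometric-based extraction can be illustrated by turning a few landmark points into ratios that
do not depend on the size of the face in the image. The six landmark names, their coordinates,
and the two ratios below are hypothetical. An actual shape model would supply many more points,
such as the roughly 58 mentioned above.

```python
import math

def geometric_features(landmarks):
    """Scale-free ratios computed from a few named landmark points."""
    eye_dist = math.dist(landmarks["left_eye"], landmarks["right_eye"])
    mouth_width = math.dist(landmarks["mouth_left"], landmarks["mouth_right"])
    mouth_open = math.dist(landmarks["mouth_top"], landmarks["mouth_bottom"])
    return {"mouth_width_ratio": mouth_width / eye_dist,
            "mouth_open_ratio": mouth_open / eye_dist}

# Hypothetical (x, y) pixel positions of six landmarks on a detected face.
LANDMARKS = {"left_eye": (30, 40), "right_eye": (70, 40),
             "mouth_left": (35, 80), "mouth_right": (65, 80),
             "mouth_top": (50, 75), "mouth_bottom": (50, 85)}
print(geometric_features(LANDMARKS))
```

Dividing by the distance between the eyes is one simple way to make the features comparable
between faces captured at different distances from the camera.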

Hsu et al. (2017) proposed a music playing system, together with a playing method, that selects
music suited to the user through speech emotion recognition. The playing method consists of a few
steps. First, a plurality of songs is mapped to emotion coordinates on an emotion coordinate
graph, and these coordinates are stored in a database. Voice data is then captured and analysed
to obtain the coordinate of the user's real-time emotion. This coordinate is mapped onto the
emotion coordinate graph according to a second database, and in this way the coordinate setting
is received. Finally, songs that correspond to the real-time emotion coordinate are found and
played sequentially according to the emotion coordinates in the database.
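
The idea of matching songs to an emotion coordinate can be sketched as a nearest-first ordering.
The sketch assumes a two-dimensional coordinate (read here as valence and arousal, which the
source does not specify) and a small in-memory table in place of the database. The song names,
coordinates, and the function `play_queue` are hypothetical.

```python
import math

# Hypothetical (valence, arousal) coordinates in [-1, 1] for each stored song.
SONG_COORDS = {"Song A": (0.8, 0.6), "Song B": (-0.6, -0.4),
               "Song C": (0.1, 0.0), "Song D": (-0.7, 0.5)}

def play_queue(emotion, songs=SONG_COORDS):
    """Song titles ordered from nearest to farthest from the user's coordinate."""
    return sorted(songs, key=lambda title: math.dist(songs[title], emotion))

# A real-time emotion coordinate as it might be derived from the voice data.
print(play_queue((-0.5, -0.5)))
```

Playing the queue in this order starts with the song closest to the user's current emotional
state and moves gradually away from it.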

Knight (2016) describes a music system that works on the basis of speech emotion recognition.
Four units play an important role in producing efficient and accurate output: two databases, a
voice recognition unit, and a control device. The first database stores a plurality of songs
along with their emotion coordinates, which are mapped onto the emotion coordinate graph. The
second database stores the parameters for emotion recognition. The voice unit receives voice
data, and the control device analyses this input to determine the user's present emotion
coordinate, mapped according to the second database.

2.4 Support Vector Machine or SVM

According to Patel et al. (2016), it is a challenge to increase and maintain an individual's
productivity across various tasks. The authors suggest that music improves mood and state of
mind, which acts as a catalyst for improving productivity. A person who listens to music
continuously needs a long personalised playlist, which the user has to create and manage, and
this takes considerable time. This challenge can be eliminated if the music player creates a
playlist according to the user's real-time mood, which can be inferred from the user's facial
expressions. Detecting an individual's facial expression involves three problems: detecting the
face in the image, extracting facial features, and classifying the facial expression. First, the
face is detected in an image. Different methods can be used for this, such as model-based face
tracking, real-time face detection, and edge-orientation matching. Face detection can be made
more robust by using the Hausdorff distance, the Viola-Jones cascade algorithm, and HOG.
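
The Hausdorff distance mentioned above measures how far two point sets are from each other, for
example the edge points of a face model and those of a candidate image region. A minimal sketch
follows. The three point sets are hypothetical and far smaller than real edge maps.

```python
import math

def directed_hausdorff(a, b):
    """Largest distance from a point in `a` to its nearest point in `b`."""
    return max(min(math.dist(p, q) for q in b) for p in a)

def hausdorff(a, b):
    """Symmetric Hausdorff distance between two point sets."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))

# Hypothetical edge points: a model, a close candidate, and a distant one.
MODEL = [(0, 0), (4, 0), (2, 3)]
CANDIDATE = [(0, 1), (4, 0), (2, 3)]
SHIFTED = [(10, 10), (14, 10), (12, 13)]
print(hausdorff(MODEL, CANDIDATE), hausdorff(MODEL, SHIFTED))
```

A small distance indicates that the candidate region resembles the model, so a detector can
accept the region when the distance falls below a chosen threshold.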

After this, the next step is to extract facial features from the detected face. Two approaches to
feature extraction are Gabor filters and PCA, or “Principal Component Analysis”. Finally, image
classification is performed to detect the mood, for which different classifiers can be used,
including BrownBoost, SVM, and AdaBoost. The system proposed by the authors applies HOG, or
“Histograms of Oriented Gradients”, together with the face detection method, and passes the
extracted features to the SVM to predict the user's mood (Van der Steen et al., 2018). The
predicted mood is then used to generate a music playlist automatically in accordance with the
user's real-time mood.
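
The core of a HOG descriptor is a histogram of gradient orientations computed per image cell. The
sketch below shows that step for a single cell under simplifying assumptions: central
differences for the gradient, nine unsigned orientation bins, no interpolation between bins, and
no block normalisation. The cell contents and the function name are hypothetical.

```python
import math

def hog_cell_histogram(cell, bins=9):
    """Unsigned-orientation histogram (0..180 degrees) weighted by gradient magnitude."""
    h, w = len(cell), len(cell[0])
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]
            gy = cell[y + 1][x] - cell[y - 1][x]
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle // bin_width) % bins] += magnitude
    return hist

# Hypothetical cell containing a vertical boundary (horizontal gradient).
VERTICAL_EDGE = [[0, 0, 10, 10],
                 [0, 0, 10, 10],
                 [0, 0, 10, 10],
                 [0, 0, 10, 10]]
print(hog_cell_histogram(VERTICAL_EDGE))
```

In a full pipeline the histograms of all cells would be concatenated into one feature vector,
which is the input that the SVM classifier receives.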

The entire system can be divided into stages: image capture, face detection, locating landmark
points on the detected face, classifying the facial features with the SVM classifier, and
creating the music playlist according to the mood detected in real time. Initially, datasets are
generated to initialise the SVM classifier. The SVM classifies moods such as happy, neutral, and
sad by analysing the images. The player is then able to sort songs by genre and mood (Scirea et
al., 2015). Moreover, these sorted playlists can be used to create heuristics within the music
player.
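
The classification stage can be illustrated with a small linear SVM trained by sub-gradient
descent on the hinge loss. This is a sketch under stated assumptions and not the authors'
implementation: it separates only two moods (happy as +1, sad as -1), the two-value feature
vectors are hypothetical, and the learning rate, regularisation strength, and epoch count are
arbitrary choices. A third class such as neutral would need, for example, a one-vs-rest scheme.

```python
def train_linear_svm(samples, labels, epochs=200, lr=0.1, reg=0.01):
    """Minimise hinge loss plus an L2 penalty; labels must be +1 or -1."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:
                # Inside the margin: move towards the sample and shrink w slightly.
                w = [wi + lr * (y * xi - reg * wi) for wi, xi in zip(w, x)]
                b += lr * y
            else:
                # Correct with enough margin: only the L2 penalty acts.
                w = [wi - lr * reg * wi for wi in w]
    return w, b

def svm_predict(w, b, x):
    """+1 (happy) or -1 (sad) depending on the side of the hyperplane."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1

# Hypothetical centred facial features for happy (+1) and sad (-1) faces.
X = [[1.0, 0.8], [0.9, 1.0], [0.8, 0.7], [-1.0, -0.7], [-0.8, -0.9], [-0.9, -1.0]]
Y = [1, 1, 1, -1, -1, -1]

w, b = train_linear_svm(X, Y)
print([svm_predict(w, b, x) for x in X])
print(svm_predict(w, b, [0.7, 0.9]), svm_predict(w, b, [-0.6, -0.8]))
```

The predicted label would then select which mood-sorted song list the player uses.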

Iyer et al. (2017) also proposed an Android application called EmoPlayer that recommends a
playlist according to the user's real-time emotion. This eliminates the manual selection of songs
and the manual creation of a playlist to suit the user's mood. Music acts directly on human
emotions and therefore on mood. Compared with movies, books, and television shows, music is often
the most effective at changing mood, and it can lift an individual during a bad mood. In the
system proposed by the authors, the music player detects facial features using the device's
camera. After capturing a current image of the user, the application detects the user's emotion
and generates a playlist of songs intended to improve the user's mood. EmoPlayer uses the
Viola-Jones algorithm for face detection and the Fisherfaces classifier for emotion
classification. As digital music grows in popularity, good music recommendations become
increasingly helpful. Earlier, music players made recommendations according to the user's music
preferences. However, since users' preferences change over time, recommendations should sometimes
be based on the user's emotion instead. Users need music recommendations that improve their mood,
and in a busy world this improvement can make a considerable difference.

The authors proposed a music player that uses a novel model to recommend music according to the
user's emotions. They investigated the extraction of music features and then modified the
affinity graph to link emotions with music features. In practical testing and implementation, the
proposed system achieved an average accuracy of 85%.

In accordance with the recommending music to the users as per the moods by the different

streaming services, Yepes et al., (2018) sates that the sales of music albums or the digital

versions has decreased in the past few years. The major part of the streaming systems is the

recommendation systems that help the software in facilitating music to the users. The authors

proposed an approach for the recommendation that will be based on the contents searched by the

users. This system will use the algorithm of one-class SVM classification that will act as the

anomaly detector. Their main purpose for developing this system is to create a music playlist in

accordance with the tastes of users, and will integrate the new releases too. The proposed model

will also have the ability to detect the various elements that will reflect the preference of the user

with an enhanced accuracy that will work in associated with an Android application. This system

15

skilfully plays with the human emotions that directly affect the different moods. In comparison

with movies, books as well as television shows, music will work most of the times in changing

the changing the moods. Music has the ability of empowering an individual at the time of bad

moods. With the proposed system by the authors, the music player will have the ability to detect

the facial features from the camera of the device. After capturing of the current image of the

user, the application can detect the emotion of the user and then generate a playlist of songs that

will improve the mood of the user. The algorithm that is used in the EmoPlayer is Viola Jones

for the detection of face and for the classification of the emotions, Fisherfaces classifier has been

used. As the popularity and trend of digital music is increasing on a daily basis, proper

recommendations of music are much helpful. Earlier, the recommendations were made by the

music player according to the music preferences of the user. However, sometimes these

recommendations should be on the basis of user’s emotion as in the recent times, the preferences

of the users are changing. Users’ needs music recommendations for improving their moods as in

this busy world, this improvement will make a huge difference.

The authors proposed a music player that will use a novel model for recommending music as the

emotions of the user. They have investigated the extraction of the music features and then have

made changes in the affinity graph to link the emotions as well as features of the music. Finally,

in the practical field, this proposed system has given accuracy of 85% on an average testing and

implementation.

With regard to the mood-based music recommendations offered by different streaming services, Yepes et al. (2018) state that sales of music albums and their digital versions have decreased in the past few years. The major part of a streaming system is the recommendation system, which helps the software deliver music to users. The authors proposed a recommendation approach based on the content searched for by the users. This system uses a one-class SVM classification algorithm that acts as an anomaly detector. Their main purpose in developing this system is to create a music playlist in accordance with the tastes of users while also integrating new releases. The proposed model is also able to detect, with enhanced accuracy, the various elements that reflect the preference of the user, and it works in association with an Android application. The system is also capable of detecting changes in user preferences. The model thus helps to manage the recommended playlist and to integrate new releases into the profile of the user to form a new music list.
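
As a minimal sketch of how a one-class SVM can act as an anomaly detector when building a playlist, the following Python example uses scikit-learn's OneClassSVM. It is not the implementation of Yepes et al. (2018): the three feature columns, the synthetic listening history and the track names are assumptions made purely for illustration. The model is trained only on tracks the user already likes, and new releases that it flags as outliers are kept out of the playlist.

```python
import numpy as np
from sklearn.svm import OneClassSVM

# Hypothetical content features per track: [tempo (scaled), energy, acousticness].
# The listening history is synthetic and clustered around one taste profile.
rng = np.random.default_rng(0)
liked_tracks = rng.normal(loc=[0.6, 0.8, 0.2], scale=0.05, size=(40, 3))

# Train on liked tracks only; nu bounds the fraction of training outliers.
model = OneClassSVM(kernel="rbf", nu=0.1, gamma="scale").fit(liked_tracks)

# Hypothetical new releases to be screened against the user's taste.
new_releases = {
    "close_to_taste": np.array([0.6, 0.8, 0.2]),
    "far_from_taste": np.array([0.1, 0.1, 0.9]),
}
names = list(new_releases)
features = np.vstack([new_releases[name] for name in names])

scores = model.decision_function(features)  # higher means closer to the liked tracks
labels = model.predict(features)            # +1 for inliers, -1 for outliers

# Only releases that the detector does not flag as anomalies join the playlist.
playlist = [name for name, label in zip(names, labels) if label == 1]
```

Retraining the model as the listening history grows is one way such a system could follow changes in user preferences, although the source does not describe how this is done.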

According to Kamble and Kulkarni (2016), a conventional music player needs human interaction in order to play music that suits the mood of an individual. This conventional system has been moved towards automation through the integration of AI. To achieve this objective, algorithms are used to classify the various human expressions and then generate a music playlist in accordance with the real-time emotions of the user detected by the system. This reduces the time and effort spent searching for a song that matches the mood of the user. The expressions of the user are detected by extracting facial features. The system uses the PCA algorithm for feature extraction and a Euclidean distance classifier to classify the songs according to the mood. The expressions of the user are captured by the front camera of the device, which helps to reduce the cost of designing the model in comparison with other models or methods.
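
The PCA and Euclidean distance steps described above can be sketched as follows. This is an illustrative sketch rather than the system of Kamble and Kulkarni (2016): the synthetic 16-dimensional vectors stand in for facial feature data, and the function names, mood labels and playlist entries are hypothetical. Here the nearest training sample in the PCA subspace decides the expression, which then selects a playlist.

```python
import numpy as np

def fit_pca(X, n_components):
    """Return the data mean and the top principal axes of X (rows are samples)."""
    mean = X.mean(axis=0)
    # Rows of vt are the principal axes, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:n_components]

def project(x, mean, axes):
    """Map one sample or a batch of samples into the PCA subspace."""
    return (x - mean) @ axes.T

def classify(sample, mean, axes, train_proj, train_labels):
    """Label a sample with the mood of its nearest training sample (Euclidean)."""
    distances = np.linalg.norm(train_proj - project(sample, mean, axes), axis=1)
    return train_labels[int(np.argmin(distances))]

# Synthetic stand-ins for facial feature vectors (an assumption, not real data).
rng = np.random.default_rng(0)
prototypes = {"happy": np.ones(16), "sad": -np.ones(16)}
train_X = np.vstack(
    [p + rng.normal(scale=0.1, size=(10, 16)) for p in prototypes.values()]
)
train_labels = [mood for mood in prototypes for _ in range(10)]

mean, axes = fit_pca(train_X, n_components=2)
train_proj = project(train_X, mean, axes)

# Hypothetical mapping from detected mood to a playlist.
PLAYLISTS = {"happy": ["upbeat_track_1", "upbeat_track_2"], "sad": ["calm_track_1"]}

new_face = prototypes["happy"] + rng.normal(scale=0.1, size=16)
mood = classify(new_face, mean, axes, train_proj, train_labels)
playlist = PLAYLISTS[mood]
```

Working in the reduced PCA subspace keeps the distance computation cheap, which suits a classifier that runs on images from a device camera.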

With the PCA algorithm, the system achieves an accuracy of up to 84% in recognizing the various facial expressions of the user. The application is developed in such a way that the content of the user can be analysed and the mood can be identified. Images of the user are captured with the inbuilt camera, the facial features are then analyzed, and a music playlist is generated accordingly to improve the emotional state of the user.


Chapter 3: Research Methodology

3.1 Introduction

The methodology plays a key role in enhancing the outcomes that result from conducting the research (Kumar, 2019). The research is strengthened by the use of different research methodology tools. One of the major facets that any scientific research needs to cover is the adoption of a proper framework or guideline on which the research is conducted. The research methodology helps in accomplishing this facet and enhances the outcomes of the research. Such guidelines play an important role in modern research, as research topics are quite complicated and call for the use of stipulated rules and regulations (Taylor et al., 2015). This is where the effectiveness of the research methodology is noteworthy, as it increases the quality of the project. One of the necessities addressed by the research methodology is the provision of specified milestones. Completing a project within specified timelines has gained importance because of the monetary constraints that come into play. The research methodology provides the parameters that allow the project to be completed within the stipulated milestones while maintaining the quality of the research. Thus, a research methodology has been implemented within this research. The following sections describe the tools of research methodology that have been adopted in the research: the research philosophy, research approach, research design, sample size distribution, data collection techniques and ethical considerations.

3.2 Research Philosophy

The research philosophy sheds light on the manner in which the researcher acquires information about the topic (Mackey and Gass, 2015). This in turn assists the researcher in devising the aim of the research and enhances the quality of the research. The research philosophy serves as the belief on which data assimilation and assessment are based. It comprises positivism, interpretivism and realism. Positivism is characterised by its strength in


imparting a scientific assessment to the domains of the research topic. It plays a major role in the selection of the research objectives, as it lays down the framework for their development. Interpretivism focuses on integrating perceptions of human nature into the research. This research has been conducted using the positivist research philosophy. The research topic involves different algorithms for developing the Emotion Detection Music Player, and selecting the appropriate algorithm requires logical reasoning models. This is only possible if the research draws on the positivist philosophy, as it provides rigorous scientific evaluation techniques. Thus, the adoption of the positivist philosophy is justified.

3.3 Research Approach

The research approach is significant in establishing the background on which the research is carried out (Silverman, 2016). It also plays a key role in the selection of the research topic. The research approach provides the platform on which the research objectives and data analysis gain prominence. The research approach is implemented on the basis of either the deductive or the inductive model. The deductive model provides outcomes and results by interpreting the research objectives through theoretical frameworks and models. The inductive model develops hypotheses from the conclusive results drawn from the research. In this research, the inductive model has been adopted. The research topic concerns the development of algorithms for AI programs and thus calls for the creation of new frameworks from the conclusive results drawn from the project. This could only be accomplished with the inductive model, as it provides for testing the null hypothesis, which has proven beneficial to the project. Thus, the implementation of the inductive model is justified.

3.4 Research design

The research design is one of the important tools of the research methodology, as it provides the research with the protocols that need to be adhered to in order to accomplish the research objectives within the stipulated milestones (Flick, 2015). It plays an important role in providing the guidelines necessary for the research objectives to be accomplished with precision. The research design encompasses the exploratory,

18

selection of the research objectives as it lays the down the framework for its development. The

intrepretivism is focused on the integration of the perceptions of human nature within the

research. The particular research has been conducted with the help of the positivism research

philosophy. The research topic includes the use of different algorithms that would develop the

Emotion Detection Music Player and the appropriate selection of the algorithm incurs the

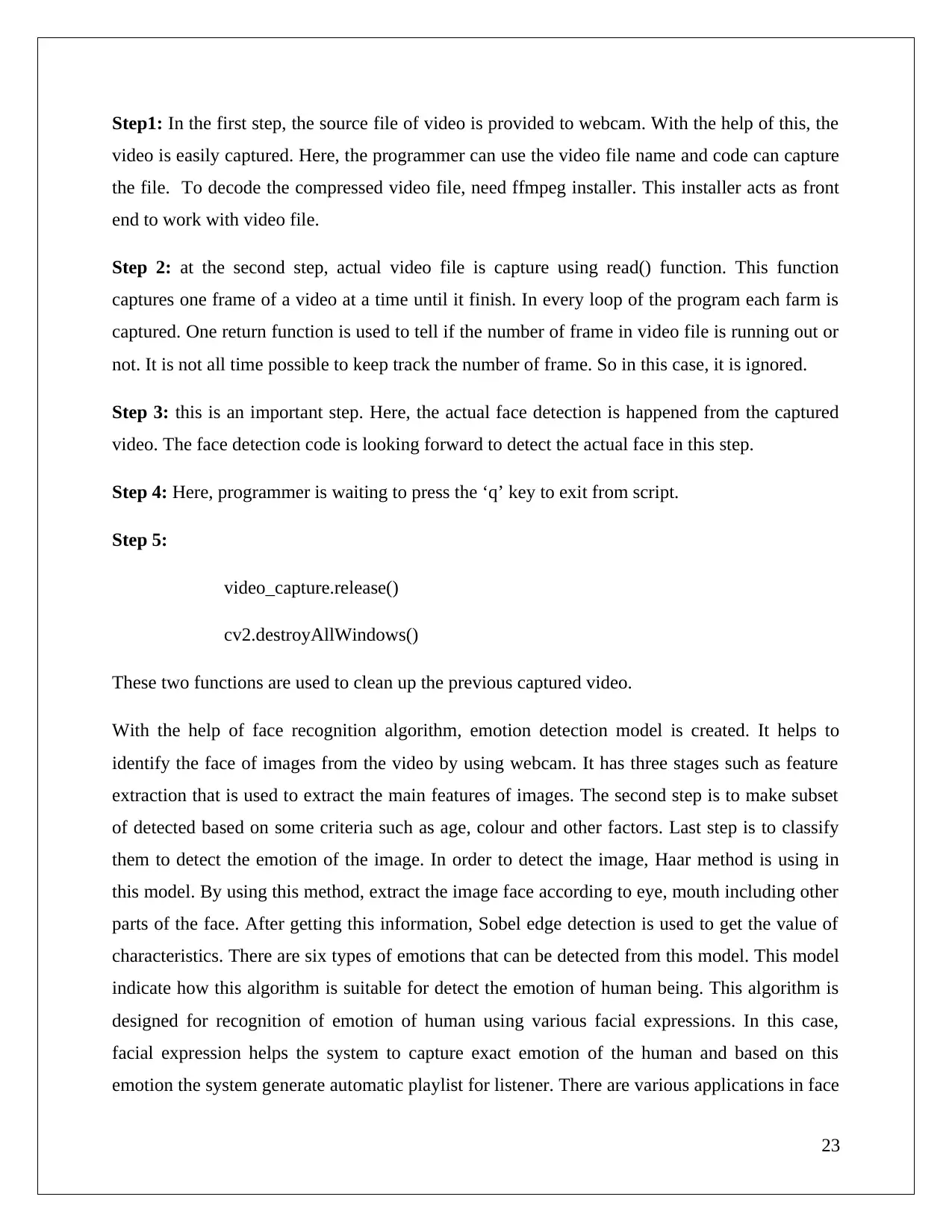

implementation of the logical reasoning models. It could only be possible if the research is