Enterprise BI Project: Health News Analysis using WEKA

Added on 2023/06/05


Enterprise Business Intelligence

Abstract

This project is designed to provide an opportunity to use data mining and machine learning methods to discover knowledge from a dataset and to explore their applications for business intelligence. It analyses the health news dataset to explore WEKA's data mining capabilities. The project is divided into nine practicals. In the first practical, we install the WEKA software and download the data repository. The second practical loads and pre-processes the provided data set. The third practical covers data visualization and dimension reduction. The fourth practical applies clustering algorithms such as K-Means. The fifth practical performs supervised mining, namely classification algorithms in WEKA. The sixth practical evaluates performance using the WEKA Experimenter and Knowledge Flow. The seventh practical predicts time series with a package installed through WEKA's package manager. The eighth practical performs text mining, and the final practical performs image analytics in WEKA. Each practical is analysed and demonstrated in detail.

Table of Contents

1 Introduction

2 Data set


3 Data mining Techniques

4 Evaluation and Demonstration

4.1 Practical – 1

4.2 Practical – 2

4.3 Practical – 3

4.3.1 Visualising the Dataset

4.3.2 Visualising the Dataset using Classifiers

4.4 Practical – 4

4.4.1 Manually Working with K-Means

4.4.2 Unsupervised Learning in WEKA – Clustering

4.5 Practical – 5

4.6 Practical – 6

4.6.1 Weka Experimenter

4.6.2 Weka Knowledge Flow

4.7 Practical – 7

4.8 Practical – 8

4.8.1 Training the Classifier Model

4.8.2 Predict the Class in Test

4.9 Practical – 9

5 Conclusion

References

1 Introduction

This project is designed to provide an opportunity to use data mining and machine learning methods to discover knowledge from a dataset and to explore their applications for business intelligence. It analyses the health news dataset to explore WEKA's data mining capabilities. The project is divided into nine practicals. In the first practical, we install the WEKA software and download the data repository. The second practical loads and pre-processes the provided data set. The third practical covers data visualization and dimension reduction. The fourth practical applies clustering algorithms such as K-Means and is divided into two parts: Part 1 calculates K-Means manually for the provided data set, and Part 2 uses WEKA's clustering algorithm to compute K-Means. The fifth practical performs supervised mining, namely classification algorithms in WEKA. The sixth practical evaluates performance using the WEKA Experimenter and Knowledge Flow. The seventh practical predicts time series with a package installed through WEKA's package manager. The eighth practical performs text mining, and the final practical performs image analytics in WEKA. Each practical is analysed and demonstrated in detail.
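The manual K-Means calculation in Part 1 of the fourth practical repeats two steps until the centroids stop moving: assign each point to its nearest centroid, then move each centroid to the mean of the points assigned to it. The sketch below shows that loop in Python under stated assumptions: Euclidean distance, caller-chosen initial centroids, and small 2-D example points that are purely illustrative and not taken from the report. WEKA's SimpleKMeans picks its own initial centroids, so its output may differ from a hand calculation.

```python
import math


def kmeans(points, centroids, max_iter=100):
    """Plain K-Means (Lloyd's algorithm) starting from caller-supplied centroids."""
    centroids = [tuple(c) for c in centroids]
    clusters = [[] for _ in centroids]
    for _ in range(max_iter):
        # Assignment step: attach each point to its nearest centroid (Euclidean distance).
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members;
        # a cluster that received no points keeps its previous centroid.
        new_centroids = [
            tuple(sum(coord) / len(members) for coord in zip(*members)) if members else c
            for members, c in zip(clusters, centroids)
        ]
        if new_centroids == centroids:  # converged: no centroid moved
            break
        centroids = new_centroids
    return centroids, clusters


# Illustrative data only, not the report's data set.
points = [(1, 1), (1, 2), (2, 1), (8, 8), (9, 8), (8, 9)]
centroids, clusters = kmeans(points, [(1, 1), (9, 8)])
print([tuple(round(v, 2) for v in c) for c in centroids])  # [(1.33, 1.33), (8.33, 8.33)]
```

Seeding the loop with explicit centroids mirrors a hand calculation, where the starting centroids are written down before the first iteration.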

2 Data set

Each file corresponds to one news agency's Twitter account. For instance, bbchealth.txt contains tweets from BBC Health news. Each line has the form tweet id | date and time | tweet, with '|' as the separator. This text data has been used to evaluate the performance of topic models on short text, but it can also be used for other tasks such as clustering.

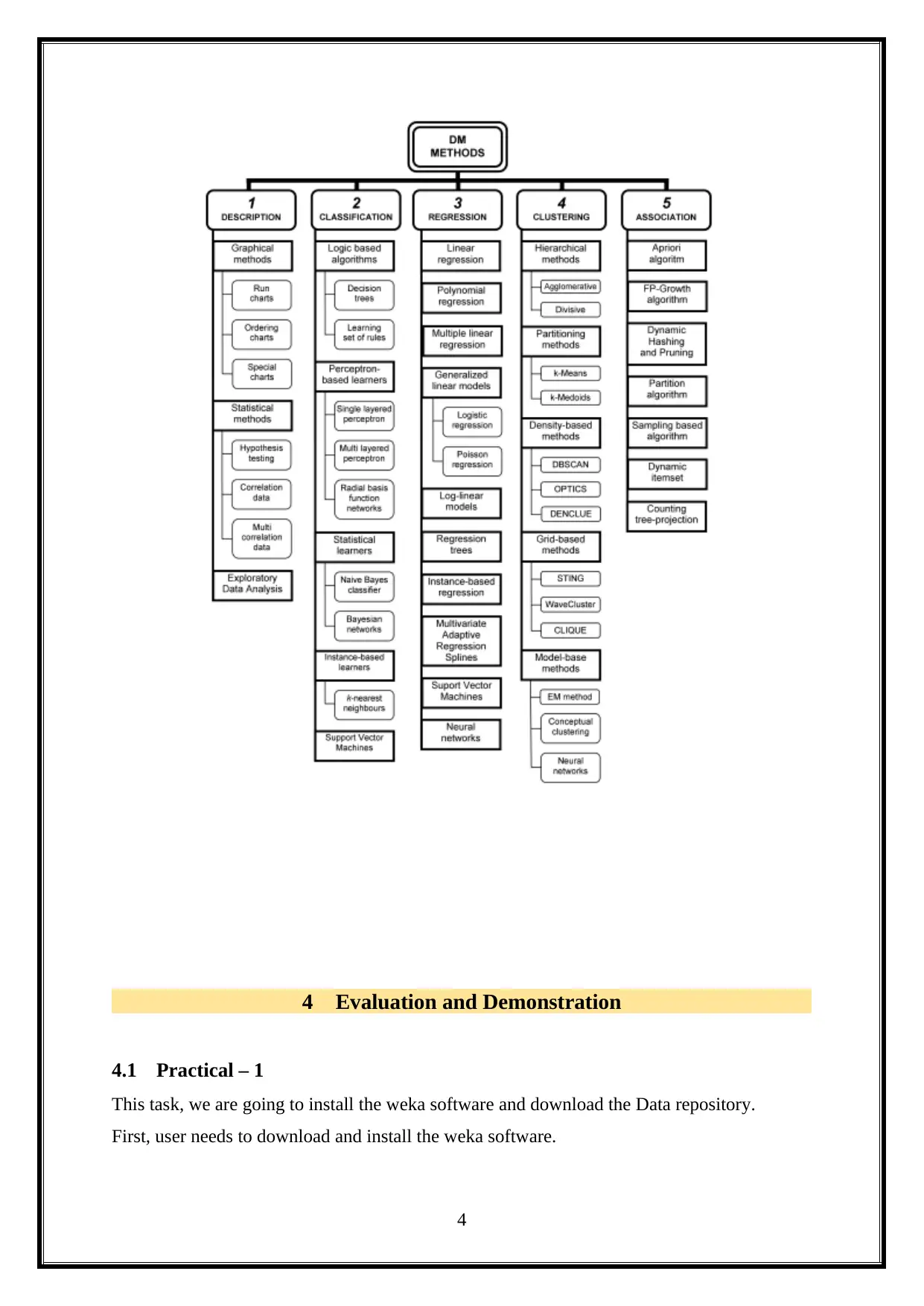
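The line format described above can be parsed with a few lines of Python. This sketch assumes each file is UTF-8 text with one record per line. It splits on the first two '|' characters only, so a '|' inside a tweet stays part of the text, and it keeps the date and time as the raw string rather than guessing its exact format. The `Tweet` class and the function names are illustrative, not part of the data set.

```python
from dataclasses import dataclass


@dataclass
class Tweet:
    tweet_id: str
    timestamp: str  # raw "date and time" field, left unparsed
    text: str


def parse_line(line):
    """Split one 'tweet id|date and time|tweet' line; return None for blank or malformed lines."""
    parts = line.rstrip("\r\n").split("|", 2)  # maxsplit=2 keeps any '|' inside the tweet text
    if len(parts) != 3:
        return None
    tweet_id, timestamp, text = (p.strip() for p in parts)
    return Tweet(tweet_id, timestamp, text)


def load_account(path):
    """Read every well-formed record from one news-agency file, e.g. bbchealth.txt."""
    # errors="replace" is a defensive assumption in case a file holds stray bytes
    with open(path, encoding="utf-8", errors="replace") as fh:
        return [t for t in map(parse_line, fh) if t is not None]
```

For example, `load_account("bbchealth.txt")` would return one `Tweet` per well-formed line of that file, assuming it has been extracted to the working directory.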

3 Data mining Techniques

The data mining techniques are illustrated below.


4 Evaluation and Demonstration

4.1 Practical – 1

In this task, we install the WEKA software and download the data repository. First, download and install the WEKA software.


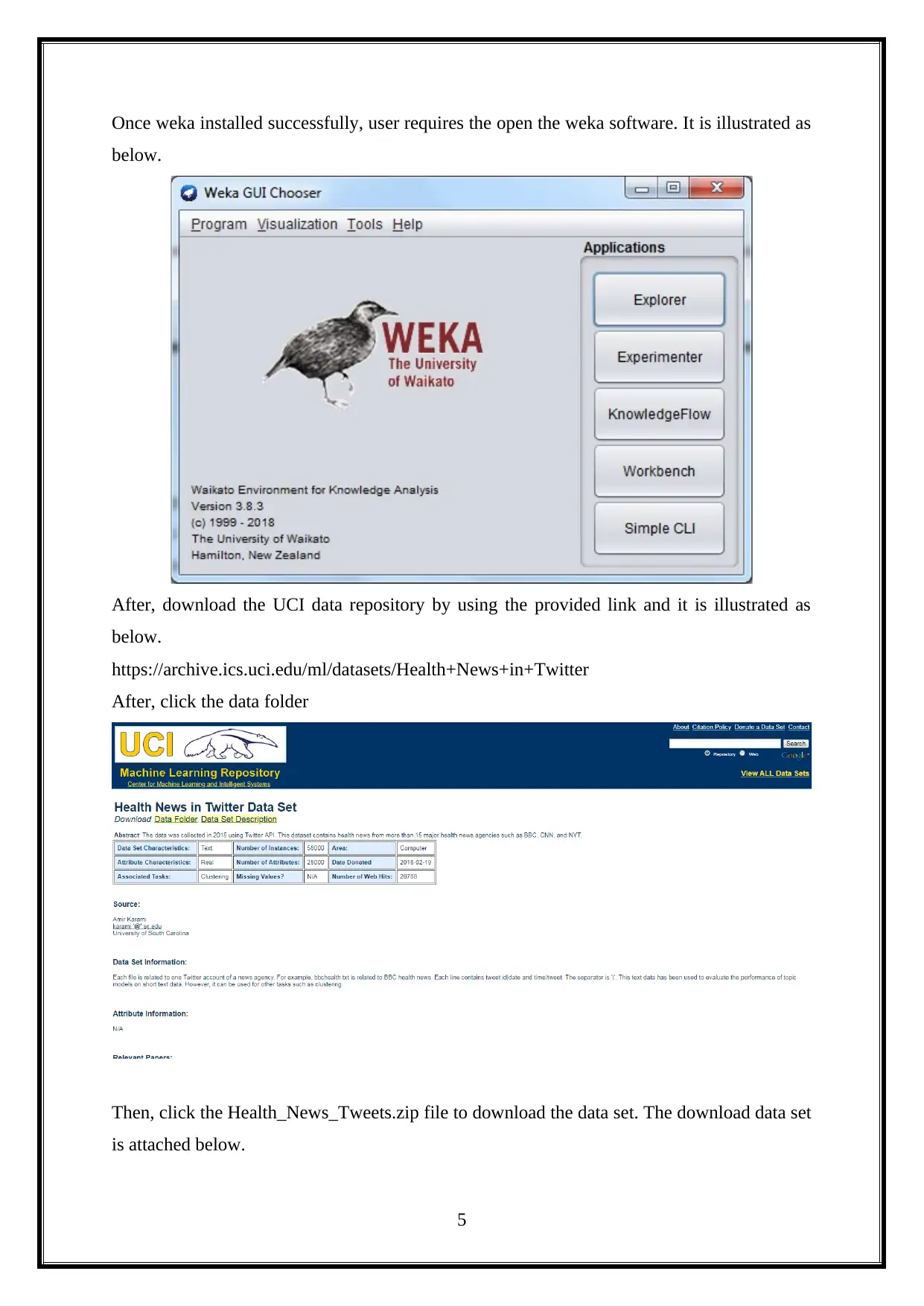

Once WEKA is installed successfully, open the WEKA software, as illustrated below.

Next, download the data set from the UCI Machine Learning Repository using the following link:

https://archive.ics.uci.edu/ml/datasets/Health+News+in+Twitter

Next, click the data folder link.

Then, click the Health_News_Tweets.zip file to download the data set. The downloaded data set is shown below.


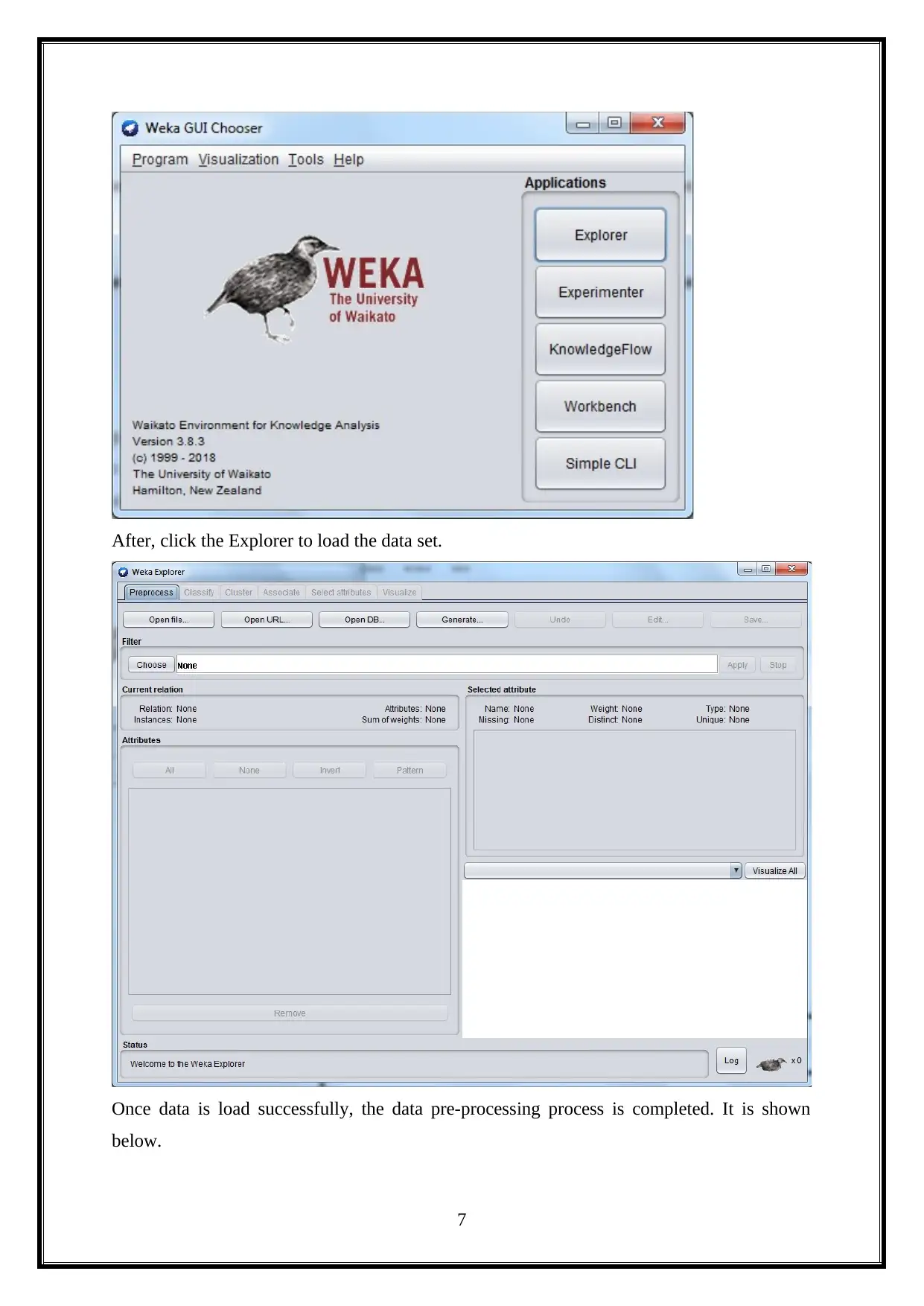

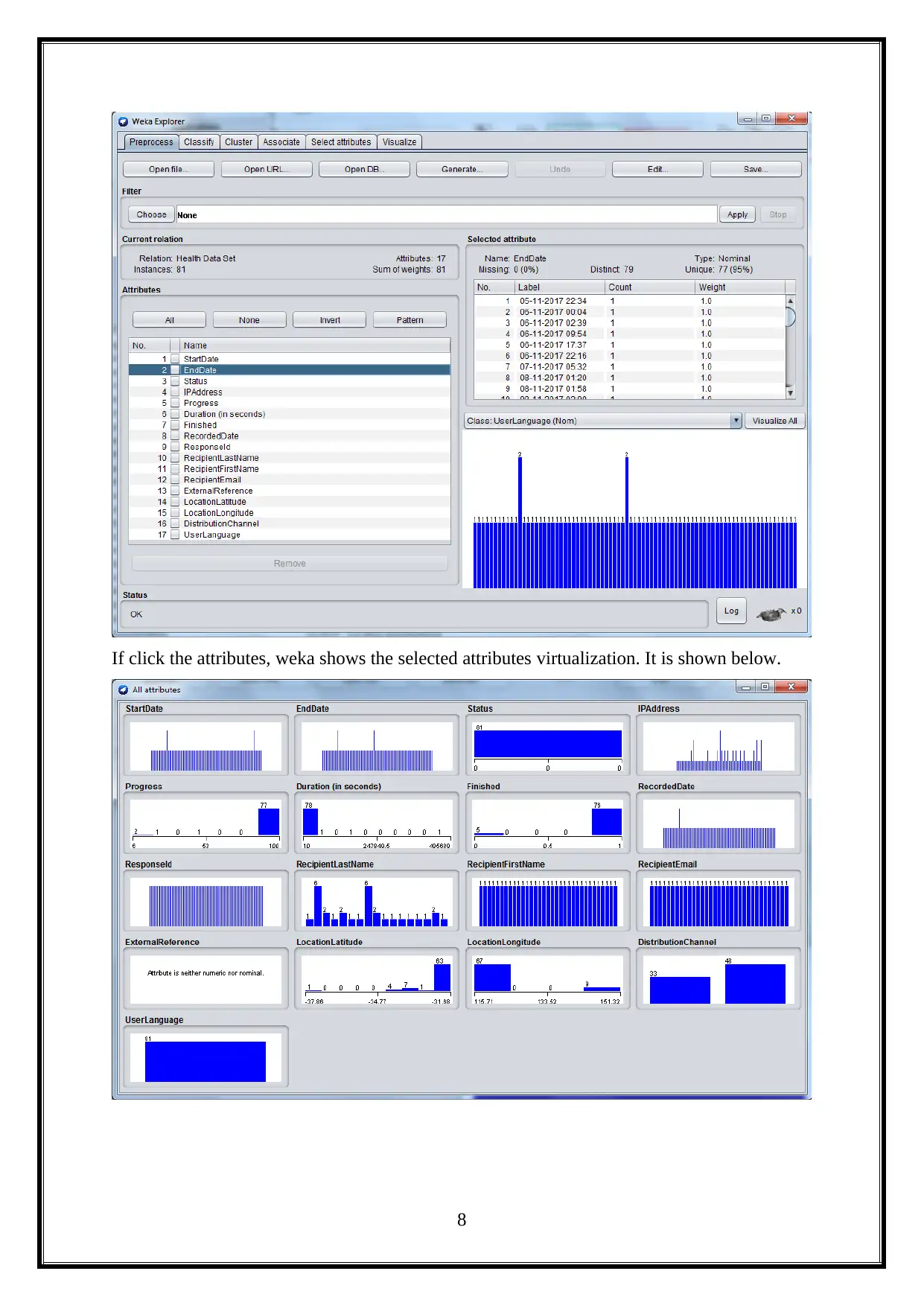
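Instead of unpacking the archive by hand, Python's standard `zipfile` module can list and read the per-account text files directly. A minimal sketch, assuming the downloaded archive is saved locally and contains one .txt file per news account, as the Data set section describes. The folder layout inside the archive is not stated in this report, so the code matches on the .txt suffix only and skips macOS `__MACOSX/` metadata entries if any are present.

```python
import zipfile


def list_account_files(zip_path):
    """Return the sorted names of the .txt members inside the downloaded archive."""
    with zipfile.ZipFile(zip_path) as zf:
        return sorted(
            name for name in zf.namelist()
            if name.endswith(".txt") and not name.startswith("__MACOSX/")
        )


def read_member(zip_path, member):
    """Read one account file straight from the archive as text (UTF-8 assumed)."""
    with zipfile.ZipFile(zip_path) as zf:
        return zf.read(member).decode("utf-8", errors="replace")
```

For example, `list_account_files("Health_News_Tweets.zip")` would return the member names, and each name can then be passed to `read_member`.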

4.2 Practical – 2

This practical covers loading and pre-processing the provided data set. To pre-process the data in WEKA, follow the steps below.

First, open WEKA.


Next, click Explorer and load the data set in the Preprocess tab.
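The Explorer opens formats such as ARFF and CSV, while the raw files are '|'-separated text. One way to prepare them, sketched here as an assumption rather than the report's own step, is to write an ARFF file directly. Every field is single-quoted with backslashes and quotes escaped, following the usual ARFF quoting convention. The tweet id, date and tweet are declared as string attributes, and `source` (a hypothetical extra column naming the news account) is declared as a nominal attribute.

```python
def arff_quote(value):
    """Wrap a value in single quotes, backslash-escaping characters that would break ARFF parsing."""
    escaped = (value.replace("\\", "\\\\")  # escape backslashes first
                    .replace("'", "\\'")
                    .replace("\n", "\\n")
                    .replace("\r", "\\r")
                    .replace("\t", "\\t"))
    return f"'{escaped}'"


def to_arff(records, relation="health_news"):
    """Build ARFF text from (tweet_id, timestamp, text, source) tuples of strings."""
    if not records:
        raise ValueError("need at least one record to declare the nominal 'source' values")
    sources = sorted({rec[3] for rec in records})
    lines = [
        f"@relation {relation}",
        "",
        "@attribute tweet_id string",
        "@attribute timestamp string",  # kept as text; the date format is not parsed
        "@attribute text string",
        "@attribute source {" + ",".join(arff_quote(s) for s in sources) + "}",
        "",
        "@data",
    ]
    lines += [",".join(arff_quote(field) for field in rec) for rec in records]
    return "\n".join(lines) + "\n"
```

The resulting text could be saved with `pathlib.Path("health_news.arff").write_text(to_arff(records), encoding="utf-8")` and opened from the Preprocess tab; the file name is illustrative.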

Once the data has loaded successfully, the pre-processing step is complete, as shown below.


Clicking an attribute makes WEKA display a visualization of the selected attribute, as shown below.


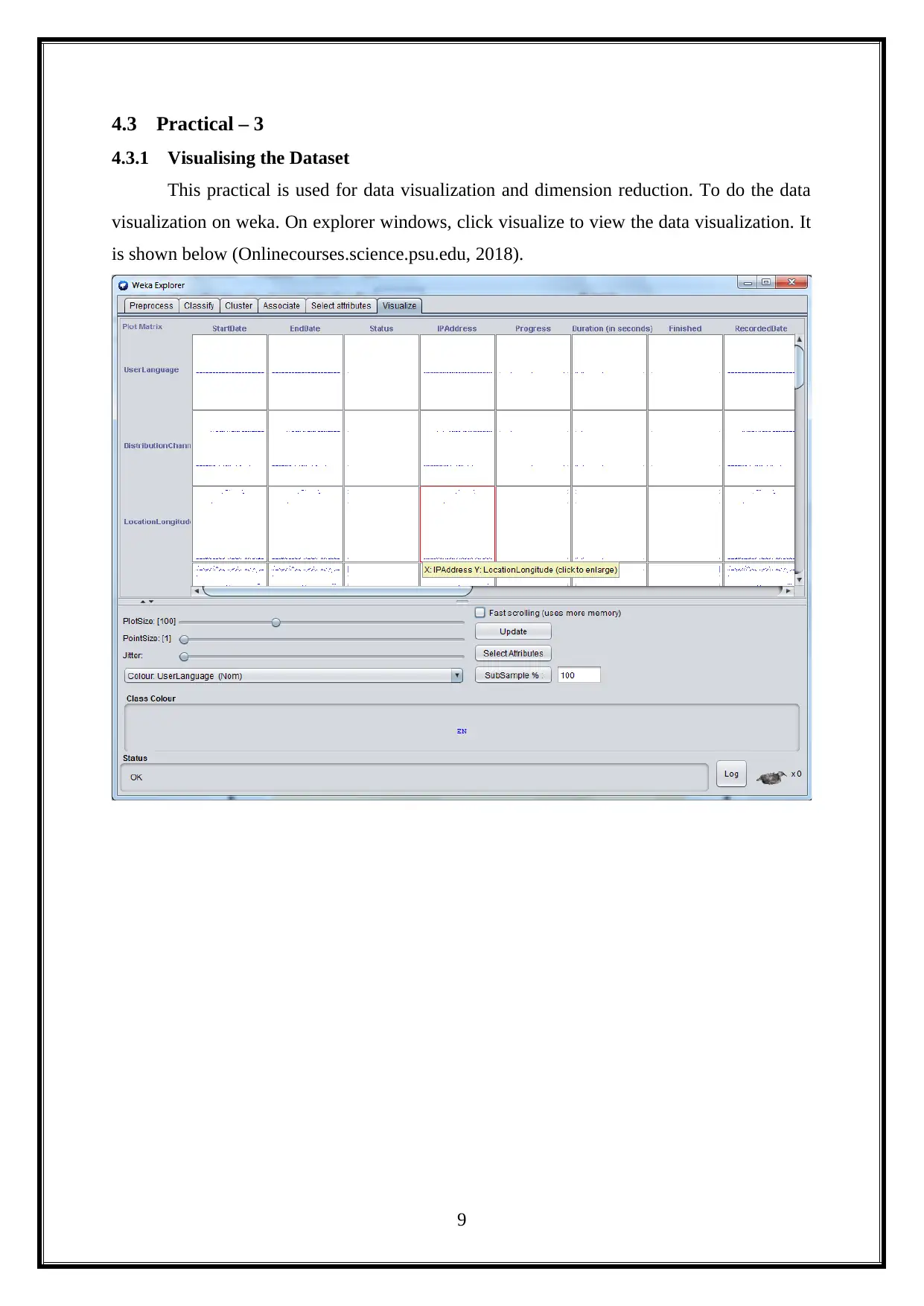
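Next to that visualization, the Preprocess tab summarises the selected attribute: missing, distinct and unique counts, label counts for a nominal attribute, and minimum, maximum, mean and standard deviation for a numeric one. A rough Python equivalent for one column is sketched below. It assumes `None` marks a missing value and uses the sample standard deviation, and it is not claimed to reproduce WEKA's exact numbers.

```python
import statistics
from collections import Counter


def summarise_attribute(values):
    """Summarise one attribute column, loosely modelled on WEKA's 'Selected attribute' pane."""
    present = [v for v in values if v is not None]  # None marks a missing value
    counts = Counter(present)
    summary = {
        "missing": len(values) - len(present),
        "distinct": len(counts),
        "unique": sum(1 for c in counts.values() if c == 1),  # values seen exactly once
    }
    if present and all(isinstance(v, (int, float)) for v in present):
        summary.update(
            minimum=min(present),
            maximum=max(present),
            mean=statistics.fmean(present),
            # sample standard deviation; a single value has no spread
            stddev=statistics.stdev(present) if len(present) > 1 else 0.0,
        )
    else:
        summary["label_counts"] = dict(counts)  # bar heights of a nominal histogram
    return summary
```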

4.3 Practical – 3

4.3.1 Visualising the Dataset

This practical covers data visualization and dimension reduction. To visualize the data in WEKA, click the Visualize tab in the Explorer window, as shown below (Onlinecourses.science.psu.edu, 2018).


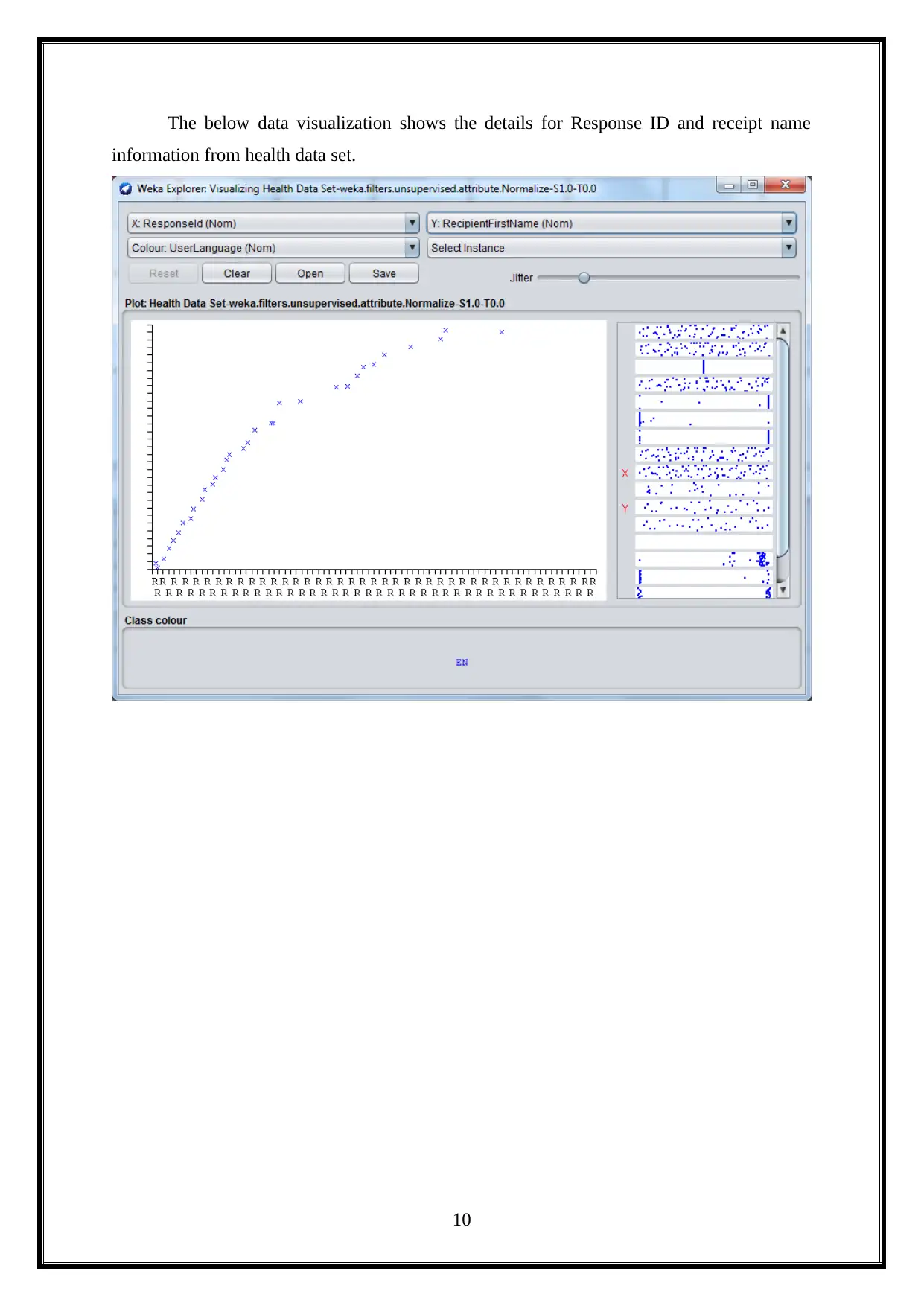

The data visualization below shows the Response ID and recipient name information from the health data set.


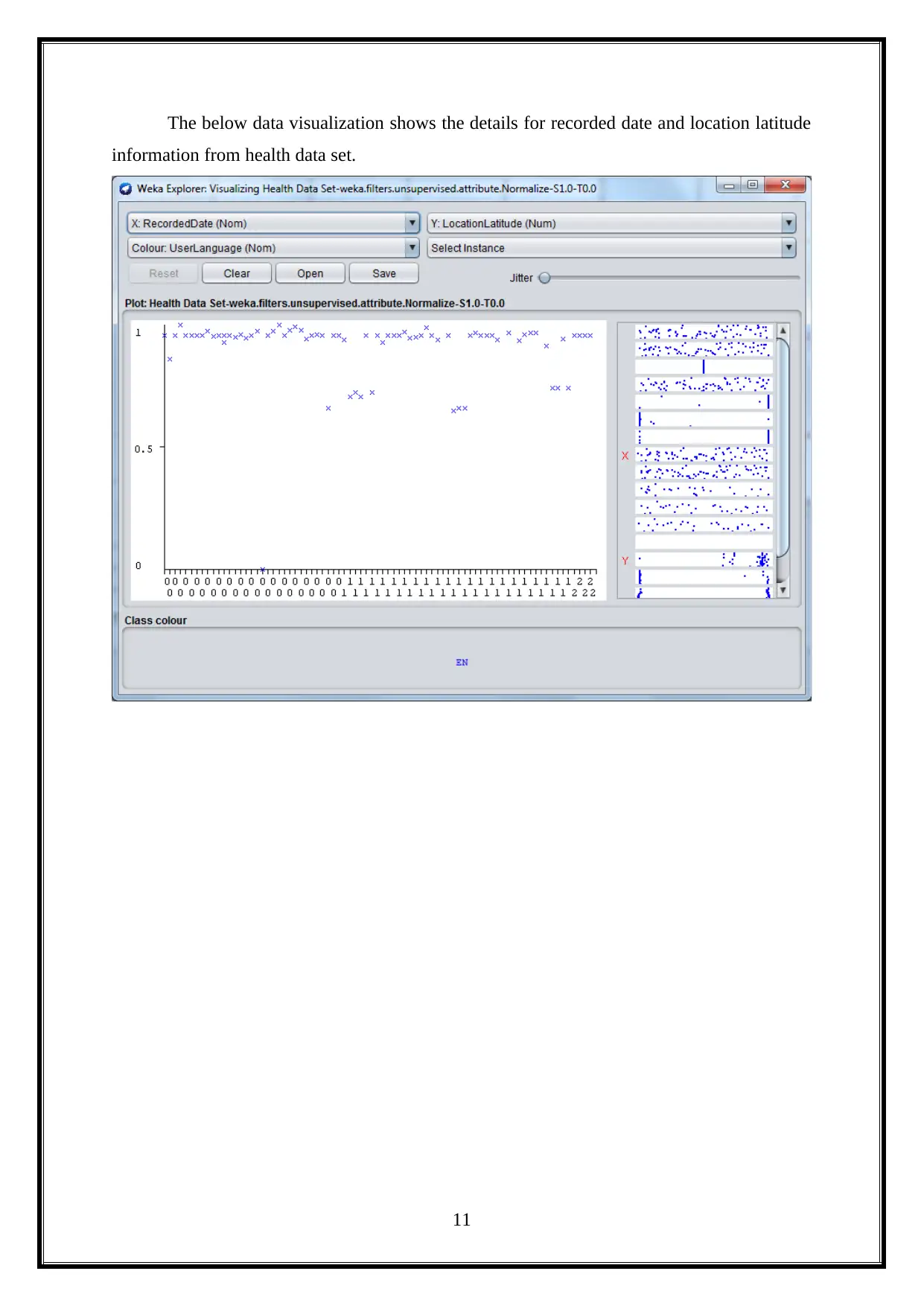

The data visualization below shows the recorded date and location latitude information from the health data set.
