Analyzing Faculty Data: Hadoop Pig with Cloudera HUE System

Added on 2023/06/04

Practical Assignment

AI Summary

This practical assignment focuses on utilizing Hadoop Pig and Cloudera HUE to analyze a large dataset representing a university's Faculty Information System (FIS). The goal is to migrate the FIS data from a relational database to Hadoop and perform basic analytics. The assignment involves uploading the 'CIS_FacultyList.csv' dataset into the Cloudera HUE environment and using Pig Latin scripts to categorize the data based on degree level (Bachelors, Masters, Doctorate), years of teaching experience (less than or more than five years), and the location of the last degree obtained (North America or elsewhere). The process includes detailed documentation with screenshots, demonstrating the steps to develop and execute the Pig Latin scripts, and finally, transferring the processed datasets from HDFS back to the local file system. The project effectively showcases the use of Pig Latin for data flow scripting in a Hadoop environment to extract meaningful insights from a large dataset.

Big Data

Contents

1. Introduction

2. Dataset

3. Upload the dataset

4. Pig Latin Scripts

Task: 1

Task: 2

Task: 3

5. Discussion

6. Conclusion

References

1. Introduction

A large, state-owned, multi-campus university wanted to move its Faculty Information System (FIS) from a relational database management system (RDBMS) implemented in MS-Access to Hadoop. The production server holds data going back more than fifty years, compiled from the university's 20 campuses. The plan is to move this growing body of data (i.e., the relational tables and associated data files), whose size has started to affect the system's responsiveness and is causing a growing number of data-related issues.

Pig Latin is referred to as a data flow language, in which the result of every processing step forms a new dataset, or relation (Gates and Dai, 2016). We use it to create datasets that separate the faculty records into categories. The project is built in the Cloudera HUE environment. The initial step is to upload the provided dataset, an employee dataset, and import it into the Cloudera HUE environment; Pig scripts then separate the file into the categories. HUE stands for Hadoop User Experience and supports Apache Hadoop and its ecosystem. It is a web-based query interface that visualizes data, and its output depends entirely on the dataset and the query run against it.

The objective of this report is to carry out further work with the dataset, in particular moving it from the local file system into storage on the Hadoop system, which later makes it possible to extract certain basic analytics.

2. Dataset

The provided dataset is a faculty list and comprises the following information: staff name, location, title, grade, university, course, date of joining, type, LWD, division, highest qualification, major, all qualifications, reports, document and criteria. The dataset can be separated into categories. The first task separates the dataset by the degree of the staff, the second by the experience of the staff, and the third by the place where each staff member obtained their last degree.

A large, state-owned, multi-campus University wanted to move its Faculty

Information System (FIS) from a relational database management system (RDBMS)

implemented using MS-Access to Hadoop. The production server comprises of data that is

older than fifty years, which is stored and compiled from the 20 campuses of the university.

The plan aims to move this increasing size of the data (i.e., the relational tables and

associated data files), which has started to effect the response system and is increasing related

data issues.

The Pig Latin is referred as the data flow language, where the result of every single

processing step forms a new dataset, or a relation (Gates and Dai, 2016). To create a dataset

and separate all categories. We are using cloudera hue environment to create the project. The

initial step includes, uploading the provided dataset and importing to the Cloudera HUE

environment. We are using the pig script for separate the file for all categorires.The given

dataset are employee dataset and it upload through the cloudera hue environment. Hue means

hadoop user experience and its support the apache hadoop and ecosystem. It is a web based

query, which visualizes the data, where its output completely depends on the dataset and

related query.

The objective of this report is to carry out further work using the datasets, especially

for moving them from the local file system into the storage on the Hadoop system, which

later helps to extract certain basic analytics.

2. Dataset

The provided dataset depends on the list of the faculty and comprises of the following

information- Name of the staff, their location, title, grade, university, course, date of joining,

type, LWD, division, highest qualification, major, all their qualifications, reports, document

and criteria. In the dataset can be separated by the categories. The initial task is required to

separate the dataset based on the degree of the staff. Then, the next task requires separating

the dataset based on the experience of the staff and the last task is separated depending on the

place of the staff’s last degree.

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

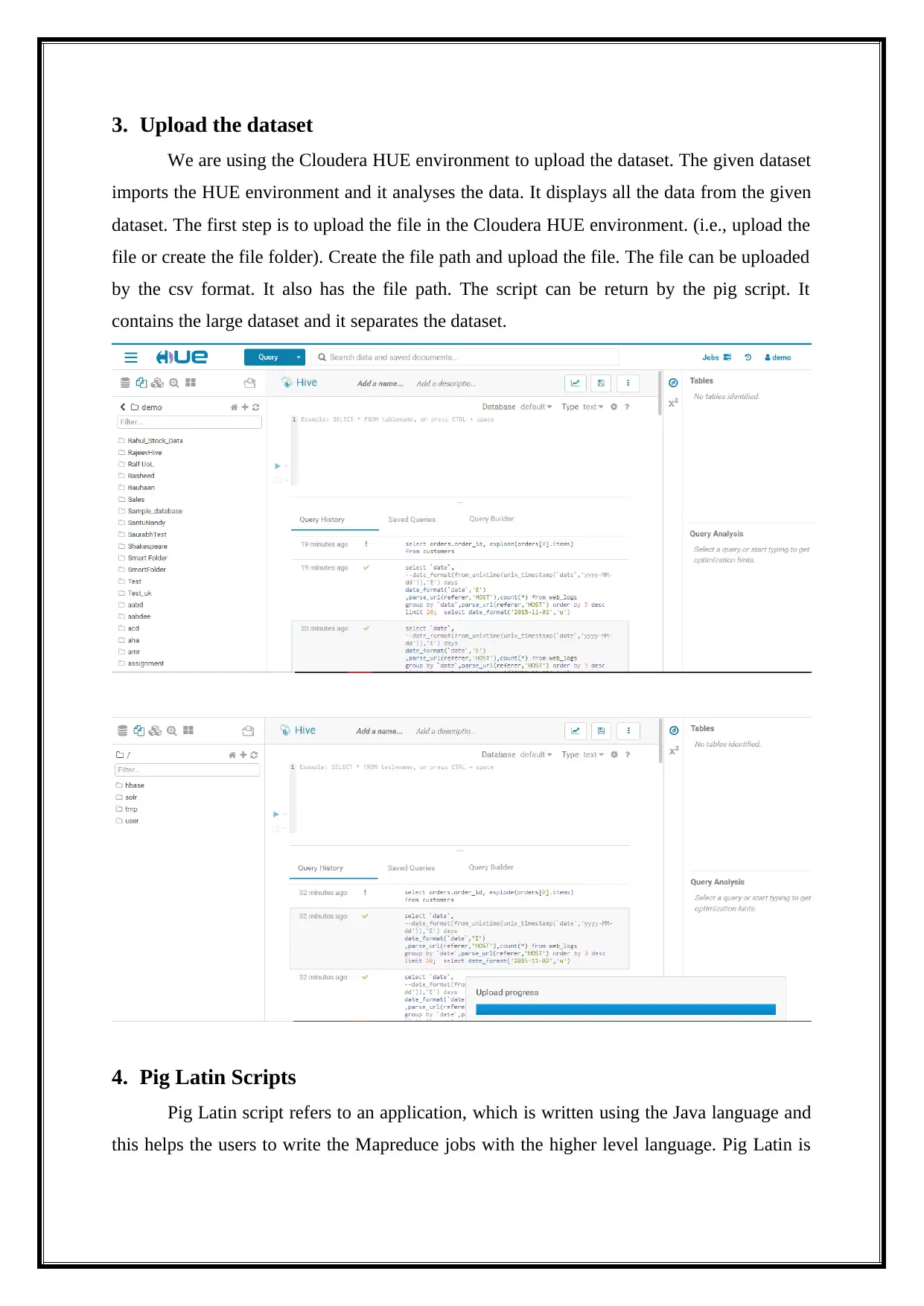

3. Upload the dataset

We use the Cloudera HUE environment to upload the dataset. Once the dataset is imported, HUE can display and analyze all of its data. The first step is to upload the file into the Cloudera HUE environment: create a folder (file path) and upload the file into it. The file is uploaded in CSV format. A Pig script is then written to process this large dataset and separate it into categories.
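Outside the HUE file browser, the same upload could also be done from a shell with the standard `hdfs dfs` commands. The sketch below only builds the command lines and does not run them; the HDFS folder `/user/cloudera/faculty` is an assumed location, not one given in the assignment.

```python
from pathlib import PurePosixPath
from typing import List


def build_upload_commands(local_file: str, hdfs_dir: str) -> List[List[str]]:
    """Return the `hdfs dfs` commands that create hdfs_dir and copy local_file into it."""
    target = PurePosixPath(hdfs_dir) / PurePosixPath(local_file).name
    return [
        ["hdfs", "dfs", "-mkdir", "-p", hdfs_dir],         # create the folder if missing
        ["hdfs", "dfs", "-put", local_file, str(target)],  # copy the CSV into HDFS
    ]


# /user/cloudera/faculty is a hypothetical HDFS folder chosen for illustration.
commands = build_upload_commands("CIS_FacultyList.csv", "/user/cloudera/faculty")
for command in commands:
    print(" ".join(command))
```

On a machine with the Hadoop client installed, each command could then be executed with `subprocess.run(command, check=True)`.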

4. Pig Latin Scripts

Apache Pig is a platform, implemented in Java, that lets users write MapReduce jobs in a higher-level language. Pig Latin is its native language. Its syntax somewhat resembles SQL, but Pig Latin is comparatively powerful for expressing multi-step data pipelines (Henson, 2015).

It is also possible to embed Pig in host languages such as Python, Java and JavaScript, which helps integrate Pig with existing applications. Embedding also helps overcome a limitation of Pig Latin: the language lacks control flow statements such as if/else, for loops and while loops.
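One way a host language can supply the missing control flow is to generate Pig Latin text with an ordinary loop. The sketch below is plain Python string building, not Pig's own embedding API; the relation name `faculty`, the field name `highest_qualification` and the output relation names are assumptions made for illustration.

```python
from typing import Dict


def build_split_statement(relation: str, branches: Dict[str, str]) -> str:
    """Build a Pig Latin SPLIT statement from {output_relation: condition} pairs."""
    clauses = []
    for output_relation, condition in branches.items():  # the loop Pig Latin itself lacks
        clauses.append(f"{output_relation} IF {condition}")
    return f"SPLIT {relation} INTO " + ", ".join(clauses) + ";"


# Hypothetical relation and field names; the real CSV header may differ.
statement = build_split_statement(
    "faculty",
    {
        "bachelors": "highest_qualification == 'Bachelors'",
        "masters": "highest_qualification == 'Masters'",
        "doctorate": "highest_qualification == 'Doctorate'",
    },
)
print(statement)
```

The generated text would still need a preceding LOAD statement that defines `faculty` before Pig could run it.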

The scripts are run in the Cloudera HUE environment on top of Apache Hadoop, with Pig serving as the high-level platform for creating the programs. We use the demo Cloudera environment to upload the CSV file. The scripts separate the dataset into different categories: degree level, number of years of teaching experience, and the last degree of the faculty (DeRoos et al., 2014).

The three tasks are as follows:

Task: 1

Task 1 separates the file by degree level. The dataset records the highest qualification of each faculty member, and the records are separated into the Bachelors, Masters and Doctorate levels.
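The partitioning in Task 1 can be pictured with a small Python sketch that mirrors what a Pig Latin SPLIT does: each record is routed to the output whose condition it satisfies. The column name `highest_qualification`, the exact label spellings and the sample rows are assumptions, not values taken from the actual CSV.

```python
import csv
import io
from collections import defaultdict

# Made-up sample rows; the real CIS_FacultyList.csv has more columns.
SAMPLE = """name,highest_qualification
A. Rivera,Bachelors
B. Chen,Masters
C. Okafor,Doctorate
D. Singh,Masters
"""

DEGREE_LEVELS = ("Bachelors", "Masters", "Doctorate")


def split_by_degree(rows):
    """Group rows by degree level; rows with an unrecognized level are skipped."""
    groups = defaultdict(list)
    for row in rows:
        level = row["highest_qualification"].strip()
        if level in DEGREE_LEVELS:
            groups[level].append(row)
    return groups


by_degree = split_by_degree(csv.DictReader(io.StringIO(SAMPLE)))
for level in DEGREE_LEVELS:
    print(level, [row["name"] for row in by_degree[level]])
```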

Task: 2

Task 2 separates the file by number of years of teaching. The dataset contains the degree, location, highest qualification and experience, and it is separated by experience.
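A minimal Python sketch of the Task 2 rule follows. The report describes two groups (less than five years, more than five years) and does not say where exactly five years belongs; the sketch assumes it goes with the upper group. The field name `years_teaching` and the records are hypothetical.

```python
def experience_bucket(years_teaching: float, threshold: float = 5.0) -> str:
    """Return the output bucket for a faculty member's years of teaching."""
    # Assumption: exactly `threshold` years counts as the upper bucket.
    return "under_five_years" if years_teaching < threshold else "five_years_or_more"


# Made-up records; `years_teaching` is a hypothetical field name.
faculty = [
    {"name": "A. Rivera", "years_teaching": 2},
    {"name": "B. Chen", "years_teaching": 5},
    {"name": "C. Okafor", "years_teaching": 12.5},
]

buckets = {"under_five_years": [], "five_years_or_more": []}
for record in faculty:
    buckets[experience_bucket(record["years_teaching"])].append(record["name"])

print(buckets)
```

If the CSV holds only a date of joining rather than a year count, the years would first have to be derived from that date.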

Task: 3

Task 3 takes the last degree of each faculty member and separates the dataset by the place where that degree was obtained. The resulting datasets are then copied from HDFS to local storage.
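Task 3 is a two-way split with a catch-all branch, which the Python sketch below illustrates. It assumes the place of the last degree is stored as a country name and that the three spellings listed count as North America; both the list and the field name `last_degree_country` are assumptions to adjust against the real data.

```python
# Assumption: the last-degree location is stored as a country name, and these
# three spellings are treated as North America. Adjust to the actual data.
NORTH_AMERICA = {"united states", "canada", "mexico"}


def degree_region(country: str) -> str:
    """Classify the country of the last degree as North America or elsewhere."""
    return "north_america" if country.strip().lower() in NORTH_AMERICA else "elsewhere"


# Made-up records; `last_degree_country` is a hypothetical field name.
faculty = [
    {"name": "A. Rivera", "last_degree_country": "Canada"},
    {"name": "B. Chen", "last_degree_country": "United Kingdom"},
    {"name": "C. Okafor", "last_degree_country": " united states "},
]

regions = {"north_america": [], "elsewhere": []}
for record in faculty:
    regions[degree_region(record["last_degree_country"])].append(record["name"])

print(regions)
```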

5. Discussion

The file “CIS_FacultyList.csv” is used to complete the practical exercise. This report completes the exercise in the following steps:

1) First, the dataset “CIS_FacultyList.csv” is uploaded into HDFS storage using the Cloudera HUE environment.

2) The documentation includes step-by-step screenshots that describe the steps taken to develop the Pig Latin scripts/commands used to complete the three tasks. The Pig Latin scripts are also described.

3) Pig is used to create new datasets from the source file, categorized as follows:

a) Task 1 categorizes by degree: Bachelors, Masters and Doctorate.

b) Task 2 categorizes by number of teaching years: less than five years, or more than five years.

c) Task 3 categorizes by whether the last degree was obtained in North America or elsewhere.

The Pig Latin SPLIT (partition), FOREACH and GROUP constructs are considered for these tasks.

4) Finally, the datasets are copied from HDFS back to the local file system and submitted with this document.
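For the final step, a Pig STORE writes each result as a folder of part files in HDFS, and `hdfs dfs -getmerge` can concatenate such a folder into one local file. The sketch below only builds those commands; the output folder paths and local file names are hypothetical, since the report does not state them.

```python
from typing import List


def build_download_command(hdfs_output_dir: str, local_file: str) -> List[str]:
    """Return the command that merges an HDFS output folder into one local file."""
    return ["hdfs", "dfs", "-getmerge", hdfs_output_dir, local_file]


# Hypothetical output folders, one per category produced by the scripts.
outputs = {
    "/user/cloudera/faculty/out/bachelors": "bachelors.csv",
    "/user/cloudera/faculty/out/masters": "masters.csv",
    "/user/cloudera/faculty/out/doctorate": "doctorate.csv",
}

download_commands = [build_download_command(src, dst) for src, dst in outputs.items()]
for command in download_commands:
    print(" ".join(command))
```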

6. Conclusion

This project creates the categorized datasets in the Cloudera HUE environment, which holds the large source dataset. Task 1 categorizes the data by degree (Bachelors, Masters and Doctorate), Task 2 by number of teaching years (less than five years, or more than five years), and Task 3 by whether the last degree was obtained in North America or elsewhere. Finally, the datasets are copied from HDFS to the local file system.

References

DeRoos, D., Zikopoulos, P., Melnyk, R., Brown, B. and Coss, R. (2014). Hadoop for Dummies. John Wiley & Sons.

Gates, A. and Dai, D. (2016). Programming Pig: Dataflow Scripting with Hadoop. 2nd ed. O'Reilly Media.

Henson, T. (2015). Example Pig Latin Script. [online] Thomas Henson. Available at: https://www.thomashenson.com/example-pig-latin-script/ [Accessed 4 Dec. 2018].
