ICT515 Foundations of Data Science: Algorithm Applications Report

Added on 2023/01/19


Running head: FOUNDATION OF DATA SCIENCE

FOUNDATION OF DATA SCIENCE

Name of the Student

Name of the University

Author Note


Abstract:

This paper discusses the use of machine learning algorithms in traffic flows, network traffic anomaly detection and hard disk drive failure. The algorithms are also compared with each other, and the conclusion identifies the best and worst algorithms.


Introduction:

In many scientific disciplines, a major objective is to model the relationship between input and output, where the input is a set of observable quantities and the output is another set of variables related to them. Once a mathematical model has been determined, the values of the desired variables can be predicted by measuring the observables. Machine learning is an application of artificial intelligence that gives a system the ability to learn automatically [1]. A system that implements machine learning can learn something new whenever it identifies new patterns in the data. Selecting a suitable algorithm is a major part of any machine learning project. This paper discusses the use of machine learning algorithms in traffic flows, network traffic anomaly detection and hard disk drive failure. The algorithms are also compared with each other, and the conclusion identifies the best and worst algorithms.

IP Traffic flows:

Traffic state detection is conventionally done with point-based sensors, including microwave radars. Researchers have developed several mechanisms for detecting congestion by comparing measurements from inductive loops at several locations. Using suitable algorithms that classify traffic flows correctly is therefore very important. The algorithms that have been used to classify traffic flows are described below:

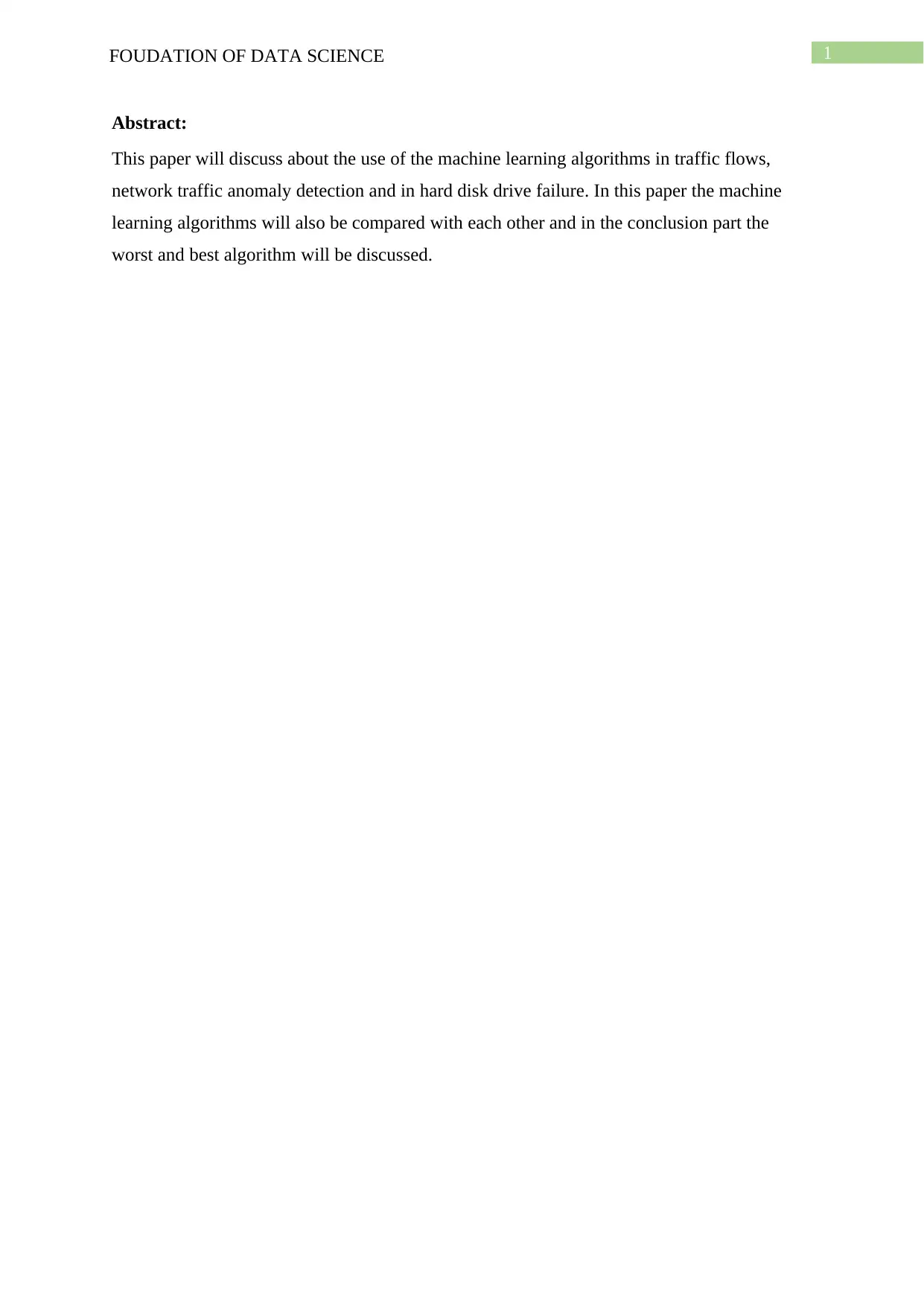

Naive Bayes:

Naive Bayes classification uses Bayes' theorem to classify data and predict its labels. Each instance is assigned to a class on the basis of the


maximum estimated posterior probability [2]. The features are assumed to be independent of each other given the class.

Figure: Feature Comparison of Naive Bayes Algorithm

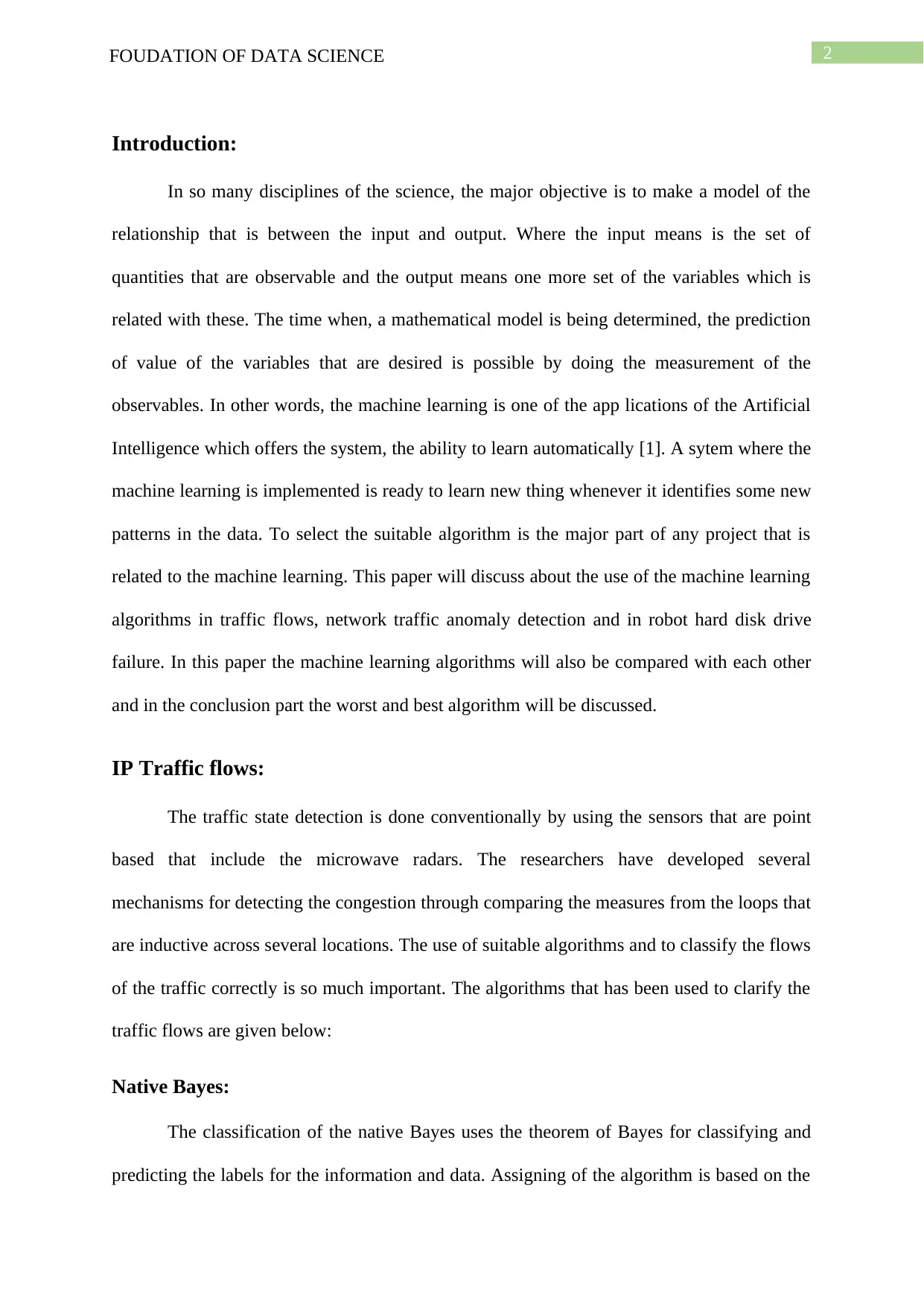
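The maximum-posterior rule above can be sketched in a few lines of Python. This is a minimal illustration for categorical features only; the Laplace smoothing parameter `alpha`, the function names and the small flow dataset are assumptions for illustration and are not taken from [2]. Log probabilities are summed instead of multiplying probabilities, which gives the same winning class while avoiding numerical underflow.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(rows, labels):
    """Count class frequencies and, per class, how often each feature value occurs."""
    class_counts = Counter(labels)
    value_counts = defaultdict(Counter)  # (feature index, class) -> Counter of values
    feature_values = defaultdict(set)    # feature index -> distinct values seen
    for row, label in zip(rows, labels):
        for i, value in enumerate(row):
            value_counts[(i, label)][value] += 1
            feature_values[i].add(value)
    return class_counts, value_counts, feature_values


def predict_naive_bayes(model, row, alpha=1.0):
    """Return the class with the maximum posterior, treating the features as
    independent of each other given the class (the 'naive' assumption)."""
    class_counts, value_counts, feature_values = model
    total = sum(class_counts.values())
    best_class, best_score = None, -math.inf
    for cls, n_cls in class_counts.items():
        score = math.log(n_cls / total)  # log prior P(class)
        for i, value in enumerate(row):
            # Laplace-smoothed log likelihood log P(feature_i = value | class)
            k = len(feature_values[i])
            score += math.log((value_counts[(i, cls)][value] + alpha) / (n_cls + alpha * k))
        if score > best_score:
            best_class, best_score = cls, score
    return best_class


# Hypothetical flow records: (transport protocol, packet-size bucket) -> class
rows = [("tcp", "large"), ("tcp", "large"), ("udp", "small"), ("udp", "small"), ("tcp", "small")]
labels = ["bulk", "bulk", "voip", "voip", "web"]
model = train_naive_bayes(rows, labels)
print(predict_naive_bayes(model, ("tcp", "large")))  # bulk
```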

Decision tree classifier:

A decision tree classifier is a non-parametric machine learning method that predicts a value, held in the leaves of the tree, from a set of features, represented by the branches that lead to the leaves [3]. The tree is learned by splitting the source set into subsets based on an attribute, and each subset is then split further in a recursive manner. The major advantage of this method is that the resulting model is easy to visualize.
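The recursive splitting described above can be sketched as follows. This minimal version assumes a single numeric feature and uses Gini impurity as the splitting criterion, which is one common choice but not necessarily the one used in [3]; the function names, `max_depth` and the toy data are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels (0.0 means the node is pure)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(values, labels):
    """Threshold t on one numeric feature that minimises the size-weighted
    Gini impurity of the subsets x <= t and x > t."""
    best_t, best_score = None, float("inf")
    for t in sorted(set(values))[:-1]:  # the largest value would leave x > t empty
        left = [y for x, y in zip(values, labels) if x <= t]
        right = [y for x, y in zip(values, labels) if x > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best_score:
            best_t, best_score = t, score
    return best_t, best_score


def build_tree(values, labels, depth=0, max_depth=3):
    """Split recursively until a subset is pure, cannot be split, or max_depth is hit."""
    if gini(labels) == 0.0 or depth == max_depth or len(set(values)) < 2:
        return Counter(labels).most_common(1)[0][0]  # leaf: majority class
    t, _ = best_split(values, labels)
    left = [i for i, x in enumerate(values) if x <= t]
    right = [i for i, x in enumerate(values) if x > t]
    return {
        "threshold": t,
        "left": build_tree([values[i] for i in left], [labels[i] for i in left], depth + 1, max_depth),
        "right": build_tree([values[i] for i in right], [labels[i] for i in right], depth + 1, max_depth),
    }


def predict_tree(tree, x):
    """Follow the branches from the root until a leaf (a class label) is reached."""
    while isinstance(tree, dict):
        tree = tree["left"] if x <= tree["threshold"] else tree["right"]
    return tree


# Hypothetical numeric feature with congestion labels
values = [1, 2, 3, 10, 11, 12]
labels = ["normal", "normal", "normal", "congested", "congested", "congested"]
tree = build_tree(values, labels)
print(tree)  # {'threshold': 3, 'left': 'normal', 'right': 'congested'}
```

The resulting nested dictionary is itself the "visualization" advantage in miniature: each internal node shows the test applied, and each leaf shows the predicted class.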

| Test ↓ / Anomaly → | Present | Absent |
|---|---|---|
| Positive | True Positive | False Positive |
| Negative | False Negative | True Negative |


Table 3: Observation

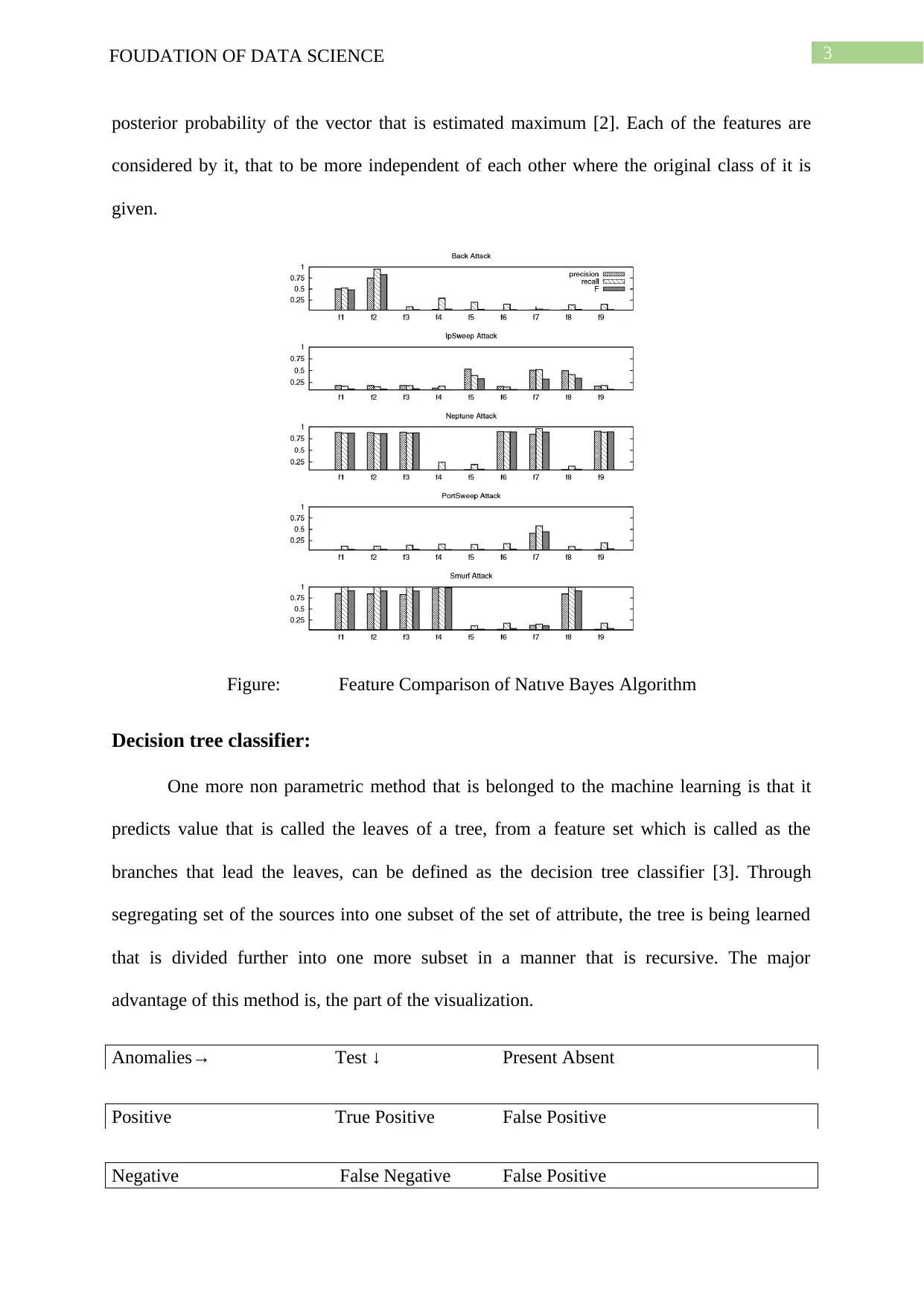
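The four cells of the anomaly/test table can be tallied from a list of ground-truth labels and test outcomes. The sketch below is illustrative only; the boolean encoding (True meaning an anomaly is present, or the test is positive) and the sample lists are assumptions.

```python
def confusion_counts(actual, predicted):
    """Tally the anomaly/test table. actual[i] is True when an anomaly is
    present; predicted[i] is True when the test is positive."""
    counts = {"TP": 0, "FP": 0, "FN": 0, "TN": 0}
    for present, positive in zip(actual, predicted):
        if positive and present:
            counts["TP"] += 1  # anomaly present, test positive
        elif positive:
            counts["FP"] += 1  # anomaly absent, test positive
        elif present:
            counts["FN"] += 1  # anomaly present, test negative
        else:
            counts["TN"] += 1  # anomaly absent, test negative
    return counts


# Hypothetical ground truth and detector output
actual = [True, True, False, False, True]
predicted = [True, False, True, False, True]
print(confusion_counts(actual, predicted))  # {'TP': 2, 'FP': 1, 'FN': 1, 'TN': 1}
```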

Support vector machines:

Support vector machines classify data into several classes by solving a constrained optimization problem, the result of which is an optimal hyperplane. The main parameter on which an SVM depends is the kernel size, and varying it can change the accuracy [4]. To date, the SVM remains one of the most widely used shallow algorithms, and its accuracy has been compared with that of deep neural networks.
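As a rough illustration of the optimization behind an SVM, the sketch below trains a linear SVM (no kernel) by full-batch subgradient descent on the regularized hinge loss. This primal formulation is a swapped-in technique chosen for brevity, not the constrained solver referred to in [4]; `lam`, `lr`, `epochs` and the toy data are assumed values. A kernel, and therefore a kernel size, would replace the plain dot product; that extension is not shown.

```python
def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=200):
    """Minimise lam/2 * ||w||^2 + mean(max(0, 1 - y_i * (w.x_i + b))) with
    full-batch subgradient descent. Labels must be +1 or -1."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]  # gradient of the regulariser
        grad_b = 0.0
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1:  # inside the margin or misclassified: hinge loss is active
                for j in range(d):
                    grad_w[j] -= yi * xi[j] / n
                grad_b -= yi / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict_svm(w, b, x):
    """Which side of the hyperplane w.x + b = 0 the point falls on."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1


# Hypothetical, linearly separable 2-D data
X = [(2, 2), (3, 3), (2, 3), (-2, -2), (-3, -3), (-2, -3)]
y = [1, 1, 1, -1, -1, -1]
w, b = train_linear_svm(X, y)
print([predict_svm(w, b, x) for x in X])  # [1, 1, 1, -1, -1, -1]
```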

Feature selection:

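$$\text{recall} = \frac{TP}{TP + FN} \tag{1}$$

$$\text{precision} = \frac{TP}{TP + FP} \tag{2}$$

$$F_1\text{-score} = 2 \times \frac{\text{precision} \times \text{recall}}{\text{precision} + \text{recall}} \tag{3}$$

$$\text{average total } F_1\text{-score} = \frac{\sum_{i} \left( F_1\text{-score for class } i \times \text{support of class } i \right)}{\text{total number of images}} \tag{4}$$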
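Equations (1) to (4) translate directly into code. In this sketch Eq. (4) is read as a support-weighted average of per-class F1-scores, where the support of a class is its number of images; the counts and scores used below are made up for illustration.

```python
def recall(tp, fn):
    """Eq. (1): share of actual positives that were found."""
    return tp / (tp + fn)


def precision(tp, fp):
    """Eq. (2): share of positive predictions that were correct."""
    return tp / (tp + fp)


def f1_score(p, r):
    """Eq. (3): harmonic mean of precision and recall."""
    return 2 * (p * r) / (p + r)


def weighted_f1(per_class):
    """Eq. (4): per_class is a list of (f1, support) pairs, where support is the
    number of images of that class; the result is the support-weighted mean."""
    total_images = sum(support for _, support in per_class)
    return sum(f1 * support for f1, support in per_class) / total_images


# Hypothetical counts for one class
p = precision(tp=6, fp=2)  # 0.75
r = recall(tp=6, fn=3)     # about 0.667
print(round(f1_score(p, r), 4))  # 0.7059
print(weighted_f1([(0.9, 30), (0.6, 10)]))
```

Weighting by support means a class with many images influences the average more than a rare class, which is worth remembering when classes are imbalanced.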

| Base feature | Average F1-score (%) |
|---|---|
| Shi-Tomasi | 78.28 |
| ORB | 86.59 |
| Find contours | 70.09 |
| Structured edge detection toolbox | 82.36 |

Table 1: Average F1-score for SVM, one feature extractor at a time


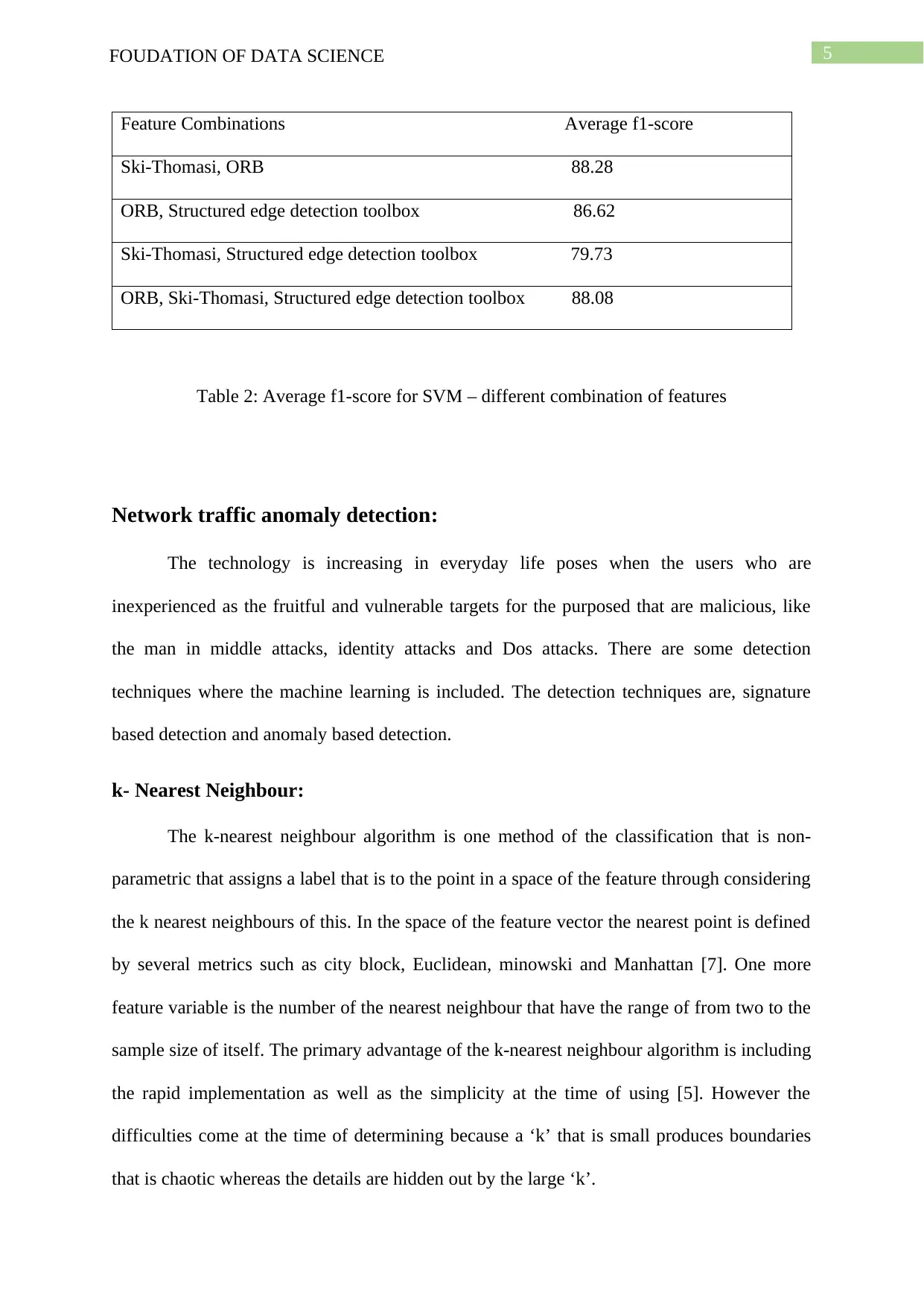

| Feature combination | Average F1-score (%) |
|---|---|
| Shi-Tomasi, ORB | 88.28 |
| ORB, Structured edge detection toolbox | 86.62 |
| Shi-Tomasi, Structured edge detection toolbox | 79.73 |
| ORB, Shi-Tomasi, Structured edge detection toolbox | 88.08 |

Table 2: Average F1-score for SVM with different combinations of features

Network traffic anomaly detection:

The growing use of technology in everyday life makes inexperienced users fruitful and vulnerable targets for malicious purposes such as man-in-the-middle attacks, identity attacks and denial-of-service (DoS) attacks. Several detection techniques make use of machine learning, and they fall into two groups: signature-based detection and anomaly-based detection.

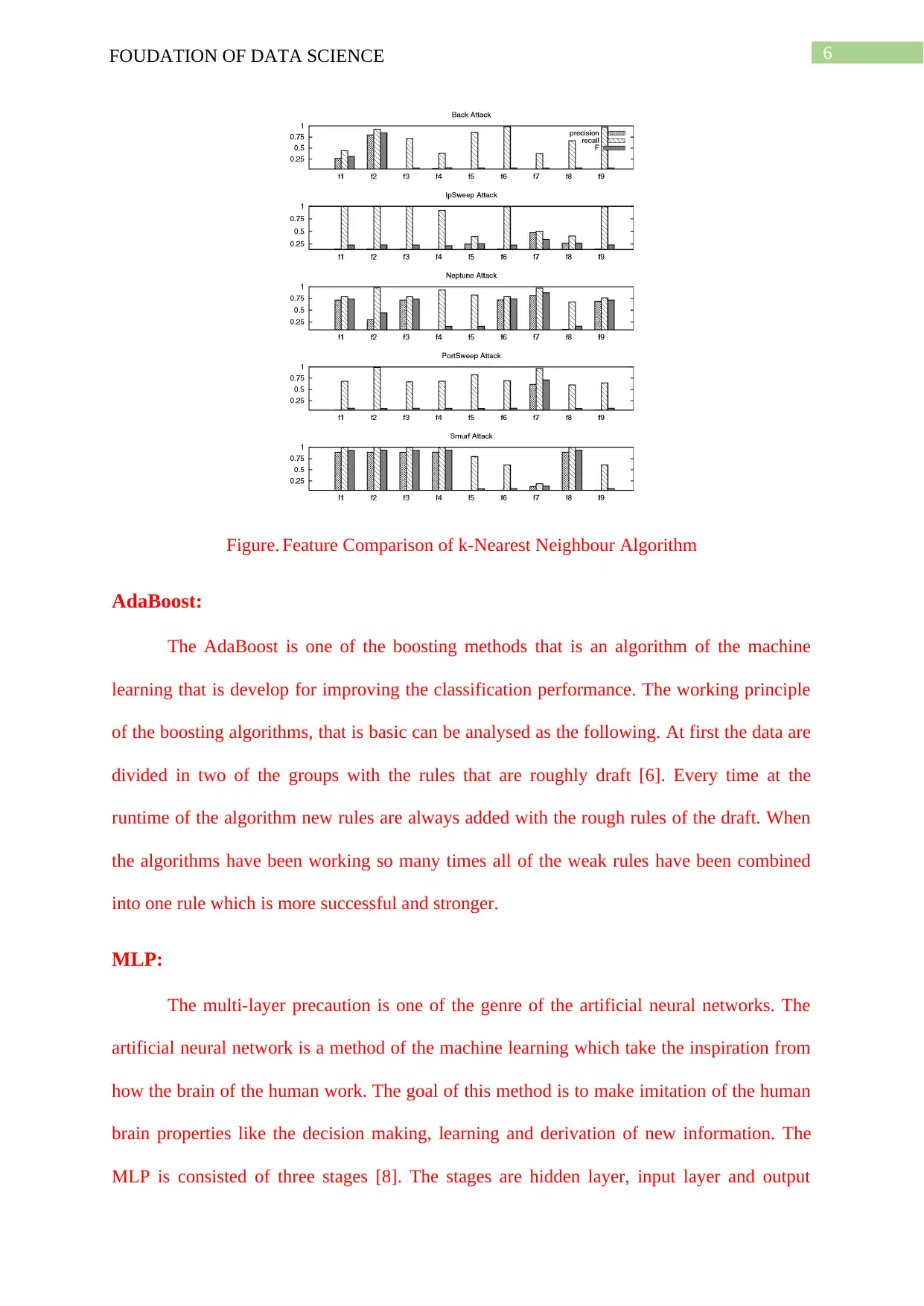

k-Nearest Neighbour:

The k-nearest neighbour algorithm is a non-parametric classification method that assigns a label to a point in the feature space by considering its k nearest neighbours. The nearest points in the feature vector space are defined by a distance metric such as Euclidean, Minkowski or city block (also called Manhattan) distance [7]. Another tunable parameter is the number of nearest neighbours, k, which can range from two up to the sample size itself. The primary advantages of the k-nearest neighbour algorithm are its rapid implementation and its simplicity of use [5]. However, choosing k is difficult, because a small k produces chaotic decision boundaries whereas a large k hides the details.


Figure: Feature Comparison of k-Nearest Neighbour Algorithm
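A short sketch of k-nearest neighbour classification with a Minkowski distance follows, where `p=1` gives the city block (Manhattan) metric and `p=2` gives the Euclidean metric. The points, labels and default `k=3` are hypothetical; ties in the vote are resolved by the order in which `Counter.most_common` encounters the labels.

```python
from collections import Counter


def minkowski(a, b, p=2):
    """Minkowski distance; p=1 is city block (Manhattan), p=2 is Euclidean."""
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1 / p)


def knn_predict(train_X, train_y, query, k=3, p=2):
    """Label `query` by a majority vote among its k nearest training points."""
    nearest = sorted(
        (minkowski(x, query, p), label) for x, label in zip(train_X, train_y)
    )[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical 2-D traffic features labelled normal / attack
train_X = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
train_y = ["normal", "normal", "normal", "attack", "attack", "attack"]
print(knn_predict(train_X, train_y, (0.5, 0.5)))       # normal
print(knn_predict(train_X, train_y, (5.5, 5.5), p=1))  # attack
```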

AdaBoost:

AdaBoost is a boosting method, that is, a machine learning algorithm developed to improve classification performance. The basic working principle of boosting algorithms can be summarized as follows. First, the data are divided into two groups using roughly drafted rules [6]. Each time the algorithm runs, new rules are added to these rough draft rules. After the algorithm has run many times, all of the weak rules are combined into a single rule that is stronger and more successful.
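The boosting loop described above can be sketched as discrete AdaBoost, using one-feature threshold rules (decision stumps) as the weak rules. This is a minimal sketch under those assumptions, with labels encoded as +1/-1 and a hypothetical dataset that no single threshold rule can separate; it is not necessarily the exact variant evaluated in [6].

```python
import math


def train_adaboost(xs, ys, rounds=3):
    """Discrete AdaBoost with threshold stumps on one feature; labels are +1/-1.
    Returns a list of (alpha, threshold, polarity) weak rules."""
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(rounds):
        # Pick the stump (threshold, polarity) with the lowest weighted error.
        best = None
        for t in sorted(set(xs)):
            for polarity in (1, -1):
                preds = [polarity if x >= t else -polarity for x in xs]
                err = sum(w for w, p, y in zip(weights, preds, ys) if p != y)
                if best is None or err < best[0]:
                    best = (err, t, polarity, preds)
        err, t, polarity, preds = best
        err = min(max(err, 1e-10), 1 - 1e-10)  # avoid log(0) and division by zero
        alpha = 0.5 * math.log((1 - err) / err)  # vote weight of this weak rule
        ensemble.append((alpha, t, polarity))
        # Raise the weight of misclassified points, lower the rest, renormalise.
        weights = [w * math.exp(-alpha * y * p) for w, y, p in zip(weights, ys, preds)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def predict_adaboost(ensemble, x):
    """Weighted vote of all weak rules combined into one strong rule."""
    score = sum(a * (pol if x >= t else -pol) for a, t, pol in ensemble)
    return 1 if score >= 0 else -1


xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]  # no single threshold rule separates this pattern
model = train_adaboost(xs, ys, rounds=3)
print([predict_adaboost(model, x) for x in xs])  # [1, 1, -1, -1, 1, 1]
```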

MLP:

The multi-layer perceptron (MLP) is a type of artificial neural network. An artificial neural network is a machine learning method inspired by how the human brain works. Its goal is to imitate properties of the human brain such as decision making, learning and the derivation of new information. The MLP consists of three stages [8]: the input layer, the hidden layer and the output


layer. The input layer is responsible for receiving the data. The hidden layer passes the data from the input layer towards the output layer, processing it along the way. Finally, in the output layer, which is the last layer, each cell is connected to all the cells of the hidden layer, and the results of processing the hidden layer's data are produced at this step.
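The three layers can be illustrated with a forward pass through a tiny MLP with two inputs, two hidden cells using a ReLU activation, and one output cell. The weights below are hand-picked assumptions, not learned values, chosen so that the network computes XOR; training (for example by backpropagation) is not shown.

```python
def relu(vector):
    return [max(0.0, v) for v in vector]


def dense(inputs, weights, biases):
    """Fully connected layer: each output cell is tied to every input cell."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def mlp_forward(x, hidden_w, hidden_b, out_w, out_b):
    """Input layer -> hidden layer (ReLU) -> output layer."""
    hidden = relu(dense(x, hidden_w, hidden_b))
    return dense(hidden, out_w, out_b)


# Hand-picked (not learned) weights that make the network compute XOR.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUT_W = [[1.0, -2.0]]
OUT_B = [0.0]

for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, mlp_forward(x, HIDDEN_W, HIDDEN_B, OUT_W, OUT_B))  # outputs 0, 1, 1, 0
```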

Hard disk drive failure:

Linear regression:

Logistic regression, a technique related to linear regression, can be used to predict instances of a binary class. This algorithm uses LogitBoost to build the regression model, and it has been further improved to increase the speed of model construction [11]. The major advantage of this method is that it requires only a small amount of training data, but its main drawback is that its classification performance is very low compared with other discriminative algorithms.
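For illustration, the sketch below fits a logistic regression model by plain batch gradient descent on the log-loss. This is a simpler fitting procedure swapped in for the LogitBoost-based construction described above; the learning rate, epoch count and the one-feature dataset (imagined as a standardized drive attribute) are assumptions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """Estimated probability that x belongs to class 1."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def train_logistic(X, y, lr=0.5, epochs=500):
    """Fit P(y=1 | x) = sigmoid(w.x + b) by batch gradient descent on the
    average log-loss. Labels are 0 or 1."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = predict_proba(w, b, xi) - yi  # derivative of the log-loss w.r.t. the logit
            for j in range(d):
                grad_w[j] += err * xi[j] / n
            grad_b += err / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Hypothetical standardized attribute; 1 = drive failed
X = [[-2.0], [-1.5], [-1.0], [1.0], [1.5], [2.0]]
y = [0, 0, 0, 1, 1, 1]
w, b = train_logistic(X, y)
print(predict_proba(w, b, [1.0]) > 0.5)  # True
```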

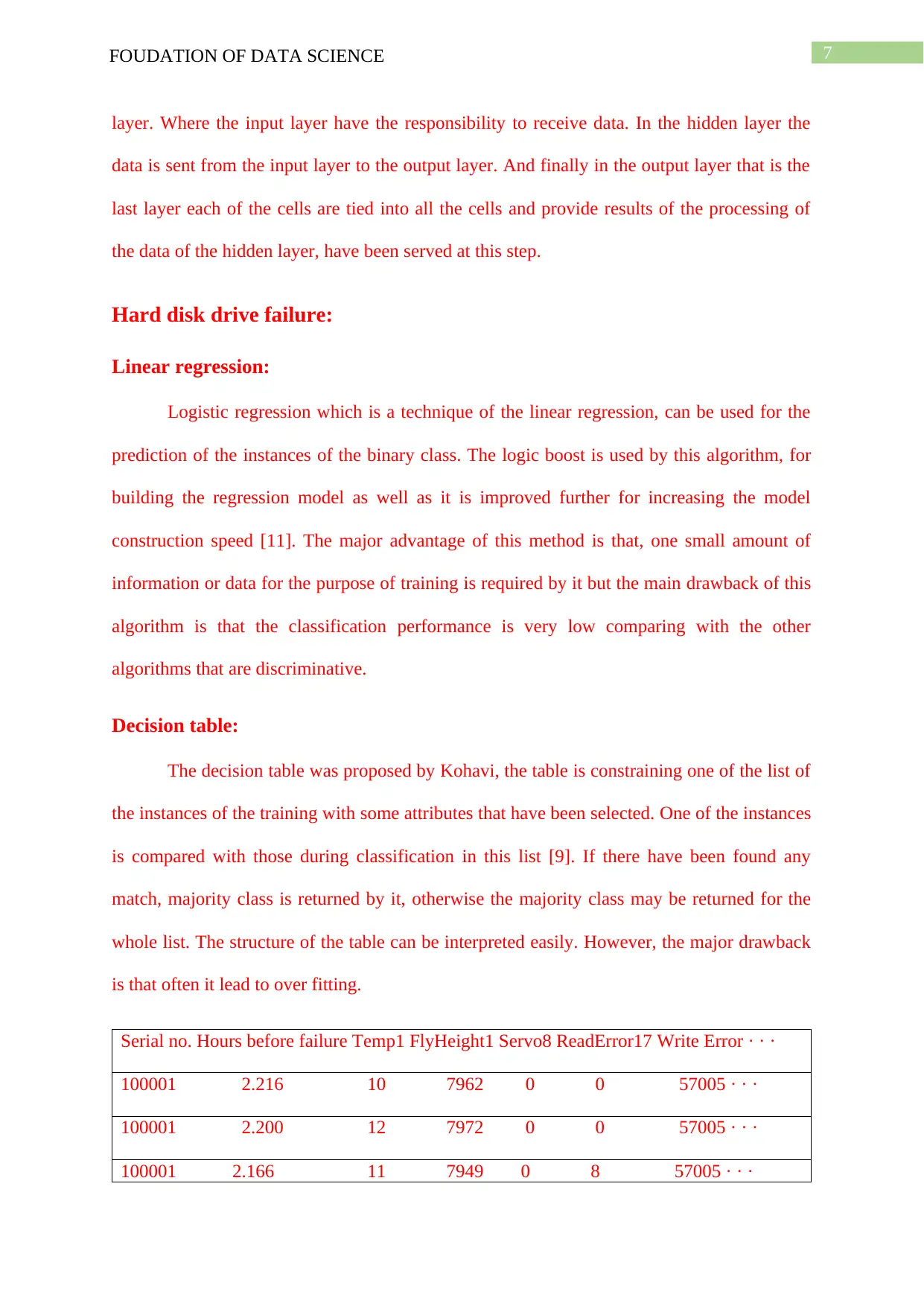

Decision table:

The decision table was proposed by Kohavi. The table contains a list of the training instances restricted to a selected subset of attributes. During classification, an instance is compared with the instances in this list [9]. If a match is found, the majority class of the matching instances is returned; otherwise, the majority class of the whole list may be returned. The structure of the table is easy to interpret. However, its major drawback is that it often leads to overfitting.
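A decision table classifier in the spirit described above can be sketched with a dictionary keyed on the selected attributes. The attribute choice, the discretized drive readings and the labels are hypothetical, and attribute selection itself is not shown.

```python
from collections import Counter


def train_decision_table(rows, labels, attributes):
    """Keep only the selected attribute positions of each training instance and
    group the class labels of instances that share the same values."""
    table = {}
    for row, label in zip(rows, labels):
        key = tuple(row[i] for i in attributes)
        table.setdefault(key, []).append(label)
    default = Counter(labels).most_common(1)[0][0]  # majority class of the whole list
    return table, attributes, default


def classify(model, row):
    table, attributes, default = model
    key = tuple(row[i] for i in attributes)
    if key in table:  # match found: majority class of the matching instances
        return Counter(table[key]).most_common(1)[0][0]
    return default  # no match: majority class of the whole list


# Hypothetical discretised drive readings: (temperature, fly height, seek errors)
rows = [("high", "low", "yes"), ("high", "low", "no"), ("low", "low", "no"),
        ("low", "high", "no"), ("low", "low", "yes")]
labels = ["fail", "fail", "ok", "ok", "ok"]
model = train_decision_table(rows, labels, attributes=(0, 1))
print(classify(model, ("high", "low", "no")))    # fail
print(classify(model, ("high", "high", "yes")))  # ok (no match, so the overall majority)
```

Because every distinct attribute combination gets its own entry, a table built on too many attributes simply memorizes the training data, which illustrates the overfitting drawback mentioned above.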

| Serial no. | Hours before failure | Temp1 | FlyHeight1 | Servo8 | ReadError17 | Write Error | · · · |
|---|---|---|---|---|---|---|---|
| 100001 | 2.216 | 10 | 7962 | 0 | 0 | 57005 | · · · |
| 100001 | 2.200 | 12 | 7972 | 0 | 0 | 57005 | · · · |
| 100001 | 2.166 | 11 | 7949 | 0 | 8 | 57005 | · · · |
| 100001 | 2.133 | 9 | 7955 | 0 | 1280008 | 57005 | · · · |

Figure: Dataset example

Sequential Minimal Optimization (SMO):

Sequential Minimal Optimization (SMO), proposed by Platt, is a technique that improves the SVM by speeding up its training phase [10]. SMO reduces the internal computation of the quadratic programming problem to small sub-problems that can be solved analytically.
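The idea of solving two-multiplier sub-problems analytically can be sketched with the well-known "simplified SMO" variant, which picks the second multiplier at random instead of using Platt's selection heuristics. It assumes a linear kernel, labels in {+1, -1}, fixed values for `C`, `tol` and `max_passes`, and a hypothetical toy dataset; it is meant for understanding, not as a faithful copy of Platt's algorithm.

```python
import random


def linear_kernel(a, b):
    return sum(x * y for x, y in zip(a, b))


def simplified_smo(X, y, C=1.0, tol=1e-3, max_passes=5, max_iter=1000, seed=0):
    """Train a soft-margin SVM (labels +1/-1) by repeatedly optimising two
    Lagrange multipliers at a time, each pair solved analytically."""
    rng = random.Random(seed)
    n = len(X)
    alpha, b = [0.0] * n, 0.0
    K = [[linear_kernel(X[i], X[j]) for j in range(n)] for i in range(n)]

    def error(i):  # E_i = f(x_i) - y_i for the current alphas and b
        return sum(alpha[k] * y[k] * K[k][i] for k in range(n)) + b - y[i]

    passes = iters = 0
    while passes < max_passes and iters < max_iter:
        iters += 1
        changed = 0
        for i in range(n):
            E_i = error(i)
            # Only work on alpha_i if it violates the KKT conditions by more than tol.
            if not ((y[i] * E_i < -tol and alpha[i] < C) or (y[i] * E_i > tol and alpha[i] > 0)):
                continue
            j = rng.choice([k for k in range(n) if k != i])  # random second multiplier
            E_j = error(j)
            a_i, a_j = alpha[i], alpha[j]
            # Bounds that keep 0 <= alpha <= C and sum(alpha * y) unchanged.
            if y[i] != y[j]:
                L, H = max(0.0, a_j - a_i), min(C, C + a_j - a_i)
            else:
                L, H = max(0.0, a_i + a_j - C), min(C, a_i + a_j)
            eta = 2 * K[i][j] - K[i][i] - K[j][j]
            if L == H or eta >= 0:
                continue
            new_a_j = min(H, max(L, a_j - y[j] * (E_i - E_j) / eta))  # analytic step, clipped
            if abs(new_a_j - a_j) < 1e-5:
                continue
            new_a_i = a_i + y[i] * y[j] * (a_j - new_a_j)
            alpha[i], alpha[j] = new_a_i, new_a_j
            b1 = b - E_i - y[i] * (new_a_i - a_i) * K[i][i] - y[j] * (new_a_j - a_j) * K[i][j]
            b2 = b - E_j - y[i] * (new_a_i - a_i) * K[i][j] - y[j] * (new_a_j - a_j) * K[j][j]
            b = b1 if 0 < new_a_i < C else b2 if 0 < new_a_j < C else (b1 + b2) / 2
            changed += 1
        passes = passes + 1 if changed == 0 else 0
    return alpha, b


def smo_predict(X, y, alpha, b, x):
    score = sum(a * yi * linear_kernel(xi, x) for a, yi, xi in zip(alpha, y, X)) + b
    return 1 if score >= 0 else -1


# Hypothetical, linearly separable 2-D data
X = [(2, 2), (3, 3), (2, 3), (-2, -2), (-3, -3), (-2, -3)]
y = [1, 1, 1, -1, -1, -1]
alpha, b = simplified_smo(X, y)
print([smo_predict(X, y, alpha, b, x) for x in X])
```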

Conclusion:

Proactive failure detection is an approach that aims to foresee a pending failure and to issue a warning and initiate a recovery action before the failure damages the system.

For IP traffic flows, SVM and naive Bayes are the best algorithms, whereas the decision tree classifier is not as good because it is difficult to implement. For network traffic anomaly detection, the k-nearest neighbour algorithm is the best machine learning algorithm, while MLP and AdaBoost perform less well. For hard disk drive failure, Sequential Minimal Optimization (SMO) is the worst algorithm because it is not very flexible, whereas the decision table and linear regression are the best for implementation [12].

To choose the best algorithm for a specific application, the prediction quality as well as the prediction and training times need to be taken into account.


References:

[1] Ahmed, Tarem, Boris Oreshkin, and Mark Coates. "Machine learning approaches to network anomaly detection." In Proceedings of the 2nd USENIX Workshop on Tackling Computer Systems Problems with Machine Learning Techniques, pp. 1-6. USENIX Association, 2007.

[2] Chakraborty, Pranamesh, Yaw Okyere Adu-Gyamfi, Subhadipto Poddar, Vesal Ahsani, Anuj Sharma, and Soumik Sarkar. "Traffic congestion detection from camera images using deep convolution neural networks." Transportation Research Record 2672, no. 45 (2018): 222-231.

[3] Garcia-Teodoro, Pedro, Jesus Diaz-Verdejo, Gabriel Maciá-Fernández, and Enrique Vázquez. "Anomaly-based network intrusion detection: Techniques, systems and challenges." Computers & Security 28, no. 1-2 (2009): 18-28.

[4] Hamerly, Greg, and Charles Elkan. "Bayesian approaches to failure prediction for disk drives." In ICML, vol. 1, pp. 202-209. 2001.

[5] Murray, Joseph F., Gordon F. Hughes, and Kenneth Kreutz-Delgado. "Machine learning methods for predicting failures in hard drives: A multiple-instance application." Journal of Machine Learning Research 6, no. May (2005): 783-816.

[6] Murray, Joseph F., Gordon F. Hughes, and Kenneth Kreutz-Delgado. "Hard drive failure prediction using non-parametric statistical methods." In Proceedings of ICANN/ICONIP. 2003.

[7] Nguyen, Thuy TT, and Grenville J. Armitage. "A survey of techniques for internet traffic classification using machine learning." IEEE Communications Surveys and Tutorials 10, no. 1-4 (2008): 56-76.

[8] Pitakrat, Teerat, André van Hoorn, and Lars Grunske. "A comparison of machine learning algorithms for proactive hard disk drive failure detection." In Proceedings of the 4th International ACM SIGSOFT Symposium on Architecting Critical Systems, pp. 1-10. ACM, 2013.

[9] Shon, Taeshik, and Jongsub Moon. "A hybrid machine learning approach to network anomaly detection." Information Sciences 177, no. 18 (2007): 3799-3821.

[10] Sommer, Robin, and Vern Paxson. "Outside the closed world: On using machine learning for network intrusion detection." In 2010 IEEE Symposium on Security and Privacy, pp. 305-316. IEEE, 2010.

[11] Williams, Nigel, Sebastian Zander, and Grenville Armitage. "A preliminary performance comparison of five machine learning algorithms for practical IP traffic flow classification." ACM SIGCOMM Computer Communication Review 36, no. 5 (2006): 5-16.

[12] Zander, Sebastian, Thuy Nguyen, and Grenville Armitage. "Automated traffic classification and application identification using machine learning." In The IEEE Conference on Local Computer Networks 30th Anniversary (LCN'05), pp. 250-257. IEEE, 2005.
