GENDER AND ARTIFICIAL INTELLIGENCE

Added on 2022/08/22

Running head: GENDER AND ARTIFICIAL INTELLIGENCE

GENDER AND ARTIFICIAL INTELLIGENCE

Name of the Student

Name of the University

Author Note

Table of Contents

Gender and Artificial Intelligence

References

Gender and Artificial Intelligence

Research on bias in Artificial Intelligence (AI) starts from the recognition that the biases found in these systems result mainly from the inherent biases of human beings. The models and systems that are created and trained, and that are typically termed Artificial Intelligence, are a reflection of the humans who build them.

Hence, it is clear that Artificial Intelligence has been learning gender bias from humans (Smith & Neupane, 2018). For instance, natural language processing (NLP), a critical ingredient of common AI systems such as Amazon’s Alexa and Apple’s Siri, has been found to exhibit gender bias. This is not a standalone case. There have been a number of high-profile cases of gender bias, including computer vision systems used for gender recognition. These systems have been found to recognize women less accurately than men, particularly women with darker skin tones. Consequently, producing technology that can be considered fair requires a concerted effort from researchers and machine learning teams across the entire industry to correct this imbalance (Costa & Ribas, 2019). Fortunately, researchers have been examining how the imbalance can be rectified as newer systems are developed.

In particular, research on bias has examined word embeddings, which convert words into numerical representations that can be used as inputs to natural language processing models (Kim et al., 2019). Word embeddings represent words as sequences of numbers, or vectors. When two words have similar meanings, their embeddings lie close to each other in the mathematical sense. Embeddings encode this information by assessing the contexts in which a word occurs. For instance, an AI system can fill in the word ‘queen’ in the sentence “Man is to king as woman is to X”. The problem arises when the same system completes sentences such as “Father is to doctor as mother is to nurse.” The gender bias inherent in this remark reflects an outdated perception of women in society, one that is not grounded in gender equality.
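The analogy completion described above can be sketched with simple vector arithmetic. This is only an illustration under stated assumptions: the two-dimensional vectors are hand-picked (real embeddings are learned from text and have many more dimensions), and the names `EMBEDDINGS`, `cosine` and `complete_analogy` are hypothetical, not taken from any cited system.

```python
import math

# Hypothetical 2-D embeddings chosen by hand for illustration only.
# Axis 0 loosely stands for "royalty", axis 1 for "gender".
EMBEDDINGS = {
    "man": (0.0, 1.0),
    "woman": (0.0, -1.0),
    "king": (1.0, 1.0),
    "queen": (1.0, -1.0),
    "apple": (-1.0, 0.0),
}


def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def complete_analogy(a, b, c, embeddings=EMBEDDINGS):
    """Return the word d that best completes 'a is to b as c is to d'."""
    # The target point is b - a + c, computed component by component.
    target = tuple(
        vb - va + vc
        for va, vb, vc in zip(embeddings[a], embeddings[b], embeddings[c])
    )
    # The three query words are excluded from the candidates.
    candidates = [w for w in embeddings if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(embeddings[w], target))


print(complete_analogy("man", "king", "woman"))  # queen
```

The same arithmetic that returns ‘queen’ here would return ‘nurse’ if the learned vectors placed ‘doctor’ and ‘nurse’ apart along the gender axis, which is how a biased corpus surfaces as a biased analogy.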

A few studies have assessed the effects of gender bias in speech with respect to emotion (Leavy, 2018). Emotion in the field of Artificial Intelligence has come to play a primary role in the future of work, in marketing and in almost every industry. In humans, this bias occurs when a person misinterprets the emotions of one demographic category more frequently than those of another. A similar bias has now been observed in machines, in the way they misclassify information related to emotions (Daugherty, Wilson & Chowdhury, 2018). To understand why this happens, and how it can be fixed, attention must first be given to the causes of gender bias in Artificial Intelligence.

Causes of AI bias

In the context of machine learning, the term bias can refer to a higher level of error for certain demographic categories. Because there is no single root cause of this type of bias, there are multiple variables that researchers in the field should consider when developing and training machine learning models, including the following factors:

An incomplete or skewed training dataset: this occurs when demographic categories are missing from the data used for training (Coeckelbergh, 2019). Models developed with such a dataset may fail to scale properly when they are applied to new data containing the missing categories. For instance, if female speakers make up only 10% of the training data, the trained model is more likely to produce errors when it is applied to female speakers.

Training labels: the majority of commercial AI systems use supervised machine learning, meaning that the training data is labelled in order to teach the model how to behave. Most often, humans produce these labels, and their biases can be unintentionally encoded into the resulting machine learning models (Vachovsky, 2016). Since machine learning models are designed to estimate these labels, the result can be misclassification of, and unfairness towards, a particular gender category, giving rise to gender bias in Artificial Intelligence.

Modelling techniques and features: the measurements used as inputs to machine learning models, or the modelling itself, can also introduce gender bias. For example, over a period of decades, text-to-speech and speech recognition technology has performed more poorly for female speakers than for male speakers. This has been attributed to the fact that the way speech is analysed and modelled is more accurate for taller speakers with longer vocal cords and lower-pitched voices (Sutko, 2019). As a result, speech technology has been most accurate for speakers with these characteristics, who are usually male, and less accurate for speakers with higher-pitched voices, who are usually female.

Practices to avoid the occurrence of gender bias

As with most things in real life, the causes of and solutions to bias in Artificial Intelligence are not black and white (Bellamy et al., 2019). However, fairness itself, including fairness with respect to skin tone, must be quantified to help mitigate the effects that give rise to gender bias in AI systems. For executives who are interested in tapping the power of AI but are concerned about bias, it is important to ensure that their machine learning teams follow these practices:

Ensuring diversity in the training samples.

Ensuring that the humans labelling the audio samples come from diverse backgrounds (Rocha, Carneiro & Novais, 2019).

Encouraging machine learning teams to measure accuracy levels separately for the different demographic categories and to identify the scenarios in which one category is treated unfavourably.

Addressing unfairness by collecting more training data associated with sensitive groups. Beyond that, modern machine learning de-biasing techniques offer ways to penalize not just errors in recognizing the primary variable (Noriega, 2019), but also to apply additional penalties for producing unfairness.
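The practice of measuring accuracy separately for each demographic category can be sketched as follows. This is a minimal illustration, assuming evaluation results are available as (group, true label, predicted label) triples; the function name `accuracy_by_group` and the sample records are hypothetical and invented for the example.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy per group.

    records: iterable of (group, true_label, predicted_label) triples.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        if truth == predicted:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Hypothetical emotion-classification results, invented for illustration.
records = [
    ("female", "happy", "happy"),
    ("female", "angry", "happy"),
    ("male", "happy", "happy"),
    ("male", "angry", "angry"),
]

scores = accuracy_by_group(records)
# The gap between the best and worst served group is one simple way
# to quantify unfavourable treatment.
gap = max(scores.values()) - min(scores.values())
print(scores, gap)
```

An overall accuracy of 75% would hide the fact that one group is served at 50% and the other at 100%, which is why the per-group breakdown matters.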

Examining these causes and solutions is an important first step, but a number of questions remain unanswered. Beyond machine learning training, the industry needs to develop a more holistic approach that addresses the three primary causes of bias described above. In addition, future research should consider data with a broader representation of gender variants, such as transgender and non-binary people (Buolamwini & Gebru, 2018). This will help to build a better understanding of how to handle expanding diversity.

There is an obligation to create technology that is effective and fair for every individual. There is a shared belief that the benefits of artificial intelligence will outweigh the risks, provided that those risks are addressed collectively. Hence, practitioners and leaders in the field of Artificial Intelligence have a responsibility to collaborate, carry out research and develop solutions to this problem.

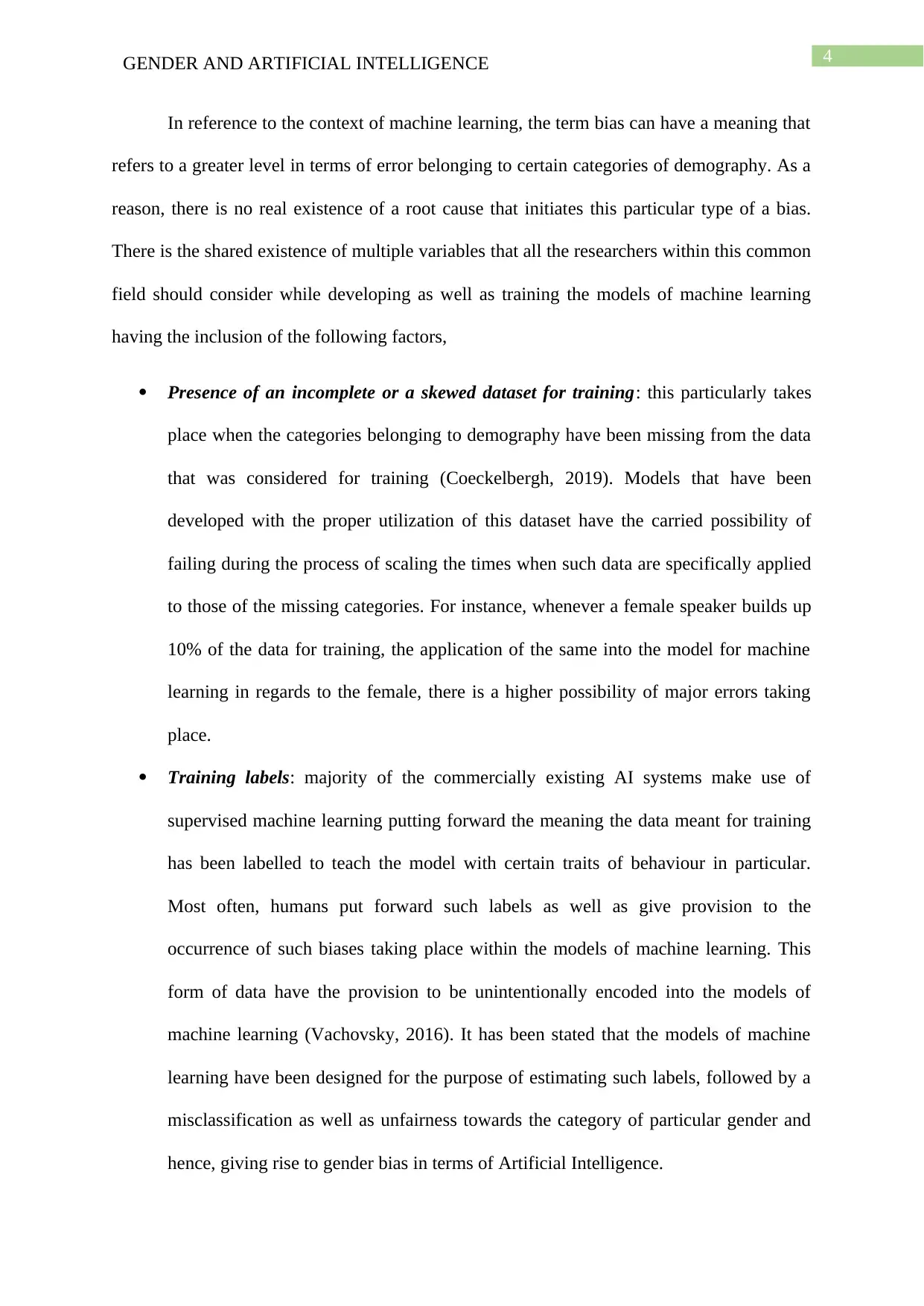

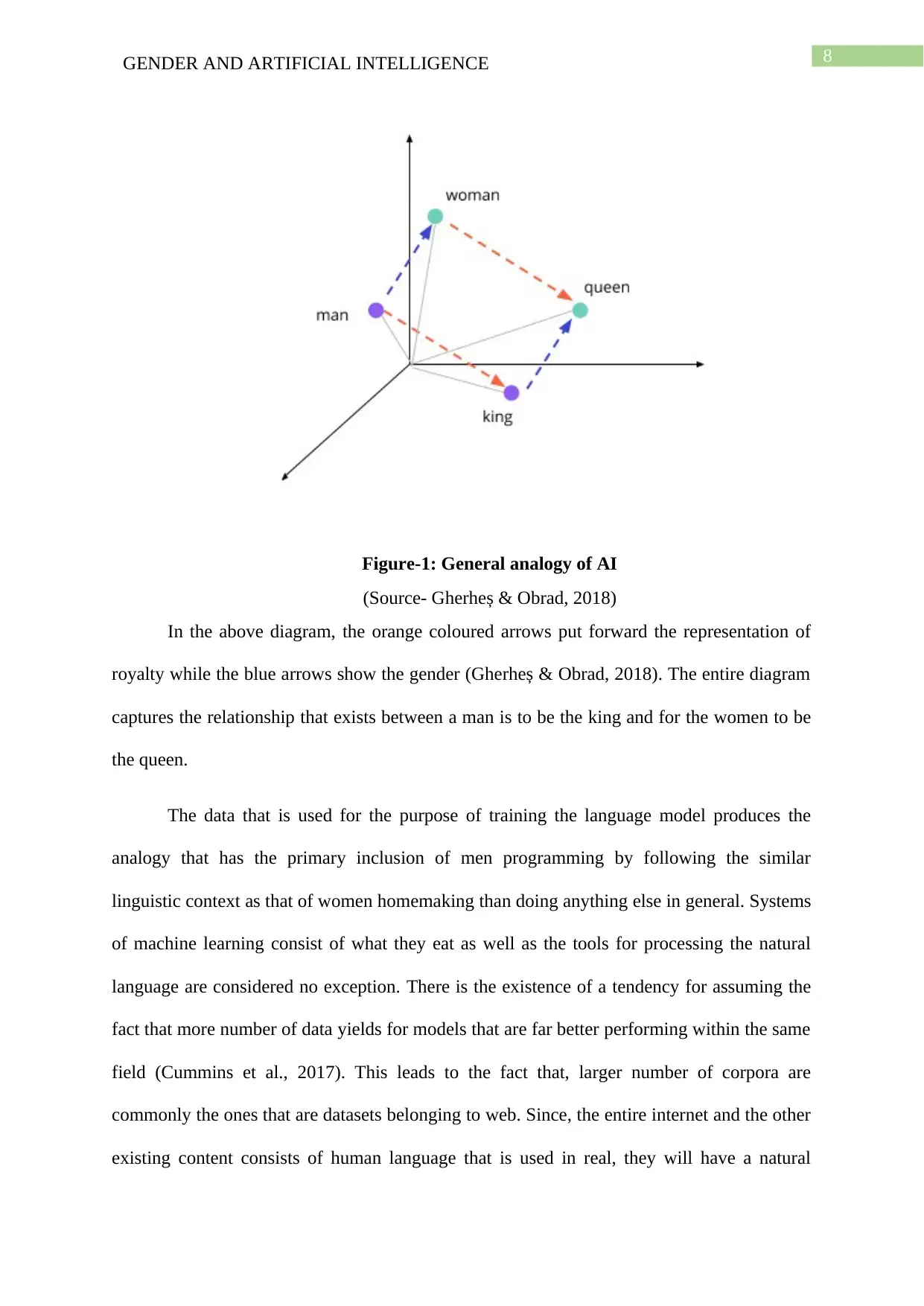

To better understand how this happens, it is necessary to understand how a computer program works and the methods it follows to produce its output (da Costa, 2018). These systems learn from every word provided to them in the course of training on data made up of millions of lines of text.

Since word embeddings are numbers, they can be visualized as coordinates in a plane, and the relationship between two words can be measured by the distance between them or, more specifically, by the angle between them.
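The distance and angle mentioned above can be computed directly from the coordinates. The sketch below assumes two hypothetical points in a plane, invented for illustration; real embeddings have many more dimensions, but the same formulas apply.

```python
import math

# Hypothetical 2-D coordinates for two words, invented for illustration.
king = (3.0, 4.0)
queen = (4.0, 3.0)


def distance(u, v):
    """Euclidean (straight-line) distance between two points."""
    return math.dist(u, v)


def angle_degrees(u, v):
    """Angle between two vectors drawn from the origin, in degrees."""
    dot = sum(a * b for a, b in zip(u, v))
    cos_theta = dot / (math.hypot(*u) * math.hypot(*v))
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


print(distance(king, queen))
print(angle_degrees(king, queen))
```

A small angle means the two vectors point in nearly the same direction, which is the sense in which related words are said to be close.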

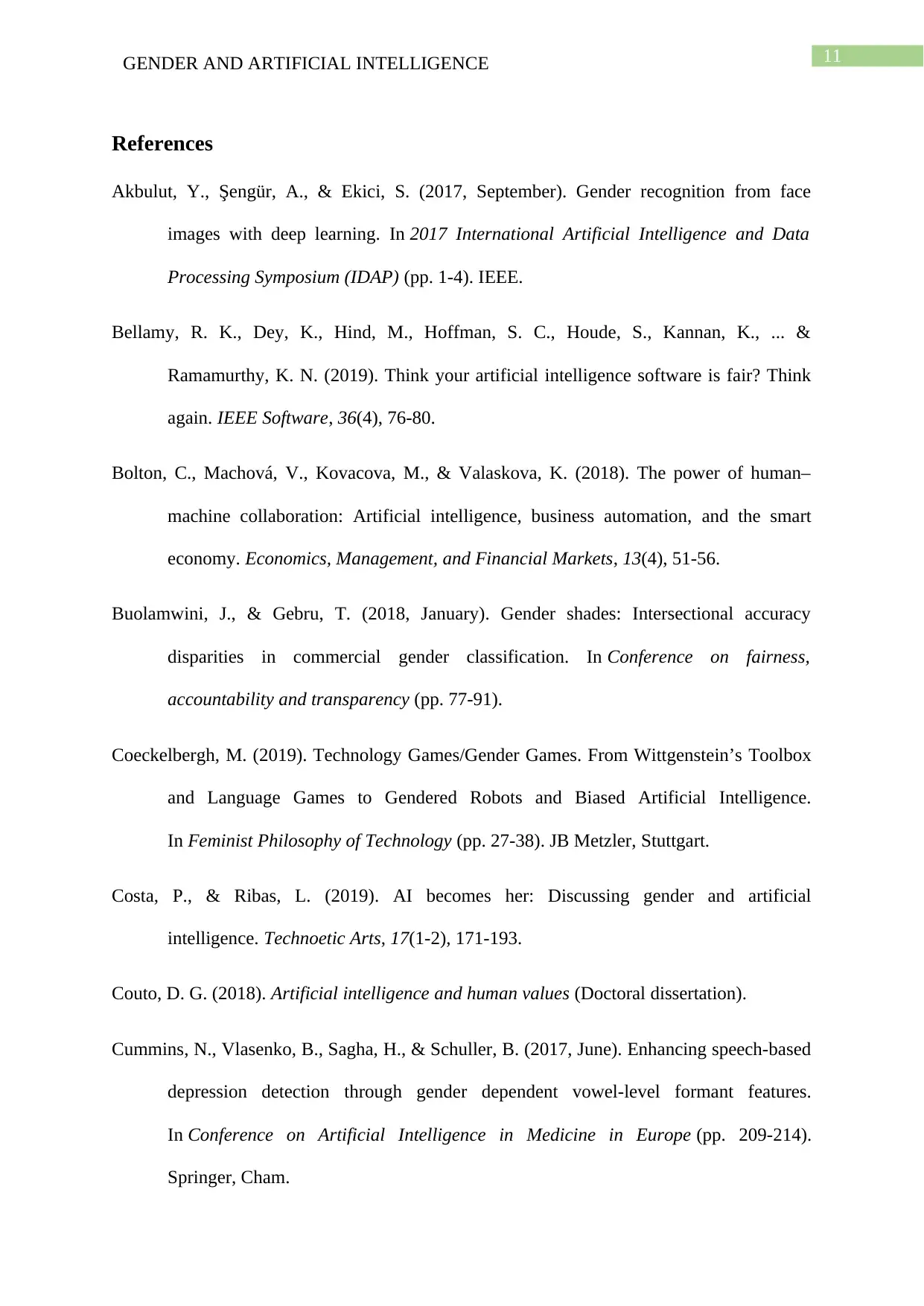

Figure 1: General analogy of AI

(Source: Gherheș & Obrad, 2018)

In the above diagram, the orange arrows represent royalty while the blue arrows represent gender (Gherheș & Obrad, 2018). The diagram as a whole captures the relationship ‘man is to king as woman is to queen’.

The data used to train a language model can also produce analogies in which men are associated with programming in the same linguistic contexts in which women are associated with homemaking. Machine learning systems are what they eat, and natural language processing tools are no exception. There is a tendency to assume that more data yields better-performing models (Cummins et al., 2017). As a result, the largest corpora are commonly datasets drawn from the web. Since the internet and other such content consist of real human language, they naturally carry the same biases that humans hold, often without noticing them.

To remove gender bias from machine learning systems, the training data needs to change. If research shows that machines learn bias from the data fed into them, then that data must be de-biased. One method that has been highlighted is ‘gender-swapping’ (Bolton et al., 2018). In this method, the training data is augmented so that for every original sentence an additional sentence is created in which the pronouns and gendered words are replaced with those of the opposite gender. Names are also substituted with entity placeholders.
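The gender-swapping augmentation described above can be sketched as follows. This is a simplified illustration, not the cited authors' implementation: the swap table `SWAPS`, the name list `NAMES` and the placeholder `ENTITY` are all hypothetical, and ambiguous words such as ‘her’ (which can correspond to ‘him’ or ‘his’) are deliberately left out because they need part-of-speech information to swap correctly. As a further simplification, names are replaced only in the swapped copy.

```python
import re

# Small illustrative swap table; a realistic one would be far larger.
SWAPS = {
    "he": "she", "she": "he",
    "himself": "herself", "herself": "himself",
    "man": "woman", "woman": "man",
    "father": "mother", "mother": "father",
}
# Hypothetical list of first names to anonymize.
NAMES = {"john", "mary"}
PLACEHOLDER = "ENTITY"


def swap_gender(sentence):
    """Return a copy of the sentence with gendered words swapped."""
    def replace(match):
        word = match.group(0)
        lower = word.lower()
        if lower in NAMES:
            return PLACEHOLDER
        swapped = SWAPS.get(lower)
        if swapped is None:
            return word
        # Preserve the capitalization of the original word.
        return swapped.capitalize() if word[0].isupper() else swapped

    return re.sub(r"[A-Za-z]+", replace, sentence)


def augment(corpus):
    """Keep every original sentence and add its gender-swapped copy."""
    augmented = []
    for sentence in corpus:
        augmented.append(sentence)
        augmented.append(swap_gender(sentence))
    return augmented


print(augment(["He said the father saw Mary."]))
```

After augmentation, each gendered context appears equally often with both genders, so the model has less opportunity to learn the association from frequency alone.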

However, it should be noted that this approach is straightforward only in English, a language without grammatical gender. In other languages, swapping pronouns alone is not sufficient (Shah & Warwick, 2016), because adjectives and other modifiers express gender as well. For instance, Romance languages such as French, Spanish and Portuguese have grammatical gender, so these modifiers must change along with the noun or pronoun.

A different method, applicable to machine translation, helps translations to be more gender-accurate by adding metadata to each sentence that stores the gender of its subject (Michael, Garcia-Souto & Dabnichki, 2017). Once training is complete, a translation request supplied with the desired gender tag should return the translation with the correct gender, and not simply the majority gender.

For instance, if a Hungarian-English system had been trained in this way, it could be asked to translate “Ő egy orvos” and return “She is a doctor”, or to translate “Ő egy nővér” and return “He is a nurse” (Akbulut, Şengür & Ekici, 2017). The Hungarian pronoun “ő” is gender-neutral, so the tag is what determines the English pronoun. To do this at scale, a separate model would need to be trained to classify the gender of a sentence, and that model would then be used to tag all of the sentences. This adds a further layer of complexity.
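The tagging step described above can be sketched as follows. This is a rough illustration under stated assumptions: a keyword lookup stands in for the separately trained gender classifier, the tag format (`<F>`, `<M>`, `<U>`) is hypothetical, and no actual translation model is involved. The sketch shows only how training pairs might be annotated before training.

```python
# Keyword lists standing in for a trained gender classifier (assumption).
FEMALE_WORDS = {"she", "her", "hers"}
MALE_WORDS = {"he", "him", "his"}


def classify_gender(english_sentence):
    """Return 'F', 'M' or 'U' (unknown) for an English sentence."""
    tokens = {t.strip(".,!?").lower() for t in english_sentence.split()}
    has_female = bool(tokens & FEMALE_WORDS)
    has_male = bool(tokens & MALE_WORDS)
    if has_female and not has_male:
        return "F"
    if has_male and not has_female:
        return "M"
    return "U"


def tag_pair(source, target):
    """Prefix the source sentence with the gender found in the target."""
    return f"<{classify_gender(target)}> {source}", target


# Hypothetical Hungarian-English training pairs.
pairs = [
    ("Ő egy orvos.", "She is a doctor."),
    ("Ő egy nővér.", "He is a nurse."),
]
for source, target in pairs:
    print(tag_pair(source, target))
```

At translation time the requester would supply the same kind of tag with the source sentence, so the model can choose the English pronoun from the tag rather than from the majority pattern in its training data.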

All of these methods are considered effective at reducing gender bias in NLP models. However, they are complex and time-consuming to implement (Couto, 2018), because they require additional linguistic information that may not be readily available or easy to obtain for use in machine learning models.

References

Akbulut, Y., Şengür, A., & Ekici, S. (2017, September). Gender recognition from face

images with deep learning. In 2017 International Artificial Intelligence and Data

Processing Symposium (IDAP) (pp. 1-4). IEEE.

Bellamy, R. K., Dey, K., Hind, M., Hoffman, S. C., Houde, S., Kannan, K., ... &

Ramamurthy, K. N. (2019). Think your artificial intelligence software is fair? Think

again. IEEE Software, 36(4), 76-80.

Bolton, C., Machová, V., Kovacova, M., & Valaskova, K. (2018). The power of human–

machine collaboration: Artificial intelligence, business automation, and the smart

economy. Economics, Management, and Financial Markets, 13(4), 51-56.

Buolamwini, J., & Gebru, T. (2018, January). Gender shades: Intersectional accuracy

disparities in commercial gender classification. In Conference on fairness,

accountability and transparency (pp. 77-91).

Coeckelbergh, M. (2019). Technology Games/Gender Games. From Wittgenstein’s Toolbox

and Language Games to Gendered Robots and Biased Artificial Intelligence.

In Feminist Philosophy of Technology (pp. 27-38). JB Metzler, Stuttgart.

Costa, P., & Ribas, L. (2019). AI becomes her: Discussing gender and artificial

intelligence. Technoetic Arts, 17(1-2), 171-193.

Couto, D. G. (2018). Artificial intelligence and human values (Doctoral dissertation).

Cummins, N., Vlasenko, B., Sagha, H., & Schuller, B. (2017, June). Enhancing speech-based

depression detection through gender dependent vowel-level formant features.

In Conference on Artificial Intelligence in Medicine in Europe (pp. 209-214).

Springer, Cham.

da Costa, P. C. F. (2018). Conversing with personal digital assistants: on gender and artificial

intelligence. Journal of Science and Technology of the Arts, 10(3), 2-59.

Daugherty, P., Wilson, H., & Chowdhury, R. (2018). Using artificial intelligence to promote

diversity. MIT Sloan Management Review.

Gherheș, V., & Obrad, C. (2018). Technical and Humanities Students’ Perspectives on the

Development and Sustainability of Artificial Intelligence (AI). Sustainability, 10(9),

3066.

Kim, A., Cho, M., Ahn, J., & Sung, Y. (2019). Effects of Gender and Relationship Type on

the Response to Artificial Intelligence. Cyberpsychology, Behavior, and Social

Networking, 22(4), 249-253.

Leavy, S. (2018, May). Gender bias in artificial intelligence: The need for diversity and

gender theory in machine learning. In Proceedings of the 1st international workshop

on gender equality in software engineering (pp. 14-16).

Michael, K., Garcia-Souto, M. P., & Dabnichki, P. (2017). An investigation of the suitability

of Artificial Neural Networks for the prediction of core and local skin temperatures

when trained with a large and gender-balanced database. Applied Soft Computing, 50,

327-343.

Noriega, M. N. (2019). The Application of Artificial Intelligence in Police Interrogations An

Analysis Addressing the Proposed Effect AI Has on Racial and Gender Bias,

Cooperation, and False Confessions (Master's thesis).

Rocha, R., Carneiro, D., & Novais, P. (2019, September). The Influence of Age and Gender

in the Interaction with Touch Screens. In EPIA Conference on Artificial

Intelligence (pp. 3-12). Springer, Cham.

Shah, H., & Warwick, K. (2016). Imitating Gender as a Measure for Artificial Intelligence:-Is

It Necessary?. In ICAART (1) (pp. 126-131).

Smith, M., & Neupane, S. (2018). Artificial intelligence and human development: toward a

research agenda.

Sutko, D. M. (2019). Theorizing femininity in artificial intelligence: a framework for undoing

technology’s gender troubles. Cultural Studies, 1-26.

Vachovsky, M. E., Wu, G., Chaturapruek, S., Russakovsky, O., Sommer, R., & Fei-Fei, L.

(2016, February). Toward more gender diversity in CS through an artificial

intelligence summer program for high school girls. In Proceedings of the 47th ACM

Technical Symposium on Computing Science Education (pp. 303-308).
