IMAT5209 - Human Factors: Usability Evaluation of a Fall Risk App

Added on 2023/04/11

This report presents a usability evaluation of a smartphone application designed to detect fall risk in older adults, a component of the WIISEL (Wireless Insole for Independent and Safe Elderly Living) system. The evaluation employs a human-centered design approach, adhering to ISO 9241-210 standards, to ensure user needs are central to the design process. The methodology involves use case development, expert analysis, and end-user analysis to identify usability problems and propose solutions. The study details the architecture of the fall risk detection system, the challenges faced by older adults when interacting with technology, and the specific scenarios where users interact with the smartphone interface. The evaluation incorporates feedback from experts in gerontology, nursing, and engineering, as well as older adults, to refine the app's design and improve its usability. The iterative process includes the development of paper prototypes and working prototypes, with continuous feedback integration to enhance the user experience and effectiveness of the fall risk detection system.

Running head: HUMAN FACTORS IN SYSTEM DESIGN

Human Factors in System Design: Usability of Phone App Detecting Health

Fall Risk Detection System for Adult Users

Name of Student-

Name of University-

Author’s Note-


Part One: Interactive System and its Users

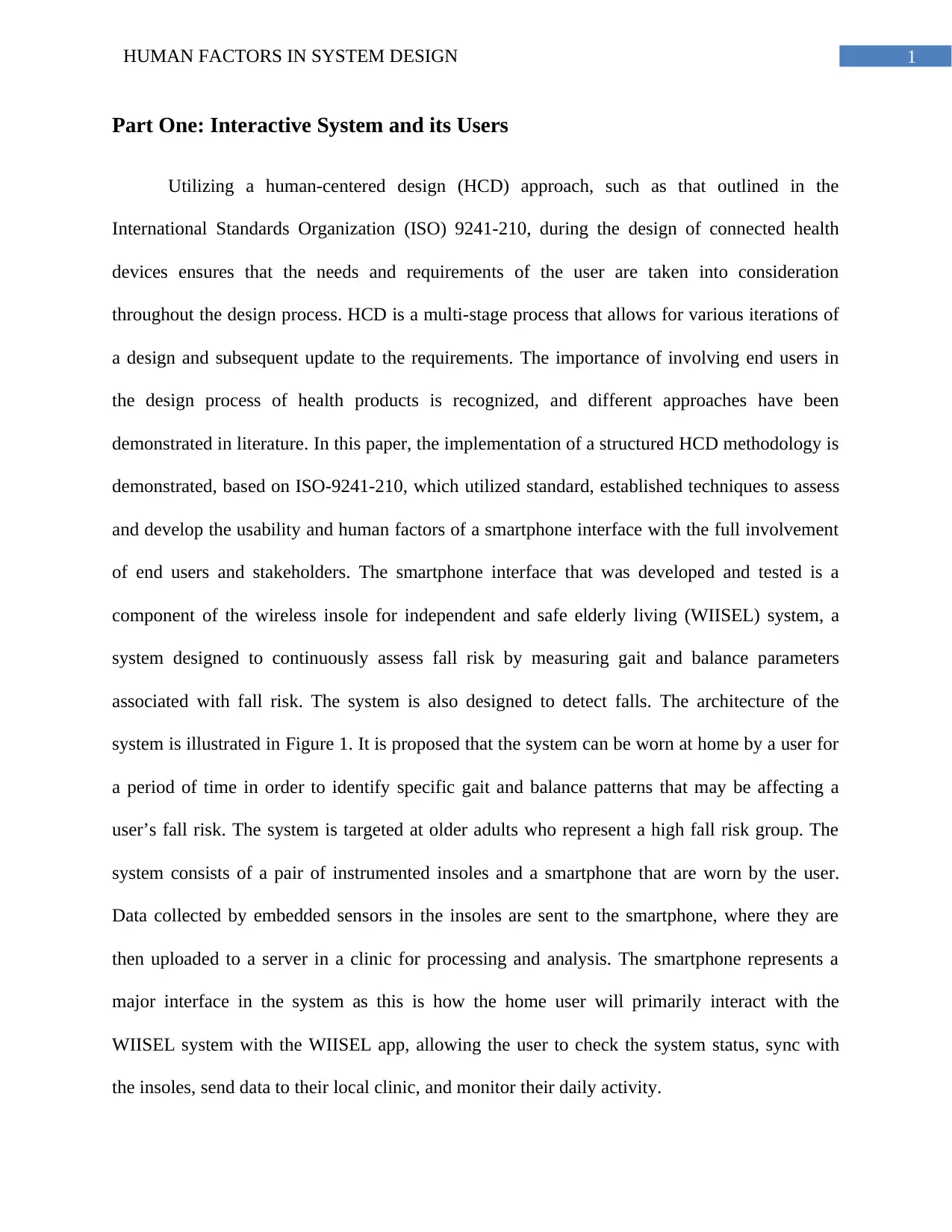

Utilizing a human-centered design (HCD) approach, such as that outlined in International Organization for Standardization (ISO) standard 9241-210, during the design of connected health devices ensures that the needs and requirements of the user are taken into consideration throughout the design process. HCD is a multi-stage process that allows a design to go through several iterations, with the requirements updated after each one. The importance of involving end users in the design of health products is widely recognized, and different approaches to doing so have been demonstrated in the literature. This paper demonstrates the implementation of a structured HCD methodology, based on ISO 9241-210, that used standard, established techniques to assess and develop the usability and human factors of a smartphone interface with the full involvement of end users and stakeholders.

The smartphone interface that was developed and tested is a component of the Wireless Insole for Independent and Safe Elderly Living (WIISEL) system, which is designed to continuously assess fall risk by measuring gait and balance parameters associated with fall risk, and also to detect falls. The architecture of the system is illustrated in Figure 1. It is proposed that the system be worn at home by a user for a period of time in order to identify specific gait and balance patterns that may be affecting that user's fall risk. The system is targeted at older adults, who represent a high fall risk group. It consists of a pair of instrumented insoles and a smartphone, both worn by the user. Data collected by sensors embedded in the insoles are sent to the smartphone and then uploaded to a server in a clinic for processing and analysis. The smartphone is therefore the main interface of the system: the home user interacts with WIISEL primarily through the WIISEL app, which allows them to check the system status, sync the phone with the insoles, send data to their local clinic, and monitor their daily activity.
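The data flow described above (insoles to smartphone to clinic server) can be sketched as a small Python model. This is a minimal illustration under stated assumptions, not the WIISEL implementation: the class names, fields, and methods (`InsoleSample`, `PhoneApp`, `ClinicServer`, `sync_with_insoles`, `upload_to_clinic`, `status`) are hypothetical, and real transport such as wireless pairing and network upload is not modelled.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class InsoleSample:
    """One reading from an instrumented insole (hypothetical, illustrative fields)."""
    timestamp_s: float
    foot: str            # "left" or "right"
    sensor_value: float  # placeholder for whatever the embedded sensors report


@dataclass
class ClinicServer:
    """Stand-in for the clinic server that processes and analyses uploaded data."""
    received: List[InsoleSample] = field(default_factory=list)

    def receive(self, batch: List[InsoleSample]) -> int:
        self.received.extend(batch)
        return len(batch)


@dataclass
class PhoneApp:
    """Hypothetical model of the smartphone as the relay between insoles and clinic."""
    pending: List[InsoleSample] = field(default_factory=list)
    synced: bool = False

    def sync_with_insoles(self, samples: List[InsoleSample]) -> None:
        # Step 1: data from the insoles is buffered on the phone.
        self.pending.extend(samples)
        self.synced = True

    def upload_to_clinic(self, server: ClinicServer) -> int:
        # Step 2: buffered data is forwarded to the clinic server, then cleared locally.
        sent = server.receive(self.pending)
        self.pending = []
        return sent

    def status(self) -> dict:
        # The kind of summary a user might see when checking the system status.
        return {"synced": self.synced, "pending_samples": len(self.pending)}


if __name__ == "__main__":
    app, server = PhoneApp(), ClinicServer()
    # Illustrative values only, not study data.
    app.sync_with_insoles([InsoleSample(0.0, "left", 1.0), InsoleSample(0.0, "right", 1.1)])
    print(app.status())                  # {'synced': True, 'pending_samples': 2}
    print(app.upload_to_clinic(server))  # 2
    print(app.status())                  # {'synced': True, 'pending_samples': 0}
```

Keeping the phone as a buffer between the insoles and the clinic mirrors the architecture described in the text: the user-facing actions (sync, upload, check status) all act on the phone's local state.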


Figure 1: Architecture of the Risk Detection System

(Source: )


Acquiring and comprehending information from interfaces can become more difficult as a person progresses into older age. Interfaces in electronic health and medical apps are often crowded with text and characters, have poor contrast, use many different colors, and may not provide adequate haptic or audio feedback. In terms of visual perception, age-related declines in acuity, contrast sensitivity, and color discrimination can affect reading rates, character and symbol identification, and button-striking accuracy, even with optimal correction in place. Age-related cognitive decline in domains such as reasoning and memory can affect the user's ability to comprehend the process they are perceiving on the interface, and deterioration of psychomotor processes such as fine motor control and dexterity can cause problems for users interacting with the physical hardware of the interface. Typically, between the ages of 60 and 80 years, individuals can expect up to a 50% decline in visual acuity (particularly in low-luminance, low-contrast, and high-glare environments), a 20 dB reduction in hearing sensitivity, a 14% decline in short-term memory, and a 30% decline in power grip strength, all of which affect how they interact with computer interfaces. Beyond these physical and cognitive changes, older adults are also a complex and varied user group in terms of their attitudes toward, and previous experience with, technology.
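The 20 dB figure is easier to interpret on a linear scale. Using the standard decibel definitions, in terms of sound intensity $I$ and sound pressure $p$, a 20 dB loss in sensitivity corresponds to:

```latex
\Delta L = 10\log_{10}\frac{I_2}{I_1} = 20~\text{dB}
  \quad\Longrightarrow\quad \frac{I_2}{I_1} = 10^{20/10} = 100,
\qquad
\Delta L = 20\log_{10}\frac{p_2}{p_1} = 20~\text{dB}
  \quad\Longrightarrow\quad \frac{p_2}{p_1} = 10^{20/20} = 10.
```

Under the simplifying assumption that the loss is uniform across frequencies, an audio cue such as a fall alarm tone would need roughly 100 times the acoustic intensity (10 times the sound pressure) to remain just audible to such a user.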

Part Two: Use Cases

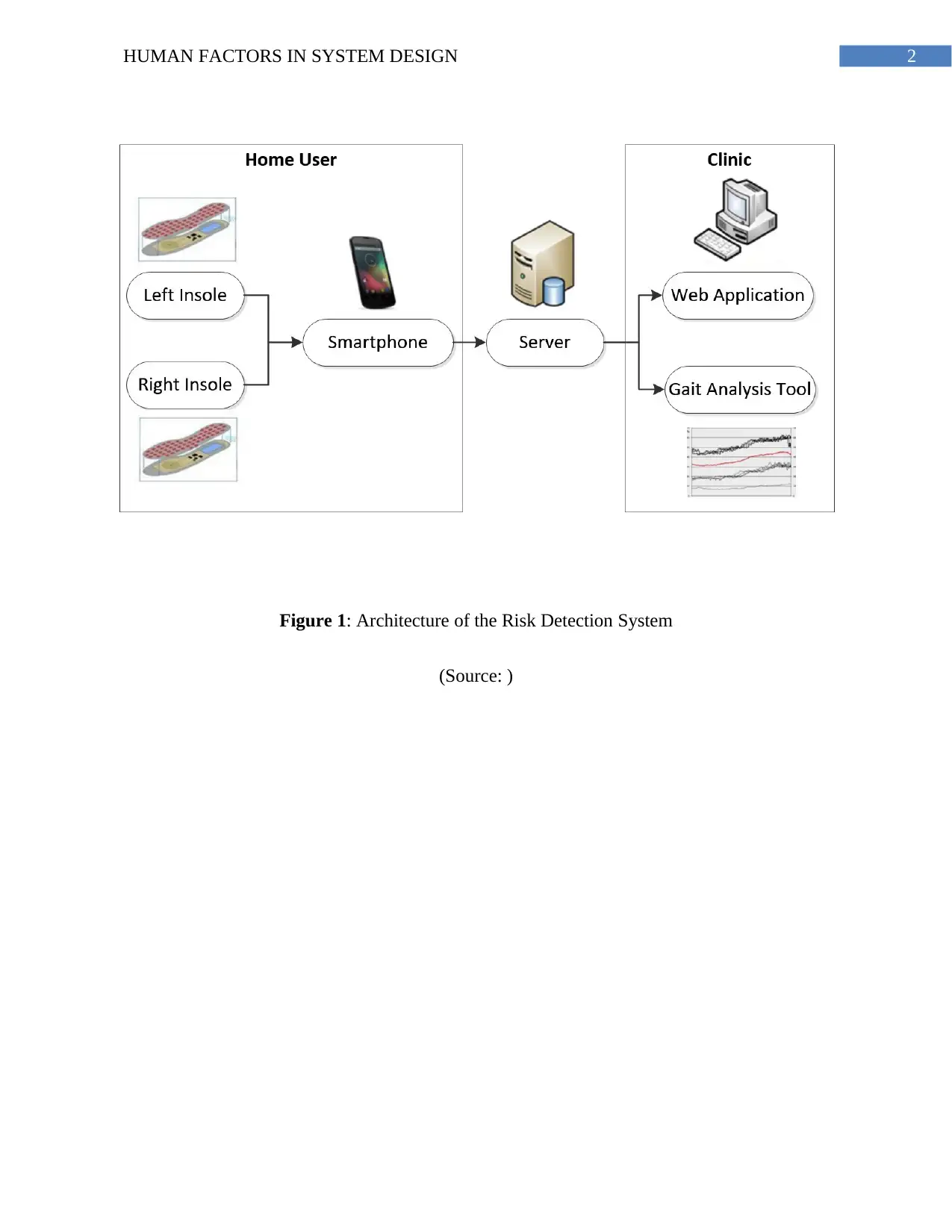

The use case document outlined seven scenarios in which the user must interact directly with the smartphone interface: (1) the user logs in to the app, (2) the user syncs the app with the insoles, (3) the user checks the system status, (4) the user uploads the data, (5) the user minimizes the app, (6) the user resets the app, and (7) the user triggers a fall alarm. This use case, termed paper prototype version 1, was presented to two groups of stakeholders for structured analysis in order to elicit their feedback.
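The seven scenarios and the two stakeholder groups lend themselves to a simple structured representation, for example when preparing walkthrough materials. The sketch below is illustrative only: the enum member names, `STAKEHOLDER_GROUPS`, and `walkthrough_plan` are hypothetical identifiers, and the two group labels follow the expert and end-user representative analyses described in this report.

```python
from enum import IntEnum
from itertools import product


class Scenario(IntEnum):
    """The seven use-case scenarios, numbered as in the use case document.

    The member names are hypothetical identifiers chosen for this sketch.
    """
    LOG_IN = 1
    SYNC_WITH_INSOLES = 2
    CHECK_SYSTEM_STATUS = 3
    UPLOAD_DATA = 4
    MINIMIZE_APP = 5
    RESET_APP = 6
    TRIGGER_FALL_ALARM = 7


STAKEHOLDER_GROUPS = ("experts", "end-user representatives")


def walkthrough_plan(groups):
    """Pair every stakeholder group with every scenario, keeping document order."""
    return list(product(groups, Scenario))


if __name__ == "__main__":
    plan = walkthrough_plan(STAKEHOLDER_GROUPS)
    print(len(plan))                      # 14
    print(plan[0][0], plan[0][1].name)    # experts LOG_IN
    print(plan[-1][0], plan[-1][1].name)  # end-user representatives TRIGGER_FALL_ALARM
```

Using an `IntEnum` keeps each scenario tied to the number it carries in the use case document, so feedback can be recorded against the same numbering.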


Figure 2: Use Case of Clinical Decision Support Tool

(Source: Created by Author)

Part Three: The Usability Requirement

Expert Use Case Analysis

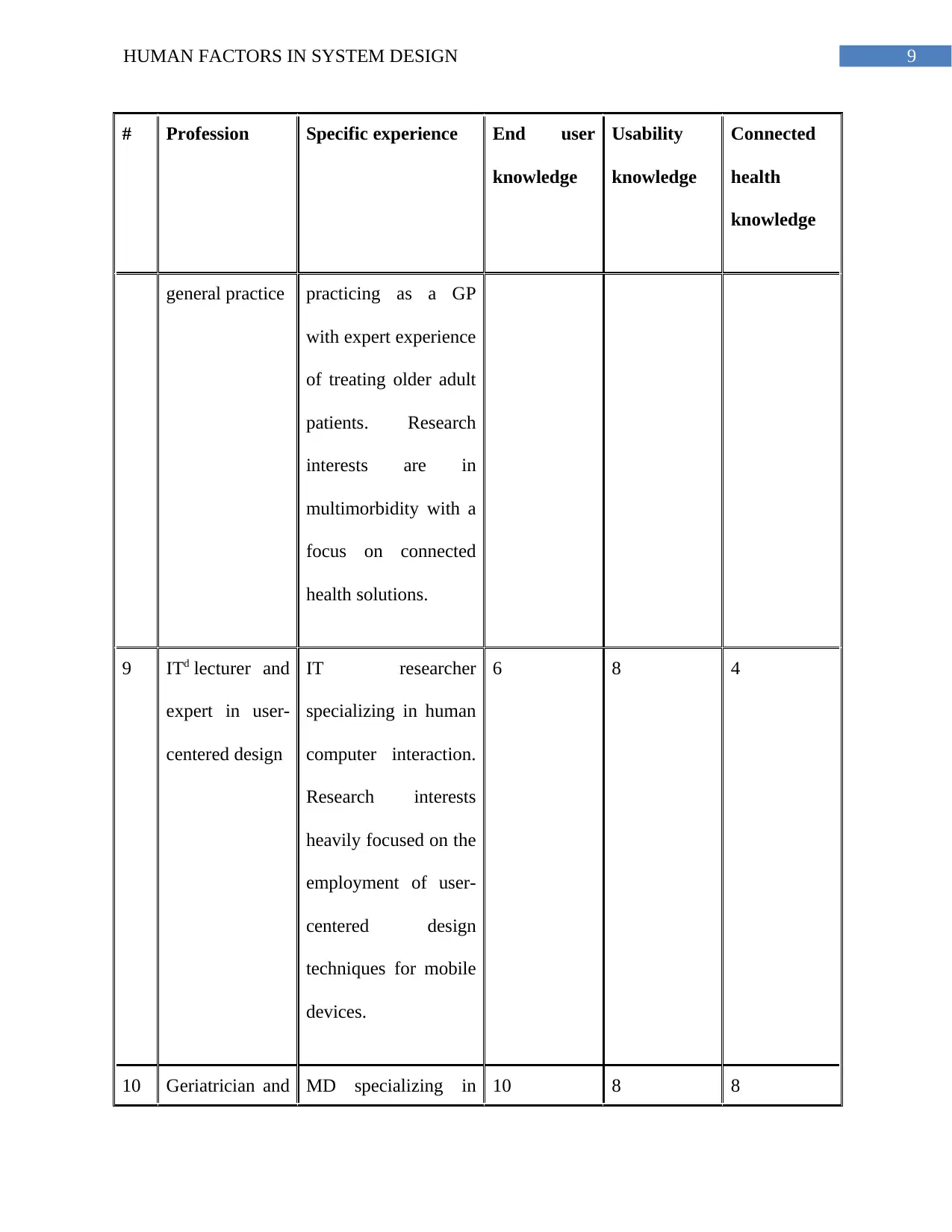

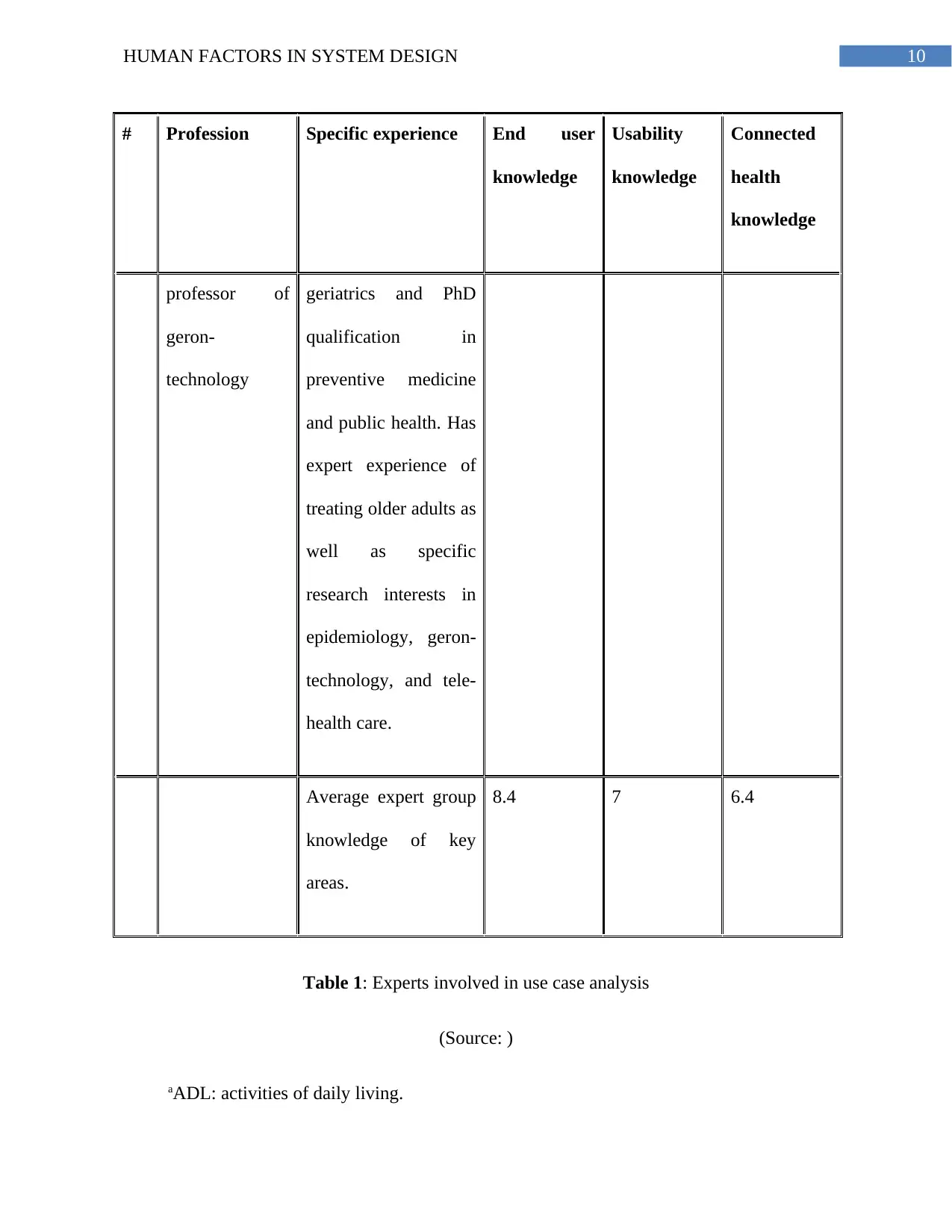

A total of 10 experts were selected to analyze the use case. The experts were selected

from National University of Ireland (NUI), Galway based on their involvement with work related

to the use of technology by older adults. There were multi-disciplinary perspectives, as advised

in ISO-92410, and therefore the group consisted of nurses, occupational therapists,

physiotherapists, general practitioners, gerontologists, and engineers. The precise expertise of

Figure 2: Use Case of Clinical Decision Support Tool

(Source: Created by Author)

Part Three: The Usability Requirement

Expert Use Case Analysis

A total of 10 experts were selected to analyze the use case. The experts were selected

from National University of Ireland (NUI), Galway based on their involvement with work related

to the use of technology by older adults. There were multi-disciplinary perspectives, as advised

in ISO-92410, and therefore the group consisted of nurses, occupational therapists,

physiotherapists, general practitioners, gerontologists, and engineers. The precise expertise of

5HUMAN FACTORS IN SYSTEM DESIGN

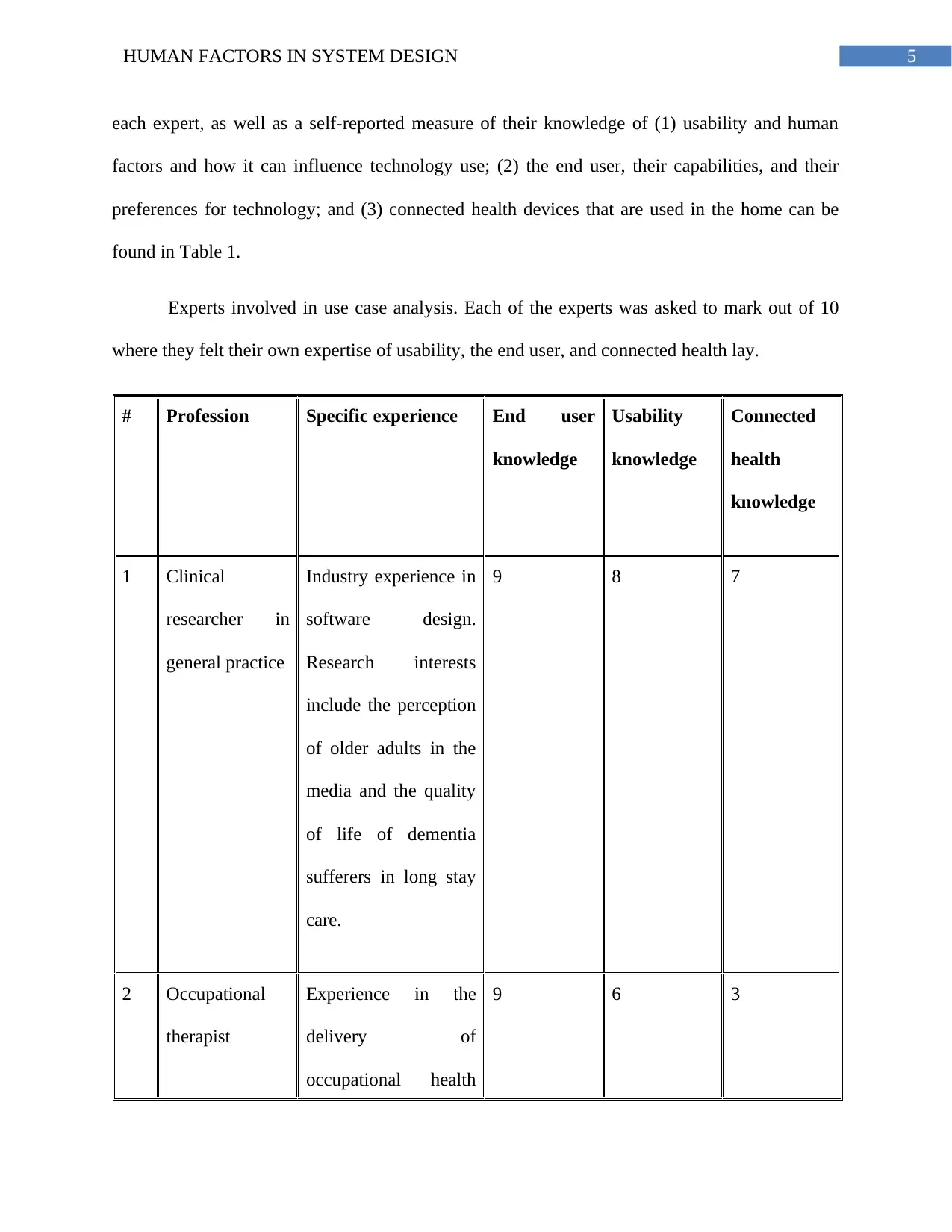

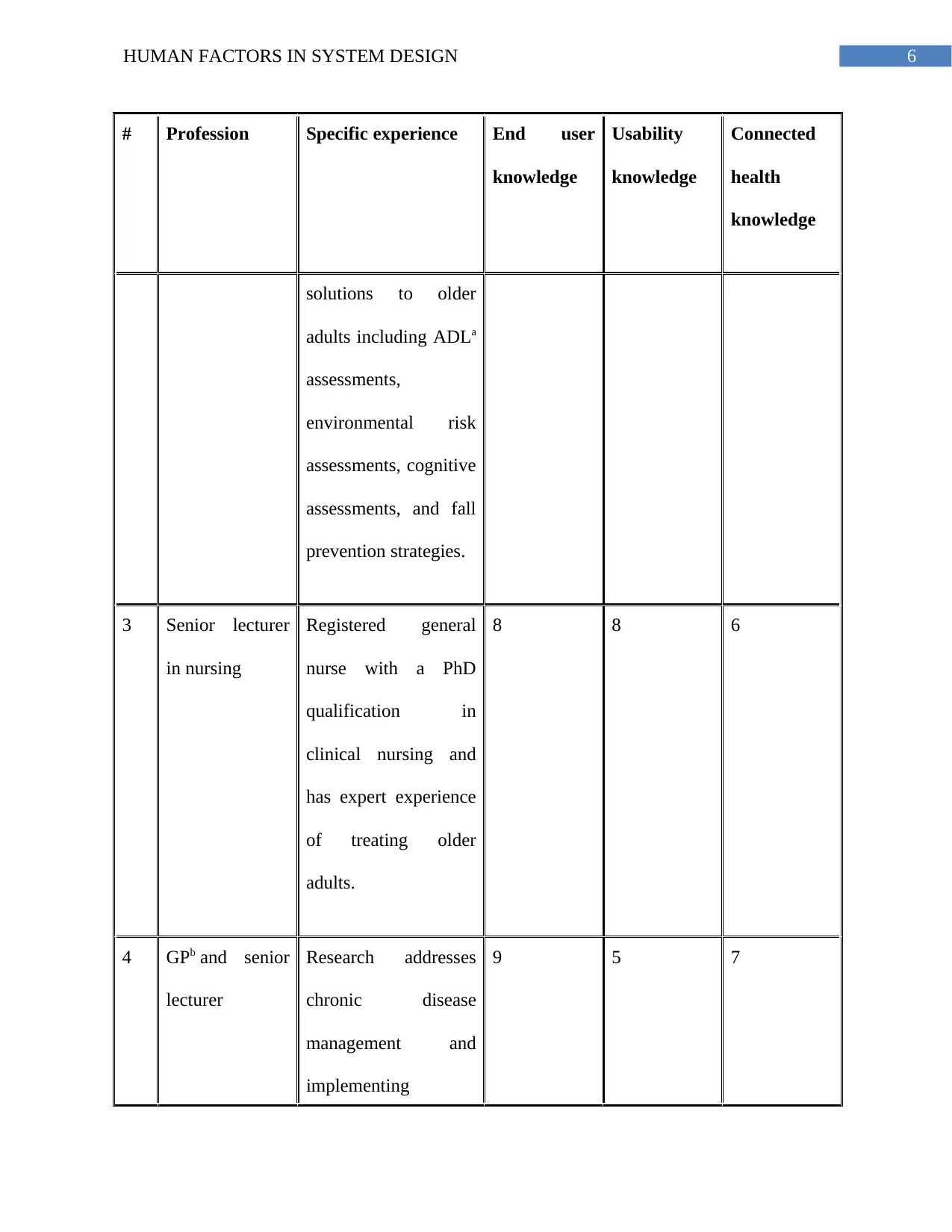

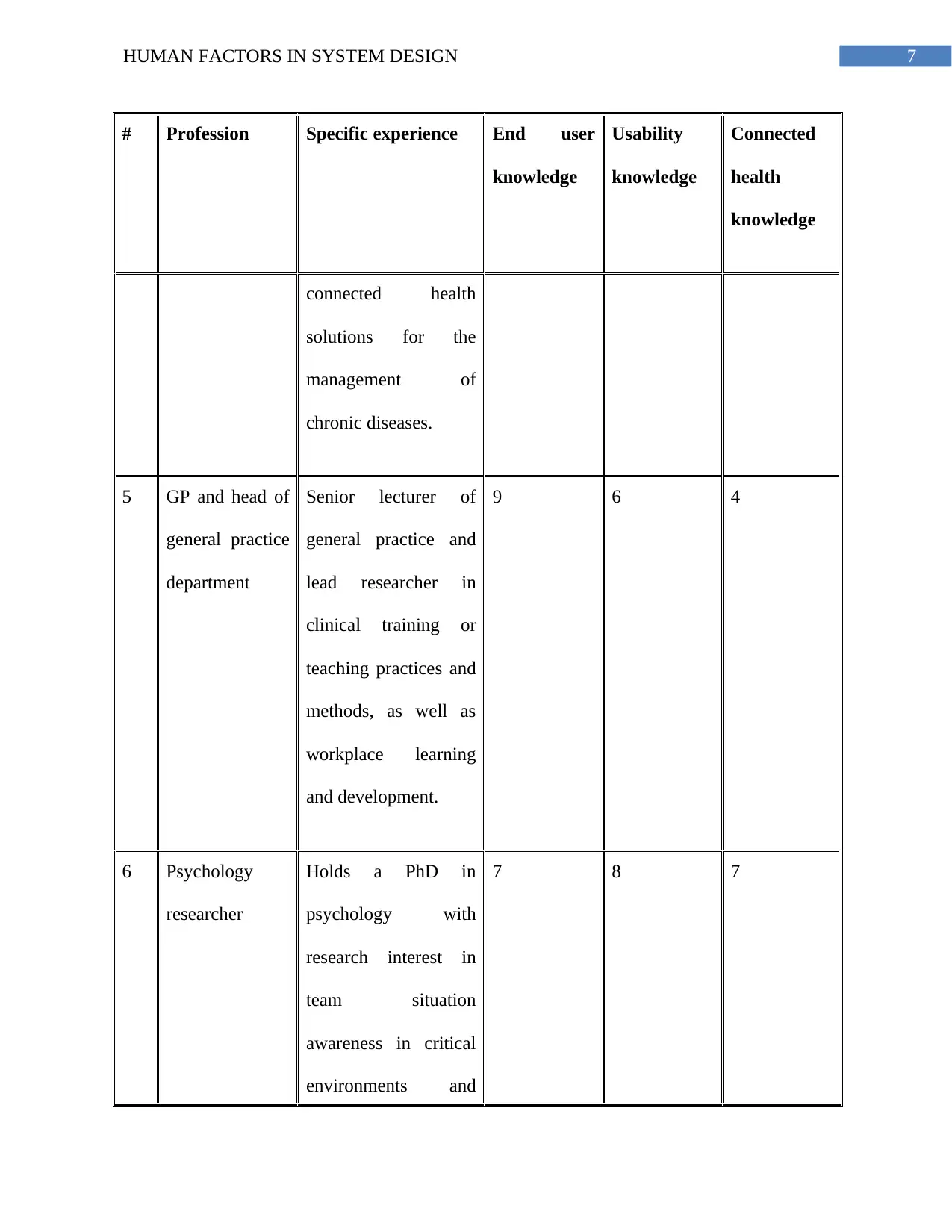

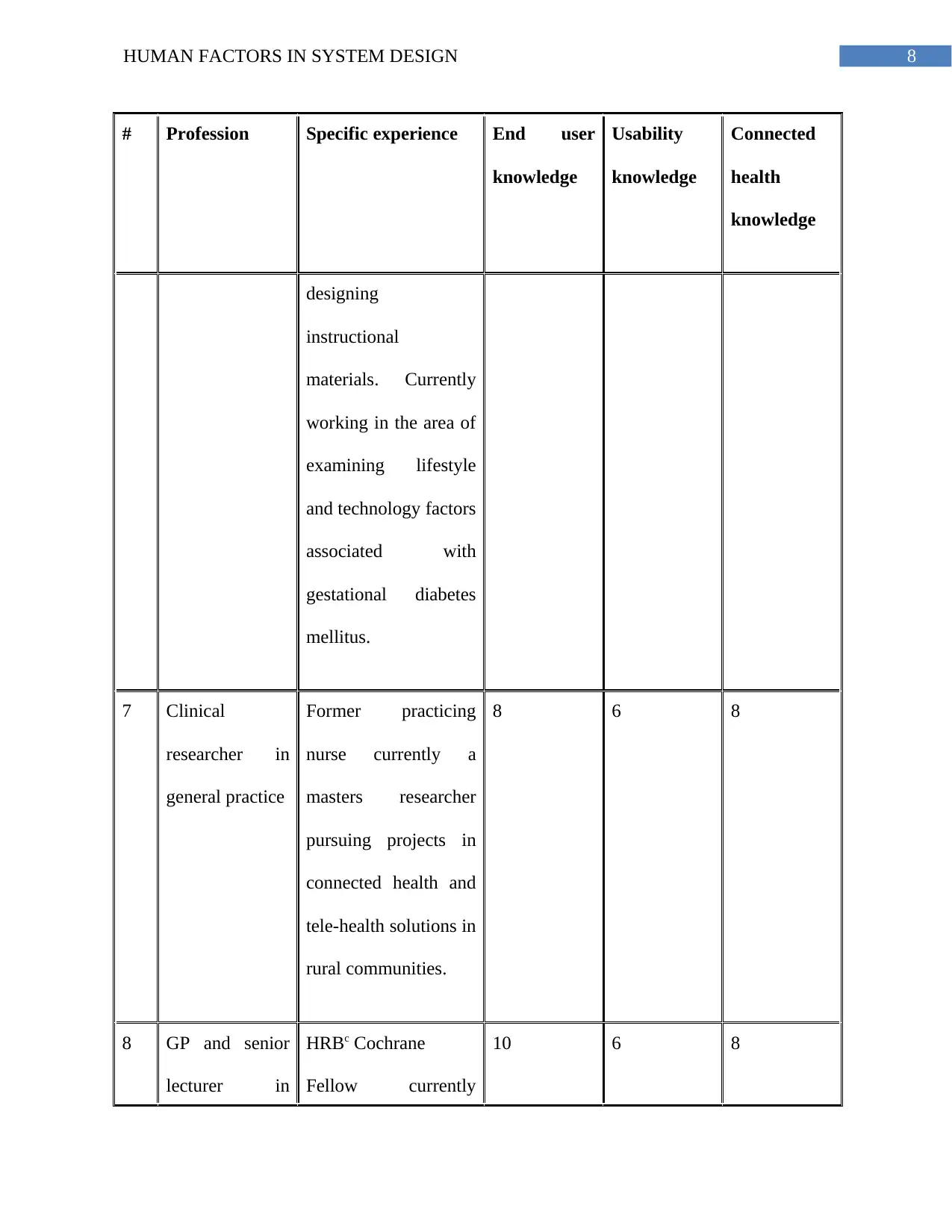

each expert, as well as a self-reported measure of their knowledge of (1) usability and human

factors and how it can influence technology use; (2) the end user, their capabilities, and their

preferences for technology; and (3) connected health devices that are used in the home can be

found in Table 1.

Experts involved in use case analysis. Each expert was asked to rate their own knowledge of the end user, of usability, and of connected health on a scale out of 10.

| # | Profession | Specific experience | End user knowledge | Usability knowledge | Connected health knowledge |
|---|---|---|---|---|---|
| 1 | Clinical researcher in general practice | Industry experience in software design. Research interests include the perception of older adults in the media and the quality of life of dementia sufferers in long-stay care. | 9 | 8 | 7 |
| 2 | Occupational therapist | Experience in the delivery of occupational health solutions to older adults, including ADL^a assessments, environmental risk assessments, cognitive assessments, and fall prevention strategies. | 9 | 6 | 3 |
| 3 | Senior lecturer in nursing | Registered general nurse with a PhD qualification in clinical nursing and expert experience of treating older adults. | 8 | 8 | 6 |
| 4 | GP^b and senior lecturer | Research addresses chronic disease management and implementing connected health solutions for the management of chronic diseases. | 9 | 5 | 7 |
| 5 | GP and head of general practice department | Senior lecturer of general practice and lead researcher in clinical training or teaching practices and methods, as well as workplace learning and development. | 9 | 6 | 4 |
| 6 | Psychology researcher | Holds a PhD in psychology with research interest in team situation awareness in critical environments and designing instructional materials. Currently working in the area of examining lifestyle and technology factors associated with gestational diabetes mellitus. | 7 | 8 | 7 |
| 7 | Clinical researcher in general practice | Former practicing nurse, currently a master's researcher pursuing projects in connected health and telehealth solutions in rural communities. | 8 | 6 | 8 |
| 8 | GP and senior lecturer in general practice | HRB^c Cochrane Fellow currently practicing as a GP with expert experience of treating older adult patients. Research interests are in multimorbidity with a focus on connected health solutions. | 10 | 6 | 8 |
| 9 | IT^d lecturer and expert in user-centered design | IT researcher specializing in human-computer interaction. Research interests heavily focused on the employment of user-centered design techniques for mobile devices. | 6 | 8 | 4 |
| 10 | Geriatrician and professor of gerontechnology | MD specializing in geriatrics and PhD qualification in preventive medicine and public health. Has expert experience of treating older adults as well as specific research interests in epidemiology, gerontechnology, and telehealth care. | 10 | 8 | 8 |
| | Average expert group knowledge of key areas | | 8.4 | 7 | 6.4 |

Table 1: Experts involved in use case analysis

(Source: )

^a ADL: activities of daily living.

^b GP: general practitioner.

^c HRB: Health Research Board.

^d IT: information technology.
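The averages in the final row of Table 1 are arithmetic means of the ten self-ratings in each column. The short Python sketch below recomputes them from the per-expert scores exactly as they appear in the rows of the table; the names `EXPERT_SCORES` and `column_means` are introduced here for illustration. Computed this way, the rows give 8.5, 6.9, and 6.2, which differ slightly from the reported 8.4, 7, and 6.4, so either an individual score in the table differs from the source data or the reported averages were derived in some other way; the sketch cannot tell which.

```python
from statistics import mean

# Self-rated knowledge scores (out of 10) copied from the rows of Table 1,
# in column order: (end user, usability, connected health).
EXPERT_SCORES = [
    (9, 8, 7),   # 1 Clinical researcher in general practice
    (9, 6, 3),   # 2 Occupational therapist
    (8, 8, 6),   # 3 Senior lecturer in nursing
    (9, 5, 7),   # 4 GP and senior lecturer
    (9, 6, 4),   # 5 GP and head of general practice department
    (7, 8, 7),   # 6 Psychology researcher
    (8, 6, 8),   # 7 Clinical researcher in general practice
    (10, 6, 8),  # 8 GP and senior lecturer in general practice
    (6, 8, 4),   # 9 IT lecturer and expert in user-centered design
    (10, 8, 8),  # 10 Geriatrician and professor of gerontechnology
]


def column_means(rows, ndigits=1):
    """Arithmetic mean of each column, rounded to `ndigits` decimal places."""
    return tuple(round(mean(column), ndigits) for column in zip(*rows))


if __name__ == "__main__":
    print(column_means(EXPERT_SCORES))  # (8.5, 6.9, 6.2)
```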

In addition to completing the Likert statements at the end of each scenario, each expert was instructed to follow a think-aloud protocol while walking through the scenarios. All feedback was captured with an audio recorder.
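The document does not state how the end-of-scenario Likert ratings were summarized, so the following is only a sketch of one common approach: for each scenario, report the median rating (appropriate for ordinal data) and the share of ratings at or above "agree". The 1 to 5 scale, the agreement threshold of 4, and the function name `summarize_likert` are assumptions for illustration, and the example ratings are made up, not study data.

```python
from collections import defaultdict
from statistics import median

SCALE_MIN, SCALE_MAX = 1, 5  # assumed 5-point scale
AGREE_THRESHOLD = 4          # assumed: 4 = agree, 5 = strongly agree


def summarize_likert(responses):
    """Summarize Likert ratings per scenario.

    responses: iterable of (participant_id, scenario_number, rating) tuples.
    Returns {scenario: (median rating, share of ratings >= AGREE_THRESHOLD)}.
    """
    by_scenario = defaultdict(list)
    for _participant, scenario, rating in responses:
        if not SCALE_MIN <= rating <= SCALE_MAX:
            raise ValueError(f"rating out of range: {rating}")
        by_scenario[scenario].append(rating)

    summary = {}
    for scenario, ratings in sorted(by_scenario.items()):
        agree_share = sum(r >= AGREE_THRESHOLD for r in ratings) / len(ratings)
        summary[scenario] = (median(ratings), agree_share)
    return summary


if __name__ == "__main__":
    # Made-up ratings for scenarios 1 (log in) and 7 (fall alarm), illustration only.
    example = [("E1", 1, 5), ("E2", 1, 4), ("E3", 1, 2),
               ("E1", 7, 3), ("E2", 7, 2), ("E3", 7, 4)]
    summary = summarize_likert(example)
    print(summary[1][0])  # 4
    print(summary[7][0])  # 3
```

Reporting the median alongside an agreement share avoids treating ordinal ratings as interval data, and a low agreement share for a particular scenario points to where the think-aloud recordings are worth reviewing first.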

Part Four: The Evaluation Methodology

Phase 1

Use Case Development

The use case document outlined seven scenarios in which the user must interact directly with the smartphone interface: (1) the user logs in to the app, (2) the user syncs the app with the insoles, (3) the user checks the system status, (4) the user uploads the data, (5) the user minimizes the app, (6) the user resets the app, and (7) the user triggers a fall alarm. This use case, termed paper prototype version 1, was presented to two groups of stakeholders for structured analysis in order to elicit their feedback.

End User Representatives Use Case Analysis

A total of 12 older adults were recruited through typical-case purposive sampling to analyze the use case (inclusion criteria: aged 65 years or older and community dwelling; exclusion criteria: profound hearing or vision loss, psychiatric morbidity, or severe neurological impairment). The same protocol and interview structure used with the experts was used to present the use case document to the older adults, and the sessions were carried out in each participant's home. Ethical approval to carry out the interviews and
