Leveraging Big Data for Enhancing Decision Making and Creating New Business Model for Amazon Go

Added on 2023/06/11



RESEARCH REPORT

ON

LEVERAGING BIG DATA FOR

ENHANCING DECISION MAKING AND

CREATING NEW BUSINESS MODEL

For

AMAZON GO

Course Title/Name:

Student Number/Name:

Lecturer/Tutor Name:


Contents

Introduction

Big Data Use Case

Business Strategy

Business Initiatives & Objectives

Data Sources

Technology Stack

Data Analytics and MDM

NoSQL for Big Data Analytics

Role of Social Media in Decision Making

Critical Success Factors

Reflections & Conclusion

References


Introduction:

Amazon runs a marketplace platform that combines big data with a customer-centric approach to improve the customer experience.

This research study examines how “Amazon Go” collects a wide variety of data, derives useful information from it and applies a data-driven approach to enhance the customer experience, including predictive models that recommend products to customers. The study also examines why big data matters, how it drives business growth, and how it has helped Amazon extend its business model from an online portal to an automated physical store (Amazon Go).

Big Data Use Case

Seattle-based e-commerce giant Amazon is leveraging big data on its 200 million customer accounts. It hosts 1,000,000,000 GB of data on more than 1,400,000 servers and uses it to increase sales through predictive analytics. Amazon Go, Amazon’s convenience-store concept, opened to the public in January 2018. The store uses an array of cameras to track what customers take and automatically charges them through a smartphone app.
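The following back-of-the-envelope calculation puts these figures in perspective. It assumes 1 GB = 10^9 bytes and, purely for illustration, that the data is spread evenly across the servers. The source does not say how the data is actually distributed.

```latex
\[
10^{9}\ \text{GB} \times 10^{9}\ \tfrac{\text{B}}{\text{GB}} = 10^{18}\ \text{B} = 1\ \text{EB},
\qquad
\frac{10^{9}\ \text{GB}}{1.4 \times 10^{6}\ \text{servers}} \approx 714\ \text{GB per server}
\]
```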

Features of the Amazon Go Store:

Small Store, Big Impact

The “Amazon Go” store is only 1,800 square feet, and prices are printed on the shelf tags. The shopping experience is simple: shoppers browse, place items in their bag and walk out. Behind the scenes, the customer’s Amazon account is charged automatically for everything they take out the door. Deep learning technology recognizes the items in the store without any special tags such as RFID chips.

Shoppers enter the store using an Amazon account and the Amazon Go app, which is easy to install.

No Barcode Needed

Amazon Go eliminates the need to scan barcodes by using sensors and cameras to track which items leave the shelves with customers. The same cameras and sensors, combined with artificial intelligence, are also used to track inventory.

Fewer Cash Registers, More Data

The Amazon Go store automates the checkout process: customers simply walk out with their purchases instead of queuing at a checkout.

Amazon Go aims to deliver a better customer experience. The idea is simple, but it involves several layers of complexity:

which products people buy at which times of day

when to reorder and restock the shelves

how to present merchandise to capture customers’ attention

how to optimize traffic flow around the store

Amazon has reportedly planned to open more than 20,000 stores in the coming years. Amazon Go combines artificial intelligence, computer vision (cameras that track a shopper’s actions in the store) and data pulled from multiple sensors.


Business Strategy

Amazon has understood the value of data for a long time (around 17 years). It uses various data mining techniques to study online purchasing behaviour, based on the data customers provide during their online shopping activities. This data mining draws on its 200 million customer accounts.

In 2013, Amazon used item-to-item similarity methods from its collaborative filtering engine (CFE). The engine analyses:

which items a customer purchased previously

what is in their online shopping cart or wish list

which products they reviewed and rated

which items they search for most

This information is used to recommend additional products that other customers purchased when buying those same items. Amazon has since improved its recommender system, and today it dominates the marketplace. The system combines clickstream data (data collected from each click a customer makes online) with the historical purchase data of all customers to provide customized results for each customer.
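The item-to-item approach described above can be sketched in a few lines. This is a minimal illustration under stated assumptions. Purchases are treated as binary customer-item data, and item similarity is measured with cosine similarity; the report does not say which similarity measure Amazon uses. The customers, items and function names are hypothetical.

```python
from math import sqrt

# Hypothetical purchase history: customer -> set of items they bought.
purchases = {
    "alice": {"hard_drive", "usb_cable", "laptop_bag"},
    "bob": {"hard_drive", "usb_cable"},
    "carol": {"hard_drive", "mouse"},
    "dave": {"usb_cable", "mouse"},
}


def buyers_by_item(purchases):
    """Invert customer -> items into item -> set of customers who bought it."""
    buyers = {}
    for customer, items in purchases.items():
        for item in items:
            buyers.setdefault(item, set()).add(customer)
    return buyers


def cosine(a, b):
    """Cosine similarity of two binary purchase vectors, given as sets of customers."""
    if not a or not b:
        return 0.0
    return len(a & b) / sqrt(len(a) * len(b))


def similar_items(item, purchases, top_n=3):
    """Item-to-item step: rank other items by similarity to `item`."""
    buyers = buyers_by_item(purchases)
    scores = [(other, cosine(buyers[item], buyers[other]))
              for other in buyers if other != item]
    ranked = sorted((p for p in scores if p[1] > 0), key=lambda p: (-p[1], p[0]))
    return ranked[:top_n]


def recommend(customer, purchases, top_n=3):
    """Score items the customer has not bought by summed similarity to items they own."""
    buyers = buyers_by_item(purchases)
    owned = purchases[customer]
    scores = {}
    for item in owned:
        for other in buyers:
            if other not in owned:
                scores[other] = scores.get(other, 0.0) + cosine(buyers[item], buyers[other])
    ranked = sorted((p for p in scores.items() if p[1] > 0), key=lambda p: (-p[1], p[0]))
    return ranked[:top_n]


print(similar_items("hard_drive", purchases))
print(recommend("carol", purchases))
```

Item-to-item similarities depend only on which items are bought together, not on any one customer. This means they can be precomputed offline, which is one reason the approach scales to large catalogues.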

Amazon Go, which began as an experimental retail outlet, is a bold move to transform retail with deep learning technology. Deep learning is a branch of machine learning in which multi-layer neural networks learn patterns from large amounts of data. Amazon Go relies specifically on image recognition algorithms. These identify the products customers pick up for purchase, and remove a product from the virtual cart if it is put back on the shelf. “Amazon Go aims to provide an enhanced customer experience with the help of data-driven decisions.”

Business Initiatives & Objectives:

A growing challenge for the retail industry is managing ever-larger datasets and databases almost instantly. Insights are often needed within milliseconds. If they take minutes or even hours, much of the value the information could have provided is lost. The modern retailer therefore needs to process large amounts of retail data in real time to capitalize on the insights discovered through retail analytics.

Big data technology focuses on speed. Amazon draws on the big data from its 200 million customer accounts, hosting 1,000,000,000 GB of data on more than 1,400,000 servers, to increase sales through predictive analytics. Big data analytics is the backbone that helps Amazon build better customer relationships.

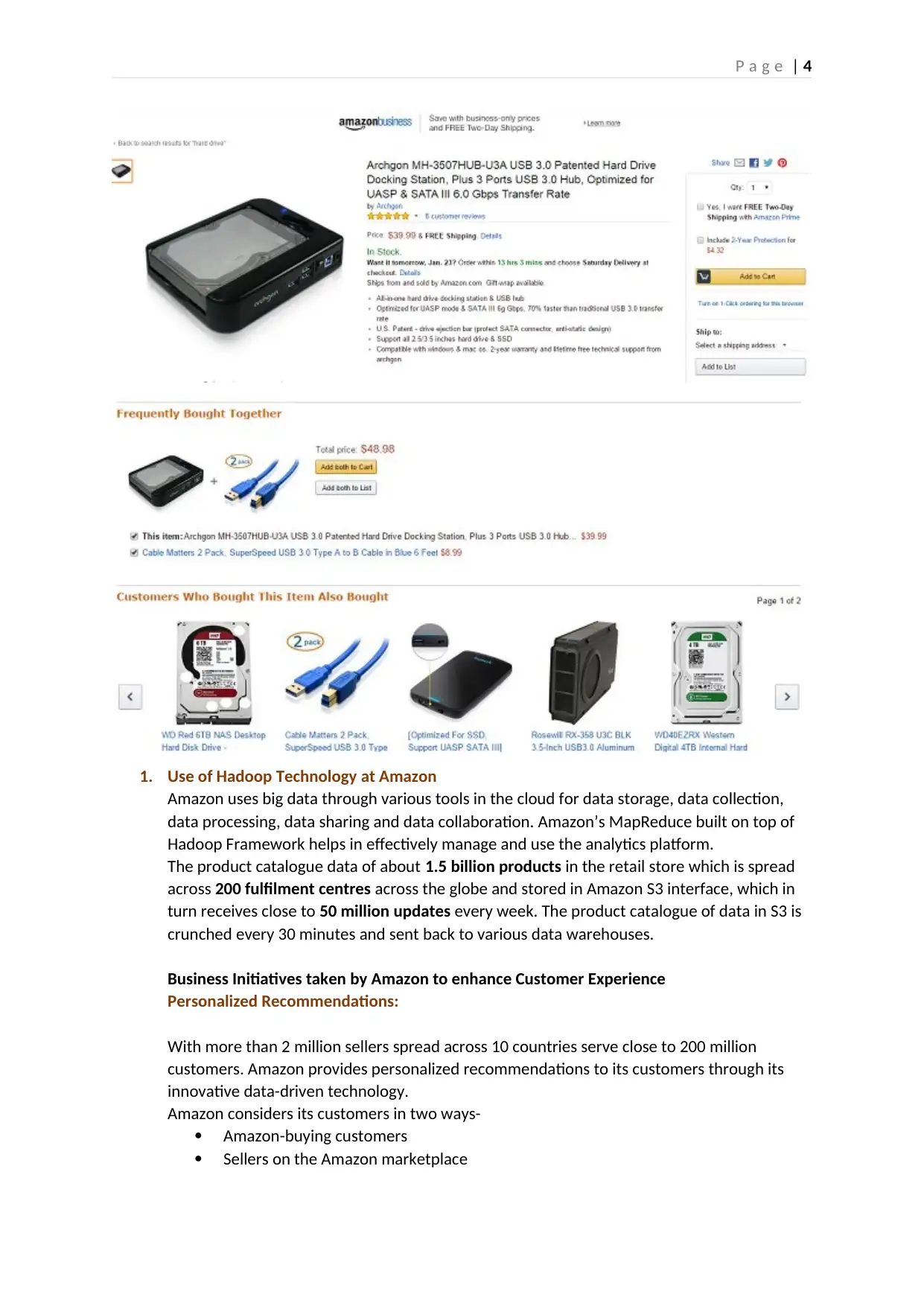

Amazon Go tracks what customers do in its stores in order to collect as much data as it can. Amazon also uses its well-developed account management to collect data from customers. On each customer’s home page, it tracks activity in sections such as:

“Inspired by Your Wish List”

“Recommendations for You”

“Inspired by Your Browsing History”

“Related to Items You Have Viewed”

“Customers Who Bought This Item Also Bought”

For example, if a customer chooses to buy a hard drive on Amazon.com, they are also shown products that are frequently bought with that hard drive. They also see other products they might like to purchase.
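The “frequently bought together” idea in this example can be sketched by counting how often pairs of items appear in the same order. This is a simplified, hypothetical illustration. The orders and function names are made up, and the source does not describe Amazon’s production method.

```python
from collections import Counter
from itertools import combinations

# Hypothetical order baskets; each set is one checkout.
orders = [
    {"hard_drive", "usb_cable"},
    {"hard_drive", "usb_cable", "laptop_bag"},
    {"hard_drive", "enclosure"},
    {"usb_cable", "mouse"},
    {"hard_drive", "usb_cable", "enclosure"},
]


def co_purchase_counts(orders):
    """Count how often each unordered pair of items appears in the same order."""
    counts = Counter()
    for basket in orders:
        for a, b in combinations(sorted(basket), 2):
            counts[(a, b)] += 1
    return counts


def frequently_bought_with(item, orders, top_n=2):
    """Return the items most often bought in the same order as `item`."""
    partners = Counter()
    for (a, b), n in co_purchase_counts(orders).items():
        if a == item:
            partners[b] += n
        elif b == item:
            partners[a] += n
    # Sort by count (descending), then by name, so ties are deterministic.
    ranked = sorted(partners.items(), key=lambda p: (-p[1], p[0]))
    return ranked[:top_n]


print(frequently_bought_with("hard_drive", orders))  # [('usb_cable', 3), ('enclosure', 2)]
```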


1. Use of Hadoop Technology at Amazon

Amazon uses a variety of cloud tools for big data storage, collection, processing, sharing and collaboration. Amazon Elastic MapReduce (EMR), built on top of the Hadoop framework, helps Amazon manage and use its analytics platform effectively.

Amazon stores product catalogue data in Amazon S3. The catalogue covers about 1.5 billion products spread across 200 fulfilment centres around the globe, and it receives close to 50 million updates every week. The catalogue data in S3 is processed every 30 minutes, and the results are sent back to various data warehouses.
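The 30-minute processing cycle implies the following rates. The calculation assumes, for illustration only, that the 50 million weekly updates arrive evenly over the week.

```latex
\[
\begin{aligned}
\text{minutes per week} &= 7 \times 24 \times 60 = 10{,}080 \\
\text{updates per minute} &\approx \frac{5 \times 10^{7}}{10{,}080} \approx 4{,}960 \\
\text{batches per week} &= \frac{10{,}080}{30} = 336 \\
\text{updates per 30-minute batch} &\approx \frac{5 \times 10^{7}}{336} \approx 1.49 \times 10^{5}
\end{aligned}
\]
```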

Business Initiatives taken by Amazon to enhance Customer Experience

Personalized Recommendations:

More than 2 million sellers spread across 10 countries serve close to 200 million customers on Amazon. Amazon provides these customers with personalized recommendations through its innovative data-driven technology.

Amazon considers its customers in two groups:

Amazon-buying customers

Sellers on the Amazon marketplace


Managing inventory well is one of the most challenging and most powerful ways to satisfy customers. One of the most common suggestions Amazon gives its sellers concerns inventory that is about to run out of stock. The recommendation algorithms analyse how much a particular seller is selling and how much inventory the seller has in stock. Based on these factors, Amazon’s algorithms advise sellers on the expected demand for their products, so that sellers can add inventory to their Amazon marketplace listings in time.
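Below is a minimal sketch of the kind of out-of-stock warning described above. It assumes the algorithm compares a seller’s recent sales rate with stock on hand and a supplier lead time. The field names, the 30-day window and the 7-day safety buffer are hypothetical, not Amazon’s actual parameters.

```python
import math
from dataclasses import dataclass


@dataclass
class Listing:
    sku: str
    units_in_stock: int
    units_sold_last_30_days: int
    lead_time_days: int  # hypothetical: days for new stock to reach the warehouse


def daily_sales_rate(listing: Listing) -> float:
    return listing.units_sold_last_30_days / 30


def days_of_cover(listing: Listing) -> float:
    """How long current stock lasts at the recent average daily sales rate."""
    rate = daily_sales_rate(listing)
    return math.inf if rate == 0 else listing.units_in_stock / rate


def restock_suggestion(listing: Listing, safety_days: int = 7) -> int:
    """Units to add so stock covers the lead time plus a safety buffer (0 if enough)."""
    needed = daily_sales_rate(listing) * (listing.lead_time_days + safety_days)
    return max(0, math.ceil(needed - listing.units_in_stock))


hard_drive = Listing("HDD-1TB", units_in_stock=40, units_sold_last_30_days=300, lead_time_days=14)
cable = Listing("USB-C-1M", units_in_stock=500, units_sold_last_30_days=60, lead_time_days=10)
for listing in (hard_drive, cable):
    # Warn only when stock will run out before new stock could arrive.
    if days_of_cover(listing) < listing.lead_time_days:
        print(f"{listing.sku}: likely to run out; consider adding {restock_suggestion(listing)} units")
```

A production system would forecast demand (seasonality, promotions) rather than extrapolate a 30-day average. The comparison of expected demand against stock is the same, however.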

Dynamic Price Optimization:

Amazon manages prices closely to attract buyers, beat the competition and grow the business. Dynamic pricing helps Amazon stay competitive by monitoring prices 24 hours a day, 365 days a year. Amazon’s pricing strategy supports real-time pricing by considering data from various sources, including:

customer activity on the website

a product’s available inventory

competitor pricing

order history

preferences set for the product

the expected margin

Amazon changes the prices of its products as often as every 10 minutes.

Amazon offers large discounts on best-selling products while making its profits on less popular products.
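The report lists the inputs to Amazon’s pricing but not the algorithm itself. Below is a hypothetical rule-based repricer that uses three of those inputs (competitor price, available inventory and customer activity) plus a minimum-margin floor. The thresholds, parameter names and rules are illustrative assumptions, not Amazon’s method.

```python
REPRICE_INTERVAL_MIN = 10
RUNS_PER_DAY = 24 * 60 // REPRICE_INTERVAL_MIN  # 144 repricing runs a day at a 10-minute interval


def reprice(cost, competitor_price, units_in_stock, views_last_hour,
            min_margin=0.10, undercut=0.01, low_stock=10, high_demand=100):
    """Hypothetical rule-based repricer that respects a minimum-margin floor."""
    floor = cost * (1 + min_margin)            # never price below cost plus minimum margin
    price = competitor_price * (1 - undercut)  # default: undercut the competitor slightly
    if units_in_stock < low_stock and views_last_hour > high_demand:
        price = competitor_price               # scarce and in demand: match instead of undercutting
    return round(max(price, floor), 2)


print(reprice(20.00, 30.00, units_in_stock=50, views_last_hour=20))   # undercuts the competitor
print(reprice(20.00, 21.00, units_in_stock=50, views_last_hour=20))   # held at the margin floor
print(reprice(20.00, 30.00, units_in_stock=5, views_last_hour=500))   # matches the competitor
```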

Supply Chain Optimization:

Amazon has real-time links with manufacturers and uses this data to track inventory demand, which lets it offer customers same-day or next-day delivery options.

Amazon uses big data systems to choose warehouse locations, balancing proximity to vendors against proximity to customers to reduce distribution costs. These systems also help Amazon predict how many warehouses it needs and what capacity each warehouse should have. Amazon uses graph theory to minimize delivery costs by choosing optimal schedules, routes and product groupings.
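Route costs are one place where graph theory applies. The report does not name Amazon’s algorithms, so the example below uses Dijkstra’s shortest-path algorithm as a standard illustration. It finds the cheapest route through a delivery network modelled as a weighted graph. The node names and costs are made up.

```python
import heapq


def cheapest_route(graph, source, target):
    """Dijkstra's algorithm: lowest-cost path in a graph with non-negative edge costs."""
    dist = {source: 0}
    prev = {}
    queue = [(0, source)]
    visited = set()
    while queue:
        cost, node = heapq.heappop(queue)
        if node in visited:
            continue  # stale queue entry; a cheaper path was already settled
        visited.add(node)
        if node == target:
            break
        for neighbour, edge_cost in graph.get(node, {}).items():
            new_cost = cost + edge_cost
            if new_cost < dist.get(neighbour, float("inf")):
                dist[neighbour] = new_cost
                prev[neighbour] = node
                heapq.heappush(queue, (new_cost, neighbour))
    if target not in dist:
        return None, float("inf")
    # Walk the predecessor links back from the target to rebuild the path.
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return list(reversed(path)), dist[target]


# Hypothetical network: fulfilment centre -> sort centres -> delivery station -> customer.
network = {
    "FC": {"SortA": 4, "SortB": 2},
    "SortA": {"Station": 5},
    "SortB": {"SortA": 1, "Station": 8},
    "Station": {"Customer": 3},
}
print(cheapest_route(network, "FC", "Customer"))
# (['FC', 'SortB', 'SortA', 'Station', 'Customer'], 11)
```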

Anticipatory Shipping:

Amazon has patented a process for shipping a product towards a customer before the customer orders it, based on predictive analytics indicating that the customer is likely to place that order. The patent suggests that Amazon believes its predictive analytics systems will become accurate enough to predict what a customer will buy and when.
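Below is a minimal, hypothetical sketch of the decision behind anticipatory shipping. The item is moved towards a region’s hub before any order arrives if the chance of at least one order there is high enough. The per-customer probabilities, the 0.6 threshold and the assumption that customers act independently are illustrative. The source does not describe the patent’s actual logic.

```python
from math import prod


def prob_at_least_one_order(purchase_probs):
    """P(at least one order) = 1 - product of (1 - p), assuming customers act independently."""
    return 1 - prod(1 - p for p in purchase_probs)


def regions_to_preposition(forecasts, threshold=0.6):
    """Regions whose hub should receive the item before any order is placed."""
    return sorted(region for region, probs in forecasts.items()
                  if prob_at_least_one_order(probs) >= threshold)


# Hypothetical model output: per-customer probabilities of buying the item this week.
forecasts = {
    "Seattle": [0.3, 0.4, 0.2],
    "Tacoma": [0.1, 0.2],
}
print(regions_to_preposition(forecasts))  # ['Seattle']
```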

Amazon Web Services:

Through Amazon Web Services (AWS), companies can build and secure scalable big data applications without buying hardware or maintaining infrastructure. Big data workloads such as clickstream analytics, data warehousing, recommendation engines, fraud detection, event-driven ETL and Internet of Things (IoT) processing can all run on this cloud computing platform.

Companies can use AWS to analyse customer demographics, spending habits and other relevant information in order to cross-sell their products more effectively, much as Amazon does.

Other initiatives are:

Analysis of customer buying patterns


Conducting retail analytics at scale

Analysing traffic patterns

Inventory Management

Data Sources

The most common data sources are:

Databases

The traditional data source in business intelligence (BI), e.g., Oracle, NoSQL databases and Hadoop.

Flat Files

E.g., Excel & .csv files

Web Services

Other sources: social media, the Internet of Things (IoT), financial market data and other big data sources

Amazon Go applies accelerated analytics to data from a system of cameras and sensors that identifies shoppers and the items they choose. The data captured includes:

Camera images of shoppers’ actions: entering the store, selecting items, removing items and putting items back on the shelf

Facial recognition and customer profile data (height, weight, biometrics, purchase history, etc.)

Purchase behaviour

Visit behaviour/action in the store

Amazon Go uses RGB cameras, depth-sensing cameras and infrared sensors to collect data. Models trained on the collected data identify shoppers and the actions they carry out with the items on display. Sensor fusion combines readings from several kinds of sensors. Pairing it with computer vision helps determine whether someone has picked up an item or placed it back on the shelf.
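Below is a minimal, hypothetical sketch that makes sensor fusion concrete. A camera’s guess about which shopper handled which item is combined with a weight change from a shelf scale. Shelf weight sensors are an assumption added for this illustration, since the report names only RGB, depth and infrared sensors. The unit weights, thresholds and names are also invented, and the sketch does not describe Amazon’s actual fusion logic.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical unit weights (grams) for items on an (assumed) weight-sensing shelf.
ITEM_WEIGHT_G = {"sandwich": 250, "soda": 355}


@dataclass
class ShelfEvent:
    shopper_id: str          # shopper the camera system links to the event
    item: str                # item the vision model thinks was handled
    vision_confidence: float
    weight_delta_g: float    # shelf weight change: negative means weight was removed


def fuse(event, carts, min_conf=0.8, tolerance=0.15):
    """Combine camera and weight evidence to decide pick-up vs put-back."""
    unit = ITEM_WEIGHT_G[event.item]
    units = round(abs(event.weight_delta_g) / unit)
    if units == 0 or abs(abs(event.weight_delta_g) - units * unit) > tolerance * unit:
        return "unresolved"  # weight change does not fit the item the camera saw
    if event.vision_confidence < min_conf:
        return "unresolved"  # attribution to a shopper is too uncertain
    cart = carts.setdefault(event.shopper_id, Counter())
    if event.weight_delta_g < 0:
        cart[event.item] += units
        return "picked_up"
    cart[event.item] -= min(units, cart[event.item])  # never go below zero
    return "put_back"


carts = {}
events = [
    ShelfEvent("shopper-1", "soda", 0.95, -710),      # two cans lifted
    ShelfEvent("shopper-1", "soda", 0.93, 355),       # one can returned
    ShelfEvent("shopper-1", "sandwich", 0.50, -250),  # camera unsure who took it
]
for e in events:
    print(e.item, fuse(e, carts))
print(dict(carts["shopper-1"]))
```

Neither signal alone is enough here. The weight change says what left the shelf, and the camera says who took it. Requiring both to agree is what the fusion step adds.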

Technology Stack

The most fundamental building blocks of big data technology are:

1. Hadoop

It is an ecosystem in which many big data applications run, and it is central to a big data architect’s work.

Components of the Hadoop ecosystem:

Hadoop Distributed File System (HDFS)

HDFS is the file system of Apache Hadoop. It provides distributed storage by spreading large volumes of data across several machines. It is highly fault tolerant because it stores data redundantly: each block is replicated on several machines (three by default), so the failure of a single machine does not cause data loss.

MapReduce

MapReduce is Hadoop’s processing framework and coordinates all the distributed processing. In classic Hadoop (version 1), MapReduce has two main components: the JobTracker and the TaskTracker. The JobTracker handles requests between the user and the Hadoop cluster, schedules work, and keeps track of the jobs processed, failures and so on. Each TaskTracker runs the individual map and reduce tasks on a worker node and reports back to the JobTracker. In the Hadoop ecosystem, the


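NameNode services correspond to storage, and the JobTracker services correspond to processing. In Hadoop 2 and later, YARN replaces the JobTracker and TaskTrackers with a ResourceManager, per-application ApplicationMasters and NodeManagers.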

Hadoop Common

Hadoop Common provides a set of utilities and libraries. All other Hadoop

modules use these. It is an essential part of the Hadoop ecosystem.

2. Spark

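It is a processing engine that can run on its own or together with Hadoop. Because it can keep data in memory between processing steps, it can run some workloads up to a hundred times faster than Hadoop MapReduce. Many practical big data applications today use Spark.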

3. Pig, Hive, Sqoop, Flume, Kafka, Mahout, Drill, and a host of other applications

contribute in one way or another to making data processing simpler, faster, cheaper, or

all three.
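The Hadoop model described above can be simulated in plain Python on a single machine. Data is split into blocks, and each block is replicated on several nodes. Map tasks then run per block, the framework shuffles the intermediate pairs by key, and reduce tasks aggregate them. This is a teaching simulation, not Hadoop’s API, and the function names are hypothetical. The line-based blocks and round-robin placement are simplifications. In current Hadoop versions, HDFS splits files into 128 MB blocks by default, keeps three replicas and places them with a rack-aware policy.

```python
from collections import defaultdict

REPLICATION = 3  # HDFS's default replication factor


def split_into_blocks(lines, lines_per_block):
    """Stand-in for HDFS blocks (real HDFS splits files by size, not by lines)."""
    return [lines[i:i + lines_per_block] for i in range(0, len(lines), lines_per_block)]


def place_replicas(num_blocks, nodes, replication=REPLICATION):
    """Simplified round-robin placement: each block's replicas go to distinct nodes."""
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    return {b: [nodes[(b + r) % len(nodes)] for r in range(replication)]
            for b in range(num_blocks)}


def map_task(block):
    """Map: emit a (word, 1) pair for every word in one block."""
    return [(word.lower(), 1) for line in block for word in line.split()]


def shuffle(map_outputs):
    """Shuffle: group the values emitted by all map tasks by key."""
    groups = defaultdict(list)
    for pairs in map_outputs:
        for key, value in pairs:
            groups[key].append(value)
    return groups


def reduce_task(groups):
    """Reduce: sum the values collected for each key."""
    return {key: sum(values) for key, values in groups.items()}


log = ["buy sandwich", "buy soda", "return soda", "buy soda"]
blocks = split_into_blocks(log, lines_per_block=2)
placement = place_replicas(len(blocks), ["node1", "node2", "node3", "node4"])
counts = reduce_task(shuffle(map_task(block) for block in blocks))
print(placement)
print(counts)
```

In a real cluster, the scheduler tries to run each map task on a node that already holds a replica of its block, so computation moves to the data rather than the reverse.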

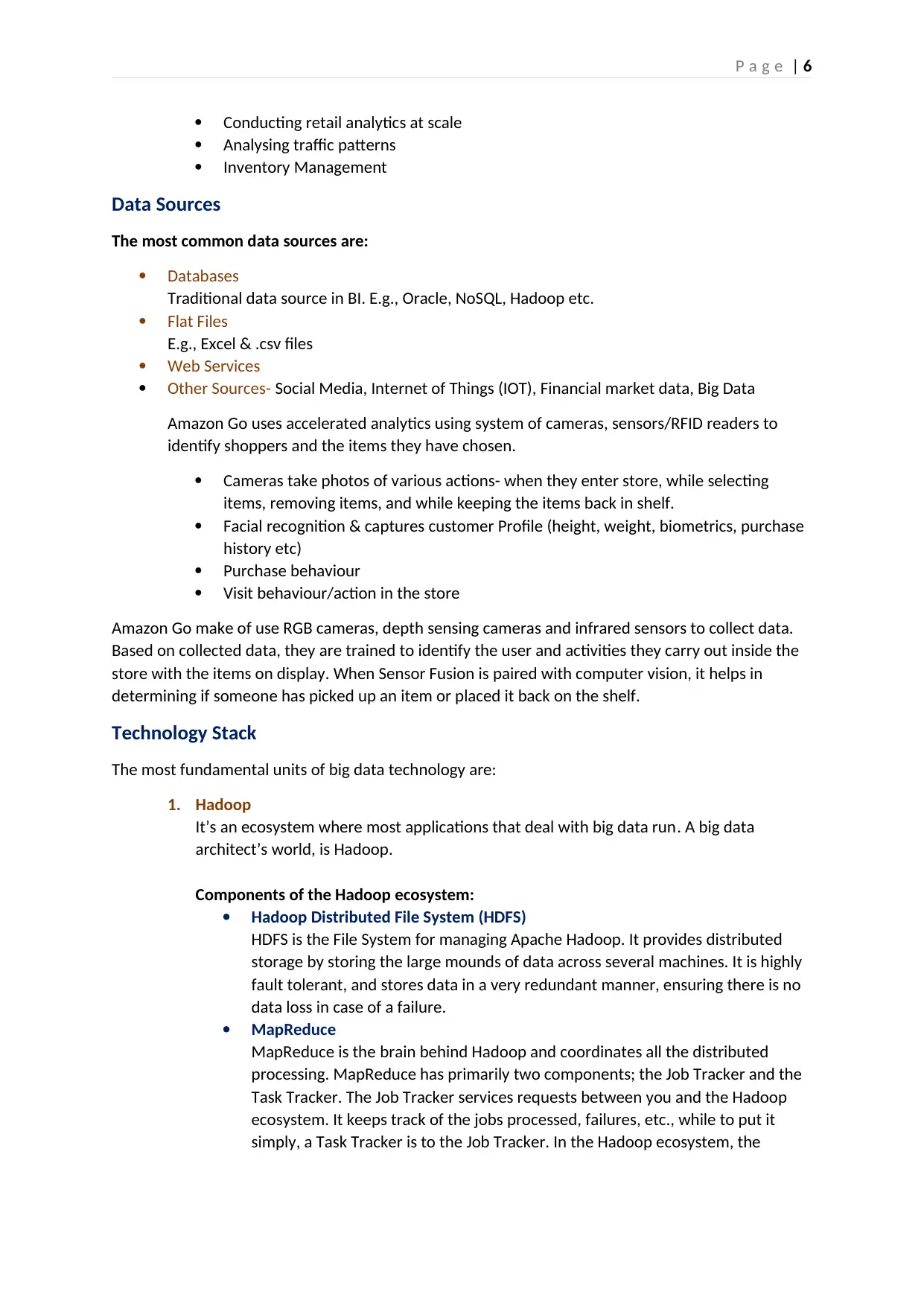

Data Analytics and Master Data Management to Support Decision Support & Business Intelligence

Demand to predict markets and consumer behaviour is rising rapidly, and data analytics and data management have become a necessity for business users as a result. A wide variety of data is now available, so companies are seeing more kinds of business users, who consume more data-driven insight and interact with data more often.

Big data becomes an asset only once it is analysed and insights are derived from it. Mining relevant and useful insights from the large volume of raw data generated is essential for making smart, data-driven business decisions.

Leading companies seek to capture the right data, transform it into insight and use that insight to generate real business impact. Master data management (MDM) maintains a single, consistent version of core business entities such as customers and products. Today’s business environment demands combining MDM with technologies for data integration, data governance and analytics, in order to spread insights to more users.
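A core MDM task is consolidating duplicate records for the same entity, held in several systems, into a single “golden record”. The minimal sketch below assumes hypothetical source systems, an email-based match rule and two simple survivorship rules. Real MDM tools use richer matching (fuzzy names, addresses) and governance workflows.

```python
from datetime import date

# Hypothetical records for one customer held in three separate source systems.
records = [
    {"source": "web_store", "email": "Jane.Doe@Example.com", "name": "Jane Doe",
     "phone": None, "updated": date(2023, 1, 5)},
    {"source": "go_app", "email": "jane.doe@example.com", "name": "J. Doe",
     "phone": "+1-206-555-0100", "updated": date(2023, 3, 2)},
    {"source": "support", "email": " jane.doe@example.com", "name": "Jane A. Doe",
     "phone": None, "updated": date(2022, 11, 20)},
]


def match_key(record):
    """Match rule: records with the same normalised email describe the same customer."""
    return record["email"].strip().lower()


def golden_records(records):
    """Merge matching records into one master record per customer."""
    merged = {}
    for rec in sorted(records, key=lambda r: r["updated"]):  # oldest first
        key = match_key(rec)
        golden = merged.setdefault(key, {"email": key, "name": None, "phone": None, "sources": []})
        golden["sources"].append(rec["source"])
        # Survivorship rule for name: keep the most complete (longest) value.
        if rec["name"] and len(rec["name"]) > len(golden["name"] or ""):
            golden["name"] = rec["name"]
        # Survivorship rule for phone: newer non-empty values overwrite older ones.
        if rec["phone"]:
            golden["phone"] = rec["phone"]
    return list(merged.values())


print(golden_records(records))
```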


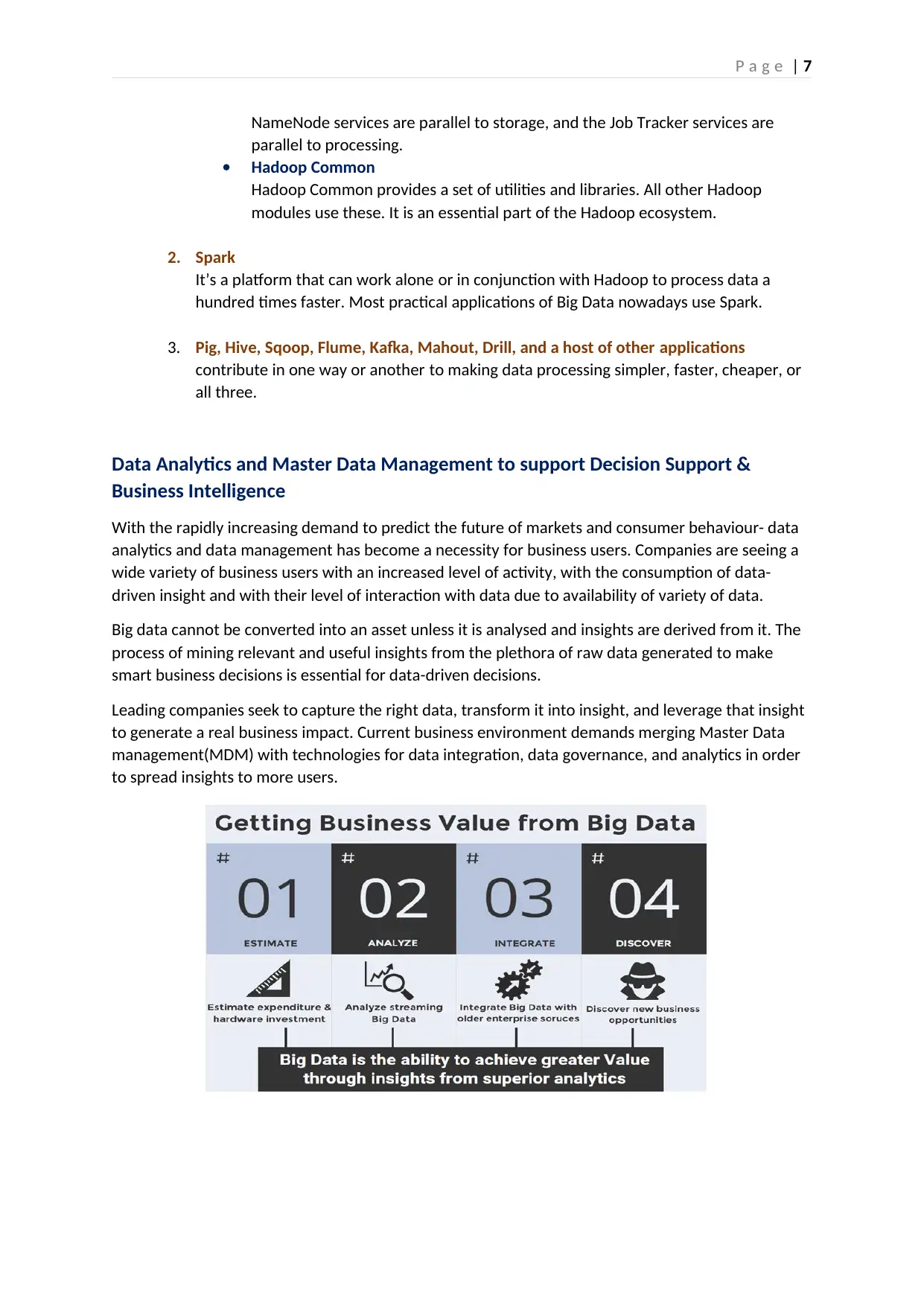

NoSQL for Big Data Analytics

Data is all around us, stored in many forms such as images, videos, text, voice notes and graphs. Storing and processing such huge amounts of data increases the need for storage capacity and memory (RAM) in our mobile phones, laptops and PCs to keep performance fast.

Big data analytics needs databases that can store big data, and this need has popularized the family of database management systems known as “NoSQL”.

Traditionally, databases store structured data in tables made up of rows and columns; these are called relational or SQL databases. Today, organizations want to store data in any format, so NoSQL databases were introduced to store semi-structured and unstructured data as well.

In a relational database, the structure (schema) of each table is fixed, which strictly constrains how and what kind of data can be stored. Today, however, a great deal of highly unstructured data needs to be stored and processed. Relational databases were also not designed to provide the scalability and agility that big data requires, so handling such data calls for a different database technology.

NoSQL databases come in several types:

Document databases: store records as documents in semi-structured formats such as JSON or XML.

E.g., MongoDB, CouchDB

Graph databases: store information in nodes, which are connected to other nodes by relationships (edges).

E.g., Neo4j, HyperGraphDB

Key-value databases: each entry is a simple key-value pair.

E.g., Voldemort, Redis, Riak

Wide-column databases: organize data into rows and columns like relational databases, but group columns into column families and let each row hold a different set of columns.

E.g., Apache Cassandra, Apache HBase

NoSQL databases are designed to scale horizontally across many servers, which makes them

appealing for large data volumes or application loads that exceed the capacity of a single

server.
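Horizontal scaling usually means partitioning (sharding) data by key across servers. Below is a hypothetical, in-memory illustration of hash-based sharding, with Python dictionaries standing in for servers. It is not the API of any particular NoSQL database, and the class and function names are invented for this sketch.

```python
import hashlib


def shard_for(key: str, num_shards: int) -> int:
    """Map a key to a shard with a stable hash (Python's built-in hash() is salted per process)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


class ShardedKeyValueStore:
    """Toy key-value store that spreads keys across several 'servers' (dicts)."""

    def __init__(self, num_shards: int):
        self.shards = [dict() for _ in range(num_shards)]

    def put(self, key: str, value) -> None:
        self.shards[shard_for(key, len(self.shards))][key] = value

    def get(self, key: str, default=None):
        return self.shards[shard_for(key, len(self.shards))].get(key, default)


store = ShardedKeyValueStore(num_shards=4)
for i in range(1000):
    store.put(f"customer:{i}", {"visits": i % 7})
print([len(shard) for shard in store.shards])  # keys spread across the four shards
```

Simple modulo hashing remaps most keys whenever the number of shards changes. This is why systems such as Apache Cassandra use consistent hashing on a token ring instead.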

MongoDB is the most popular NoSQL database, as it preserves many of the best features of relational databases while incorporating the advantages of NoSQL.

Advantages of NoSQL Databases:

Better at handling unstructured data

Ability to scale horizontally, i.e., to grow by adding more servers

Ability to distribute data across different servers instead of relying on a single, ever larger (vertically scaled) server

Lower cost


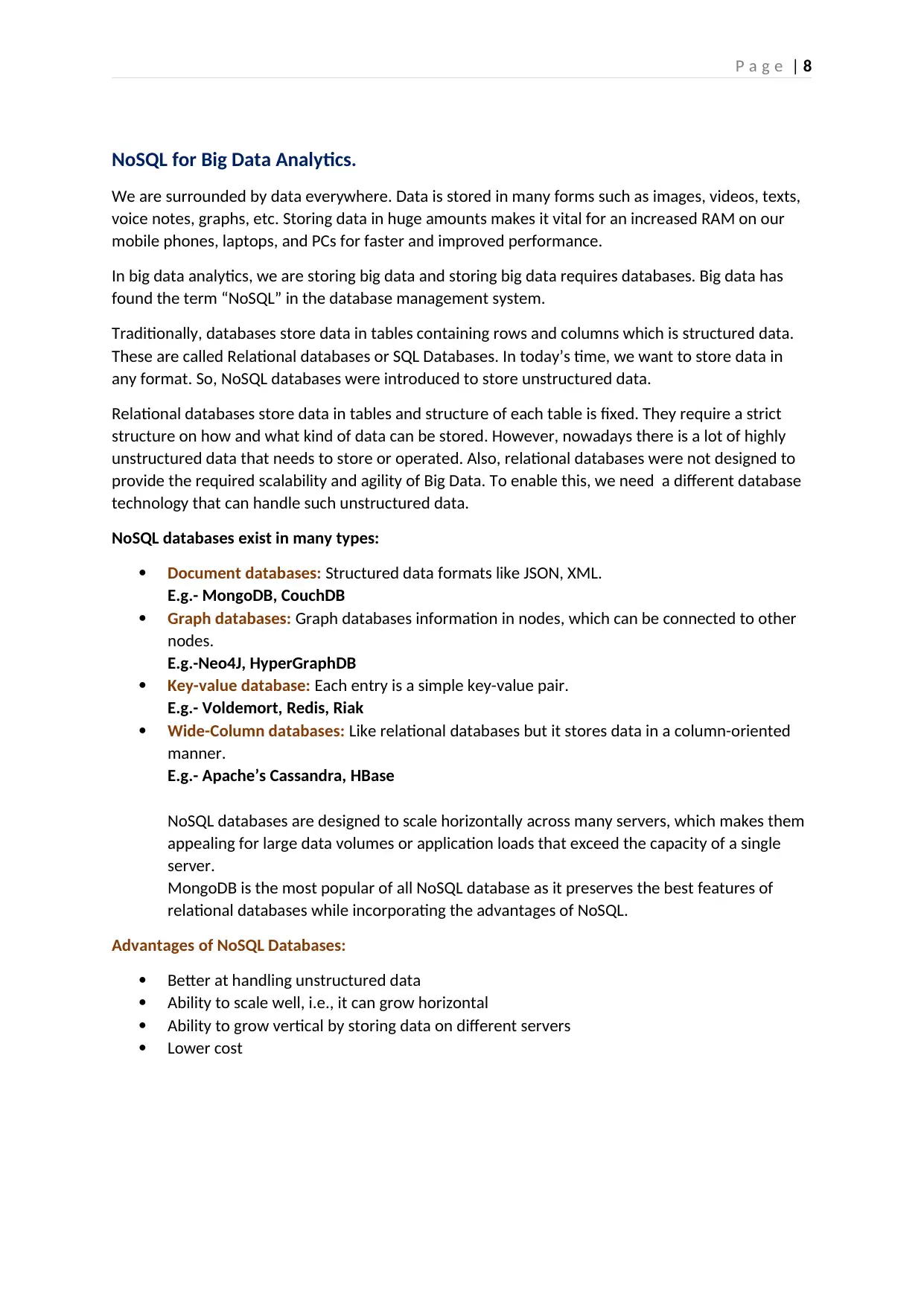

Real-Time Big Data with NoSQL:

In the past, operational and analytical databases were maintained as separate environments: the operational database powered applications, while the analytical database was part of the business intelligence and reporting environment. Today, NoSQL databases are used both as the front end (storing and managing operational data from any source and feeding it to Hadoop) and as the back end (receiving, storing and serving analytic results from Hadoop).
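The front-end/back-end pattern described above can be sketched in a few lines. In this hypothetical example a Python dictionary stands in for the NoSQL operational store, and a tiny map/reduce pair stands in for the Hadoop batch job; key names such as `analytics:units_sold:` are invented for illustration.

```python
from collections import defaultdict
from itertools import chain

# Front end: a hypothetical key-value store holding raw operational events.
operational_store = {
    "event:1": {"shopper": "s1", "item": "coffee", "qty": 1},
    "event:2": {"shopper": "s2", "item": "bagel", "qty": 2},
    "event:3": {"shopper": "s1", "item": "coffee", "qty": 1},
}

def map_phase(event):
    """Emit (key, value) pairs, as a MapReduce mapper would."""
    yield event["item"], event["qty"]

def reduce_phase(pairs):
    """Sum the values for each key, as a MapReduce reducer would after the shuffle."""
    totals = defaultdict(int)
    for item, qty in pairs:
        totals[item] += qty
    return dict(totals)

# Batch analytics step, standing in for a Hadoop job over the exported events.
raw_events = [v for k, v in operational_store.items() if k.startswith("event:")]
results = reduce_phase(chain.from_iterable(map_phase(e) for e in raw_events))

# Back end: write the analytic results into the same store so applications can serve them.
for item, total in results.items():
    operational_store[f"analytics:units_sold:{item}"] = total

print(operational_store["analytics:units_sold:coffee"])  # 2
```

The same store thus holds the raw operational events and serves the aggregated results back to applications.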

In 2007, Amazon introduced a NoSQL database service called SimpleDB, and it now also offers DynamoDB. Like SimpleDB, DynamoDB is one of the many Amazon Web Services (AWS), a set of tools offering online access to computing resources ranging from virtual servers and virtual storage to databases and other software. Amazon has also used NoSQL databases to help manage its own online operations.

Role of Social Media and Human Elements in Organisations' Decision-Making Process

Business-to-Person (B2P) communication, driven by social media, has emerged as a new model of engagement. Social media plays an important role in the professional lives of decision-makers, who use its tools and channels to support their decision-making processes. Through professional networks and online communities, decision-makers connect and collaborate with peers, experts and colleagues as their needs require. The leading social networks, such as LinkedIn, Facebook and Twitter, now also serve as professional networks.

Social media networks are enabling businesses to become more socially engaged and to exploit new business models based on a firm's ability to extract value from crowd-generated data and content. For example, Amazon has built a marketplace nourished by voluntary contributions (such as user-generated reviews), which allow users to participate actively in the conversation around products. Organisations are therefore focusing on social media and trying to leverage the opportunities it offers.
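As one small example of extracting value from crowd-generated content, the sketch below ranks products by user review ratings using a damped (Bayesian-style) average, a common technique for keeping a product with a single five-star review from outranking one with many slightly lower ratings. This is not a description of Amazon's actual ranking method; the ratings, the assumed catalogue-wide mean of 3.5 and the prior weight of 5 are illustrative assumptions.

```python
# Hypothetical 1-5 star ratings collected from user reviews.
reviews = {
    "sandwich-a": [5],
    "sandwich-b": [5, 4, 5, 5, 4, 5, 4, 5, 5, 4],
}

PRIOR_MEAN = 3.5   # assumed average rating across the whole catalogue
PRIOR_WEIGHT = 5   # the prior counts as if it were 5 extra reviews

def raw_mean(ratings):
    return sum(ratings) / len(ratings)

def damped_mean(ratings, prior_mean=PRIOR_MEAN, prior_weight=PRIOR_WEIGHT):
    """Blend a product's own ratings with the prior; few reviews stay close to the prior."""
    return (prior_mean * prior_weight + sum(ratings)) / (prior_weight + len(ratings))

ranking = sorted(reviews, key=lambda p: damped_mean(reviews[p]), reverse=True)
for product in ranking:
    ratings = reviews[product]
    print(product, round(raw_mean(ratings), 2), round(damped_mean(ratings), 2))
```

Here `sandwich-a` has the higher raw average (5.0 against 4.6), but `sandwich-b` ranks higher once the small sample size is taken into account.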

Critical Success Factors

Generally, companies make decisions using a descriptive approach, analysing historical data. Focusing only on the past, however, makes it difficult to develop strategies for the future. Today, companies recognize the value of a predictive approach


in order to provide recommendations for solving a business problem, based on decision makers' knowledge and judgement and on technologies such as Big Data, Business Intelligence (BI) and Decision Support (DS).
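The difference between the two approaches can be illustrated with a toy example. The sketch below, using invented weekly sales figures, computes a descriptive summary (the historical average) and a simple predictive estimate (an ordinary least-squares linear trend extrapolated one week ahead). A straight-line trend is only a stand-in; real forecasting would need richer models and validation.

```python
# Hypothetical weekly unit sales for one product, oldest first.
weekly_sales = [120, 132, 128, 141, 150, 158]

# Descriptive: summarise what has already happened.
average = sum(weekly_sales) / len(weekly_sales)

def linear_forecast(series, steps_ahead=1):
    """Predictive: fit y = intercept + slope * t by least squares, then extrapolate."""
    n = len(series)
    t = list(range(n))
    t_mean = sum(t) / n
    y_mean = sum(series) / n
    slope = sum((ti - t_mean) * (yi - y_mean) for ti, yi in zip(t, series)) / sum(
        (ti - t_mean) ** 2 for ti in t
    )
    intercept = y_mean - slope * t_mean
    return intercept + slope * (n - 1 + steps_ahead)

print("historical average:", round(average, 1))
print("next-week trend estimate:", round(linear_forecast(weekly_sales), 1))
```

The average describes where sales have been, while the trend line estimates where they may be heading next week (about 164 units here, against a historical average of about 138).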

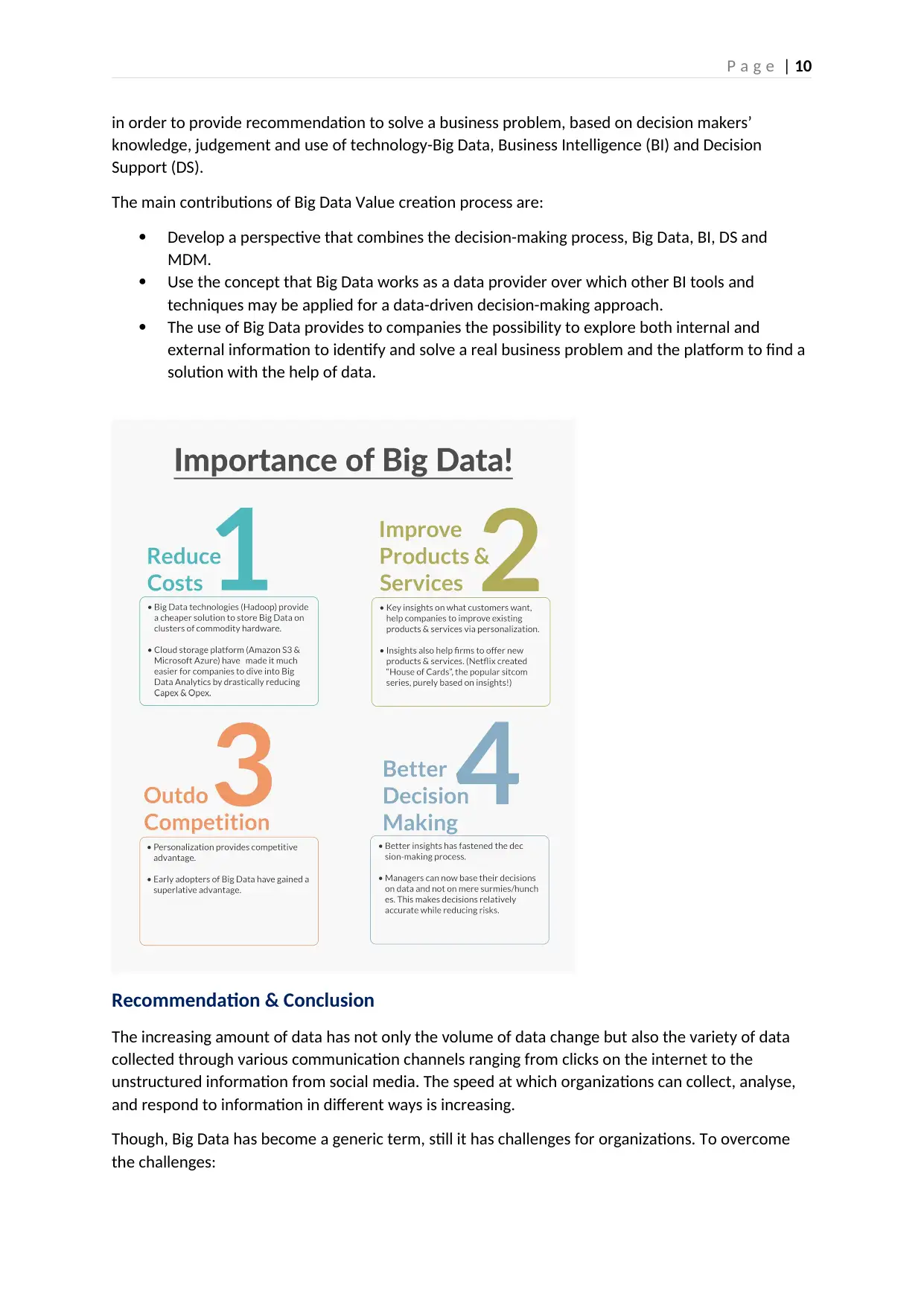

The main contributions of the Big Data value-creation process are:

A perspective that combines the decision-making process, Big Data, BI, DS and MDM (Master Data Management).

The concept of Big Data as a data provider on which other BI tools and techniques can be applied to support data-driven decision making.

The opportunity for companies to explore both internal and external information to identify and solve a real business problem, together with a platform for finding a solution with the help of data.
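Combining internal and external information usually requires a Master Data Management step that matches records describing the same entity and merges them into a single "golden record". The sketch below shows one simple version under assumed rules: records match when their normalised e-mail addresses agree, and for each attribute the most recently updated non-empty value survives. The sources, field names and rules are hypothetical; production MDM tools use far more sophisticated matching.

```python
from datetime import date

# Hypothetical records for the same customer held in different systems.
records = [
    {"source": "loyalty_app", "email": "Alex@Example.com ", "name": "Alex P.",
     "phone": None, "updated": date(2018, 3, 1)},
    {"source": "web_store", "email": "alex@example.com", "name": "Alex Park",
     "phone": "555-0100", "updated": date(2018, 5, 20)},
    {"source": "social", "email": "alex@example.com", "name": None,
     "phone": None, "updated": date(2018, 6, 1), "interests": "coffee"},
]

def match_key(record):
    """Match rule: records describe the same customer if normalised e-mails agree."""
    return record["email"].strip().lower()

def golden_records(records):
    """Survivorship rule: keep the most recently updated non-empty value per attribute."""
    masters = {}
    # Process oldest first so newer non-empty values overwrite older ones.
    for rec in sorted(records, key=lambda r: r["updated"]):
        key = match_key(rec)
        master = masters.setdefault(key, {"email": key})
        for field, value in rec.items():
            if field in ("source", "updated", "email") or value in (None, ""):
                continue
            master[field] = value
    return masters

print(golden_records(records))
```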

Recommendation & Conclusion

As the amount of data grows, it is changing not only in volume but also in variety, with data collected through many communication channels, ranging from clicks on the internet to unstructured information from social media. Organizations are also able to collect, analyse and respond to information ever faster and in more ways.

Although Big Data has become a generic term, it still poses challenges for organizations. To overcome these challenges:


Business leaders must implement new technologies and then prepare to collect and measure information from the data.

The organization as a whole must understand how decisions are made and the real value that Big Data brings to them.

Organizations must understand the role of Big Data in decision-making, with an emphasis on creating opportunities from these decisions, because consumer preferences can change very quickly.

The main contribution of this research is to promote and support an integrated view of Big Data, BI, DS and MDM in the context of the decision-making process, helping business users create new opportunities and resolve specific problems.

The crucial point is to look for new sources of data to inform decisions. Big Data can be very useful if it is used appropriately in the decision-making process.

References:

Ann (2016) How Amazon Uses Its Own Cloud to Process Vast, Multidimensional Datasets. [online]. Available from: https://dzone.com/articles/big-data-analytics-delivering-business-value-at-am [Accessed 1 June 2018].

Gutierrez, Daniel (2018) Amazon Go – Deep Learning Conquers Retail. [online]. Available from: https://insidebigdata.com/2018/02/15/amazon-go-deep-learning-conquers-retail/ [Accessed 1 June 2018].

Karsten, Jack and West, Darrell M. (2018) Amazon Go store offers quicker checkout for greater data collection. [online]. Available from: https://www.brookings.edu/blog/techtank/2018/02/13/amazon-go-store-offers-quicker-checkout-for-greater-data-collection/ [Accessed 1 June 2018].

Salkowitz, Rob (2018) Amazon Starts With Go. Where Will The Technology End Up? [online]. Available from: https://www.forbes.com/sites/robsalkowitz/2018/01/22/amazons-new-data-driven-convenience-store-uses-ai-to-check-you-out/#12f95ef7537e [Accessed 1 June 2018].

Joyce, Gemma (2018) Amazon Go Data: How is the Internet Reacting to the Store of the Future? [online]. Available from: https://www.brandwatch.com/blog/react-amazon-go-data/ [Accessed 2 June 2018].

Deoras, Srishti (2018) Understanding The AI behind Amazon Go. [online]. Available from: https://analyticsindiamag.com/understanding-ai-behind-amazon-go/ [Accessed 2 June 2018].

Gutierrez, Daniel (2018) insideBIGDATA Special Report: Reinventing the Retail Industry through Machine and Deep Learning (Part 3). [online]. Available from: https://insidebigdata.com/2018/02/27/insidebigdata-special-report-reinventing-retail-industry-machine-deep-learning-part-3/ [Accessed 2 June 2018].


van Rijmenam, Mark (2018) How Amazon Is Leveraging Big Data. [online]. Available from: https://datafloq.com/read/amazon-leveraging-big-data/517 [Accessed 2 June 2018].


Walter (2018) Amazon and Big Data. [online]. Available from: https://digit.hbs.org/submission/amazon-and-big-data/ [Accessed 2 June 2018].

Wills, Jennifer (2016) 7 Ways Amazon Uses Big Data to Stalk You (AMZN). [online]. Available from: https://www.investopedia.com/articles/insights/090716/7-ways-amazon-uses-big-data-stalk-you-amzn.asp [Accessed 2 June 2018].

(2015) How Big Data Analysis helped increase Walmarts Sales turnover? [online]. Available from: https://www.dezyre.com/article/how-big-data-analysis-helped-increase-walmarts-sales-turnover/109 [Accessed 2 June 2018].

McAuliffe, Keith (2018) PayThink Amazon needs a smart 'data river' to make Go work. [online]. Available from: https://www.paymentssource.com/opinion/amazon-go-requires-a-strong-data-management-strategy [Accessed 2 June 2018].
