Derivation of Linear Regression Coefficients and Ridge Regression vs LASSO

VerifiedAdded on 2023/06/10

|5

|715

|100

AI Summary

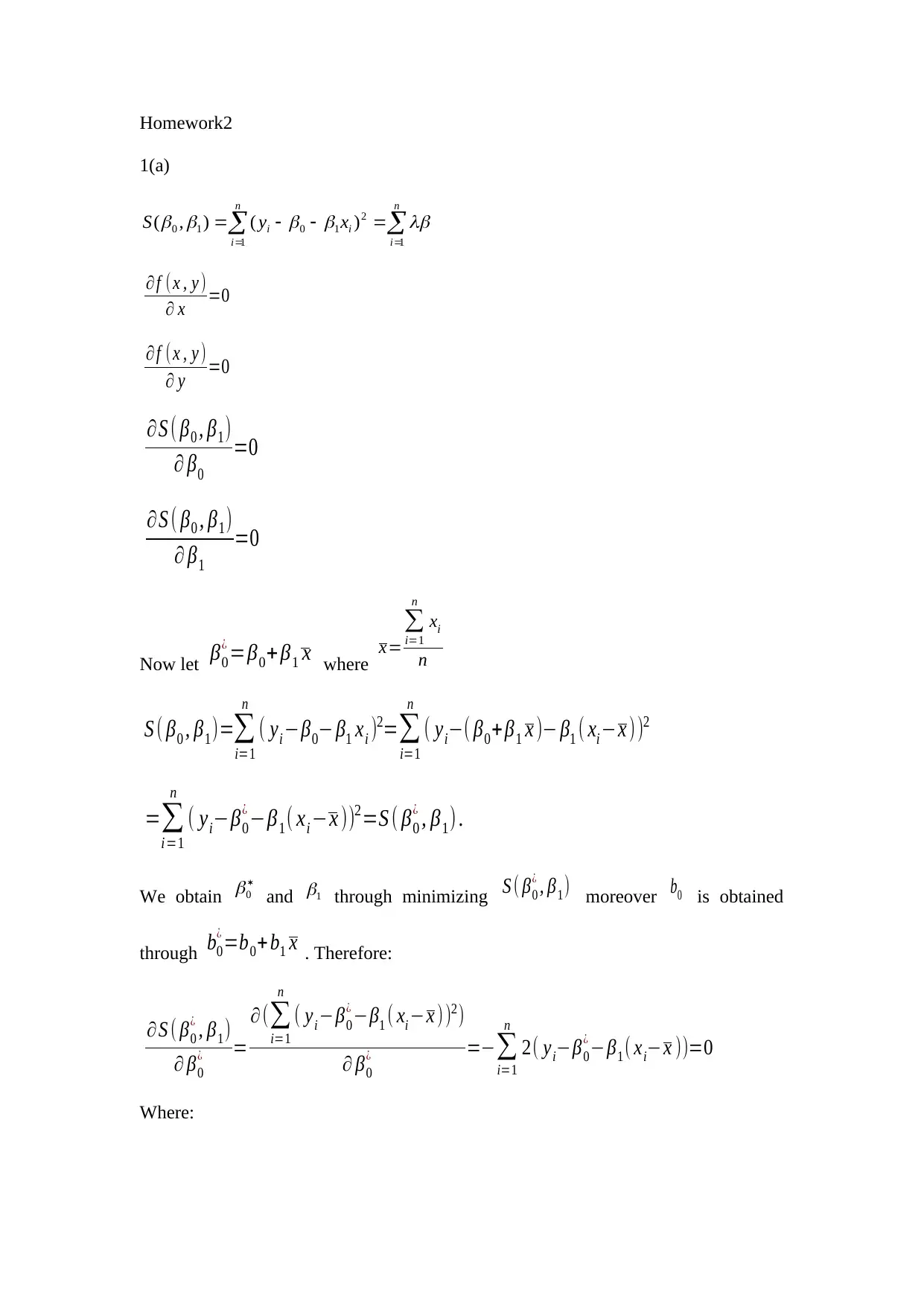

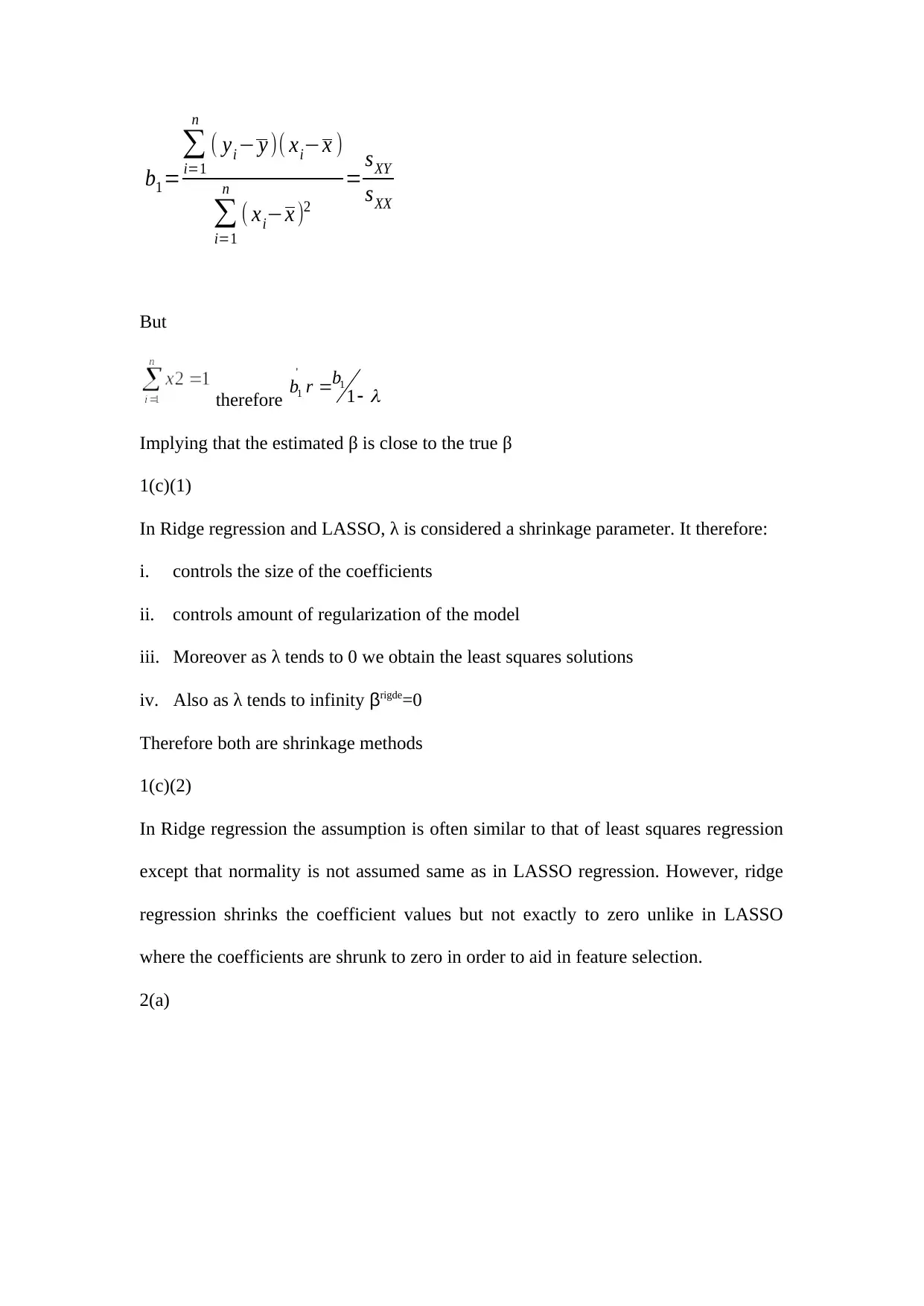

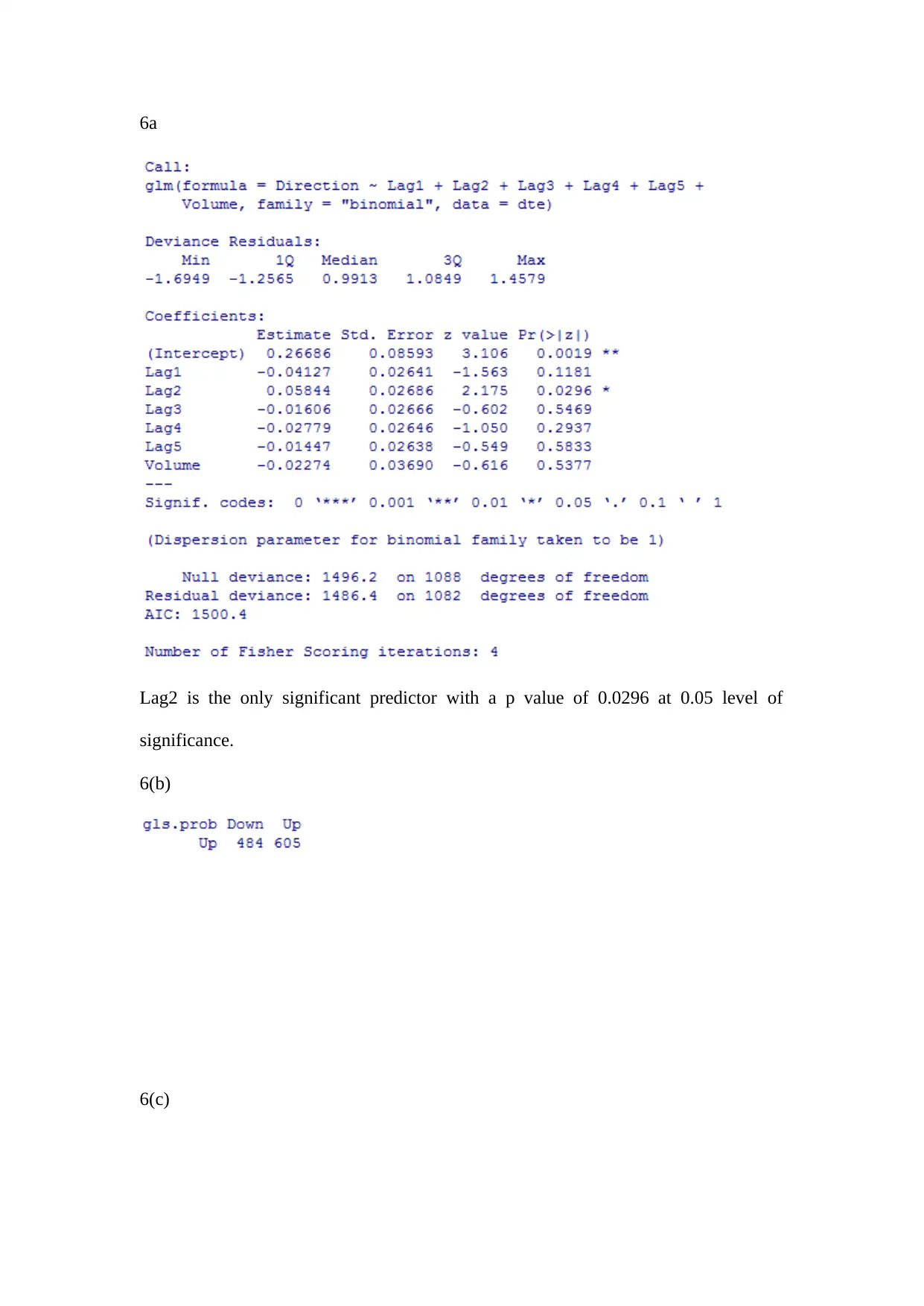

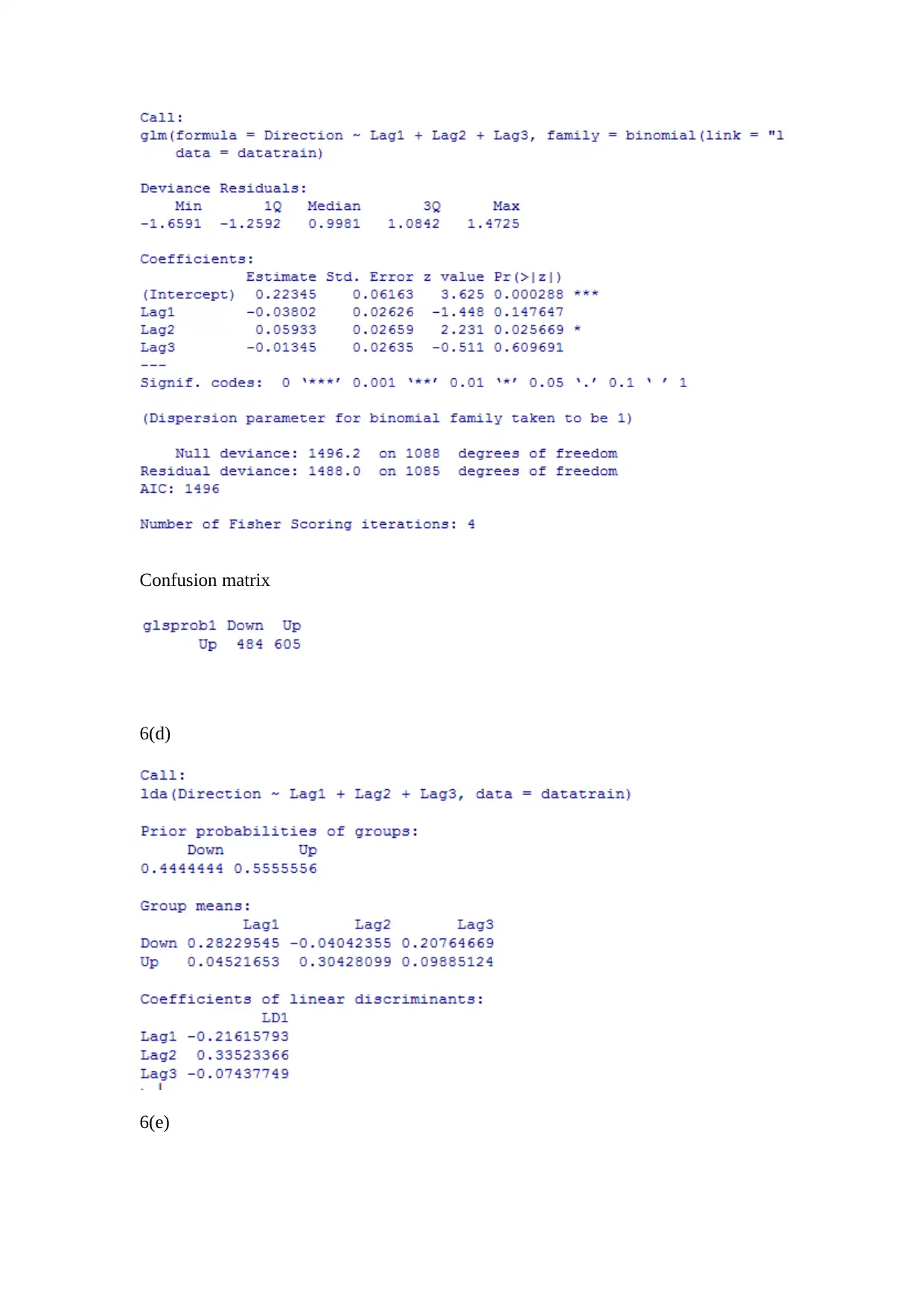

This article explains the derivation of linear regression coefficients and how they are obtained through minimizing the sum of squared errors. It also discusses the differences between Ridge Regression and LASSO, both of which are shrinkage methods used to control the size of coefficients and amount of regularization in the model. The article also covers the effects of the shrinkage parameter on the coefficients. Additionally, it includes a practical example of linear regression analysis with a confusion matrix.

Contribute Materials

Your contribution can guide someone’s learning journey. Share your

documents today.

1 out of 5

![[object Object]](/_next/static/media/star-bottom.7253800d.svg)