Logistic Regression vs Decision Tree: A Comparative Analysis

Verified. Added on 2024/06/21

AI Summary

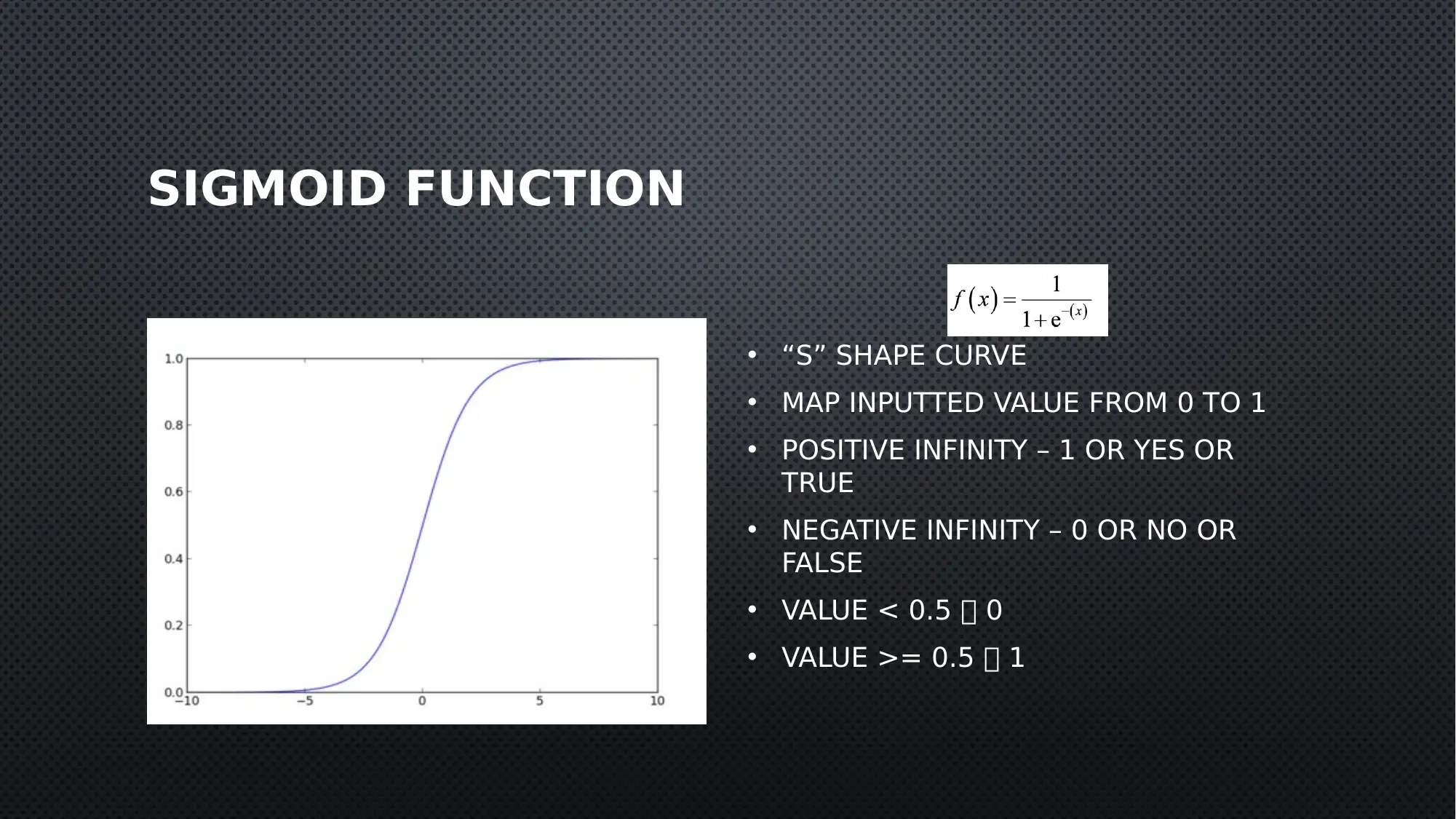

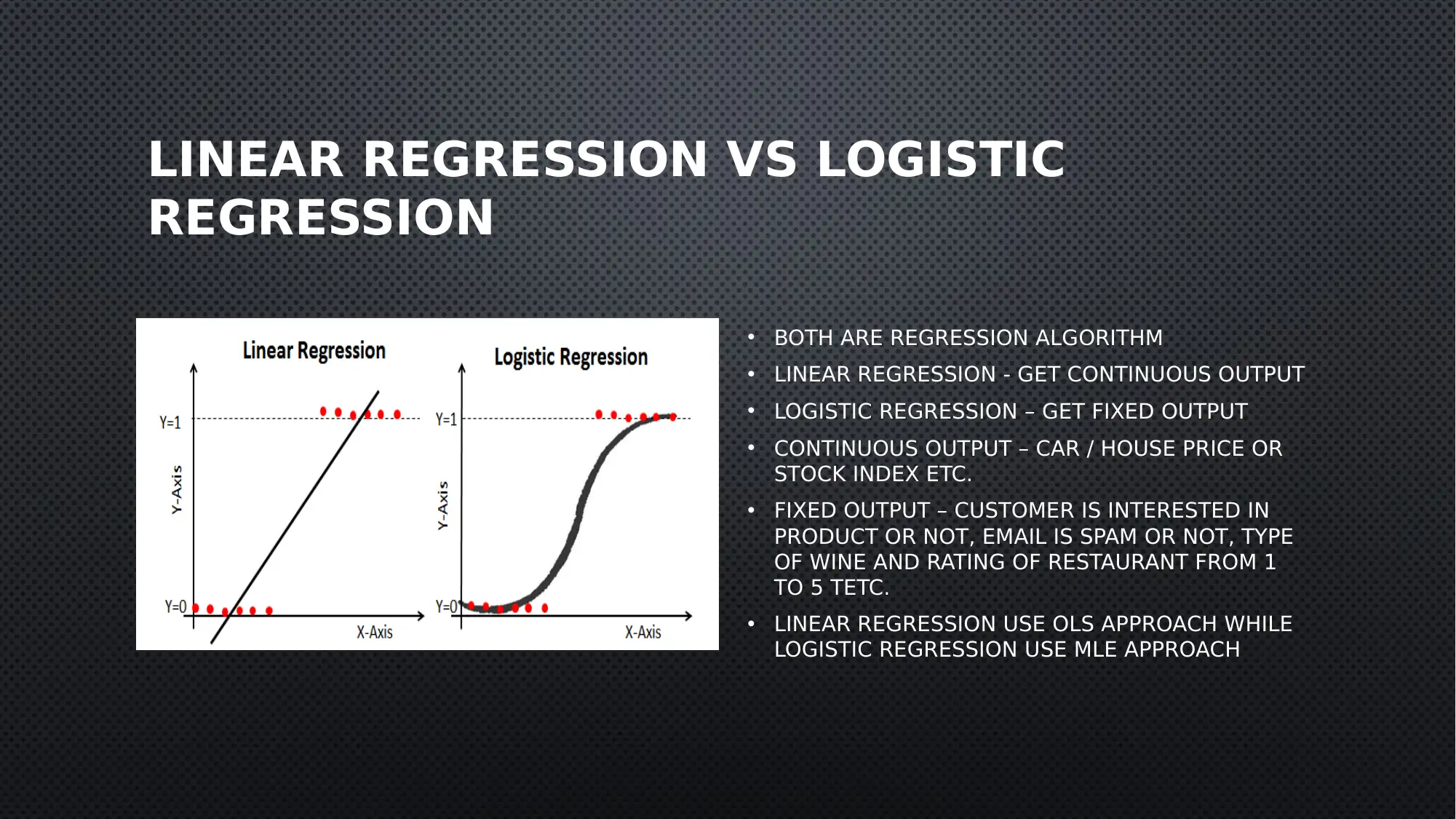

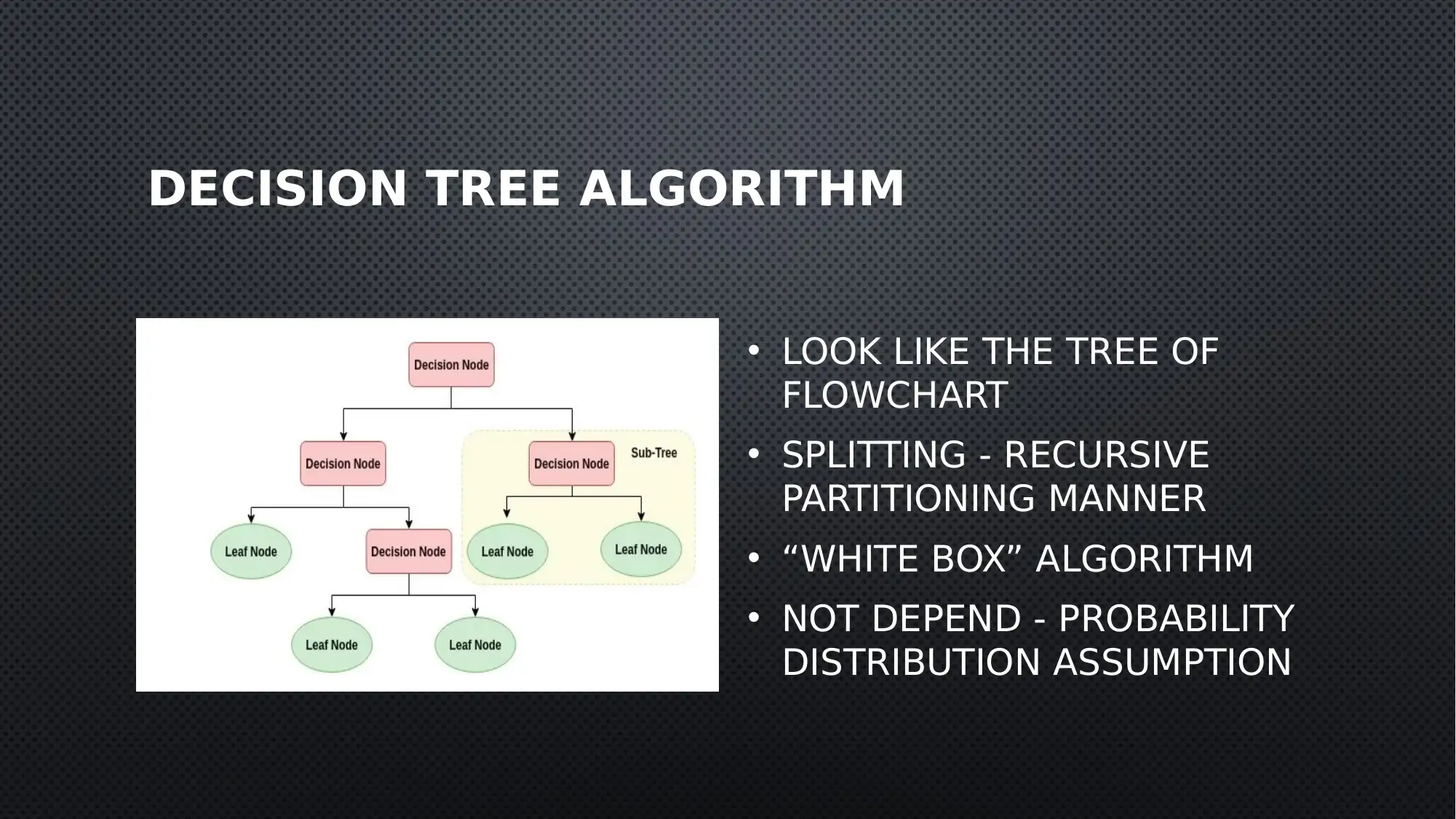

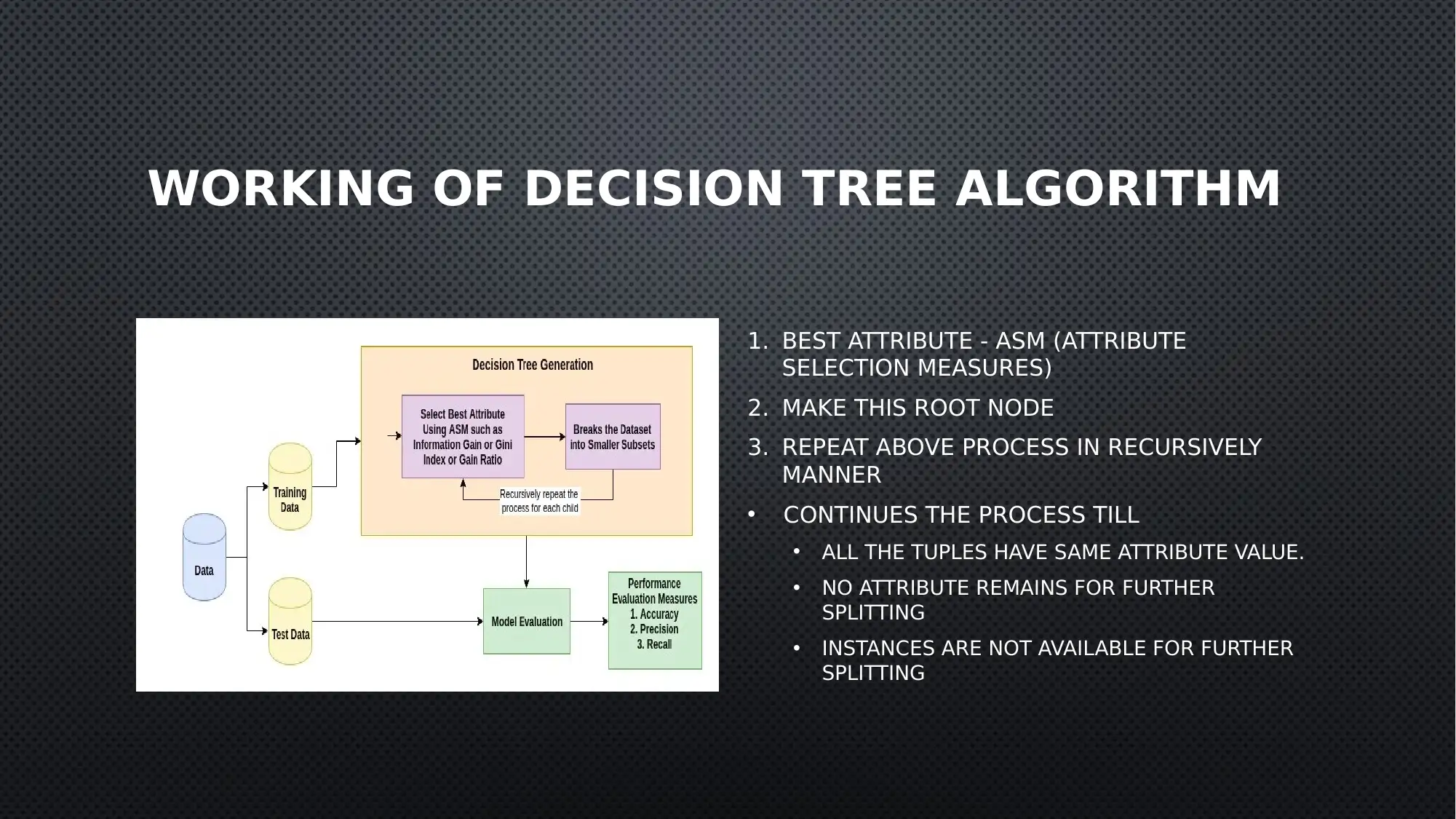

This document compares two widely used classification algorithms: Logistic Regression and Decision Tree. It covers how each works, their advantages and disadvantages, and the key differences between them. Logistic Regression is a linear model suited to predicting binary outcomes, while Decision Tree is a non-linear model whose splits can be read as a visual representation of the decision-making process. The document also discusses Maximum Likelihood Estimation (MLE) and Ordinary Least Squares (OLS) in the context of these algorithms, and examines the role of attribute selection measures such as Information Gain, Gain Ratio, and Gini Index in Decision Tree construction.
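The attribute selection measures named in the summary can be illustrated with a minimal sketch. The code below is not taken from the document: the function names (`entropy`, `gini`, `information_gain`, `gain_ratio`, `gini_index`) and the toy `outlook`/`play` data are assumptions chosen for illustration, and the formulas are the standard textbook definitions for a categorical attribute.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def split_by(values, labels):
    """Group class labels by the value of a categorical attribute."""
    groups = {}
    for v, y in zip(values, labels):
        groups.setdefault(v, []).append(y)
    return list(groups.values())


def information_gain(values, labels):
    """Parent entropy minus the weighted entropy of the child groups."""
    n = len(labels)
    remainder = sum(len(g) / n * entropy(g) for g in split_by(values, labels))
    return entropy(labels) - remainder


def gain_ratio(values, labels):
    """Information gain normalized by the entropy of the attribute itself."""
    split_info = entropy(values)
    return information_gain(values, labels) / split_info if split_info > 0 else 0.0


def gini_index(values, labels):
    """Weighted Gini impurity of the child groups (lower is better)."""
    n = len(labels)
    return sum(len(g) / n * gini(g) for g in split_by(values, labels))


# Hypothetical toy data: the attribute separates the two classes perfectly.
outlook = ["sunny", "sunny", "rain", "rain"]
play = ["no", "no", "yes", "yes"]

print(information_gain(outlook, play))
print(gain_ratio(outlook, play))
print(gini_index(outlook, play))
```

A tree builder would typically evaluate one of these measures for every candidate attribute and split on the one with the highest gain (or gain ratio) or the lowest weighted Gini impurity. Gain ratio exists mainly to offset information gain's tendency to favor attributes with many distinct values.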
