Plagiarism Detection: Methods, Challenges, and Future Directions

VerifiedAdded on 2022/11/15

|19

|4527

|420

Report

AI Summary

This report delves into the critical issue of plagiarism detection, exploring its increasing significance in the academic and research landscape. It begins by defining plagiarism, outlining its various forms (copy and paste, style, idea, word switch, and metaphor plagiarism), and emphasizing the impact of the internet and digital libraries on its prevalence. The core of the report focuses on the application of Singular Value Decomposition (SVD) as a key technique for plagiarism detection, particularly in the context of clustering documents to identify similarities. It explains the SVD process, including the use of the SVDLIBC software and the construction of matrices (U, Σ, VT) for analysis. The report also discusses the rationale behind using SVD to reduce the search time for plagiarized content, and the potential use of neural networks for local matching and differentiation. The problem statement highlights the challenges of plagiarism detection, the limitations of existing tools, and the importance of finding the location of similar content. It examines string and vector similarity metrics used in plagiarism detection, and the need for scalable solutions. The report concludes by emphasizing the ongoing need to address plagiarism, promote academic integrity, and explore advanced detection methods.

Running head: PLAGIARISM DETECTION

Plagiarism Detection

Name of the Student

Name of the University

Author Note

Plagiarism Detection

Name of the Student

Name of the University

Author Note

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

1PLAGIARISM DETECTION

Table of Contents

Chapter 1: Introduction........................................................................................................2

1.1 Background:...............................................................................................................2

1.2 Rationale....................................................................................................................4

1.3 Problem Statement:....................................................................................................6

1.4 Aim, Objectives and research questions:...................................................................9

1.5 Significance of the Research:..................................................................................10

1.6 Research structure:...................................................................................................12

Bibliography:.....................................................................................................................16

Table of Contents

Chapter 1: Introduction........................................................................................................2

1.1 Background:...............................................................................................................2

1.2 Rationale....................................................................................................................4

1.3 Problem Statement:....................................................................................................6

1.4 Aim, Objectives and research questions:...................................................................9

1.5 Significance of the Research:..................................................................................10

1.6 Research structure:...................................................................................................12

Bibliography:.....................................................................................................................16

2PLAGIARISM DETECTION

Chapter 1: Introduction

1.1 Background:

Plagiarism is becoming an important factor for the researchers due to the importance and

the fast growing rates. The primary areas covered by the researchers are the faster search tools

and the effective clustering process1. There are various tools or techniques to determine the

plagiarism in the document. The main reasons for the plagiarism is the development of internet.

The development of the World Wide Web and the digital libraries increased the rate of

plagiarism in the documents. The main concern is that some people just copy and paste their

document without informing the actual owner of the document. The main objective is to use a

fast, efficient and effective plagiarism detector which will detect the plagiarism easily in the

given document. Basically plagiarism is the act off stealing someone’s writing and uploading it

with his her name without the acknowledgement of the actual writer of the document. The

plagiarism can be of five types2: Copy and paste plagiarism, Style plagiarism, idea plagiarism,

Word Switch plagiarism and Metaphor Plagiarism.

Thaw SVD or Singular Value Decomposition is one of the major tools in the application

which is used to retrieve the information. It is considered to the most appropriate technique for

the sparse matrix. There are various theorems in the SVD like: (a) “Let A is an m n rank-r

matrix. Be σ1≥· · ·≥σr Eigen values of a matrix .Then there exist orthogonal matrices U =

(u1 , . . . , ur ) and V = (v1 , . . . , vr ), whose column vectors are orthonormal, and a diagonal

matrix Σ = diag (σ1 , . . . , σr ). The decomposition A = U ΣV T is called singular value

1Franco-Salvador, Marc, Paolo Rosso, and Manuel Montes-y-Gómez. "A systematic study of knowledge graph

analysis for cross-language plagiarism detection." Information Processing & Management 52, no. 4 (2016): 550-

570.

2Miranda-Jiménez, Sabino, and Efstathios Stamatatos. "Automatic Generation of Summary Obfuscation Corpus for

Plagiarism Detection." Acta Polytechnica Hungarica 14, no. 3 (2017).

Chapter 1: Introduction

1.1 Background:

Plagiarism is becoming an important factor for the researchers due to the importance and

the fast growing rates. The primary areas covered by the researchers are the faster search tools

and the effective clustering process1. There are various tools or techniques to determine the

plagiarism in the document. The main reasons for the plagiarism is the development of internet.

The development of the World Wide Web and the digital libraries increased the rate of

plagiarism in the documents. The main concern is that some people just copy and paste their

document without informing the actual owner of the document. The main objective is to use a

fast, efficient and effective plagiarism detector which will detect the plagiarism easily in the

given document. Basically plagiarism is the act off stealing someone’s writing and uploading it

with his her name without the acknowledgement of the actual writer of the document. The

plagiarism can be of five types2: Copy and paste plagiarism, Style plagiarism, idea plagiarism,

Word Switch plagiarism and Metaphor Plagiarism.

Thaw SVD or Singular Value Decomposition is one of the major tools in the application

which is used to retrieve the information. It is considered to the most appropriate technique for

the sparse matrix. There are various theorems in the SVD like: (a) “Let A is an m n rank-r

matrix. Be σ1≥· · ·≥σr Eigen values of a matrix .Then there exist orthogonal matrices U =

(u1 , . . . , ur ) and V = (v1 , . . . , vr ), whose column vectors are orthonormal, and a diagonal

matrix Σ = diag (σ1 , . . . , σr ). The decomposition A = U ΣV T is called singular value

1Franco-Salvador, Marc, Paolo Rosso, and Manuel Montes-y-Gómez. "A systematic study of knowledge graph

analysis for cross-language plagiarism detection." Information Processing & Management 52, no. 4 (2016): 550-

570.

2Miranda-Jiménez, Sabino, and Efstathios Stamatatos. "Automatic Generation of Summary Obfuscation Corpus for

Plagiarism Detection." Acta Polytechnica Hungarica 14, no. 3 (2017).

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

3PLAGIARISM DETECTION

decomposition of matrix A and numbers σ1 , . . . , σr are singular values of the matrix A.

Columns of U (or V ) are called left (or right) singular vectors of matrix A”.

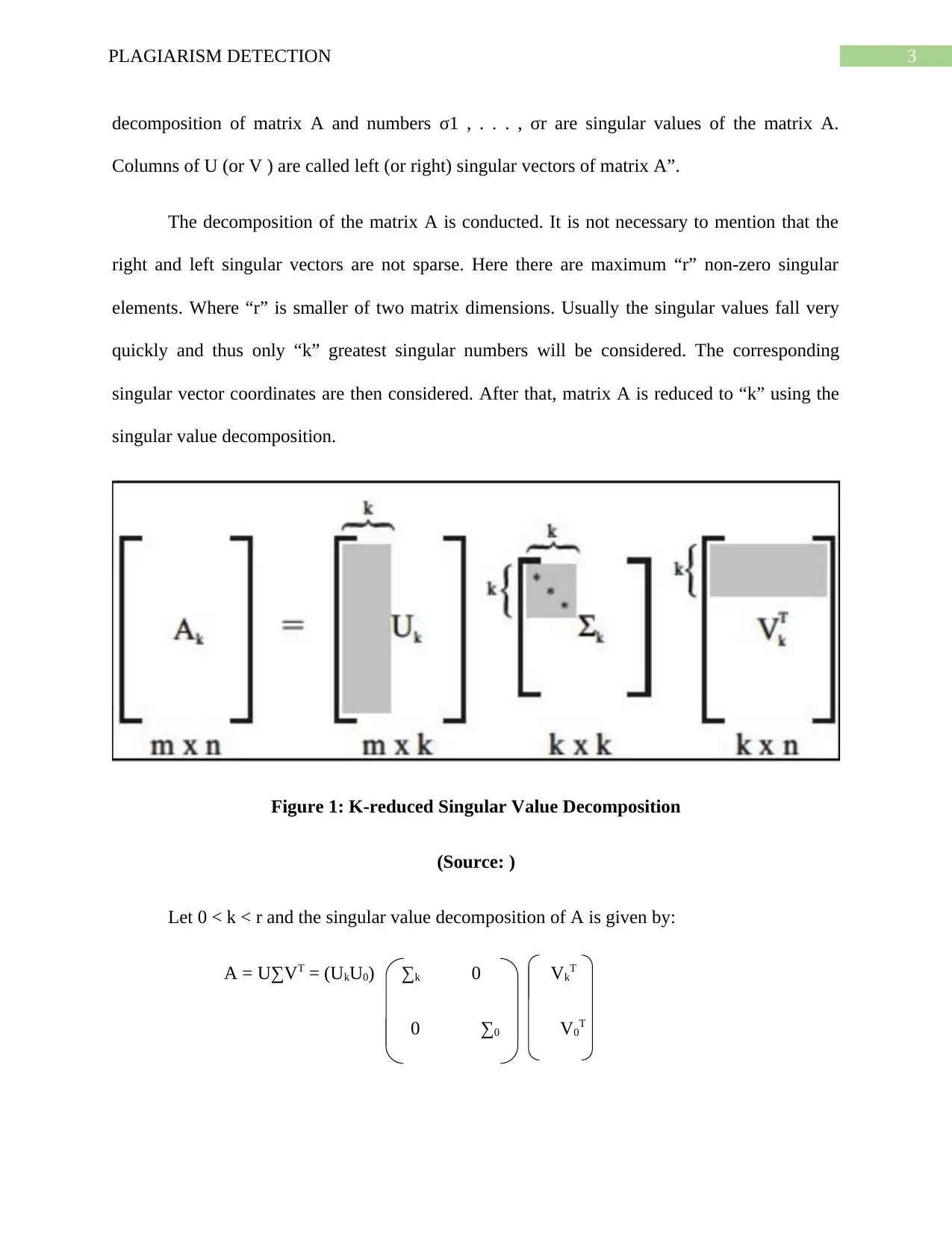

The decomposition of the matrix A is conducted. It is not necessary to mention that the

right and left singular vectors are not sparse. Here there are maximum “r” non-zero singular

elements. Where “r” is smaller of two matrix dimensions. Usually the singular values fall very

quickly and thus only “k” greatest singular numbers will be considered. The corresponding

singular vector coordinates are then considered. After that, matrix A is reduced to “k” using the

singular value decomposition.

Figure 1: K-reduced Singular Value Decomposition

(Source: )

Let 0 < k < r and the singular value decomposition of A is given by:

A = U∑VT = (UkU0) ∑k 0 VkT

0 ∑0 V0T

decomposition of matrix A and numbers σ1 , . . . , σr are singular values of the matrix A.

Columns of U (or V ) are called left (or right) singular vectors of matrix A”.

The decomposition of the matrix A is conducted. It is not necessary to mention that the

right and left singular vectors are not sparse. Here there are maximum “r” non-zero singular

elements. Where “r” is smaller of two matrix dimensions. Usually the singular values fall very

quickly and thus only “k” greatest singular numbers will be considered. The corresponding

singular vector coordinates are then considered. After that, matrix A is reduced to “k” using the

singular value decomposition.

Figure 1: K-reduced Singular Value Decomposition

(Source: )

Let 0 < k < r and the singular value decomposition of A is given by:

A = U∑VT = (UkU0) ∑k 0 VkT

0 ∑0 V0T

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

4PLAGIARISM DETECTION

Ak = UkΣk Vk T is known as the singular value decomposition of rank “k”. In case of the

information retrieval if all the documents are related to a single topic then latent semantics are

obtained3. Moreover the documents will contain same vectors in the small space. The grey areas

in the figure 1 determine first k coordinates from used the singular vectors.

Theorem 2: “Among all m x n matrices C of rank at most k Ak is one that minimize

2

AK - A = ∑i,j (Ai,j – Cw,j )2

F

The SVD determination is the very complex. It represents the decomposition of original matrix.

SVD-updating is the partial solution. Whenever the error rises the updates occur simultaneously.

Thus, the recalculation is required.

1.2 Rationale

The main objective of the plagiarism detector tool is to decrease the time in searching any

plagiarized work in the given document. It also helps to simplify the plagiarized work and help

the author to remove the plagiarized section. Thus the better way to reduce this search time is to

use the clustering mechanism in the plagiarism tool. Singular Value Decomposition is used to

assist clustering mechanism of documents by generating new matrix with less dimensions which

is utilized to cluster the document with the suspected one4. This is the first stage and the second

stage includes usage of the neural networks for the local matching as well as differentiating

among the suspicious document with the source document and also to use the Kohenen maps to

visualize.

3Prado, Bruno, Kalil A. Bispo, and Raul Andrade. "X9: An Obfuscation Resilient Approach for Source Code

Plagiarism Detection in Virtual Learning Environments." In ICEIS (1), pp. 517-524. 2018.

4Abdi, Asad, Norisma Idris, Rasim M. Alguliyev, and Ramiz M. Aliguliyev. "PDLK: Plagiarism detection using

linguistic knowledge." Expert Systems with Applications 42, no. 22 (2015): 8936-8946.

Ak = UkΣk Vk T is known as the singular value decomposition of rank “k”. In case of the

information retrieval if all the documents are related to a single topic then latent semantics are

obtained3. Moreover the documents will contain same vectors in the small space. The grey areas

in the figure 1 determine first k coordinates from used the singular vectors.

Theorem 2: “Among all m x n matrices C of rank at most k Ak is one that minimize

2

AK - A = ∑i,j (Ai,j – Cw,j )2

F

The SVD determination is the very complex. It represents the decomposition of original matrix.

SVD-updating is the partial solution. Whenever the error rises the updates occur simultaneously.

Thus, the recalculation is required.

1.2 Rationale

The main objective of the plagiarism detector tool is to decrease the time in searching any

plagiarized work in the given document. It also helps to simplify the plagiarized work and help

the author to remove the plagiarized section. Thus the better way to reduce this search time is to

use the clustering mechanism in the plagiarism tool. Singular Value Decomposition is used to

assist clustering mechanism of documents by generating new matrix with less dimensions which

is utilized to cluster the document with the suspected one4. This is the first stage and the second

stage includes usage of the neural networks for the local matching as well as differentiating

among the suspicious document with the source document and also to use the Kohenen maps to

visualize.

3Prado, Bruno, Kalil A. Bispo, and Raul Andrade. "X9: An Obfuscation Resilient Approach for Source Code

Plagiarism Detection in Virtual Learning Environments." In ICEIS (1), pp. 517-524. 2018.

4Abdi, Asad, Norisma Idris, Rasim M. Alguliyev, and Ramiz M. Aliguliyev. "PDLK: Plagiarism detection using

linguistic knowledge." Expert Systems with Applications 42, no. 22 (2015): 8936-8946.

5PLAGIARISM DETECTION

Stage 1: It uses the SVD reduction mechanism for the effective clustering of the document.

A graph is used to display the similarity of the source document with the suspicious

document. The row indicates the document and the column of the graph indicates the

attributes of the document. Then data in the adjacency matrix is read and transformed5. This

data has been transformed and analyzed by utilizing the Singular Value Decomposition

Method. The outcome is recorded in three matrixes by computing A = U ∑ VT. Then

Singular Value Decomposition is conducted on the computed and original matrix. The U-

Matrix and the SOMs are used as the learning stage in the Singular Value Decomposition

stage. In the reading and transforming data stage the data are collected from the student

assignment where we obtain a (92 X 10) matrix (Assuming there are 10 assignment copies of

students) in the Singular Value Decomposition, NMF computations and the formal concept.

SVDLIBC is software used in the Singular Value Decomposition to compute three different

matrices of a given matrix. For example in this case it computes U, ∑ and VT. In the

Visualization of result we apply Singular Value Decomposition to original data. We compute

two different SOMs networks with respect to the input data. The Unified matrix (U - matrix)

algorithm is also used to compute and analyze the data of the Singular valued Decomposition

method. In stage 2 the clustering tools can also be used to group all the same documents

which is about the same topic. It is followed by another level of the palgiarism analysis6. The

plagiarism detected by the Singular Value Decomposition should be reduced such that the

duplicity factor is reduced and the plagiarized document is now easily be analyzed and the

detected plagiarism is reduced according to the necessities. This decreases the time for

5Gasparyan, Armen Yuri, Bekaidar Nurmashev, Bakhytzhan Seksenbayev, Vladimir I. Trukhachev, Elena I.

Kostyukova, and George D. Kitas. "Plagiarism in the context of education and evolving detection

strategies." Journal of Korean medical science 32, no. 8 (2017): 1220-1227.

6Kuznetsov, Mikhail P., Anastasia Motrenko, Rita Kuznetsova, and Vadim V. Strijov. "Methods for Intrinsic

Plagiarism Detection and Author Diarization." In CLEF (Working Notes), pp. 912-919. 2016.

Stage 1: It uses the SVD reduction mechanism for the effective clustering of the document.

A graph is used to display the similarity of the source document with the suspicious

document. The row indicates the document and the column of the graph indicates the

attributes of the document. Then data in the adjacency matrix is read and transformed5. This

data has been transformed and analyzed by utilizing the Singular Value Decomposition

Method. The outcome is recorded in three matrixes by computing A = U ∑ VT. Then

Singular Value Decomposition is conducted on the computed and original matrix. The U-

Matrix and the SOMs are used as the learning stage in the Singular Value Decomposition

stage. In the reading and transforming data stage the data are collected from the student

assignment where we obtain a (92 X 10) matrix (Assuming there are 10 assignment copies of

students) in the Singular Value Decomposition, NMF computations and the formal concept.

SVDLIBC is software used in the Singular Value Decomposition to compute three different

matrices of a given matrix. For example in this case it computes U, ∑ and VT. In the

Visualization of result we apply Singular Value Decomposition to original data. We compute

two different SOMs networks with respect to the input data. The Unified matrix (U - matrix)

algorithm is also used to compute and analyze the data of the Singular valued Decomposition

method. In stage 2 the clustering tools can also be used to group all the same documents

which is about the same topic. It is followed by another level of the palgiarism analysis6. The

plagiarism detected by the Singular Value Decomposition should be reduced such that the

duplicity factor is reduced and the plagiarized document is now easily be analyzed and the

detected plagiarism is reduced according to the necessities. This decreases the time for

5Gasparyan, Armen Yuri, Bekaidar Nurmashev, Bakhytzhan Seksenbayev, Vladimir I. Trukhachev, Elena I.

Kostyukova, and George D. Kitas. "Plagiarism in the context of education and evolving detection

strategies." Journal of Korean medical science 32, no. 8 (2017): 1220-1227.

6Kuznetsov, Mikhail P., Anastasia Motrenko, Rita Kuznetsova, and Vadim V. Strijov. "Methods for Intrinsic

Plagiarism Detection and Author Diarization." In CLEF (Working Notes), pp. 912-919. 2016.

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

6PLAGIARISM DETECTION

detecting the plagiarism document and the tool also help to analyze the plagiarized section in

the source document.

1.3 Problem Statement:

With the standardization of the academic journals and articles as well as the availability

of the access to the internet the access to the information became easy for the users7. This lead to

the increased incidents of plagiarism in daily life. Plagiarism can be defined in simple words as

the unethical act of the take or use (whole or partial) another authors research, work , without

proper referencing or citation and claiming the ownerships of the work.

In the field of academics the plagiarism has become most challenging problems when it

comes to the publication of the engineering, scientific as well as papers, documents or articles

published digitally and made available on the internet network.

Due to the abundant availability of scientific papers or information through extensive

utilization of the Internet the issues of plagiarism has been increased extensively. The plagiarism

does not only include the copy of a paper but it additionally includes the rewording, adapting

parts of the paper without reference, missing references or inclusion of the wrong in an article.

The above mentioned issues makes the problem of plagiarism detection more difficult to

manage or handle in a proper manner and strategy. Most of the plagiarism detecting applications

available in the present day are not that much efficient8. Plagiarized papers articles could be

easily bypassed through using simple techniques from those applications.

7Unger, Nik, Sahithi Thandra, and Ian Goldberg. "Elxa: Scalable Privacy-Preserving Plagiarism Detection."

In Proceedings of the 2016 ACM on Workshop on Privacy in the Electronic Society, pp. 153-164. ACM, 2016.

8Daud, Ali, Jamal Ahmad Khan, Jamal Abdul Nasir, Rabeeh Ayaz Abbasi, Naif Radi Aljohani, and Jalal S.

Alowibdi. "Latent Dirichlet Allocation and POS Tags based method for external plagiarism detection: LDA and

POS tags based plagiarism detection." International Journal on Semantic Web and Information Systems

(IJSWIS) 14, no. 3 (2018): 53-69.

detecting the plagiarism document and the tool also help to analyze the plagiarized section in

the source document.

1.3 Problem Statement:

With the standardization of the academic journals and articles as well as the availability

of the access to the internet the access to the information became easy for the users7. This lead to

the increased incidents of plagiarism in daily life. Plagiarism can be defined in simple words as

the unethical act of the take or use (whole or partial) another authors research, work , without

proper referencing or citation and claiming the ownerships of the work.

In the field of academics the plagiarism has become most challenging problems when it

comes to the publication of the engineering, scientific as well as papers, documents or articles

published digitally and made available on the internet network.

Due to the abundant availability of scientific papers or information through extensive

utilization of the Internet the issues of plagiarism has been increased extensively. The plagiarism

does not only include the copy of a paper but it additionally includes the rewording, adapting

parts of the paper without reference, missing references or inclusion of the wrong in an article.

The above mentioned issues makes the problem of plagiarism detection more difficult to

manage or handle in a proper manner and strategy. Most of the plagiarism detecting applications

available in the present day are not that much efficient8. Plagiarized papers articles could be

easily bypassed through using simple techniques from those applications.

7Unger, Nik, Sahithi Thandra, and Ian Goldberg. "Elxa: Scalable Privacy-Preserving Plagiarism Detection."

In Proceedings of the 2016 ACM on Workshop on Privacy in the Electronic Society, pp. 153-164. ACM, 2016.

8Daud, Ali, Jamal Ahmad Khan, Jamal Abdul Nasir, Rabeeh Ayaz Abbasi, Naif Radi Aljohani, and Jalal S.

Alowibdi. "Latent Dirichlet Allocation and POS Tags based method for external plagiarism detection: LDA and

POS tags based plagiarism detection." International Journal on Semantic Web and Information Systems

(IJSWIS) 14, no. 3 (2018): 53-69.

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

7PLAGIARISM DETECTION

Finding the location of the consecutive similar content is a significant method for

plagiarism detection. It is required to give the situation of the copied substance in the archive

notwithstanding figuring the report likeness. By-word examination is a typical finding technique.

It finds out a section as an examination unit, and parts it into a gathering of back to back words.

The situation of the words in the paragraph is recorded9. At that point utilizing by-word

examination, the longest generally progressive word can be recognized. In the end, the substance

and position of the comparable content in the report can be gotten.

String Similarity Metric: This similarity metric is utilized by outward plagiarism

detection application. Hamming separation is an outstanding case of this metric which appraisals

number of characters distinctive between two strings x and y of equivalent length is another

model, that characterizes least alter separation which change x into y, also, Longest Common

Subsequence measures the length of the longest lending of characters between a couple of

strings, x and y as for the request of the characters.

Vector Similarity Metric: Over the decade, a great number of vector comparability

measurements have been presented. A vector based closeness metric is helpful in computing

similitude between two unique records. Coordinating Coefficient is such a measurement that

ascertains likeness between two equivalent length vectors10. Jaccard Coefficient is creator such

measurement used to characterize number of shared terms against absolute number of terms

between two indistinguishable vectors, Dice Coefficient is like Jaccard yet it diminishes the

quantity of shared terms, Overlap Coefficient can register likeness as far as subset coordinating,

9Desai, Takshak, Udit Deshmukh, Mihir Gandhi, and Lakshmi Kurup. "A hybrid approach for detection of

plagiarism using natural language processing." In Proceedings of the Second International Conference on

Information and Communication Technology for Competitive Strategies, p. 6. ACM, 2016.

10Meuschke, Norman, Vincent Stange, Moritz Schubotz, Michael Karmer, and Bela Gipp. "Improving academic

plagiarism detection for STEM documents by analyzing mathematical content and citations." arXiv preprint

arXiv:1906.11761 (2019).

Finding the location of the consecutive similar content is a significant method for

plagiarism detection. It is required to give the situation of the copied substance in the archive

notwithstanding figuring the report likeness. By-word examination is a typical finding technique.

It finds out a section as an examination unit, and parts it into a gathering of back to back words.

The situation of the words in the paragraph is recorded9. At that point utilizing by-word

examination, the longest generally progressive word can be recognized. In the end, the substance

and position of the comparable content in the report can be gotten.

String Similarity Metric: This similarity metric is utilized by outward plagiarism

detection application. Hamming separation is an outstanding case of this metric which appraisals

number of characters distinctive between two strings x and y of equivalent length is another

model, that characterizes least alter separation which change x into y, also, Longest Common

Subsequence measures the length of the longest lending of characters between a couple of

strings, x and y as for the request of the characters.

Vector Similarity Metric: Over the decade, a great number of vector comparability

measurements have been presented. A vector based closeness metric is helpful in computing

similitude between two unique records. Coordinating Coefficient is such a measurement that

ascertains likeness between two equivalent length vectors10. Jaccard Coefficient is creator such

measurement used to characterize number of shared terms against absolute number of terms

between two indistinguishable vectors, Dice Coefficient is like Jaccard yet it diminishes the

quantity of shared terms, Overlap Coefficient can register likeness as far as subset coordinating,

9Desai, Takshak, Udit Deshmukh, Mihir Gandhi, and Lakshmi Kurup. "A hybrid approach for detection of

plagiarism using natural language processing." In Proceedings of the Second International Conference on

Information and Communication Technology for Competitive Strategies, p. 6. ACM, 2016.

10Meuschke, Norman, Vincent Stange, Moritz Schubotz, Michael Karmer, and Bela Gipp. "Improving academic

plagiarism detection for STEM documents by analyzing mathematical content and citations." arXiv preprint

arXiv:1906.11761 (2019).

8PLAGIARISM DETECTION

Cosine Coefficient to discover the cosine edge between two vectors, Euclidean Distance the

geometric separation between two vectors, Squared Euclidean Distance places more prominent

load on that are further separated, and Manhattan Distance can assess the normal distinction

crosswise over measurements and yields results like the straightforward euclidean separation.

Most of this applications employs comprehensive sentence based comparison in order to

detect plagiarism for the targeted paper. This technique for plagiarism detection is not scalable

for the diverse and large set of papers or articles11. In this plagiarism detecting applications

whenever some paper, article or document is compared with a registered document for the

similarity check an information retrieval method is used in order to preprocess the targeted

documents so that the semantic meaning of the information contained in the paper can be

extracted. If a match for the subject is found then the comparison to different papers of different

other subjects is not required and could be avoided.

Difficulties to the ethics in the field of research will proceed to advance as the Internet

makes it simple for every user to copy some information and paste that in their papers to claim it

as their own. Exertion should keep on assessing research articles and expositions in order to look

for answers for the security of protected innovation of individuals and to find deceiving and

literary theft in all work, beginning from students assignments to research work.

Joint efforts between research bodies' the board and programming designers should keep

on beat any challenges and fix any bug in plagiarism detecting applications. In future research,

consideration ought to be paid to advise all understudies just as specialists that their work will be

exposed to checking, and they ought to be prepared to utilize the accessible to check their work

11Ferrero, Jérémy, Laurent Besacier, Didier Schwab, and Frédéric Agnes. "Deep Investigation of Cross-Language

Plagiarism Detection Methods." arXiv preprint arXiv:1705.08828 (2017).

Cosine Coefficient to discover the cosine edge between two vectors, Euclidean Distance the

geometric separation between two vectors, Squared Euclidean Distance places more prominent

load on that are further separated, and Manhattan Distance can assess the normal distinction

crosswise over measurements and yields results like the straightforward euclidean separation.

Most of this applications employs comprehensive sentence based comparison in order to

detect plagiarism for the targeted paper. This technique for plagiarism detection is not scalable

for the diverse and large set of papers or articles11. In this plagiarism detecting applications

whenever some paper, article or document is compared with a registered document for the

similarity check an information retrieval method is used in order to preprocess the targeted

documents so that the semantic meaning of the information contained in the paper can be

extracted. If a match for the subject is found then the comparison to different papers of different

other subjects is not required and could be avoided.

Difficulties to the ethics in the field of research will proceed to advance as the Internet

makes it simple for every user to copy some information and paste that in their papers to claim it

as their own. Exertion should keep on assessing research articles and expositions in order to look

for answers for the security of protected innovation of individuals and to find deceiving and

literary theft in all work, beginning from students assignments to research work.

Joint efforts between research bodies' the board and programming designers should keep

on beat any challenges and fix any bug in plagiarism detecting applications. In future research,

consideration ought to be paid to advise all understudies just as specialists that their work will be

exposed to checking, and they ought to be prepared to utilize the accessible to check their work

11Ferrero, Jérémy, Laurent Besacier, Didier Schwab, and Frédéric Agnes. "Deep Investigation of Cross-Language

Plagiarism Detection Methods." arXiv preprint arXiv:1705.08828 (2017).

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

9PLAGIARISM DETECTION

before submission12. This can establish an essential degree of morals among them all, and would

further limit the exertion of checking.

1.4 Aim, Objectives and research questions:

Aim of the Research:

The aim of this research paper will be addressing and examining the issues important to

plagiarism detection as it is one of the most pitched types of content reuse around the world

today. This paper covers the various sorts of copyright infringement, various kinds of detection

strategies and general methods which are useful to the exploration researchers. The freely

available and accessible plagiarism detection tools and their working mechanism will be also

discussed and explored in the paper.

Objectives:

The objective of the research is to find out the most efficient and accurate plagiarism

detection method that can be used in order to ensured that the authors can secure their ownership

on the original piece of work.

Research Questions:

Detriment a plagiarism detection method for text data as well as source code which can

be capable of ensuring completeness and originality of an source of piece of work

Determine the proximity measure which can guarantee the detection of plagiarized

segments in both intrinsic and extrinsic way.

12Shen, Victor RL. "Novel Code Plagiarism Detection Based on Abstract Syntax Tree and Fuzzy Petri

Nets." International Journal of Engineering Education 1, no. 1 (2019).

before submission12. This can establish an essential degree of morals among them all, and would

further limit the exertion of checking.

1.4 Aim, Objectives and research questions:

Aim of the Research:

The aim of this research paper will be addressing and examining the issues important to

plagiarism detection as it is one of the most pitched types of content reuse around the world

today. This paper covers the various sorts of copyright infringement, various kinds of detection

strategies and general methods which are useful to the exploration researchers. The freely

available and accessible plagiarism detection tools and their working mechanism will be also

discussed and explored in the paper.

Objectives:

The objective of the research is to find out the most efficient and accurate plagiarism

detection method that can be used in order to ensured that the authors can secure their ownership

on the original piece of work.

Research Questions:

Detriment a plagiarism detection method for text data as well as source code which can

be capable of ensuring completeness and originality of an source of piece of work

Determine the proximity measure which can guarantee the detection of plagiarized

segments in both intrinsic and extrinsic way.

12Shen, Victor RL. "Novel Code Plagiarism Detection Based on Abstract Syntax Tree and Fuzzy Petri

Nets." International Journal of Engineering Education 1, no. 1 (2019).

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

10PLAGIARISM DETECTION

Finding out and proposing a method for cross-lingual plagiarism checking which will be

capable of finding plagiarism without external references while ensuring high accuracy.

1.5 Significance of the Research:

In the current generation academic integrity is very much important as it provides value

to the achieved degree of the students. Also, the employers prefers to hire the graduates whom

they believe to have higher integrity of the academic rules and regulation13. In the current time to

check the academic integrity one of the easiest way is the detection of the plagiarism. To do this

various of methods for detecting the plagiarism can be used in this aspect. In this aspect

plagiarism detection using the Kohonen Maps and Singular Value Decomposition has been

selected for the research. This research is having a quite importance in the academic field as this

will be detecting the plagiarism issue in the submitted papers of the students in more accurate

way. In the current situation many of the academic organizations uses the internet search engine

for the detection of the plagiarism. Often it has been seen that this type of plagiarism detection is

not efficient at all and miss many of the plagiarized content. Thus this type of plagiarism

detecting program is required which will be efficiently detecting the plagiarized contents. The

proposed plagiarism detecting mechanism will be offering more sources which includes large

databases and periodical books which might not be available in the online environment14. In this

way the selected research on the Kohonen Maps and Singular Value Decomposition for detection

of the plagiarism will be able to detecting the plagiarism in more refined way so that better

academic integrity can be maintained. In this aspect this research is having a significant

importance.

13Jhi, Yoon-Chan, Xiaoqi Jia, Xinran Wang, Sencun Zhu, Peng Liu, and Dinghao Wu. "Program characterization

using runtime values and its application to software plagiarism detection." IEEE Transactions on Software

Engineering 41, no. 9 (2015): 925-943.

14Zrnec, Aljaž, and Dejan Lavbič. "Social network aided plagiarism detection." British Journal of Educational

Technology 48, no. 1 (2017): 113-128.

Finding out and proposing a method for cross-lingual plagiarism checking which will be

capable of finding plagiarism without external references while ensuring high accuracy.

1.5 Significance of the Research:

In the current generation academic integrity is very much important as it provides value

to the achieved degree of the students. Also, the employers prefers to hire the graduates whom

they believe to have higher integrity of the academic rules and regulation13. In the current time to

check the academic integrity one of the easiest way is the detection of the plagiarism. To do this

various of methods for detecting the plagiarism can be used in this aspect. In this aspect

plagiarism detection using the Kohonen Maps and Singular Value Decomposition has been

selected for the research. This research is having a quite importance in the academic field as this

will be detecting the plagiarism issue in the submitted papers of the students in more accurate

way. In the current situation many of the academic organizations uses the internet search engine

for the detection of the plagiarism. Often it has been seen that this type of plagiarism detection is

not efficient at all and miss many of the plagiarized content. Thus this type of plagiarism

detecting program is required which will be efficiently detecting the plagiarized contents. The

proposed plagiarism detecting mechanism will be offering more sources which includes large

databases and periodical books which might not be available in the online environment14. In this

way the selected research on the Kohonen Maps and Singular Value Decomposition for detection

of the plagiarism will be able to detecting the plagiarism in more refined way so that better

academic integrity can be maintained. In this aspect this research is having a significant

importance.

13Jhi, Yoon-Chan, Xiaoqi Jia, Xinran Wang, Sencun Zhu, Peng Liu, and Dinghao Wu. "Program characterization

using runtime values and its application to software plagiarism detection." IEEE Transactions on Software

Engineering 41, no. 9 (2015): 925-943.

14Zrnec, Aljaž, and Dejan Lavbič. "Social network aided plagiarism detection." British Journal of Educational

Technology 48, no. 1 (2017): 113-128.

11PLAGIARISM DETECTION

Also, this type of plagiarism detection technique will be generating a plagiarism report in

which percentage of the overall plagiarism will be mentioned. In this way removing the

plagiarism will become easy for the students and total originality of the paper can be identified15.

This similarity percentage also have a significance for the academic organizations and the

universities. Most of the universities have a standard percentage of plagiarism up to which

plagiarism is accepted. Beyond that no more plagiarism is accepted. Upon successful research

this Kohonen Maps and Singular Value Decomposition technique of plagiarism detection will be

providing a proper percentage of the similarity. For this reason this research is having a greater

significance in this context.

This research will be also providing invaluable educational aids. This Kohonen Maps and

Singular Value Decomposition method of plagiarism detection will be providing a proper type of

plagiarism report and will be highlighting plagiarized content that are not cited properly. In this

aspect the lecturer or the instructor can easily find which student is accused for the plagiarism

and can help those students to appropriately cite the references so that plagiarism can be avoided.

In this way this research will be providing an invaluable educational aids which is quite

significant.

This research will be also offer learners to get more educational experience. Here the

learners who are more aware about the consequence of the plagiarism will likely to be going to

have better success in their academic careers16. In this way plagiarism can be checked of the e-

learners so that ethical and moral boundaries can be created with the respect to the content they

15Gupta, Deepa. "Study on Extrinsic Text Plagiarism Detection Techniques and Tools." Journal of Engineering

Science & Technology Review 9, no. 5 (2016).

16Tian, Zhenzhou, Qinghua Zheng, Ting Liu, Ming Fan, Eryue Zhuang, and Zijiang Yang. "Software plagiarism

detection with birthmarks based on dynamic key instruction sequences." IEEE Transactions on Software

Engineering 41, no. 12 (2015): 1217-1235.

Also, this type of plagiarism detection technique will be generating a plagiarism report in

which percentage of the overall plagiarism will be mentioned. In this way removing the

plagiarism will become easy for the students and total originality of the paper can be identified15.

This similarity percentage also have a significance for the academic organizations and the

universities. Most of the universities have a standard percentage of plagiarism up to which

plagiarism is accepted. Beyond that no more plagiarism is accepted. Upon successful research

this Kohonen Maps and Singular Value Decomposition technique of plagiarism detection will be

providing a proper percentage of the similarity. For this reason this research is having a greater

significance in this context.

This research will be also providing invaluable educational aids. This Kohonen Maps and

Singular Value Decomposition method of plagiarism detection will be providing a proper type of

plagiarism report and will be highlighting plagiarized content that are not cited properly. In this

aspect the lecturer or the instructor can easily find which student is accused for the plagiarism

and can help those students to appropriately cite the references so that plagiarism can be avoided.

In this way this research will be providing an invaluable educational aids which is quite

significant.

This research will be also offer learners to get more educational experience. Here the

learners who are more aware about the consequence of the plagiarism will likely to be going to

have better success in their academic careers16. In this way plagiarism can be checked of the e-

learners so that ethical and moral boundaries can be created with the respect to the content they

15Gupta, Deepa. "Study on Extrinsic Text Plagiarism Detection Techniques and Tools." Journal of Engineering

Science & Technology Review 9, no. 5 (2016).

16Tian, Zhenzhou, Qinghua Zheng, Ting Liu, Ming Fan, Eryue Zhuang, and Zijiang Yang. "Software plagiarism

detection with birthmarks based on dynamic key instruction sequences." IEEE Transactions on Software

Engineering 41, no. 12 (2015): 1217-1235.

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

1 out of 19

Your All-in-One AI-Powered Toolkit for Academic Success.

+13062052269

info@desklib.com

Available 24*7 on WhatsApp / Email

![[object Object]](/_next/static/media/star-bottom.7253800d.svg)

Unlock your academic potential

Copyright © 2020–2026 A2Z Services. All Rights Reserved. Developed and managed by ZUCOL.