King's College: Predictive Maintenance for Industrial Machines Report

Added on 2023/06/11

Report

AI Summary

This report focuses on predictive maintenance for industrial machines, leveraging the capabilities of Artificial Neural Networks (ANN) to predict potential malfunctions. It begins with an introduction to predictive maintenance and its importance in modern industries, emphasizing the need for proactive measures to prevent equipment failures and reduce operational costs. The report provides a technical overview of predictive maintenance, discussing methods such as vibration signature analysis and failure mode analysis. It then delves into the application of machine learning algorithms, particularly ANN, for function approximation, clustering, and forecasting. The study includes a literature survey, a discussion of various machine learning algorithms, and a detailed exploration of ANN's technical aspects, design, and methodology. The methodology section covers fuzzy-based decision systems and classification techniques. The report also includes a comparative study of different classification algorithms, evaluation results, and a comprehensive analysis of the findings, including comparisons of classification accuracy and false alarm rates. The conclusion summarizes the key findings and the effectiveness of ANN in predictive maintenance.

PREDICTIVE MAINTENANCE FOR INDUSTRIAL MACHINES USING ARTIFICIAL NEURAL NETWORK

Abstract:

Predictive analysis plays a vital role across industries because of the scale and complexity of the data involved. Complex information retrieval and categorization systems are needed to process queries and to filter, store, and organize huge amounts of data, much of it text. Once datasets become large, many algorithms no longer perform well. For example, an algorithm that must constantly load data into memory may run out of memory on large datasets. Prediction is also important in today's industry because it can reduce heavy asset losses for the organization. It is valuable in every field, since it helps stop the cause of an error before any damaging event occurs. Predictive maintenance is a method that uses direct monitoring of the mechanical condition of plant equipment to determine the actual mean time to malfunction for each machine of interest. Based on the mechanical construction of the equipment, we can estimate the faults that could occur in a machine and the time at which they could become critical. This prediction must be done effectively, and for this purpose we turn to machine learning, whose algorithms are increasingly shaping the future. This dissertation deals with the Artificial Neural Network (ANN), which can be better suited to prediction than other algorithms because it performs well at function approximation, clustering, and forecasting. Finally, ANN is compared with other algorithms in terms of accuracy, precision, and specification.

Table of contents

1 Predictive Maintenance

1.1 Introduction

1.2 Technical Overview

1.3 Objective

1.4 Problem Formulation

1.5 Research Outcome

1.6 Research impact

2 Literature Survey

2.1 Background

2.2 Literature

3 Machine Learning Algorithms

3.1 Theoretical Background

3.2 Dimension Reduction Techniques

3.2.1 Feature selection

3.2.2 Sequential Forward Selection

3.2.3 Sequential Backward Selection

3.2.4 Feature Extraction

3.3 Machine Learning Algorithms

3.3.1 k-Nearest Neighbor

3.3.2 Support Vector Machines (SVM)

3.3.3 Random Forest

3.3.4 Naïve Bayes

3.3.5 AdaBoost

4 Artificial Neural Network

4.1 Technical overview

4.2 Specification

4.3 Design

4.4 Methodology

4.4.1 Fuzzy based decision system

5 Classification Techniques

5.1 Comparative study

5 Result

5.1 Evaluation

5.2 Analysis

6 Conclusion

References

Figures

Figure 1: Manufacturing industry quality and analysis

Figure 2: Predictive analysis with the degree of intelligence

Figure 3: General process in the Artificial neural network

Figure 4: Feature Selection

Figure 5: Xn set of independent features or dimensions are reduced to Yn

Figure 6: k-Nearest Neighbour. Modified from k-Nearest Neighbour and Dynamic Time Warping (2016)

Figure 7: Classification based on linear SVM

Figure 8: Classification based on Hard SVM

Figure 9: Schematic of AdaBoost

Figure 10: 2-dimensional neural network model

Figure 11: Kohonen self-organizing feature map

Figure 12: Block diagram for the fuzzy logical system

Figure 13: Comparison of Classification accuracy

Figure 14: Prediction of false alarm rate

Tables

Table 1: Advantages of different classification algorithms

Table 2: Feature comparisons

Table 3: Comparison of Classification Algorithms

Table 4: Comparison of classifiers employing the method of cross validation

Chapter 1: Predictive Maintenance

1.1 Introduction:

In this modern era, new technologies, innovations, and developments are increasing day by day, leading to the mass production of voluminous data. This huge volume of data is highly informative and supports the enhancement of manufacturing processes in various industries. Prediction is valuable in every field, since it helps stop the cause of an error before any damaging event occurs, which can protect the assets of a production industry. Prediction is particularly well suited to health monitoring, where it helps to continuously monitor a patient's health without the need for a caretaker nearby. Motivated by quality of life and less expensive healthcare, the existing healthcare system should shift towards a home-centered setting and from dealing with illness to preserving wellness. An innovative user-centered preventive healthcare model can be regarded as a promising solution for this transformation. It does not replace traditional healthcare but rather steers it towards this technology. The technology used in pervasive healthcare can be considered from two aspects: i) as pervasive computing tools for healthcare, and ii) as enabling healthcare anywhere, anytime, and for anyone. It has progressed on the basis of biomedical engineering (BE), medical informatics (MI), and pervasive computing. Biomedical engineering integrates engineering and medical science and helps improve the equipment used in hospitals. Medical informatics comprises a huge amount of medical resources and aims to enhance the storage, retrieval, and use of these resources in healthcare [1]. Advances have been made in monitoring patients' health and providing the details to caretakers in remote locations. This can be done in real time with the help of internet access. Because the patient is monitored in real time, the caretaker can offer suggestions regarding the patient's vital signs through a video conference.

Another key aspect of predictive maintenance is condition monitoring, or condition-based maintenance. Condition-based monitoring analyzes a machine without interrupting its regular work. Monitoring the condition of equipment is also a decision-making strategy that can avoid faults or failures that would otherwise occur in the near future in that equipment and its components. This is similar to the prognostics and health monitoring (PHM) described above. Prognostics is simply the analysis of the situation that could arise for the patient; for machines, the corresponding notion is Remaining Useful Life (RUL). The latest advances in computerized control, information techniques, and communication networks have made it possible to accumulate large amounts of operating and process-condition data, which can be harvested to build automated Fault Detection and Diagnosis (FDD) and to develop more resourceful approaches to intelligent preventive maintenance, also termed predictive maintenance.

According to estimates, about 20 to 30 percent of the equipment that is periodically monitored for predictive maintenance suffers effects on production and quality and has to be examined regularly. In fact, monitoring equipment weekly or monthly is not enough to detect certain abnormalities in the machines [2]. Moving from periodic to continuous monitoring could considerably lower expenditure on the machines. This saving could come from on-line monitoring systems based on a PC and an accelerometer. An Artificial Neural Network (ANN) can be used effectively in this kind of evaluation to detect abnormal patterns, for sensor validation, and for trend evaluation. This chapter discusses the effect of predictive maintenance in industry and the ANN methods that can help with maintenance.

1.2 Technical Overview:

Predictive maintenance is a method that uses direct monitoring of the mechanical condition of plant equipment to determine the actual mean time to malfunction for each machine of interest [3]. Based on the mechanical construction of the equipment, we can estimate the faults that could occur in a machine and the time at which they could become critical. With the help of predictive maintenance, we can identify equipment that is likely to be seriously affected before the failure happens, so that information is available before any hazardous situation arises in the equipment. One of the most widely used techniques for this estimation is vibration signature analysis, which lets us predict the mechanical condition of the machines. However, this method alone cannot be used to estimate mechanical failure, because it does not cover the oil lubrication condition, axis displacement, and many other parameters. Another method, failure mode analysis, can assess the components, and the magnitudes it yields can reveal fault evolution and machine operating conditions.
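As an illustration of vibration signature analysis, the following is a minimal sketch, not the method used in this report. It computes a magnitude spectrum with a naive discrete Fourier transform and picks the dominant frequency. The sampling rate, the synthetic signal, and the function names `magnitude_spectrum` and `dominant_frequency` are assumptions made for the example.

```python
import cmath
import math


def magnitude_spectrum(signal, sample_rate):
    """Return (frequency_hz, amplitude) pairs from a naive discrete Fourier transform."""
    n = len(signal)
    spectrum = []
    for k in range(n // 2):  # keep only frequencies below the Nyquist limit
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(signal))
        # Scale so that a sine of amplitude A shows up with amplitude A.
        amplitude = abs(coeff) / n if k == 0 else 2 * abs(coeff) / n
        spectrum.append((k * sample_rate / n, amplitude))
    return spectrum


def dominant_frequency(signal, sample_rate):
    """Frequency of the strongest non-DC component of the signal."""
    return max(magnitude_spectrum(signal, sample_rate)[1:], key=lambda p: p[1])[0]


# Hypothetical accelerometer signal: a 25 Hz component plus a weaker 60 Hz one.
SAMPLE_RATE = 200  # samples per second (assumed)
signal = [
    1.0 * math.sin(2 * math.pi * 25 * t / SAMPLE_RATE)
    + 0.3 * math.sin(2 * math.pi * 60 * t / SAMPLE_RATE)
    for t in range(200)
]

peak_hz = dominant_frequency(signal, SAMPLE_RATE)
print(f"dominant vibration frequency: {peak_hz} Hz")
```

A shift in the dominant frequency, or the appearance of new peaks between successive measurements, is the kind of change a monitoring system would flag for closer inspection. In practice a fast Fourier transform would replace the naive transform for longer signals.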

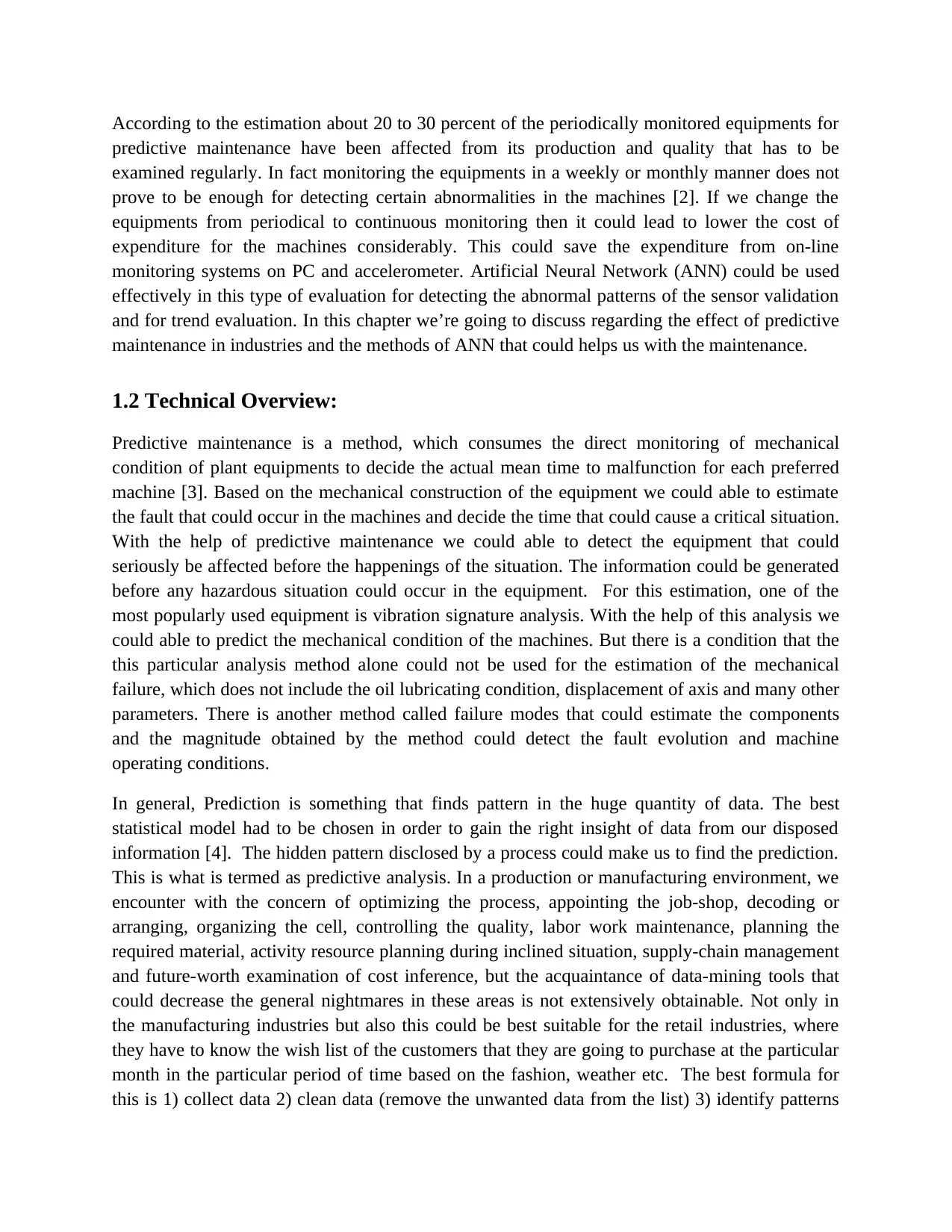

In general, prediction means finding patterns in a huge quantity of data. The best statistical model has to be chosen in order to gain the right insight from the information at our disposal [4]. The hidden pattern disclosed by a process lets us arrive at the prediction. This is what is termed predictive analysis. In a production or manufacturing environment, we encounter concerns such as optimizing the process, job-shop scheduling, sequencing, cell organization, quality control, workforce maintenance, material requirements planning, activity resource planning under lean conditions, supply-chain management, and future-worth analysis of cost estimates, but knowledge of data-mining tools that could reduce the common nightmares in these areas is not widely available. Predictive analysis suits not only manufacturing but also retail, where sellers need to know which items customers are likely to purchase in a particular month or period, based on fashion, weather, and so on. The best formula for this is 1) collect data 2) clean data (remove the unwanted data from the list) 3) identify patterns

(group the data into lists of similar items based on the insights) and finally 4) make predictions (foresight).
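The four steps (collect, clean, identify patterns, predict) can be sketched as follows. This is an illustrative example under stated assumptions, not the procedure used in this report: the bearing-temperature readings, the plausibility range, the 90 °C limit, and the choice of a straight-line trend as the "pattern" are all hypothetical.

```python
def clean(readings):
    """Step 2: drop missing values and physically implausible readings."""
    return [(t, v) for t, v in readings if v is not None and 0.0 <= v <= 200.0]


def fit_trend(points):
    """Step 3: least-squares line v = slope * t + intercept through the points."""
    n = len(points)
    mean_t = sum(t for t, _ in points) / n
    mean_v = sum(v for _, v in points) / n
    slope = (sum((t - mean_t) * (v - mean_v) for t, v in points)
             / sum((t - mean_t) ** 2 for t, _ in points))
    return slope, mean_v - slope * mean_t


def hours_until_limit(slope, intercept, limit):
    """Step 4: time at which the trend line reaches the limit, or None if not rising."""
    if slope <= 0:
        return None
    return (limit - intercept) / slope


# Step 1: hypothetical bearing temperatures as (hour, degrees Celsius).
readings = [(0, 60.0), (1, 62.0), (2, None), (3, 66.0), (4, 999.0), (5, 70.0)]

cleaned = clean(readings)                  # removes the None and the 999.0 outlier
slope, intercept = fit_trend(cleaned)
eta = hours_until_limit(slope, intercept, limit=90.0)
print(f"trend: {slope:.2f} degC/hour, limit reached at hour {eta:.1f}")
```

A real system would use richer patterns than a single straight line, but the division of work between the four steps stays the same.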

Its related forms include predictive modeling, decision analysis and optimization, transaction profiling, and predictive search. Predictive analytics can be applied to a range of business strategies and plays a key role in search marketing and recommendation engines, as shown in the figure below.

Figure 1: Manufacturing industry quality and analysis

1.3 Objective:

The main objective of our project is to predict malfunctions that may occur in the future in a particular function of a machine. These malfunctions can be identified from data derived from the machine. For instance, every machine tends to have its own natural, or harmonic, frequency, and every natural frequency has its own vibrational mode, or wave pattern. A disturbance in these natural frequencies indicates that the machine may have a fault. The frequency patterns caused by machine vibration can be measured with a vibration sensor. Besides vibration, we can also include temperature, pressure, and so on. Extreme deviations of these parameters from their limit or set point tend to cause the machine to malfunction. This should be known in advance, before any fault occurs in the machine.
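The idea of flagging parameters that stray too far from their set point can be sketched as below. The parameter names, nominal values, and tolerances are hypothetical; real limits would come from the equipment specification.

```python
from dataclasses import dataclass


@dataclass
class SetPoint:
    nominal: float    # expected value during normal operation
    tolerance: float  # allowed absolute deviation from the nominal value


# Hypothetical set points for one machine (assumed values, for illustration only).
SET_POINTS = {
    "vibration_mm_s": SetPoint(nominal=2.0, tolerance=1.5),
    "temperature_c": SetPoint(nominal=65.0, tolerance=15.0),
    "pressure_bar": SetPoint(nominal=6.0, tolerance=1.0),
}


def out_of_range(sample):
    """Return the names of parameters whose reading deviates beyond its tolerance."""
    return [
        name
        for name, sp in SET_POINTS.items()
        if name in sample and abs(sample[name] - sp.nominal) > sp.tolerance
    ]


sample = {"vibration_mm_s": 4.2, "temperature_c": 71.0, "pressure_bar": 6.3}
alarms = out_of_range(sample)
print("parameters outside tolerance:", alarms)
```

A fixed threshold like this only reacts once a limit is already exceeded. The learning-based approach discussed in this report aims to recognize the pattern leading up to such a deviation.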

This is entirely possible through prediction. But this type of prediction is very difficult for humans to handle, since it requires a lot of manpower with strong monitoring skills. What if the intelligence were built into the machine itself? That is the concept of machine learning. There are several machine learning algorithms, but the Artificial Neural Network (ANN) could be the most

suitable algorithm for prediction, since it can perform intelligent tasks like those performed by the human brain [5]. An ANN gains knowledge through learning, and this knowledge is stored in the inter-neuron connection strengths, known as weights. A multilayer perceptron has an input layer, followed by one or more hidden layers, and finally an output layer. Backpropagation is the training process in which the input data is repeatedly presented to the input layer, the network's output is compared with the desired output, and the resulting error is propagated backwards to adjust the weights. In supervised training, historical data is passed into the input layer and through the hidden layers, and the output is compared with the desired output. This produces a model that maps inputs to outputs based on the historical data and can then provide the output for cases where the desired output is not known. The approach used in our project is a supervised neural network model, a multilayer perceptron (MLP) classifier trained using backpropagation. The MLP works with two arrays: X_array, which holds the training samples and their features, and Y_array, which holds the target values for the training samples. Finally, the accuracy, precision, and specification are derived at the end of the project and compared with those of other algorithms.
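To make the training procedure concrete, the following is a minimal sketch of a multilayer perceptron classifier trained by backpropagation, written with the Python standard library only. It is not the implementation used in this project. The class `TinyMLP`, the network size, the learning rate, and the toy data (normalized vibration and temperature readings labelled 0 for healthy and 1 for faulty) are assumptions made for illustration; only the names `X_array` and `Y_array` come from the text.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class TinyMLP:
    """One hidden layer, sigmoid activations, trained by backpropagation."""

    def __init__(self, n_inputs, n_hidden, seed=0):
        rng = random.Random(seed)
        # Each row holds one unit's input weights followed by its bias.
        self.hidden = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs + 1)]
                       for _ in range(n_hidden)]
        self.output = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]

    def forward(self, x):
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + row[-1])
             for row in self.hidden]
        y = sigmoid(sum(w * hi for w, hi in zip(self.output, h)) + self.output[-1])
        return h, y

    def train_step(self, x, target, lr):
        h, y = self.forward(x)
        # Gradient of the cross-entropy loss w.r.t. the output pre-activation.
        delta_out = y - target
        # Propagate the error back using the output weights before updating them.
        delta_hidden = [delta_out * self.output[j] * h[j] * (1.0 - h[j])
                        for j in range(len(h))]
        for j, hj in enumerate(h):
            self.output[j] -= lr * delta_out * hj
        self.output[-1] -= lr * delta_out
        for j, row in enumerate(self.hidden):
            for i, xi in enumerate(x):
                row[i] -= lr * delta_hidden[j] * xi
            row[-1] -= lr * delta_hidden[j]

    def predict(self, x):
        return 1 if self.forward(x)[1] >= 0.5 else 0


def log_loss(model, X, Y):
    """Mean cross-entropy between predicted probabilities and targets."""
    eps = 1e-12
    total = 0.0
    for x, t in zip(X, Y):
        y = model.forward(x)[1]
        total -= t * math.log(y + eps) + (1 - t) * math.log(1.0 - y + eps)
    return total / len(X)


# Hypothetical training data: [vibration, temperature], both scaled to 0..1.
X_array = [[0.10, 0.20], [0.20, 0.10], [0.15, 0.30], [0.30, 0.25],
           [0.80, 0.90], [0.90, 0.70], [0.70, 0.85], [0.95, 0.95]]
Y_array = [0, 0, 0, 0, 1, 1, 1, 1]  # 0 = healthy, 1 = faulty

model = TinyMLP(n_inputs=2, n_hidden=3)
initial_loss = log_loss(model, X_array, Y_array)
for epoch in range(2000):
    for x, t in zip(X_array, Y_array):
        model.train_step(x, t, lr=0.5)
final_loss = log_loss(model, X_array, Y_array)

accuracy = sum(model.predict(x) == t for x, t in zip(X_array, Y_array)) / len(X_array)
print(f"loss before: {initial_loss:.3f}, after: {final_loss:.3f}, "
      f"training accuracy: {accuracy:.2f}")
```

The accuracy printed here is measured on the training samples themselves, so it says nothing about generalization. A fair comparison with other classifiers would use held-out data or cross validation.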

1.4 Problem Formulation:

Predictive modeling and analysis can be a factor of the utmost importance in every business organization: it leads to noteworthy improvements in quality and function and plays a major role in decisions that can improve asset value. In the present scenario, consider Amazon and eBay, which depend on online ad networks such as Facebook and Google. Every organization can carry out a statistical analysis of its data and analyze its environment, within certain limits, in terms of current clients, turnover, supply tracking, and so on. Predictive maintenance can yield the maximum profit by identifying the best course of action and working accordingly [6]. Beyond the success stories of practitioners who have integrated predictive analytics with large, tangible, and considerable payback to their organizations, information systems researchers also recognize the significance of predictive analytics when discussing the future trends and evolution of decision support systems.

Moreover, data can be collected to analyze a machine in a manufacturing plant. A noteworthy question, however, is what type of data should be considered relevant to predictive maintenance [7]. Millions upon millions of data points are generated annually. Considering all of them would be tedious and a waste of time, so the useful data must be selected. The second point is that, once the data is generated, an operator has to be alert whenever a possible malfunction is about to occur. If the operator overlooks it, what could happen to the machine? Human error is normal in every setting, but in large industries, persistent errors can cause heavy losses for the whole industry [8]. This has to be taken into account.

the human brain [5]. ANN gains knowledge through learning and this knowledge could be stored

in the inter-neuron connection known as weights. They have Multilayer perceptron, which means

they have input layer followed by certain hidden layers then finally the output layer. Back

propagation is the process where the input data is repeatedly presented by the input layer. In this

process we have to train the data. Supervised training involves passing of historical data into the

input layer followed by the hidden layers. Then the desired output is measured. This type could

produce a model that could map the input to output with the historical data. This model could

provide with the necessary output when the preferred output is not known. The concept that has

been used for our project is supervised neural network model that works with Multiple layer

perceptron (MLP) with classification that could train using back propagation. MLP works with

too arrays X_array that includes samples and features for training the data and Y_array for holds

target values for training the samples. Finally the accuracy, precision and the specifications have

been derived at the end of the project with the comparison of other algorithm.

1.4 Problem Formulation:

Predictive modeling and analysis can be of the utmost importance to a business organization: it leads to noteworthy improvements in quality and function, and it plays a major role in decisions that improve asset value. In the present scenario, consider Amazon and eBay, which depend on online ad networks such as Facebook and Google. Every organization can run a statistical analysis of its data and analyze its environment within certain limits, for example its present clients, turnover activities and supplies. Predictive maintenance helps maximize profit by identifying the best course of action and working accordingly [6]. Alongside the success stories of practitioners, who report large, tangible and considerable payback to their organizations from integrated predictive analytics, information systems researchers also recognize the significance of predictive analytics when discussing the future trends and evolution of decision support systems. Data for such an analysis can be taken from a machine in a manufacturing plant. A noteworthy question, however, is what type of data should be considered for predictive maintenance [7]. Millions of data points are generated annually, and considering all of them would be tedious and a waste of time, so the data that is actually useful must be identified. The second point to consider is that, once the data is generated, an operator must be alerted when a possible malfunction is about to occur. If the operator misses the warning, the machine may fail. Human error is normal in every setting, but in large industries persistent errors can cause heavy losses for the whole industry [8].

1.5 Research Outcome:

For the above problem statement, an Artificial Neural Network (ANN) could be a very good solution. On-line monitoring of the mechanical condition of equipment can be made practical by a low-cost and highly reliable data acquisition system. Before turning to the ANN, the major point to note is whether the data calls for a supervised or an unsupervised approach. In the supervised approach, a specified output is known for each given input; in the unsupervised approach, the output is not known in advance. The unsupervised method is exploratory: it involves selecting sample data from the existing dataset that may require further handling, clustering objects according to the inner structure to which the data belong, and building an inverse or direct method for quantitative prediction. Once the type of dataset has been determined, the ANN can be designed accordingly. In our case, the data belongs to the supervised approach.

The term Artificial Neural Network (ANN) places its stress on the word neural, which refers to the neuron. The name describes how “artificial neurons” are networked or associated together and how these individual neurons perform their operations. Artificial neurons copy the action of biological neurons: each accepts various signals from neighboring neurons and processes them in a pre-defined way. Based on the result of this processing, the neuron decides whether to provide an output. The output signal can be 0 or 1, or any real value between 0 and 1, depending on the values being dealt with.
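The behaviour of a single artificial neuron can be illustrated with a short sketch. It assumes the common formulation in which the "pre-defined way" of processing is a weighted sum of the incoming signals plus a bias, followed by an activation function; the signal values, weights and bias below are hypothetical. A step activation gives the 0-or-1 output, and a sigmoid activation gives a real value between 0 and 1.

```python
import math


def neuron(inputs, weights, bias, activation="sigmoid"):
    """Weighted sum of incoming signals followed by an activation function."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    if activation == "step":
        # Fires (1) only when the weighted sum reaches the threshold.
        return 1 if z >= 0 else 0
    # Sigmoid squashes the weighted sum into the open interval (0, 1).
    return 1.0 / (1.0 + math.exp(-z))


signals = [0.9, 0.2, 0.4]    # hypothetical signals from neighboring neurons
weights = [1.5, -2.0, 0.5]   # hypothetical connection weights
bias = -0.5

binary_output = neuron(signals, weights, bias, activation="step")
graded_output = neuron(signals, weights, bias, activation="sigmoid")
print(binary_output, round(graded_output, 3))
```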

A fuzzy logic system associated with the artificial neural network can help us find reliable data and identify features that permit a consistent diagnosis of the machines. Any abnormal or worsening condition in the machine can be reported by the artificial neural network, provided the system has been trained appropriately. The deployment of fuzzy logic with neural networks has increased recently, and this integration offers a promising path towards hybrid neural networks and expert systems built on fuzzy rule-based systems rather than on traditional systems.
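To make the idea of a fuzzy rule concrete, the following sketch evaluates one rule on two sensor readings. Everything specific in it is an assumption for illustration: the triangular membership shape, the breakpoints for "high" vibration and temperature, and the rule itself are not taken from the report.

```python
def triangular(x, left, peak, right):
    """Degree of membership (0..1) of x in a triangular fuzzy set."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def fault_degree(vibration_mm_s, temperature_c):
    # Hypothetical fuzzy sets; the breakpoints are illustrative only.
    vibration_high = triangular(vibration_mm_s, 4.0, 8.0, 12.0)
    temperature_high = triangular(temperature_c, 60.0, 90.0, 120.0)
    # Rule: IF vibration is high AND temperature is high THEN fault is likely.
    # The fuzzy AND is taken here as the minimum of the two memberships.
    return min(vibration_high, temperature_high)


degree = fault_degree(6.0, 75.0)
print(degree)
```

A degree of this kind, rather than a hard yes/no threshold, is what could be passed on to a neural network or an alarm stage in a hybrid system.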

Certain points must be considered while following these rules. The machines are trained to detect faults, and a fault should be noted at the very first indication of a defect, since similar signal patterns and different fault mechanisms can lead a machine to show analogous vibrational symptoms. The inherent generalization capacity of the ANN is very useful in handling this situation. Expert systems are particularly useful where the knowledge of an expert is unambiguously available, whereas an ANN can “take out” the knowledge from the accessible information even when the operator is not available. For the alarm system and for fault diagnosis, the best system to integrate with the neural network is the expert system. Connectionist expert systems, with an ANN in their knowledge bases, could provide the maximum benefit in terms of speed, robustness and knowledge acquisition.

The software that we’re going to use to implement the ANN is Python. Python is a popular programming language that can be used for software development, web development, mathematics and scripting. We chose Python because it is a simple language that runs on many platforms, such as Linux, Windows and Mac. Moreover, its syntax is very simple compared with other scripting languages. It runs on an interpreter system, which means that code can be executed as soon as it is written. It can be used in an object-oriented, procedural or functional style. Large numbers of calculations can be carried out very easily with the help of Python.

1.6 Research impact:

The impacts of predictive analysis are many. A common question is why predictive analysis is needed and why companies invest so much money in technical equipment to make predictions. In a study conducted at Honeywell, respondents reported that companies involved in predictive maintenance make real-time decisions at a rate of 64%, limit wastage at a rate of 74% and estimate risk at a rate of 73%. This is not the case for companies that do not want this model, that try to build the process later, or that do not want to spend on this maintenance [5]. Such companies can face heavy asset expenditure compared with companies that have invested in predictive maintenance.

Companies involved in predictive maintenance can achieve practical and achievable results thanks to the following factors:

Assembling the correct quality data:

In every manufacturing company it is necessary to collect data on product quality, machine safety, personnel fulfillment and the recording level. These data can be collected with the help of product and quality managers, professionals, and the operators in charge of each particular task. From these data we can obtain the efficiency of a machine [6], prevent injuries and stop unwanted products from passing through the process; companies also need these details when facing an audit. The details are normally collected with pen and paper and digitized later. It is commonly thought that collecting such data will by itself improve the quality of the company, but the reality is different. The problem lies in the quantity of data, which may be useful as well as useless. What matters is not the quantity of data but having the right data and a better analysis. The collected data should be meaningful and brief. Bringing in the Statistical Process Control (SPC) method together with accurate data helps us control the process proactively and avert quality and manufacturing malfunctions before they appear.
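As an illustration of the SPC idea, the sketch below derives control limits from a baseline of in-control measurements and flags later readings that fall outside them. It is a simplified version that assumes limits at the mean plus or minus three sample standard deviations; production control charts often estimate the spread differently (for example from moving ranges). The shaft-diameter values are hypothetical.

```python
import statistics


def control_limits(baseline, k=3.0):
    """Lower limit, centre line and upper limit from in-control baseline data."""
    centre = statistics.mean(baseline)
    sigma = statistics.stdev(baseline)
    return centre - k * sigma, centre, centre + k * sigma


def out_of_control(readings, lower, upper):
    """Indices of readings that fall outside the control limits."""
    return [i for i, r in enumerate(readings) if r < lower or r > upper]


# Hypothetical shaft-diameter measurements in mm taken while the process was stable.
baseline = [10.0, 10.1, 9.9, 10.0, 10.2, 9.8, 10.0, 10.1, 9.9, 10.0]
lower, centre, upper = control_limits(baseline)

# New readings from the line; any index returned here would trigger a check.
flagged = out_of_control([10.0, 10.1, 10.6, 9.9], lower, upper)
print(f"limits: {lower:.3f} .. {upper:.3f}, flagged indices: {flagged}")
```

A small, well-chosen set of measurements is enough to set such limits, which matches the point that the right data matters more than the quantity of data.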

Knowledge regarding the prediction of suitable opportunity:

Here the plant should identify the best opportunity for improving the company. For instance, take a client who comes to buy a company’s product, finds a defect in it and informs the plant manager. If the information given by the client is valid, the manager should act immediately and determine which aspect of the product did not meet the requirement. Curing the defect incurs some expenditure, but that expenditure returns to the company as profit. Within a certain period the plant can run at its best, which reduces both costs and defects.

Maintenance of best practices:

It is necessary to make adjustments across the whole enterprise by maintaining the entire project, not just an individual plant. In a company, both the quality manager and the plant manager should take decisions wisely. For example, if the plant manager tries to cut costs by $1 million by choosing raw materials that only minimally serve the product, the resulting reduction in profit could exceed a million dollars in that same year [7]. So it is necessary to take decisions wisely rather than quickly.

Manufacturers concentrate mainly on the quality of the product. They also need to ensure optimal, up-to-date functioning across the whole manufacturing plant, efficient workers, appropriate measurements and the best possible production capability. With predictive analytics, it is possible not only to improve manufacturing quality, increase equipment return on investment (ROI) and overall equipment effectiveness (OEE), and anticipate needs across the plant and enterprise, but also to improve the brand’s reputation, stay ahead of the competition and ensure the safety of the customer. Hence, with a focus on the benefits of predictive analysis, it is necessary to find the possible ways in which it can be used within the organization in order to save the industry.
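For readers unfamiliar with OEE, it is conventionally computed as the product of availability, performance and quality. The sketch below applies that standard definition to two hypothetical shifts, one with more unplanned downtime than the other; the function name and all figures are invented for illustration and do not come from the report.

```python
def oee(planned_time_min, downtime_min, ideal_cycle_time_min,
        total_count, good_count):
    """Overall equipment effectiveness = availability * performance * quality."""
    run_time = planned_time_min - downtime_min
    availability = run_time / planned_time_min
    performance = (ideal_cycle_time_min * total_count) / run_time
    quality = good_count / total_count
    return availability * performance * quality


# Hypothetical 480-minute shift with 60 minutes of unplanned downtime.
baseline = oee(planned_time_min=480, downtime_min=60,
               ideal_cycle_time_min=1.0, total_count=400, good_count=380)

# Same shift if early fault detection had cut the downtime to 30 minutes.
improved = oee(planned_time_min=480, downtime_min=30,
               ideal_cycle_time_min=1.0, total_count=430, good_count=410)

print(f"baseline OEE: {baseline:.3f}, improved OEE: {improved:.3f}")
```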

Predictive analysis has certain advantages, as follows:

1) Minimization of downtime cost: Downtime is the time required to restore a malfunctioning machine to its normal condition. It can be greatly reduced with predictive analysis, since the abnormal condition of the machine is predicted at a very early stage.

2) Minimization of production loss: A major advantage of predictive analysis is that it indicates which part needs to be replaced, rather than calling for maintenance of the whole machine.

3) Reduction of manual work: Since predictive analysis with an ANN helps detect the defect in a particular part of the machine, it reduces the effort required of the workforce. Hence labour costs can be reduced sharply.
