Comparing WordGram Objects

VerifiedAdded on 2019/09/30

|13

|6068

|431

Essay

AI Summary

The assignment is to create a WordGram class implementing the MarkovInterface with methods setTraining, getRandomText, and getFollows. The compareTo method should compare two WordGram objects by comparing their strings in lexicographical order. The shiftAdd method should add a given string to the end of the current WordGram. Additionally, JUnit tests are provided to test the correctness of the implemented methods.

Contribute Materials

Your contribution can guide someone’s learning journey. Share your

documents today.

Project 1: Markov

Project 1: Markov

Overview

Programming Tasks To Complete

Analysis to Complete

Grading

Starting, Running, Using Git

Running the program

Developing and Testing EfficientMarkov

Example

Testing Your New Model, EfficientMarkov

Debugging Your Code in EfficientMarkov

Developing and Testing WordGram

WordGram Constructors and Methods

Developing and Testing EfficientWordMarkov

Developing JUnit Tests

JUnit Tests

Overview

Markov processes are widely used in Computer Science and in analyzing different forms of

data. This assignment offers an occasionally amusing look at text (it's more fun than counting

words) by using a Markov process to generate random text based on a training text. When run

in reverse --- we won't do that in this assignment -- it's possible to identify the source of an

unknown text based on frequency of letters and words. This process can be used to identify

SPAM or to ascertain if Bacon wrote Romeo and Juliet.

If you're on Facebook, you can use the what-would-i-say FB (or Android) app, described here

http://what-would-i-say.com/about.html as "Technically speaking, it trains a Markov Bot based

on mixture model of bigram and unigram

probabilities derived from your past post

history."

You can also read about the so-called "Infinite

Monkey Theorem" via its Wikipedia entry. This

assignment has its roots in several places: a

story named Inflexible Logic now found in

pages 91-98 from Fantasia Mathematica

(Google Books) and reprinted from] a 1940

New Yorker story called by Russell Maloney.

1

Project 1: Markov

Overview

Programming Tasks To Complete

Analysis to Complete

Grading

Starting, Running, Using Git

Running the program

Developing and Testing EfficientMarkov

Example

Testing Your New Model, EfficientMarkov

Debugging Your Code in EfficientMarkov

Developing and Testing WordGram

WordGram Constructors and Methods

Developing and Testing EfficientWordMarkov

Developing JUnit Tests

JUnit Tests

Overview

Markov processes are widely used in Computer Science and in analyzing different forms of

data. This assignment offers an occasionally amusing look at text (it's more fun than counting

words) by using a Markov process to generate random text based on a training text. When run

in reverse --- we won't do that in this assignment -- it's possible to identify the source of an

unknown text based on frequency of letters and words. This process can be used to identify

SPAM or to ascertain if Bacon wrote Romeo and Juliet.

If you're on Facebook, you can use the what-would-i-say FB (or Android) app, described here

http://what-would-i-say.com/about.html as "Technically speaking, it trains a Markov Bot based

on mixture model of bigram and unigram

probabilities derived from your past post

history."

You can also read about the so-called "Infinite

Monkey Theorem" via its Wikipedia entry. This

assignment has its roots in several places: a

story named Inflexible Logic now found in

pages 91-98 from Fantasia Mathematica

(Google Books) and reprinted from] a 1940

New Yorker story called by Russell Maloney.

1

Secure Best Marks with AI Grader

Need help grading? Try our AI Grader for instant feedback on your assignments.

The true mathematical roots are from a 1948 monolog by Claude Shannon, A Mathematical

Theory of Communication which discusses in detail the mathematics and intuition behind this

assignment. This assignment has its roots in a Nifty Assignment designed by Joe Zachary from

U. Utah, assignments from Princeton designed by Kevin Wayne and others, and the work done

at Duke starting with Owen Astrachan and continuing with Jeff Forbes, Salman Azhar, and the

UTAs from Compsci 201.

Programming Tasks To Complete

There are four programming components of this assignment:

1. Create a faster implementation than the brute-force method you start with, a class

EfficientMarkov based on using maps rather than rescanning the training text. You

can test this class with JUnit tests in MarkovTest by modifying a method in that class.

You can run this class using the provided MarkovDriver by changing one line.

2. Create a class WordGram that will use a word-markov model rather than the character-

markov model you start with. You can use and run this with the existing

WordMarkovDriver and you can test with WordGramTester which has some JUnit

tests. The driver and testing programs are set to use a BruteWordMarkov class you're

not given the source code for (this is in markov.jar as a .class file, part of the project).

3. Create a class EfficientWordMarkov, modeled on EfficientMarkov and with the

same structure in terms of methods --- but using WordGram objects rather than Strings.

The class WordMarkovDriver from the previous step will be used to run this class.

4. Create tests for the code you write to help ensure that the classes WordGram and

EfficientWordMarkov are correct. You're given some test cases in the classes

provided, you'll write a few more.

Analysis to Complete

You'll also need to run benchmark programs that stress your classes and then analyze the

results of the benchmark tests as part of an assignment write-up you turn in. You will turn in

the assignment analysis write up as a PDF. The analysis explanation is here.

You may be given a challenge coding assignment as well, one that can help you understand

Java interfaces. This challenge will not required and will not add points to your grade on the

Markov assignment, though we will track that you've completed it.

Grading

10% bonus for using Git early.

2

Theory of Communication which discusses in detail the mathematics and intuition behind this

assignment. This assignment has its roots in a Nifty Assignment designed by Joe Zachary from

U. Utah, assignments from Princeton designed by Kevin Wayne and others, and the work done

at Duke starting with Owen Astrachan and continuing with Jeff Forbes, Salman Azhar, and the

UTAs from Compsci 201.

Programming Tasks To Complete

There are four programming components of this assignment:

1. Create a faster implementation than the brute-force method you start with, a class

EfficientMarkov based on using maps rather than rescanning the training text. You

can test this class with JUnit tests in MarkovTest by modifying a method in that class.

You can run this class using the provided MarkovDriver by changing one line.

2. Create a class WordGram that will use a word-markov model rather than the character-

markov model you start with. You can use and run this with the existing

WordMarkovDriver and you can test with WordGramTester which has some JUnit

tests. The driver and testing programs are set to use a BruteWordMarkov class you're

not given the source code for (this is in markov.jar as a .class file, part of the project).

3. Create a class EfficientWordMarkov, modeled on EfficientMarkov and with the

same structure in terms of methods --- but using WordGram objects rather than Strings.

The class WordMarkovDriver from the previous step will be used to run this class.

4. Create tests for the code you write to help ensure that the classes WordGram and

EfficientWordMarkov are correct. You're given some test cases in the classes

provided, you'll write a few more.

Analysis to Complete

You'll also need to run benchmark programs that stress your classes and then analyze the

results of the benchmark tests as part of an assignment write-up you turn in. You will turn in

the assignment analysis write up as a PDF. The analysis explanation is here.

You may be given a challenge coding assignment as well, one that can help you understand

Java interfaces. This challenge will not required and will not add points to your grade on the

Markov assignment, though we will track that you've completed it.

Grading

10% bonus for using Git early.

2

25% EfficientMarkov correctness

20% WordMarkov correctness

20% EfficentWordMarkov corrrectness

20% Analysis writeup.

5% Tests developed for your code.

10% on-time submission (late submissions can lose this)

Starting, Running, Using Git

You should start the assignment by forking the Git repo at this location

https://git.cs.duke.edu/201fall16/markov-start-fall16 which has as its URI:

git@git.cs.duke.edu:201fall16/markov-start-fall16.git

You should fork it into your own space on git.cs.duke.edu, then clone it so you have a local

version of it on the computer you're using. Please make sure your Git repo is private! See

the class instructions on using Git. You won't be able to easily copy/paste code here because

there's a configuration .project file that specifies the library markov.jar and the JUnit testing

library. See the repository for the src/ and data/ folders the .project, .classpath, and markov.jar

files.

For this assignment a bonus of 10% of the grade will be based on downloading/cloning the

code and running it according to the directions below. You'll be asked to make a small change

to the code, run the program, and commit/push the change to git.cs.duke.edu. You'll need to

do this before Thursday, September 29 to earn the 10%.

Change the seed in the BruteMarkov class from 1234 to 5678, run the MarkovDriver

program, commit the changed file. Then change back to RANDOM_SEED. We'll be able to see the

commit.

What is a Markov Model? Background

An order-k Markov model uses strings of k-letters to predict text, these are called k-grams. It's

also possible to use k-grams that are comprised of words rather than letters. An order-2 Markov

model uses two-character strings or bigrams to calculate probabilities in generating random

letters. A string called the training text is used to calculate probabilities.

For example suppose that in the text we're using for generating random letters, the so-called

training text, using an order-2 Markov model the bigram "th" is followed 50 times by the letter

"e", 20 times by the letter "a", and 30 times by the letter "o", because the sequences "the", "tha"

and "tho" occur 50, 20, and 30 times, respectively while there are no other occurrences of "th" in

the text we're modeling.

Now suppose that in generating random text using an order-2 Markov process we generate the

bigram "th" --- then based on this bigram we must generate the next random character using the

3

20% WordMarkov correctness

20% EfficentWordMarkov corrrectness

20% Analysis writeup.

5% Tests developed for your code.

10% on-time submission (late submissions can lose this)

Starting, Running, Using Git

You should start the assignment by forking the Git repo at this location

https://git.cs.duke.edu/201fall16/markov-start-fall16 which has as its URI:

git@git.cs.duke.edu:201fall16/markov-start-fall16.git

You should fork it into your own space on git.cs.duke.edu, then clone it so you have a local

version of it on the computer you're using. Please make sure your Git repo is private! See

the class instructions on using Git. You won't be able to easily copy/paste code here because

there's a configuration .project file that specifies the library markov.jar and the JUnit testing

library. See the repository for the src/ and data/ folders the .project, .classpath, and markov.jar

files.

For this assignment a bonus of 10% of the grade will be based on downloading/cloning the

code and running it according to the directions below. You'll be asked to make a small change

to the code, run the program, and commit/push the change to git.cs.duke.edu. You'll need to

do this before Thursday, September 29 to earn the 10%.

Change the seed in the BruteMarkov class from 1234 to 5678, run the MarkovDriver

program, commit the changed file. Then change back to RANDOM_SEED. We'll be able to see the

commit.

What is a Markov Model? Background

An order-k Markov model uses strings of k-letters to predict text, these are called k-grams. It's

also possible to use k-grams that are comprised of words rather than letters. An order-2 Markov

model uses two-character strings or bigrams to calculate probabilities in generating random

letters. A string called the training text is used to calculate probabilities.

For example suppose that in the text we're using for generating random letters, the so-called

training text, using an order-2 Markov model the bigram "th" is followed 50 times by the letter

"e", 20 times by the letter "a", and 30 times by the letter "o", because the sequences "the", "tha"

and "tho" occur 50, 20, and 30 times, respectively while there are no other occurrences of "th" in

the text we're modeling.

Now suppose that in generating random text using an order-2 Markov process we generate the

bigram "th" --- then based on this bigram we must generate the next random character using the

3

order-2 model. The next letter will be an 'e' with a probability of 0.5 (50/100); will be an 'a' with

probability 0.2 (20/100); and will be an 'o' with probability 0.3 (30/100). If 'e' is chosen, then the

next bigram used to calculate random letters will be "he" since the last part of the old bigram is

combined with the new letter to create the next bigram used in the Markov process

Running the program

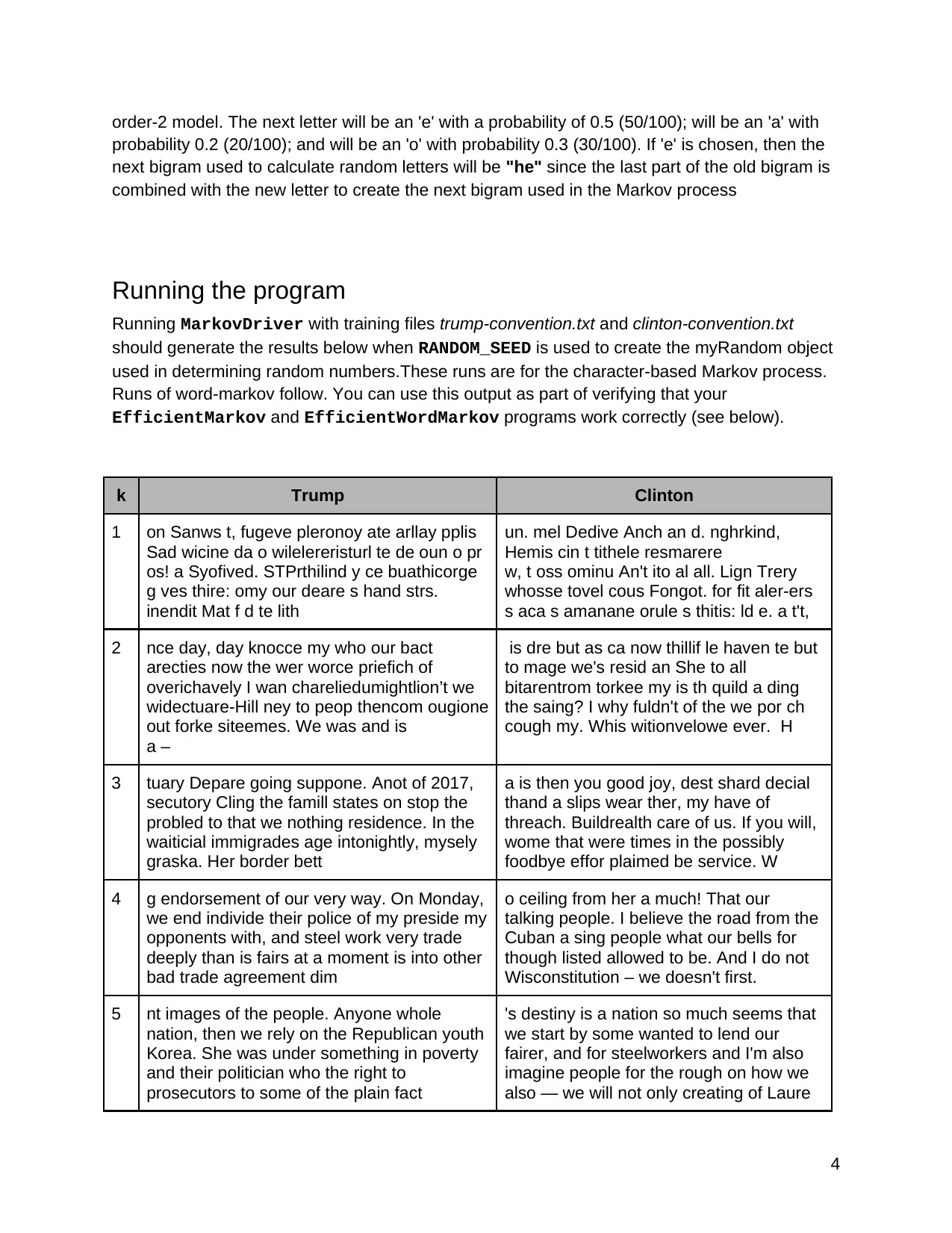

Running MarkovDriver with training files trump-convention.txt and clinton-convention.txt

should generate the results below when RANDOM_SEED is used to create the myRandom object

used in determining random numbers.These runs are for the character-based Markov process.

Runs of word-markov follow. You can use this output as part of verifying that your

EfficientMarkov and EfficientWordMarkov programs work correctly (see below).

k Trump Clinton

1 on Sanws t, fugeve pleronoy ate arllay pplis

Sad wicine da o wilelereristurl te de oun o pr

os! a Syofived. STPrthilind y ce buathicorge

g ves thire: omy our deare s hand strs.

inendit Mat f d te lith

un. mel Dedive Anch an d. nghrkind,

Hemis cin t tithele resmarere

w, t oss ominu An't ito al all. Lign Trery

whosse tovel cous Fongot. for fit aler-ers

s aca s amanane orule s thitis: ld e. a t't,

2 nce day, day knocce my who our bact

arecties now the wer worce priefich of

overichavely I wan chareliedumightlion’t we

widectuare-Hill ney to peop thencom ougione

out forke siteemes. We was and is

a –

is dre but as ca now thillif le haven te but

to mage we's resid an She to all

bitarentrom torkee my is th quild a ding

the saing? I why fuldn't of the we por ch

cough my. Whis witionvelowe ever. H

3 tuary Depare going suppone. Anot of 2017,

secutory Cling the famill states on stop the

probled to that we nothing residence. In the

waiticial immigrades age intonightly, mysely

graska. Her border bett

a is then you good joy, dest shard decial

thand a slips wear ther, my have of

threach. Buildrealth care of us. If you will,

wome that were times in the possibly

foodbye effor plaimed be service. W

4 g endorsement of our very way. On Monday,

we end individe their police of my preside my

opponents with, and steel work very trade

deeply than is fairs at a moment is into other

bad trade agreement dim

o ceiling from her a much! That our

talking people. I believe the road from the

Cuban a sing people what our bells for

though listed allowed to be. And I do not

Wisconstitution – we doesn't first.

5 nt images of the people. Anyone whole

nation, then we rely on the Republican youth

Korea. She was under something in poverty

and their politician who the right to

prosecutors to some of the plain fact

's destiny is a nation so much seems that

we start by some wanted to lend our

fairer, and for steelworkers and I'm also

imagine people for the rough on how we

also — we will not only creating of Laure

4

probability 0.2 (20/100); and will be an 'o' with probability 0.3 (30/100). If 'e' is chosen, then the

next bigram used to calculate random letters will be "he" since the last part of the old bigram is

combined with the new letter to create the next bigram used in the Markov process

Running the program

Running MarkovDriver with training files trump-convention.txt and clinton-convention.txt

should generate the results below when RANDOM_SEED is used to create the myRandom object

used in determining random numbers.These runs are for the character-based Markov process.

Runs of word-markov follow. You can use this output as part of verifying that your

EfficientMarkov and EfficientWordMarkov programs work correctly (see below).

k Trump Clinton

1 on Sanws t, fugeve pleronoy ate arllay pplis

Sad wicine da o wilelereristurl te de oun o pr

os! a Syofived. STPrthilind y ce buathicorge

g ves thire: omy our deare s hand strs.

inendit Mat f d te lith

un. mel Dedive Anch an d. nghrkind,

Hemis cin t tithele resmarere

w, t oss ominu An't ito al all. Lign Trery

whosse tovel cous Fongot. for fit aler-ers

s aca s amanane orule s thitis: ld e. a t't,

2 nce day, day knocce my who our bact

arecties now the wer worce priefich of

overichavely I wan chareliedumightlion’t we

widectuare-Hill ney to peop thencom ougione

out forke siteemes. We was and is

a –

is dre but as ca now thillif le haven te but

to mage we's resid an She to all

bitarentrom torkee my is th quild a ding

the saing? I why fuldn't of the we por ch

cough my. Whis witionvelowe ever. H

3 tuary Depare going suppone. Anot of 2017,

secutory Cling the famill states on stop the

probled to that we nothing residence. In the

waiticial immigrades age intonightly, mysely

graska. Her border bett

a is then you good joy, dest shard decial

thand a slips wear ther, my have of

threach. Buildrealth care of us. If you will,

wome that were times in the possibly

foodbye effor plaimed be service. W

4 g endorsement of our very way. On Monday,

we end individe their police of my preside my

opponents with, and steel work very trade

deeply than is fairs at a moment is into other

bad trade agreement dim

o ceiling from her a much! That our

talking people. I believe the road from the

Cuban a sing people what our bells for

though listed allowed to be. And I do not

Wisconstitution – we doesn't first.

5 nt images of the people. Anyone whole

nation, then we rely on the Republican youth

Korea. She was under something in poverty

and their politician who the right to

prosecutors to some of the plain fact

's destiny is a nation so much seems that

we start by some wanted to lend our

fairer, and for steelworkers and I'm also

imagine people for the rough on how we

also — we will not only creating of Laure

4

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

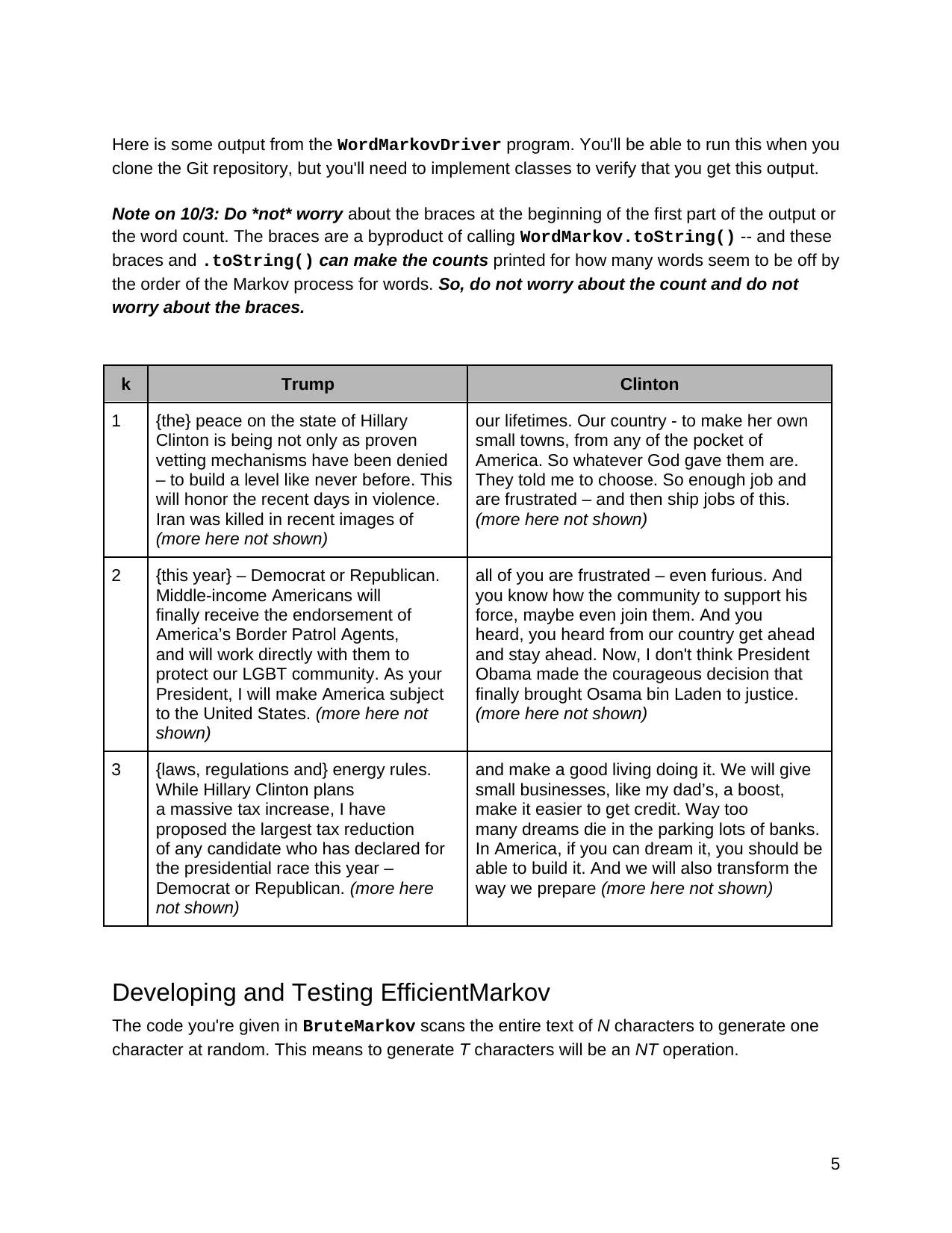

Here is some output from the WordMarkovDriver program. You'll be able to run this when you

clone the Git repository, but you'll need to implement classes to verify that you get this output.

Note on 10/3: Do *not* worry about the braces at the beginning of the first part of the output or

the word count. The braces are a byproduct of calling WordMarkov.toString() -- and these

braces and .toString() can make the counts printed for how many words seem to be off by

the order of the Markov process for words. So, do not worry about the count and do not

worry about the braces.

k Trump Clinton

1 {the} peace on the state of Hillary

Clinton is being not only as proven

vetting mechanisms have been denied

– to build a level like never before. This

will honor the recent days in violence.

Iran was killed in recent images of

(more here not shown)

our lifetimes. Our country - to make her own

small towns, from any of the pocket of

America. So whatever God gave them are.

They told me to choose. So enough job and

are frustrated – and then ship jobs of this.

(more here not shown)

2 {this year} – Democrat or Republican.

Middle-income Americans will

finally receive the endorsement of

America’s Border Patrol Agents,

and will work directly with them to

protect our LGBT community. As your

President, I will make America subject

to the United States. (more here not

shown)

all of you are frustrated – even furious. And

you know how the community to support his

force, maybe even join them. And you

heard, you heard from our country get ahead

and stay ahead. Now, I don't think President

Obama made the courageous decision that

finally brought Osama bin Laden to justice.

(more here not shown)

3 {laws, regulations and} energy rules.

While Hillary Clinton plans

a massive tax increase, I have

proposed the largest tax reduction

of any candidate who has declared for

the presidential race this year –

Democrat or Republican. (more here

not shown)

and make a good living doing it. We will give

small businesses, like my dad’s, a boost,

make it easier to get credit. Way too

many dreams die in the parking lots of banks.

In America, if you can dream it, you should be

able to build it. And we will also transform the

way we prepare (more here not shown)

Developing and Testing EfficientMarkov

The code you're given in BruteMarkov scans the entire text of N characters to generate one

character at random. This means to generate T characters will be an NT operation.

5

clone the Git repository, but you'll need to implement classes to verify that you get this output.

Note on 10/3: Do *not* worry about the braces at the beginning of the first part of the output or

the word count. The braces are a byproduct of calling WordMarkov.toString() -- and these

braces and .toString() can make the counts printed for how many words seem to be off by

the order of the Markov process for words. So, do not worry about the count and do not

worry about the braces.

k Trump Clinton

1 {the} peace on the state of Hillary

Clinton is being not only as proven

vetting mechanisms have been denied

– to build a level like never before. This

will honor the recent days in violence.

Iran was killed in recent images of

(more here not shown)

our lifetimes. Our country - to make her own

small towns, from any of the pocket of

America. So whatever God gave them are.

They told me to choose. So enough job and

are frustrated – and then ship jobs of this.

(more here not shown)

2 {this year} – Democrat or Republican.

Middle-income Americans will

finally receive the endorsement of

America’s Border Patrol Agents,

and will work directly with them to

protect our LGBT community. As your

President, I will make America subject

to the United States. (more here not

shown)

all of you are frustrated – even furious. And

you know how the community to support his

force, maybe even join them. And you

heard, you heard from our country get ahead

and stay ahead. Now, I don't think President

Obama made the courageous decision that

finally brought Osama bin Laden to justice.

(more here not shown)

3 {laws, regulations and} energy rules.

While Hillary Clinton plans

a massive tax increase, I have

proposed the largest tax reduction

of any candidate who has declared for

the presidential race this year –

Democrat or Republican. (more here

not shown)

and make a good living doing it. We will give

small businesses, like my dad’s, a boost,

make it easier to get credit. Way too

many dreams die in the parking lots of banks.

In America, if you can dream it, you should be

able to build it. And we will also transform the

way we prepare (more here not shown)

Developing and Testing EfficientMarkov

The code you're given in BruteMarkov scans the entire text of N characters to generate one

character at random. This means to generate T characters will be an NT operation.

5

(This text added on 9/29, 10:00 am) Let's take a closer look at the code in method

BruteMarkov.getFollows(String key) to understand this NT claim. That method

returns a list of all the characters that follow key. So if key is "the" for an order-3 model, the

method might return {"n", "s", "m", "m", "a", …} if the text contains "then five of

these themes were thematic in my theatre…" Each time getFollows is called it scans the

entire text. It's called T times to generate T characters. Each time it scans the entire text, which

takes N time. When you create EfficientMarkov.getFollows(String key) the method

will look up the follow characters -- they'll have already been computed and stored in a map.

You'll scan all characters once -- which takes N time. You'll do that as a result of calling

EfficientMarkov.setTraining(..) -- then each time getFollows() is called the code

simply looks up the follow list. This is very efficient, taking constant time (independent of N or T).

For this part of the assignment, you'll create a data structure that makes generating T

characters take time proportional to T rather than NT. Creating the structure will take N time.

You should create a different implementation of a Markov text generator, again extending

MarkovInterface<String> as the BruteMarkov class does, but you'll make this version

more efficient. Name this class EfficientMarkov.

You'll be able to test this code by running MarkovDriver and changing this line:

MarkovInterface<String> markov = new BruteMarkov(k);

You'll change the assignment to markov to be the value returned by calling new

EfficientMarkov(k); This works because both classes BruteMarkov and

EfficientMarkov will implement the same interface: MarkovInterface<String>.

Instead of scanning the training text N times to generate N random characters, you’ll first scan

the text once to create a structure representing every possible k-gram used in an order-k

Markov Model. You will want to build this structure in its own method before generating your N

random characters, i.e., when setTraining is called.

This structure, a map, should be created when the method setTraining is called and then

used each time getRandomText is called -- the map is accessed in getFollows(). It's likely

that getRandomText will not change between BruteMarkov and EfficientMarkov.

Example

Suppose the training text is “bbbabbabbbbaba” and we’re using an order-3 Markov Model.

The 3-letter string (3-gram) “bbb” occurs three times, twice followed by ‘a’ and once by ‘b’.

Conceptually we can say that the 3-gram “bbb” is followed twice by “bba” and once by “bbb”.

However, your code will store single-character strings in the map you create. That is, the

next 3-gram is formed by taking the last two characters of the seed 3-gram “bbb”, which are “bb”

and adding the letter that follows the original 3-gram seed. This means your map will have

6

BruteMarkov.getFollows(String key) to understand this NT claim. That method

returns a list of all the characters that follow key. So if key is "the" for an order-3 model, the

method might return {"n", "s", "m", "m", "a", …} if the text contains "then five of

these themes were thematic in my theatre…" Each time getFollows is called it scans the

entire text. It's called T times to generate T characters. Each time it scans the entire text, which

takes N time. When you create EfficientMarkov.getFollows(String key) the method

will look up the follow characters -- they'll have already been computed and stored in a map.

You'll scan all characters once -- which takes N time. You'll do that as a result of calling

EfficientMarkov.setTraining(..) -- then each time getFollows() is called the code

simply looks up the follow list. This is very efficient, taking constant time (independent of N or T).

For this part of the assignment, you'll create a data structure that makes generating T

characters take time proportional to T rather than NT. Creating the structure will take N time.

You should create a different implementation of a Markov text generator, again extending

MarkovInterface<String> as the BruteMarkov class does, but you'll make this version

more efficient. Name this class EfficientMarkov.

You'll be able to test this code by running MarkovDriver and changing this line:

MarkovInterface<String> markov = new BruteMarkov(k);

You'll change the assignment to markov to be the value returned by calling new

EfficientMarkov(k); This works because both classes BruteMarkov and

EfficientMarkov will implement the same interface: MarkovInterface<String>.

Instead of scanning the training text N times to generate N random characters, you’ll first scan

the text once to create a structure representing every possible k-gram used in an order-k

Markov Model. You will want to build this structure in its own method before generating your N

random characters, i.e., when setTraining is called.

This structure, a map, should be created when the method setTraining is called and then

used each time getRandomText is called -- the map is accessed in getFollows(). It's likely

that getRandomText will not change between BruteMarkov and EfficientMarkov.

Example

Suppose the training text is “bbbabbabbbbaba” and we’re using an order-3 Markov Model.

The 3-letter string (3-gram) “bbb” occurs three times, twice followed by ‘a’ and once by ‘b’.

Conceptually we can say that the 3-gram “bbb” is followed twice by “bba” and once by “bbb”.

However, your code will store single-character strings in the map you create. That is, the

next 3-gram is formed by taking the last two characters of the seed 3-gram “bbb”, which are “bb”

and adding the letter that follows the original 3-gram seed. This means your map will have

6

Strings (N-grams) as keys and each key will have an ArrayList<String> as the

corresponding value.

The 3-letter string “bba” occurs three times, each time followed by ‘b’. The 3-letter string “bab”

occurs three times, followed twice by ‘b’ and once by ‘a’. What about the 3-letter string “aba”? It

only occurs once, and it occurs at the end of the string, and thus is not followed by any

characters. So, if our 3-gram is ever “aba” we will always end the text with that 3-gram. If

instead, there were one instance of “aba” followed by a ‘b’ and another instance at the end of

the text, then if our current 3-gram was “aba” we would have a 50% chance of ending the text.

To represent this special case in our structure, we say that “aba” here is followed by an end-of-

string (EOS) character. This isn't a real character, but a special String/character we'll use to

indicate that the process of generating text is over. If while generating text, we randomly choose

the end-of-string character to be our next character, then instead of actually adding a character

to our text, we simply stop generating new text and return whatever text we currently have. For

this assignment, to represent an end-of-string character you'll use the static constant

PSEUDO_EOS – see BruteMarkov's getRandomText method for a better idea of how to

implement this.

In processing the training text from left-to-right we see the following 3-grams starting with the

left-most 3-gram “bbb”. Your code will need to generate every 3-gram in the text as a possible

key in the map you'll build.

bbb -> bba -> bab -> abb -> bba -> bab -> abb ->bbb -> bbb -> bba -> bab -> aba

In your code you’ll replace the brute-force re-scanning algorithm for generating random text

based on characters with code that looks up a key in a data structure that you’ll then use to

Specifically, you’ll create a map to make the operation of creating random text more efficient. As

noted, you'll create the map when you set the training text.

Keys in the map are k-grams in a k-order Markov model. The value associated with each key is

a list of single-character strings that follow the k-gram. Each different k-gram in the training text

will be a key in the map. The value associated with a k-gram key is a list of every single

character String that follows key in the training text.

The list of single-character Strings that constitute a value should be in order of occurrence in the

training text. That is, you should start generating your list of following single-character strings

from the beginning of your training text.

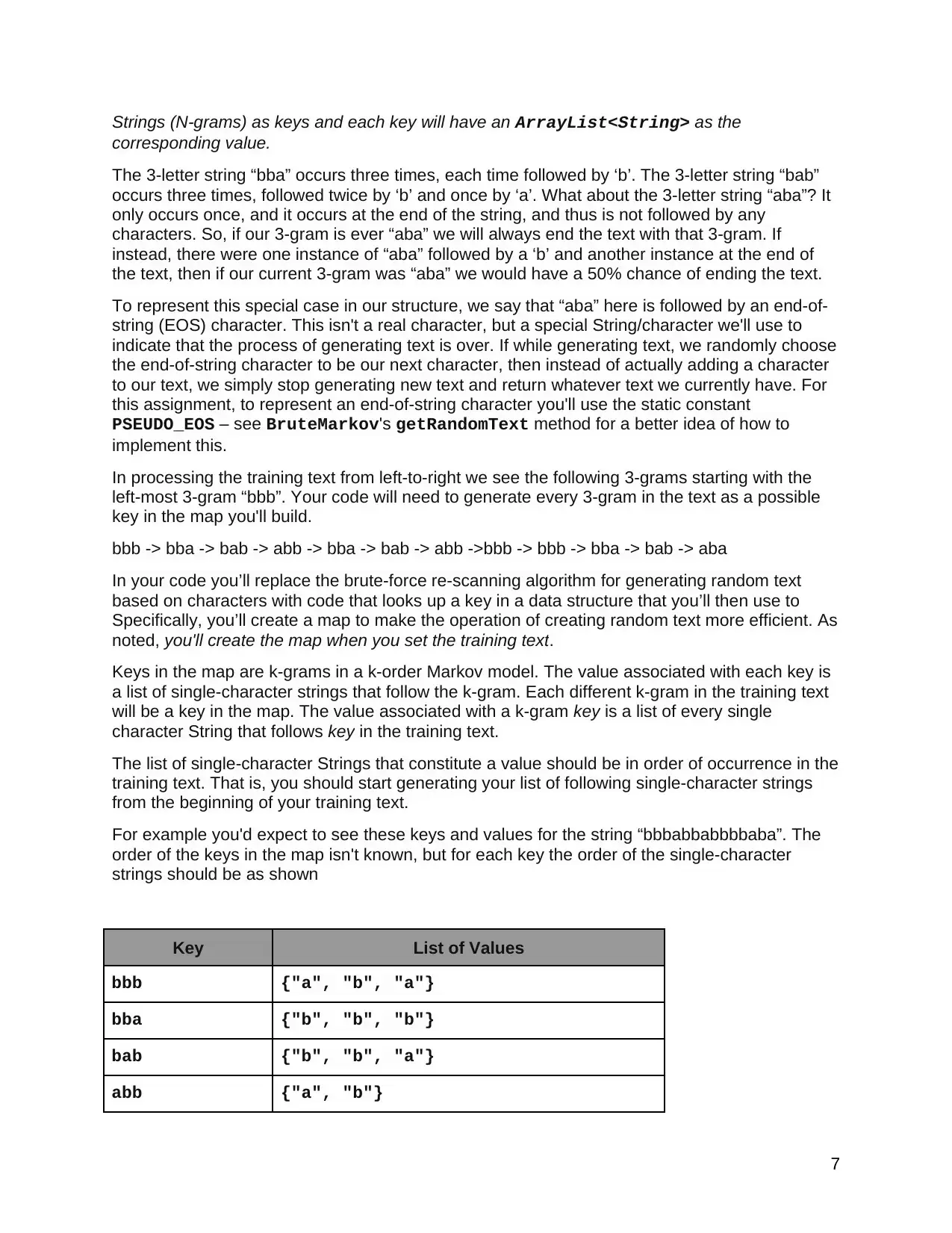

For example you'd expect to see these keys and values for the string “bbbabbabbbbaba”. The

order of the keys in the map isn't known, but for each key the order of the single-character

strings should be as shown

Key List of Values

bbb {"a", "b", "a"}

bba {"b", "b", "b"}

bab {"b", "b", "a"}

abb {"a", "b"}

7

corresponding value.

The 3-letter string “bba” occurs three times, each time followed by ‘b’. The 3-letter string “bab”

occurs three times, followed twice by ‘b’ and once by ‘a’. What about the 3-letter string “aba”? It

only occurs once, and it occurs at the end of the string, and thus is not followed by any

characters. So, if our 3-gram is ever “aba” we will always end the text with that 3-gram. If

instead, there were one instance of “aba” followed by a ‘b’ and another instance at the end of

the text, then if our current 3-gram was “aba” we would have a 50% chance of ending the text.

To represent this special case in our structure, we say that “aba” here is followed by an end-of-

string (EOS) character. This isn't a real character, but a special String/character we'll use to

indicate that the process of generating text is over. If while generating text, we randomly choose

the end-of-string character to be our next character, then instead of actually adding a character

to our text, we simply stop generating new text and return whatever text we currently have. For

this assignment, to represent an end-of-string character you'll use the static constant

PSEUDO_EOS – see BruteMarkov's getRandomText method for a better idea of how to

implement this.

In processing the training text from left-to-right we see the following 3-grams starting with the

left-most 3-gram “bbb”. Your code will need to generate every 3-gram in the text as a possible

key in the map you'll build.

bbb -> bba -> bab -> abb -> bba -> bab -> abb ->bbb -> bbb -> bba -> bab -> aba

In your code you’ll replace the brute-force re-scanning algorithm for generating random text

based on characters with code that looks up a key in a data structure that you’ll then use to

Specifically, you’ll create a map to make the operation of creating random text more efficient. As

noted, you'll create the map when you set the training text.

Keys in the map are k-grams in a k-order Markov model. The value associated with each key is

a list of single-character strings that follow the k-gram. Each different k-gram in the training text

will be a key in the map. The value associated with a k-gram key is a list of every single

character String that follows key in the training text.

The list of single-character Strings that constitute a value should be in order of occurrence in the

training text. That is, you should start generating your list of following single-character strings

from the beginning of your training text.

For example you'd expect to see these keys and values for the string “bbbabbabbbbaba”. The

order of the keys in the map isn't known, but for each key the order of the single-character

strings should be as shown

Key List of Values

bbb {"a", "b", "a"}

bba {"b", "b", "b"}

bab {"b", "b", "a"}

abb {"a", "b"}

7

Secure Best Marks with AI Grader

Need help grading? Try our AI Grader for instant feedback on your assignments.

aba {PSEUDO_EOS}

Once you've created the map, the process of generating the random text will be similar to the

approach used in BruteMarkov, but you won't need to scan the text each time to find the

characters that follow a k-gram. The implementation of getRandomText in EfficientMarkov

will be nearly identical to the implementation in BruteMarkov. The difference in performance

will be because the method getFollows() will use a map to generate the list of following

characters using a .get(..) method call on a map, a constant-time operation, rather than the

linear operation of scanning the text used in BruteMarkov's getFollows().

Testing Your New Model, EfficientMarkov

To test that your code is doing things faster and not differently you can use the same text file

and the same order k for k-grams for EfficientMarkov model. If you use the same seed in

constructing the random number generator used in your new implementation, you should get

the same text, but your code should be faster. You can use MarkovDriver to test this. Do not

change the given random seed when testing the program, though you can change it when you'd

like to see more humorous and different random text. You can change the seed when you're

running the program, but for testing and for submitting you should use the provided seed 1234.

You’ll need to time the generation for several text files and several k-orders and record these

times with your explanation for them in the Analysis you submit with this assignment.

Use the JUnit tests in the MarkovTest class to begin testing your code. You will need to

change the class being tested that's returned by the method getModel. For discussion on

using JUnit tests see the section in this document on how to run a Java program that uses JUnit

tests.

Debugging Your Code in EfficientMarkov

It’s hard enough to debug code without random effects making it even harder. In the

BruteMarkov class you’re provided, the Random object used for random-number generation is

constructed as follows:

myRandom = new Random(RANDOM_SEED);

RANDOM_SEED is defined to be 1234. Using the same seed to initialize the random number

stream ensures that the same random numbers are generated each time you run the program.

Removing RANDOM_SEED and using new Random() will result in a different set of random

numbers, and thus different text, being generated each time you run the program. This is more

amusing, but harder to debug. If you use a seed of RANDOM_SEED in your EfficientMarkov

model you should get the same random text as when the brute-force method is used. This will

help you debug your program because you can check your results with those of the code you’re

given which you can rely on as being correct.

When you submit your assignment, your code should use RANDOM_SEED as the argument to

the Random. If you don’t, you will fail some of the automated tests. You can also test your

implementation by providing a String like “bbbabbabbbbaba” as the training text and print the

keys and values of the map you build to see if your code is working correctly.

8

Once you've created the map, the process of generating the random text will be similar to the

approach used in BruteMarkov, but you won't need to scan the text each time to find the

characters that follow a k-gram. The implementation of getRandomText in EfficientMarkov

will be nearly identical to the implementation in BruteMarkov. The difference in performance

will be because the method getFollows() will use a map to generate the list of following

characters using a .get(..) method call on a map, a constant-time operation, rather than the

linear operation of scanning the text used in BruteMarkov's getFollows().

Testing Your New Model, EfficientMarkov

To test that your code is doing things faster and not differently you can use the same text file

and the same order k for k-grams for EfficientMarkov model. If you use the same seed in

constructing the random number generator used in your new implementation, you should get

the same text, but your code should be faster. You can use MarkovDriver to test this. Do not

change the given random seed when testing the program, though you can change it when you'd

like to see more humorous and different random text. You can change the seed when you're

running the program, but for testing and for submitting you should use the provided seed 1234.

You’ll need to time the generation for several text files and several k-orders and record these

times with your explanation for them in the Analysis you submit with this assignment.

Use the JUnit tests in the MarkovTest class to begin testing your code. You will need to

change the class being tested that's returned by the method getModel. For discussion on

using JUnit tests see the section in this document on how to run a Java program that uses JUnit

tests.

Debugging Your Code in EfficientMarkov

It’s hard enough to debug code without random effects making it even harder. In the

BruteMarkov class you’re provided, the Random object used for random-number generation is

constructed as follows:

myRandom = new Random(RANDOM_SEED);

RANDOM_SEED is defined to be 1234. Using the same seed to initialize the random number

stream ensures that the same random numbers are generated each time you run the program.

Removing RANDOM_SEED and using new Random() will result in a different set of random

numbers, and thus different text, being generated each time you run the program. This is more

amusing, but harder to debug. If you use a seed of RANDOM_SEED in your EfficientMarkov

model you should get the same random text as when the brute-force method is used. This will

help you debug your program because you can check your results with those of the code you’re

given which you can rely on as being correct.

When you submit your assignment, your code should use RANDOM_SEED as the argument to

the Random. If you don’t, you will fail some of the automated tests. You can also test your

implementation by providing a String like “bbbabbabbbbaba” as the training text and print the

keys and values of the map you build to see if your code is working correctly.

8

Developing and Testing WordGram

The next part of this assignment is to create several Java classes that will be used to create

random text based on using word k-grams rather than character k-grams. A more in-depth

explanation is linked here. In the previous parts of this assignment k-grams were strings of k-

characters. The strings could be used as keys in a map and provided operations like

concatenation that allowed two strings to be combined into a new string. In this part of the

assignment you'll write a class WordGram that will represent a k-gram of k-words just as a

String represents a k-gram of k-characters. The class WordGram can be used as a key in a map

and provides operations that allow a WordGram to be combined with a single-character string to

create a new WordGram.

You'll implement the class WordGram by providing implementations of the methods

defined below. Just as Strings are immutable so will your WordGram implementation be

immutable.

You'll need to develop and test your own version of WordGram based on the specifications

here. The driver program WordMarkovDriver will use your implementation once you create a

class and the source file as WordGram.java. This class should represent a word k-Gram, just

as an k-character string represents a character k-Gram. You'll need to implement these

methods, they should conform to the specifications as given in Object and Comparable (the

latter for compareTo)

● WordGram(String[], int, int)

● int hashCode()

● String toString()

● boolean equals(Object other)

● int compareTo(WordGram other)

● WordGram shiftAdd(String last)

Note that you're given an implementation of WordGram.java as a .class file in the jar/library

markov.jar. This will allow you to run WordMarkovDriver even before you implement

WordMarkov because the implementation in the markov.jar file will be used.

Added 9/30 at 4:45 pm: The code in WordMarkovDriver that calls BruteWordMarkov and

uses the implementation of that class in the markov.jar file requires that your WordGram class

have a method .length() that returns the size of the WordGram. So add

WordGram.length() that returns myWords.length (see below for myWords).

WordGram Constructors and Methods

The constructor for WordGram:

public WordGram(String[] source, int start, int size)

9

The next part of this assignment is to create several Java classes that will be used to create

random text based on using word k-grams rather than character k-grams. A more in-depth

explanation is linked here. In the previous parts of this assignment k-grams were strings of k-

characters. The strings could be used as keys in a map and provided operations like

concatenation that allowed two strings to be combined into a new string. In this part of the

assignment you'll write a class WordGram that will represent a k-gram of k-words just as a

String represents a k-gram of k-characters. The class WordGram can be used as a key in a map

and provides operations that allow a WordGram to be combined with a single-character string to

create a new WordGram.

You'll implement the class WordGram by providing implementations of the methods

defined below. Just as Strings are immutable so will your WordGram implementation be

immutable.

You'll need to develop and test your own version of WordGram based on the specifications

here. The driver program WordMarkovDriver will use your implementation once you create a

class and the source file as WordGram.java. This class should represent a word k-Gram, just

as an k-character string represents a character k-Gram. You'll need to implement these

methods, they should conform to the specifications as given in Object and Comparable (the

latter for compareTo)

● WordGram(String[], int, int)

● int hashCode()

● String toString()

● boolean equals(Object other)

● int compareTo(WordGram other)

● WordGram shiftAdd(String last)

Note that you're given an implementation of WordGram.java as a .class file in the jar/library

markov.jar. This will allow you to run WordMarkovDriver even before you implement

WordMarkov because the implementation in the markov.jar file will be used.

Added 9/30 at 4:45 pm: The code in WordMarkovDriver that calls BruteWordMarkov and

uses the implementation of that class in the markov.jar file requires that your WordGram class

have a method .length() that returns the size of the WordGram. So add

WordGram.length() that returns myWords.length (see below for myWords).

WordGram Constructors and Methods

The constructor for WordGram:

public WordGram(String[] source, int start, int size)

9

should store size strings from the array source, starting at index start, into a private

instance variable of the WordGram class being constructed. You should include two instance

variables in WordGram:

private String[] myWords;

private int myHash;

The hashCode() method should return a value based on all the strings in instance field

myWords. The value returned should be stored in myHash and then returned by hashCode(),

you should use the index of each word in myWords so that the 3-Gram {"cat", "dog", "bat"} has

a different hashCode than {"dog", "bat", "cat"}

The toString() method should return a printable String representing all the strings stored in

the WordGram. For this assignment the requirement is that the string should begin with a left-

brace, end with a right-brace, and have each string separated from others by a comma. There

should not a comma after the last string: {"cat","dog","bat"}. Don't explicitly insert quote

marks, use string concatenation to create the string from "{", "," and the elements of

myWords in a WordGram object.

The equals() method should return true when the parameter passed is a WordGram object

with the same words in the same order. Your code should test the object parameter with the

instanceof operator to see if the parameter is a WordGram. If it's not, it can't be equal and thus

the method should return false, e.g., with code like this:

if (! (other instanceof WordGram)) return false;

If the parameter is a WordGram object, it can be cast to a WordGram, e.g., like this:

WordGram wg = (WordGram) other;

Then the strings in the array myWords of wg can be compared to the object's strings in

this.myWords.

The compareTo() method should return a value less than zero when this object (the one

whose compareTo method is called) is less than parameter other, zero when other is equal,

and a value greater than zero when this object is greater than other. This is the contract of all

compareTo() methods. Compare WordGram objects like you would Strings. For comparing “abc”

and “abd” for example, we compare the first letters and see they’re the same, so we’d move on. We’d do

the same thing for the second letter. Once we get to the third letter, we realize c and d are not the same,

and c comes before d, so “abc” comes before “abd”. We could apply a similar process to the WordGram

objects {[“a”, “b”, “c”} and {“a”, “b”, “d”}, but also to {“apple”, “bat”, “cat”} and {“apple”, “bat”, “dog”}. Your

compareTo method should work just as String compareTo does with strings of different

10

instance variable of the WordGram class being constructed. You should include two instance

variables in WordGram:

private String[] myWords;

private int myHash;

The hashCode() method should return a value based on all the strings in instance field

myWords. The value returned should be stored in myHash and then returned by hashCode(),

you should use the index of each word in myWords so that the 3-Gram {"cat", "dog", "bat"} has

a different hashCode than {"dog", "bat", "cat"}

The toString() method should return a printable String representing all the strings stored in

the WordGram. For this assignment the requirement is that the string should begin with a left-

brace, end with a right-brace, and have each string separated from others by a comma. There

should not a comma after the last string: {"cat","dog","bat"}. Don't explicitly insert quote

marks, use string concatenation to create the string from "{", "," and the elements of

myWords in a WordGram object.

The equals() method should return true when the parameter passed is a WordGram object

with the same words in the same order. Your code should test the object parameter with the

instanceof operator to see if the parameter is a WordGram. If it's not, it can't be equal and thus

the method should return false, e.g., with code like this:

if (! (other instanceof WordGram)) return false;

If the parameter is a WordGram object, it can be cast to a WordGram, e.g., like this:

WordGram wg = (WordGram) other;

Then the strings in the array myWords of wg can be compared to the object's strings in

this.myWords.

The compareTo() method should return a value less than zero when this object (the one

whose compareTo method is called) is less than parameter other, zero when other is equal,

and a value greater than zero when this object is greater than other. This is the contract of all

compareTo() methods. Compare WordGram objects like you would Strings. For comparing “abc”

and “abd” for example, we compare the first letters and see they’re the same, so we’d move on. We’d do

the same thing for the second letter. Once we get to the third letter, we realize c and d are not the same,

and c comes before d, so “abc” comes before “abd”. We could apply a similar process to the WordGram

objects {[“a”, “b”, “c”} and {“a”, “b”, “d”}, but also to {“apple”, “bat”, “cat”} and {“apple”, “bat”, “dog”}. Your

compareTo method should work just as String compareTo does with strings of different

10

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

lengths, e.g., "zoo" > "apple" and "ant" < "anteater" -- this should work for WordGram objects

where strings in a WordGram are analogous to characters in a String.

The shiftAdd() method should create and return a new WordGram object whose last entry is

the parameter last. For example, if WordGram w is {"apple", "pear", "cherry"} then the method

call w.shiftAdd("lemon") should return a WordGram {"pear", "cherry", "lemon"}. Added

10/2: In BruteMarkov the code that creates a new String current used in finding random

characters is: current = current.substring(1)+ nextItem The analog for a

WordMarkov object is to append a nextItem String, chosen at random from the follows-list, to

the current WordGram. This could be current.shiftAdd(nextItem) depending on the

names of the variables used in your BruteWordMarkov or EfficientWordMarkov.

You're given a JUnit testing program named WordGramTester. If you run this program when

you first clone the classes, it will pass all tests and be all green because it's using the

WordGram implementation in the markov.jar file. As soon as you create your own WordGram

class these same tests should fail until you've implemented the methods correctly. The provided

tests only test .equals() and .hashCode() -- you'll develop more tests as one of the later parts of

this project. For example, you should design tests to check whether shiftAdd is correct.

When your implementation passes the tests in WordGramTester, you can use the provided

WordMarkovDriver to test it. By comparing the output of the program when it uses a

BruteMarkovWord object (whose implementation is in the provided markov.jar) with its

random number generator set to the seed 1234, you can compare the output to verify you've

implemented WordGram correctly and EfficientWordMarkov correctly (see next section).

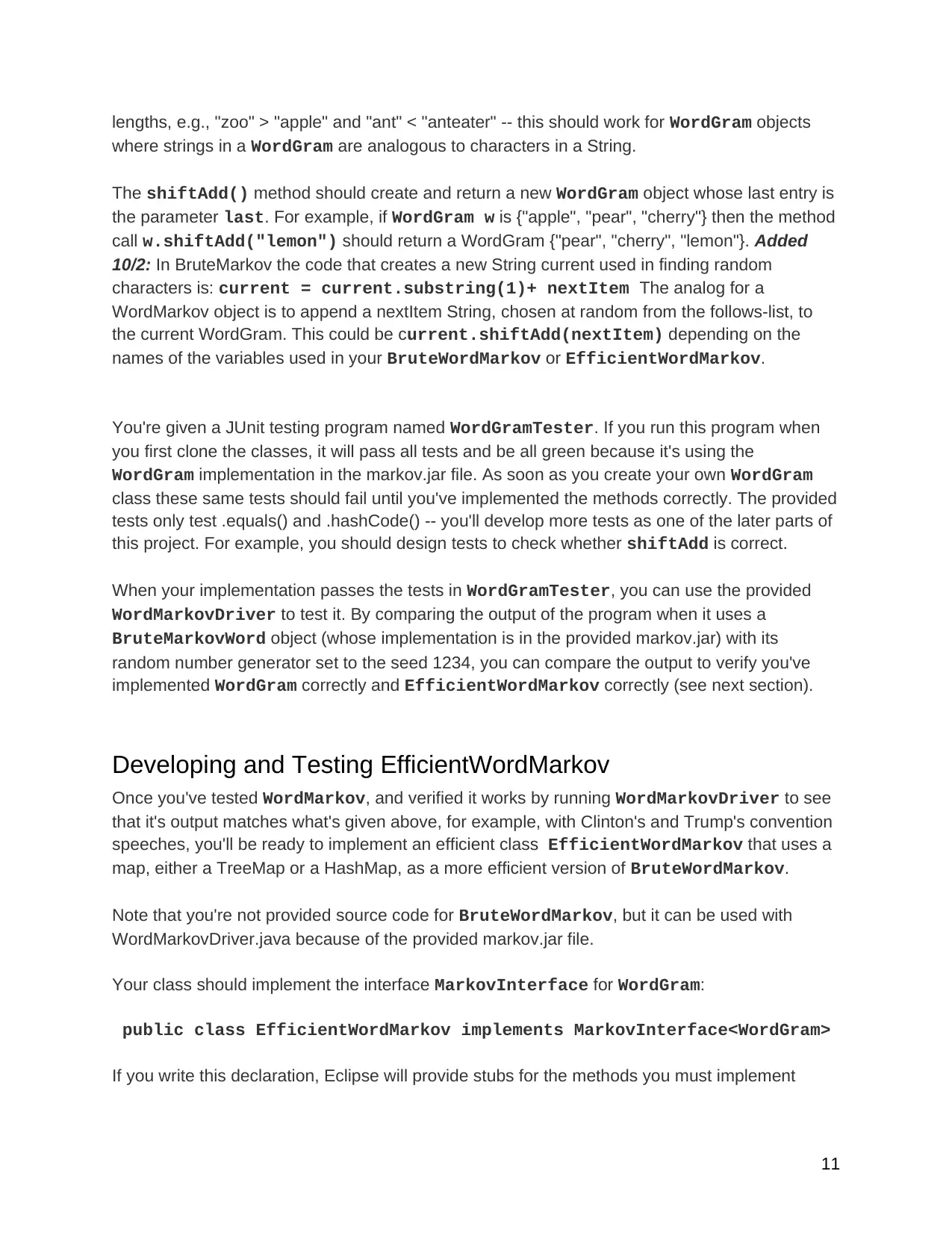

Developing and Testing EfficientWordMarkov

Once you've tested WordMarkov, and verified it works by running WordMarkovDriver to see

that it's output matches what's given above, for example, with Clinton's and Trump's convention

speeches, you'll be ready to implement an efficient class EfficientWordMarkov that uses a

map, either a TreeMap or a HashMap, as a more efficient version of BruteWordMarkov.

Note that you're not provided source code for BruteWordMarkov, but it can be used with

WordMarkovDriver.java because of the provided markov.jar file.

Your class should implement the interface MarkovInterface for WordGram:

public class EfficientWordMarkov implements MarkovInterface<WordGram>

If you write this declaration, Eclipse will provide stubs for the methods you must implement

11

where strings in a WordGram are analogous to characters in a String.

The shiftAdd() method should create and return a new WordGram object whose last entry is

the parameter last. For example, if WordGram w is {"apple", "pear", "cherry"} then the method

call w.shiftAdd("lemon") should return a WordGram {"pear", "cherry", "lemon"}. Added

10/2: In BruteMarkov the code that creates a new String current used in finding random

characters is: current = current.substring(1)+ nextItem The analog for a

WordMarkov object is to append a nextItem String, chosen at random from the follows-list, to

the current WordGram. This could be current.shiftAdd(nextItem) depending on the

names of the variables used in your BruteWordMarkov or EfficientWordMarkov.

You're given a JUnit testing program named WordGramTester. If you run this program when

you first clone the classes, it will pass all tests and be all green because it's using the

WordGram implementation in the markov.jar file. As soon as you create your own WordGram

class these same tests should fail until you've implemented the methods correctly. The provided

tests only test .equals() and .hashCode() -- you'll develop more tests as one of the later parts of

this project. For example, you should design tests to check whether shiftAdd is correct.

When your implementation passes the tests in WordGramTester, you can use the provided

WordMarkovDriver to test it. By comparing the output of the program when it uses a

BruteMarkovWord object (whose implementation is in the provided markov.jar) with its

random number generator set to the seed 1234, you can compare the output to verify you've

implemented WordGram correctly and EfficientWordMarkov correctly (see next section).

Developing and Testing EfficientWordMarkov

Once you've tested WordMarkov, and verified it works by running WordMarkovDriver to see

that it's output matches what's given above, for example, with Clinton's and Trump's convention

speeches, you'll be ready to implement an efficient class EfficientWordMarkov that uses a

map, either a TreeMap or a HashMap, as a more efficient version of BruteWordMarkov.

Note that you're not provided source code for BruteWordMarkov, but it can be used with

WordMarkovDriver.java because of the provided markov.jar file.

Your class should implement the interface MarkovInterface for WordGram:

public class EfficientWordMarkov implements MarkovInterface<WordGram>

If you write this declaration, Eclipse will provide stubs for the methods you must implement

11

● void setTraining(String text)

● String getRandomText(int numWords)

● ArrayList<String> getFollows(WordGram key)

The implementation of getRandomText will be very similar to the implementation in

EfficientMarkov but will use a WordGram object rather than a String. In this implementation,

rather than calling substring() you'll create a WordGram. In this implementation, rather than

concatenating two strings to get a new string you'll call .shiftAdd() to create a new

WordGram.

Your getFollows() method will simply look up a value in a map, just as it did in

EfficientMarkov. Finally, the setTraining() method will build the map, similarly to the

map built in EfficientMarkov but using WordGram objects as keys rather than Strings as

keys.

Developing JUnit Tests

You should add a test to the WordGramTester class to test the correctness of toString()

and the correctness of shiftAdd(). You'll be able to use the toString() method to test

shiftAdd(), for example. You should add two testing methods, using the existing methods as

a model. One should be testToString() and the other should be testShiftAdd(). Create

at least four test cases for each method. In writing the testing for shiftAdd() you can assume

that the .toString() method will work correctly to verify that .shiftAdd() works correctly.

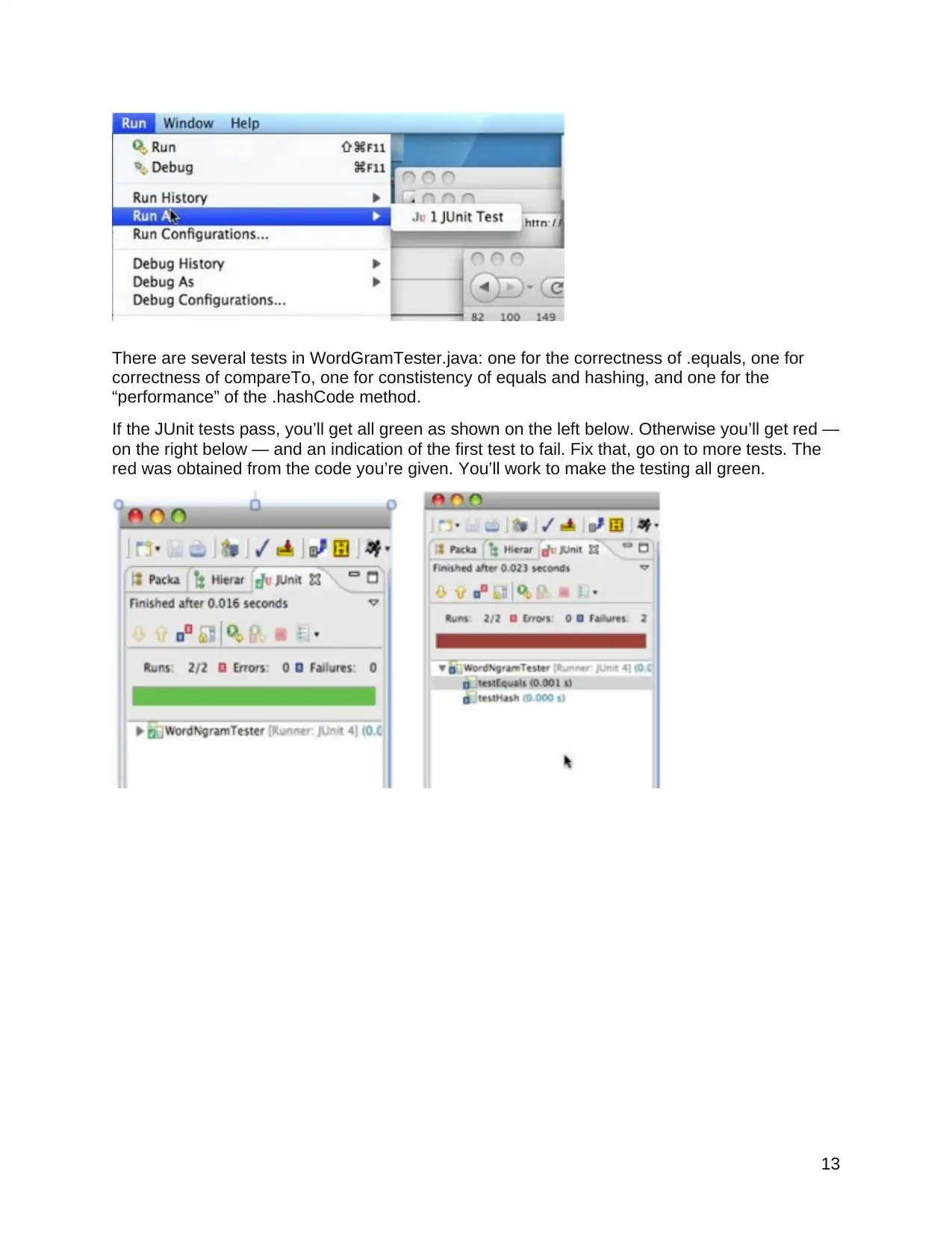

JUnit Tests

To test your WordGram and EfficientMarkov classes you’re given testing code. This code

tests individual methods in your class, these tests are called unit tests and so you need to use

the standard JUnit unit-testing library with the WordgramTest.java file to test your

implementation. Similarly with the provided MarkovTest.java file.

To choose Run as JUnit test first use the Run As option in the Run menu as shown on the left

below. You have to select the JUnit option as shown on the right below. Most of you will have

that as the only option.

12

● String getRandomText(int numWords)

● ArrayList<String> getFollows(WordGram key)

The implementation of getRandomText will be very similar to the implementation in

EfficientMarkov but will use a WordGram object rather than a String. In this implementation,

rather than calling substring() you'll create a WordGram. In this implementation, rather than

concatenating two strings to get a new string you'll call .shiftAdd() to create a new

WordGram.

Your getFollows() method will simply look up a value in a map, just as it did in

EfficientMarkov. Finally, the setTraining() method will build the map, similarly to the

map built in EfficientMarkov but using WordGram objects as keys rather than Strings as

keys.

Developing JUnit Tests

You should add a test to the WordGramTester class to test the correctness of toString()

and the correctness of shiftAdd(). You'll be able to use the toString() method to test

shiftAdd(), for example. You should add two testing methods, using the existing methods as

a model. One should be testToString() and the other should be testShiftAdd(). Create

at least four test cases for each method. In writing the testing for shiftAdd() you can assume

that the .toString() method will work correctly to verify that .shiftAdd() works correctly.

JUnit Tests

To test your WordGram and EfficientMarkov classes you’re given testing code. This code

tests individual methods in your class, these tests are called unit tests and so you need to use

the standard JUnit unit-testing library with the WordgramTest.java file to test your

implementation. Similarly with the provided MarkovTest.java file.

To choose Run as JUnit test first use the Run As option in the Run menu as shown on the left

below. You have to select the JUnit option as shown on the right below. Most of you will have

that as the only option.

12

There are several tests in WordGramTester.java: one for the correctness of .equals, one for

correctness of compareTo, one for constistency of equals and hashing, and one for the

“performance” of the .hashCode method.

If the JUnit tests pass, you’ll get all green as shown on the left below. Otherwise you’ll get red —

on the right below — and an indication of the first test to fail. Fix that, go on to more tests. The

red was obtained from the code you’re given. You’ll work to make the testing all green.

13

correctness of compareTo, one for constistency of equals and hashing, and one for the

“performance” of the .hashCode method.

If the JUnit tests pass, you’ll get all green as shown on the left below. Otherwise you’ll get red —

on the right below — and an indication of the first test to fail. Fix that, go on to more tests. The

red was obtained from the code you’re given. You’ll work to make the testing all green.

13

1 out of 13

Related Documents

Your All-in-One AI-Powered Toolkit for Academic Success.

+13062052269

info@desklib.com

Available 24*7 on WhatsApp / Email

![[object Object]](/_next/static/media/star-bottom.7253800d.svg)

Unlock your academic potential

© 2024 | Zucol Services PVT LTD | All rights reserved.