Application of Deep Learning (DL) to Natural Language Processing (NLP)

VerifiedAdded on 2021/08/30

|12

|4080

|116

Report

AI Summary

This report proposes an investigation into the application of Deep Learning (DL) in Natural Language Processing (NLP). It begins with an introduction to DL and NLP, highlighting the five major tasks of NLP and the advancements made by DL in these areas. The report then outlines the problem statement, which focuses on the application of DL to tasks such as sentiment analysis and named entity recognition. The aim of the study is to explore multiple DL methods for NLP, with specific objectives to implement and test explainable DL methods and explore practical implications of DL in NLP. A literature review summarizes the use of DL in machine translation, image recovery, and age-based natural language utterances. The report discusses the advantages of DL, such as its ability to learn representations and be applied from start to finish, and its challenges, including the lack of interpretability, handling of long tails, and the need for large datasets. It further explores the motivation for DL in NLP, emphasizing the importance of information representation and the use of neural network language models. The report also touches on the challenges of unsupervised resource learning and the application of the skip-thought model. Overall, the report provides a comprehensive overview of the current state and future prospects of DL in NLP, including its benefits and challenges.

Running Head: PROPOSAL

Application of Deep Learning (DL) to Natural Language Processing (NLP)

[Name of Institute]

[Name of Student]

[Date]

Application of Deep Learning (DL) to Natural Language Processing (NLP)

[Name of Institute]

[Name of Student]

[Date]

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

Proposal 2

TABLE OF CONTENTS

Introduction......................................................................................................................................3

Problem Statement.......................................................................................................................4

Aim and Objectives......................................................................................................................4

Literature Review............................................................................................................................4

Advantages and Challenges.........................................................................................................5

Advantages...............................................................................................................................5

Challenges................................................................................................................................5

Motivation for Deep Learning in NLP.........................................................................................6

Dialogue Systems.........................................................................................................................9

Methods...........................................................................................................................................9

Linguistic Phenomena................................................................................................................10

Neural Network Components.....................................................................................................10

REFERENCES..............................................................................................................................11

TABLE OF CONTENTS

Introduction......................................................................................................................................3

Problem Statement.......................................................................................................................4

Aim and Objectives......................................................................................................................4

Literature Review............................................................................................................................4

Advantages and Challenges.........................................................................................................5

Advantages...............................................................................................................................5

Challenges................................................................................................................................5

Motivation for Deep Learning in NLP.........................................................................................6

Dialogue Systems.........................................................................................................................9

Methods...........................................................................................................................................9

Linguistic Phenomena................................................................................................................10

Neural Network Components.....................................................................................................10

REFERENCES..............................................................................................................................11

Proposal 3

Application of Deep Learning (DL) to Natural Language Processing (NLP)

Introduction

Deep Learning (DL) refers to the advances made by artificial intelligence in learning and

using neural networks such as “Deep Neural Networks (DNN), Convolutional Neural Networks

(CNN), and Recurrent Neural Networks (RNN)” (Otter, et al., 2020). Recently, DL has been

effectively applied to “Natural Language Processing (NLP)” and noteworthy progress has been

made (Hasan and Farri, 2019). This article will summarize the recent advances made by DL in

NLP and discusses its benefits and challenges (Rao and McMahan, 2019). There are 5 major

tasks for NLP, including “classification, matching, translation, structured prediction, and

sequential decision-making processes” (Huang, et al., 2019).

The first four tasks can be traced back to DL methods that have overtaken the traditional

methods (Landolt, et al., 2021). Training of system and result-oriented learning from start to

finish are important features of DL, making it a useful resource for NLP. It may not be enough

just to argue and make decisions on certain grounds of language processing, which is essential

for complex issues such as multiple interchange cycles (Zhang, et al., 2020). Moreover,

integrating translation processing and neural processing and dealing with the long-tail

phenomenon is also challenging for DL in NLP (Bacchi, et al., 2019).

“Natural Language Processing (NLP)” is a software engineering subcommand that

provides support between natural language and the PC. It enables machines to acquire, process

and parse human language (Rao and McMahan, 2019). The importance of NLP as a device that

supports the perception of human-generated information is a reasonable consequence of the

contextuality of information. By better understanding the context, information becomes more

important, making it suitable for inspection and text mining (through AI) (Sorin, et al., 2020).

NLP offers this through projects and examples of human communication. The advancement of

NLP technology increasingly relies on information-driven methodologies that can help create

more remarkable and powerful models (Young, et al., 2018).

The constant advancement of computing power, such as the increased accessibility of

vast information, offers opportunities for DL, which is probably the most attractive method in

NLP, especially considering that DL is used in computer vision and speech recognition (Torfi, et

al., 2020). These advancements have led people to move from traditional information-based

Application of Deep Learning (DL) to Natural Language Processing (NLP)

Introduction

Deep Learning (DL) refers to the advances made by artificial intelligence in learning and

using neural networks such as “Deep Neural Networks (DNN), Convolutional Neural Networks

(CNN), and Recurrent Neural Networks (RNN)” (Otter, et al., 2020). Recently, DL has been

effectively applied to “Natural Language Processing (NLP)” and noteworthy progress has been

made (Hasan and Farri, 2019). This article will summarize the recent advances made by DL in

NLP and discusses its benefits and challenges (Rao and McMahan, 2019). There are 5 major

tasks for NLP, including “classification, matching, translation, structured prediction, and

sequential decision-making processes” (Huang, et al., 2019).

The first four tasks can be traced back to DL methods that have overtaken the traditional

methods (Landolt, et al., 2021). Training of system and result-oriented learning from start to

finish are important features of DL, making it a useful resource for NLP. It may not be enough

just to argue and make decisions on certain grounds of language processing, which is essential

for complex issues such as multiple interchange cycles (Zhang, et al., 2020). Moreover,

integrating translation processing and neural processing and dealing with the long-tail

phenomenon is also challenging for DL in NLP (Bacchi, et al., 2019).

“Natural Language Processing (NLP)” is a software engineering subcommand that

provides support between natural language and the PC. It enables machines to acquire, process

and parse human language (Rao and McMahan, 2019). The importance of NLP as a device that

supports the perception of human-generated information is a reasonable consequence of the

contextuality of information. By better understanding the context, information becomes more

important, making it suitable for inspection and text mining (through AI) (Sorin, et al., 2020).

NLP offers this through projects and examples of human communication. The advancement of

NLP technology increasingly relies on information-driven methodologies that can help create

more remarkable and powerful models (Young, et al., 2018).

The constant advancement of computing power, such as the increased accessibility of

vast information, offers opportunities for DL, which is probably the most attractive method in

NLP, especially considering that DL is used in computer vision and speech recognition (Torfi, et

al., 2020). These advancements have led people to move from traditional information-based

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

Proposal 4

methods to new information-based methods of promoting NLP. The goal of this transformation

is that the new method is more efficient in terms of results and the design is simpler (Deng and

Liu, 2018).

Problem Statement

DL uses “Deep Neural Networks” to measure a large amount of information and

familiarize with the problem of information processing. It can solve any problem as long as user

have access to a large and relevant dataset(s). It also examines the important features of problem

definition. In the field of NLP, there are many important applications that are widely used by

individuals, similar to machine translation, speech recognition, and information retrieval. As DL

progressed, more and more NLP tasks were solved well with the application of DL methods.

Accordingly, a unique and meaningful AI methodology is provided to make the operation

of DL models in NLP tasks such as sentiment inspection easier and more meaningful. Syntax

entity detection is a fundamental task in NLP and is also used in many applications such as web

indexing and online advertising. However, as a fundamental and important venture in NLP, there

is much work in using deep neural networks to solve “Named Entity Recognition (NER)”

problems, whilst very little work is in applying rational methods of DL.

Aim and Objectives

The aim of this study is to explore multiple deep learning methods for NLP. The

objectives of this study are stated below:

Implement and test the explainable DL methods

To explore the latest practical implication practices of DL in NLP

Literature Review

According to the studies, most of the NLP problems can be formalized in these five tasks

(as mentioned above). In these tasks, words, phrases, sentences, parts and even files are generally

considered to be a series of tags (strings) and although they can handle several complicated facts

in comparison (Sorin, et al., 2020). In addition, sentences are the most common unit of

processing. Studies recently discovered that DL can be updated and viewed in the first four

assignments and is becoming a groundbreaking assignment innovation (Zhang, et al., 2020). Of

methods to new information-based methods of promoting NLP. The goal of this transformation

is that the new method is more efficient in terms of results and the design is simpler (Deng and

Liu, 2018).

Problem Statement

DL uses “Deep Neural Networks” to measure a large amount of information and

familiarize with the problem of information processing. It can solve any problem as long as user

have access to a large and relevant dataset(s). It also examines the important features of problem

definition. In the field of NLP, there are many important applications that are widely used by

individuals, similar to machine translation, speech recognition, and information retrieval. As DL

progressed, more and more NLP tasks were solved well with the application of DL methods.

Accordingly, a unique and meaningful AI methodology is provided to make the operation

of DL models in NLP tasks such as sentiment inspection easier and more meaningful. Syntax

entity detection is a fundamental task in NLP and is also used in many applications such as web

indexing and online advertising. However, as a fundamental and important venture in NLP, there

is much work in using deep neural networks to solve “Named Entity Recognition (NER)”

problems, whilst very little work is in applying rational methods of DL.

Aim and Objectives

The aim of this study is to explore multiple deep learning methods for NLP. The

objectives of this study are stated below:

Implement and test the explainable DL methods

To explore the latest practical implication practices of DL in NLP

Literature Review

According to the studies, most of the NLP problems can be formalized in these five tasks

(as mentioned above). In these tasks, words, phrases, sentences, parts and even files are generally

considered to be a series of tags (strings) and although they can handle several complicated facts

in comparison (Sorin, et al., 2020). In addition, sentences are the most common unit of

processing. Studies recently discovered that DL can be updated and viewed in the first four

assignments and is becoming a groundbreaking assignment innovation (Zhang, et al., 2020). Of

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

Proposal 5

all the problems of NLP, the advancement of machine translation deserves a special mention.

Neural machine translation, like machine translation with DL, has in fact overtaken the usual

measurable machine translation (Torfi, et al., 2020). The leading neural translation framework

uses sequence-by-sequence learning models with RNNs.

Interestingly, DL also enables some applications, like, DL has been effectively applied

for image recovery, where texts and images are first converted to vector representations using

CNN (Deng and Liu, 2018). These symbols are matched with the DNN and the meaning of the

image for the query is determined (Rao and McMahan, 2019). DL is also used for age-based

natural language utterances, where the structure responds accordingly to an expression and

prepares the model in sequence-by-sequence learning (Zhang, et al., 2020).

Advantages and Challenges

DL will certainly bring benefits and challenges when implied to NLP.

Advantages

One can imagine that completing the preparation and learning of representation really

separates DL from the usual AI methodologies and makes it an incredible piece of hardware for

NLP (Guo, et al., 2019).

It is normal to prepare for applying DL from start to finish (Guo, et al., 2019). This is due

to the model which is rich in representation and the data contained in the information can be

“coded” into the model. In neural machine translation, for example, the model is developed

naturally from the same corpus, usually without artificial intermediaries (Zhang, et al., 2020).

Associated to the traditional method of actual machine translation, this is obviously an advantage

where feature design is very important.

Through DL, various representations of structured information such as content and

images can be learned as true respectable vectors (Deng and Liu, 2018). This makes it possible to

process the data in different ways. For example, image recovery allows to coordinate the search

(text) for images and keep track of the most important images, as each image is treated as a

vector (Landolt, et al., 2021).

Challenges

DL has more mutual challenges, such as the lack of hypotheses, the lack of

interpretability of models, and the need for large amounts of information and incredible recorded

all the problems of NLP, the advancement of machine translation deserves a special mention.

Neural machine translation, like machine translation with DL, has in fact overtaken the usual

measurable machine translation (Torfi, et al., 2020). The leading neural translation framework

uses sequence-by-sequence learning models with RNNs.

Interestingly, DL also enables some applications, like, DL has been effectively applied

for image recovery, where texts and images are first converted to vector representations using

CNN (Deng and Liu, 2018). These symbols are matched with the DNN and the meaning of the

image for the query is determined (Rao and McMahan, 2019). DL is also used for age-based

natural language utterances, where the structure responds accordingly to an expression and

prepares the model in sequence-by-sequence learning (Zhang, et al., 2020).

Advantages and Challenges

DL will certainly bring benefits and challenges when implied to NLP.

Advantages

One can imagine that completing the preparation and learning of representation really

separates DL from the usual AI methodologies and makes it an incredible piece of hardware for

NLP (Guo, et al., 2019).

It is normal to prepare for applying DL from start to finish (Guo, et al., 2019). This is due

to the model which is rich in representation and the data contained in the information can be

“coded” into the model. In neural machine translation, for example, the model is developed

naturally from the same corpus, usually without artificial intermediaries (Zhang, et al., 2020).

Associated to the traditional method of actual machine translation, this is obviously an advantage

where feature design is very important.

Through DL, various representations of structured information such as content and

images can be learned as true respectable vectors (Deng and Liu, 2018). This makes it possible to

process the data in different ways. For example, image recovery allows to coordinate the search

(text) for images and keep track of the most important images, as each image is treated as a

vector (Landolt, et al., 2021).

Challenges

DL has more mutual challenges, such as the lack of hypotheses, the lack of

interpretability of models, and the need for large amounts of information and incredible recorded

Proposal 6

assets (Deng and Liu, 2018). NLP presents some major challenges, most notably the problem of

handling long tails, the inability to deal directly with symbols, and the inefficiency of reasoning

and decision-making (Rao and McMahan, 2019).

Information in natural language is always sent in accordance with applicable law. For

example, the size of the jargon increases with the size of the information. This means that there

will always be situations that the prepared information cannot cover, no matter how much

prepared information there is. Describing the lengthy management problem is a great test for DL

(Deng and Liu, 2018). This problem is difficult to solve with DL alone.

Speech information is usually image information that is not identical to vector

information (respected real vectors) commonly used in DL (Otter, et al., 2020). Now the image

information in speech is converted into vector information, and then the contribution to the

neural network and the output of the neural network are converted into image information (Deng

and Liu, 2018). In fact, much knowledge of NLP is used as symbols, including language

knowledge (e.g., language structure), vocabulary knowledge, and world knowledge (such as

Wikipedia). Currently, DL strategies do not yet use this knowledge (Rao and McMahan, 2019).

The illustrated representation is not difficult to decipher and control, and the vector

representation is full of ambiguity and turbulence. The description of the amalgamation of image

and vector information and the quality of the use of these two types of information is still an

open question in NLP (Zhang, et al., 2020).

There are complex and compound tasks in NLP that only DL cannot easily detect. For

example, multi-turn exchanges are an extremely complicated process. Includes language

comprehension, language age, executive speech, knowledge base visits, and interpreters (Rao

and McMahan, 2019). Terms of communication (syntaxes) can be formalized as an ongoing

decision-making process and learning support can be used as an integral part (Hasan and Farri,

2019). It is clear that the combination of DL and supervised learning can be useful for the task

that DL itself was in the past (Yang, et al., 2019).

Motivation for Deep Learning in NLP

DL applications are based on the representation of resources and the decision making of

architecture-like DL algorithms. They relate to the information representation or the learning

structure (Hasan and Farri, 2019). The appalling thing about presenting information is that there

assets (Deng and Liu, 2018). NLP presents some major challenges, most notably the problem of

handling long tails, the inability to deal directly with symbols, and the inefficiency of reasoning

and decision-making (Rao and McMahan, 2019).

Information in natural language is always sent in accordance with applicable law. For

example, the size of the jargon increases with the size of the information. This means that there

will always be situations that the prepared information cannot cover, no matter how much

prepared information there is. Describing the lengthy management problem is a great test for DL

(Deng and Liu, 2018). This problem is difficult to solve with DL alone.

Speech information is usually image information that is not identical to vector

information (respected real vectors) commonly used in DL (Otter, et al., 2020). Now the image

information in speech is converted into vector information, and then the contribution to the

neural network and the output of the neural network are converted into image information (Deng

and Liu, 2018). In fact, much knowledge of NLP is used as symbols, including language

knowledge (e.g., language structure), vocabulary knowledge, and world knowledge (such as

Wikipedia). Currently, DL strategies do not yet use this knowledge (Rao and McMahan, 2019).

The illustrated representation is not difficult to decipher and control, and the vector

representation is full of ambiguity and turbulence. The description of the amalgamation of image

and vector information and the quality of the use of these two types of information is still an

open question in NLP (Zhang, et al., 2020).

There are complex and compound tasks in NLP that only DL cannot easily detect. For

example, multi-turn exchanges are an extremely complicated process. Includes language

comprehension, language age, executive speech, knowledge base visits, and interpreters (Rao

and McMahan, 2019). Terms of communication (syntaxes) can be formalized as an ongoing

decision-making process and learning support can be used as an integral part (Hasan and Farri,

2019). It is clear that the combination of DL and supervised learning can be useful for the task

that DL itself was in the past (Yang, et al., 2019).

Motivation for Deep Learning in NLP

DL applications are based on the representation of resources and the decision making of

architecture-like DL algorithms. They relate to the information representation or the learning

structure (Hasan and Farri, 2019). The appalling thing about presenting information is that there

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

Proposal 7

is often a mismatch between the information that is important to the work being done and the

presentation that actually leads to great results. For example, in a surveys, it is noted that some

linguists believe that dictionary semantics, syntactic design, and context are important (Hasan

and Farri, 2019). Taking all factors into account, previous research based on the “Bag-of-Word

(BoW) model” showed sufficient executive power (Guo, et al., 2019).

The BoW model, which is generally regarded as a vector space model, contains a

representation that describes only the repetition of words and their events. BoW ignores word

requirements and contexts and considers each word as an exceptional property. BoW does not

take syntactic structure into account, but gives reasonable results for applications that some

people consider inferior. This finding proposes that modest representations in combination with

large amounts of information can fill in more or better than more unpredictable representations

(Bacchi, et al., 2019). These results confirm the debate about the importance of DL architectures

and algorithms. The advancement of NLP is often inextricably linked to powerful speech

demonstrations (Deng and Liu, 2018).

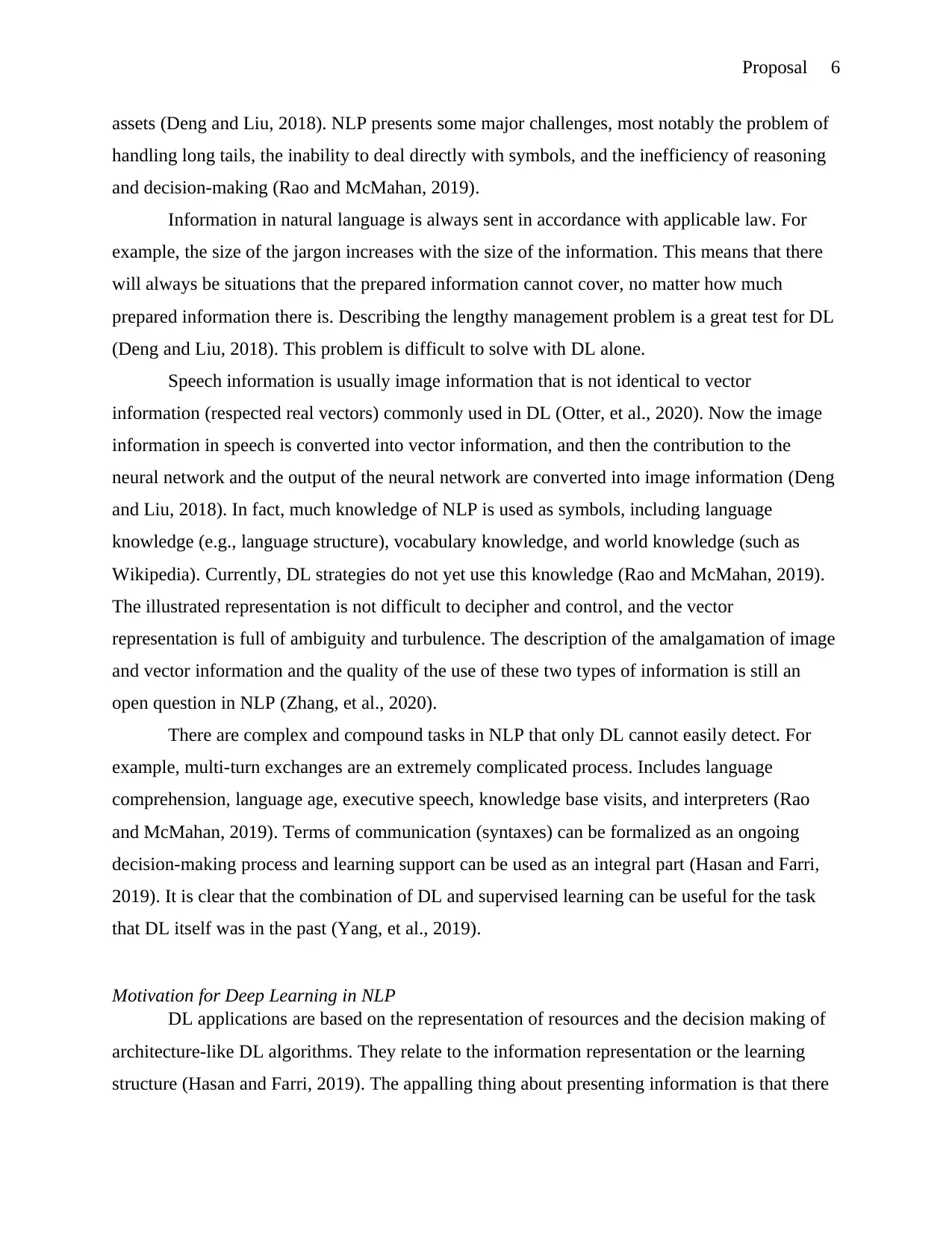

One of the goals of measurable language representation is the probabilistic representation

of word sequences in the language, which is a confusing task due to the scourge of

dimensionality (Hasan and Farri, 2019). The research is a further step in demonstrating neural

network language meant for overcoming the scourge of dimensionality by (1) learning adequate

word representations and (2) providing sequence probabilistic work (Rao and McMahan, 2019).

Compared to other areas, such as computer vision, a key test in NLP research is the use of fact

models to complete the complexity of the language's internal and external representation (Guo, et

al., 2019). A fundamental task in an NLP application is to provide a representation of encrypted

text into the required (understandable) language. This includes the learning function, which

separates important data for further processing and checking from the original information

(Otter, et al., 2020).

is often a mismatch between the information that is important to the work being done and the

presentation that actually leads to great results. For example, in a surveys, it is noted that some

linguists believe that dictionary semantics, syntactic design, and context are important (Hasan

and Farri, 2019). Taking all factors into account, previous research based on the “Bag-of-Word

(BoW) model” showed sufficient executive power (Guo, et al., 2019).

The BoW model, which is generally regarded as a vector space model, contains a

representation that describes only the repetition of words and their events. BoW ignores word

requirements and contexts and considers each word as an exceptional property. BoW does not

take syntactic structure into account, but gives reasonable results for applications that some

people consider inferior. This finding proposes that modest representations in combination with

large amounts of information can fill in more or better than more unpredictable representations

(Bacchi, et al., 2019). These results confirm the debate about the importance of DL architectures

and algorithms. The advancement of NLP is often inextricably linked to powerful speech

demonstrations (Deng and Liu, 2018).

One of the goals of measurable language representation is the probabilistic representation

of word sequences in the language, which is a confusing task due to the scourge of

dimensionality (Hasan and Farri, 2019). The research is a further step in demonstrating neural

network language meant for overcoming the scourge of dimensionality by (1) learning adequate

word representations and (2) providing sequence probabilistic work (Rao and McMahan, 2019).

Compared to other areas, such as computer vision, a key test in NLP research is the use of fact

models to complete the complexity of the language's internal and external representation (Guo, et

al., 2019). A fundamental task in an NLP application is to provide a representation of encrypted

text into the required (understandable) language. This includes the learning function, which

separates important data for further processing and checking from the original information

(Otter, et al., 2020).

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

Proposal 8

“Considering a given sequence, the skip-thought model generates the surrounding sequences using the trained encoder. The

assumption is that the surrounding sentences are closely related, contextually”

The traditional strategy starts with the manual production of complicated functions

through careful manual review of specific applications and then moves on to algorithms to

separate the genesis of these functions and take advantage of them (Otter, et al., 2020). NLP

technology is highly information-driven and can be used in various efforts to provide rich

representations of information. Due to the vast measurement of unmarked information, learning

from unsupervised resources is seen as an urgent task in NLP (Young, et al., 2018).

Unsupervised resource learning is basically learning from unlabeled information

resources to deliver a low-dimensional representation of a high-dimensional information space

(Bacchi, et al., 2019). For this, some methods have been proposed and effectively implemented,

such as the implicit K-grouping. With the advent of DL and a large amount of unencrypted

information, unsupervised resource learning has become an important task of representative

learning, setting a precedent for NLP applications (Deng and Liu, 2018). Currently, much of

NLP's work relies on clear information, and the acceptance of unannotated information has

further encouraged the use of unsupervised technological research, driven by in-depth

information (Rao and McMahan, 2019).

Distributed representation is the advancement in the representation of conservative and

low-dimensional information, and each representation focuses on certain specific guiding

features (Hasan and Farri, 2019). For the structure of NLP, the computer system learns the

representation of the word because of the problem of the central representation of the symbol.

From the beginning, study focused on solving functions and then on learning different methods

of rendering words. Coding input sources can be characters, words, phrases, or other language

components (Young, et al., 2018). In most cases, the minimized representation of a word is more

attractive than some representations. Until now, the most effective way to choose the design and

level of the text display has been an open-ended question (Yang, et al., 2019).

Therefore, after the word2vec method was proposed, doc2vec was proposed as a verified

algorithm and named “Paragraph Vector (PV)”. The purpose of PV is to obtain a fixed length

representation of variable length and text parts, e.g., Sentences and Reports (Young, et al., 2018).

One of the basic goals of “doc2vec” is to overcome the limitations of models such as BoW and

deliver reliable results for the applications such as content rating testing (Torfi, et al., 2020). The

last method is to skip the thinking model that word2vec applies at the sentence level. When using

“Considering a given sequence, the skip-thought model generates the surrounding sequences using the trained encoder. The

assumption is that the surrounding sentences are closely related, contextually”

The traditional strategy starts with the manual production of complicated functions

through careful manual review of specific applications and then moves on to algorithms to

separate the genesis of these functions and take advantage of them (Otter, et al., 2020). NLP

technology is highly information-driven and can be used in various efforts to provide rich

representations of information. Due to the vast measurement of unmarked information, learning

from unsupervised resources is seen as an urgent task in NLP (Young, et al., 2018).

Unsupervised resource learning is basically learning from unlabeled information

resources to deliver a low-dimensional representation of a high-dimensional information space

(Bacchi, et al., 2019). For this, some methods have been proposed and effectively implemented,

such as the implicit K-grouping. With the advent of DL and a large amount of unencrypted

information, unsupervised resource learning has become an important task of representative

learning, setting a precedent for NLP applications (Deng and Liu, 2018). Currently, much of

NLP's work relies on clear information, and the acceptance of unannotated information has

further encouraged the use of unsupervised technological research, driven by in-depth

information (Rao and McMahan, 2019).

Distributed representation is the advancement in the representation of conservative and

low-dimensional information, and each representation focuses on certain specific guiding

features (Hasan and Farri, 2019). For the structure of NLP, the computer system learns the

representation of the word because of the problem of the central representation of the symbol.

From the beginning, study focused on solving functions and then on learning different methods

of rendering words. Coding input sources can be characters, words, phrases, or other language

components (Young, et al., 2018). In most cases, the minimized representation of a word is more

attractive than some representations. Until now, the most effective way to choose the design and

level of the text display has been an open-ended question (Yang, et al., 2019).

Therefore, after the word2vec method was proposed, doc2vec was proposed as a verified

algorithm and named “Paragraph Vector (PV)”. The purpose of PV is to obtain a fixed length

representation of variable length and text parts, e.g., Sentences and Reports (Young, et al., 2018).

One of the basic goals of “doc2vec” is to overcome the limitations of models such as BoW and

deliver reliable results for the applications such as content rating testing (Torfi, et al., 2020). The

last method is to skip the thinking model that word2vec applies at the sentence level. When using

Proposal 9

an “Encoder-Decoder Architecture (EDA)”, the model uses a specific phrase to create a

containment phrase (Guo, et al., 2019).

Dialogue Systems

Dialogue systems are fast becoming the primary tool for human-machine collaboration,

in part because of their potential and business value (Yang, et al., 2019). An application is an

automated customer service that supports blocks and mortar networks and organisations (Otter,

et al., 2020). Clients expect to be prompt, accurate and respectful at all times in managing the

organisation and its administrative departments (Otter, et al., 2020). Due to the high cost of

skilled staff, companies usually use insightful dialogue machines. Note that the terms

conversation machine and language machine are often used in reverse (Deng and Liu, 2018).

Exchange structure is usually task oriented or not. Whilst there may be parts of “Automatic

Speech Recognition (ASR)” and “Language-to-Speech (L2S)” in a language framework, this part

of the conversation revolves solely around the language part of the exchange framework; Ideas

related to discourse innovation are ignored (Guo, et al., 2019).

Although valuable fact models are used in the backend of the exchange framework

(especially in the language acquisition module), most communicated language structures are

based on expensive manual creation and manual activity functions (Yang, et al., 2019).

Moreover, the versatility of different spaces and functions complicates these physical

construction structures. DL works with the formation of a discourse structure from the beginning

to the end of the task context, which is the systematic summary of the previous interpretation

task - the discussion of the explicit exchange of assets (Zhang, et al., 2020).

Methods

For components of the partner's neural tissue with features of linguistics, the most

popular method is to predict these features from the early stage of the neural tissue. Typically, in

this procedure, a neural model is prepared in a particular task and its payload is paused (Hasan

and Farri, 2019). The prepared model is then used at this point to create a resource representation

for another task, run it in lieu of a spoken-comment corpus, and capture the representation

(masked state formulation). Then use another classification to predict the features of interest (the

[POS] grammatical label) (Huang, et al., 2019).

an “Encoder-Decoder Architecture (EDA)”, the model uses a specific phrase to create a

containment phrase (Guo, et al., 2019).

Dialogue Systems

Dialogue systems are fast becoming the primary tool for human-machine collaboration,

in part because of their potential and business value (Yang, et al., 2019). An application is an

automated customer service that supports blocks and mortar networks and organisations (Otter,

et al., 2020). Clients expect to be prompt, accurate and respectful at all times in managing the

organisation and its administrative departments (Otter, et al., 2020). Due to the high cost of

skilled staff, companies usually use insightful dialogue machines. Note that the terms

conversation machine and language machine are often used in reverse (Deng and Liu, 2018).

Exchange structure is usually task oriented or not. Whilst there may be parts of “Automatic

Speech Recognition (ASR)” and “Language-to-Speech (L2S)” in a language framework, this part

of the conversation revolves solely around the language part of the exchange framework; Ideas

related to discourse innovation are ignored (Guo, et al., 2019).

Although valuable fact models are used in the backend of the exchange framework

(especially in the language acquisition module), most communicated language structures are

based on expensive manual creation and manual activity functions (Yang, et al., 2019).

Moreover, the versatility of different spaces and functions complicates these physical

construction structures. DL works with the formation of a discourse structure from the beginning

to the end of the task context, which is the systematic summary of the previous interpretation

task - the discussion of the explicit exchange of assets (Zhang, et al., 2020).

Methods

For components of the partner's neural tissue with features of linguistics, the most

popular method is to predict these features from the early stage of the neural tissue. Typically, in

this procedure, a neural model is prepared in a particular task and its payload is paused (Hasan

and Farri, 2019). The prepared model is then used at this point to create a resource representation

for another task, run it in lieu of a spoken-comment corpus, and capture the representation

(masked state formulation). Then use another classification to predict the features of interest (the

[POS] grammatical label) (Huang, et al., 2019).

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

Proposal 10

Different strategies for finding the correspondence between different parts of nervous

tissue and certain attributes include calculating and accounting for the number of times the

payload matches language attributes (such as anaphora targets) or directly recording the

relationship between NLP activation and certain attributes; For example, link the RNN state

formulation to depth acoustic skills or the “Melfrequency Cepstral Coefficient (MFCC)” in the

syntax tree (Guo, et al., 2019). This form of communication can also be done through suggestion.

For example, examine the characteristics of the ABX separation task to assess how the neural

(vision-based) model of the utterance encodes the phonology (Deng and Liu, 2018).

Linguistic Phenomena

Different types of language information are broken down, from basic attributes such as

sentence length, word position, word existence or basic word requirement to lexical, syntactic

and semantic information. Speech/phoneme information, speaker information, and style and

complement information are concentrated in neural tissue models or common audiovisual

models for speech (Torfi, et al., 2020).

Neural Network Components

With respect to the subject of study, several components of the neural tissue are

examined, including word recording, gate unit, phrase recording, and sequence-to-sequence

model (seq2seq) (Deng and Liu, 2018). In general, there is less work to do to analyze the

convolutional neural network in NLP. In language processing, experts analyze layers in deep

neural networks for language confirmation and unique speaker integration. Some checks have

also been made on the correspondence between the audio-visual or speech-visual mainstream

model or the recording of words and the convolutional representation of the image (Rao and

McMahan, 2019).

Different strategies for finding the correspondence between different parts of nervous

tissue and certain attributes include calculating and accounting for the number of times the

payload matches language attributes (such as anaphora targets) or directly recording the

relationship between NLP activation and certain attributes; For example, link the RNN state

formulation to depth acoustic skills or the “Melfrequency Cepstral Coefficient (MFCC)” in the

syntax tree (Guo, et al., 2019). This form of communication can also be done through suggestion.

For example, examine the characteristics of the ABX separation task to assess how the neural

(vision-based) model of the utterance encodes the phonology (Deng and Liu, 2018).

Linguistic Phenomena

Different types of language information are broken down, from basic attributes such as

sentence length, word position, word existence or basic word requirement to lexical, syntactic

and semantic information. Speech/phoneme information, speaker information, and style and

complement information are concentrated in neural tissue models or common audiovisual

models for speech (Torfi, et al., 2020).

Neural Network Components

With respect to the subject of study, several components of the neural tissue are

examined, including word recording, gate unit, phrase recording, and sequence-to-sequence

model (seq2seq) (Deng and Liu, 2018). In general, there is less work to do to analyze the

convolutional neural network in NLP. In language processing, experts analyze layers in deep

neural networks for language confirmation and unique speaker integration. Some checks have

also been made on the correspondence between the audio-visual or speech-visual mainstream

model or the recording of words and the convolutional representation of the image (Rao and

McMahan, 2019).

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

Proposal 11

REFERENCES

Bacchi, S., Oakden-Rayner, L., Zerner, T., Kleinig, T., Patel, S. and Jannes, J., 2019. DL natural

language processing successfully predicts the cerebrovascular cause of transient ischemic

attack-like presentations. Stroke, 50(3), pp.758-760.

Deng, L. and Liu, Y. eds., 2018. DL in natural language processing. Springer.

Deng, L. and Liu, Y., 2018. A joint introduction to natural language processing and to DL. In DL

in natural language processing (pp. 1-22). Springer, Singapore.

Guo, W., Gao, H., Shi, J., Long, B., Zhang, L., Chen, B.C. and Agarwal, D., 2019, July. Deep

natural language processing for search and recommender systems. In Proceedings of the

25th ACM SIGKDD International Conference on Knowledge Discovery & Data

Mining (pp. 3199-3200).

Hasan, S.A. and Farri, O., 2019. Clinical natural language processing with DL. In Data Science

for Healthcare (pp. 147-171). Springer, Cham.

Huang, K., Hussain, A., Wang, Q.F. and Zhang, R. eds., 2019. DL: Fundamentals, Theory and

Applications (Vol. 2). Springer.

Landolt, S., Wambsganss, T. and Söllner, M., 2021. A Taxonomy for DL in Natural Language

Processing. Hawaii International Conference on System Sciences.

Otter, D.W., Medina, J.R. and Kalita, J.K., 2020. A survey of the usages of DL for natural

language processing. IEEE Transactions on Neural Networks and Learning Systems.

Rao, D. and McMahan, B., 2019. Natural language processing with PyTorch: build intelligent

language applications using DL. “ O'Reilly Media, Inc.”.

Sorin, V., Barash, Y., Konen, E. and Klang, E., 2020. DL for natural language processing in

radiology—fundamentals and a systematic review. Journal of the American College of

Radiology, 17(5), pp.639-648.

Torfi, A., Shirvani, R.A., Keneshloo, Y., Tavvaf, N. and Fox, E.A., 2020. Natural language

processing advancements by DL: A survey. arXiv preprint arXiv:2003.01200.

Yang, H., Luo, L., Chueng, L.P., Ling, D. and Chin, F., 2019. DL and its applications to natural

language processing. In DL: Fundamentals, theory and applications (pp. 89-109).

Springer, Cham.

REFERENCES

Bacchi, S., Oakden-Rayner, L., Zerner, T., Kleinig, T., Patel, S. and Jannes, J., 2019. DL natural

language processing successfully predicts the cerebrovascular cause of transient ischemic

attack-like presentations. Stroke, 50(3), pp.758-760.

Deng, L. and Liu, Y. eds., 2018. DL in natural language processing. Springer.

Deng, L. and Liu, Y., 2018. A joint introduction to natural language processing and to DL. In DL

in natural language processing (pp. 1-22). Springer, Singapore.

Guo, W., Gao, H., Shi, J., Long, B., Zhang, L., Chen, B.C. and Agarwal, D., 2019, July. Deep

natural language processing for search and recommender systems. In Proceedings of the

25th ACM SIGKDD International Conference on Knowledge Discovery & Data

Mining (pp. 3199-3200).

Hasan, S.A. and Farri, O., 2019. Clinical natural language processing with DL. In Data Science

for Healthcare (pp. 147-171). Springer, Cham.

Huang, K., Hussain, A., Wang, Q.F. and Zhang, R. eds., 2019. DL: Fundamentals, Theory and

Applications (Vol. 2). Springer.

Landolt, S., Wambsganss, T. and Söllner, M., 2021. A Taxonomy for DL in Natural Language

Processing. Hawaii International Conference on System Sciences.

Otter, D.W., Medina, J.R. and Kalita, J.K., 2020. A survey of the usages of DL for natural

language processing. IEEE Transactions on Neural Networks and Learning Systems.

Rao, D. and McMahan, B., 2019. Natural language processing with PyTorch: build intelligent

language applications using DL. “ O'Reilly Media, Inc.”.

Sorin, V., Barash, Y., Konen, E. and Klang, E., 2020. DL for natural language processing in

radiology—fundamentals and a systematic review. Journal of the American College of

Radiology, 17(5), pp.639-648.

Torfi, A., Shirvani, R.A., Keneshloo, Y., Tavvaf, N. and Fox, E.A., 2020. Natural language

processing advancements by DL: A survey. arXiv preprint arXiv:2003.01200.

Yang, H., Luo, L., Chueng, L.P., Ling, D. and Chin, F., 2019. DL and its applications to natural

language processing. In DL: Fundamentals, theory and applications (pp. 89-109).

Springer, Cham.

Proposal 12

Young, T., Hazarika, D., Poria, S. and Cambria, E., 2018. Recent trends in DL based natural

language processing. ieee Computational intelligenCe magazine, 13(3), pp.55-75.

Zhang, W.E., Sheng, Q.Z., Alhazmi, A. and Li, C., 2020. Adversarial attacks on deep-learning

models in natural language processing: A survey. ACM Transactions on Intelligent

Systems and Technology (TIST), 11(3), pp.1-41.

Young, T., Hazarika, D., Poria, S. and Cambria, E., 2018. Recent trends in DL based natural

language processing. ieee Computational intelligenCe magazine, 13(3), pp.55-75.

Zhang, W.E., Sheng, Q.Z., Alhazmi, A. and Li, C., 2020. Adversarial attacks on deep-learning

models in natural language processing: A survey. ACM Transactions on Intelligent

Systems and Technology (TIST), 11(3), pp.1-41.

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

1 out of 12

Related Documents

Your All-in-One AI-Powered Toolkit for Academic Success.

+13062052269

info@desklib.com

Available 24*7 on WhatsApp / Email

![[object Object]](/_next/static/media/star-bottom.7253800d.svg)

Unlock your academic potential

Copyright © 2020–2026 A2Z Services. All Rights Reserved. Developed and managed by ZUCOL.