Detailed Solution to Regression and Distribution Problems

Added on 2023/05/31

Homework Assignment

AI Summary

This assignment solution covers regression and distribution problems, including maximum likelihood estimation and Fisher Information Matrix analysis. The solution discusses Model A and Model B for pasture yield prediction, providing calculations and standard errors for maximum likelihood estimato...

Last Name 1

REGRESSION AND DISTRIBUTION PROBLEMS

Student's Name

Professor's Name

Course Name

Date

Regression and Distribution Problems

Question 1

Part A

The likelihood function can be maximized with respect to the parameter vector θ to obtain the estimators of the parameters (Montgomery, Peck, and Vining 2012).

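The maximum likelihood estimators are:

$$\hat{\beta}_1 = \frac{\sum_i (X_i - \bar{X})(Y_i - \bar{Y})}{\sum_i (X_i - \bar{X})^2}$$

$$\hat{\beta}_2 = \bar{Y} - \hat{\beta}_1 \bar{X}$$

$$\hat{v} = \frac{\sum_i (Y_i - \hat{Y}_i)^2}{n}$$

where $\bar{X}$ and $\bar{Y} = \frac{1}{n}\sum_i Y_i$ are the sample means and $\hat{Y}_i$ is the fitted value for observation $i$.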
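As a minimal sketch of how these closed-form estimators can be computed, the Python snippet below evaluates them on a small made-up data set. The data values and the function name `linear_mle` are illustrative assumptions; they are not the pasture data used in this assignment.

```python
def linear_mle(x, y):
    """Closed-form Gaussian MLEs for y = b2 + b1 * x + error.

    Returns (b1, b2, v): slope, intercept, and the variance MLE (divisor n).
    """
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sxy / sxx
    b2 = y_bar - b1 * x_bar
    fitted = [b2 + b1 * xi for xi in x]
    v = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted)) / n
    return b1, b2, v


# Hypothetical data, for illustration only.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
b1, b2, v = linear_mle(x, y)
print(b1, b2, v)
```

Note that the variance estimator divides by n rather than n - 2, so it is the maximum likelihood estimate and not the unbiased residual mean square.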

Part B

Fisher Information Matrix

Segment 1

For Model A

$$I(\beta_1, \beta_2, v) = -\sum_{i=1}^{n} \log\left( \frac{1}{(2\pi\sigma^2)^{n/2}} \exp\left( -\frac{1}{2\sigma^2} \sum_{i=1}^{n} (y_i - \beta_1 - \beta_2 t_i)^2 \right) \right)$$
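For reference, here is a worked sketch of the standard Fisher information calculation. It assumes that Model A is $Y_i \sim N(\beta_1 + \beta_2 t_i, v)$ independently, with $v = \sigma^2$; this model statement is inferred from the expression above, since the question text is not reproduced here. Under that assumption the Fisher information is the negative expected Hessian of the log-likelihood $\ell$:

```latex
\ell(\beta_1,\beta_2,v)
  = -\frac{n}{2}\log(2\pi v) - \frac{1}{2v}\sum_{i=1}^{n}(y_i-\beta_1-\beta_2 t_i)^2,
\qquad
I(\beta_1,\beta_2,v) = -E\left[\nabla^2 \ell\right]
  = \begin{pmatrix}
      \dfrac{n}{v} & \dfrac{\sum_i t_i}{v} & 0 \\[2ex]
      \dfrac{\sum_i t_i}{v} & \dfrac{\sum_i t_i^2}{v} & 0 \\[2ex]
      0 & 0 & \dfrac{n}{2v^2}
    \end{pmatrix}
```

The zero off-diagonal entries show that, under this assumption, the variance estimator is asymptotically independent of the two mean parameters.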

For Model B

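$$I(\beta_1, \beta_2, v) = -\sum_{i=1}^{n} \log\left( \frac{1}{(2\pi\sigma^2)^{n/2}} \exp\left( -\frac{1}{2\sigma^2} \sum_{i=1}^{n} \left( y_i - \frac{\beta_1}{1 + 14e^{-\beta_2 t_i}} \right)^2 \right) \right)$$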
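The following is a rough sketch of how the Fisher information for Model B could be evaluated numerically. It assumes independent Gaussian errors with variance v around the mean $\beta_1 / (1 + 14e^{-\beta_2 t})$, in which case the information for the mean parameters is the sum of outer products of the mean gradient divided by v. The time points, parameter values, and function names are hypothetical.

```python
import math


def mean_b(t, b1, b2):
    """Model B mean: b1 / (1 + 14 * exp(-b2 * t))."""
    return b1 / (1.0 + 14.0 * math.exp(-b2 * t))


def grad_mean_b(t, b1, b2):
    """Analytic partial derivatives of the Model B mean w.r.t. (b1, b2)."""
    e = math.exp(-b2 * t)
    denom = 1.0 + 14.0 * e
    d_b1 = 1.0 / denom
    d_b2 = b1 * 14.0 * t * e / denom ** 2
    return d_b1, d_b2


def fisher_info_b(times, b1, b2, v):
    """3x3 Fisher information for (b1, b2, v) under Gaussian errors."""
    i11 = i12 = i22 = 0.0
    for t in times:
        g1, g2 = grad_mean_b(t, b1, b2)
        i11 += g1 * g1 / v
        i12 += g1 * g2 / v
        i22 += g2 * g2 / v
    n = len(times)
    return [[i11, i12, 0.0],
            [i12, i22, 0.0],
            [0.0, 0.0, n / (2.0 * v ** 2)]]


# Hypothetical design points and parameter values, for illustration only.
times = [0, 10, 20, 30, 40, 50, 60, 70, 80]
info = fisher_info_b(times, b1=70.0, b2=0.07, v=1.0)
for row in info:
    print(row)
```

Inverting the upper-left 2x2 block at the estimated parameters and taking square roots of its diagonal would give approximate standard errors for the two mean parameters.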

Segment 2

For Model A

$$I(\hat{\beta}_1, \hat{\beta}_2, \hat{v})^{-1} = \log(\ldots)$$

For Model B

$$I(\hat{\beta}_1, \hat{\beta}_2, \hat{v})^{-1} = \log(\ldots)$$

Part C

The scatter plot and fitted line

Part D

The estimation results are given in the Appendix.

Hence, for each model:

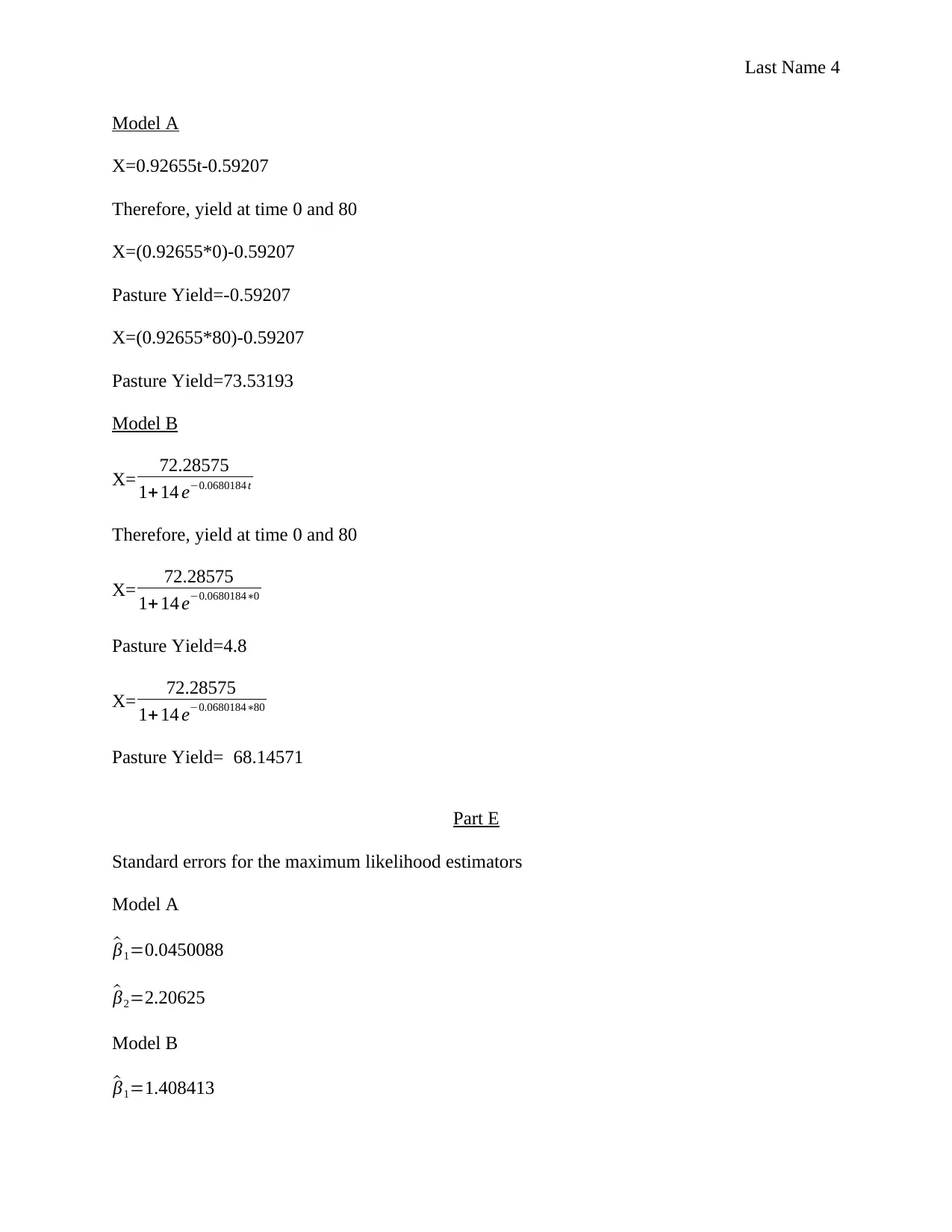

Model A

X=0.92655t-0.59207

Therefore, yield at time 0 and 80

X=(0.92655*0)-0.59207

Pasture Yield=-0.59207

X=(0.92655*80)-0.59207

Pasture Yield=73.53193

Model B

$$X = \frac{72.28575}{1 + 14e^{-0.0680184t}}$$

Therefore, yield at time 0 and 80

$$X = \frac{72.28575}{1 + 14e^{-0.0680184 \times 0}}$$

Pasture Yield ≈ 4.82

$$X = \frac{72.28575}{1 + 14e^{-0.0680184 \times 80}}$$

Pasture Yield ≈ 68.15
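The predictions above can be reproduced with a few lines of Python. This is only a sketch: the coefficients are copied from the fitted equations in this section, and the function names are illustrative.

```python
import math

# Fitted coefficients as reported in the solution above.
A_SLOPE, A_INTERCEPT = 0.92655, -0.59207
B1, B2 = 72.28575, 0.0680184


def yield_model_a(t):
    """Model A: linear trend in time t."""
    return A_SLOPE * t + A_INTERCEPT


def yield_model_b(t):
    """Model B: logistic growth curve in time t."""
    return B1 / (1.0 + 14.0 * math.exp(-B2 * t))


for t in (0, 80):
    print(t, round(yield_model_a(t), 5), round(yield_model_b(t), 2))
```

Model A gives a negative yield at t = 0, which cannot occur in practice, whereas Model B starts at b1/15 and levels off towards b1 as t grows.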

Part E

Standard errors for the maximum likelihood estimators

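Model A

$\mathrm{SE}(\hat{\beta}_1) = 0.0450088$ (coefficient on t)

$\mathrm{SE}(\hat{\beta}_2) = 2.20625$ (constant)

Model B

$\mathrm{SE}(\hat{\beta}_1) = 1.408413$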

$\mathrm{SE}(\hat{\beta}_2) = 0.0018188$
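As a quick check on how these standard errors are used, the sketch below rebuilds the t statistics and 95% confidence intervals from the estimates and standard errors reported in the Appendix. The critical value 2.364624 is the 97.5% point of the Student t distribution with 7 degrees of freedom, hard-coded here to keep the example free of third-party dependencies. The dictionary labels and function name are illustrative.

```python
# Student-t critical value for a 95% interval with 7 residual degrees of freedom
# (9 observations minus 2 estimated mean parameters in each model).
T_CRIT_7DF = 2.364624

# (estimate, standard error) pairs as reported in the Appendix output.
estimates = {
    "Model A slope": (0.92655, 0.0450088),
    "Model A constant": (-0.5920715, 2.20625),
    "Model B b1": (72.28575, 1.408413),
    "Model B b2": (0.0680184, 0.0018188),
}


def conf_interval(estimate, std_err, t_crit=T_CRIT_7DF):
    """Two-sided confidence interval: estimate +/- t_crit * std_err."""
    half_width = t_crit * std_err
    return estimate - half_width, estimate + half_width


for name, (est, se) in estimates.items():
    low, high = conf_interval(est, se)
    print(f"{name}: t = {est / se:.2f}, 95% CI = ({low:.6f}, {high:.6f})")
```

The interval for the Model A constant contains zero, which agrees with its reported p-value of 0.796.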

Part F

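Model B is the better model. It fits the data points more closely, with a residual sum of squares of 8.13 and a root MSE of 1.08 against 75.53 and 3.28 for Model A, and it explains nearly all of the variation in the dependent variable (pasture yield). Unlike Model A, it also gives a positive predicted yield at time 0.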
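One way to make this comparison concrete is sketched below, using only the residual sums of squares and the number of observations reported in the Appendix. The AIC formula assumes Gaussian errors and is stated up to an additive constant shared by both models. AIC is not part of the original solution; it is added here as an illustrative criterion.

```python
import math

N_OBS = 9          # observations reported in the Appendix
N_MEAN_PARAMS = 2  # each model estimates two mean parameters

# Residual sums of squares reported in the Appendix output.
rss = {"Model A": 75.5288929, "Model B": 8.1317662}


def root_mse(rss_value, n=N_OBS, k=N_MEAN_PARAMS):
    """Residual standard deviation with n - k degrees of freedom."""
    return math.sqrt(rss_value / (n - k))


def gaussian_aic(rss_value, n=N_OBS, k=N_MEAN_PARAMS):
    """Gaussian AIC up to a constant; k + 1 counts the variance parameter."""
    return n * math.log(rss_value / n) + 2 * (k + 1)


for name, value in rss.items():
    print(name, round(root_mse(value), 4), round(gaussian_aic(value), 2))
```

Because both models have the same number of parameters, the AIC ranking here is determined entirely by the residual sum of squares.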

Question 2

Part A

X follows a binomial distribution, $X \sim \mathrm{Bi}(m, p)$.

Hence the pmf can be rewritten as follows:

$$\binom{m}{x} p^x (1-p)^{m-x} = \exp\left[ \left( \log\frac{p}{1-p} \right) x + m\log(1-p) \right] \binom{m}{x}$$

giving us the exponential family form with natural parameter $\eta(p) = \log\frac{p}{1-p}$.
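The rewriting above can be checked numerically. The sketch below compares the usual binomial pmf with the exponential family form for hypothetical values of m and p; the function names are illustrative.

```python
import math


def binom_pmf(x, m, p):
    """Binomial pmf in its usual form."""
    return math.comb(m, x) * p ** x * (1 - p) ** (m - x)


def binom_pmf_expfam(x, m, p):
    """Same pmf written as h(x) * exp(eta * x - A), with A = -m * log(1 - p)."""
    eta = math.log(p / (1 - p))
    log_partition = -m * math.log(1 - p)
    return math.comb(m, x) * math.exp(eta * x - log_partition)


m, p = 10, 0.3  # hypothetical values
for x in range(m + 1):
    assert math.isclose(binom_pmf(x, m, p), binom_pmf_expfam(x, m, p), rel_tol=1e-12)
print("both forms agree for x = 0, ...,", m)
```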

Part B

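X is a complete sufficient statistic because $E_\theta[g(X)] = 0$ for all θ implies that $P_\theta(g(X) = 0) = 1$ (Schervish 2012). For the binomial family, $E_p[g(X)] = (1-p)^m \sum_{x=0}^{m} g(x) \binom{m}{x} \left( \frac{p}{1-p} \right)^x$ is a polynomial in $p/(1-p)$, and it vanishes for every $p \in (0, 1)$ only if every coefficient $g(x)$ is zero.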

Part C

(i)

For a sufficient statistic such as X, there exists a measurable function $g: \Theta \to \mathcal{X}$ such that $X = g(\theta)$.

(ii)

If we let θ = 0, then $P_j(\theta) = 1$. As such, X is a complete sufficient statistic.

Question 3

Part A

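Given a statistic T with $\mathrm{Var}_\theta(T) < \infty$ for all θ, and $S = X_1 + X_2 + X_3$ with $0 < I(\theta) < \infty$, we can state that for all θ

$$\mathrm{Var}_\theta(T) \ge \frac{|\varphi'(\theta)|^2}{I(\theta)}$$

where $\varphi(\theta) = \frac{\theta}{\theta+1}$, so that

$$\varphi'(\theta) = \frac{d}{d\theta}\left( \frac{\theta}{\theta+1} \right) = \frac{1}{(\theta+1)^2}$$

For an unbiased estimator T of $\varphi(\theta)$,

$$\varphi'(\theta) = \mathrm{Cov}\left[ \frac{\partial \log p(S;\theta)}{\partial\theta}, T \right] \quad \text{for } S = X_1 + X_2 + X_3$$

and we know that

$$\mathrm{Cov}(P, Q) \triangleq E\left[ (P - E[P])(Q - E[Q]) \right]$$

We also know that

$$I(\theta) = -E_{p(x,\theta)}\left\{ \frac{\partial^2}{\partial\theta^2} \log p(S;\theta) \right\} \quad \text{for } S = X_1 + X_2 + X_3$$

As such

$$\mathrm{Var}_\theta(T) \ge \frac{\left( \mathrm{Cov}\left[ \frac{\partial \log p(S;\theta)}{\partial\theta}, T \right] \right)^2}{-E_{p(x,\theta)}\left\{ \frac{\partial^2}{\partial\theta^2} \log p(S;\theta) \right\}} \quad \text{for } S = X_1 + X_2 + X_3$$

By substituting $S = X_1 + X_2 + X_3$ into the inequality above and simplifying, we get:

$$\mathrm{Var}_\theta(T) \ge \frac{1}{(\theta+1)^4} \left\{ \frac{1}{\theta}\left[ \frac{1}{\theta+1} + 1 + \frac{1}{1-e^{-\theta}} \right] - \frac{e^{-\theta}}{(1-e^{-\theta})^2} - \frac{1}{(\theta+1)^2} \right\}^{-1}$$

This is the Cramér-Rao lower bound on the variance of any unbiased estimator T of $\varphi(\theta)$.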
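The bound can be evaluated numerically, as in the sketch below. It assumes the three component distributions suggested by the densities quoted in Part B of this question: $X_1$ is Bernoulli with $P(X_1 = 0) = 1/(\theta+1)$, $X_2$ is Poisson(θ), and $X_3$ is a zero-truncated Poisson(θ). Under that assumption the Fisher information is the sum of the three individual contributions. The function names and the θ values are illustrative.

```python
import math


def fisher_information(theta):
    """Total Fisher information about theta carried by (X1, X2, X3)."""
    bernoulli = 1.0 / (theta * (theta + 1.0)) - 1.0 / (theta + 1.0) ** 2
    poisson = 1.0 / theta
    q = 1.0 - math.exp(-theta)
    truncated_poisson = 1.0 / (theta * q) - math.exp(-theta) / q ** 2
    return bernoulli + poisson + truncated_poisson


def cramer_rao_bound(theta):
    """Lower bound on Var(T) for unbiased T of phi(theta) = theta / (theta + 1)."""
    phi_prime = 1.0 / (theta + 1.0) ** 2
    return phi_prime ** 2 / fisher_information(theta)


for theta in (0.5, 1.0, 2.0):  # hypothetical parameter values
    print(theta, cramer_rao_bound(theta))
```

Summing the three contributions reproduces the expression in braces in the final inequality term by term.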

Part B

We need to prove that when θ takes the value zero, S takes the value 1,

where $S = X_1 + X_2 + X_3$.

Therefore at least one of the three has to equal one (while the others take the value 0).

Focusing on $X_1$:

We get that S is a complete sufficient statistic because $f_1(g(t)) = 0$ for all θ, and we see that $S(g(t) = 0) = 1$ (NB: $X_2$ and $X_3$ will be equal to 0).

We can test the proposition made above as follows.

For $g(\theta) = 0$, hence $j = 0$, where in all cases

$$j \in \begin{cases} \{0, 1\} & \text{for a Bernoulli distribution} \\ \{0, 1, \ldots, k-1\} & \text{for a Poisson distribution} \end{cases}$$

Let $X_1 = f_1(0 \mid \theta) = \frac{1}{\theta+1}$; for θ = 0 we get $X_1 = 1$.

Let $X_2 = f_2(0 \mid \theta) = \frac{e^{-\theta}\theta^j}{j!}$; for θ = 0 we get $X_2 = 0$.

Let $X_3 = f_3(0 \mid \theta) = \frac{e^{-\theta}\theta^j}{j!\,(1-e^{-\theta})}$; we get $X_3 = 0$ because it does not have $g(\theta) = 0$, i.e. $j = 1, 2, \ldots$

Hence

$$S = X_1 + X_2 + X_3 = 1 + 0 + 0 = 1$$

Since S = 1, we conclude that S is a complete sufficient statistic for θ.

Part C

Since S is a complete sufficient statistic, the expected value of the following expression is equal to $\varphi(\theta)$:

$$E\left[ \frac{S\,(2^{S-1} - 1)}{2^{S} - 1 + S\,(2^{S-1} - 1)} \right] = \varphi(\theta)$$

This expression is a function of S alone, and it is unbiased for $\varphi(\theta)$.

Thus the expression above is the UMVU estimator of $\varphi(\theta)$.
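The unbiasedness of this estimator can be checked by direct summation, as in the sketch below. It assumes the component distributions suggested by the densities in Part B: $X_1$ Bernoulli with $P(X_1 = 0) = 1/(\theta+1)$, $X_2$ Poisson(θ), and $X_3$ zero-truncated Poisson(θ). The function names, the truncation point `max_count`, and the θ value are illustrative choices.

```python
import math


def umvue(s):
    """Candidate estimator as a function of S = X1 + X2 + X3."""
    a = s * (2 ** (s - 1) - 1)
    return a / (2 ** s - 1 + a)


def expected_umvue(theta, max_count=60):
    """E[umvue(S)] by direct summation, truncating the Poisson supports."""
    q = 1.0 - math.exp(-theta)
    total = 0.0
    for x1 in (0, 1):
        p1 = theta ** x1 / (theta + 1.0)
        for x2 in range(max_count):
            p2 = math.exp(-theta) * theta ** x2 / math.factorial(x2)
            for x3 in range(1, max_count):
                p3 = math.exp(-theta) * theta ** x3 / (math.factorial(x3) * q)
                total += p1 * p2 * p3 * umvue(x1 + x2 + x3)
    return total


theta = 1.5  # hypothetical parameter value
print(expected_umvue(theta), theta / (theta + 1.0))
```

The two printed values should agree up to floating-point and truncation error, which is consistent with the estimator being the conditional probability that $X_1 = 1$ given S.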

Bibliography

Montgomery, Douglas C., Elizabeth A. Peck, and G. Geoffrey Vining. Introduction to Linear

Regression Analysis. Hoboken: John Wiley & Sons, 2012.

Schervish, Mark J. Theory of Statistics. Berlin: Springer Science & Business Media, 2012.

Appendix

    . regress X t

          Source |       SS       df       MS              Number of obs =       9
    -------------+------------------------------           F(1, 7)       =  423.78
           Model |  4572.53446     1  4572.53446           Prob > F      =  0.0000
        Residual |  75.5288929     7  10.7898418           R-squared     =  0.9838
    -------------+------------------------------           Adj R-squared =  0.9814
           Total |  4648.06336     8  581.007919           Root MSE      =  3.2848

               X |      Coef.   Std. Err.      t    P>|t|     [95% Conf. Interval]
    -------------+----------------------------------------------------------------
               t |     .92655   .0450088    20.59   0.000      .820121    1.032979
           _cons |  -.5920715    2.20625    -0.27   0.796    -5.809024     4.62488

    . nl (X = {b1}/(1 +(14*exp(-1*{b2}*t))))
    (obs = 9)

    Iteration 0:   residual SS =  4648.063
    Iteration 1:   residual SS =  2153.957
    Iteration 2:   residual SS =  1470.832
    Iteration 3:   residual SS =  220.8791
    Iteration 4:   residual SS =  188.2788
    Iteration 5:   residual SS =  173.5564
    Iteration 6:   residual SS =  141.8845
    Iteration 7:   residual SS =  104.6428
    Iteration 8:   residual SS =  11.73754
    Iteration 9:   residual SS =  8.133913
    Iteration 10:  residual SS =  8.131767
    Iteration 11:  residual SS =  8.131766
    Iteration 12:  residual SS =  8.131766

          Source |       SS       df       MS
    -------------+------------------------------           Number of obs =         9
           Model |   18215.288     2  9107.64422           R-squared     =    0.9996
        Residual |   8.1317662     7  1.16168088           Adj R-squared =    0.9994
    -------------+------------------------------           Root MSE      =  1.077813
           Total |    18223.42     9  2024.82447           Res. dev.     =  24.62788

               X |      Coef.   Std. Err.      t    P>|t|     [95% Conf. Interval]
    -------------+----------------------------------------------------------------
             /b1 |   72.28575   1.408413    51.32   0.000     68.95538    75.61612
             /b2 |   .0680184   .0018188    37.40   0.000     .0637177    .0723191
