Analysis of 2D Image Nature and Data Capture Rate for Nao Robot

Added on 2021/04/16

Report

AI Summary

This report investigates the challenges of 2D image capture and data processing in robotics, focusing on the Nao robot's computer vision system. It explores how computer vision provides crucial environmental information for robot navigation and interaction. The study examines the importance of image characteristics like position, size, distance, and volatility, and the difficulties in capturing and processing image data at an optimal rate. The report details an experiment using photometric stereo and AL vision to test the Nao robot's ability to differentiate image signals and its limitations. The findings highlight the relationship between image quality, AL vision sensitivity, and the robot's ability to respond to signals, concluding that the 2D nature of images significantly impacts the rate of quality data capture. The report also discusses risk assessments, testing procedures, outcomes, and reflections on the algorithm's accuracy in image recognition and processing, as well as the impact of image characteristics on data collection efficiency.

A report on the 2D nature of images and the rate of capturing image data with the Nao robot

Name

Institution

Professor

Course

Date

Problem statement

The planning and navigation of robots through their specific environments has long been debated. Autonomous planning requires a clear understanding of the environment, and a computer vision system can provide the pertinent information about it (Zhang et al., 2013). The captured data helps a robot interact with, and track, the agents it encounters while navigating. In this regard, computer vision systems and robotic visual tracking are of great importance (Moreno et al., 2012). To interact with its environment, a robot uses images that contain useful data about object properties such as position, size, distance, and volatility. In capturing this data, both the 2D nature of the images and the rate at which they are captured have been a major challenge (Maini & Aggarwal, 2009). The problem is complicated by the lack of computer vision signals that can process and transmit image data at the optimal rate. This paper therefore investigates the nature of images and the rate at which the image data a robot requires is captured through computer vision.
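To make the capture-rate concern concrete, the short sketch below estimates how much raw data an uncompressed 2D image stream produces per second. The resolutions, the 3 bytes per pixel, and the 30 frames per second are illustrative assumptions and are not taken from this report or from any specific Nao camera setting.

```python
def raw_data_rate(width, height, bytes_per_pixel, frames_per_second):
    """Return the uncompressed image data rate in bytes per second."""
    return width * height * bytes_per_pixel * frames_per_second


# Illustrative settings only; none of these values come from the report.
settings = {
    "160x120": (160, 120),
    "320x240": (320, 240),
    "640x480": (640, 480),
}

for name, (w, h) in settings.items():
    rate = raw_data_rate(w, h, bytes_per_pixel=3, frames_per_second=30)
    print(f"{name}: {rate / 1e6:.2f} MB/s")
```

Under these assumptions, each doubling of the image width and height quadruples the data that must be processed and transmitted, which is one reason the capture rate and the image size cannot be chosen independently.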

Background information

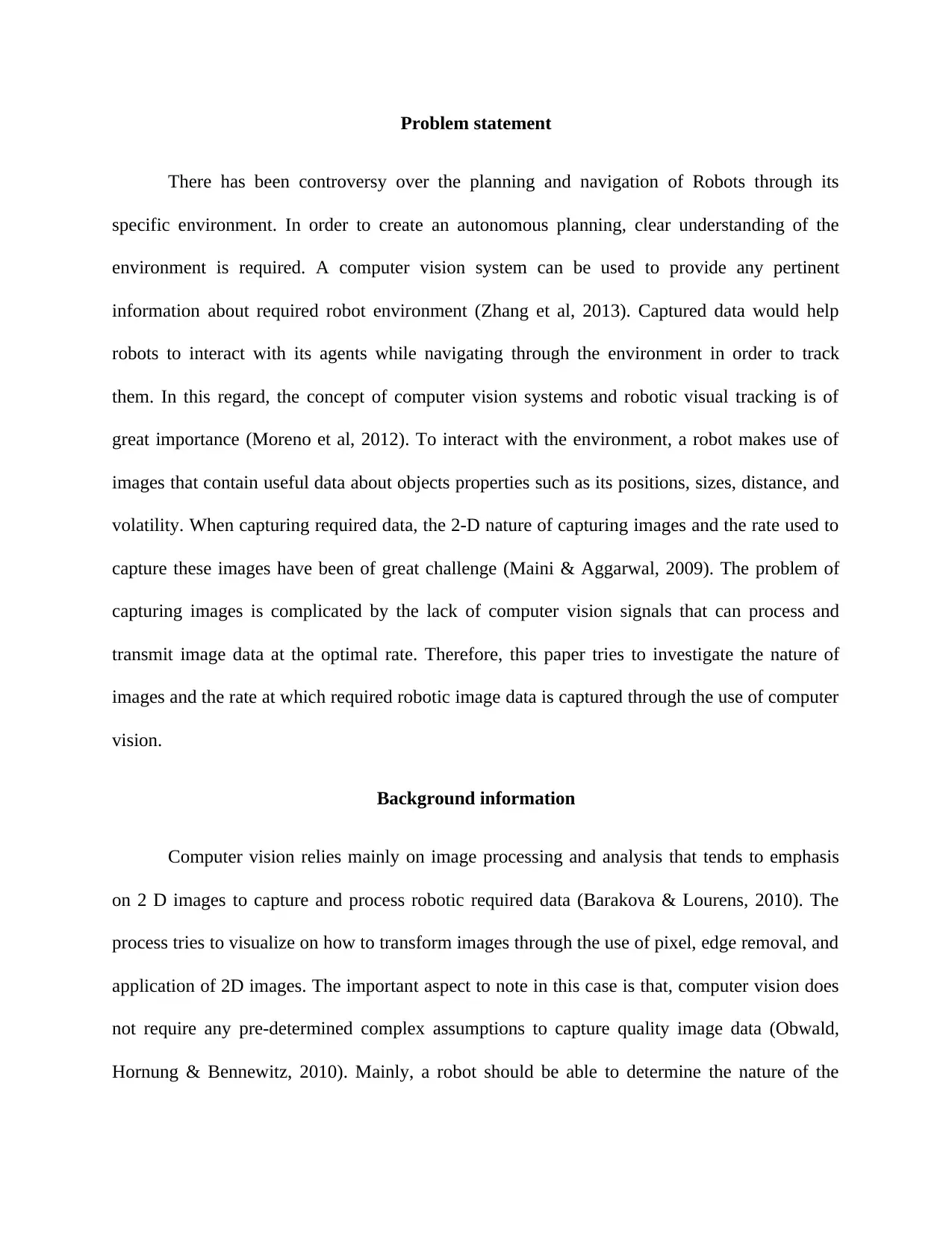

Computer vision relies mainly on image processing and analysis, with an emphasis on 2D images, to capture and process the data a robot requires (Barakova & Lourens, 2010). The process is concerned with how images are transformed through pixel operations, edge removal, and the application of 2D images. Notably, computer vision does not require predetermined complex assumptions to capture quality image data (Oßwald, Hornung & Bennewitz, 2010). Above all, a robot should be able to determine the nature of an image as depicted in its operational environment in order to process the image's characteristics. Giving a robot the capability to capture and process pertinent image information requires both control theory and sensor technology. For real-time processing of image data to be efficient, 2D image processing should address both software and hardware implementation (Faragasso et al., 2013). Efficient processing of image signals allows the robot to navigate its environment swiftly. Because the system implementation centers on image processing, pattern recognition is very important: it helps a robot determine the nature and shape of an image by using various dynamic methods to extract image data.
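The report does not specify which pixel and edge operations were used. As one possible illustration, the sketch below applies a Sobel-style edge detector, a common technique swapped in here as an assumption, to a tiny grayscale image stored as nested lists. The function name and the sample image are hypothetical.

```python
def sobel_magnitude(image):
    """Approximate gradient magnitude (|gx| + |gy|) for interior pixels.

    `image` is a list of equal-length rows of grayscale intensities.
    Border pixels are left at 0 because the 3x3 window does not fit there.
    """
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # horizontal gradient kernel
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # vertical gradient kernel
    rows, cols = len(image), len(image[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = gy = 0
            for i in range(3):
                for j in range(3):
                    pixel = image[r + i - 1][c + j - 1]
                    gx += kx[i][j] * pixel
                    gy += ky[i][j] * pixel
            out[r][c] = abs(gx) + abs(gy)
    return out


# A dark region on the left and a bright region on the right: one vertical edge.
image = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
edges = sobel_magnitude(image)
for row in edges:
    print(row)
```

Large values appear only in the two columns that straddle the dark-to-bright boundary, which is the kind of shape cue a pattern recognition step could build on.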

Investigations

To better understand image processing and image data collection, the following investigation was carried out. Only one experiment was used.

Objective

The main goal of the study was to determine how computer vision is used to detect and process image data in robots. In this study, the effectors were the head and the whole body.

Solution design

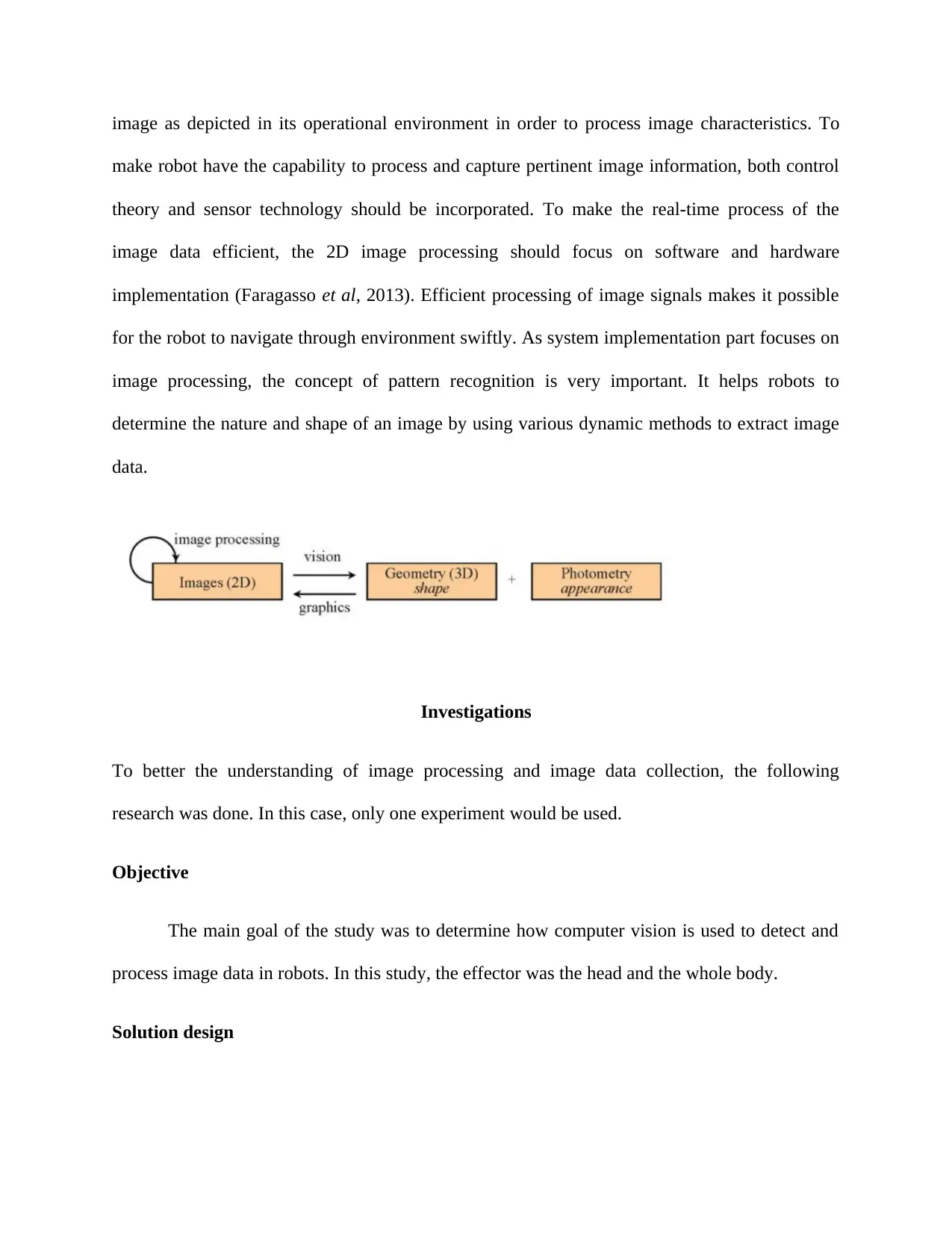

To arrive at a solution, the robot's image detection and signal processing were tested using the data flow below. Once an image was selected, several parameters were set: color, size, and shape. The test required a sensor box to help detect images according to the image characteristics provided. Photometric stereo was used to detect the image and its characteristics and to output signals in various colors.

Risk assessment and precautions

The robot faced various challenges when detecting and processing image data. Poor detection or misinterpretation of the signals from an image causes the robot to take the wrong action. For example, if a red signal is detected, the robot should turn immediately to avoid harm. An unanticipated turn, however, might move the body abruptly and dislocate its joints. It is therefore important to ensure that photometric stereo processes signals appropriately so that the robot avoids unnecessary movements.
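One way to reduce the risk of acting on a misread signal is sketched below. It is not the method used in this experiment; it only illustrates two generic precautions under stated assumptions: a signal is accepted after it appears in several consecutive frames, and each turn command is clamped to a small step. The class name, the function name, the three-frame threshold, and the 10-degree limit are all hypothetical.

```python
from collections import deque


class SignalFilter:
    """Accept a signal only after it is seen in `required` consecutive frames."""

    def __init__(self, required=3):
        self.required = required
        self.recent = deque(maxlen=required)  # keeps only the latest frames

    def update(self, signal):
        """Record the latest per-frame signal; return it once confirmed, else None."""
        self.recent.append(signal)
        if len(self.recent) == self.required and len(set(self.recent)) == 1:
            return signal
        return None


def clamp_turn(requested_deg, max_step_deg=10.0):
    """Limit a single turn command to +/- max_step_deg to avoid abrupt motion."""
    return max(-max_step_deg, min(max_step_deg, requested_deg))


# A single stray "green" frame delays the reaction to "red".
signal_filter = SignalFilter(required=3)
frames = ["red", "green", "red", "red", "red"]
confirmed = [signal_filter.update(s) for s in frames]
print(confirmed)
print(clamp_turn(90.0), clamp_turn(-45.0), clamp_turn(4.0))
```

The trade-off is latency: requiring more consecutive frames makes false reactions less likely but delays the legitimate turn, so the threshold would need to be tuned against the capture rate.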

Testing procedure

The program uses photometric stereo and AL vision, which made it difficult to test on a simulator. To keep its actions real-time, a real robot was used to run and test the program. Care was taken to avoid misinterpretation of the signals generated by the images that control the robot's movement in its environment. Two NaoMark images were used, one negative and one positive, to help determine the robot's capability to detect various signals.

Testing outcomes

AL vision and photometric stereo worked as expected. The robot was able to differentiate the various signals generated from the images. When the images generating the signals were moved far away, AL vision sensitivity was poor, which is normal because it is designed to work within a specific range of distances from the images. Within the specified distance, the robot responded positively to all images generating signals. When unprogrammed (negative) signals were generated, the robot did not respond, which was the correct response because it limits unrealistic movements that can be harmful. Therefore, the 2D nature of the image determined the rate at which the Nao robot captured quality data.
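The behaviour described above can be sketched as a simple decision rule. Because a 2D image carries no direct depth, the sketch assumes the detector reports each mark's apparent angular size and estimates distance from it with basic trigonometry. The mark size, the mark identifiers, and the working range are hypothetical values chosen for illustration, not measurements from this experiment.

```python
import math


def estimate_distance(mark_size_m, angular_size_rad):
    """Estimate distance to a flat mark from its apparent angular size."""
    return mark_size_m / (2.0 * math.tan(angular_size_rad / 2.0))


def should_respond(mark_id, distance_m, known_ids, min_m, max_m):
    """Respond only to programmed marks seen within the working range."""
    return mark_id in known_ids and min_m <= distance_m <= max_m


KNOWN_IDS = {64}              # hypothetical "positive" (programmed) mark
MIN_RANGE, MAX_RANGE = 0.3, 2.0  # hypothetical working range in metres

near = estimate_distance(0.10, 0.10)  # a 10 cm mark spanning 0.10 rad
far = estimate_distance(0.10, 0.02)   # the same mark spanning only 0.02 rad

print(round(near, 3), should_respond(64, near, KNOWN_IDS, MIN_RANGE, MAX_RANGE))
print(round(far, 3), should_respond(64, far, KNOWN_IDS, MIN_RANGE, MAX_RANGE))
print(should_respond(99, near, KNOWN_IDS, MIN_RANGE, MAX_RANGE))
```

As the mark moves away, its angular size shrinks and small measurement errors produce large distance errors, which is consistent with the reduced sensitivity observed at longer distances.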

Reflection

The signals generated by the images, which help the robot sense and detect the nature of image characteristics in 2D, demonstrated the ability of the Nao robot to use signals to perceive and analyze images. Analysis of the outcomes showed that the algorithm used for image recognition and processing was accurate. The nature of the signal detected by photometric stereo controlled the robot's AL vision. The nature and characteristics of the images generated by photometric stereo were useful in determining the rate of image data collection. Poor images meant that unreliable data would be collected.

Conclusion

This investigation focused on the ability of a robot to detect the nature of 2D images through the use of photometric stereo and AL vision. It also examined the abilities and limitations of the Nao robot in perceiving and interpreting images through AL vision. Analysis of the final outcome showed that the nature of the image as perceived by AL vision depended on the image's characteristics. A poor image resulted in low AL vision sensitivity, which made it difficult to capture quality image data. Finally, various image signals were captured by the AL vision sensor to help control the Nao robot's motion.

References

Barakova, E. I., & Lourens, T. (2010). Expressing and interpreting emotional movements in social games with robots. Personal and Ubiquitous Computing, 14(5), 457-467.

Faragasso, A., Oriolo, G., Paolillo, A., & Vendittelli, M. (2013, May). Vision-based corridor navigation for humanoid robots. In Robotics and Automation (ICRA), 2013 IEEE International Conference on (pp. 3190-3195). IEEE.

Maini, R., & Aggarwal, H. (2009). Study and comparison of various image edge detection techniques. International Journal of Image Processing (IJIP), 3(1), 1-11.

Moreno, R., Grana, M., Ramik, D. M., & Madani, K. (2012). Image segmentation on spherical coordinate representation of RGB colour space. IET Image Processing, 6(9), 1275-1283.

Oßwald, S., Hornung, A., & Bennewitz, M. (2010, May). Learning reliable and efficient navigation with a humanoid. In Robotics and Automation (ICRA), 2010 IEEE International Conference on (pp. 2375-2380). IEEE.

Zhang, L., Jiang, M., Farid, D., & Hossain, M. A. (2013). Intelligent facial emotion recognition and semantic-based topic detection for a humanoid robot. Expert Systems with Applications, 40(13), 5160-5168.
