SPARK Programming Report: Apache SPARK Software Engineering Aspects

Added on 2023/01/16


AI Summary

This report provides an overview of Apache Spark, an open-source engine for distributed processing of large datasets. It begins by explaining the structure of Resilient Distributed Datasets (RDDs), Spark's fundamental data structure, and describes how transformations and actions are applied to process data within RDDs, with code examples. It then compares stream processing in Apache Kafka and Apache Storm, highlighting their respective strengths and weaknesses. It also covers Spark SQL and its use in generating graphs, with illustrative examples. Finally, the report discusses the advantages and disadvantages of using Spark.

Running head: SPARK PROGRAMMING 1

SPARK Programming:

Name:

Course:

Date:


Introduction

Apache Spark is an open-source cluster-computing framework designed for fast, fault-tolerant processing of large datasets (Aleksiyants et al., 2015). It combines a distributed execution engine, programming interfaces in Scala, Java, and Python, and libraries for SQL, streaming, and graph processing, which together support the development of reliable data-processing applications.

Structure of Resilient Distributed Datasets (RDDs)

The RDD is Spark's fundamental data structure: an immutable, distributed collection of objects. Each RDD is divided into logical partitions that can be processed on different nodes of the cluster. An RDD can hold any type of Scala, Java, or Python object, including instances of user-defined classes. RDDs are created through deterministic operations on either data in stable storage or other RDDs. There are two ways to create an RDD: by referencing a dataset in an external storage system such as HDFS, a shared file system, or any other data source that offers a Hadoop InputFormat, or by parallelizing an existing collection in the driver program. Spark uses the RDD concept to achieve faster and more efficient MapReduce-style operations.
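The two ways of creating an RDD described above can be sketched in Scala as follows. This is a minimal illustration rather than code from the lab: it assumes the Spark SQL artifact is on the classpath, runs Spark in local mode, and uses a temporary local file as a stand-in for HDFS or a shared file system. The object name CreateRdds and the sample data are hypothetical.

```scala
import java.nio.file.Files
import org.apache.spark.sql.SparkSession

object CreateRdds {
  // Returns (elements in the parallelized RDD, lines read from the external file).
  def run(): (Long, Long) = {
    // Local mode with two worker threads; a real cluster would use another master URL.
    val spark = SparkSession.builder().appName("CreateRdds").master("local[2]").getOrCreate()
    val sc = spark.sparkContext
    // A temporary local file stands in for HDFS or a shared file system (assumption).
    val path = Files.createTempFile("rdd-demo", ".txt")
    try {
      // Method 1: parallelize an existing collection held by the driver program.
      val numbers = sc.parallelize(Seq(1, 2, 3, 4, 5), numSlices = 2)

      // Method 2: reference a dataset that lives in external storage.
      Files.write(path, "alpha\nbeta\ngamma\n".getBytes("UTF-8"))
      val lines = sc.textFile(path.toString)

      (numbers.count(), lines.count())
    } finally {
      Files.deleteIfExists(path)
      spark.stop()
    }
  }

  def main(args: Array[String]): Unit = println(run())
}
```

Both RDDs are split into partitions; the numSlices argument sets the partition count explicitly for the parallelized collection.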

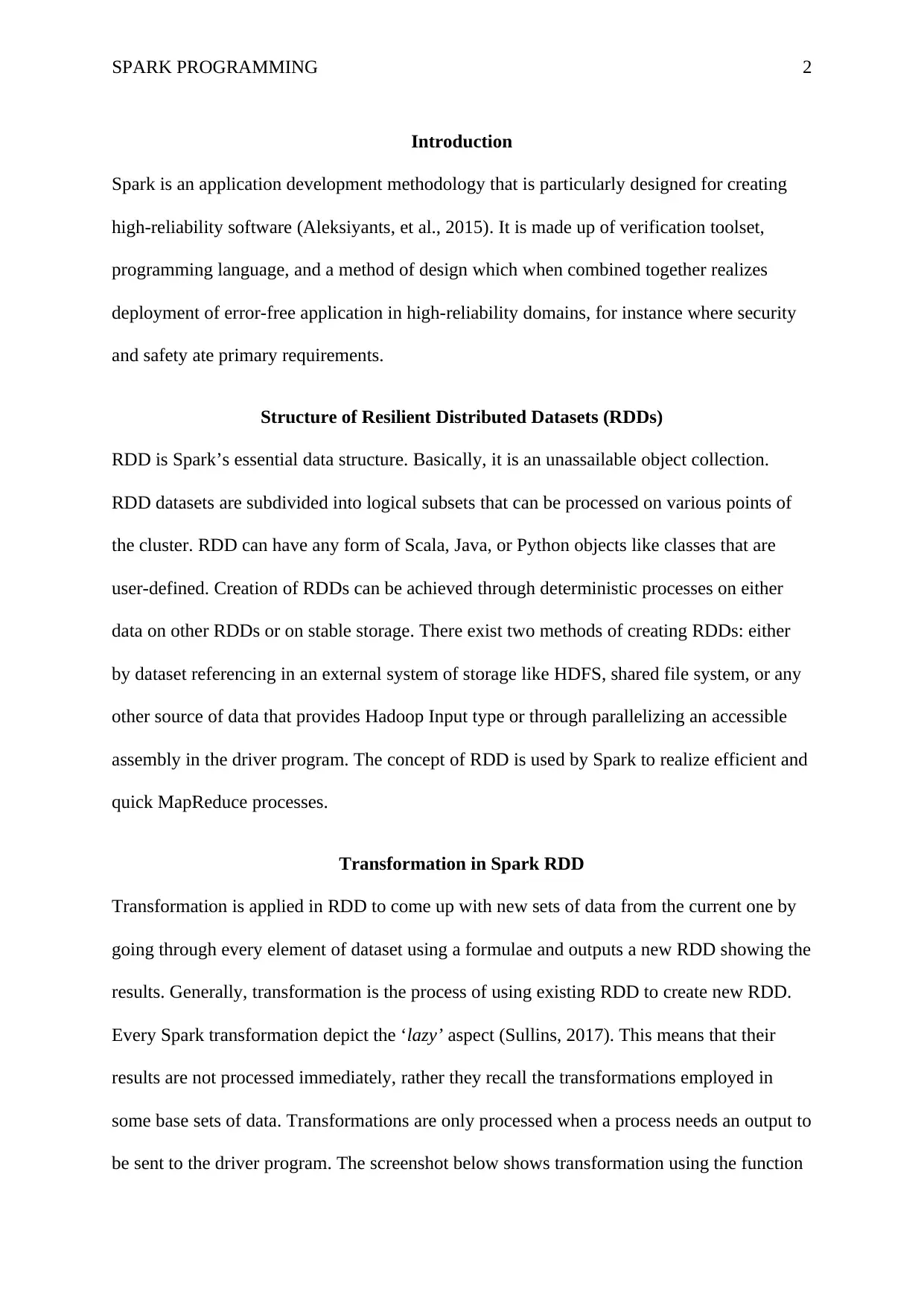

Transformation in Spark RDD

A transformation derives a new dataset from an existing RDD by applying a function to every element and returning a new RDD that holds the results. In other words, a transformation uses an existing RDD to create a new one. Every Spark transformation is lazy (Sullins, 2017): its result is not computed immediately; instead, Spark remembers the transformations applied to the base dataset. Transformations are computed only when an action requires a result to be returned to the driver program. The screenshot below shows a transformation using the function


‘filter’.

Figure 1: Transformation using Filter function (Sullins, 2017)

In Scala, the relevant code can be written as val myRDD = filename.filter(_.contains("keyword")), where filename is assumed to be an RDD of text lines.
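The lazy behaviour of filter can be made visible with a small experiment. The sketch below is an assumption-laden illustration, not the code from the screenshot: it runs Spark in local mode, uses hypothetical sample lines, and counts predicate calls with a Spark long accumulator. The count stays at zero after filter is declared and only rises once an action runs.

```scala
import org.apache.spark.sql.SparkSession

object LazyFilter {
  // Returns (predicate calls before any action, predicate calls after count(), matching lines).
  def run(): (Long, Long, Long) = {
    val spark = SparkSession.builder().appName("LazyFilter").master("local[2]").getOrCreate()
    val sc = spark.sparkContext
    try {
      // Hypothetical input lines standing in for a text file.
      val lines = sc.parallelize(Seq("spark keyword", "hadoop", "keyword again", "storm"))
      val calls = sc.longAccumulator("predicate calls")

      // Transformation: Spark only records it in the lineage; nothing is evaluated yet.
      val matching = lines.filter { line =>
        calls.add(1L)
        line.contains("keyword")
      }
      val before = calls.sum

      // Action: forces Spark to run the recorded filter over every element.
      val matched = matching.count()
      val after = calls.sum

      (before, after, matched)
    } finally spark.stop()
  }

  def main(args: Array[String]): Unit = println(run())
}
```

Accumulator updates made inside transformations can be repeated if a task is retried, so this counting trick is suitable for a local demonstration only.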

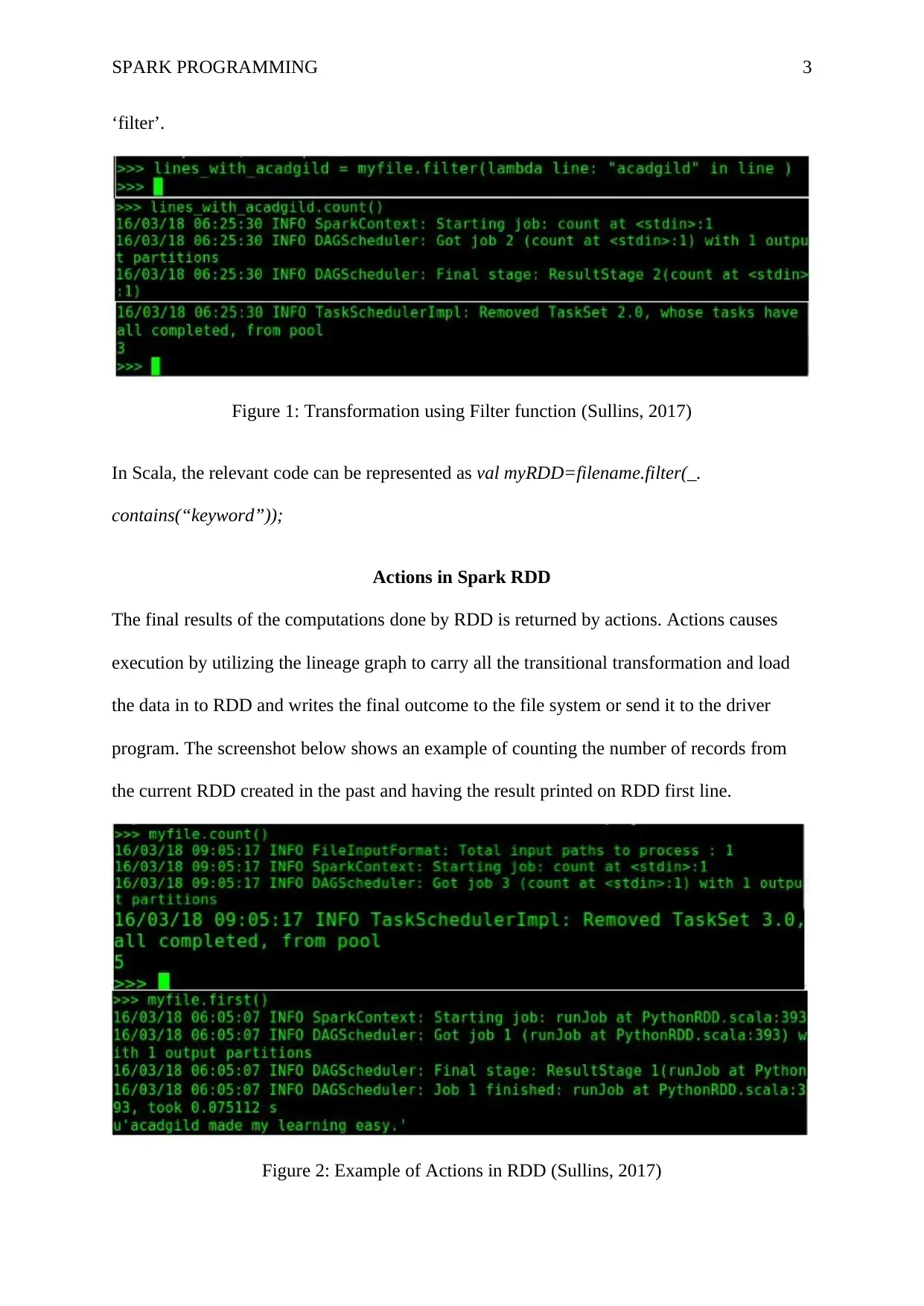

Actions in Spark RDD

Actions return the final results of RDD computations. An action triggers execution: Spark uses the lineage graph to run all the intermediate transformations, loads the data into the RDD, and either writes the final outcome to the file system or sends it to the driver program. The screenshot below shows an example that counts the number of records in the RDD created earlier and prints the first line of that RDD.

Figure 2: Example of Actions in RDD (Sullins, 2017)
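A comparable sequence of actions can be sketched in Scala as shown here. The sample lines and the object name RddActions are hypothetical, and Spark is assumed to run in local mode; the screenshot in the source may use different data.

```scala
import org.apache.spark.sql.SparkSession

object RddActions {
  // Returns (record count, first record, all records) for the filtered RDD.
  def run(): (Long, String, List[String]) = {
    val spark = SparkSession.builder().appName("RddActions").master("local[2]").getOrCreate()
    val sc = spark.sparkContext
    try {
      val lines = sc.parallelize(Seq("spark keyword", "hadoop", "keyword again", "storm"), numSlices = 2)
      val myRDD = lines.filter(_.contains("keyword")) // transformation, still lazy

      val total = myRDD.count()            // action: number of records
      val firstLine = myRDD.first()        // action: first record
      val all = myRDD.collect().toList     // action: bring every record to the driver

      (total, firstLine, all)
    } finally spark.stop()
  }

  def main(args: Array[String]): Unit = println(run())
}
```

Each action re-runs the filter from the lineage, because nothing in this sketch caches the intermediate RDD.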


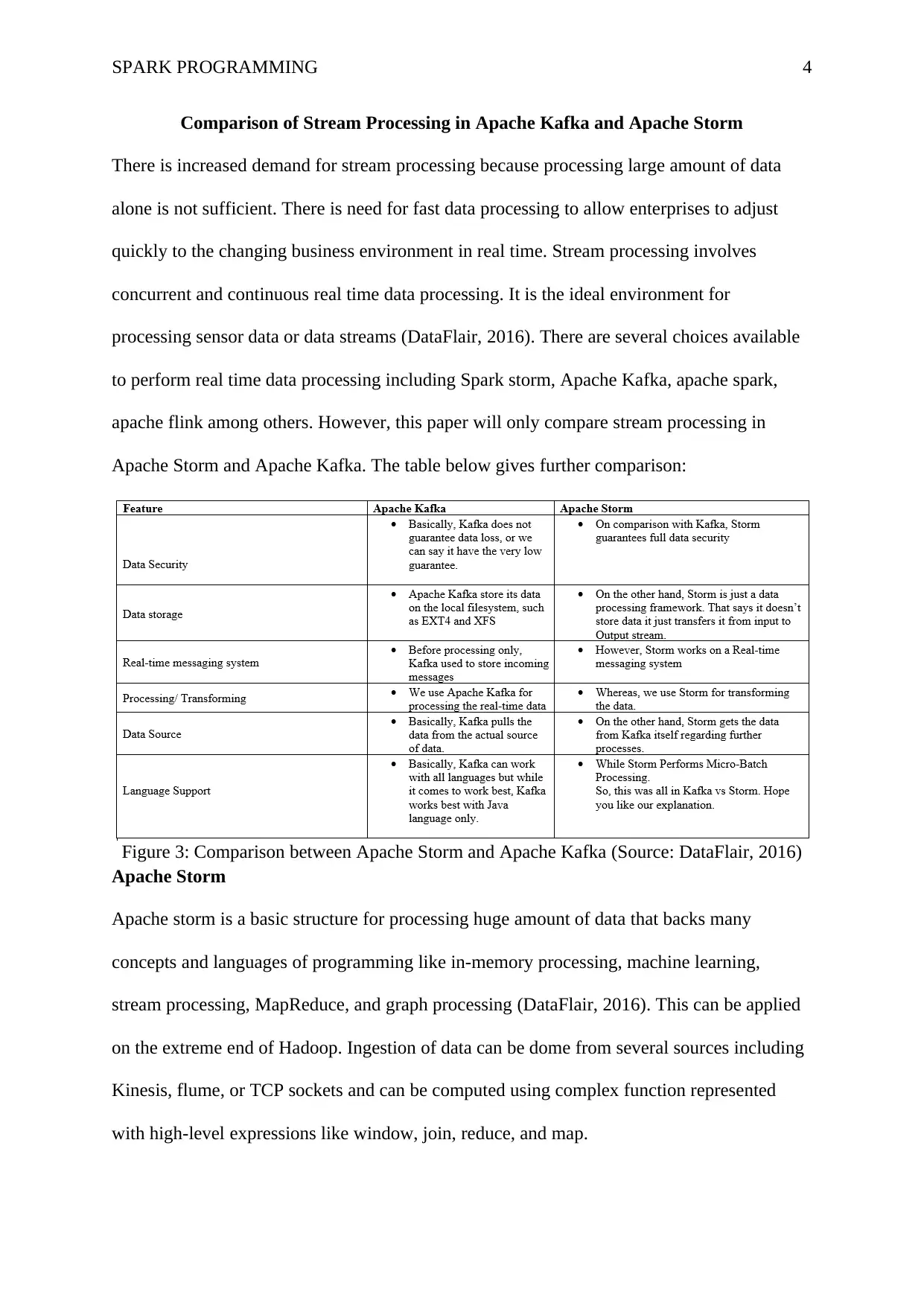

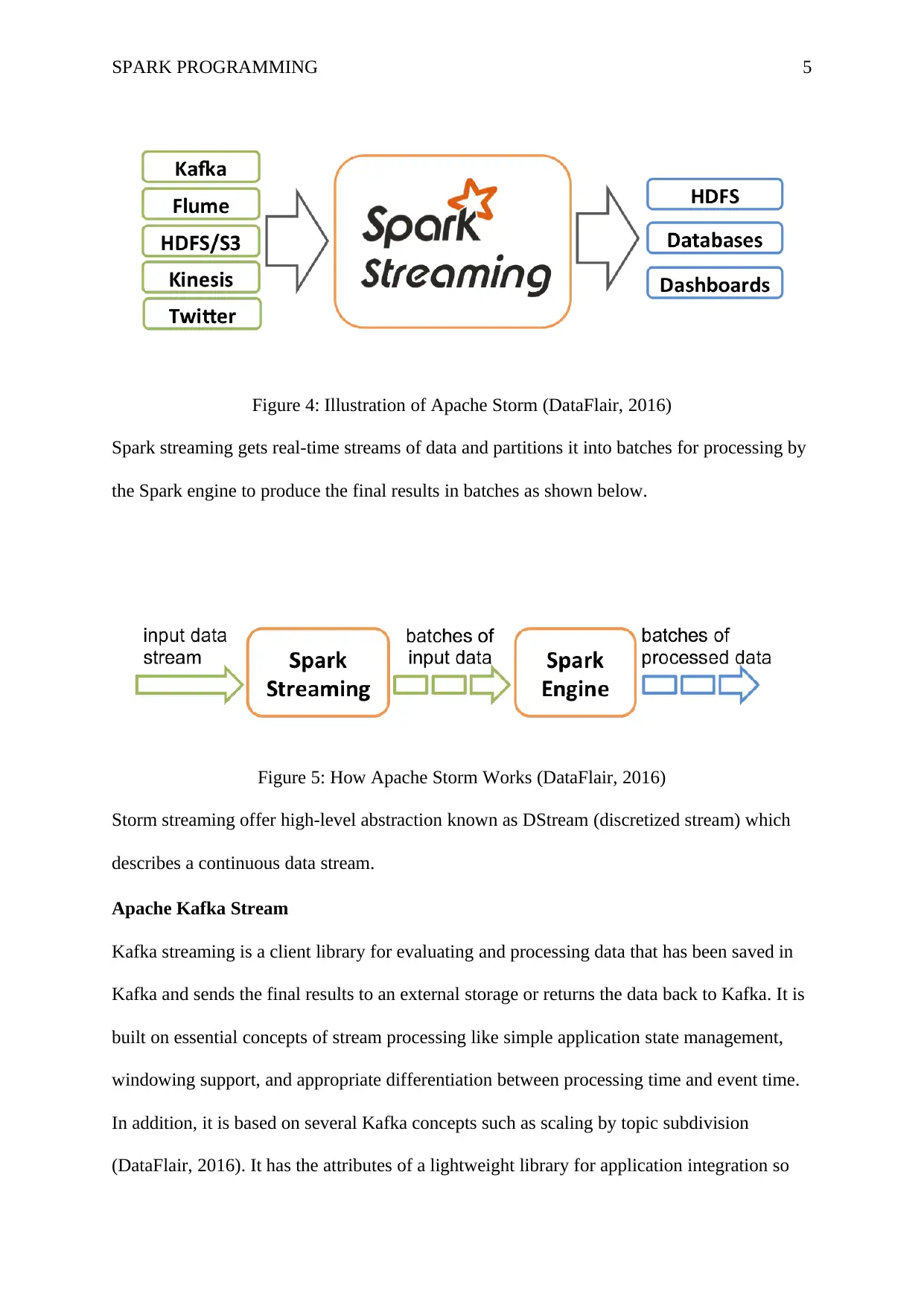

Comparison of Stream Processing in Apache Kafka and Apache Storm

Demand for stream processing is growing because the ability to process large amounts of data is no longer sufficient on its own. Data must also be processed quickly so that enterprises can respond to a changing business environment in real time. Stream processing is the continuous, concurrent processing of data in real time, which makes it well suited to sensor data and other data streams (DataFlair, 2016). Several frameworks are available for real-time data processing, including Apache Storm, Apache Kafka, Apache Spark, and Apache Flink. This paper, however, compares stream processing only in Apache Storm and Apache Kafka. The table below gives further comparison:

Figure 3: Comparison between Apache Storm and Apache Kafka (Source: DataFlair, 2016)

Apache Storm

Apache Storm is a distributed framework for processing unbounded streams of data in real time, one tuple at a time. The comparison source (DataFlair, 2016) sets it against Spark Streaming, which belongs to the wider Spark framework that also supports in-memory processing, machine learning, MapReduce-style batch jobs, and graph processing. Spark Streaming can run on top of Hadoop. It can ingest data from several sources, including Kinesis, Flume, or TCP sockets, and process it using complex functions expressed with high-level operators such as window, join, reduce, and map.


Figure 4: Illustration of Apache Storm (DataFlair, 2016)

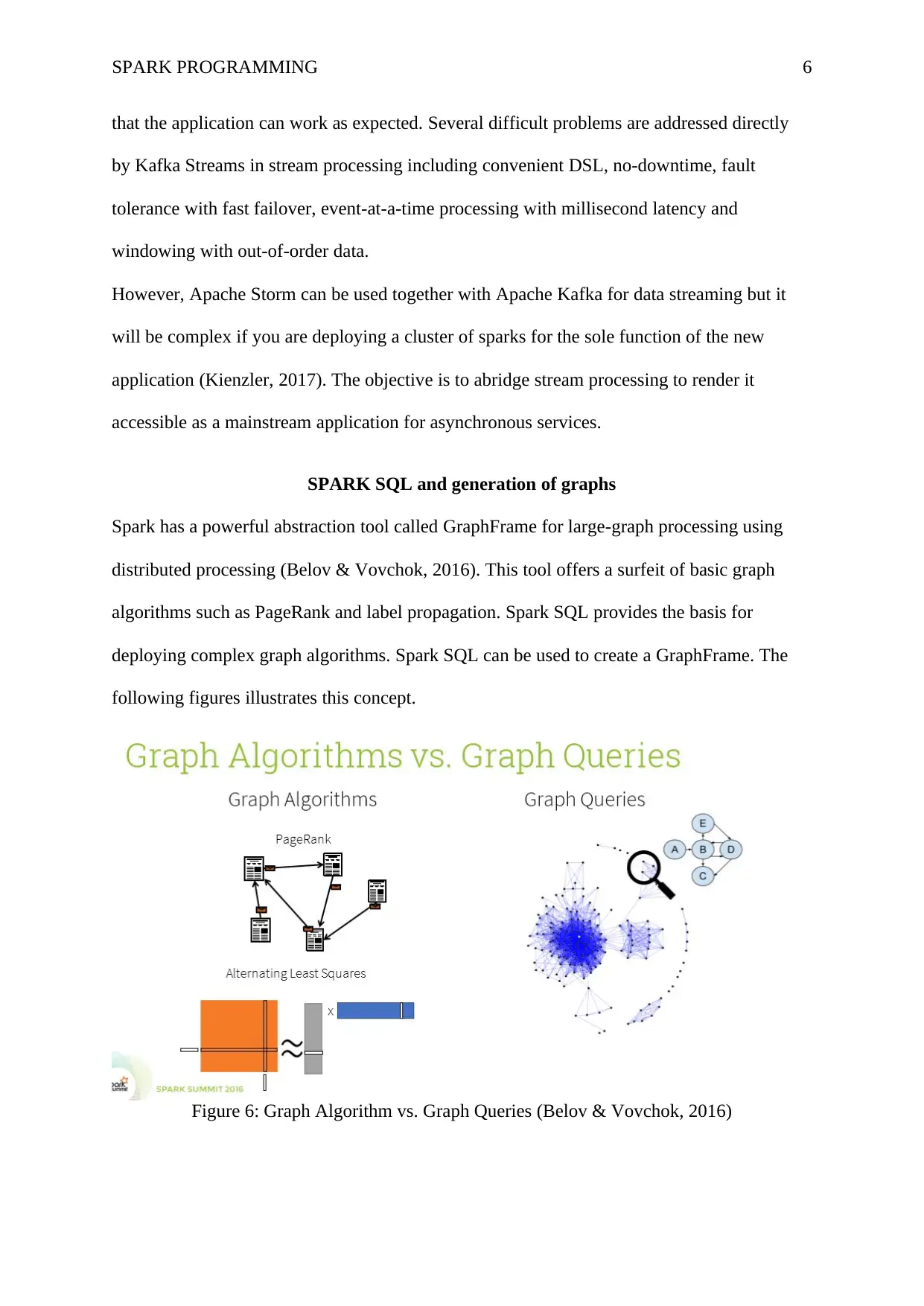

Spark Streaming receives real-time streams of data and divides them into batches, which the Spark engine then processes to produce the final results, also in batches, as shown below.

Figure 5: How Apache Storm Works (DataFlair, 2016)

Spark Streaming offers a high-level abstraction known as a DStream (discretized stream), which represents a continuous stream of data.
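The idea of cutting a continuous stream into batches can be illustrated without a cluster. The sketch below is plain Scala and deliberately does not use the Spark Streaming API; the Event type, the batch interval, and the word-count logic are all hypothetical and serve only to show how a stream becomes a sequence of small batches that are processed one after another.

```scala
object MicroBatchSketch {
  // A hypothetical timestamped event: arrival time in milliseconds plus a payload.
  final case class Event(arrivalMs: Long, word: String)

  // Groups events into consecutive batches of `batchMs` milliseconds by arrival time
  // and counts the words in each batch. Batches are returned in order of start time.
  def wordCountsPerBatch(events: Seq[Event], batchMs: Long): Seq[(Long, Map[String, Int])] =
    events
      .groupBy(e => e.arrivalMs / batchMs * batchMs) // start time of the batch the event falls in
      .toSeq
      .sortBy(_._1)
      .map { case (batchStart, batch) =>
        batchStart -> batch.groupBy(_.word).map { case (word, es) => word -> es.size }
      }

  def main(args: Array[String]): Unit = {
    val events = Seq(Event(100, "a"), Event(900, "b"), Event(1200, "a"), Event(1900, "a"), Event(2500, "b"))
    wordCountsPerBatch(events, batchMs = 1000).foreach(println)
  }
}
```

In Spark Streaming each batch would be an RDD, so the same transformations and actions used for batch jobs apply to every interval of the stream.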

Apache Kafka Stream

Kafka Streams is a client library for processing and analyzing data stored in Kafka; it either writes the results to an external system or sends them back to Kafka. It builds on essential stream-processing concepts such as simple management of application state, windowing support, and a proper distinction between event time and processing time. In addition, it relies on several Kafka concepts, such as scaling by partitioning topics (DataFlair, 2016). It is a lightweight library intended for integration into an application so


that the application can work as expected. Kafka Streams directly addresses several hard problems in stream processing: it offers a convenient DSL, no downtime, fault tolerance with fast failover, event-at-a-time processing with millisecond latency, and windowing with out-of-order data.
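Event-at-a-time processing with event-time windows can be sketched in plain Scala. The example is a conceptual illustration only and does not use the Kafka Streams API: the Reading type, the window size, and the summing logic are hypothetical. Records are handled one at a time in arrival order, yet each is assigned to a window by the time the event occurred, so a late record still lands in the correct window.

```scala
object EventTimeWindows {
  // A hypothetical record: `eventMs` is when the event happened, not when it arrived.
  final case class Reading(eventMs: Long, value: Int)

  // Processes readings one at a time in arrival order, keeping a running sum per
  // tumbling window of `windowMs` milliseconds keyed by the window start time.
  def sumPerWindow(arrivals: Seq[Reading], windowMs: Long): Map[Long, Int] =
    arrivals.foldLeft(Map.empty[Long, Int]) { (state, reading) =>
      val windowStart = reading.eventMs / windowMs * windowMs
      state.updated(windowStart, state.getOrElse(windowStart, 0) + reading.value)
    }

  def main(args: Array[String]): Unit = {
    // The third reading arrives after a later one but belongs to the first window.
    val arrivals = Seq(Reading(1000, 1), Reading(6000, 2), Reading(4000, 3), Reading(7000, 4))
    println(sumPerWindow(arrivals, windowMs = 5000))
  }
}
```

The immutable map plays the role of application state; a real stream processor would also decide how long to keep a window open for late data.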

A separate stream-processing framework such as Apache Storm or Spark Streaming can be used together with Apache Kafka, but deploying a whole Spark cluster solely to serve a new application adds complexity (Kienzler, 2017). The objective of Kafka Streams is to simplify stream processing so that it becomes accessible as a mainstream programming model for asynchronous services.

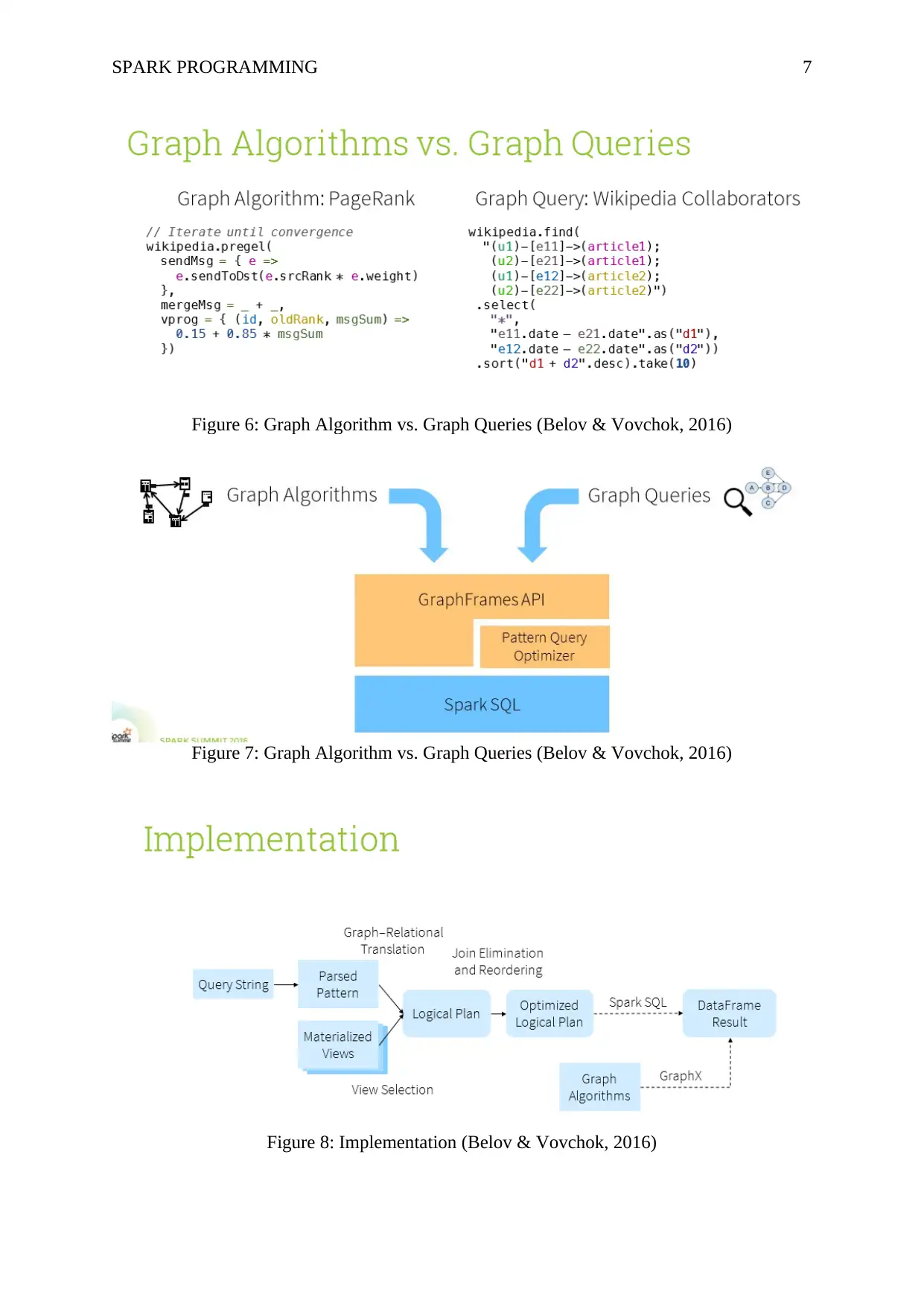

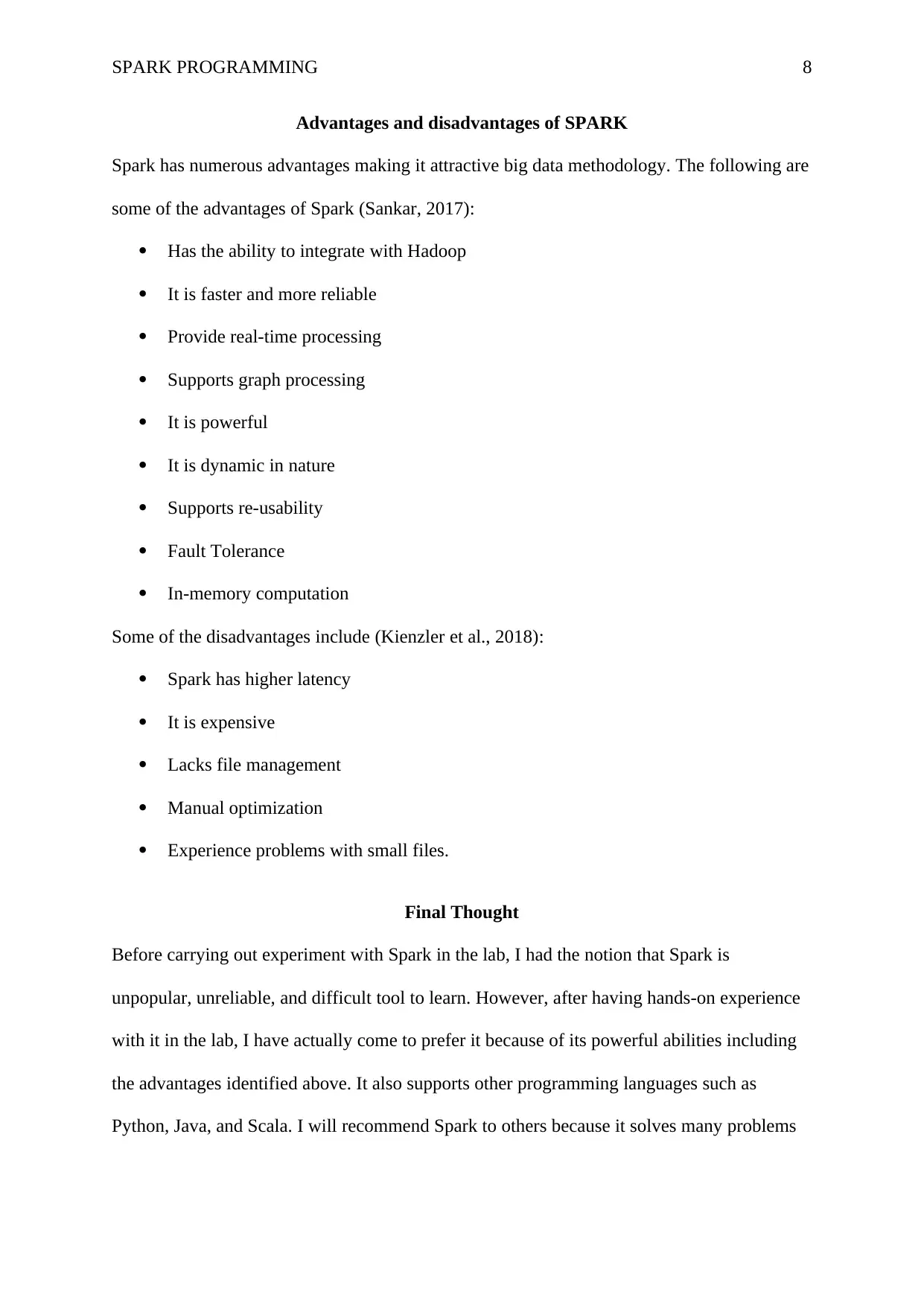

Spark SQL and generation of graphs

GraphFrame is a powerful Spark abstraction for processing large graphs in a distributed manner (Belov & Vovchok, 2016). It offers a wide range of basic graph algorithms, such as PageRank and label propagation. Spark SQL provides the foundation for implementing more complex graph algorithms, and a GraphFrame can be created from the results of Spark SQL queries. The following figures illustrate this concept.

Figure 6: Graph Algorithm vs. Graph Queries (Belov & Vovchok, 2016)


Figure 7: Graph Algorithm vs. Graph Queries (Belov & Vovchok, 2016)

Figure 8: Implementation (Belov & Vovchok, 2016)
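Building a GraphFrame from Spark SQL results might look like the sketch below. It is not the implementation shown in the figures: it assumes that both Spark SQL and the separately distributed graphframes package are on the classpath, and the table names, columns, and three-person social network are hypothetical. GraphFrames expects the vertex table to have an id column and the edge table to have src and dst columns.

```scala
import org.apache.spark.sql.SparkSession
import org.graphframes.GraphFrame

object GraphFrameSketch {
  // Returns (vertex count, edge count, id of the vertex with the most incoming edges).
  def run(): (Long, Long, String) = {
    val spark = SparkSession.builder().appName("GraphFrameSketch").master("local[2]").getOrCreate()
    import spark.implicits._
    try {
      // Hypothetical social-network data registered as Spark SQL views.
      Seq(("a", "Alice"), ("b", "Bob"), ("c", "Carol"))
        .toDF("id", "name").createOrReplaceTempView("people")
      Seq(("a", "b", "follows"), ("c", "b", "follows"), ("b", "a", "follows"))
        .toDF("src", "dst", "relationship").createOrReplaceTempView("links")

      // Spark SQL queries produce the vertex and edge DataFrames.
      val vertices = spark.sql("SELECT id, name FROM people")
      val edges = spark.sql("SELECT src, dst, relationship FROM links WHERE relationship = 'follows'")
      val graph = GraphFrame(vertices, edges)

      // Graph query: which vertex has the highest in-degree?
      val mostFollowed = graph.inDegrees.orderBy($"inDegree".desc).first().getAs[String]("id")

      // Graph algorithm: PageRank adds a `pagerank` column to the vertices.
      val ranked = graph.pageRank.resetProbability(0.15).maxIter(5).run()
      ranked.vertices.select("id", "pagerank").show()

      (graph.vertices.count(), graph.edges.count(), mostFollowed)
    } finally spark.stop()
  }

  def main(args: Array[String]): Unit = println(run())
}
```

The in-degree lookup is a graph query answered with ordinary DataFrame operations, while PageRank is an iterative graph algorithm, which mirrors the distinction drawn in the figure captions.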


Advantages and disadvantages of Spark

Spark has numerous advantages that make it an attractive big data technology. The following are some of them (Sankar, 2017):

Integrates with Hadoop

Is faster and more reliable

Provides real-time processing

Supports graph processing

Is powerful

Is dynamic in nature

Supports reusability

Offers fault tolerance

Performs in-memory computation
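Two of the advantages listed above, in-memory computation and reuse of intermediate results, can be sketched as follows. The example is illustrative only: it assumes Spark in local mode and a hypothetical dataset of squared numbers. Calling cache() marks the RDD for in-memory storage, so the second action reuses the partitions computed by the first, and toDebugString prints the lineage that Spark relies on to rebuild lost partitions.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.storage.StorageLevel

object CacheAndLineage {
  // Returns (whether the RDD is marked memory-only, count of even squares, total count).
  def run(): (Boolean, Long, Long) = {
    val spark = SparkSession.builder().appName("CacheAndLineage").master("local[2]").getOrCreate()
    val sc = spark.sparkContext
    try {
      // cache() marks the RDD for in-memory storage; nothing is computed yet.
      val squares = sc.parallelize(1 to 1000).map(n => n.toLong * n).cache()
      val memoryOnly = squares.getStorageLevel == StorageLevel.MEMORY_ONLY

      val evens = squares.filter(_ % 2 == 0).count() // first action computes and caches the partitions
      val total = squares.count()                    // second action reuses the cached partitions

      // The lineage is what Spark replays to recover a lost partition.
      println(squares.toDebugString)

      (memoryOnly, evens, total)
    } finally spark.stop()
  }

  def main(args: Array[String]): Unit = println(run())
}
```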

Some of the disadvantages are as follows (Kienzler et al., 2018):

Has higher latency

Is expensive

Lacks file management

Requires manual optimization

Experiences problems with small files

Final Thought

Before experimenting with Spark in the lab, I had the notion that Spark was an unpopular, unreliable, and difficult tool to learn. However, after gaining hands-on experience with it in the lab, I have come to prefer it because of its powerful capabilities, including the advantages identified above. It also supports several programming languages, such as Python, Java, and Scala. I would recommend Spark to others because it solves many problems


which cannot be addressed by Hadoop MapReduce. It is also fast and is being embraced as an industry favourite.

Conclusion

In conclusion, Spark combines a distributed execution engine, programming interfaces, and supporting libraries for building reliable data-processing applications. An RDD can hold any type of Scala, Java, or Python object, including instances of user-defined classes, and RDDs are created through deterministic operations on either data in stable storage or other RDDs. Spark has numerous advantages, which have been cited in this paper, and it offers reliability and no downtime.


References

Aleksiyants, A., Borisenko, O., Turdakov, D., Sher, A., & Kuznetsov, S. (2015). Implementing Apache Spark jobs execution and Apache Spark cluster creation for Openstack Sahara. Proceedings of the Institute for System Programming of RAS, 27(5), 35-48. doi: 10.15514/ispras-2015-27(5)-3

Belov, Y., & Vovchok, S. (2016). Generation of a Social Network Graph by Using Apache Spark. Modeling and Analysis of Information Systems, 23(6), 777-783. doi: 10.18255/1818-1015-2016-6-777-783

DataFlair, T. (2016). Apache Storm vs Spark Streaming - Feature wise Comparison - DataFlair. Retrieved from https://data-flair.training/blogs/apache-storm-vs-spark-streaming/

Kienzler, R. (2017). Mastering Apache Spark 2.x - Second Edition. Birmingham: Packt Publishing.

Kienzler, R., Karim, R., Alla, S., Amirghodsi, S., Rajendran, M., Hall, B., & Mei, S. (2018). Apache Spark 2. Birmingham: Packt Publishing Ltd.

Sankar, A. (2017). Advantages of Apache Spark ™ - Analytics Training Blog. Retrieved from https://analyticstraining.com/advantages-apache-spark/

Sullins, B. (2017). Apache Spark Essential Training. [Carpinteria, Calif.]: Lynda.com.
