Report on Supervised Learning: Models, Algorithms, and Applications

VerifiedAdded on 2020/03/04

|22

|5751

|79

Report

AI Summary

This report delves into the field of supervised learning, a crucial branch of machine learning within Artificial Intelligence. It begins with an abstract defining machine learning and its applications in areas such as pattern and speech recognition, and then details the concept of supervised learning. The report provides a comprehensive overview of supervised learning, including the supervised learning model and its algorithms, exploring the process of classification, the use of training and testing datasets, and the steps involved in solving supervised learning problems. Furthermore, it discusses key issues to consider when designing supervised learning methods, such as the trade-off between bias and variance, the complexity of the classifier function, and the dimensionality of the input space. The report also outlines popular supervised learning algorithms, like Decision Trees, and their respective advantages, concluding with a summary of the core concepts and their applications.

SUPERVISED LEARNING 1

SUPERVISED LEARNING

SUPERVISED LEARNING

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

SUPERVISED LEARNING 2

Abstract

Machine learning is a field of Artificial intelligence which imparts the computer applications the

ability to learn from the inputs. The field of machine learning deals with the study of algorithms

which have the ability to learn from the training or historical data and make predictions or

classification of that input data by building a model from the sample inputs. Due to their ability

to learn from the input data, classification and prediction machine learning has a wide range of

application from pattern recognition, speech recognition, medical diagnosis applications,

intrusion detection systems, congestion detection systems, optical character recognition,

computer vision etc. Generally, the machine learning methods are classified as supervised

learning, unsupervised learning, and reinforcement learning methods. Supervised learning

method system is presented with a set of input condition and an instructor providing the desired

output, thus making the system understand what to expect for an answer in a certain scenario,

provides the desired outputs. The supervised learning method is the most popular machine

learning method due to its ease of use and flexibility it offers. This work explores supervised

learning in more detailed manner.

Keywords: Machine learning, Supervised learning.

Abstract

Machine learning is a field of Artificial intelligence which imparts the computer applications the

ability to learn from the inputs. The field of machine learning deals with the study of algorithms

which have the ability to learn from the training or historical data and make predictions or

classification of that input data by building a model from the sample inputs. Due to their ability

to learn from the input data, classification and prediction machine learning has a wide range of

application from pattern recognition, speech recognition, medical diagnosis applications,

intrusion detection systems, congestion detection systems, optical character recognition,

computer vision etc. Generally, the machine learning methods are classified as supervised

learning, unsupervised learning, and reinforcement learning methods. Supervised learning

method system is presented with a set of input condition and an instructor providing the desired

output, thus making the system understand what to expect for an answer in a certain scenario,

provides the desired outputs. The supervised learning method is the most popular machine

learning method due to its ease of use and flexibility it offers. This work explores supervised

learning in more detailed manner.

Keywords: Machine learning, Supervised learning.

SUPERVISED LEARNING 3

Table of Contents

Abstract............................................................................................................................................2

Introduction......................................................................................................................................4

Supervised learning.....................................................................................................................5

Supervised learning model and algorithm...............................................................................6

Issues to be considered in supervised learning........................................................................8

Algorithm of supervised learning................................................................................................9

Applications of supervised learning..............................................................................................15

Conclusion.....................................................................................................................................16

References......................................................................................................................................17

Table of Contents

Abstract............................................................................................................................................2

Introduction......................................................................................................................................4

Supervised learning.....................................................................................................................5

Supervised learning model and algorithm...............................................................................6

Issues to be considered in supervised learning........................................................................8

Algorithm of supervised learning................................................................................................9

Applications of supervised learning..............................................................................................15

Conclusion.....................................................................................................................................16

References......................................................................................................................................17

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

SUPERVISED LEARNING 4

Introduction

Machine Learning (ML) could be termed as a branch of Artificial Intelligence, as they contain

the methods, which allows the computers and systems to act more smartly. The result is a unique

way of cumulative working of various functions in an orderly manner, rather than simple data

insertion and retrieval from applications like database and others, making the machines take

better decision are different situations with minimal input from the user. Machine learning is a

branch of study, grouped from various different fields, such as computer science, statistics,

biology, and psychology. The base functionality of Machine Learning is to identify the best

Predictor model for making decisions, by analyzing and learning from previous scenarios, which

is the job of a Classifier. The job of Classification is the prediction of unknown scenarios

(output) by analyzing known scenarios (input). The process of classification is performed over

data set D comprising of the following objects:

Let the set size be {X1, X2, |X|} where |X| signifies the attributes count of the set X

Class label is Y then the target attribute; ? y1, y2, |Y|} where Y is the number of classes and Y

2.

Then the basic goal of the machine learning is prediction or classification over the dataset D,

such that it relates the attributes in X and classes in Y (Mohri et al, 2012).

Classification of Machine Learning is on the basis of the type of input signal or feedback

received by the learning system. These are as follows:

Introduction

Machine Learning (ML) could be termed as a branch of Artificial Intelligence, as they contain

the methods, which allows the computers and systems to act more smartly. The result is a unique

way of cumulative working of various functions in an orderly manner, rather than simple data

insertion and retrieval from applications like database and others, making the machines take

better decision are different situations with minimal input from the user. Machine learning is a

branch of study, grouped from various different fields, such as computer science, statistics,

biology, and psychology. The base functionality of Machine Learning is to identify the best

Predictor model for making decisions, by analyzing and learning from previous scenarios, which

is the job of a Classifier. The job of Classification is the prediction of unknown scenarios

(output) by analyzing known scenarios (input). The process of classification is performed over

data set D comprising of the following objects:

Let the set size be {X1, X2, |X|} where |X| signifies the attributes count of the set X

Class label is Y then the target attribute; ? y1, y2, |Y|} where Y is the number of classes and Y

2.

Then the basic goal of the machine learning is prediction or classification over the dataset D,

such that it relates the attributes in X and classes in Y (Mohri et al, 2012).

Classification of Machine Learning is on the basis of the type of input signal or feedback

received by the learning system. These are as follows:

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

SUPERVISED LEARNING 5

Supervised learning: System is presented with a set of input condition and an instructor

providing the desired output, thus making the system understand what to expect for an answer in

a certain scenario, provides the desired outputs (Zhang et al, 2011).

Unsupervised learning: There are no markers in the learning methodology, thus stranding the

system to discover patterns in the inputs. This type of learning can become a target in itself

(unraveling pattern in data) or the end game (feature learning).

Reinforcement learning: System is challenged with an unprecedented environment to perform

(for instance driving or playing against an opponent). The system is either rewarded or

punished, based on the performance it displayed while navigating in the problem sphere.

This paper deals with Supervised Learning, the various processes and all the functionalities.

Supervised learning

This type of learning is based on understanding the mapping of certain attributes and functions in

a predefined scenario, then using the knowledge gathered in this process to make a decision

based on the mapping learned, in unprecedented scenarios. This way of learning is very

important and is a key functionality in multimedia processing (Mohri et al, 2012).

In supervised learning a computer model represents a learner system which contains two set of

data namely the training data set and the other set is the testing data set. For example consider a

system for classification of a particular disease. In such scenario a system will use a data set

which contains records of the patients with their diseases. This record is split into training data

set and the testing data set. The idea behind this type of learning is to train the learner system

with all possible outcomes in the training set, so it can perform with the highest percentage of

accuracy in the test set. Which means, the target of a learner is to make out the pattern in the

Supervised learning: System is presented with a set of input condition and an instructor

providing the desired output, thus making the system understand what to expect for an answer in

a certain scenario, provides the desired outputs (Zhang et al, 2011).

Unsupervised learning: There are no markers in the learning methodology, thus stranding the

system to discover patterns in the inputs. This type of learning can become a target in itself

(unraveling pattern in data) or the end game (feature learning).

Reinforcement learning: System is challenged with an unprecedented environment to perform

(for instance driving or playing against an opponent). The system is either rewarded or

punished, based on the performance it displayed while navigating in the problem sphere.

This paper deals with Supervised Learning, the various processes and all the functionalities.

Supervised learning

This type of learning is based on understanding the mapping of certain attributes and functions in

a predefined scenario, then using the knowledge gathered in this process to make a decision

based on the mapping learned, in unprecedented scenarios. This way of learning is very

important and is a key functionality in multimedia processing (Mohri et al, 2012).

In supervised learning a computer model represents a learner system which contains two set of

data namely the training data set and the other set is the testing data set. For example consider a

system for classification of a particular disease. In such scenario a system will use a data set

which contains records of the patients with their diseases. This record is split into training data

set and the testing data set. The idea behind this type of learning is to train the learner system

with all possible outcomes in the training set, so it can perform with the highest percentage of

accuracy in the test set. Which means, the target of a learner is to make out the pattern in the

SUPERVISED LEARNING 6

inputs provided in the test set and find a solution from what it has learned in the training set? The

classification then can be the categories of diseases for the given example. Similarly the training

set might include pictures of dogs like terrier and spaniel, along with the identification of each,

now the test set would include another group of unidentified images but of the same set. The

target for the learner is to design a rule to guide itself towards a solution in un-known scenarios

(Mohri et al, 2012).

In supervised learning the training set comprises of various ordered pairs like (a1, b1), (a2,

b2), ...,(an, bn), where each ai is represents the set of measurements of a single example data

point, and bi is the label for that data point. Consider an example where, a ai might be a group of

five attributes for a cricket match, such as run-rate, wickets in hand, strike rate, fielding plan and

individual performance. In such case the corresponding bi would be a classification of the game

as a ‘win” or “loose”. Generally a testing data is comprises of data but without labels: (an+1,

an+2... an+m). As discussed earlier, the target is to make an educated guess in the test set about

“win” or “loose” by using the learning achieved in training set (Mohri et al, 2012).

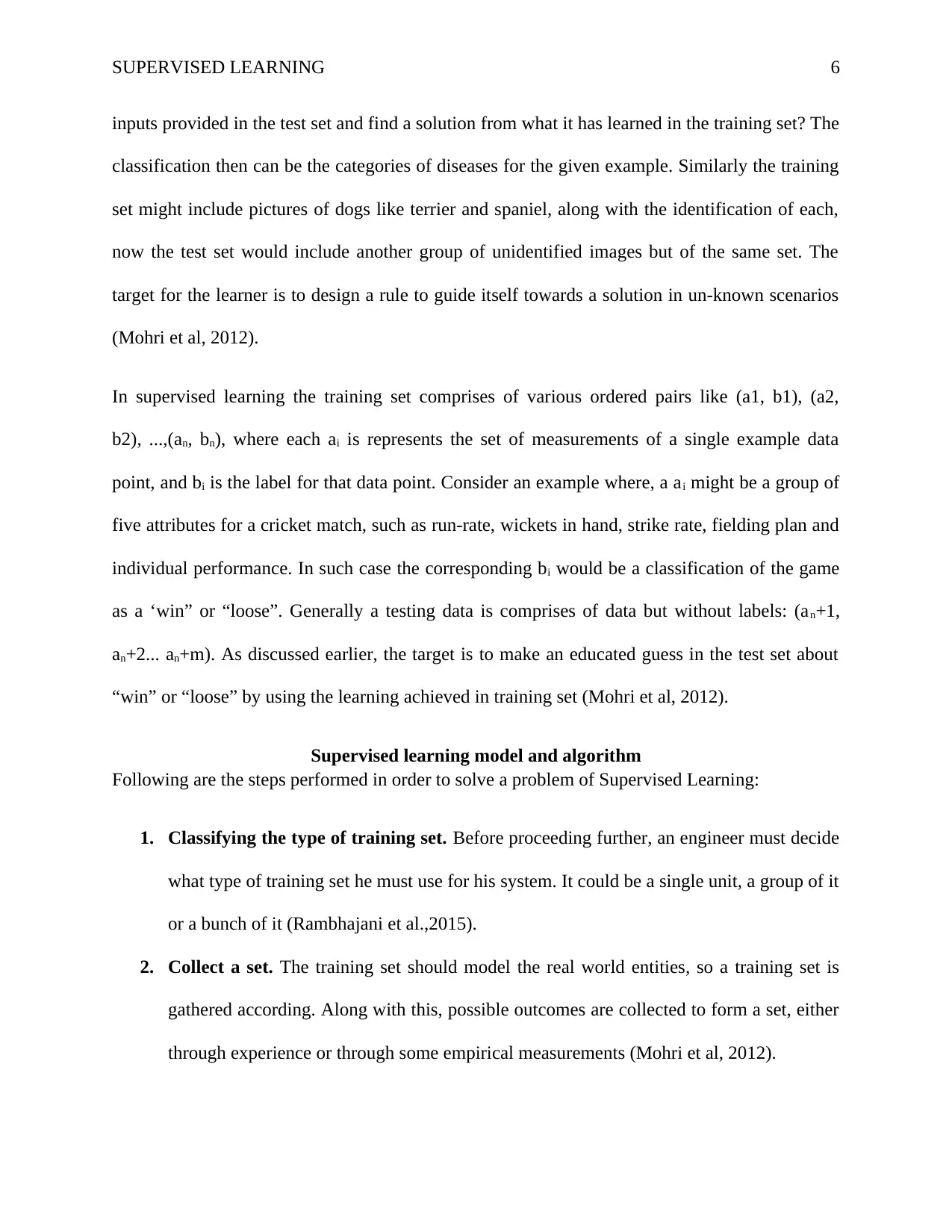

Supervised learning model and algorithm

Following are the steps performed in order to solve a problem of Supervised Learning:

1. Classifying the type of training set. Before proceeding further, an engineer must decide

what type of training set he must use for his system. It could be a single unit, a group of it

or a bunch of it (Rambhajani et al.,2015).

2. Collect a set. The training set should model the real world entities, so a training set is

gathered according. Along with this, possible outcomes are collected to form a set, either

through experience or through some empirical measurements (Mohri et al, 2012).

inputs provided in the test set and find a solution from what it has learned in the training set? The

classification then can be the categories of diseases for the given example. Similarly the training

set might include pictures of dogs like terrier and spaniel, along with the identification of each,

now the test set would include another group of unidentified images but of the same set. The

target for the learner is to design a rule to guide itself towards a solution in un-known scenarios

(Mohri et al, 2012).

In supervised learning the training set comprises of various ordered pairs like (a1, b1), (a2,

b2), ...,(an, bn), where each ai is represents the set of measurements of a single example data

point, and bi is the label for that data point. Consider an example where, a ai might be a group of

five attributes for a cricket match, such as run-rate, wickets in hand, strike rate, fielding plan and

individual performance. In such case the corresponding bi would be a classification of the game

as a ‘win” or “loose”. Generally a testing data is comprises of data but without labels: (an+1,

an+2... an+m). As discussed earlier, the target is to make an educated guess in the test set about

“win” or “loose” by using the learning achieved in training set (Mohri et al, 2012).

Supervised learning model and algorithm

Following are the steps performed in order to solve a problem of Supervised Learning:

1. Classifying the type of training set. Before proceeding further, an engineer must decide

what type of training set he must use for his system. It could be a single unit, a group of it

or a bunch of it (Rambhajani et al.,2015).

2. Collect a set. The training set should model the real world entities, so a training set is

gathered according. Along with this, possible outcomes are collected to form a set, either

through experience or through some empirical measurements (Mohri et al, 2012).

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

SUPERVISED LEARNING 7

3. Ascertain the input predictive model of the educated function. Learned function's

accuracy is directly dependent on the representation of the input. Basically, the input is

converted to a feature vector, comprising of various features to model the object. The

features should neither be too large in number to confuse the prediction nor should they

be too small in number to strangle the decision-making capabilities (Mohri et al, 2012).

4. Ascertain the model of the predictor function and the method employed. The choice

may be any model of support vector machine, neural network, decision tree etc.

5. Design finalization. Repetitive use of the carved out method to sharpen the accuracy.

Some of the methods may require strengthening of a feature by practicing over a subset

or through cross-referencing (Rambhajani et al.,2015).

6. Calculate method's accuracy. Once the accuracy is achieved on the training set, the

system must be presented with a test set to ascertain the final accuracy in real world

scenario (Mohri et al, 2012).

Figure 1: Supervised learning model

3. Ascertain the input predictive model of the educated function. Learned function's

accuracy is directly dependent on the representation of the input. Basically, the input is

converted to a feature vector, comprising of various features to model the object. The

features should neither be too large in number to confuse the prediction nor should they

be too small in number to strangle the decision-making capabilities (Mohri et al, 2012).

4. Ascertain the model of the predictor function and the method employed. The choice

may be any model of support vector machine, neural network, decision tree etc.

5. Design finalization. Repetitive use of the carved out method to sharpen the accuracy.

Some of the methods may require strengthening of a feature by practicing over a subset

or through cross-referencing (Rambhajani et al.,2015).

6. Calculate method's accuracy. Once the accuracy is achieved on the training set, the

system must be presented with a test set to ascertain the final accuracy in real world

scenario (Mohri et al, 2012).

Figure 1: Supervised learning model

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

SUPERVISED LEARNING 8

The base step in supervised learning is handling the dataset. A subject matter expert could be

employed to help with feature selection from a given data set. When a subject matter expert is

not available then the second best method to ascertain the feature is "Brute Force" method, the

use of empirical measurements, judging every possible scenario with all factors in mind and

using statistical techniques for arriving at a conclusion, i.e. a feature. This method although is not

applicable in all the cases like for a perfect induction, as in all of these cases this feature

comprises of significant noise which is to be removed before final induction, thus creating a

requirement of over-head pre-processing of the data set. The next step would deal with

information preparation and pre-process to be used in Assisted Machine Learning (AML)

(Rambhajani et al.,2015).

There are various techniques available from several researchers to deal with missing data.

Rambhajani et al.(2015), performed a survey and deducted a method to remove noise from the

system. Zaremba et al (2016), have also deduced another method for noise removal and is used

in several other systems. Konkol (2014), has made a comparative study on six different noise

removal techniques by working over base data sets and using a hypothetical test data set

(Rambhajani et al.,2015).

Issues to be considered in supervised learning

The main issues which are to be considered while designing the supervised learning method are

as follows:

a. Trade-off between the bias and the variance. Consider a scenario having different set of

training data. In such scenario if the learning model tends to be biased to a particular

The base step in supervised learning is handling the dataset. A subject matter expert could be

employed to help with feature selection from a given data set. When a subject matter expert is

not available then the second best method to ascertain the feature is "Brute Force" method, the

use of empirical measurements, judging every possible scenario with all factors in mind and

using statistical techniques for arriving at a conclusion, i.e. a feature. This method although is not

applicable in all the cases like for a perfect induction, as in all of these cases this feature

comprises of significant noise which is to be removed before final induction, thus creating a

requirement of over-head pre-processing of the data set. The next step would deal with

information preparation and pre-process to be used in Assisted Machine Learning (AML)

(Rambhajani et al.,2015).

There are various techniques available from several researchers to deal with missing data.

Rambhajani et al.(2015), performed a survey and deducted a method to remove noise from the

system. Zaremba et al (2016), have also deduced another method for noise removal and is used

in several other systems. Konkol (2014), has made a comparative study on six different noise

removal techniques by working over base data sets and using a hypothetical test data set

(Rambhajani et al.,2015).

Issues to be considered in supervised learning

The main issues which are to be considered while designing the supervised learning method are

as follows:

a. Trade-off between the bias and the variance. Consider a scenario having different set of

training data. In such scenario if the learning model tends to be biased to a particular

SUPERVISED LEARNING 9

variable y then while training on these data set the model gives incorrect prediction to the

exact value for y. Similarly if the model has high variance for a input y then it predicts

different output values for that variable for different training sets. Generally the

prediction error of any learning model is sum of the bias and the variance of the model.

Thus, there exists a tradeoff between the bias and the variance of the model. In case the

learning model is too flexible in nature then it will make arrangements within the training

data sets differently and thus shall exhibit high variance.

b. The complexity of the function of the classifier and the relative quantity of the training

data is the second issue. In case the function of the classifier is a simple function then it

tends to be inflexible in nature and this result in the learning model having high bias and

low variance values and thus shall be capable to learn from a very small quantity of the

training data. On the other hand if the function is complex in nature than it requires high

number of data set as the learning model shall be flexible with low bias and high variance

making (Marsland, 2015).

c. The dimensionality of the input space also poses challenges to the learning model. In case

the input feature vectors comprise of high dimensions than it becomes difficult for the

learning model to work upon as high dimensions causes the model to have high

variances. Thus the input dimensions need to have low variances and high bias for correct

learning model of the classifier (Marsland, 2015).

d. Another issue which arises is the noise coming from the output values. In case the desired

output values comprise of error due to human error or errors caused by sensors then the

learning model should never try to compute a function that exactly matches the training

variable y then while training on these data set the model gives incorrect prediction to the

exact value for y. Similarly if the model has high variance for a input y then it predicts

different output values for that variable for different training sets. Generally the

prediction error of any learning model is sum of the bias and the variance of the model.

Thus, there exists a tradeoff between the bias and the variance of the model. In case the

learning model is too flexible in nature then it will make arrangements within the training

data sets differently and thus shall exhibit high variance.

b. The complexity of the function of the classifier and the relative quantity of the training

data is the second issue. In case the function of the classifier is a simple function then it

tends to be inflexible in nature and this result in the learning model having high bias and

low variance values and thus shall be capable to learn from a very small quantity of the

training data. On the other hand if the function is complex in nature than it requires high

number of data set as the learning model shall be flexible with low bias and high variance

making (Marsland, 2015).

c. The dimensionality of the input space also poses challenges to the learning model. In case

the input feature vectors comprise of high dimensions than it becomes difficult for the

learning model to work upon as high dimensions causes the model to have high

variances. Thus the input dimensions need to have low variances and high bias for correct

learning model of the classifier (Marsland, 2015).

d. Another issue which arises is the noise coming from the output values. In case the desired

output values comprise of error due to human error or errors caused by sensors then the

learning model should never try to compute a function that exactly matches the training

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

SUPERVISED LEARNING 10

examples. Doing such execution may lead to over fitting of the function (Marsland,

2015).

Algorithm of supervised learning

Supervised learning has attracted researchers and thus many types of efficient algorithms have

been designed for supervised learning. Many of these algorithms have been used for wide range

of applications like for classification of diseases and non-disease attributes, pattern recognition,

speech recognition, etc (Ling et al, 2015; Nguyen et al ,2014; Marsland, 2015). Each algorithm

of supervised learning has its own set of advantages and disadvantages. Still, there exists no

single algorithm that can be used on all supervised learning problem. Some of the popular

supervised learning algorithms are as follows:

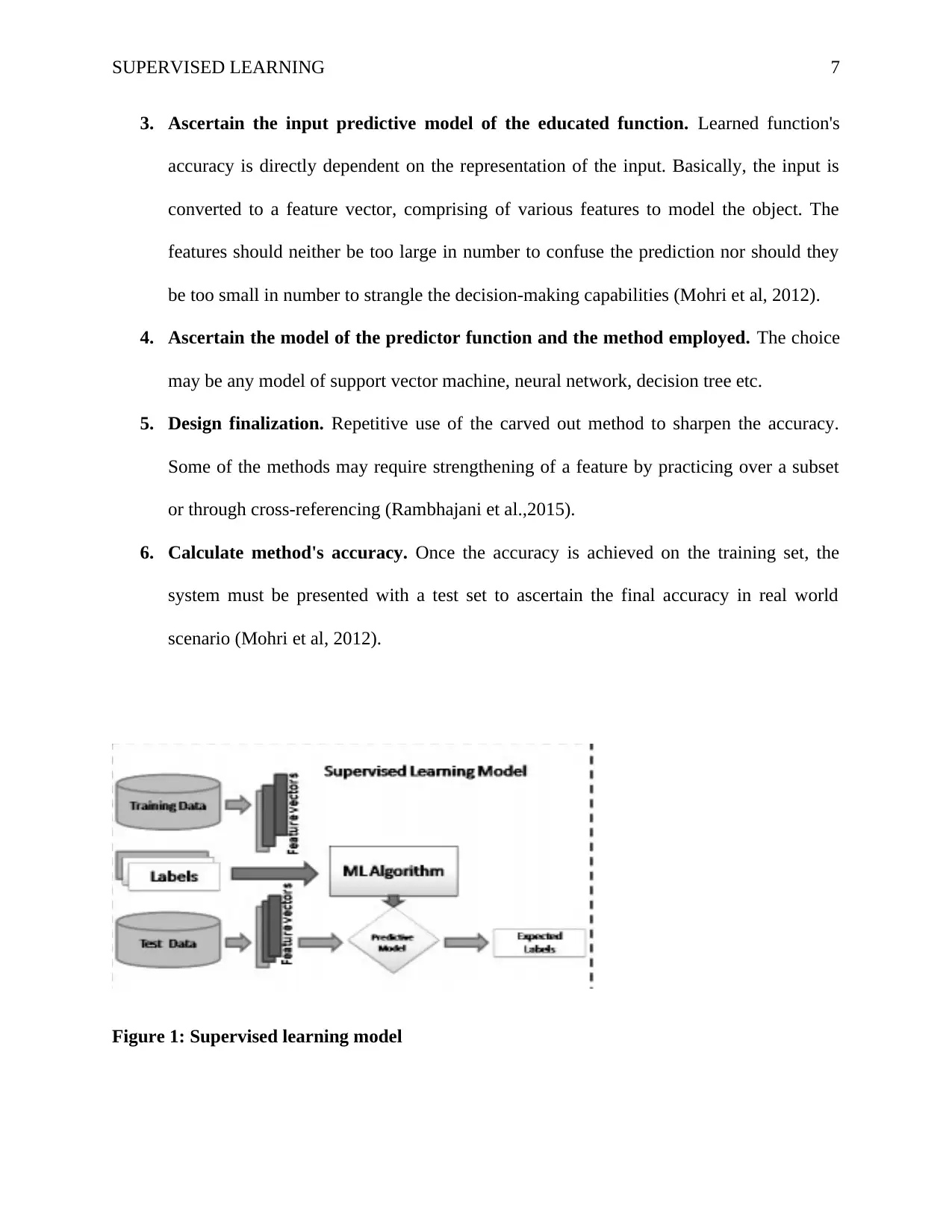

a. Decision Trees:

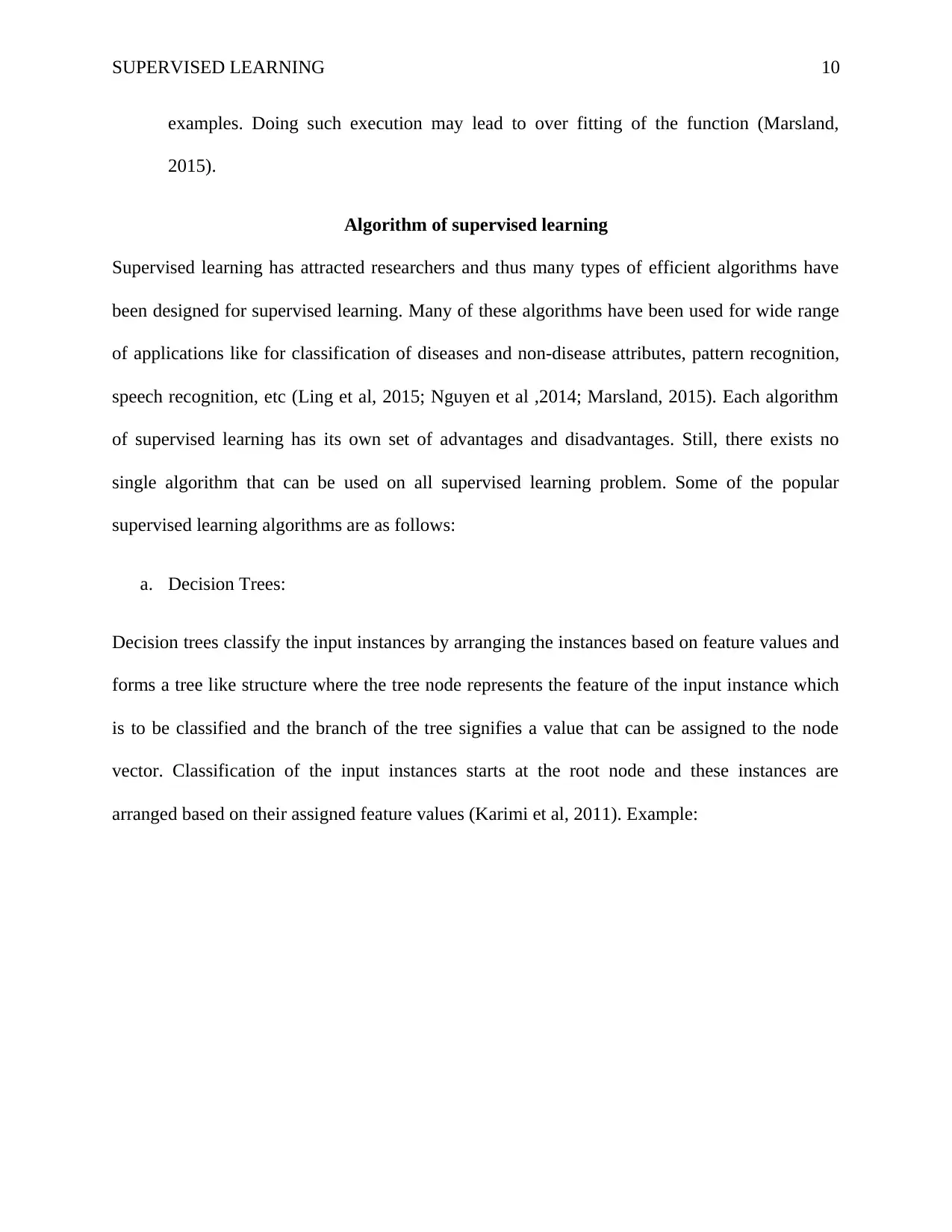

Decision trees classify the input instances by arranging the instances based on feature values and

forms a tree like structure where the tree node represents the feature of the input instance which

is to be classified and the branch of the tree signifies a value that can be assigned to the node

vector. Classification of the input instances starts at the root node and these instances are

arranged based on their assigned feature values (Karimi et al, 2011). Example:

examples. Doing such execution may lead to over fitting of the function (Marsland,

2015).

Algorithm of supervised learning

Supervised learning has attracted researchers and thus many types of efficient algorithms have

been designed for supervised learning. Many of these algorithms have been used for wide range

of applications like for classification of diseases and non-disease attributes, pattern recognition,

speech recognition, etc (Ling et al, 2015; Nguyen et al ,2014; Marsland, 2015). Each algorithm

of supervised learning has its own set of advantages and disadvantages. Still, there exists no

single algorithm that can be used on all supervised learning problem. Some of the popular

supervised learning algorithms are as follows:

a. Decision Trees:

Decision trees classify the input instances by arranging the instances based on feature values and

forms a tree like structure where the tree node represents the feature of the input instance which

is to be classified and the branch of the tree signifies a value that can be assigned to the node

vector. Classification of the input instances starts at the root node and these instances are

arranged based on their assigned feature values (Karimi et al, 2011). Example:

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

SUPERVISED LEARNING 11

Figure 2: Decision tree and data

In the figure above there is a decision tree for the data given in the table on the right side. In this

example an input instance comprising of at1 with value a1 at2 with a2 etc. can be arranged and

classified as a class of Yes or No (Kamiński et al, 2017).

Decision trees are widely used in the classification of input data as they are flexible to be used

for wide range of classification problems. Generally, the tree path or the resulting set of the rules

of the tree are mutually exclusive which makes each data of the input to be covered in a single

rule. This enhances the accuracy of the predictive systems and makes them more scalable

(Kamiński et al, 2017).

b. Naïve Bayes Classifier:

Naïve Bayes classifier comes under the group of Bayesian network based classifier which is

generally statistical learning algorithms (Huang et al, 2014). The Naïve Bayes Classifier

networks comprise of directed acyclic graphs where there is one parent which represents the un

observed node and many children nodes representing the observed nodes. There is a sting

assumption of independence among the children nodes (Archana et al, 2014).

Figure 2: Decision tree and data

In the figure above there is a decision tree for the data given in the table on the right side. In this

example an input instance comprising of at1 with value a1 at2 with a2 etc. can be arranged and

classified as a class of Yes or No (Kamiński et al, 2017).

Decision trees are widely used in the classification of input data as they are flexible to be used

for wide range of classification problems. Generally, the tree path or the resulting set of the rules

of the tree are mutually exclusive which makes each data of the input to be covered in a single

rule. This enhances the accuracy of the predictive systems and makes them more scalable

(Kamiński et al, 2017).

b. Naïve Bayes Classifier:

Naïve Bayes classifier comes under the group of Bayesian network based classifier which is

generally statistical learning algorithms (Huang et al, 2014). The Naïve Bayes Classifier

networks comprise of directed acyclic graphs where there is one parent which represents the un

observed node and many children nodes representing the observed nodes. There is a sting

assumption of independence among the children nodes (Archana et al, 2014).

SUPERVISED LEARNING 12

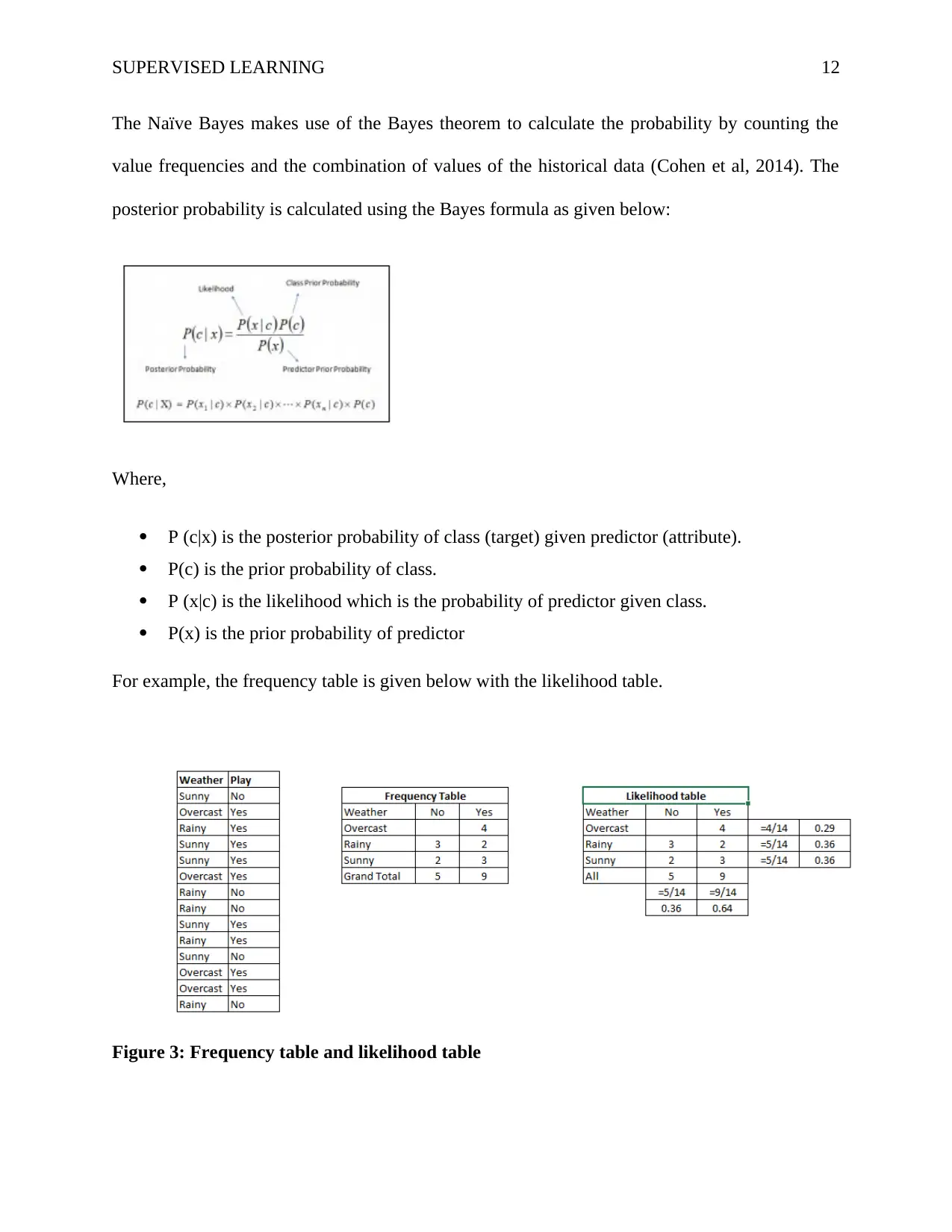

The Naïve Bayes makes use of the Bayes theorem to calculate the probability by counting the

value frequencies and the combination of values of the historical data (Cohen et al, 2014). The

posterior probability is calculated using the Bayes formula as given below:

Where,

P (c|x) is the posterior probability of class (target) given predictor (attribute).

P(c) is the prior probability of class.

P (x|c) is the likelihood which is the probability of predictor given class.

P(x) is the prior probability of predictor

For example, the frequency table is given below with the likelihood table.

Figure 3: Frequency table and likelihood table

The Naïve Bayes makes use of the Bayes theorem to calculate the probability by counting the

value frequencies and the combination of values of the historical data (Cohen et al, 2014). The

posterior probability is calculated using the Bayes formula as given below:

Where,

P (c|x) is the posterior probability of class (target) given predictor (attribute).

P(c) is the prior probability of class.

P (x|c) is the likelihood which is the probability of predictor given class.

P(x) is the prior probability of predictor

For example, the frequency table is given below with the likelihood table.

Figure 3: Frequency table and likelihood table

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

1 out of 22

Related Documents

Your All-in-One AI-Powered Toolkit for Academic Success.

+13062052269

info@desklib.com

Available 24*7 on WhatsApp / Email

![[object Object]](/_next/static/media/star-bottom.7253800d.svg)

Unlock your academic potential

Copyright © 2020–2025 A2Z Services. All Rights Reserved. Developed and managed by ZUCOL.