MTEST Application: Test Case Identification and Software Failures

Added on 2023/03/31


This report delves into the identification of test cases for the MTEST application, a marking and grading system, using several software testing techniques including boundary value analysis (BVA), equivalence class partitioning, negative test cases, and error guessing. The report provides a real-world example of software failure, highlighting a security flaw in an international airline's mobile boarding system. It details the design of test cases for MTEST, focusing on validating record sets for titles, question counts, and student names. The application of BVA is explained with specific boundary values (0, 1, 999, 1000) and corresponding test cases. Furthermore, the report discusses error guessing as a technique, providing a test case related to incorrect column data in input files. The overall aim is to ensure the MTEST application accurately meets its requirements through comprehensive testing.

Running head: TEST CASE IDENTIFICATION FOR MTEST

Test Case Identification for MTEST

Name of the Student

Name of the University

Author Note


TEST CASE IDENTIFICATION FOR MTEST

Question 1: Real world example of software failure

Various factors can lead to software failures. One such factor is upgrading an application without performing regression testing and retesting the software after the changes (Harman, Jia & Zhang, 2015). Software security also plays an important role: applications that contain vulnerabilities are easier to exploit (Briand et al., 2016). Bugs and glitches in software applications lead to inaccurate outputs.

An international airline discovered a major security flaw that would allow anyone with a computer and a valid URL to access and manipulate the boarding passes of passengers on its flights. The affected software was a mobile boarding system called TSA. Although the airline addressed the flaw promptly and has denied that any customer data was compromised, the issue raised alarming questions about data security as air travel becomes increasingly dependent on software applications to manage its many complexities.

The airline's prompt action in notifying the vendor helped resolve the issue: a fix was released immediately so that the bug could not be exploited further. The fix ensured that the software checks several layers of security, which prevents customers from using boarding passes that are not theirs. Because the failure lasted only a very short time, the incident did not affect the aviation industry much.
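The report does not describe the airline's code, so the following Python sketch is only a hypothetical illustration of the general kind of flaw described (a valid URL alone granting access to a pass) and the kind of ownership check a fix adds. Every name in it (`BoardingPass`, `get_boarding_pass`, the sample pass IDs and passengers) is invented for illustration.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class BoardingPass:
    pass_id: str
    passenger_id: str
    flight: str


# Hypothetical in-memory store standing in for the airline's back end.
PASSES: Dict[str, BoardingPass] = {
    "BP-001": BoardingPass("BP-001", "alice", "XY123"),
    "BP-002": BoardingPass("BP-002", "bob", "XY123"),
}


class AccessDenied(Exception):
    """Raised when a caller requests a boarding pass that is not theirs."""


def get_boarding_pass_unsafe(pass_id: str) -> BoardingPass:
    # Flawed pattern: anyone who knows a valid URL/ID can read the pass.
    return PASSES[pass_id]


def get_boarding_pass(pass_id: str, authenticated_passenger: str) -> BoardingPass:
    # Assumes an earlier layer has already authenticated the caller;
    # this function adds an ownership check on top of that.
    bp = PASSES.get(pass_id)
    # Unknown IDs and other passengers' IDs get the same response, so the
    # lookup does not reveal which pass IDs exist.
    if bp is None or bp.passenger_id != authenticated_passenger:
        raise AccessDenied(pass_id)
    return bp
```

For example, `get_boarding_pass("BP-002", "alice")` raises `AccessDenied`, whereas `get_boarding_pass_unsafe("BP-002")` returns Bob's pass to anyone who holds its ID.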


Question 2: Identifying the test cases according to the scenarios created based on the requirements of the software

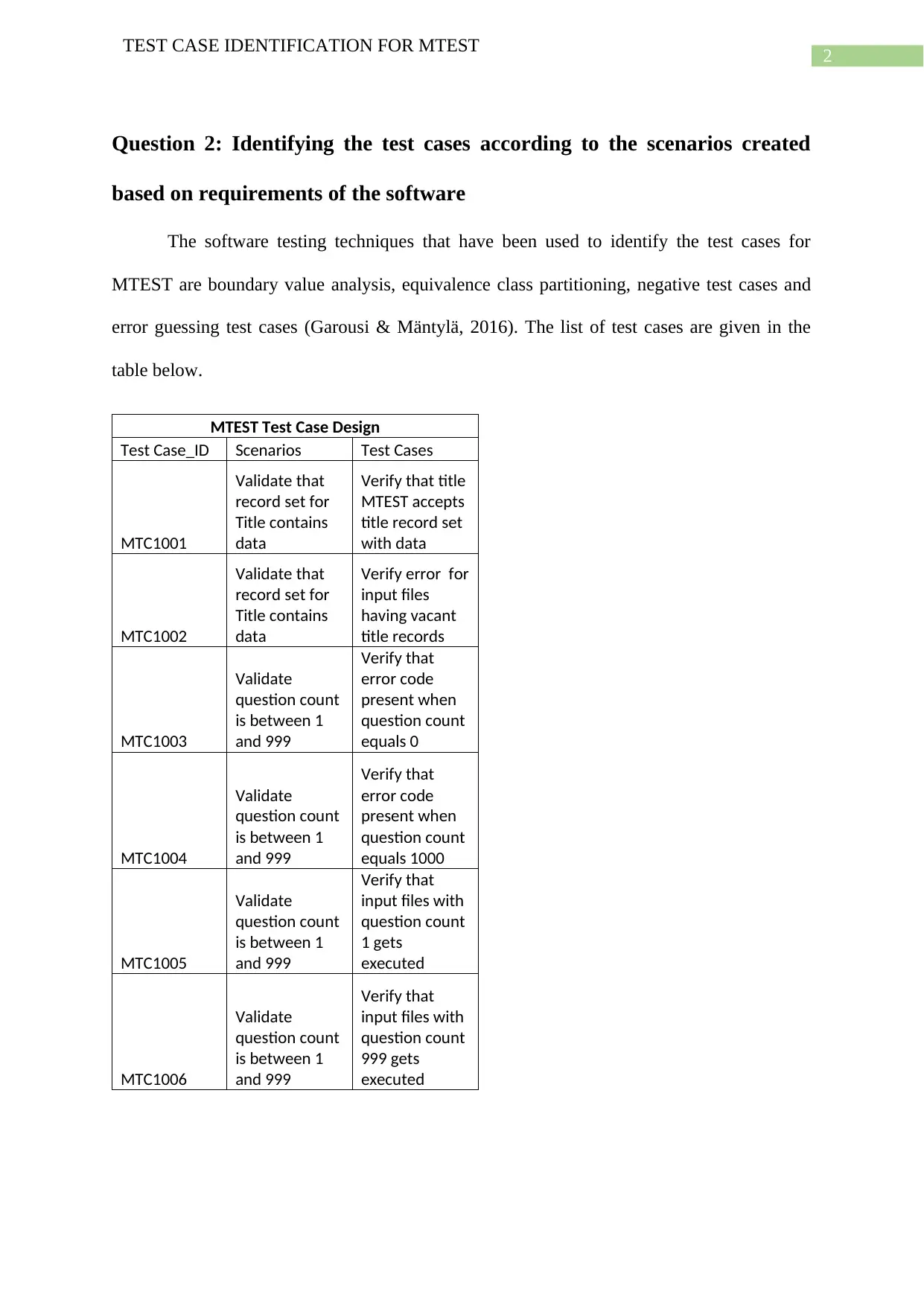

The software testing techniques used to identify the test cases for MTEST are boundary value analysis, equivalence class partitioning, negative testing and error guessing (Garousi & Mäntylä, 2016). The test cases are listed in the table below.

MTEST Test Case Design

| Test Case ID | Scenario | Test Case |
|---|---|---|
| MTC1001 | Validate that the record set for Title contains data | Verify that MTEST accepts a title record set that contains data |
| MTC1002 | Validate that the record set for Title contains data | Verify that an error is reported for input files with empty title records |
| MTC1003 | Validate that the question count is between 1 and 999 | Verify that an error code is produced when the question count equals 0 |
| MTC1004 | Validate that the question count is between 1 and 999 | Verify that an error code is produced when the question count equals 1000 |
| MTC1005 | Validate that the question count is between 1 and 999 | Verify that input files with a question count of 1 are executed |
| MTC1006 | Validate that the question count is between 1 and 999 | Verify that input files with a question count of 999 are executed |
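The title and question-count scenarios (MTC1001 to MTC1006) can be expressed as executable checks. The Python sketch below is hypothetical: MTEST's real interface and error codes are not described in this report, so `validate_title`, `validate_question_count` and `MTestInputError` are invented stand-ins, and the question count is taken as an already-parsed integer rather than read from a particular column. The cases also reflect the equivalence classes behind them: one valid class (1 to 999) and two invalid classes (below 1 and above 999).

```python
MIN_QUESTIONS = 1    # lower bound from the MTC1003-MTC1006 scenario
MAX_QUESTIONS = 999  # upper bound from the MTC1003-MTC1006 scenario


class MTestInputError(ValueError):
    """Hypothetical stand-in for an MTEST error code."""


def validate_title(title_record: str) -> str:
    # MTC1001/MTC1002: the title record set must contain data.
    title = title_record.strip()
    if not title:
        raise MTestInputError("title record is empty")
    return title


def validate_question_count(count: int) -> int:
    # MTC1003-MTC1006: valid counts lie in the closed range [1, 999].
    if not MIN_QUESTIONS <= count <= MAX_QUESTIONS:
        raise MTestInputError(f"question count {count} is outside 1..999")
    return count


def run_case(func, value) -> str:
    """Return 'executed' or 'error', matching the expected results in the table."""
    try:
        func(value)
        return "executed"
    except MTestInputError:
        return "error"


# (test case ID, function under test, input, expected result)
CASES = [
    ("MTC1001", validate_title, "MIDTERM EXAM", "executed"),
    ("MTC1002", validate_title, " " * 80, "error"),
    ("MTC1003", validate_question_count, 0, "error"),
    ("MTC1004", validate_question_count, 1000, "error"),
    ("MTC1005", validate_question_count, 1, "executed"),
    ("MTC1006", validate_question_count, 999, "executed"),
]

if __name__ == "__main__":
    for case_id, func, value, expected in CASES:
        actual = run_case(func, value)
        print(case_id, "PASS" if actual == expected else "FAIL")
```

Keeping the cases as data means a new boundary or class can be covered by adding one row rather than a new test function.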

TEST CASE IDENTIFICATION FOR MTEST

Question 2: Identifying the test cases according to the scenarios created

based on requirements of the software

The software testing techniques that have been used to identify the test cases for

MTEST are boundary value analysis, equivalence class partitioning, negative test cases and

error guessing test cases (Garousi & Mäntylä, 2016). The list of test cases are given in the

table below.

MTEST Test Case Design

Test Case_ID Scenarios Test Cases

MTC1001

Validate that

record set for

Title contains

data

Verify that title

MTEST accepts

title record set

with data

MTC1002

Validate that

record set for

Title contains

data

Verify error for

input files

having vacant

title records

MTC1003

Validate

question count

is between 1

and 999

Verify that

error code

present when

question count

equals 0

MTC1004

Validate

question count

is between 1

and 999

Verify that

error code

present when

question count

equals 1000

MTC1005

Validate

question count

is between 1

and 999

Verify that

input files with

question count

1 gets

executed

MTC1006

Validate

question count

is between 1

and 999

Verify that

input files with

question count

999 gets

executed

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

3

TEST CASE IDENTIFICATION FOR MTEST

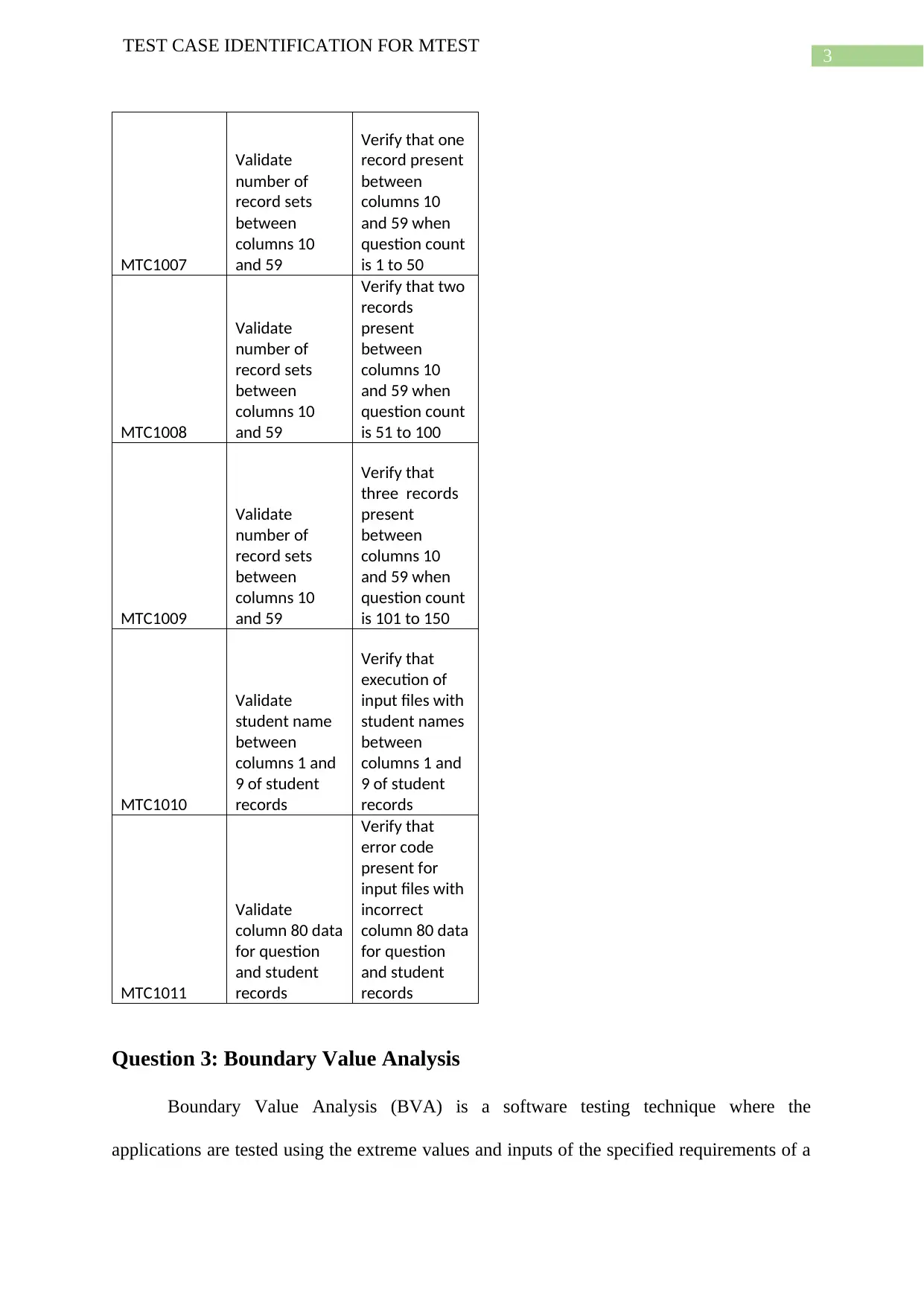

MTEST Test Case Design (continued)

| Test Case ID | Scenario | Test Case |
|---|---|---|
| MTC1007 | Validate the number of record sets between columns 10 and 59 | Verify that one record is present between columns 10 and 59 when the question count is 1 to 50 |
| MTC1008 | Validate the number of record sets between columns 10 and 59 | Verify that two records are present between columns 10 and 59 when the question count is 51 to 100 |
| MTC1009 | Validate the number of record sets between columns 10 and 59 | Verify that three records are present between columns 10 and 59 when the question count is 101 to 150 |
| MTC1010 | Validate the student name in columns 1 to 9 of student records | Verify that input files with student names in columns 1 to 9 of student records are executed |
| MTC1011 | Validate column 80 data for question and student records | Verify that an error code is produced for input files with incorrect column 80 data for question and student records |
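The record-count cases (MTC1007 to MTC1009) follow from the column layout: columns 10 to 59 span 50 columns, so if each answer occupies one column, a record holds up to 50 answers and n questions need ceil(n / 50) records. The Python sketch below assumes one answer character per column and 1-based column numbers, as in the table; `records_needed`, `field` and `student_name` are hypothetical helper names, not part of MTEST.

```python
import math

# 1-based column positions taken from the MTEST test case table.
NAME_FIRST_COL, NAME_LAST_COL = 1, 9
ANSWER_FIRST_COL, ANSWER_LAST_COL = 10, 59
ANSWERS_PER_RECORD = ANSWER_LAST_COL - ANSWER_FIRST_COL + 1  # 50 columns


def records_needed(question_count: int) -> int:
    """Records needed so that columns 10-59 can hold every answer."""
    if not 1 <= question_count <= 999:
        raise ValueError("question count must be between 1 and 999")
    return math.ceil(question_count / ANSWERS_PER_RECORD)


def field(record: str, first_col: int, last_col: int) -> str:
    """Extract 1-based inclusive columns first_col..last_col from a record."""
    return record[first_col - 1:last_col]


def student_name(record: str) -> str:
    # MTC1010: the student name occupies columns 1-9; trailing blanks are padding.
    return field(record, NAME_FIRST_COL, NAME_LAST_COL).rstrip()


if __name__ == "__main__":
    # Record counts at the edges of the ranges used in MTC1007-MTC1009.
    for n in (1, 50, 51, 100, 101, 150):
        print(n, records_needed(n))
    # An 80-column student record: name, 50 answers, then padding.
    record = "SMITH J".ljust(9) + "A" * 50 + " " * 21
    print(repr(student_name(record)), len(field(record, 10, 59)))
```

For instance, `records_needed(50)` returns 1 and `records_needed(51)` returns 2, which is why 50 and 51 are natural extra inputs for MTC1007 and MTC1008.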

Question 3: Boundary Value Analysis

Boundary Value Analysis (BVA) is a software testing technique in which an application is tested using the extreme values of the inputs allowed by the specified requirements of the

TEST CASE IDENTIFICATION FOR MTEST

MTC1007

Validate

number of

record sets

between

columns 10

and 59

Verify that one

record present

between

columns 10

and 59 when

question count

is 1 to 50

MTC1008

Validate

number of

record sets

between

columns 10

and 59

Verify that two

records

present

between

columns 10

and 59 when

question count

is 51 to 100

MTC1009

Validate

number of

record sets

between

columns 10

and 59

Verify that

three records

present

between

columns 10

and 59 when

question count

is 101 to 150

MTC1010

Validate

student name

between

columns 1 and

9 of student

records

Verify that

execution of

input files with

student names

between

columns 1 and

9 of student

records

MTC1011

Validate

column 80 data

for question

and student

records

Verify that

error code

present for

input files with

incorrect

column 80 data

for question

and student

records

Question 3: Boundary Value Analysis

Boundary Value Analysis (BVA) is a software testing technique where the

applications are tested using the extreme values and inputs of the specified requirements of a

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

4

TEST CASE IDENTIFICATION FOR MTEST

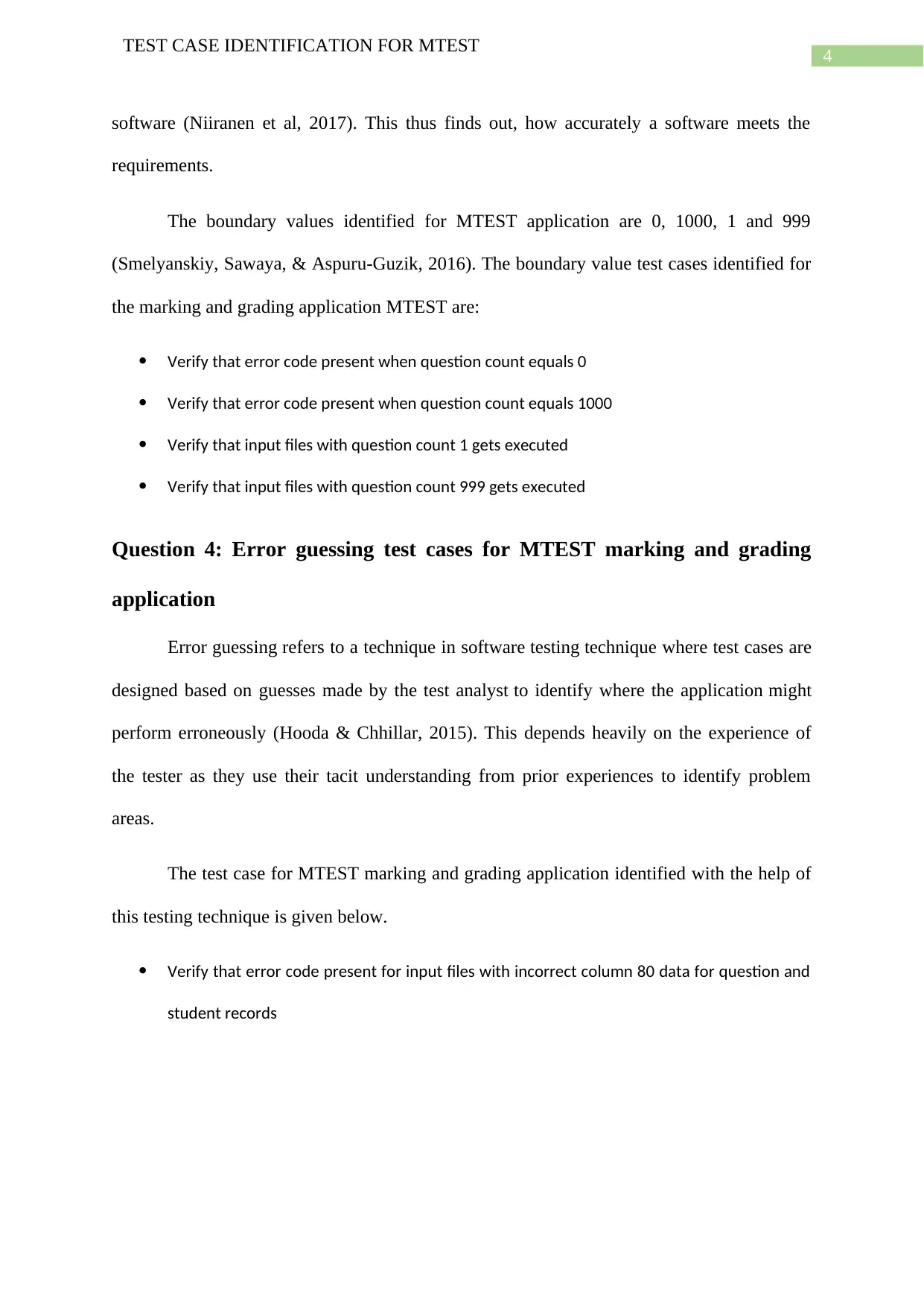

software (Niiranen et al., 2017). It thus shows how accurately the software meets its requirements at the edges of its input ranges, where off-by-one errors in range checks are most likely.

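The boundary values identified for the question count in MTEST are 0, 1, 999 and 1000 (Smelyanskiy, Sawaya, & Aspuru-Guzik, 2016): 1 and 999 are the smallest and largest valid counts, while 0 and 1000 lie just outside the valid range. The boundary value test cases identified for the marking and grading application MTEST are: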

Verify that an error code is produced when the question count equals 0

Verify that an error code is produced when the question count equals 1000

Verify that input files with a question count of 1 are executed

Verify that input files with a question count of 999 are executed
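The four boundary values follow mechanically from the valid range 1 to 999. The Python sketch below derives them for any closed integer range and checks each one against a validator; `accepts_question_count` is a hypothetical placeholder for MTEST's actual check, which this report does not describe.

```python
from typing import List, Tuple


def boundary_values(low: int, high: int) -> List[Tuple[int, bool]]:
    """Boundary values for a closed integer range [low, high].

    Returns (value, expected_valid) pairs: each bound and the value just
    outside it, i.e. the two-value form of BVA used in this section.
    """
    return [
        (low - 1, False),   # just below the range -> error expected
        (low, True),        # smallest valid value -> should execute
        (high, True),       # largest valid value -> should execute
        (high + 1, False),  # just above the range -> error expected
    ]


def accepts_question_count(count: int) -> bool:
    # Hypothetical stand-in for MTEST's question-count check.
    return 1 <= count <= 999


if __name__ == "__main__":
    for value, expected_valid in boundary_values(1, 999):
        actual = accepts_question_count(value)
        verdict = "PASS" if actual == expected_valid else "FAIL"
        expected = "executed" if expected_valid else "error code"
        print(f"question count {value}: expect {expected} -> {verdict}")
```

A three-value variant of BVA would also test the values just inside each bound (2 and 998); the two-value form above matches the four test cases listed in this section.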

Question 4: Error guessing test cases for the MTEST marking and grading application

Error guessing is a software testing technique in which test cases are designed from the test analyst's guesses about where the application might behave erroneously (Hooda & Chhillar, 2015). It depends heavily on the tester's experience, since testers draw on tacit knowledge from earlier projects to identify likely problem areas.

The test case for the MTEST marking and grading application identified using this technique is:

Verify that an error code is produced for input files with incorrect column 80 data for question and student records
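Error guessing can be made repeatable by recording the tester's guesses as data. The Python sketch below is hypothetical: this report does not say what correct column 80 data is, so it assumes one type code per record kind ('2' for question records and '3' for student records) purely for illustration, and `check_column_80` stands in for MTEST's own validation.

```python
from typing import List

RECORD_LENGTH = 80
QUESTION_CODE = "2"  # ASSUMED column-80 code for question records
STUDENT_CODE = "3"   # ASSUMED column-80 code for student records


class Column80Error(ValueError):
    """Hypothetical stand-in for the MTEST error code."""


def check_column_80(record: str, expected_code: str) -> None:
    # A record shorter than 80 characters has no column 80 at all.
    if len(record) < RECORD_LENGTH:
        raise Column80Error("record is shorter than 80 columns")
    actual = record[RECORD_LENGTH - 1]  # column 80 is index 79
    if actual != expected_code:
        raise Column80Error(f"column 80 is {actual!r}, expected {expected_code!r}")


def guessed_bad_records(expected_code: str, other_code: str) -> List[str]:
    """Inputs an experienced tester might suspect MTEST mishandles."""
    body = "X" * (RECORD_LENGTH - 1)  # filler for columns 1-79
    return [
        body + " ",         # blank column 80
        body + "9",         # unexpected digit
        body + "A",         # letter instead of a digit
        body + other_code,  # code belonging to the other record type
        body,               # record truncated to 79 columns
    ]


def is_rejected(record: str, expected_code: str) -> bool:
    try:
        check_column_80(record, expected_code)
    except Column80Error:
        return True
    return False


if __name__ == "__main__":
    for kind, code, other in (("question", QUESTION_CODE, STUDENT_CODE),
                              ("student", STUDENT_CODE, QUESTION_CODE)):
        missed = [r for r in guessed_bad_records(code, other) if not is_rejected(r, code)]
        print(kind, "records: all guesses rejected" if not missed else f"missed {missed}")
```

Each guess is a single row in `guessed_bad_records`, so further suspicions from the tester (for example, a record with trailing characters past column 80) can be added without changing the test logic.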


References

Briand, L., Nejati, S., Sabetzadeh, M., & Bianculli, D. (2016, May). Testing the untestable: Model testing of complex software-intensive systems. In Proceedings of the 38th International Conference on Software Engineering Companion (pp. 789-792). ACM.

Garousi, V., & Mäntylä, M. V. (2016). When and what to automate in software testing? A multi-vocal literature review. Information and Software Technology, 76, 92-117.

Harman, M., Jia, Y., & Zhang, Y. (2015, April). Achievements, open problems and challenges for search based software testing. In 2015 IEEE 8th International Conference on Software Testing, Verification and Validation (ICST) (pp. 1-12). IEEE.

Hooda, I., & Chhillar, R. S. (2015). Software test process, testing types and techniques. International Journal of Computer Applications, 111(13).

Niiranen, J., Kiendl, J., Niemi, A. H., & Reali, A. (2017). Isogeometric analysis for sixth-order boundary value problems of gradient-elastic Kirchhoff plates. Computer Methods in Applied Mechanics and Engineering, 316, 328-348.

Smelyanskiy, M., Sawaya, N. P., & Aspuru-Guzik, A. (2016). qHiPSTER: The quantum high performance software testing environment. arXiv preprint arXiv:1601.07195.
