TorchCraft: a Library for Machine Learning Research on Real-Time Strategy Games


Gabriel Synnaeve, Nantas Nardelli, Alex Auvolat, Soumith Chintala,

Timothée Lacroix, Zeming Lin, Florian Richoux, Nicolas Usunier

gab@fb.com, nantas@robots.ox.ac.uk

November 7, 2016

Abstract

We present TorchCraft, a library that enables deep learning research on Real-Time Strategy (RTS) games such as StarCraft: Brood War, by making it easier to control these games from a machine learning framework, here Torch [9]. This white paper argues for using RTS games as a benchmark for AI research, and describes the design and components of TorchCraft.

1 Introduction

Deep Learning techniques [13] have recently enabled researchers to successfully tackle low-level perception problems in a supervised learning fashion. In the field of Reinforcement Learning this has translated into the ability to develop agents that learn to act in high-dimensional input spaces. In particular, deep neural networks have been used to help reinforcement learning scale to environments with visual inputs, allowing agents to learn policies in testbeds that were previously completely intractable. For instance, algorithms such as Deep Q-Network (DQN) [14] have been shown to reach human-level performance on most of the classic Atari 2600 games by learning a controller directly from raw pixels, without any additional supervision besides the score. Most of the work spawned in this new area has, however, tackled environments where the state is fully observable, the reward function has no or low delay, and the action set is relatively small. To solve the great majority of real-life problems, agents must instead be able to handle partial observability, structured and complex dynamics, and noisy and high-dimensional control interfaces.

To provide the community with useful research environments, work was done towards building platforms based on video games such as Torcs [27], Mario AI [20], Unreal’s BotPrize [10], the Arcade Learning Environment [3], VizDoom [12], and Minecraft [11], all of which have allowed researchers to train deep learning models with imitation learning, reinforcement learning and various decision making algorithms on increasingly difficult problems. Recently there have also been efforts to unite those and many other such environments in one platform to provide a standard interface for interacting with them [4]. We propose a bridge between StarCraft: Brood War, an RTS game with an active AI research community and annual AI competitions [16, 6, 1], and Lua, with examples in Torch [9] (a machine learning library).


arXiv:1611.00625v2 [cs.LG] 3 Nov 2016


2 Real-Time Strategy for Games AI

Real-time strategy (RTS) games have historically been a domain of interest to the planning and decision making research communities [5, 2, 6, 16, 17]. This type of game aims to simulate the control of multiple units in a military setting at different scales and levels of complexity, usually on a fixed-size 2D map, in duels or in small teams. The goal of the player is to collect resources which can be used to expand their control of the map, create buildings and units to fight off enemy deployments, and ultimately destroy the opponents. These games exhibit durative moves (with complex game dynamics), simultaneous actions (all players can give commands to any of their units at any time), and very often partial observability (a “fog of war”: opponent units not in the vicinity of a player’s units are not shown).

RTS gameplay: The components of RTS gameplay are economy and battles (“macro” and “micro” respectively): players need to gather resources to build military units and defeat their opponents. To that end, they often have worker units (or extraction structures) that can gather the resources needed to build workers, buildings, and military units, and to research upgrades. Workers are often also builders (as in StarCraft), and are weak in fights compared to military units. Resources may be of varying degrees of abundance and importance. For instance, in StarCraft minerals are used for everything, whereas gas is only required for advanced buildings or military units, and technology upgrades. Buildings and research define technology trees (directed acyclic graphs), and each state of a “tech tree” allows for the production of different unit types and the training of new unit abilities. Each unit and building has a range of sight that provides the player with a view of the map. Parts of the map not in the sight range of the player’s units are under fog of war, and the player cannot observe what happens there. A considerable part of the strategy and the tactics lies in which armies to deploy and where.
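To make the notion of a tech tree as a directed acyclic graph concrete, here is a minimal Lua sketch. It is an illustration only and not part of TorchCraft: the table `requires` and the functions `is_available` and `available` are hypothetical names, and the tree is a heavily simplified fragment of the Terran tech tree in StarCraft.

```lua
-- Hypothetical, heavily simplified fragment of a tech tree: each entry lists
-- the buildings that must already exist before the key can be produced.
local requires = {
  ["Command Center"] = {},
  ["Barracks"]       = { "Command Center" },
  ["Factory"]        = { "Barracks" },
  ["Starport"]       = { "Factory" },
  ["Marine"]         = { "Barracks" },
  ["Vulture"]        = { "Factory" },
  ["Wraith"]         = { "Starport" },
}

-- Returns true if every prerequisite of `name` is present in the set `owned`.
local function is_available(name, owned)
  local prereqs = requires[name]
  if prereqs == nil then return false end
  for _, p in ipairs(prereqs) do
    if not owned[p] then return false end
  end
  return true
end

-- Returns a sorted list of everything the current tech state allows.
local function available(owned)
  local out = {}
  for name, _ in pairs(requires) do
    if is_available(name, owned) then
      out[#out + 1] = name
    end
  end
  table.sort(out)
  return out
end

local owned = { ["Command Center"] = true, ["Barracks"] = true }
print(table.concat(available(owned), ", "))
```

With a Command Center and a Barracks, the Factory and Marines become available while the Starport stays locked, which is the kind of constraint that forces a player to choose which tech paths to pursue.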

Military units in RTS games have multiple properties which differ between unit types, such as: attack range (including melee), damage types, armor, speed, area of effect, invisibility, flight, and special abilities. Units can have attacks and defenses that counter each other in a rock-paper-scissors fashion, making planning armies an extremely challenging and strategically rich process. An “opening” denotes the same thing as in Chess: an early game plan for which the player has to make choices. That is the case in Chess because one can move only one piece at a time (each turn), and in RTS games because, during the development phase, one is economically limited and has to choose which tech paths to pursue. Available resources constrain the technology advancement and the number of units one can produce. As producing buildings and units also takes time, the arbitrage between investing in the economy, in technological advancement, and in unit production is the crux of the strategy during the whole game.

Related work: Classical AI approaches normally involving planning and search [2, 15, 24, 7] are extremely challenged by the combinatorial action space and the complex dynamics of RTS games, making simulation (and thus Monte Carlo tree search) difficult [8, 22]. Other characteristics such as partial observability, the non-obvious quantification of the value of the state, and the problem of featurizing a dynamic and structured state contribute to making them an interesting problem, and altogether make them an excellent benchmark for AI. As the scope of this paper is not to give a review of RTS AI research, we refer the reader to these surveys of existing research on RTS and StarCraft AI [16, 17].

It is currently tedious to do machine learning research in this domain.Most previous

reinforcement learning research involve simple models or limited experimental settings [26, 23].

Other models are trained on offline datasets of highly skilled players [25, 18, 19, 21]. Contrary

to most Atari games [3], RTS games have much higher action spaces and much more structured

states.Thus, we advocate here to have not only the pixels as input and keyboard/mouse

for commands, as in [3, 4, 12], but also a structured representation of the game state, as in

2

Real-time strategy (RTS) games have historically been a domain of interest of the planning and

decision making research communities [5, 2, 6, 16, 17]. This type of games aims to simulate

the control of multiple units in a military setting at different scales and level of complexity,

usually in a fixed-size 2D map, in duel or in small teams.The goal of the player is to collect

resources which can be used to expand their control on the map, create buildings and units

to fight off enemy deployments, and ultimately destroy the opponents.These games exhibit

durative moves (with complex game dynamics) with simultaneous actions (all players can give

commands to any of their units at any time), and very often partial observability (a “fog of

war”:opponent units not in the vicinity of a player’s units are not shown).

RTS gameplay: Components RTS game play are economy and battles (“macro”and

“micro” respectively):players need to gather resources to build military units and defeat their

opponents.To that end, they often have worker units (or extraction structures) that can gather

resources needed to build workers, buildings, military units and research upgrades.Workers

are often also builders (as in StarCraft),and are weak in fights compared to military units.

Resources may be of varying degrees of abundance and importance.For instance, in StarCraft

minerals are used for everything, whereas gas is only required for advanced buildings or military

units, and technology upgrades.Buildings and research define technology trees (directed acyclic

graphs) and each state of a “tech tree” allow for the production of different unit types and the

training of new unit abilities.Each unit and building has a range of sight that provides the

player with a view of the map.Parts of the map not in the sight range of the player’s units are

under fog of war and the player cannot observe what happens there.A considerable part of the

strategy and the tactics lies in which armies to deploy and where.

Military units in RTS games have multiple properties which differ between unit types, such

as: attack range (including melee),damage types,armor,speed,area of effects,invisibility,

flight, and special abilities.Units can have attacks and defenses that counter each others in a

rock-paper-scissors fashion, making planning armies a extremely challenging and strategically

rich process.An “opening” denotes the same thing as in Chess:an early game plan for which

the player has to make choices.That is the case in Chess because one can move only one

piece at a time (each turn), and in RTS games because, during the development phase, one is

economically limited and has to choose which tech paths to pursue.Available resources constrain

the technology advancement and the number of units one can produce.As producing buildings

and units also take time,the arbitrage between investing in the economy,in technological

advancement, and in units production is the crux of the strategy during the whole game.

Related work: Classical AI approaches normally involving planning and search [2, 15,

24, 7] are extremely challenged by the combinatorial action space and the complex dynamics

of RTS games, making simulation (and thus Monte Carlo tree search) difficult [8, 22]. Other

characteristics such as partial observability, the non-obvious quantification of the value of the

state, and the problem of featurizing a dynamic and structured state contribute to making them

an interesting problem, which altogether ultimately also make them an excellent benchmark for

AI. As the scope of this paper is not to give a review of RTS AI research, we refer the reader to

these surveys about existing research on RTS and StarCraft AI [16, 17].

It is currently tedious to do machine learning research in this domain.Most previous

reinforcement learning research involve simple models or limited experimental settings [26, 23].

Other models are trained on offline datasets of highly skilled players [25, 18, 19, 21]. Contrary

to most Atari games [3], RTS games have much higher action spaces and much more structured

states.Thus, we advocate here to have not only the pixels as input and keyboard/mouse

for commands, as in [3, 4, 12], but also a structured representation of the game state, as in

2

-- main game engine loop:

while true do

game.receive_player_actions()

game.compute_dynamics()

-- our injected code:

torchcraft.send_state()

torchcraft.receive_actions()

end

featurize, model = init()

tc = require ’torchcraft’

tc:connect(port)

while not tc.state.game_ended do

tc:receive()

features = featurize(tc.state)

actions = model:forward(features)

tc:send(tc:tocommand(actions))

end

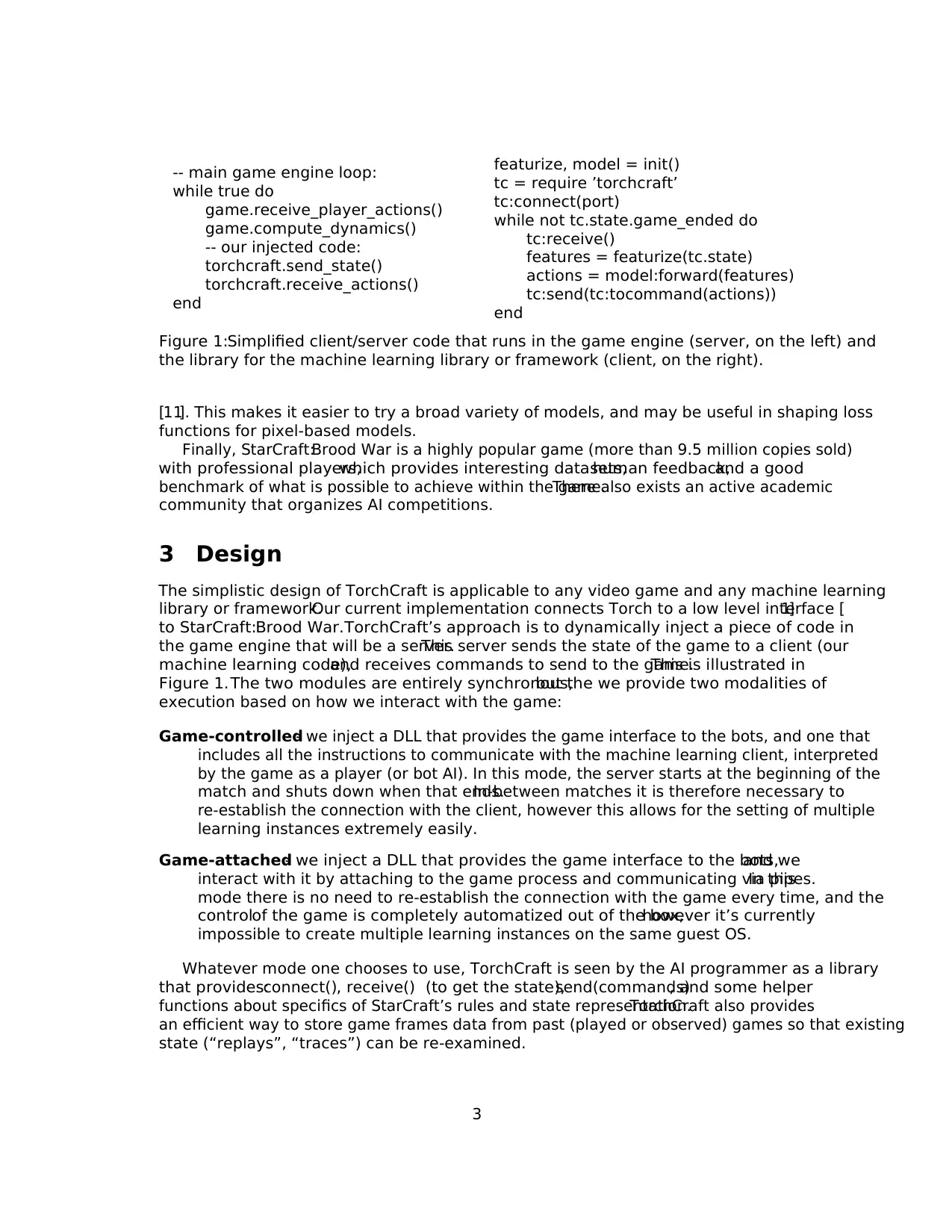

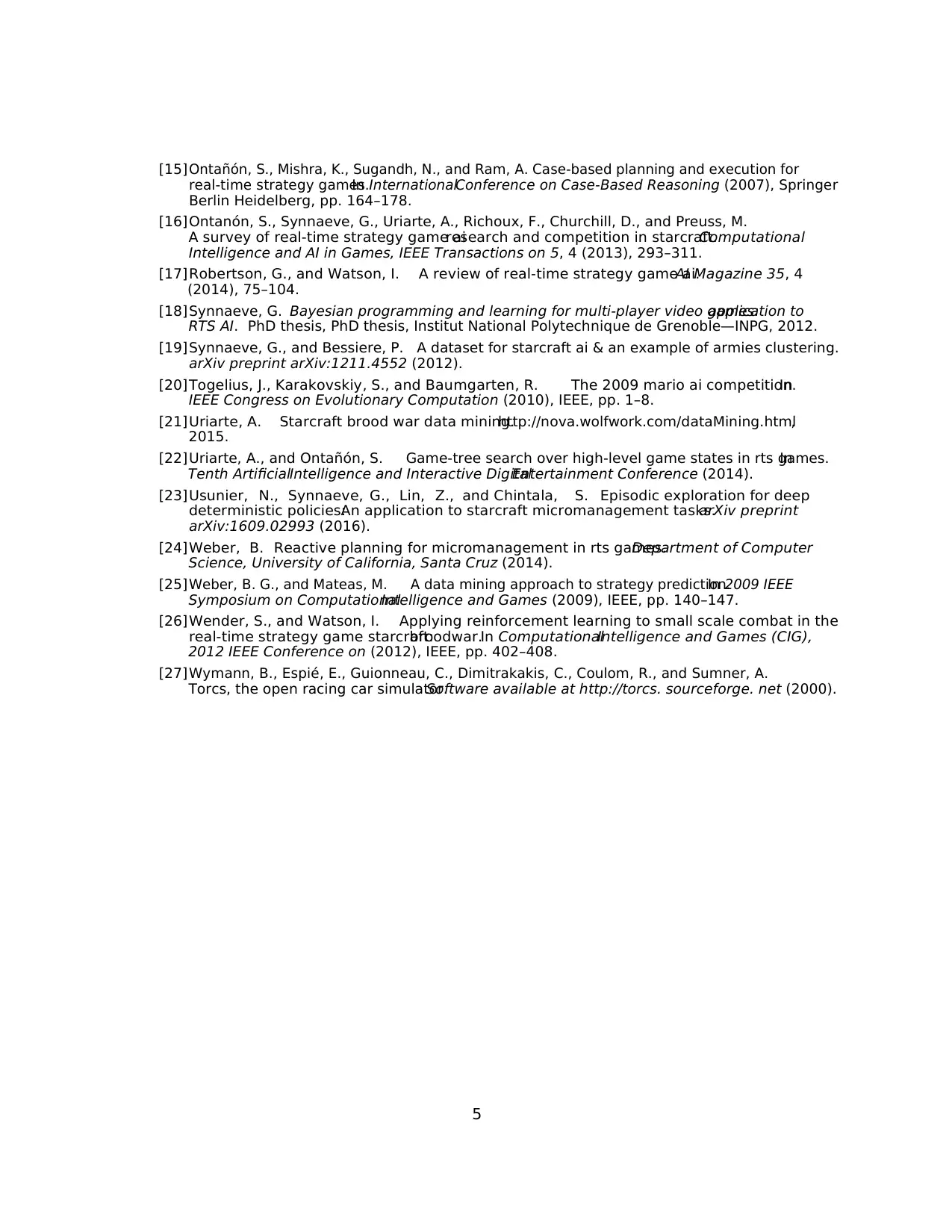

Figure 1:Simplified client/server code that runs in the game engine (server, on the left) and

the library for the machine learning library or framework (client, on the right).

[11]. This makes it easier to try a broad variety of models, and may be useful in shaping loss

functions for pixel-based models.

Finally, StarCraft: Brood War is a highly popular game (more than 9.5 million copies sold) with professional players, which provides interesting datasets, human feedback, and a good benchmark of what is possible to achieve within the game. There also exists an active academic community that organizes AI competitions.

3 Design

The simple design of TorchCraft is applicable to any video game and any machine learning library or framework. Our current implementation connects Torch to a low-level interface [1] to StarCraft: Brood War. TorchCraft’s approach is to dynamically inject a piece of code into the game engine that acts as a server. This server sends the state of the game to a client (our machine learning code), and receives commands to send to the game. This is illustrated in Figure 1. The two modules are entirely synchronous, but we provide two modalities of execution based on how we interact with the game:

Game-controlled: we inject a DLL that provides the game interface to the bots, and one that includes all the instructions to communicate with the machine learning client, interpreted by the game as a player (or bot AI). In this mode, the server starts at the beginning of the match and shuts down when the match ends. Between matches it is therefore necessary to re-establish the connection with the client; however, this makes it very easy to set up multiple learning instances.

Game-attached: we inject a DLL that provides the game interface to the bots, and we interact with it by attaching to the game process and communicating via pipes. In this mode there is no need to re-establish the connection with the game every time, and the control of the game is completely automated out of the box; however, it is currently impossible to create multiple learning instances on the same guest OS.

Whatever mode one chooses to use, TorchCraft is seen by the AI programmer as a library that provides: connect(), receive() (to get the state), send(commands), and some helper functions about specifics of StarCraft’s rules and state representation. TorchCraft also provides an efficient way to store game frame data from past (played or observed) games so that existing state (“replays”, “traces”) can be re-examined.
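The connect/receive/send cycle can be exercised without a running game by substituting a mock for the library. The following Lua sketch does that under stated assumptions: `make_mock_tc`, `weakest_enemy`, `act`, the scripted frames, and the command format `{ unit = ..., attack = ... }` are all hypothetical and only mimic the interface named above; the field names `game_ended`, `units_myself`, `units_enemy` and `hp` are taken from Figure 1 and Appendix A. With the real library, the mock would be replaced by `require 'torchcraft'`.

```lua
-- Mock standing in for the real library (hypothetical): it replays a scripted
-- list of frames instead of talking to a running game.
local function make_mock_tc(frames)
  local tc = { state = { game_ended = false }, sent = {}, _i = 0 }
  function tc:connect(port) self.port = port end
  function tc:receive()
    self._i = self._i + 1
    local frame = frames[self._i]
    if frame == nil then
      self.state = { game_ended = true }   -- script exhausted: game is over
    else
      frame.game_ended = false
      self.state = frame
    end
  end
  function tc:send(commands) self.sent[#self.sent + 1] = commands end
  return tc
end

-- Toy policy helper: ID of the enemy with the fewest hit points
-- (ties broken by the lowest unit ID so the result is deterministic).
local function weakest_enemy(state)
  local best_id, best_hp
  for id, unit in pairs(state.units_enemy) do
    if best_hp == nil or unit.hp < best_hp
       or (unit.hp == best_hp and id < best_id) then
      best_id, best_hp = id, unit.hp
    end
  end
  return best_id
end

-- Every unit of ours is ordered to attack the weakest enemy.
local function act(state)
  local target = weakest_enemy(state)
  local commands = {}
  if target ~= nil then
    for id, _ in pairs(state.units_myself) do
      commands[#commands + 1] = { unit = id, attack = target } -- made-up format
    end
  end
  return commands
end

local tc = make_mock_tc({
  { units_myself = { [1] = { hp = 40 } },
    units_enemy  = { [7] = { hp = 35 }, [8] = { hp = 12 } } },
  { units_myself = { [1] = { hp = 34 } },
    units_enemy  = { [7] = { hp = 35 } } },
})
tc:connect(11111)  -- the port number is arbitrary for the mock
tc:receive()
while not tc.state.game_ended do
  tc:send(act(tc.state))
  tc:receive()
end
print(#tc.sent)
```

The loop here receives once before testing `game_ended`, so that the policy never runs on a terminal state; this is a choice of the sketch, not a statement about how the library behaves.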


4 Conclusion

We presented several works that established RTS games as a source of interesting and relevant problems for the AI research community to work on. We believe that an efficient bridge between existing low-level APIs and machine learning frameworks/libraries would enable and foster research on such games. We presented TorchCraft: a library that enables state-of-the-art machine learning research on real game data by interfacing Torch with StarCraft: Brood War. TorchCraft has already been used in reinforcement learning experiments on StarCraft, which led to the results in [23] (soon to be open-sourced too and included within TorchCraft).

5 Acknowledgements

We would like to thank Yann LeCun, Léon Bottou, Pushmeet Kohli, Subramanian Ramamoorthy, and Phil Torr for the continuous feedback and help with various aspects of this work. Many thanks to David Churchill for proofreading early versions of this paper.

References

[1] BWAPI: Brood war api, an api for interacting with starcraft: Broodwar (1.16.1). https://bwapi.github.io/, 2009–2015.

[2] Aha, D. W., Molineaux, M., and Ponsen, M. Learning to win: Case-based plan selection in a real-time strategy game. In International Conference on Case-Based Reasoning (2005), Springer, pp. 5–20.

[3] Bellemare, M. G., Naddaf, Y., Veness, J., and Bowling, M. The arcade learning environment: An evaluation platform for general agents. Journal of Artificial Intelligence Research (2012).

[4] Brockman, G., Cheung, V., Pettersson, L., Schneider, J., Schulman, J., Tang, J., and Zaremba, W. Openai gym. arXiv preprint arXiv:1606.01540 (2016).

[5] Buro, M., and Furtak, T. Rts games and real-time ai research. In Proceedings of the Behavior Representation in Modeling and Simulation Conference (BRIMS) (2004), vol. 6370.

[6] Churchill, D. Starcraft ai competition. http://www.cs.mun.ca/~dchurchill/starcraftaicomp/, 2011–2016.

[7] Churchill, D. Heuristic Search Techniques for Real-Time Strategy Games. PhD thesis, University of Alberta, 2016.

[8] Churchill, D., Saffidine, A., and Buro, M. Fast heuristic search for rts game combat scenarios. In AIIDE (2012).

[9] Collobert, R., Kavukcuoglu, K., and Farabet, C. Torch7: A matlab-like environment for machine learning. In BigLearn, NIPS Workshop (2011), no. EPFL-CONF-192376.

[10] Hingston, P. A turing test for computer game bots. IEEE Transactions on Computational Intelligence and AI in Games 1, 3 (2009), 169–186.

[11] Johnson, M., Hofmann, K., Hutton, T., and Bignell, D. The malmo platform for artificial intelligence experimentation. In International joint conference on artificial intelligence (IJCAI) (2016).

[12] Kempka, M., Wydmuch, M., Runc, G., Toczek, J., and Jaśkowski, W. Vizdoom: A doom-based ai research platform for visual reinforcement learning. arXiv preprint arXiv:1605.02097 (2016).

[13] LeCun, Y., Bengio, Y., and Hinton, G. Deep learning. Nature 521, 7553 (2015), 436–444.

[14] Mnih, V., Kavukcuoglu, K., Silver, D., Rusu, A. A., Veness, J., Bellemare, M. G., Graves, A., Riedmiller, M., Fidjeland, A. K., Ostrovski, G., et al. Human-level control through deep reinforcement learning. Nature 518, 7540 (2015), 529–533.


[15] Ontañón, S., Mishra, K., Sugandh, N., and Ram, A. Case-based planning and execution for real-time strategy games. In International Conference on Case-Based Reasoning (2007), Springer Berlin Heidelberg, pp. 164–178.

[16] Ontañón, S., Synnaeve, G., Uriarte, A., Richoux, F., Churchill, D., and Preuss, M. A survey of real-time strategy game ai research and competition in starcraft. Computational Intelligence and AI in Games, IEEE Transactions on 5, 4 (2013), 293–311.

[17] Robertson, G., and Watson, I. A review of real-time strategy game ai. AI Magazine 35, 4 (2014), 75–104.

[18] Synnaeve, G. Bayesian programming and learning for multi-player video games: application to RTS AI. PhD thesis, Institut National Polytechnique de Grenoble (INPG), 2012.

[19] Synnaeve, G., and Bessiere, P. A dataset for starcraft ai & an example of armies clustering. arXiv preprint arXiv:1211.4552 (2012).

[20] Togelius, J., Karakovskiy, S., and Baumgarten, R. The 2009 mario ai competition. In IEEE Congress on Evolutionary Computation (2010), IEEE, pp. 1–8.

[21] Uriarte, A. Starcraft brood war data mining. http://nova.wolfwork.com/dataMining.html, 2015.

[22] Uriarte, A., and Ontañón, S. Game-tree search over high-level game states in rts games. In Tenth Artificial Intelligence and Interactive Digital Entertainment Conference (2014).

[23] Usunier, N., Synnaeve, G., Lin, Z., and Chintala, S. Episodic exploration for deep deterministic policies: An application to starcraft micromanagement tasks. arXiv preprint arXiv:1609.02993 (2016).

[24] Weber, B. Reactive planning for micromanagement in rts games. Department of Computer Science, University of California, Santa Cruz (2014).

[25] Weber, B. G., and Mateas, M. A data mining approach to strategy prediction. In 2009 IEEE Symposium on Computational Intelligence and Games (2009), IEEE, pp. 140–147.

[26] Wender, S., and Watson, I. Applying reinforcement learning to small scale combat in the real-time strategy game starcraft: broodwar. In Computational Intelligence and Games (CIG), 2012 IEEE Conference on (2012), IEEE, pp. 402–408.

[27] Wymann, B., Espié, E., Guionneau, C., Dimitrakakis, C., Coulom, R., and Sumner, A. Torcs, the open racing car simulator. Software available at http://torcs.sourceforge.net (2000).

5

real-time strategy games.In InternationalConference on Case-Based Reasoning (2007), Springer

Berlin Heidelberg, pp. 164–178.

[16]Ontanón, S., Synnaeve, G., Uriarte, A., Richoux, F., Churchill, D., and Preuss, M.

A survey of real-time strategy game airesearch and competition in starcraft.Computational

Intelligence and AI in Games, IEEE Transactions on 5, 4 (2013), 293–311.

[17] Robertson, G., and Watson, I. A review of real-time strategy game ai.AI Magazine 35, 4

(2014), 75–104.

[18]Synnaeve, G. Bayesian programming and learning for multi-player video games:application to

RTS AI. PhD thesis, PhD thesis, Institut National Polytechnique de Grenoble—INPG, 2012.

[19]Synnaeve, G., and Bessiere, P. A dataset for starcraft ai & an example of armies clustering.

arXiv preprint arXiv:1211.4552 (2012).

[20] Togelius, J., Karakovskiy, S., and Baumgarten, R. The 2009 mario ai competition.In

IEEE Congress on Evolutionary Computation (2010), IEEE, pp. 1–8.

[21] Uriarte, A. Starcraft brood war data mining.http://nova.wolfwork.com/dataMining.html,

2015.

[22]Uriarte, A., and Ontañón, S. Game-tree search over high-level game states in rts games.In

Tenth ArtificialIntelligence and Interactive DigitalEntertainment Conference (2014).

[23] Usunier, N., Synnaeve, G., Lin, Z., and Chintala, S. Episodic exploration for deep

deterministic policies:An application to starcraft micromanagement tasks.arXiv preprint

arXiv:1609.02993 (2016).

[24] Weber, B. Reactive planning for micromanagement in rts games.Department of Computer

Science, University of California, Santa Cruz (2014).

[25] Weber, B. G., and Mateas, M. A data mining approach to strategy prediction.In 2009 IEEE

Symposium on ComputationalIntelligence and Games (2009), IEEE, pp. 140–147.

[26] Wender, S., and Watson, I. Applying reinforcement learning to small scale combat in the

real-time strategy game starcraft:broodwar.In ComputationalIntelligence and Games (CIG),

2012 IEEE Conference on (2012), IEEE, pp. 402–408.

[27] Wymann, B., Espié, E., Guionneau, C., Dimitrakakis, C., Coulom, R., and Sumner, A.

Torcs, the open racing car simulator.Software available at http://torcs. sourceforge. net (2000).

5

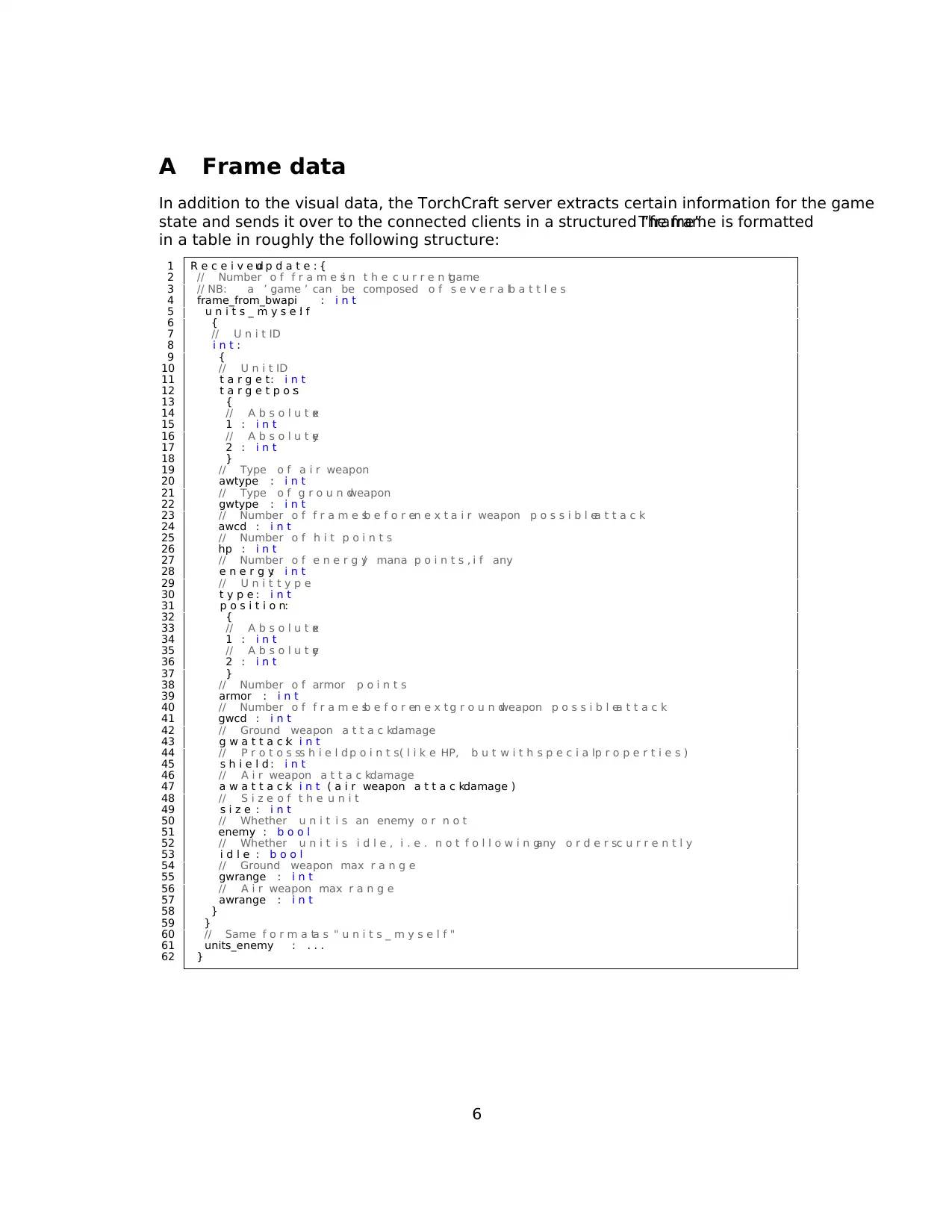

A Frame data

In addition to the visual data, the TorchCraft server extracts certain information about the game state and sends it over to the connected clients in a structured “frame”. The frame is formatted as a table with roughly the following structure:

    Received update: {
      // Number of frames in the current game
      // NB: a 'game' can be composed of several battles
      frame_from_bwapi: int
      units_myself:
      {
        // Unit ID
        int:
        {
          // Unit ID
          target: int
          targetpos:
          {
            // Absolute x
            1: int
            // Absolute y
            2: int
          }
          // Type of air weapon
          awtype: int
          // Type of ground weapon
          gwtype: int
          // Number of frames before next air weapon possible attack
          awcd: int
          // Number of hit points
          hp: int
          // Number of energy/mana points, if any
          energy: int
          // Unit type
          type: int
          position:
          {
            // Absolute x
            1: int
            // Absolute y
            2: int
          }
          // Number of armor points
          armor: int
          // Number of frames before next ground weapon possible attack
          gwcd: int
          // Ground weapon attack damage
          gwattack: int
          // Protoss shield points (like HP, but with special properties)
          shield: int
          // Air weapon attack damage
          awattack: int
          // Size of the unit
          size: int
          // Whether unit is an enemy or not
          enemy: bool
          // Whether unit is idle, i.e. not following any orders currently
          idle: bool
          // Ground weapon max range
          gwrange: int
          // Air weapon max range
          awrange: int
        }
      }
      // Same format as "units_myself"
      units_enemy: ...
    }
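To illustrate how a client might consume such a frame, here is a minimal sketch in Python. It is not part of TorchCraft, which delivers the frame as a table on the Torch side. A nested dictionary stands in for that table, with positions keyed by 1 and 2 as in the listing. The helpers `make_unit`, `distance` and `pick_targets` are hypothetical names, all values are made up, and only a subset of the fields is filled in. The sketch also assumes that `position` and `gwrange` are expressed in the same distance unit, which the listing does not state.

```python
import math

def make_unit(x, y, hp, gwcd=0, gwrange=4, enemy=False):
    """Build a unit entry with a subset of the fields from the frame listing."""
    return {"position": {1: x, 2: y}, "hp": hp, "shield": 0,
            "gwcd": gwcd, "gwrange": gwrange, "enemy": enemy}

# Hypothetical frame: unit IDs map to unit entries, as in the listing.
frame = {
    "frame_from_bwapi": 120,
    "units_myself": {1: make_unit(10, 10, 40),
                     2: make_unit(12, 10, 40, gwcd=5)},
    "units_enemy": {7: make_unit(13, 10, 35, enemy=True),
                    8: make_unit(11, 12, 20, enemy=True),
                    9: make_unit(30, 30, 5, enemy=True)},
}

def distance(a, b):
    """Euclidean distance between two units, from their absolute positions."""
    dx = a["position"][1] - b["position"][1]
    dy = a["position"][2] - b["position"][2]
    return math.hypot(dx, dy)

def pick_targets(frame):
    """Map each friendly unit whose ground weapon is ready to the ID of the
    enemy in ground weapon range with the fewest hit points plus shield."""
    targets = {}
    for uid, unit in frame["units_myself"].items():
        if unit["gwcd"] > 0:  # ground weapon still cooling down
            continue
        in_range = [(e["hp"] + e["shield"], eid)
                    for eid, e in frame["units_enemy"].items()
                    if distance(unit, e) <= unit["gwrange"]]
        if in_range:
            targets[uid] = min(in_range)[1]
    return targets

targets = pick_targets(frame)
```

With this made-up frame, unit 1 is assigned enemy 8, the weakest enemy within its range, while unit 2 is skipped because its `gwcd` is still positive. Enemy 9 has the fewest hit points overall but is out of range, so it is never selected.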

