Unmanned Aircraft Vehicle (UAV) Pilot Identification PhD Thesis


AI Summary

This PhD thesis by Ahmed Saeed Al Shemeili, submitted to Khalifa University in February 2019, investigates the identification of Unmanned Aerial Vehicle (UAV) pilots using a novel machine learning approach. The research applies logistic regression, random forest, and neural network models, leveraging radio-controlled (RC) measurement features to detect rogue drones. The study finds that the machine learning approach performs effectively at higher altitudes, with detection accuracy decreasing at lower altitudes. The thesis includes an analysis of the random forest algorithm to identify key features for pilot identification, potentially enhancing feature selection and the development of artificial intelligence applications. The document covers the technological framework of UAVs, including navigation, control, and communication, and reviews related works in these areas. The research methodology, including data collection and tools used, is detailed, along with preliminary results and conclusions. The thesis also includes an acknowledgment section, a declaration of originality, and a table of contents, figures, and tables. The project's aim is to propose a novel machine learning approach to determine rogue UAVs in space based on radio waves sent by User Equipment (UEs) to Base Stations (BSs).

Running head: UAV PILOT IDENTIFICATION


Unmanned Aircraft Vehicle (UAV) Pilot Identification Using Machine Learning

Ahmed Saeed Al Shemeili

PhD Thesis

February 2019

A thesis submitted to Khalifa University of Science and Technology in accordance with the requirements of the degree of PhD in Engineering in the Department of Electrical and Computer Engineering.

Unmanned Aircraft Vehicle (UAV) Pilot Identification Using Machine Learning

by

Ahmed Saeed Al Shemeili

A thesis submitted in partial fulfillment of the

requirements for the degree of

PhD in Engineering

at

Khalifa University

Thesis Committee

Dr. Abdulhadi Shoufan (Supervisor), Khalifa University

Prof. Ernesto Damiani (Co-Supervisor), Khalifa University

Dr. VWY (Industrial Supervisor), EBTIC

Prof. XYZ (External Examiner & Committee Chair), University of TTT

Dr. MNV (Internal Examiner), Khalifa University

February 2019

Abstract

This thesis focuses on the identification of Unmanned Aerial Vehicles (UAVs) using a novel machine learning approach. Three classification models, namely logistic regression, random forest, and a neural network, are applied to radio-controlled (RC) measurement features to detect rogue drones. The findings suggest that the proposed approach performs effectively at high altitudes, whereas detection degrades as altitude decreases.

Index Terms: UAV, machine learning, random forest, ensemble, bagging, MATLAB.

Acknowledgement

Undertaking this PhD has been a major change in my professional life, as I shifted my career from networking to cyber security and artificial intelligence. This was possible thanks to the support I received from many people and from Khalifa University.

I would first like to thank my supervisor, Dr. Abdulhadi Shoufan, for all his support and for the continuous feedback that helped me throughout my PhD study.

Many thanks to Prof. Ernesto Damiani for his support and guidance in ensuring that my PhD study is successful and of benefit to our society.

I gratefully acknowledge the funding received towards my PhD from Khalifa University. Thanks to Prof. Mahmoud Al Qutairi for supporting my study at Khalifa University. Thanks to my father, mother, brothers, and sisters.

Thank you to my wife and kids.

Declaration and Copyright

Declaration

I declare that the work in this thesis was carried out in accordance with the regulations

of Khalifa University of Science and Technology. The work is entirely my own except where

indicated by special reference in the text. Any views expressed in the thesis are those of the

author and in no way represent those of Khalifa University of Science and Technology. No

part of the thesis has been presented to any other university for any degree.

Author Name: Ahmed Saeed Al Shemeili

Author Signature:

Date: 24-02-2019

Copyright ©

No part of this thesis may be reproduced, stored in a retrieval system, or transmitted,

in any form or by any means, electronic, mechanical, photocopying, recording, scanning or

otherwise, without prior written permission of the author. The thesis may be made available

for consultation in Khalifa University of Science and Technology Library and for inter-

library lending for use in another library and may be copied in full or in part for any bona fide

library or research worker, on the understanding that users are made aware of their

obligations under copyright, i.e. that no quotation and no information derived from it may be

published without the author's prior consent.

Table of Contents

Abstract

Acknowledgement

Declaration and Copyright

Declaration

Copyright ©

List of Figures

List of tables

List of Abbreviations

CHAPTER 1

1.0 Introduction

The Aim of the Project

Project Objective

CHAPTER 2

Related Works

Fundamental technological framework of UAV

Drones and cyber security

CHAPTER 3

3.0 Research Methodology

Techniques to be used

Data Collection

Tools and Applications

CHAPTER 4

Preliminary Results

Five Pilots Dataset

CHAPTER 5

Conclusion

Moving Forward

Project Time Plan

List of Figures

Figure 1: Navigation system architecture

Figure 2: UAV directions

Figure 3: General architecture of a drone including the ground control station

Figure 4: UAV detection

Figure 5: Cross validation

Figure 6: Performance of all classifiers on the same dataset

Figure 7: Features test accuracy with different crosses starting from 50% up to 98%

Figure 8: Single features output compared to all features output accuracy

Figure 9: All Data Vs. RC Data Classification Performance

Figure 10: Pilot performance

Figure 11: Project timeline plan

List of tables

Table 1: Pilots data

Table 2: Different classifiers accuracy test

Table 3: Cross validation test

Table 4: Single feature test

Table 5: Effectiveness order of the features

Table 6: All data vs RC data performance

Table 7: Pilot performance

Table 8: Features accuracy test

List of Abbreviations

GCS: Ground Control Station

GPS: Global Positioning System

IMU: Inertial Measurement Unit

IVDR: In-Vehicle Data Recorders

SDN: Software Defined Network

RC: Remote Control

UAV: Unmanned Aircraft Vehicle

UE: User Equipment

SIM: Subscriber Identity Module

BSs: Base Stations

CHAPTER 1

1.0 Introduction

A conspicuous proliferation of drones, or Unmanned Aerial Vehicles (UAVs), has been observed in recent years. Besides commercial applications such as precision agriculture, where drones survey farms during crop spraying and pest control, they are also used for military strikes, scientific research, journalism, environmental protection, and border security, among other uses. Despite these potential benefits, there is concern that UAVs are causing many problems, in particular risks to privacy and safety.

Reports of drones violating public privacy and the security of critical facilities, including airports and nuclear plants, have become common in recent times [1]. As reported by [2], a UAV was intentionally crashed into a nuclear power plant in France in 2018. Moreover, the Federal Aviation Administration reports more than 250 safety incidents involving UAVs [3]. Most of these events arise from rogue drones that violate fly-zone restrictions. Additionally, terror groups have exploited UAVs for smuggling explosive devices and chemicals. According to [4], two UAVs carrying explosives were detonated near the Venezuelan president at an outdoor event. This is a clear indication that there is an urgent need to protect national airspace from such unusual threats. Doing so requires accurately identifying rogue drones, which artificial intelligence can help achieve.

Various techniques have been proposed to mitigate these unconventional problems, with little success. Conventional radar-based approaches, which are widely used to identify airplanes, mostly fail to detect drones. Similarly, sound- and video-based detection techniques have been tried without success, since they are suitable only for short ranges. Some of these challenges can be addressed by a machine learning approach. In addition, part of the analysis examines every step the random forest takes in deciding which features identify the pilot. Going through each tree and seeing how each node or leaf is selected will enable us to identify possible enhancements to feature selection. Doing so offers a chance to discover common behavior between the trees, which in turn might make it easier to

eliminate part of the selection process. This analysis is applicable to any dataset (not only our UAV test), which will help in the development and enhancement of many artificial intelligence applications. This document seeks to propose a novel machine learning approach to determine rogue UAVs in space based on radio waves sent by User Equipment (UEs) to Base Stations (BSs).
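The idea of going through each tree and recording which feature it splits on can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in this work: it uses bagged one-level trees (decision stumps) instead of a full random forest, a synthetic two-class dataset, and hypothetical feature names (`throttle`, `yaw`, `noise`) that are not taken from the thesis dataset.

```python
import random
from collections import Counter

FEATURES = ["throttle", "yaw", "noise"]  # hypothetical feature names


def make_dataset(n, rng):
    """Synthetic data: the label depends only on 'throttle' (an assumption)."""
    rows = []
    for _ in range(n):
        x = [rng.random() for _ in FEATURES]
        label = 1 if x[0] > 0.5 else 0
        rows.append((x, label))
    return rows


def gini(labels):
    """Gini impurity of a list of 0/1 labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2.0 * p * (1.0 - p)


def best_split(rows, candidate_features):
    """Return (feature_index, threshold) minimizing the weighted Gini impurity."""
    best_feature, best_threshold, best_score = None, None, float("inf")
    for f in candidate_features:
        for x, _ in rows:
            t = x[f]
            left = [y for xi, y in rows if xi[f] <= t]
            right = [y for xi, y in rows if xi[f] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(rows)
            if score < best_score:
                best_feature, best_threshold, best_score = f, t, score
    return best_feature, best_threshold


def root_feature_counts(rows, n_trees, rng):
    """Train n_trees stumps on bootstrap samples and tally each root feature."""
    counts = Counter()
    for _ in range(n_trees):
        sample = [rng.choice(rows) for _ in rows]          # bagging
        candidates = rng.sample(range(len(FEATURES)), 2)   # random feature subset
        feature, _ = best_split(sample, candidates)
        counts[FEATURES[feature]] += 1
    return counts


rng = random.Random(0)
data = make_dataset(60, rng)
counts = root_feature_counts(data, n_trees=30, rng=rng)
print(counts.most_common())
```

In this synthetic setup the only informative feature should dominate the tally, which is the kind of common behavior between trees that the analysis looks for. A full random forest would repeat the same tally at every node of every tree, not only at the root.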

The Aim of the Project

UAVs are normally flown at high altitudes, where interfering signals may be strong if not managed properly [5]. It is therefore essential to establish whether a drone is legitimate, and mobile networks can be used for this purpose. For legitimate UAVs, a standard mechanism such as Subscriber Identity Module (SIM) cards can be enforced so that the network can identify them. It is far more challenging to detect drones that are not legitimate, i.e. those not registered with any network. This case has drawn much attention because flying an unregistered UAV may cause excessive interference to networks and is prohibited by policy in certain regions. It may also lead to security and privacy issues such as the drone-aided terror attacks and privacy breaches mentioned earlier [20], [21]. As a result, it is important to detect and identify these non-legitimate drones. The aim of this thesis is to propose a novel machine learning approach to determine rogue UAVs in space based on radio waves sent by UEs to BSs.

Project Objective

Our primary objective is to develop a robust and highly integrated system capable of detecting and identifying UAVs using a machine learning approach.

CHAPTER 2

Related Works

Fundamental technological framework of UAV

Machine learning strategies for detecting and identifying UAVs can be applied without knowing the technological framework of the drones. This framework is classified under three subheadings: navigation, control, and communication. This section presents related work for each of them.

Navigation

Drone navigation is the process by which a drone plans how to arrive safely at its target destination. Navigation mostly depends on the current

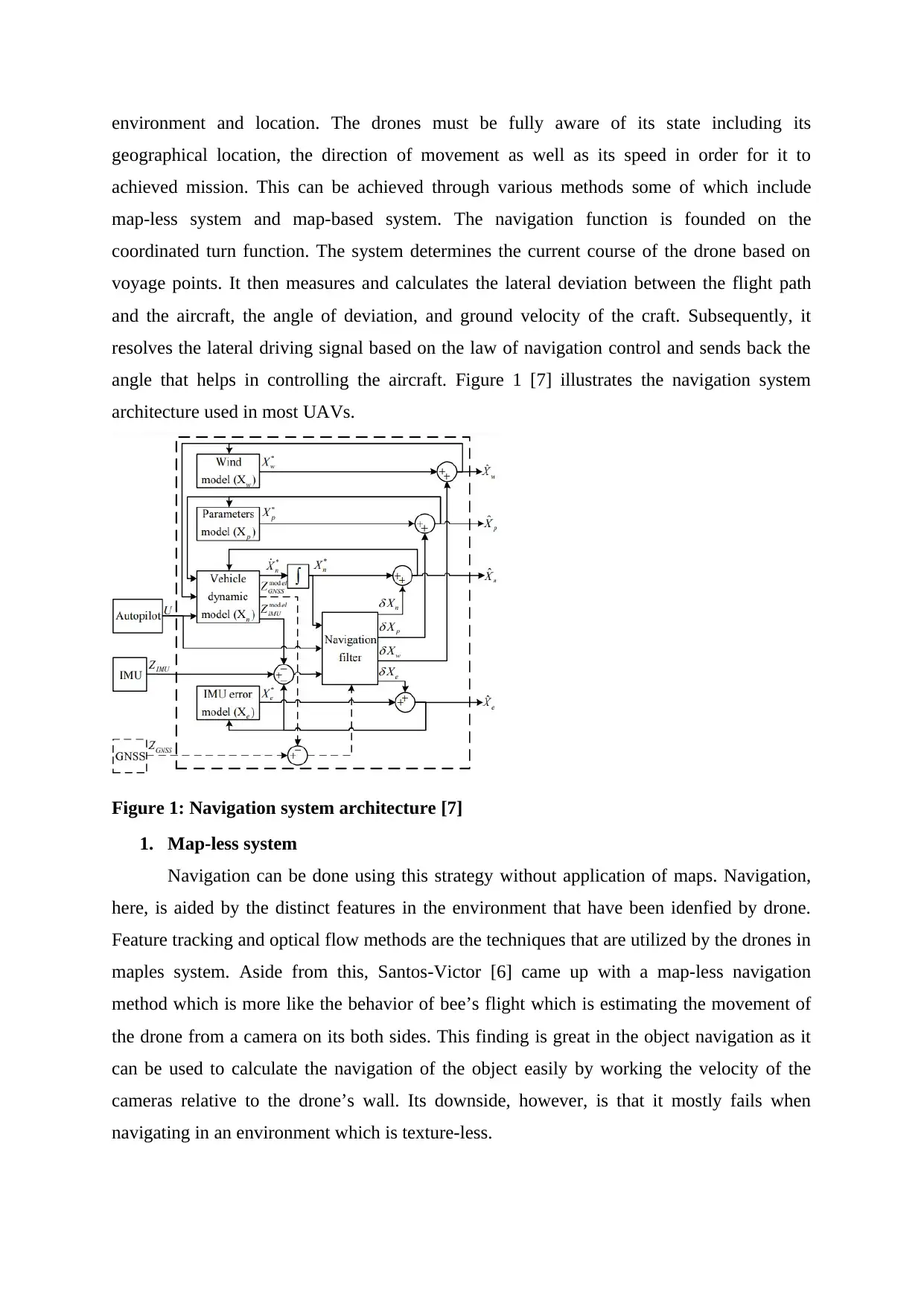

environment and location. The drones must be fully aware of its state including its

geographical location, the direction of movement as well as its speed in order for it to

achieved mission. This can be achieved through various methods some of which include

map-less system and map-based system. The navigation function is founded on the

coordinated turn function. The system determines the current course of the drone based on

voyage points. It then measures and calculates the lateral deviation between the flight path

and the aircraft, the angle of deviation, and ground velocity of the craft. Subsequently, it

resolves the lateral driving signal based on the law of navigation control and sends back the

angle that helps in controlling the aircraft. Figure 1 [7] illustrates the navigation system

architecture used in most UAVs.

Figure 1: Navigation system architecture [7]
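The three quantities named in the navigation description (lateral deviation from the flight path, angle of deviation, and ground velocity) can be illustrated with a small flat-plane calculation. This is a sketch under stated assumptions, not the navigation law of [7]: positions are local east/north coordinates in metres, the path is a straight leg between two voyage points, and the function and parameter names are hypothetical.

```python
import math


def path_deviation(wp_from, wp_to, position, velocity):
    """Return (lateral_deviation_m, deviation_angle_rad, ground_speed_mps).

    wp_from, wp_to, position: (east, north) in metres (assumed local frame).
    velocity: (east, north) ground velocity in m/s.
    Positive lateral deviation means the aircraft is left of the path.
    The angle is only meaningful when the ground speed is non-zero.
    """
    px, py = wp_to[0] - wp_from[0], wp_to[1] - wp_from[1]
    leg_length = math.hypot(px, py)
    if leg_length == 0.0:
        raise ValueError("voyage points must be distinct")

    # Signed perpendicular distance via the 2-D cross product.
    rx, ry = position[0] - wp_from[0], position[1] - wp_from[1]
    lateral = (px * ry - py * rx) / leg_length

    ground_speed = math.hypot(velocity[0], velocity[1])

    # Angle between path direction and ground track, wrapped to [-pi, pi].
    path_heading = math.atan2(py, px)
    track_heading = math.atan2(velocity[1], velocity[0])
    diff = track_heading - path_heading
    angle = math.atan2(math.sin(diff), math.cos(diff))
    return lateral, angle, ground_speed


# Example: a leg heading due north, aircraft 5 m west of it, flying north.
lateral, angle, speed = path_deviation((0, 0), (0, 100), (-5, 40), (0, 10))
print(lateral, angle, speed)
```

In the example the aircraft is 5 m to the left of a northbound leg and flying parallel to it, so a controller would only need to correct the lateral offset, not the heading.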

1. Map-less system

Navigation with this strategy is done without maps. Instead, it is aided by distinct features in the environment that the drone has identified. Feature tracking and optical flow are the techniques used by drones in map-less systems. In addition, Santos-Victor [6] proposed a map-less navigation method that resembles the flight behavior of bees: the movement of the drone is estimated from cameras mounted on both of its sides. This approach simplifies navigation because it only requires working out the velocity of the cameras relative to the walls around the drone. Its downside, however, is that it mostly fails in texture-less environments.
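The bee-inspired strategy can be sketched as a flow-balancing rule: the side on which the image moves faster is the closer one, so the vehicle steers away from it. This is a simplified illustration and not the method of [6]; the function name, the gain, and the normalized steering command are assumptions, and the flow magnitudes are taken as given instead of being computed from camera images.

```python
def balance_steering(left_flow, right_flow, gain=1.0, min_flow=1e-6):
    """Return a steering command in [-1, 1]; positive means steer right.

    left_flow, right_flow: average optical-flow magnitudes (e.g. pixels per
    frame) seen by the left- and right-facing cameras.
    Returns None when both flows are near zero (texture-less scene), because
    the rule then has no information to act on.
    """
    if left_flow < 0 or right_flow < 0:
        raise ValueError("flow magnitudes must be non-negative")
    total = left_flow + right_flow
    if total < min_flow:
        return None
    # Larger flow on the left means the left side is closer: steer right.
    command = gain * (left_flow - right_flow) / total
    return max(-1.0, min(1.0, command))


print(balance_steering(3.0, 1.0))   # left side closer
print(balance_steering(2.0, 2.0))   # centered
print(balance_steering(0.0, 0.0))   # no texture, no estimate
```

The `None` result corresponds to the failure case noted for texture-less environments: without visible texture there is no measurable flow on either side.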
