Methodology of the Youth Risk Behavior Surveillance System — 2013


AI Summary

This CDC report describes the methodology of the Youth Risk Behavior Surveillance System (YRBSS), established in 1991. YRBSS monitors six categories of priority health-risk behaviors among youths and young adults. The report covers questionnaire content; operational procedures; sampling, weighting, and response rates; data-collection protocols; data-processing procedures; reports and publications; and data quality.


Centers for Disease Control & Prevention (CDC)

Methodology of the Youth Risk Behavior Surveillance System — 2013

Author(s): Nancy D. Brener, Laura Kann, Shari Shanklin, Steve Kinchen, Danice K. Eaton,

Joseph Hawkins and Katherine H. Flint

Source: Morbidity and Mortality Weekly Report: Recommendations and Reports, Vol. 62,

No. 1 (March 1, 2013), pp. 1-20

Published by: Centers for Disease Control & Prevention (CDC)

Stable URL: https://www.jstor.org/stable/10.2307/24832543


Recommendations and Reports

Methodology of the

Youth Risk Behavior Surveillance System — 2013

Prepared by

Nancy D. Brener, PhD¹

Laura Kann, PhD¹

Shari Shanklin, MS¹

Steve Kinchen¹

Danice K. Eaton, PhD²

Joseph Hawkins, MA³

Katherine H. Flint, MS⁴

¹Division of Adolescent and School Health, National Center for HIV/AIDS, Viral Hepatitis, STD, and TB Prevention, C

²Division of Human Development and Disability, National Center for Birth Defects and Developmental Disabilities

³Westat, Rockville, Maryland

⁴ICF International, Calverton, Maryland

Summary

Priority health-risk behaviors (i.e., interrelated and preventable behaviors that contribute to the lead

and mortality among youths and adults) often are established during childhood and adolescence and

Youth Risk Behavior Surveillance System (YRBSS), established in 1991, monitors six categories of prio

among youths and young adults: 1) behaviors that contribute to unintentional injuries and violence; 2

contribute to human immunodeficiency virus (HIV) infection, other sexually transmitted diseases, and

3) tobacco use; 4) alcohol and other drug use; 5) unhealthy dietary behaviors; and 6) physical inactivi

monitors the prevalence of obesity and asthma among this population.

YRBSS data are obtained from multiple sources including a national school-based survey conducted

based state, territorial, tribal, and large urban school district surveys conducted by education and hea

have been conducted biennially since 1991 and include representative samples of students in grades

of the YRBSS methodology was published (CDC. Methodology of the Youth Risk Behavior Surveillance

[No RR-12]). Since 2004, improvements have been made to YRBSS, including increases in coverage a

This report describes these changes and updates earlier descriptions of the system, including questio

procedures; sampling, weighting, and response rates; data-collection protocols; data-processing proce

and data quality. This report also includes results of methods studies that systematically examined ho

affect prevalence estimates. YRBSS continues to evolve to meet the needs of CDC and other data use

of the questionnaire, the addition of new populations, and the development of innovative methods for

Background and Rationale

Data from surveillance systems are critical for planning and

evaluating public health programs. During the late 1980s,

when CDC began funding education agencies to implement

school-based programs to prevent human immunodeficiency

virus (HIV), only a limited number of health-related school-

based surveys existed in the United States to inform program

planning and evaluation. The Monitoring the Future study

had been ongoing since 1975 (1). This study measure

use and related determinants in a national sample of

in grade 12; it has since been expanded to include st

in grades 8 and 10 and a broader health-risk behavio

In 1987, the one-time National Adolescent Student He

Survey was administered to a nationally representativ

of students in grades 8 and 10; this survey measured

skills (e.g., reading food and drug labels), alcohol and

drug use, injury prevention, nutrition, knowledge and

The material in this report originated in the National Center for HIV/

AIDS, Viral Hepatitis, STD, and TB Prevention, Rima F. Khabbaz,

MD, Acting Director; and the Division of Adolescent and School

Health, Howell Wechsler, EdD, Director.

Corresponding preparer: Nancy D. Brener, PhD, National Center for

HIV/AIDS, Viral Hepatitis, STD, and TB Prevention, 4770 Buford

Highway NE, MS K-33, Atlanta, GA 30341; Telephone: 770-488-6184;

Fax: 770-488-6156; E-mail: nad1@cdc.gov.

about sexually transmitted diseases (STDs) and a

immunodeficiency syndrome (AIDS), attempted suicid

violence-related behaviors (2). In addition, in 198

conducted a national survey to measure knowledge, b

and behaviors concerning HIV among high school stu

(3). However, surveys conducted only on a national le

time surveys, and surveys addressing only certain ca

of health-risk behaviors could not meet the need

MMWR / March 1, 2013/ Vol. 62/ No. 1 1


territorial, and local education and health agencies that had

begun receiving funding to implement school health programs.

More specifically, in 1987, CDC began providing financial

and technical assistance to state, territorial, and local education

agencies to implement effective HIV prevention programs

for youths. Since 1992, CDC also has provided financial and

technical assistance to state education agencies to implement

additional broad-based programs, often referred to as

“coordinated school health programs,” which focus on obesity

and tobacco use prevention. Since 2008, CDC also has funded

tribal governments for HIV prevention and coordinated school

health programs.

Before 1991, school-based HIV prevention programs and

coordinated school health programs frequently were developed

without empiric information on the prevalence of key behaviors

that most influence health and on how those behaviors varied

over time and across subgroups of students. To plan and

help determine the effectiveness of school health programs,

public health and education officials need to understand how

programs influence the health-risk behaviors associated with

the leading causes of morbidity and mortality among youths

and adults in the United States.

In 1991, to address the need for data on the health-risk

behaviors that contribute substantially to the leading causes of

morbidity and mortality among U.S. youths and young adults,

CDC developed the Youth Risk Behavior Surveillance System

(YRBSS), which monitors six categories of priority health-risk

behaviors among youths and young adults: 1) behaviors that

contribute to unintentional injuries and violence; 2) sexual

behaviors that contribute to HIV infection, other STDs, and

unintended pregnancy; 3) tobacco use; 4) alcohol and other drug

use; 5) unhealthy dietary behaviors; and 6) physical inactivity.

In addition, the surveillance system monitors the prevalence of

obesity and asthma among this population. The system includes

a national school-based survey conducted by CDC as well as

school-based state, territorial, tribal, and large urban school

district surveys conducted by education and health agencies. In

these surveys, conducted biennially since 1991, representative

samples of students typically in grades 9–12 are drawn. In 2004,

a description of the YRBSS methodology was published (4).

This updated report discusses changes that have been made to

YRBSS since 2004 and provides an updated, detailed description

of the features of the system, including questionnaire content;

operational procedures; sampling, weighting, and response rates;

data-collection protocols; data-processing procedures; reports

and publications; and data quality. This report also includes

results of new methods studies on the use of computer-based data

collection and describes enhancements made to the technical

assistance system that supports state, territorial, tribal, and large

urban school district surveys.

Purposes of YRBSS

YRBSS has multiple purposes. The system was desig

enable public health professionals, educators, policy m

and researchers to 1) describe the prevalence of hea

behaviors among youths, 2) assess trends in hea

behaviors over time, and 3) evaluate and improv

related policies and programs. YRBSS also was de

to provide comparable national, state, territorial,

urban school district data as well as comparable data

subpopulations of youths (e.g., racial/ethnic subgroup

monitor progress toward achieving national health ob

(5–7) (Table 1) and other program indicators (e.g., CD

performance on selected Government Performance a

Act measures) (8). Although YRBSS is designed to pro

information to help assess the effect of broad nationa

territorial, tribal, and local policies and programs, it w

designed to evaluate the effectiveness of specific inte

(e.g., a professional development program, school cu

or media campaign).

As YRBSS was being developed, CDC decided that t

system should focus almost exclusively on health-risk

rather than on the determinants of these behavio

knowledge, attitudes, beliefs, and skills), because

more direct connection between specific health-risk b

and specific health outcomes than between determin

behaviors and health outcomes. Many behaviors (e.g.

and other drug use and sexual behaviors) measured b

also are associated with educational and social o

including absenteeism, poor academic achievemen

dropping out of school (9).

Data Sources

YRBSS data sources include ongoing surveys as

one-time national surveys, special-population surve

methods studies. The ongoing surveys include school

national, state, tribal, and large urban school district

of representative samples of high school students

certain sites, representative state, territorial, and larg

school district surveys of middle school students. The

surveys are conducted biennially; each cycle beg

of the preceding even-numbered year (e.g., in 2010 f

2011 cycle) when the questionnaire for the upcoming

released and continues until the data are published in

the following even-numbered year (e.g., in 2012 for t

cycle). This section describes the ongoing surveys, on

national surveys, and special-population surveys.

studies are described elsewhere in this report (see Da
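The biennial cycle described above can be illustrated with a short sketch. It assumes only what the text states: surveys run in odd-numbered years from 1991 onward, a cycle opens in the preceding even-numbered year when the questionnaire is released, and it closes in the following even-numbered year when the data are published. The function name `cycle_years` is hypothetical, and the sketch does not model the month-level timing, which is cut off in this copy of the report.

```python
def cycle_years(survey_year: int) -> tuple[int, int]:
    """Return (questionnaire release year, data publication year) for a survey cycle.

    Assumption taken from the text: survey cycles fall in odd-numbered years
    from 1991 onward, e.g. the 2011 cycle runs from 2010 to 2012.
    """
    if survey_year < 1991 or survey_year % 2 == 0:
        raise ValueError("YRBSS survey cycles are odd-numbered years from 1991 onward")
    return survey_year - 1, survey_year + 1


# The example given in the text: the 2011 cycle.
release_year, publication_year = cycle_years(2011)
print(release_year, publication_year)
```

Rejecting even-numbered years keeps the helper aligned with the biennial schedule instead of silently returning a cycle that never took place.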

MMWR / March 1, 2013/ Vol. 62/ No. 12

This content downloaded from

105.231.112.221 on Tue, 04 Dec 2018 04:40:42 UTC

All use subject to https://about.jstor.org/terms

territorial, and local education and health agencies that had

begun receiving funding to implement school health programs.

More specifically, in 1987, CDC began providing financial

and technical assistance to state, territorial, and local education

agencies to implement effective HIV prevention programs

for youths. Since 1992, CDC also has provided financial and

technical assistance to state education agencies to implement

additional broad-based programs, often referred to as

“coordinated school health programs,” which focus on obesity

and tobacco use prevention. Since 2008, CDC also has funded

tribal governments for HIV prevention and coordinated school

health programs.

Before 1991, school-based HIV prevention programs and

coordinated school health programs frequently were developed

without empiric information on the prevalence of key behaviors

that most influence health and on how those behaviors varied

over time and across subgroups of students. To plan and

help determine the effectiveness of school health programs,

public health and education officials need to understand how

programs influence the health-risk behaviors associated with

the leading causes of morbidity and mortality among youths

and adults in the United States.

In 1991, to address the need for data on the health-risk

behaviors that contribute substantially to the leading causes of

morbidity and mortality among U.S. youths and young adults,

CDC developed the Youth Risk Behavior Surveillance System

(YRBSS), which monitors six categories of priority health-risk

behaviors among youths and young adults: 1) behaviors that

contribute to unintentional injuries and violence; 2) sexual

behaviors that contribute to HIV infection, other STDs, and

unintended pregnancy; 3) tobacco use; 4) alcohol and other drug

use; 5) unhealthy dietary behaviors; and 6) physical inactivity.

In addition, the surveillance system monitors the prevalence of

obesity and asthma among this population. The system includes

a national school-based survey conducted by CDC as well as

school-based state, territorial, tribal, and large urban school

district surveys conducted by education and health agencies. In

these surveys, conducted biennially since 1991, representative

samples of students typically in grades 9–12 are drawn. In 2004,

a description of the YRBSS methodology was published (4).

This updated report discusses changes that have been made to

YRBSS since 2004 and provides an updated, detailed description

of the features of the system, including questionnaire content;

operational procedures; sampling, weighting, and response rates;

data-collection protocols; data-processing procedures; reports

and publications; and data quality. This report also includes

results of new methods studies on the use of computer-based data

collection and describes enhancements made to the technical

assistance system that supports state, territorial, tribal, and large

urban school district surveys.

Purposes of YRBSS

YRBSS has multiple purposes. The system was desig

enable public health professionals, educators, policy m

and researchers to 1) describe the prevalence of hea

behaviors among youths, 2) assess trends in hea

behaviors over time, and 3) evaluate and improv

related policies and programs. YRBSS also was de

to provide comparable national, state, territorial,

urban school district data as well as comparable data

subpopulations of youths (e.g., racial/ethnic subgroup

monitor progress toward achieving national health ob

(5–7) (Table 1) and other program indicators (e.g., CD

performance on selected Government Performance a

Act measures) (8). Although YRBSS is designed to pro

information to help assess the effect of broad nationa

territorial, tribal, and local policies and programs, it w

designed to evaluate the effectiveness of specific inte

(e.g., a professional development program, school cu

or media campaign).

As YRBSS was being developed, CDC decided that t

system should focus almost exclusively on health-risk

rather than on the determinants of these behavio

knowledge, attitudes, beliefs, and skills), because

more direct connection between specific health-risk b

and specific health outcomes than between determin

behaviors and health outcomes. Many behaviors (e.g.

and other drug use and sexual behaviors) measured b

also are associated with educational and social o

including absenteeism, poor academic achievemen

dropping out of school (9).

Data Sources

YRBSS data sources include ongoing surveys as

one-time national surveys, special-population surve

methods studies. The ongoing surveys include school

national, state, tribal, and large urban school district

of representative samples of high school students

certain sites, representative state, territorial, and larg

school district surveys of middle school students. The

surveys are conducted biennially; each cycle beg

of the preceding even-numbered year (e.g., in 2010 f

2011 cycle) when the questionnaire for the upcoming

released and continues until the data are published in

the following even-numbered year (e.g., in 2012 for t

cycle). This section describes the ongoing surveys, on

national surveys, and special-population surveys.

studies are described elsewhere in this report (see Da

MMWR / March 1, 2013/ Vol. 62/ No. 12

This content downloaded from

105.231.112.221 on Tue, 04 Dec 2018 04:40:42 UTC

All use subject to https://about.jstor.org/terms

Recommendations and Reports

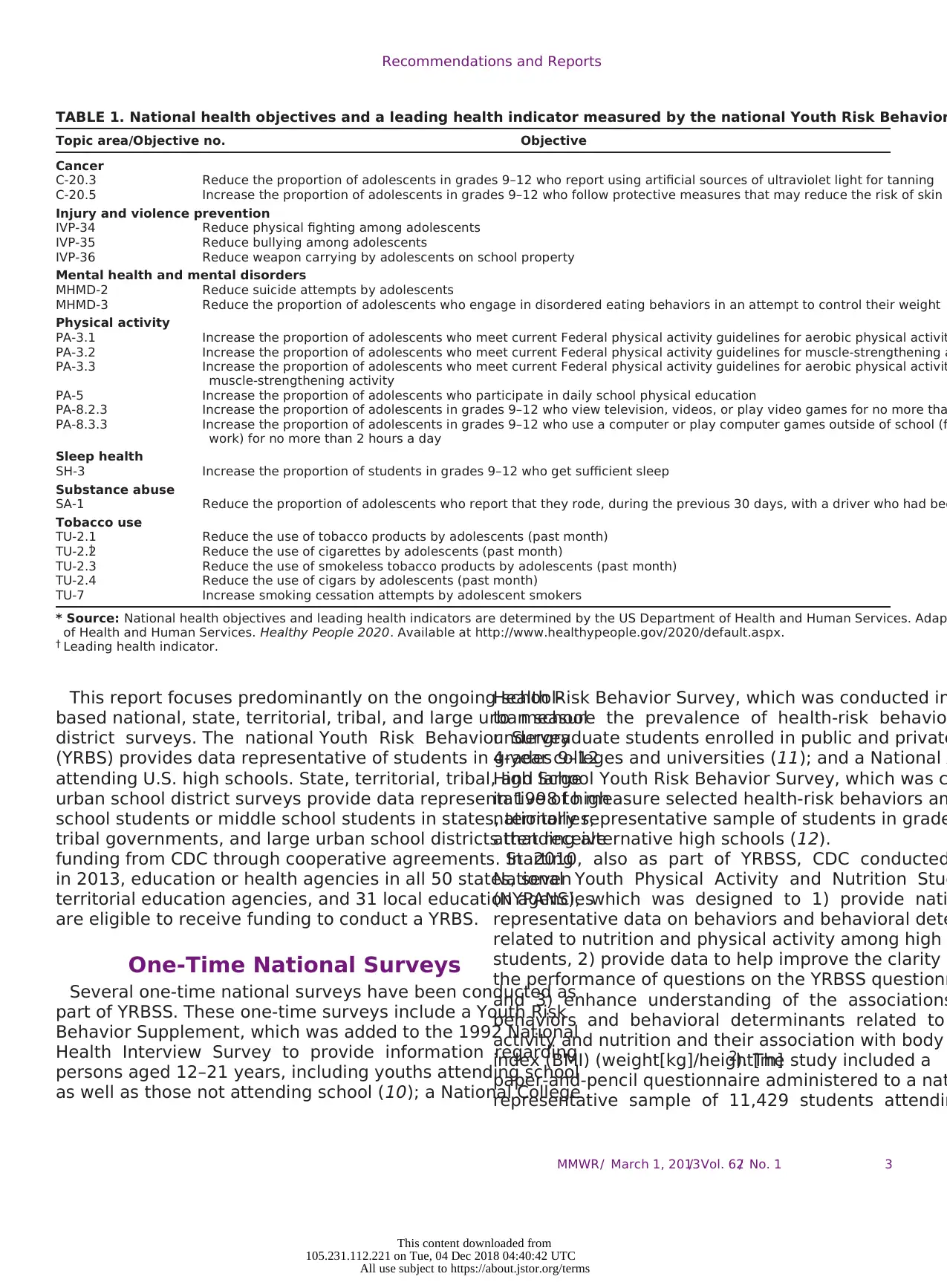

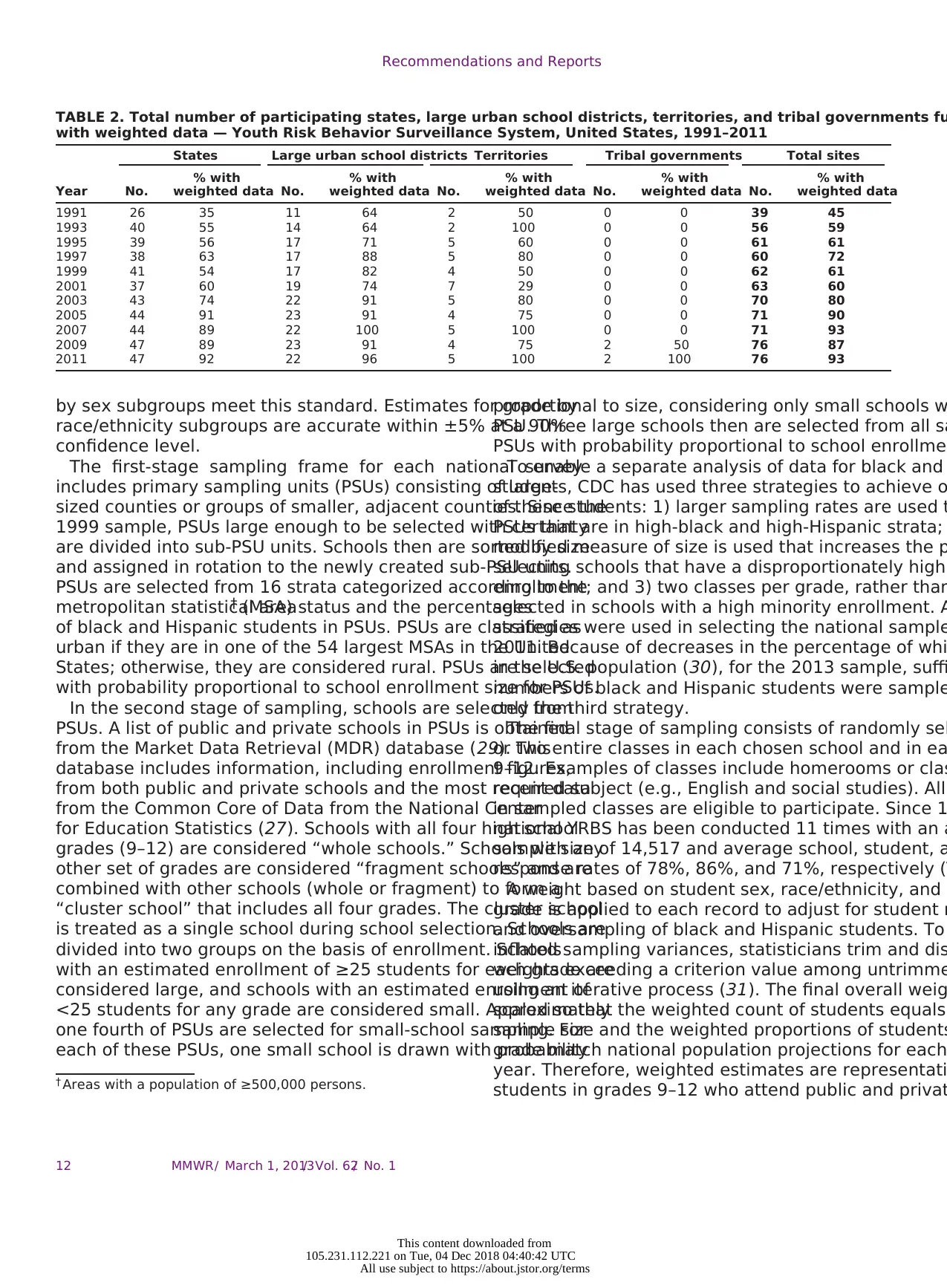

TABLE 1. National health objectives and a leading health indicator measured by the national Youth Risk Behavior

Topic area/Objective no. Objective

Cancer

C-20.3 Reduce the proportion of adolescents in grades 9–12 who report using artificial sources of ultraviolet light for tanning

C-20.5 Increase the proportion of adolescents in grades 9–12 who follow protective measures that may reduce the risk of skin c

Injury and violence prevention

IVP-34 Reduce physical fighting among adolescents

IVP-35 Reduce bullying among adolescents

IVP-36 Reduce weapon carrying by adolescents on school property

Mental health and mental disorders

MHMD-2 Reduce suicide attempts by adolescents

MHMD-3 Reduce the proportion of adolescents who engage in disordered eating behaviors in an attempt to control their weight

Physical activity

PA-3.1 Increase the proportion of adolescents who meet current Federal physical activity guidelines for aerobic physical activit

PA-3.2 Increase the proportion of adolescents who meet current Federal physical activity guidelines for muscle-strengthening a

PA-3.3 Increase the proportion of adolescents who meet current Federal physical activity guidelines for aerobic physical activit

muscle-strengthening activity

PA-5 Increase the proportion of adolescents who participate in daily school physical education

PA-8.2.3 Increase the proportion of adolescents in grades 9–12 who view television, videos, or play video games for no more tha

PA-8.3.3 Increase the proportion of adolescents in grades 9–12 who use a computer or play computer games outside of school (f

work) for no more than 2 hours a day

Sleep health

SH-3 Increase the proportion of students in grades 9–12 who get sufficient sleep

Substance abuse

SA-1 Reduce the proportion of adolescents who report that they rode, during the previous 30 days, with a driver who had bee

Tobacco use

TU-2.1 Reduce the use of tobacco products by adolescents (past month)

TU-2.2† Reduce the use of cigarettes by adolescents (past month)

TU-2.3 Reduce the use of smokeless tobacco products by adolescents (past month)

TU-2.4 Reduce the use of cigars by adolescents (past month)

TU-7 Increase smoking cessation attempts by adolescent smokers

* Source: National health objectives and leading health indicators are determined by the US Department of Health and Human Services. Adap

of Health and Human Services. Healthy People 2020. Available at http://www.healthypeople.gov/2020/default.aspx.

† Leading health indicator.

This report focuses predominantly on the ongoing school-

based national, state, territorial, tribal, and large urban school

district surveys. The national Youth Risk Behavior Survey

(YRBS) provides data representative of students in grades 9–12

attending U.S. high schools. State, territorial, tribal, and large

urban school district surveys provide data representative of high

school students or middle school students in states, territories,

tribal governments, and large urban school districts that receive

funding from CDC through cooperative agreements. Starting

in 2013, education or health agencies in all 50 states, seven

territorial education agencies, and 31 local education agencies

are eligible to receive funding to conduct a YRBS.

One-Time National Surveys

Several one-time national surveys have been conducted as

part of YRBSS. These one-time surveys include a Youth Risk

Behavior Supplement, which was added to the 1992 National

Health Interview Survey to provide information regarding

persons aged 12–21 years, including youths attending school

as well as those not attending school (10); a National College

Health Risk Behavior Survey, which was conducted in

to measure the prevalence of health-risk behavior

undergraduate students enrolled in public and private

4-year colleges and universities (11); and a National A

High School Youth Risk Behavior Survey, which was c

in 1998 to measure selected health-risk behaviors am

nationally representative sample of students in grade

attending alternative high schools (12).

In 2010, also as part of YRBSS, CDC conducted

National Youth Physical Activity and Nutrition Stud

(NYPANS), which was designed to 1) provide nati

representative data on behaviors and behavioral dete

related to nutrition and physical activity among high

students, 2) provide data to help improve the clarity a

the performance of questions on the YRBSS questionn

and 3) enhance understanding of the associations

behaviors and behavioral determinants related to

activity and nutrition and their association with body

index (BMI) (weight [kg] / height [m]²). The study included a

paper-and-pencil questionnaire administered to a nat

representative sample of 11,429 students attendin

MMWR / March 1, 2013/ Vol. 62/ No. 1 3

This content downloaded from

105.231.112.221 on Tue, 04 Dec 2018 04:40:42 UTC

All use subject to https://about.jstor.org/terms

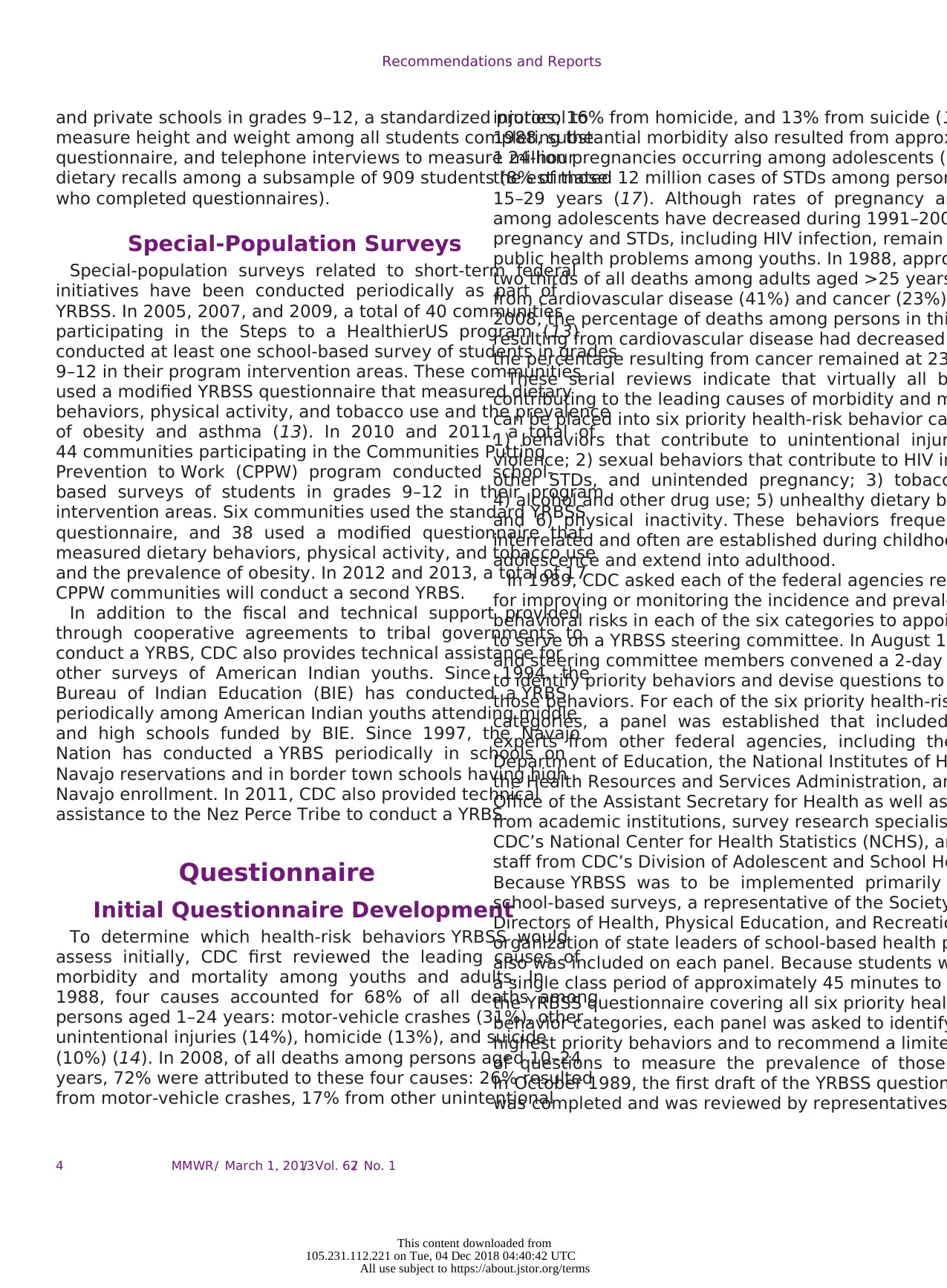

TABLE 1. National health objectives and a leading health indicator measured by the national Youth Risk Behavior

Topic area/Objective no. Objective

Cancer

C-20.3 Reduce the proportion of adolescents in grades 9–12 who report using artificial sources of ultraviolet light for tanning

C-20.5 Increase the proportion of adolescents in grades 9–12 who follow protective measures that may reduce the risk of skin c

Injury and violence prevention

IVP-34 Reduce physical fighting among adolescents

IVP-35 Reduce bullying among adolescents

IVP-36 Reduce weapon carrying by adolescents on school property

Mental health and mental disorders

MHMD-2 Reduce suicide attempts by adolescents

MHMD-3 Reduce the proportion of adolescents who engage in disordered eating behaviors in an attempt to control their weight

Physical activity

PA-3.1 Increase the proportion of adolescents who meet current Federal physical activity guidelines for aerobic physical activit

PA-3.2 Increase the proportion of adolescents who meet current Federal physical activity guidelines for muscle-strengthening a

PA-3.3 Increase the proportion of adolescents who meet current Federal physical activity guidelines for aerobic physical activit

muscle-strengthening activity

PA-5 Increase the proportion of adolescents who participate in daily school physical education

PA-8.2.3 Increase the proportion of adolescents in grades 9–12 who view television, videos, or play video games for no more tha

PA-8.3.3 Increase the proportion of adolescents in grades 9–12 who use a computer or play computer games outside of school (f

work) for no more than 2 hours a day

Sleep health

SH-3 Increase the proportion of students in grades 9–12 who get sufficient sleep

Substance abuse

SA-1 Reduce the proportion of adolescents who report that they rode, during the previous 30 days, with a driver who had bee

Tobacco use

TU-2.1 Reduce the use of tobacco products by adolescents (past month)

TU-2.2† Reduce the use of cigarettes by adolescents (past month)

TU-2.3 Reduce the use of smokeless tobacco products by adolescents (past month)

TU-2.4 Reduce the use of cigars by adolescents (past month)

TU-7 Increase smoking cessation attempts by adolescent smokers

* Source: National health objectives and leading health indicators are determined by the US Department of Health and Human Services. Adap

of Health and Human Services. Healthy People 2020. Available at http://www.healthypeople.gov/2020/default.aspx.

† Leading health indicator.

This report focuses predominantly on the ongoing school-

based national, state, territorial, tribal, and large urban school

district surveys. The national Youth Risk Behavior Survey

(YRBS) provides data representative of students in grades 9–12

attending U.S. high schools. State, territorial, tribal, and large

urban school district surveys provide data representative of high

school students or middle school students in states, territories,

tribal governments, and large urban school districts that receive

funding from CDC through cooperative agreements. Starting

in 2013, education or health agencies in all 50 states, seven

territorial education agencies, and 31 local education agencies

are eligible to receive funding to conduct a YRBS.

One-Time National Surveys

Several one-time national surveys have been conducted as

part of YRBSS. These one-time surveys include a Youth Risk

Behavior Supplement, which was added to the 1992 National

Health Interview Survey to provide information regarding

persons aged 12–21 years, including youths attending school

as well as those not attending school (10); a National College

Health Risk Behavior Survey, which was conducted in

to measure the prevalence of health-risk behavior

undergraduate students enrolled in public and private

4-year colleges and universities (11); and a National A

High School Youth Risk Behavior Survey, which was c

in 1998 to measure selected health-risk behaviors am

nationally representative sample of students in grade

attending alternative high schools (12).

In 2010, also as part of YRBSS, CDC conducted

National Youth Physical Activity and Nutrition Stud

(NYPANS), which was designed to 1) provide nati

representative data on behaviors and behavioral dete

related to nutrition and physical activity among high

students, 2) provide data to help improve the clarity a

the performance of questions on the YRBSS questionn

and 3) enhance understanding of the associations

behaviors and behavioral determinants related to

activity and nutrition and their association with body

index (BMI) (weight [kg]/height [m]²). The study included a

paper-and-pencil questionnaire administered to a nat

representative sample of 11,429 students attendin

MMWR / March 1, 2013/ Vol. 62/ No. 1 3


Recommendations and Reports

and private schools in grades 9–12, a standardized protocol to

measure height and weight among all students completing the

questionnaire, and telephone interviews to measure 24-hour

dietary recalls among a subsample of 909 students (8% of those

who completed questionnaires).
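The BMI definition and the subsample share quoted above can be checked with a few lines of arithmetic. The following is a minimal sketch, not part of the NYPANS protocol; the function name `bmi` and the example student (60 kg, 1.65 m) are hypothetical and chosen only for illustration.

```python
def bmi(weight_kg: float, height_m: float) -> float:
    """Body mass index: weight in kilograms divided by height in meters squared."""
    if weight_kg <= 0 or height_m <= 0:
        raise ValueError("weight and height must be positive")
    return weight_kg / height_m ** 2


# Hypothetical student used only to illustrate the formula.
example_bmi = bmi(60.0, 1.65)

# Share of questionnaire respondents who also completed dietary recalls,
# using the two counts reported in the text (909 of 11,429).
subsample_share = 909 / 11_429

print(f"BMI: {example_bmi:.1f}")
print(f"Dietary-recall subsample: {subsample_share:.1%}")
```

The subsample share comes to just under 8%, consistent with the rounded figure in the text.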

Special-Population Surveys

Special-population surveys related to short-term federal

initiatives have been conducted periodically as part of

YRBSS. In 2005, 2007, and 2009, a total of 40 communities

participating in the Steps to a HealthierUS program (13)

conducted at least one school-based survey of students in grades

9–12 in their program intervention areas. These communities

used a modified YRBSS questionnaire that measured dietary

behaviors, physical activity, and tobacco use and the prevalence

of obesity and asthma (13). In 2010 and 2011, a total of

44 communities participating in the Communities Putting

Prevention to Work (CPPW) program conducted school-

based surveys of students in grades 9–12 in their program

intervention areas. Six communities used the standard YRBSS

questionnaire, and 38 used a modified questionnaire that

measured dietary behaviors, physical activity, and tobacco use

and the prevalence of obesity. In 2012 and 2013, a total of 17

CPPW communities will conduct a second YRBS.

In addition to the fiscal and technical support provided

through cooperative agreements to tribal governments to

conduct a YRBS, CDC also provides technical assistance for

other surveys of American Indian youths. Since 1994, the

Bureau of Indian Education (BIE) has conducted a YRBS

periodically among American Indian youths attending middle

and high schools funded by BIE. Since 1997, the Navajo

Nation has conducted a YRBS periodically in schools on

Navajo reservations and in border town schools having high

Navajo enrollment. In 2011, CDC also provided technical

assistance to the Nez Perce Tribe to conduct a YRBS.

Questionnaire

Initial Questionnaire Development

To determine which health-risk behaviors YRBSS would

assess initially, CDC first reviewed the leading causes of

morbidity and mortality among youths and adults. In

1988, four causes accounted for 68% of all deaths among

persons aged 1–24 years: motor-vehicle crashes (31%), other

unintentional injuries (14%), homicide (13%), and suicide

(10%) (14). In 2008, of all deaths among persons aged 10–24

years, 72% were attributed to these four causes: 26% resulted

from motor-vehicle crashes, 17% from other unintentional

injuries, 16% from homicide, and 13% from suicide (1

1988, substantial morbidity also resulted from approx

1 million pregnancies occurring among adolescents (1

the estimated 12 million cases of STDs among person

15–29 years (17). Although rates of pregnancy an

among adolescents have decreased during 1991–200

pregnancy and STDs, including HIV infection, remain

public health problems among youths. In 1988, appro

two thirds of all deaths among adults aged >25 years

from cardiovascular disease (41%) and cancer (23%)

2008, the percentage of deaths among persons in thi

resulting from cardiovascular disease had decreased

the percentage resulting from cancer remained at 23

These serial reviews indicate that virtually all behaviors

contributing to the leading causes of morbidity and mortality

can be placed into six priority health-risk behavior categories:

1) behaviors that contribute to unintentional injuries and

violence; 2) sexual behaviors that contribute to HIV infection,

other STDs, and unintended pregnancy; 3) tobacco use;

4) alcohol and other drug use; 5) unhealthy dietary behaviors;

and 6) physical inactivity. These behaviors frequently are

interrelated and often are established during childhood and

adolescence and extend into adulthood.

In 1989, CDC asked each of the federal agencies res

for improving or monitoring the incidence and prevale

behavioral risks in each of the six categories to appoi

to serve on a YRBSS steering committee. In August 19

and steering committee members convened a 2-day

to identify priority behaviors and devise questions to

those behaviors. For each of the six priority health-ris

categories, a panel was established that included

experts from other federal agencies, including the

Department of Education, the National Institutes of H

the Health Resources and Services Administration, an

Office of the Assistant Secretary for Health as well as

from academic institutions, survey research specialis

CDC’s National Center for Health Statistics (NCHS), an

staff from CDC’s Division of Adolescent and School He

Because YRBSS was to be implemented primarily

school-based surveys, a representative of the Society

Directors of Health, Physical Education, and Recreatio

organization of state leaders of school-based health p

also was included on each panel. Because students w

a single class period of approximately 45 minutes to c

the YRBSS questionnaire covering all six priority healt

behavior categories, each panel was asked to identify

highest priority behaviors and to recommend a limite

of questions to measure the prevalence of those

In October 1989, the first draft of the YRBSS question

was completed and was reviewed by representatives


education agency of each state, the District of Columbia,

four U.S. territories, and 16 local education agencies then

funded by CDC. Survey research specialists from NCHS

also provided comments and suggestions. A second version

of the YRBSS questionnaire was administered during spring

1990 to a national sample of students in grades 9–12 as well

as to samples of students in 25 states and nine large urban

school districts. In addition, the second version was sent to

the Questionnaire Design Research Laboratory at NCHS

for laboratory and field testing with high school students.

NCHS staff examined student responses to the questionnaire

and recommended ways to improve reliability and validity by

clarifying the wording of questions, setting recall periods, and

identifying response options.

In October 1990, a third version of the YRBSS questionnaire

was completed. The questionnaire was similar to that used

during spring 1990 but revised to take into account data

collected by CDC and state and local education agencies

during spring 1990, information from NCHS’s laboratory

and field tests, and input from YRBSS steering committee

members and representatives of each state and the 16 local

education agencies. It also included questions for measuring

national health objectives for 2000 (5). During spring 1991,

this questionnaire was used by 26 states and 11 large urban

school districts to conduct a YRBS and by CDC to conduct

a national YRBS.

In 1991, CDC determined that biennial surveys would be

sufficient to measure health-risk behaviors among students

because behavior changes typically occur gradually. Since 1991,

YRBSs have been conducted every odd year at the national,

state, territorial, and large urban school district levels.

Questionnaire Characteristics and

Revisions

All YRBSS questionnaires are self-administered, and students

record their responses on a computer-scannable questionnaire

booklet or answer sheet. Skip patterns* are not included in any

YRBSS questionnaire to help ensure that similar amounts of

time are required to complete the questionnaire, regardless of

each student’s health-risk behavior status. This technique also

prevents students from detecting on other answer sheets and

questionnaire booklets a pattern of blank responses that might

identify the specific health-risk behaviors of other students.

In each even-numbered year between 1991 and 1997, in

consultation with the sites (states, territories, and large urban

school districts) conducting a survey, CDC revised the YRBSS

questionnaire to be used in the subsequent cycle. These revisions

* Skip patterns occur when a particular response to one question indicates to the

respondents that they should not answer one or more subsequent questions.

reflected site and national priorities. For example, in

added 10 questions to the 1993 questionnaire to mea

National Education Goal for safe, disciplined, and drug

schools (21) and to address reporting requirements fo

Department of Education’s Safe and Drug-Free Schoo

(http://www2.ed.gov/about/offices/list/osdfs/index.htm

In 1997, CDC undertook an in-depth, systematic rev

the YRBSS questionnaire. The review was motivated b

factors, including a goal for YRBSS to measure Health

2010 national health objectives, which were being de

at that time. The purpose of the review and the subse

revision process was to ensure that the questionnaire

provide the most effective assessment of the most cr

risk behaviors among youths. To guide the decision-m

process, CDC solicited input from content experts fro

and academia as well as from representatives from o

agencies; state, territorial, and local education agenc

health departments; and national organizations, foun

and institutes. On the basis of input from approximate

persons, CDC developed a proposed set of questi

revisions that were sent to all state, territorial, and lo

agencies for further input. In addition to consider

amount of support from sites for the proposed revisio

considered multiple factors in making final decisions

questionnaire, including 1) input from the original rev

2) whether the question measured a health-risk b

practiced by youths, 3) whether data on the topic wer

from other sources, 4) the relation of the behavior to

causes of morbidity and mortality among youths and

5) whether effective interventions existed that could

modify the behavior. As a result of this process, CDC

1999 YRBSS questionnaire by adding 16 new question

11 questions, and making substantial wording change

questions. For example, two questions that assessed

height and weight were added in recognition of i

concerns regarding obesity. As a result, YRBSS now g

national, state, territorial, tribal, and large urban scho

estimates of BMI calculated from self-reported data.

The 2013 YRBSS questionnaire reflects minor ch

that CDC has made to the questionnaire since 1999. D

each even-numbered year since 1999, CDC has soug

from experts both inside and outside of CDC reg

what questions should be changed, added, or deleted

changes, additions, and deletions were then placed o

sent to the YRBS coordinators at all sites, and each si

for or against each proposed change, addition, and de

CDC considered the results of this balloting process w

finalizing each questionnaire. Each cycle, CDC de

standard questionnaire that sites can use as is or mod

meet their needs. The 2013 standard YRBSS question


includes five questions that assess demographic information;

23 questions related to unintentional injuries and violence; 10

on tobacco use; 18 on alcohol and other drug use; seven on

sexual behaviors; 16 on body weight and dietary behaviors,

including height and weight; five on physical activity; and

two on other health-related topics (i.e., asthma and sleep). The

2013 standard questionnaire and the rationale for the inclusion

of each question are available at http://www.cdc.gov/yrbss.

For the national YRBS, five to 11 additional questions are

added to the standard questionnaire each cycle. These questions

typically cover health-related topics that do not fit in the six

priority health-risk behavior categories (e.g., sun protection).

The 2013 national YRBS questionnaire also is available at

http://www.cdc.gov/yrbss.
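The per-topic counts above imply a total length for the standard questionnaire, and the five to 11 added questions imply a range for the national questionnaire. The tally below is a small sketch of that arithmetic; the dictionary keys are shorthand labels, and the totals are derived from the counts in the text rather than quoted from it.

```python
# Question counts for the 2013 standard questionnaire, as listed in the text.
standard_2013 = {
    "demographic information": 5,
    "unintentional injuries and violence": 23,
    "tobacco use": 10,
    "alcohol and other drug use": 18,
    "sexual behaviors": 7,
    "body weight and dietary behaviors": 16,
    "physical activity": 5,
    "other health-related topics": 2,
}

standard_total = sum(standard_2013.values())

# The national YRBS adds five to 11 questions each cycle.
national_min = standard_total + 5
national_max = standard_total + 11

print(f"Standard questionnaire: {standard_total} questions")
print(f"National questionnaire: {national_min} to {national_max} questions")
```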

Each cycle, CDC makes the standard questionnaire available

to sites as a computer-scannable booklet. In 2011, nine states,

one tribe, and six large urban school districts used the standard

questionnaire computer-scannable booklets. CDC sends sites

that wish to modify the standard questionnaire a print-ready

copy of their questionnaire and scannable answer sheets.

Sites can modify the standard questionnaire within certain

parameters: 1) two thirds of the questions from the standard

YRBSS questionnaire must remain unchanged; 2) additional

questions are limited to eight mutually exclusive response

options; and 3) skip patterns, grid formats, and fill-in-the

blank formats cannot be used. Furthermore, sites that modify

the standard YRBSS questionnaire and use the scannable

answer sheets must retain the height and weight questions

as questions six and seven and cannot have more than 99

questions. This numerical limit is set so the questionnaire can

be completed during a single class period by all students, even

those who might read slowly.
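The modification rules above lend themselves to a mechanical check. The sketch below is a hypothetical illustration, not a CDC tool: the `Question` record, its field names, the format labels, and the function `check_modified_questionnaire` are all assumptions, and the default of 86 standard questions is derived by summing the per-topic counts reported for the 2013 standard questionnaire.

```python
from dataclasses import dataclass

ALLOWED_FORMAT = "multiple_choice"  # assumed label; skip, grid, and fill-in formats are disallowed


@dataclass
class Question:
    number: int          # position in the site's questionnaire
    topic: str           # free-text label, e.g. "height" or "weight"
    n_options: int       # number of mutually exclusive response options
    from_standard: bool  # True if kept unchanged from the standard questionnaire
    fmt: str = ALLOWED_FORMAT


def check_modified_questionnaire(questions, n_standard=86, uses_answer_sheet=True):
    """Return a list of rule violations; an empty list means no violation was found."""
    problems = []

    # Rule 1: two thirds of the standard questions must remain unchanged.
    kept = sum(1 for q in questions if q.from_standard)
    if 3 * kept < 2 * n_standard:
        problems.append("fewer than two thirds of the standard questions are unchanged")

    for q in questions:
        # Rule 2: additional questions are limited to eight response options.
        if not q.from_standard and q.n_options > 8:
            problems.append(f"question {q.number}: more than eight response options")
        # Rule 3: skip patterns, grids, and fill-in-the-blank formats cannot be used.
        if q.fmt != ALLOWED_FORMAT:
            problems.append(f"question {q.number}: {q.fmt} format is not allowed")

    if uses_answer_sheet:
        # Height and weight must occupy positions 6 and 7 (order not assumed here).
        topics = {q.number: q.topic for q in questions}
        if {topics.get(6), topics.get(7)} != {"height", "weight"}:
            problems.append("height and weight must be questions 6 and 7")
        if len(questions) > 99:
            problems.append("more than 99 questions")

    return problems


def standard_questions(n=86):
    """Build a placeholder standard questionnaire with height and weight at 6 and 7."""
    labels = {6: "height", 7: "weight"}
    return [Question(i, labels.get(i, "other"), 5, True) for i in range(1, n + 1)]


base = standard_questions()
extra = [Question(87, "site-added", 9, False, "grid")]
print(check_modified_questionnaire(base))          # unmodified placeholder passes
print(check_modified_questionnaire(base + extra))  # two violations reported for question 87
```

Comparing `3 * kept` with `2 * n_standard` keeps the two-thirds test in integer arithmetic, which avoids rounding surprises at the boundary.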

For sites that want to modify the standard questionnaire, CDC

also provides a list of optional questions for consideration. This

list has been available to sites since 1999 and is updated when the

standard YRBSS questionnaire is updated. It includes questions on

the current version of the national YRBS questionnaire; questions

that have been included in a previous national, state, territorial,

tribal, or large urban school district YRBS questionnaire; and

questions designed to address topics of key interest to CDC or

the sites. By using these optional questions, sites can obtain data

comparable to those from the national YRBS or from other sites

that use these questions and be assured they are adding questions

that already have been reviewed and approved by CDC. A site also

can choose to develop its own questions if none of the optional

questions addresses a topic that the site wants to measure. CDC

reviews site-developed questions to ensure that their complexity,

reading level, and formatting are appropriate for a YRBS. In 2011,

a total of 38 states, five territories, 16 large urban school districts,

and three tribes modified the standard questionnaire.

Questionnaire Reliability and Validity

CDC has conducted two test-retest reliability studie

national YRBS questionnaire, one in 1992 and one in

In the first study, the 1991 version of the questionnai

administered to a convenience sample of 1,679 stude

grades 7–12. The questionnaire was administered

occasions, 14 days apart (22). Approximately three fo

of the questions were rated as having a substantial o

reliability (kappa = 61%–100%), and no statistically s

differences were observed between the prevalence e

for the first and second times that the questionn

administered. The responses of students in grade 7 w

consistent than those of students in grades 9–12, indi

that the questionnaire is best suited for students in th

In the second study, the 1999 questionnaire was ad

to a convenience sample of 4,619 high school st

The questionnaire was administered on two occas

approximately 2 weeks apart (23). Approximately

five questions (22%) had significantly different pr

estimates for the first and second times that the ques

was administered. Ten questions (14%) had both

<61% and significantly different time-1 and time-2 pr

estimates, indicating that the reliability of these ques

questionable (23). These problematic questions were

or deleted from later versions of the questionnaire.
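Both reliability studies summarize test-retest agreement with the kappa statistic, which corrects observed agreement for the agreement expected by chance. The following is a minimal sketch of Cohen's kappa for one question answered on two occasions; the function name and the ten paired responses are hypothetical, and the cited studies may have used additional procedures not shown here.

```python
from collections import Counter


def cohen_kappa(time1, time2):
    """Cohen's kappa for paired categorical responses from two administrations."""
    if len(time1) != len(time2) or not time1:
        raise ValueError("need two non-empty response lists of equal length")
    n = len(time1)

    # Observed agreement: share of students giving the same answer both times.
    observed = sum(a == b for a, b in zip(time1, time2)) / n

    # Chance agreement: product of the marginal proportions, summed over categories.
    c1, c2 = Counter(time1), Counter(time2)
    expected = sum(c1[k] * c2[k] for k in c1.keys() | c2.keys()) / n ** 2

    if expected == 1:  # every response identical on both occasions
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical responses from ten students to one yes/no question.
first = ["yes"] * 4 + ["no"] * 6
second = ["yes"] * 3 + ["no"] * 6 + ["yes"]

kappa = cohen_kappa(first, second)
print(f"kappa = {kappa:.0%}")
print("substantial or higher" if kappa >= 0.61 else "below the 61% threshold")
```

In this made-up example, eight of ten students answer consistently, yet kappa falls below the 61% threshold used in the text because more than half of that agreement would be expected by chance alone.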

No study has been conducted to assess the validity

self-reported behaviors that are included on the Y

questionnaire. However, in 2003, CDC reviewed e

empiric literature to assess cognitive and situational

might affect the validity of adolescent self-reporting o

measured by the YRBSS questionnaire (24). In this re

CDC determined that, although self-reports of these t

behaviors are affected by both cognitive and situation

these factors do not threaten the validity of self-repor

type of behavior equally. In addition, each type of beh

differs in the extent to which its self-report can be va

an objective measure. For example, reports of tobacc

influenced by both cognitive and situational factors a

be validated by biochemical measures (e.g., cotinine)

of sexual behavior also can be influenced by both cog

and situational factors, but no standard exists to valid

behavior. In contrast, reports of physical activity are i

substantially by cognitive factors and to a lesser

situational ones. Such reports can be validated by me

electronic monitors (e.g., heart rate monitors). Under

the differences in factors that compromise the validit

reporting of different types of behavior can assist pol

in interpreting data and researchers in designing mea

do not compromise validity.

MMWR / March 1, 2013/ Vol. 62/ No. 16

This content downloaded from

105.231.112.221 on Tue, 04 Dec 2018 04:40:42 UTC

All use subject to https://about.jstor.org/terms

includes five questions that assess demographic information;

23 questions related to unintentional injuries and violence; 10

on tobacco use; 18 on alcohol and other drug use; seven on

sexual behaviors; 16 on body weight and dietary behaviors,

including height and weight; five on physical activity; and

two on other health-related topics (i.e., asthma and sleep). The

2013 standard questionnaire and the rationale for the inclusion

of each question are available at http://www.cdc.gov/yrbss.

For the national YRBS, five to 11 additional questions are

added to the standard questionnaire each cycle. These questions

typically cover health-related topics that do not fit in the six

priority health-risk behavior categories (e.g., sun protection).

The 2013 national YRBS questionnaire also is available at

http://www.cdc.gov/yrbss.

Each cycle, CDC makes the standard questionnaire available

to sites as a computer-scannable booklet. In 2011, nine states,

one tribe, and six large urban school districts used the standard

questionnaire computer-scannable booklets. CDC sends sites

that wish to modify the standard questionnaire a print-ready

copy of their questionnaire and scannable answer sheets.

Sites can modify the standard questionnaire within certain

parameters: 1) two thirds of the questions from the standard

YRBSS questionnaire must remain unchanged; 2) additional

questions are limited to eight mutually exclusive response

options; and 3) skip patterns, grid formats, and fill-in-the

blank formats cannot be used. Furthermore, sites that modify

the standard YRBSS questionnaire and use the scannable

answer sheets must retain the height and weight questions

as questions six and seven and cannot have more than 99

questions. This numerical limit is set so the questionnaire can

be completed during a single class period by all students, even

those who might read slowly.

For sites that want to modify the standard questionnaire, CDC

also provides a list of optional questions for consideration. This

list has been available to sites since 1999 and is updated when the

standard YRBSS questionnaire is updated. It includes questions on

the current version of the national YRBS questionnaire; questions

that have been included in a previous national, state, territorial,

tribal, or large urban school district YRBS questionnaire; and

questions designed to address topics of key interest to CDC or

the sites. By using these optional questions, sites can obtain data

comparable to those from the national YRBS or from other sites

that use these questions and be assured they are adding questions

that already have been reviewed and approved by CDC. A site also

can choose to develop its own questions if none of the optional

questions addresses a topic that the site wants to measure. CDC

reviews site-developed questions to ensure that their complexity,

reading level, and formatting are appropriate for a YRBS. In 2011,

a total of 38 states, five territories, 16 large urban school districts,

and three tribes modified the standard questionnaire.

Questionnaire Reliability and Validity

CDC has conducted two test-retest reliability studie

national YRBS questionnaire, one in 1992 and one in

In the first study, the 1991 version of the questionnai

administered to a convenience sample of 1,679 stude

grades 7–12. The questionnaire was administered

occasions, 14 days apart (22). Approximately three fo

of the questions were rated as having a substantial o

reliability (kappa = 61%–100%), and no statistically s

differences were observed between the prevalence e

for the first and second times that the questionn

administered. The responses of students in grade 7 w

consistent than those of students in grades 9–12, indi

that the questionnaire is best suited for students in th

In the second study, the 1999 questionnaire was ad

to a convenience sample of 4,619 high school st

The questionnaire was administered on two occas

approximately 2 weeks apart (23). Approximately

five questions (22%) had significantly different pr

estimates for the first and second times that the ques

was administered. Ten questions (14%) had both

<61% and significantly different time-1 and time-2 pr

estimates, indicating that the reliability of these ques

questionable (23). These problematic questions were

or deleted from later versions of the questionnaire.
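
The kappa values cited above measure agreement between the two administrations after correcting for agreement expected by chance. The report does not give the formula, so the sketch below assumes the standard Cohen's kappa for a single question; the function name and the ten paired responses are hypothetical.

```python
from collections import Counter


def cohen_kappa(time1, time2):
    """Cohen's kappa for paired categorical responses from two administrations."""
    if len(time1) != len(time2) or not time1:
        raise ValueError("need two non-empty response lists of equal length")
    n = len(time1)

    # Observed agreement: share of students giving the same answer both times.
    observed = sum(a == b for a, b in zip(time1, time2)) / n

    # Chance agreement: product of the marginal proportions, summed over categories.
    counts1, counts2 = Counter(time1), Counter(time2)
    expected = sum(counts1[c] * counts2[c] for c in counts1) / n ** 2

    if expected == 1.0:
        return 1.0  # every response falls in one category at both times
    return (observed - expected) / (1 - expected)


# Hypothetical answers from 10 students to one yes/no question.
first = ["yes"] * 4 + ["no"] * 6
second = ["yes", "yes", "yes", "no"] + ["no"] * 5 + ["yes"]
kappa = cohen_kappa(first, second)
print(f"kappa = {kappa:.1%}")
```

In this made-up example, 8 of 10 students answer consistently, yet kappa falls below the 61% threshold used in the report, because much of that raw agreement would be expected by chance.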

No study has been conducted to assess the validity

self-reported behaviors that are included on the Y

questionnaire. However, in 2003, CDC reviewed e

empiric literature to assess cognitive and situational

might affect the validity of adolescent self-reporting o

measured by the YRBSS questionnaire (24). In this re

CDC determined that, although self-reports of these t

behaviors are affected by both cognitive and situation

these factors do not threaten the validity of self-repor

type of behavior equally. In addition, each type of beh

differs in the extent to which its self-report can be va

an objective measure. For example, reports of tobacc

influenced by both cognitive and situational factors a

be validated by biochemical measures (e.g., cotinine)

of sexual behavior also can be influenced by both cog

and situational factors, but no standard exists to valid

behavior. In contrast, reports of physical activity are i

substantially by cognitive factors and to a lesser

situational ones. Such reports can be validated by me

electronic monitors (e.g., heart rate monitors). Under

the differences in factors that compromise the validit

reporting of different types of behavior can assist pol

in interpreting data and researchers in designing mea

do not compromise validity.

In 2000, CDC conducted a study to assess the validity of

the two YRBS questions on self-reported height and weight

(25). In that study, 2,965 high school students completed

the 1999 version of the YRBSS questionnaire on two

occasions approximately 2 weeks apart. After completing

the questionnaire, the students were weighed and had their

height measured. Self-reported height, weight, and BMI

calculated from these values were substantially reliable, but on

average, students in the study overreported their height by 2.7

inches and underreported their weight by 3.5 pounds, which

indicates that YRBSS probably underestimates the prevalence

of overweight and obesity in adolescent populations.
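
A short worked example shows why these two reporting errors push BMI downward. It is a sketch only: it applies the average biases reported above to a single hypothetical student and uses the standard BMI formula for pounds and inches (703 × weight / height²). It does not use the age- and sex-specific percentiles by which adolescent overweight and obesity are classified.

```python
def bmi(weight_lb, height_in):
    """Body mass index from pounds and inches (standard 703 conversion factor)."""
    return 703.0 * weight_lb / height_in ** 2


HEIGHT_OVERREPORT_IN = 2.7   # average overreport from the study
WEIGHT_UNDERREPORT_LB = 3.5  # average underreport from the study

# Hypothetical student; these measured values are not from the report.
measured_height_in, measured_weight_lb = 64.0, 150.0

measured_bmi = bmi(measured_weight_lb, measured_height_in)
reported_bmi = bmi(
    measured_weight_lb - WEIGHT_UNDERREPORT_LB,
    measured_height_in + HEIGHT_OVERREPORT_IN,
)

print(f"BMI from measured values:      {measured_bmi:.1f}")
print(f"BMI from self-reported values: {reported_bmi:.1f}")
```

Because height enters the formula squared, the height overreport accounts for most of the drop; for this student the self-reported BMI is more than two units lower than the measured one.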

Operational Procedures

The national YRBS is conducted during February–May of

each odd-numbered year. All except a few sites also conduct

their survey during this period; certain sites conduct their

YRBS during the fall of odd-numbered years or during

even-numbered years. Separate samples and operational procedures

are used in the national survey and in the state, territorial,

tribal, and large urban school district surveys. The national

sample is not an aggregation of the state and large urban

school district surveys, and state or large urban school district

estimates cannot be obtained from the national survey.

In certain instances, a school is selected as part of the national

sample as well as a state or large urban school district sample.

Similarly, a school might be selected as part of both a state and a

large urban school district sample or a state and a tribal sample.

When a school is selected as part of two or more samples, the

field work is conducted only once to minimize the burden on

the school and eliminate duplication of efforts. The school’s

data then are incorporated into both datasets during data

processing. The coordination of these overlapping samples

is critical to the successful operation of YRBSS, and weekly

meetings are required to ensure that overlapping schools are

identified, responsibilities for recruitment and data collection

are documented, and methods for sharing data are agreed upon.
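
Identifying overlapping schools amounts to finding school identifiers that appear in more than one sample list. The report does not describe how this is done in practice; the sketch below is one possible approach, with hypothetical sample names and school IDs.

```python
from collections import defaultdict


def find_overlapping_schools(samples):
    """Map each school ID that appears in two or more samples to those sample names."""
    membership = defaultdict(set)
    for sample_name, school_ids in samples.items():
        for school_id in school_ids:
            membership[school_id].add(sample_name)
    return {
        school_id: sorted(names)
        for school_id, names in membership.items()
        if len(names) > 1
    }


# Hypothetical sample lists keyed by sample name.
samples = {
    "national": ["S001", "S014", "S027"],
    "state": ["S014", "S033", "S041"],
    "district": ["S014", "S041", "S050"],
}
overlaps = find_overlapping_schools(samples)
for school_id, names in sorted(overlaps.items()):
    print(school_id, "->", ", ".join(names))
```

Each school in the result would be surveyed once, with its data then incorporated into every dataset listed for it.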

National Survey

Since 1990, the national school-based YRBS has been

conducted under contract with ICF Macro, Inc., an ICF

International Company. With CDC oversight, the contractor

is responsible for sample design and sample selection. After the

schools have been selected, the contractor also is responsible

for obtaining the appropriate state-, district-, and

school-level clearances to conduct the survey in those schools. The

contractor works with sampled schools to select classes,

schedule data collection, and obtain parental permission. In

addition, the contractor hires and trains data coll

follow a common protocol to administer the questionn

in the schools, coordinates data collection, weights th

and prepares the data for analysis.

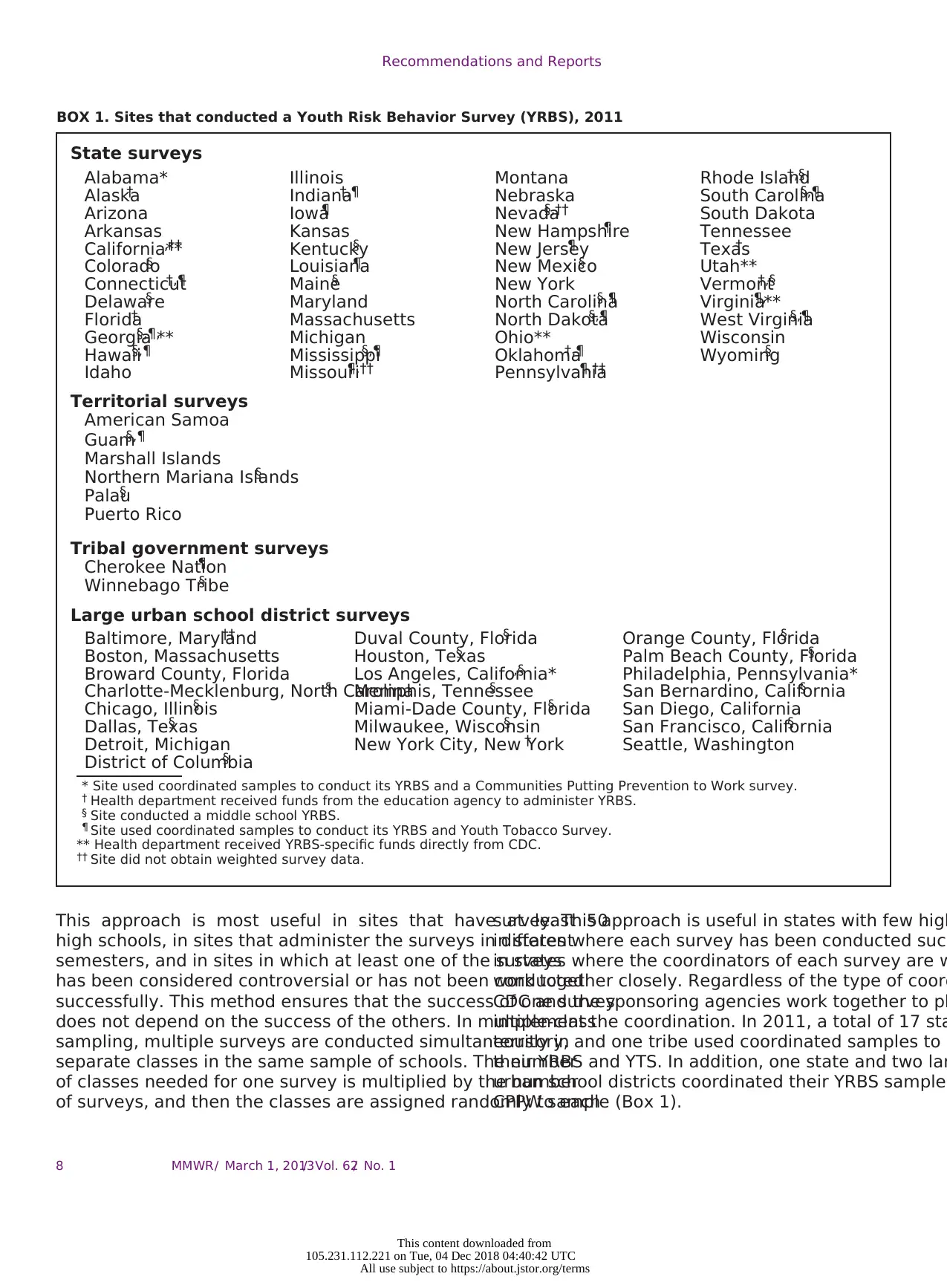

State, Territorial, Tribal, and Large Urban School District Surveys

Before 2003, CDC funded state and local education

for HIV prevention or coordinated school health progr

sites could use a portion of these cooperative agreem

to conduct a YRBS. Since the 2003 cycle, separate co

agreement funds have been made available to sites t

a survey, and since 2008, both state education and st

agencies have been eligible to apply for these fu

state must determine which agency will take respons

conducting its survey. In 2011, five state health depa

directly received separate YRBSS cooperative agreem

and health departments in an additional eight states

urban school district received funds from the educatio

to lead administration of their survey (Box 1). The rem

surveys were conducted by education agencies. Since

governments also have been eligible to apply for fund

a YRBS. Certain state and local education agencies co

YRBS with the assistance of survey contractors. In 20

of 24 state education agencies and five local educatio

hired contractors to assist with survey administration

State, territorial, and local agencies and tribal gover

funded by CDC to conduct a YRBS do so among samp

of high school students. In addition, certain sites

a separate survey among middle school students by u

modified YRBSS questionnaire designed specifically fo

reading and comprehension skills of students in this a

In 2011, a total of 16 states, three territories, one trib

large urban school districts conducted a middle schoo

(Box 1). In addition, in 2011, one state (Alaska)

large urban school districts (Memphis and San Bernar

conducted a YRBS among alternative school students

Certain states coordinate their YRBS sample with sa

for other surveys (e.g., the Youth Tobacco Survey

(http://www.cdc.gov/tobacco/data_statistics/surveys/y

htm) to reduce the burden on schools and students a

save resources. States use one of two methods of coo

sampling: multiple-school sampling and multiple-cl

sampling. In multiple-school sampling, the number of

needed for one survey is multiplied by the number of

being coordinated. This method produces nonoverl

samples of schools. The separate samples can be use

the same or separate semesters, and schools can be

that they will be asked to participate in only one
