Advanced Data Analytics (563.784): Feature Selection Techniques

Added on 2022/12/19


FEATURE SELECTION TECHNIQUES


Abstract

The research examines feature selection in detail. It describes the various feature selection techniques, outlines the importance and drawbacks of each method, and shows how the techniques are applied. Many feature selection methods are broadly similar, so it was difficult to determine which techniques can be applied most efficiently for the best results. Because the methods are different ways of solving the same problem, the analysis discusses only some of the techniques in detail, explaining how they can be used and giving examples of their efficiency.


Table of Contents

Introduction
Literature review
1) Boruta
Advantages of Boruta Feature Selection
2) Variable Importance of Machine Learning Algorithms
3) Lasso regression
Advantages of lasso regression
4) Stepwise Forward and Backward Selection
5) Relative Importance from Linear Regression
Importance of linear regression
Disadvantages of linear regression
6) Recursive Feature Elimination (RFE)
Importance of recursive feature elimination
7) Genetic Algorithm


Importance of Genetic Algorithm
8) Simulated Annealing
Advantages of Simulated Annealing
9) Information Value and Weights of Evidence
10) DALEX Package
Importance of Dalex Package
Disadvantages of Dalex Package
Result Analysis
1) Boruta
2) Variable Importance of Machine Learning Algorithms
3) Lasso regression
4) DALEX Package
Conclusion
Referencing


Introduction


In statistical learning, feature selection (also known as attribute selection, variable subset selection or variable selection) is the process of selecting a subset of relevant features for use in building a model (B Agarwal and N Mittal, 2013).

According to S Beniwal and J Arora (2012), feature selection is commonly used to remove irrelevant, redundant and unnecessary features from a data set, that is, features that do not help a model achieve high accuracy.

Feature selection techniques are useful in several ways:

i) They shorten the time needed to train a model.

ii) They simplify models, making the results easier for users and researchers to interpret.

iii) They help avoid the errors that arise when organizing and analysing data with thousands of dimensions (the curse of dimensionality) (S Beniwal and J Arora, 2012).

The main reason for using feature selection is that the acquired data often contains unwanted and unnecessary information that can be removed without the data set losing meaning (B Agarwal and N Mittal, 2013). More than ten feature selection techniques can be used in the analysis of a data set. These include Boruta, Information Value and Weights of Evidence, Variable Importance from Machine Learning Algorithms, Stepwise Forward and Backward Selection, the DALEX package, Linear Regression, Recursive Feature Elimination, Genetic Algorithm and Simulated Annealing (B Khagi, GR Kwon, and R Lama, 2019).

Literature review

This section discusses the various feature selection techniques in detail. For each one it covers advantages, disadvantages, where the technique can be applied, and its efficiency. Ten different feature selection techniques are addressed.

1) Boruta

Boruta is a feature selection technique that selects and ranks features using the random forest algorithm (X Liao, M Khandelwal, H Yang, and M Koopialipoor, 2019).

Advantages of Boruta Feature Selection

i) The technique helps determine whether each variable in a data set is important or not (Y Mejova and P Srinivasan, 2011).

ii) The method helps identify which variables are statistically significant (Y Mejova and P Srinivasan, 2011).
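The shadow-feature idea behind Boruta can be sketched in plain Python. This is a simplified illustration under stated assumptions, not the Boruta package itself. Absolute Pearson correlation stands in for random forest importance, so the real importances are computed only once. No multiple-comparison adjustment is applied. The function name `boruta_like`, its parameters `max_runs` and `p_value`, and the toy data are all hypothetical.

In each run, every feature is copied and shuffled to make a "shadow". A real feature scores a hit when its importance beats the best shadow. A two-sided binomial test on the hit counts then labels each feature confirmed, rejected or tentative.

```python
import math
import random


def abs_corr(x, y):
    """Absolute Pearson correlation; a stand-in for random forest importance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return 0.0
    return abs(cov) / math.sqrt(vx * vy)


def upper_tail(k, n):
    """P(X >= k) for X ~ Binomial(n, 0.5)."""
    return sum(math.comb(n, i) for i in range(k, n + 1)) / 2 ** n


def boruta_like(features, y, max_runs=20, p_value=0.01, seed=0):
    """Label each feature 'confirmed', 'rejected' or 'tentative'."""
    rng = random.Random(seed)
    real = {name: abs_corr(col, y) for name, col in features.items()}
    hits = dict.fromkeys(features, 0)
    for _ in range(max_runs):
        # Shadow features: shuffled copies that keep each column's values
        # but break any link with y.
        shadow_max = 0.0
        for col in features.values():
            shadow = col[:]
            rng.shuffle(shadow)
            shadow_max = max(shadow_max, abs_corr(shadow, y))
        for name, imp in real.items():
            if imp > shadow_max:
                hits[name] += 1
    decisions = {}
    for name, h in hits.items():
        if upper_tail(h, max_runs) < p_value:  # beats the shadows too often to be chance
            decisions[name] = "confirmed"
        elif upper_tail(max_runs - h, max_runs) < p_value:  # i.e. P(X <= h) is tiny
            decisions[name] = "rejected"
        else:
            decisions[name] = "tentative"
    return decisions


# Toy data: y depends on x1 only; x2 is an alternating pattern unrelated to y.
n = 200
x1 = [float(i) for i in range(n)]
x2 = [(-1.0) ** i for i in range(n)]
y = [2.0 * i + 1.0 for i in range(n)]
result = boruta_like({"x1": x1, "x2": x2}, y)
print(result)
```

The real package also recomputes importances in every run, because a random forest is itself random. It applies its statistical test as the runs progress rather than once at the end.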


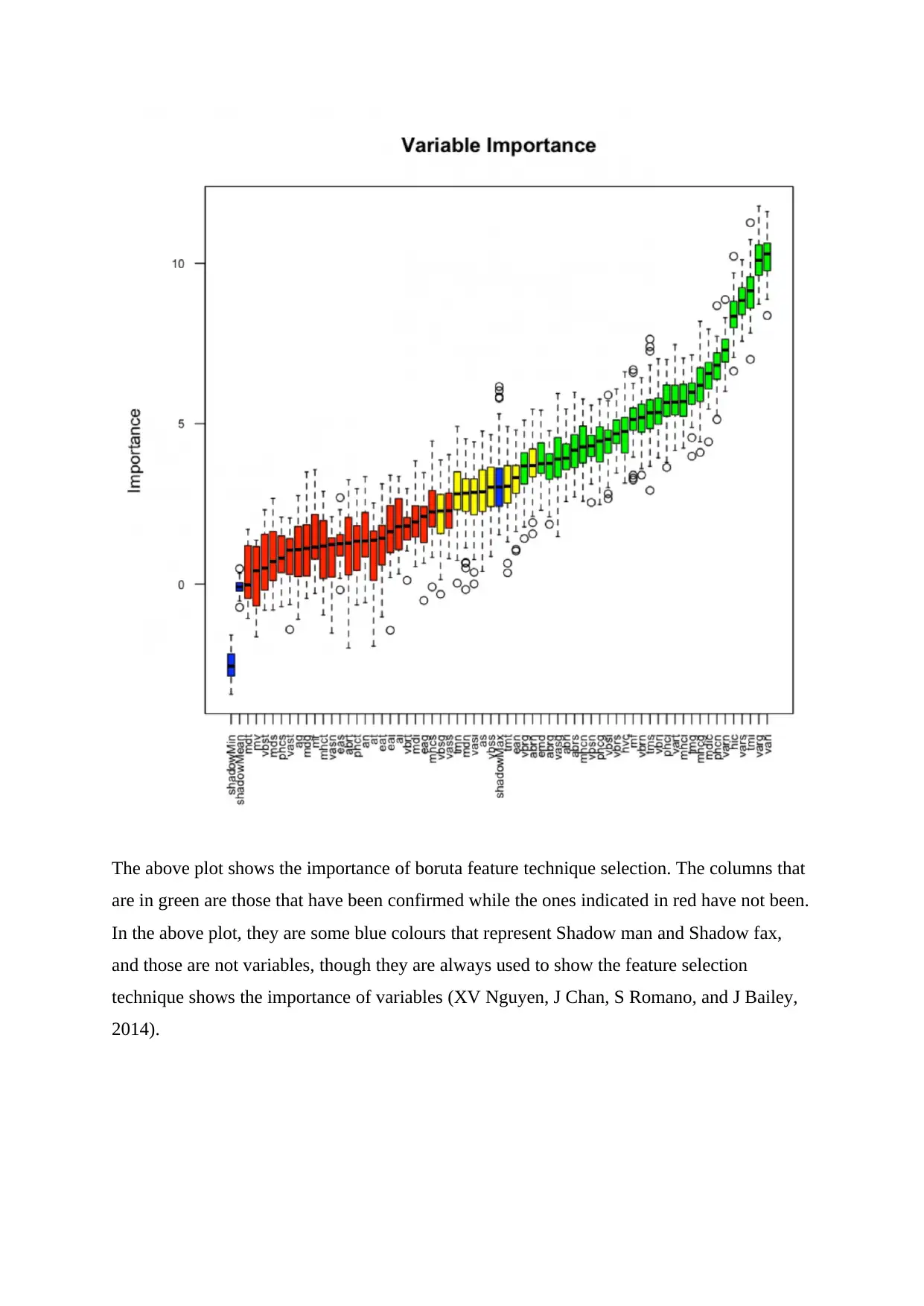

The plot above shows the variable importance computed by Boruta. Columns in green are variables that have been confirmed as important, while those in red have been rejected. The blue columns, such as shadowMax, summarize the shadow attributes. They are not real variables, but the technique uses them as a reference when deciding whether a variable is important (XV Nguyen, J Chan, S Romano, and J Bailey, 2014).


2) Machine Learning Algorithms

This approach treats the variables that a machine learning (ML) algorithm uses most frequently as the important ones (S Dalai, B Chatterjee, D Dey, & S Chakravorti, 2012).
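One way to see which variables a tree-based algorithm uses frequently is to grow a small regression tree and count how often each variable is chosen for a split. The sketch below is a hypothetical, simplified illustration: plain Python, greedy splits chosen by squared-error reduction, and a fixed depth. It is not the importance measure of any particular package; many of those weight each split by the improvement it brings rather than simply counting splits.

```python
def sse(values):
    """Sum of squared errors around the mean."""
    if not values:
        return 0.0
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values)


def best_split(rows, y):
    """Return (gain, feature_index, threshold) of the best split, or None."""
    base, best = sse(y), None
    for j in range(len(rows[0])):
        for t in sorted({r[j] for r in rows}):
            left = [yi for r, yi in zip(rows, y) if r[j] <= t]
            right = [yi for r, yi in zip(rows, y) if r[j] > t]
            if not left or not right:
                continue
            gain = base - sse(left) - sse(right)
            if best is None or gain > best[0]:
                best = (gain, j, t)
    return best


def count_splits(rows, y, depth, counts, min_gain=1e-9):
    """Grow a regression tree to `depth` and count the splits made on each feature."""
    if depth == 0 or len(y) < 2:
        return
    split = best_split(rows, y)
    if split is None or split[0] <= min_gain:
        return
    _, j, t = split
    counts[j] += 1
    left = [(r, yi) for r, yi in zip(rows, y) if r[j] <= t]
    right = [(r, yi) for r, yi in zip(rows, y) if r[j] > t]
    for part in (left, right):
        count_splits([r for r, _ in part], [yi for _, yi in part], depth - 1, counts, min_gain)


# Toy data: y is a step function of feature 0; feature 1 is unrelated to y.
rows = [(i, (i * 7) % 10) for i in range(40)]
y = [5.0 * (i // 10) for i in range(40)]
counts = [0, 0]
count_splits(rows, y, depth=3, counts=counts)
print(counts)
```

Every split here lands on feature 0, so it would be reported as the important variable.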

3) Lasso regression

The method applies shrinkage, a technique in which estimates are shrunk towards a central point such as the mean or zero (M Mafarja, I Aljarah, AA Heidari, and AI Hammouri, 2018). In lasso regression, the coefficients are shrunk towards zero. The method is well suited to models that show high levels of multicollinearity.

Advantages of lasso regression

i) The method regularizes the model by pushing some coefficients to exactly zero. This removes the corresponding variables and helps the model perform well on new data (M Mafarja, I Aljarah, AA Heidari, and AI Hammouri, 2018).
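The shrinkage described above can be illustrated with a minimal coordinate-descent sketch of the lasso. It assumes the objective (1/(2n))·||y - Xb||² + λ·||b||₁, with centred columns and no intercept. The function names, the toy data and λ = 1.0 are illustrative choices. The soft-thresholding step is what sets weak coefficients to exactly zero.

```python
def soft_threshold(rho, lam):
    """Shrink rho towards zero by lam; values inside [-lam, lam] become exactly 0."""
    if rho > lam:
        return rho - lam
    if rho < -lam:
        return rho + lam
    return 0.0


def lasso_cd(X, y, lam, n_iter=100):
    """Coordinate descent for (1/(2n))*||y - X b||^2 + lam*||b||_1.

    X is a list of rows; columns are assumed centred, so no intercept is fitted.
    """
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    z = [sum(row[j] ** 2 for row in X) / n for j in range(p)]
    for _ in range(n_iter):
        for j in range(p):
            # Correlation of feature j with the residual that leaves feature j out.
            rho = 0.0
            for row, target in zip(X, y):
                partial = sum(row[k] * beta[k] for k in range(p) if k != j)
                rho += row[j] * (target - partial)
            beta[j] = soft_threshold(rho / n, lam) / z[j]
    return beta


# Toy data: y = 3*x1 + 0.2*x2, with x1 and x2 centred and orthogonal.
x1 = [-2.0, -1.0, 0.0, 1.0, 2.0]
x2 = [1.0, -2.0, 0.0, 2.0, -1.0]
X = [list(r) for r in zip(x1, x2)]
y = [3 * a + 0.2 * b for a, b in zip(x1, x2)]
beta = lasso_cd(X, y, lam=1.0)
print(beta)
```

Ordinary least squares would give coefficients of 3 and 0.2 on this data. The lasso shrinks the first coefficient to 2.5 and drops x2 from the model entirely.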

4) Information Value and Weights of Evidence

This technique can be used to measure how important a given variable is in explaining a binary response variable Y (P Kumbhar and M Mali, 2016). The method works well with classification models such as logistic regression, which are used to model binary variables (SCH Hoi, J Wang, P Zhao & R Jin, 2012).
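Weights of Evidence (WoE) and Information Value (IV) are usually computed per bin, or category, of the predictor. Below is a minimal sketch using one common convention:

- WoE = ln(share of non-events / share of events) for each bin.
- IV = the sum over bins of (share of non-events - share of events) × WoE.

Some sources flip the ratio. That changes the sign of WoE but not the IV. The function name and toy data are hypothetical, and the sketch assumes every bin contains at least one event and one non-event so that the logarithm is defined.

```python
import math
from collections import Counter


def woe_iv(bins, target):
    """Return ({bin: WoE}, IV) for a binned predictor and a 0/1 target."""
    events = Counter(b for b, t in zip(bins, target) if t == 1)
    non_events = Counter(b for b, t in zip(bins, target) if t == 0)
    total_events, total_non = sum(events.values()), sum(non_events.values())
    woe, iv = {}, 0.0
    for b in sorted(set(bins)):
        pct_event = events[b] / total_events
        pct_non = non_events[b] / total_non
        # Assumes both shares are non-zero; real code would smooth empty cells.
        woe[b] = math.log(pct_non / pct_event)
        iv += (pct_non - pct_event) * woe[b]
    return woe, iv


# Toy data: bin "A" holds mostly non-events, bin "B" mostly events.
bins = ["A"] * 50 + ["B"] * 50
target = [0] * 40 + [1] * 10 + [0] * 10 + [1] * 40
woe, iv = woe_iv(bins, target)
print(woe, round(iv, 4))
```

A bin with the same share of events and non-events gets a WoE of zero and adds nothing to the IV. A higher IV therefore indicates a variable that separates the two classes better.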


5) Linear Regression

This method uses a linear approach to model the relationship between a dependent variable and one or more independent variables. The case with a single independent variable is called simple linear regression (DJ Dittman, TM Khoshgoftaar, and R Wald, 2010).
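For the single-variable case, the least-squares fit has a closed form:

- slope = Σ(x - x̄)(y - ȳ) / Σ(x - x̄)²
- intercept = ȳ - slope·x̄
- R² = 1 - SS_res / SS_tot, which measures how much of the variation in y the line explains.

A minimal plain-Python sketch with made-up data:

```python
def simple_linear_regression(x, y):
    """Least-squares fit of y = intercept + slope * x; returns (intercept, slope, r2)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    # Residual and total sums of squares give the coefficient of determination.
    ss_res = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    ss_tot = sum((b - my) ** 2 for b in y)
    return intercept, slope, 1 - ss_res / ss_tot


intercept, slope, r2 = simple_linear_regression([1, 2, 3, 4, 5], [2.9, 5.1, 7.0, 9.2, 10.8])
print(intercept, slope, r2)
```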

Importance of linear regression

i) Linear regression is simple: the estimation method is straightforward and the fitted model is easy to interpret (DJ Dittman, TM Khoshgoftaar and R Wald, 2010).

ii) Linear regression shows the relationship between the response variable and the covariates (DJ Dittman, TM Khoshgoftaar, and R Wald, 2010).

Disadvantages of linear regression

i) If there are more variables than samples, the model tends to fit the noise instead of the real relationship between the variables (E Rashedi, H Nezamabadi…-Pour, and S Saryazdi, 2013).

ii) Linear regression only captures relationships between the independent and dependent variables that are linear. It assumes that they are related by a straight line, which in some cases is badly wrong (E Rashedi, H Nezamabadi…-Pour, and S Saryazdi, 2013).

6) Recursive Feature Elimination (RFE)

This feature selection technique fits a model and repeatedly eliminates the weakest variable until the required number of features remains (L Silva, ML Koga, CE Cugnasca, & AHR Costa, 2013).


Importance of recursive feature elimination

i) The method can be applied to large data sets with many attributes to find the ones that are useful for predicting the target variable (L Silva, ML Koga, CE Cugnasca, & AHR Costa, 2013).

ii) The method eliminates the worst-performing variables, which can improve the performance of the model built on the variables that remain (L Silva, ML Koga, CE Cugnasca, & AHR Costa, 2013).
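The elimination loop can be sketched as follows, under simplifying assumptions:

- The model is ordinary least squares, solved from the normal equations.
- The features are centred, so no intercept is fitted.
- The features are on comparable scales, so the absolute coefficient serves as the importance score.

The helper names and toy data are hypothetical. Libraries that implement RFE typically let the user choose the model and the ranking criterion.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def ols(columns, y):
    """Least-squares coefficients from the normal equations (X^T X) b = X^T y."""
    A = [[sum(a * b for a, b in zip(ci, cj)) for cj in columns] for ci in columns]
    rhs = [sum(a * b for a, b in zip(ci, y)) for ci in columns]
    return solve(A, rhs)


def rfe(features, y, n_keep):
    """Refit, drop the feature with the smallest |coefficient|, and repeat."""
    names = list(features)
    while len(names) > n_keep:
        coefs = ols([features[n] for n in names], y)
        weakest = min(range(len(names)), key=lambda i: abs(coefs[i]))
        names.pop(weakest)
    return names


# Toy data: centred, mutually orthogonal columns; x3 has only a tiny effect on y.
features = {
    "x1": [-2.0, -1.0, 0.0, 1.0, 2.0],
    "x2": [1.0, -2.0, 0.0, 2.0, -1.0],
    "x3": [1.0, 0.0, -2.0, 0.0, 1.0],
}
y = [3 * a + b + 0.1 * c for a, b, c in zip(*features.values())]
print(rfe(features, y, n_keep=2))
```

The model is refitted after every removal because, with correlated features, the ranking of the remaining variables can change once a variable is dropped.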

7) Genetic Algorithm

This feature selection technique is used to solve both constrained and unconstrained optimization problems and is based on a process of natural selection (LT Vu, LT Vu, NT Nguyen, and PTT Do, 2019).

Importance of Genetic Algorithm

i) The technique applies its own rules (selection, crossover and mutation) to search for a good solution, often at a lower cost than other commonly used search methods (LT Vu, LT Vu, NT Nguyen, and PTT Do, 2019).
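Below is a minimal genetic-algorithm sketch for feature selection. Each candidate is a bit mask, where 1 means keep the feature. New candidates are produced by tournament selection, one-point crossover and bit-flip mutation, and the best mask is carried over unchanged (elitism).

The fitness function is a hypothetical stand-in made of invented per-feature usefulness scores minus a cost per selected feature. In practice it would be a model's validation score. The parameter values are illustrative.

```python
import random

# Hypothetical per-feature usefulness scores and per-feature cost; in practice
# the fitness would come from a model's validation performance.
RELEVANCE = [0.9, 0.05, 0.6, 0.02]
PENALTY = 0.1


def fitness(mask):
    """Score of a feature subset encoded as a 0/1 mask."""
    return sum(r for r, m in zip(RELEVANCE, mask) if m) - PENALTY * sum(mask)


def tournament(pop, rng, k=3):
    """Pick the fittest of k randomly chosen candidates."""
    return max(rng.sample(pop, k), key=fitness)


def genetic_select(n_features, pop_size=40, generations=100, mutation_rate=0.2, seed=1):
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_features)] for _ in range(pop_size)]
    best = max(pop, key=fitness)[:]
    for _ in range(generations):
        children = [best[:]]  # elitism: carry the best mask forward unchanged
        while len(children) < pop_size:
            p1, p2 = tournament(pop, rng), tournament(pop, rng)
            cut = rng.randint(1, n_features - 1)  # one-point crossover
            child = p1[:cut] + p2[cut:]
            # Bit-flip mutation: each gene flips with probability mutation_rate.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            children.append(child)
        pop = children
        candidate = max(pop, key=fitness)
        if fitness(candidate) > fitness(best):
            best = candidate[:]
    return best


best = genetic_select(len(RELEVANCE))
print(best, round(fitness(best), 2))
```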

8) DALEX Package

DALEX is a powerful package because it collects a range of methods for explaining the models produced by ML algorithms (CCO Ramos, AN de Souza, and AX Falcao, 2011).

Importance of Dalex Package

i) The DALEX package provides several tools that help a user understand the relationship between a model's output and its input variables.


ii) It can explain models built by stacking, bagging and boosting, which typically achieve high levels of performance (CCO Ramos, AN de Souza, and AX Falcao, 2011).

Disadvantages of Dalex Package

i) Analysing large volumes of data with the DALEX package is usually slow (CCO Ramos, AN de Souza, and AX Falcao, 2011).

ii) DALEX's implementations of its various algorithms are generally not parallelized.
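DALEX's variable-importance explanations are permutation based: a variable matters if shuffling its values makes the model's predictions worse. The idea can be sketched in plain Python for any model that maps a row to a prediction. This is not the DALEX API. The function names, the stand-in "trained" model and the data are hypothetical, and mean squared error is assumed as the loss.

```python
import random


def mse(model, rows, y):
    """Mean squared error of the model's predictions."""
    return sum((model(r) - t) ** 2 for r, t in zip(rows, y)) / len(y)


def permutation_importance(model, rows, y, n_repeats=10, seed=0):
    """Average increase in MSE after shuffling each column in turn."""
    rng = random.Random(seed)
    baseline = mse(model, rows, y)
    scores = []
    for j in range(len(rows[0])):
        total = 0.0
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            total += mse(model, shuffled, y) - baseline
        scores.append(total / n_repeats)
    return scores


def model(row):
    # Hypothetical stand-in for a trained black-box model; it ignores row[2].
    return 3.0 * row[0] + 0.5 * row[1]


rows = [(float(i), float((i * 7) % 10), float((i * 3) % 5)) for i in range(50)]
y = [model(r) for r in rows]
scores = permutation_importance(model, rows, y)
print([round(s, 2) for s in scores])
```

Every column has to be reshuffled and re-predicted several times. This repeated prediction is one reason such explanations become slow on large data sets.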

9) Stepwise regression

This feature selection technique is used when the response variable Y is numeric. The method searches for the most effective regression model by adding and dropping variables until it reaches the model with the lowest Akaike Information Criterion (AIC) (Kerzner and Harold, 2013).
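Forward stepwise selection by AIC can be sketched as follows. The sketch assumes a linear model with an intercept and uses the Gaussian form AIC = n·ln(RSS/n) + 2k, up to an additive constant, where k is the number of coefficients. Only the forward direction is shown, and the helper names and toy data are hypothetical.

At each step, the variable whose addition gives the lowest AIC is added. The search stops when no addition lowers the AIC.

```python
import math


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def rss(columns, y):
    """Residual sum of squares of the least-squares fit of y on `columns`."""
    A = [[sum(a * b for a, b in zip(ci, cj)) for cj in columns] for ci in columns]
    rhs = [sum(a * b for a, b in zip(ci, y)) for ci in columns]
    coef = solve(A, rhs)
    fitted = [sum(c * col[i] for c, col in zip(coef, columns)) for i in range(len(y))]
    return sum((t - f) ** 2 for t, f in zip(y, fitted))


def aic(columns, y):
    n = len(y)
    return n * math.log(rss(columns, y) / n) + 2 * len(columns)


def forward_stepwise(features, y):
    """Add the variable that lowers AIC most; stop when no addition helps."""
    intercept = [1.0] * len(y)
    chosen, remaining = [], list(features)
    current = aic([intercept], y)
    while remaining:
        base = [intercept] + [features[c] for c in chosen]
        trials = {name: aic(base + [features[name]], y) for name in remaining}
        best = min(trials, key=trials.get)
        if trials[best] >= current:
            break
        chosen.append(best)
        remaining.remove(best)
        current = trials[best]
    return chosen


# Toy data: y = 10 + 2*x1 + small noise; x2 and the noise are orthogonal to x1.
x1 = [-3.0, -1.0, 1.0, 3.0] * 2
x2 = [1.0, -1.0, -1.0, 1.0] * 2
noise = [-0.1, 0.3, -0.3, 0.1] * 2
y = [10 + 2 * a + e for a, e in zip(x1, noise)]
features = {"x1": x1, "x2": x2}
print(forward_stepwise(features, y))
```

Adding x2 does not reduce the residual sum of squares here. Its extra coefficient only adds 2 to the AIC, so the search stops after x1.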

10) Simulated Annealing

Simulated annealing is a global search algorithm that allows a suboptimal solution to be accepted in the hope that a better solution will be found later (Y Saeys, T Abeel, and Y Van de Peer, 2008). The technique works by making small random changes to the current solution and checking whether performance has improved (Meredith, Jack R., Samuel J. Mantel Jr, and Scott M, 2017). A change is accepted if it improves the solution. If it does not, it can still be accepted when the difference in performance meets a set acceptance criterion (C Fernandez-Lozano, JA Seoane, M Gestal & TR Gaunt, 2015).
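The accept/reject loop described above can be sketched for feature selection as follows:

- A candidate is a bit mask.
- Each small random change flips one feature in or out.
- Worse candidates are accepted with probability exp(Δ/T), the Metropolis rule, which is one common choice of acceptance criterion.
- The temperature T is lowered gradually.

The scoring function and its numbers are hypothetical stand-ins for a model's validation performance. The cooling schedule and other parameter values are illustrative.

```python
import math
import random

# Hypothetical scoring: invented per-feature usefulness minus a cost per feature.
RELEVANCE = [0.9, 0.05, 0.6, 0.02]
PENALTY = 0.1


def score(mask):
    return sum(r for r, m in zip(RELEVANCE, mask) if m) - PENALTY * sum(mask)


def simulated_annealing(n_features, iterations=500, t_start=1.0, cooling=0.99, seed=2):
    rng = random.Random(seed)
    current = [rng.randint(0, 1) for _ in range(n_features)]
    best, temp = current[:], t_start
    for _ in range(iterations):
        candidate = current[:]
        j = rng.randrange(n_features)
        candidate[j] = 1 - candidate[j]  # small random change: toggle one feature
        delta = score(candidate) - score(current)
        # Always accept improvements; accept worse moves with probability exp(delta / T).
        if delta > 0 or rng.random() < math.exp(delta / temp):
            current = candidate
        if score(current) > score(best):
            best = current[:]
        temp *= cooling  # gradually lower the temperature
    return best


best = simulated_annealing(len(RELEVANCE))
print(best, round(score(best), 2))
```

Early on, while T is high, worse subsets are accepted fairly often, which lets the search move away from a poor starting point. As T falls, the search behaves more and more like a greedy search.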


Advantages of Simulated Annealing

i) The method allows the search to escape locally optimal solutions and move towards a globally optimal one (C Fernandez-Lozano, JA Seoane, M Gestal & TR Gaunt, 2015).

Result Analysis

This section discusses some of the feature selection techniques in practice. It shows how they can be applied to solve problems and how they can improve efficiency in different analyses.

1) Boruta

With this method, a user can adjust how strict the selection is by changing the p-value threshold, which defaults to 0.01. In Boruta, maxRuns is the maximum number of times the algorithm is run (JP Barddal, F Enembreck, HM Gomes, and A Bifet, 2019).
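A simplified view of why these two settings matter: under the null hypothesis that a variable is no better than its shadows, each run is a fair coin flip. A variable is then confirmed when its number of hits is improbably high at the chosen p-value.

This sketch ignores the multiple-comparison adjustment and the run-by-run testing that the Boruta package applies, and the function names are hypothetical. It shows that more runs let a variable with a moderate hit rate be confirmed.

```python
import math


def upper_tail(k, n):
    """P(X >= k) for X ~ Binomial(n, 0.5)."""
    return sum(math.comb(n, i) for i in range(k, n + 1)) / 2 ** n


def min_hits_to_confirm(max_runs, p_value=0.01):
    """Smallest number of hits whose upper-tail probability is below p_value."""
    for k in range(max_runs + 1):
        if upper_tail(k, max_runs) < p_value:
            return k
    return None  # too few runs to ever confirm a variable at this p-value


for runs in (5, 20, 100):
    print(runs, min_hits_to_confirm(runs))
```

Five runs are too few to confirm anything at p = 0.01. Twenty runs require 16 hits (an 80% hit rate), while a hundred runs accept a noticeably lower hit rate. Lowering `p_value` raises the number of hits required, which makes the selection stricter.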

2) Machine Learning Algorithms

With this technique, the variables that are most important in one model can be of little use in a regression model. For efficiency, variable importance should therefore be checked with the algorithm that will actually be used (H Faris, MA Hassonah, AZ Ala'M, and S Mirjalili, 2018).

To find the importance of the variables with an ML algorithm, the following steps are taken:

1) Train a model on the variables of interest.
