Advanced Data Mining: Cardiac Arrhythmia Classification Project

Added on 2023/06/10


Data Mining

Author:

Abstract

The spotlight of this research is on data mining. The aim of this project is to apply the methods learnt in the course module to carry out a data mining study. The research distinguishes between the presence and absence of cardiac arrhythmia and classifies each case into one of 16 groups. Its purpose is to reduce the differences between the cardiologists' classifications and those produced by an existing computer program.

The literature review in this report provides background on data mining methods relevant to this project. It also summarizes how those methods were used in previous studies and with what effect. The provided data set is then analysed using KNN, Naïve Bayes, SVM, Gradient Boosting, Model tree and Random Forest, and all of these methods are discussed. The methodology follows the CRISP-DM procedure, a well-proven methodology known for its robustness. The observed heartbeat classification accuracy across the classes is 77.3%. Future work is to measure heartbeat classification accuracy with an online detection method instead of the method used here, with savings in time and budget.

Keywords: KNN, SVM, CRISP-DM, Random Forest, Gradient Boosting, Model tree, Naïve Bayes, heartbeat, cardiac arrhythmia

1. Introduction

This research distinguishes between the presence and absence of cardiac arrhythmia and classifies each case into one of 16 groups. The research questions concern the accuracy of heartbeat classification. The methodology used for this project is the CRISP-DM procedure, a well-proven methodology known for its robustness. The provided data set is analysed using KNN, Naïve Bayes, SVM, Gradient Boosting, Model tree and Random Forest.

The database comprises 279 attributes: 206 are linear-valued and the rest are nominal. Class 01 denotes 'normal'. Classes 02 to 15 denote different classes of arrhythmia, and class 16 denotes the remaining unclassified records. A computer program currently makes all these classifications, but its results differ in some cases from the cardiologists' classifications. This project takes the cardiologists' classification as the gold standard and aims to reduce that difference with the help of machine learning tools.
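The class scheme above can be expressed as a small labelling function. This is a minimal sketch and the function names are hypothetical. It assumes class 16 (unclassified) is kept out of the binary presence/absence task, which the text does not state.

```python
from collections import Counter
from typing import Optional


def class_group(code: int) -> str:
    """Map a class code (1-16) to the group described in the text."""
    if code == 1:
        return "normal"
    if 2 <= code <= 15:
        return "arrhythmia"
    if code == 16:
        return "unclassified"
    raise ValueError(f"unexpected class code: {code}")


def arrhythmia_present(code: int) -> Optional[bool]:
    """Binary presence/absence target.

    Returns False for class 1 and True for classes 2-15. Class 16 returns None
    (assumption: unclassified records are left out of the binary task).
    """
    group = class_group(code)
    if group == "unclassified":
        return None
    return group == "arrhythmia"


# Toy labels for illustration only, not taken from the data set.
labels = [1, 1, 2, 10, 16, 1, 5]
print(Counter(class_group(c) for c in labels))
print([arrhythmia_present(c) for c in labels])
```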

Aim

As a whole, this project aims to apply the methods learnt in the course module to carry out a significant data mining study, using the provided data set as its source.

Objective

The objective of this project is to distinguish between the presence and absence of cardiac arrhythmia and to classify each case into one of the 16 groups. Doing so should reduce the differences between the cardiologists' and the program's classifications.

2. Literature Review

According to [1], several models are used to predict a project's failure risk, and Naïve Bayes is one of them. The Naïve Bayes model was used to create a confusion matrix. When the results were compared, they showed a different proportion from the population used to score the set, which indicated that the validation had to be corrected.

Naïve Bayes was selected mainly for its ability to handle missing data. This is useful for projects such as CSI, where data goes missing from time to time. The choice therefore brings a twofold benefit: missing information is handled, and a simple probabilistic classifier can be derived from Bayes' theorem. Naïve Bayes probability estimates are often inaccurate, and in certain cases poorly calibrated, yet its classification performance in organizational activities is good. According to [1], performance remains satisfactory because the estimated probabilities are closely related to the actual ones. This also allows large samples to be scored quickly and records with missing, interdependent elements to be handled indirectly.

As per [2], the KNN algorithm is one of the simplest machine learning algorithms. It assumes that objects close to each other share similar characteristics, so a new object is classified from the characteristics of its nearest neighbours. KNN usually deals with continuous attributes, but it can also work with discrete ones. For a discrete attribute, the difference between the values of two instances a2 and b2 is 1 if they differ and 0 otherwise.

The reported results give the sensitivity, specificity and accuracy of KNN for diagnosing patients with heart disease. K was varied from 1 to 13, and accuracy ranged from 94% to 97.4% across the K values. K = 7 achieved the highest accuracy and specificity (97.4% and 99% respectively). The paper shows that KNN is a widely used data mining method for classification problems, valued above all for its simplicity. It is also considered to have a relatively high convergence speed, which makes it a popular choice.

The major drawback of KNN classifiers is the large memory needed to store the complete sample. When the sample is large, the response time on a sequential computer is also large. KNN finds the closest neighbours of a given data point among all the available training data. In this paper, the algorithm stops if a label is found; otherwise the system classifier is applied. The proposed algorithm was used to recognize objects, and its results were compared with those of a single system classifier and of KNN.
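The rule for discrete attributes described in [2] can be folded into a single distance function. This is a minimal sketch under stated assumptions. Continuous attributes add their squared difference, discrete ones add 1 when the values differ, and the square root of the total is taken. The Euclidean-style combination and the `nominal` mask are assumptions, and continuous attributes are assumed to be scaled to comparable ranges first.

```python
import math
from typing import Sequence, Union

Value = Union[float, str]


def mixed_distance(a: Sequence[Value], b: Sequence[Value],
                   nominal: Sequence[bool]) -> float:
    """Distance between two instances with continuous and discrete attributes.

    Hypothetical helper. nominal[i] is True when attribute i is discrete.
    """
    if not (len(a) == len(b) == len(nominal)):
        raise ValueError("instances and nominal mask must have equal length")
    total = 0.0
    for x, y, is_nominal in zip(a, b, nominal):
        if is_nominal:
            total += 0.0 if x == y else 1.0  # 1 if the discrete values differ, else 0
        else:
            total += (float(x) - float(y)) ** 2  # squared difference for continuous values
    return math.sqrt(total)


print(mixed_distance([72.0, "male"], [80.0, "female"], [False, True]))
```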

According to [3], the authors analyse how effective SVM is at classifying a medical data set (heart disease classification). They also compare the performance of the Naïve Bayes classifier, an RBF network and an SVM classifier. Their observations show that SVM reaches an effective level of classification accuracy. The authors used the WEKA environment to obtain the results, and for the medical data set in particular, the SVM classifier proved robust and effective.

The authors in [4] concluded that bagging works well for most types of decision tree but needs some tuning. Neural nets and SVMs, by contrast, need careful parameter selection. Random Forest, boosting, SVM and other methods showed significant performance on the STATLOG data set.

According to [5], model trees are a type of decision tree with linear regression functions at the leaves. They are considered a successful method for predicting continuous numeric values.

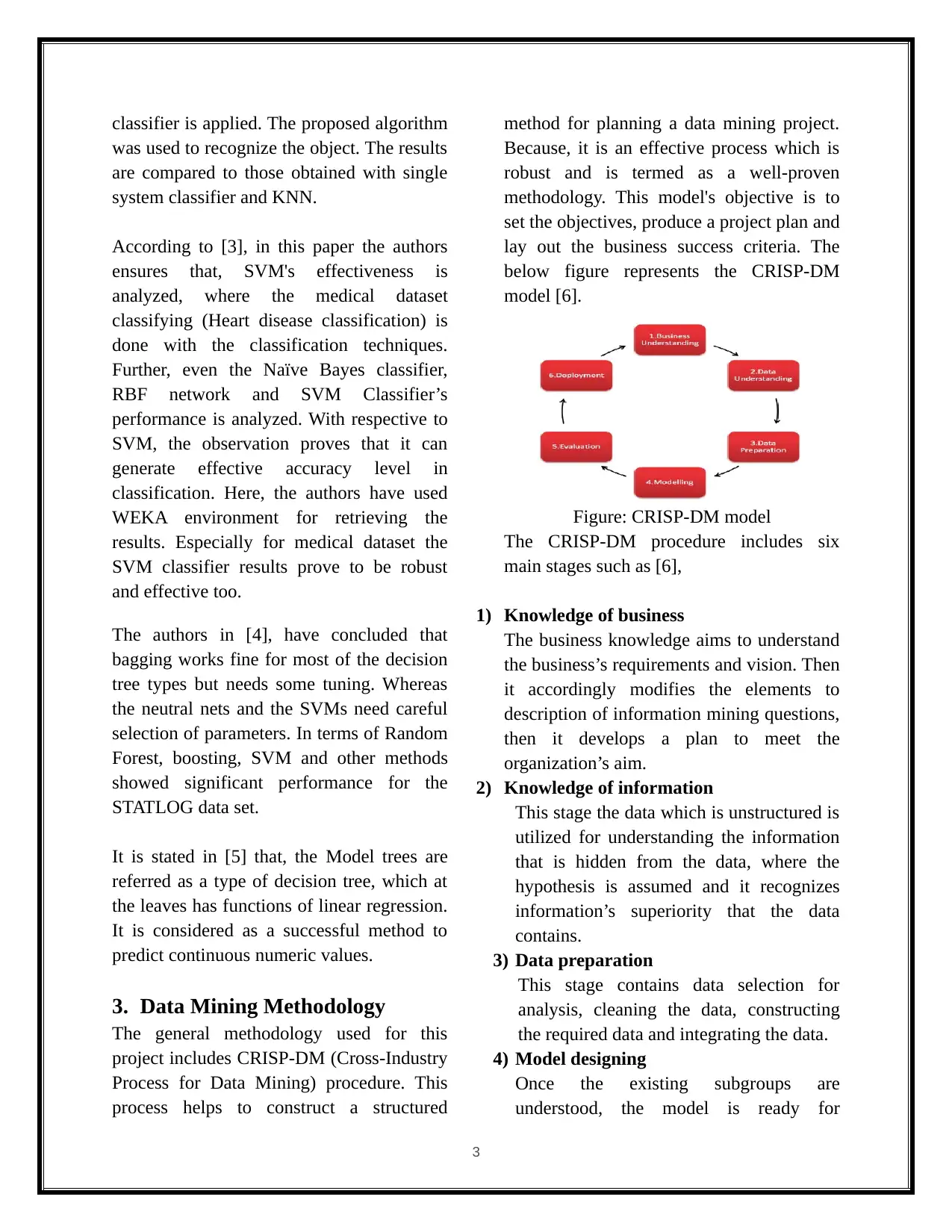

3. Data Mining Methodology

The general methodology used for this project is the CRISP-DM (Cross-Industry Standard Process for Data Mining) procedure. This process provides a structured method for planning a data mining project, and it was chosen because it is effective, robust and well proven. It begins by setting the objectives, producing a project plan and laying out the business success criteria. The figure below represents the CRISP-DM model [6].

Figure: CRISP-DM model

The CRISP-DM procedure includes six main stages [6]:

1) Business understanding

This stage aims to understand the business's requirements and vision. It then translates them into a definition of the data mining problem and develops a plan to meet the organization's aims.

2) Data understanding

In this stage the raw data is explored to discover the information hidden in it. Hypotheses are formed, and the quality of the data is assessed.

3) Data preparation

This stage covers selecting the data for analysis, cleaning it, constructing the required data and integrating it.

4) Modeling

Once the existing subgroups are understood, the model is ready for construction. This involves table formation, reporting, understanding the characteristics of the data, and data preprocessing.

5) Evaluation

This stage assesses how far the business objectives have been met and determines whether there is any business reason why the model is deficient. The results of the data mining are evaluated in this phase, and the challenges and future directions are identified.

6) Deployment

This stage summarizes the deployment strategy, its important steps and how they work, for instance the monitoring and maintenance plans. Finally, a final report is written based on the deployment plan, documenting the project's experiences and a summary.
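To illustrate the data preparation stage on a data set like this one, the sketch below does three things. It parses numeric rows in which '?' marks a missing value (an assumption about the file format), fills each gap with its column median, and drops constant columns. Median imputation and the function names are hypothetical choices, not the procedure reported in this project.

```python
import csv
import io
import statistics
from typing import List, Optional, Tuple

Row = List[Optional[float]]


def parse_rows(text: str) -> List[Row]:
    """Parse comma-separated numeric rows, treating '?' as a missing value."""
    rows = []
    for record in csv.reader(io.StringIO(text)):
        if not record:
            continue  # skip blank lines
        rows.append([None if field.strip() == "?" else float(field) for field in record])
    return rows


def impute_median(rows: List[Row]) -> List[List[float]]:
    """Replace each missing value with the median of its column's observed values."""
    n_cols = len(rows[0])
    medians = []
    for j in range(n_cols):
        observed = [r[j] for r in rows if r[j] is not None]
        medians.append(statistics.median(observed) if observed else 0.0)
    return [[medians[j] if r[j] is None else r[j] for j in range(n_cols)] for r in rows]


def drop_constant_columns(rows: List[List[float]]) -> Tuple[List[List[float]], List[int]]:
    """Drop columns with a single distinct value; also return the kept column indices."""
    keep = [j for j in range(len(rows[0])) if len({r[j] for r in rows}) > 1]
    return [[r[j] for j in keep] for r in rows], keep


rows = parse_rows("1,?,5\n3,2,5\n?,4,5\n")
print(drop_constant_columns(impute_median(rows)))
```

In practice the medians should be computed on the training split only, so that no information from the test set leaks into preparation.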

Naive Bayes

The Naive Bayes classification algorithm is a probabilistic classifier built on probability models with strong independence assumptions. It is named after Thomas Bayes. In reality, the independence assumptions often do not hold, which is why they are called naive. The probability models are derived with the help of Bayes' theorem. Depending on the nature of the probability model, the Naive Bayes algorithm can be trained in a supervised learning setting. The Naive Bayes model comprises a large cube with the following dimensions [7]:

1) The name of the input field.

2) The value of the input field for discrete fields, or its value range for continuous fields. The Naive Bayes algorithm divides continuous fields into discrete bins.

3) The value of the target field.

The model records how many times each target field value appears together with each input field value.
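The count cube described above can be sketched directly. This is a minimal illustration with hypothetical names. It assumes continuous fields are binned with fixed edges and uses Laplace smoothing (`alpha`), which the text does not mention. Missing inputs (`None`) are skipped when counting and when scoring, which is one simple way Naive Bayes can tolerate missing data, as noted in the literature review.

```python
import math
from collections import defaultdict
from typing import Hashable, List, Optional, Sequence


def to_bin(value: float, edges: Sequence[float]) -> int:
    """Index of the bin a continuous value falls into (edges sorted ascending)."""
    for i, edge in enumerate(edges):
        if value < edge:
            return i
    return len(edges)


class CountingNaiveBayes:
    """Naive Bayes over discrete (or pre-binned) input fields.

    counts[(field, value, target)] plays the role of the count cube: how often
    each target value occurs together with each input field value.
    """

    def __init__(self, alpha: float = 1.0):
        self.alpha = alpha                    # Laplace smoothing strength (assumption)
        self.counts = defaultdict(int)        # (field, value, target) -> count
        self.field_counts = defaultdict(int)  # (field, target) -> non-missing count
        self.class_counts = defaultdict(int)  # target -> count
        self.values = defaultdict(set)        # field -> observed values

    def fit(self, rows: List[Sequence[Optional[Hashable]]], targets: List[Hashable]):
        for row, target in zip(rows, targets):
            self.class_counts[target] += 1
            for field, value in enumerate(row):
                if value is None:
                    continue  # missing values are simply not counted
                self.counts[(field, value, target)] += 1
                self.field_counts[(field, target)] += 1
                self.values[field].add(value)
        return self

    def predict(self, row: Sequence[Optional[Hashable]]) -> Hashable:
        total = sum(self.class_counts.values())
        best, best_score = None, -math.inf
        for target, n_target in self.class_counts.items():
            score = math.log(n_target / total)  # log prior
            for field, value in enumerate(row):
                if value is None:
                    continue  # missing input: its likelihood term is left out
                k = max(1, len(self.values[field]))
                num = self.counts[(field, value, target)] + self.alpha
                den = self.field_counts[(field, target)] + self.alpha * k
                score += math.log(num / den)
            if score > best_score:
                best, best_score = target, score
        return best


edges = [1.0]  # hypothetical bin edge for a continuous field
data = [(0.5, "a"), (0.2, "a"), (2.0, "b"), (3.0, "b")]
rows = [[to_bin(v, edges), s] for v, s in data]
model = CountingNaiveBayes().fit(rows, ["normal", "normal", "arrhythmia", "arrhythmia"])
print(model.predict([None, "a"]))
```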

KNN

KNN (K Nearest Neighbors) classification is a simple algorithm that stores all available cases and classifies new cases based on a similarity measure (e.g., a distance function). It was used as a non-parametric technique in statistical estimation and pattern recognition from the early 1970s [8].

KNN can be used for both classification and regression predictive problems, but in industry it is mostly used for classification [9]. The following aspects are checked when evaluating any technique [10]:

1. Ease of interpreting the output.

2. Calculation time.

3. Predictive power.
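The description above (store all cases, classify by similarity) fits in a few lines. This minimal sketch assumes plain Euclidean distance on numeric features and a majority vote. It picks k by leave-one-out accuracy over odd values from 1 to 13, mirroring the K range reported in [2]. The selection procedure and all names are illustrative assumptions.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Point = Sequence[float]


def euclidean(a: Point, b: Point) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_predict(train: List[Tuple[Point, str]], query: Point, k: int) -> str:
    """Majority vote among the k stored cases closest to the query."""
    neighbours = sorted(train, key=lambda case: euclidean(case[0], query))[:k]
    votes = Counter(label for _, label in neighbours)
    # Ties go to the label encountered first, i.e. the one with the nearest neighbour.
    return votes.most_common(1)[0][0]


def choose_k(train: List[Tuple[Point, str]], candidates=range(1, 14, 2)) -> int:
    """Pick k by leave-one-out accuracy on the training cases (hypothetical helper)."""
    best_k, best_acc = None, -1.0
    for k in candidates:
        correct = 0
        for i, (x, y) in enumerate(train):
            rest = train[:i] + train[i + 1:]
            correct += knn_predict(rest, x, k) == y
        acc = correct / len(train)
        if acc > best_acc:
            best_k, best_acc = k, acc
    return best_k


train = [((0.0, 0.0), "normal"), ((0.1, 0.2), "normal"), ((0.2, 0.1), "normal"),
         ((5.0, 5.0), "arrhythmia"), ((5.1, 4.9), "arrhythmia"), ((4.8, 5.2), "arrhythmia")]
k = choose_k(train)
print(k, knn_predict(train, (0.05, 0.05), k))
```

Because KNN keeps every training case, each prediction scans the whole sample. This is the memory and response-time cost noted in the literature review.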

Gradient Boosting

Short-term traffic prediction is important for intelligent traffic systems, and it is influenced by neighbouring traffic conditions. Gradient boosting decision trees (GBDT), an ensemble learning method, have been applied to short-term traffic prediction using a traffic volume data set. In that study, GBDT's prediction accuracy was higher than that of SVM. The accuracy of multi-step-ahead models was lower than that of one-step-ahead models, and GBDT also produced smaller prediction errors than SVM [11].
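The core boosting loop can be sketched on a regression target such as traffic volume. This minimal sketch assumes squared-error loss, for which the negative gradient is simply the residual y minus the current prediction, and depth-one trees (stumps) as base learners. The learning rate, number of rounds and function names are illustrative. GBDT implementations typically grow deeper trees and add further regularisation.

```python
from typing import List, Sequence, Tuple

Stump = Tuple[int, float, float, float]  # (feature, threshold, left value, right value)


def fit_stump(X: List[Sequence[float]], residuals: List[float]) -> Stump:
    """Least-squares regression stump (a depth-one tree) fitted to the residuals."""
    best, best_sse = None, float("inf")
    for j in range(len(X[0])):
        for threshold in sorted({row[j] for row in X}):
            left = [r for row, r in zip(X, residuals) if row[j] <= threshold]
            right = [r for row, r in zip(X, residuals) if row[j] > threshold]
            if not left or not right:
                continue
            lv, rv = sum(left) / len(left), sum(right) / len(right)
            sse = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
            if sse < best_sse:
                best, best_sse = (j, threshold, lv, rv), sse
    if best is None:  # no valid split (all rows identical): predict the mean residual
        mean = sum(residuals) / len(residuals)
        best = (0, float("inf"), mean, mean)
    return best


def stump_predict(stump: Stump, row: Sequence[float]) -> float:
    j, threshold, lv, rv = stump
    return lv if row[j] <= threshold else rv


def gradient_boost(X, y, n_rounds=100, learning_rate=0.1):
    """Squared-loss gradient boosting: each stump fits the residuals (negative gradient)."""
    base = sum(y) / len(y)  # initial constant model
    preds = [base] * len(y)
    stumps = []
    for _ in range(n_rounds):
        residuals = [t - p for t, p in zip(y, preds)]
        stump = fit_stump(X, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * stump_predict(stump, row) for p, row in zip(preds, X)]
    return base, learning_rate, stumps


def boost_predict(model, row) -> float:
    base, learning_rate, stumps = model
    return base + learning_rate * sum(stump_predict(s, row) for s in stumps)


X = [[float(i)] for i in range(10)]
y = [0.0] * 5 + [10.0] * 5  # toy step-shaped target
model = gradient_boost(X, y)
print(boost_predict(model, [2.0]), boost_predict(model, [8.0]))
```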

SVM

In this work the SVM algorithm is used for classification. SVM is a machine learning method that has established itself as a powerful tool for many classification problems. In essence, SVM finds the optimal separating hyperplane between two classes of training samples in feature space. It does so by concentrating on the training cases that lie at the edge of the class distributions. In this way an optimal hyperplane is fitted while only a few training samples are actually used, so high classification accuracy can be achieved with small training sets. Although SVM separates data into only two classes, classification into more classes is possible with either the one-against-all (OAA) or the one-against-one (OAO) technique [12].

The one-against-all method uses a set of binary classifiers, each of which separates one class from all the others. Each data object is assigned to the class with the largest decision value.

The one-against-one method constructs a binary SVM for every pair of classes, each trained only on the data from those two classes. It then uses a voting strategy to predict the class of each data object.
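To make the one-against-one scheme concrete, here is a minimal sketch. Every pair of classes gets its own binary SVM, and each SVM casts one vote. As an assumption, the binary SVMs are linear and trained with a Pegasos-style stochastic sub-gradient method. This is a swapped-in training procedure; the study itself compared polynomial and Gaussian kernels. All function names are hypothetical.

```python
import random
from collections import Counter
from itertools import combinations


def train_linear_svm(X, y, lam=0.01, epochs=500, seed=0):
    """Pegasos-style training of a linear SVM; y must be +1/-1.

    A constant 1.0 feature is appended so the bias is learned (and, as a
    simplification, regularised) together with the weights.
    """
    rng = random.Random(seed)
    data = [(list(x) + [1.0], label) for x, label in zip(X, y)]
    w = [0.0] * len(data[0][0])
    t = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, label in data:
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size
            margin = label * sum(wi * xi for wi, xi in zip(w, x))
            w = [(1.0 - eta * lam) * wi for wi in w]  # shrink: gradient of the L2 term
            if margin < 1.0:  # hinge loss active: step towards the sample
                w = [wi + eta * label * xi for wi, xi in zip(w, x)]
    return w


def decision_value(w, x):
    return sum(wi * xi for wi, xi in zip(w, list(x) + [1.0]))


def train_one_vs_one(X, labels, **svm_kwargs):
    """One binary SVM per pair of classes, trained only on those two classes."""
    models = {}
    for a, b in combinations(sorted(set(labels)), 2):
        pair = [(x, 1 if lab == a else -1) for x, lab in zip(X, labels) if lab in (a, b)]
        models[(a, b)] = train_linear_svm([p[0] for p in pair], [p[1] for p in pair], **svm_kwargs)
    return models


def predict_one_vs_one(models, x):
    """Each pairwise SVM votes; the class with most votes wins."""
    votes = Counter()
    for (a, b), w in models.items():
        votes[a if decision_value(w, x) >= 0 else b] += 1
    return votes.most_common(1)[0][0]


X = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (6.0, 0.0), (6.0, 1.0), (7.0, 0.0),
     (0.0, 6.0), (1.0, 6.0), (0.0, 7.0)]
labels = ["A"] * 3 + ["B"] * 3 + ["C"] * 3
models = train_one_vs_one(X, labels)
print(predict_one_vs_one(models, (6.5, 0.5)))
```

With k classes this trains k(k-1)/2 binary models, whereas one-against-all trains k.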

Random Forest

Random forest is an easy-to-use, flexible data mining algorithm that often produces good results. Thanks to its simplicity, it is one of the most widely used algorithms, and it can be used for both regression and classification tasks. It can handle missing values and model categorical values, and it adds extra randomness to the model while growing the trees. It has the following advantages over other classification techniques:

1. It helps avoid overfitting in classification problems.

2. The same random forest algorithm can be used for both regression and classification tasks.

3. It can be used to identify the most important features in the training data set [13].

4. It increases predictive power.

5. It increases model speed.

6. In the medical domain, it can be used both to identify the right combination of components in a medicine and to identify diseases by analysing a patient's medical records.
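The "additional randomness" mentioned above comes from two sources: each tree is grown on a bootstrap sample, and each split considers only a random subset of features. The trees then vote. This minimal sketch assumes CART-style trees with Gini impurity, sqrt(number of features) per split, a depth limit of 4 and 25 trees. It omits missing-value handling and feature importance, and all names are hypothetical. Leaf labels must not be tuples, because tuples mark internal nodes here.

```python
import math
import random
from collections import Counter


def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(X, y, features):
    """Best (feature, threshold) among the given features; None if nothing reduces impurity."""
    best, best_score = None, gini(y)
    for j in features:
        for threshold in sorted({row[j] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[j] <= threshold]
            right = [lab for row, lab in zip(X, y) if row[j] > threshold]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if score < best_score:
                best, best_score = (j, threshold), score
    return best


def build_tree(X, y, depth, max_depth, n_sub, rng):
    split = None
    if depth < max_depth and len(set(y)) > 1:
        features = rng.sample(range(len(X[0])), n_sub)  # random feature subset per node
        split = best_split(X, y, features)
    if split is None:
        return Counter(y).most_common(1)[0][0]  # leaf: majority label
    j, threshold = split
    left = [i for i, row in enumerate(X) if row[j] <= threshold]
    right = [i for i, row in enumerate(X) if row[j] > threshold]
    grow = lambda idx: build_tree([X[i] for i in idx], [y[i] for i in idx],
                                  depth + 1, max_depth, n_sub, rng)
    return (j, threshold, grow(left), grow(right))


def tree_predict(node, row):
    while isinstance(node, tuple):  # internal node: (feature, threshold, left, right)
        j, threshold, left, right = node
        node = left if row[j] <= threshold else right
    return node


def random_forest(X, y, n_trees=25, max_depth=4, seed=0):
    rng = random.Random(seed)
    n_sub = max(1, int(math.sqrt(len(X[0]))))
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]  # bootstrap sample
        forest.append(build_tree([X[i] for i in idx], [y[i] for i in idx],
                                 0, max_depth, n_sub, rng))
    return forest


def forest_predict(forest, row):
    return Counter(tree_predict(t, row) for t in forest).most_common(1)[0][0]


X = [(0.0, 0.0), (1.0, 1.0), (0.0, 1.0), (1.0, 0.0),
     (5.0, 5.0), (6.0, 6.0), (5.0, 6.0), (6.0, 5.0)]
y = ["normal"] * 4 + ["arrhythmia"] * 4
forest = random_forest(X, y)
print(forest_predict(forest, (0.0, 0.0)))
```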

Model tree

Model trees are a type of decision tree with linear regression functions at the leaves, and they are considered a successful method for predicting continuous numeric values [5]. They can also be used for classification problems, through a standard method that transforms a classification problem into a function approximation problem.
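The defining feature of a model tree, a linear regression at each leaf, shows up in the smallest possible case. This sketch is a depth-one model tree on a single numeric input. It tries each split threshold, fits a least-squares line on each side, and keeps the split with the lowest total squared error. Full model-tree learners grow deeper trees, use their own split criteria and prune; `min_leaf` and the function names are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares line y = a + b*x (b = 0 if x is constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    b = 0.0 if sxx == 0 else sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return my - b * mx, b


def sse(xs, ys, line):
    a, b = line
    return sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))


def fit_model_stump(xs, ys, min_leaf=2):
    """Depth-one model tree: the split whose two leaf regressions fit best."""
    best = None
    for t in sorted(set(xs))[:-1]:  # the largest value would leave the right side empty
        left = [(x, y) for x, y in zip(xs, ys) if x <= t]
        right = [(x, y) for x, y in zip(xs, ys) if x > t]
        if len(left) < min_leaf or len(right) < min_leaf:
            continue
        l_line, r_line = fit_line(*zip(*left)), fit_line(*zip(*right))
        err = sse(*zip(*left), l_line) + sse(*zip(*right), r_line)
        if best is None or err < best[0]:
            best = (err, t, l_line, r_line)
    if best is None:
        raise ValueError("not enough data for a split")
    _, t, l_line, r_line = best
    return t, l_line, r_line


def model_stump_predict(model, x):
    t, (la, lb), (ra, rb) = model
    return la + lb * x if x <= t else ra + rb * x  # linear model in the chosen leaf


xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
ys = [0.0, 1.0, 2.0, 3.0, 12.0, 10.0, 8.0, 6.0]  # toy piecewise-linear data
model = fit_model_stump(xs, ys)
print(model[0], model_stump_predict(model, 6.5))
```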

4. Evaluation and Results

In this section, we present the results of our experiments with the random forest and support vector machine algorithms [14], including experimental results for resampling the arrhythmia dataset. We also use benchmark datasets such as thyroid, cardiotocography and audiology to test the performance of our classification algorithm, and brief results for these three datasets are also given. The evaluation is summarized as a comparison of the accuracy and learning time of random forest, KDD and SVM on the dataset.

SVM is effective in high-dimensional spaces such as the arrhythmia data set. First, mRMR (minimum redundancy maximum relevance) feature selection was performed. The data set was then split 70/30 into training and test sets. Because the data set is skewed and has a small number of rows, we used bootstrapping to improve the performance of the algorithm. The training data was doubled in size by random sampling, while ensuring that every data point in the original training data was represented at least once. To determine the most appropriate kernel, the SVM model was built with polynomial kernels of varying degrees and with a Gaussian kernel. The quadratic kernel gave a good model fit, minimizing the generalization error, as can be seen in Figure 1 [15].
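The text does not give the exact resampling procedure, so the sketch below assumes one reading of it. "Doubling" is taken to mean keeping every original training row once and appending an equal number of rows drawn with replacement, which guarantees each original point appears at least once. The split is a plain shuffled 70/30 split. It is not stratified, which matters for a skewed data set like this one. Function names are hypothetical.

```python
import random


def train_test_split(rows, test_fraction=0.3, seed=0):
    """Shuffle, then hold out test_fraction of the rows (not stratified)."""
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def double_with_bootstrap(train, seed=0):
    """Keep every original row once, then add an equal number drawn with replacement."""
    rng = random.Random(seed)
    return train + rng.choices(train, k=len(train))


rows = list(range(100))  # stand-ins for the data set's row indices
train, test = train_test_split(rows)
augmented = double_with_bootstrap(train)
print(len(train), len(test), len(augmented))
```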

This led us to the assuming that there were

significant second order interactions among

the feature variables in the design matrix.

Figure 2 plots the generalization accuracy on

the test set with the quantity of best

highlights chose. It can be seen that the best

accuracy is gotten with around 254

highlights. Also, due to the greatly based

dissemination of classes, the model

demonstrated inefficient in anticipating

classes with low density. In particular the

two classes meant just a single tuple in the

test set. The sheer absence of data, implied

that there was no real way to fabricate

important dispersions of the highlights

expected to arrange classes one and two. To

address the issue of misclassifying class 0

(sinus Tachycardia) as class 1 (ordinary), we

utilized an anomaly indicator. We regarded

the SVM as a one class classifier and

isolated every one of the data focuses from

the birthplace (in highlight space F) so as to

augment the separation from this hyperplane

to the starting point [16]. This outcomes in a

binary function which catches areas in the

data space where the likelihood density of

the data lives. In this manner the capacity

returns +1 in the district (catching the

preparation data focuses) and 1 somewhere

else. On discovering abnormalities in the

data set, we utilized our instinctive thinking

from the SVM confusion matrix viz. that

class 5 were for the most part misclassified

as 1. Subsequently we found the

inconsistencies which lied a long way from

the data collection, nearest to the root and

identified the focuses anticipated by our

SVM model as 5. We renamed these

conditions of conceivable sinus tachycardia

as typical state. This enhanced the accuracy

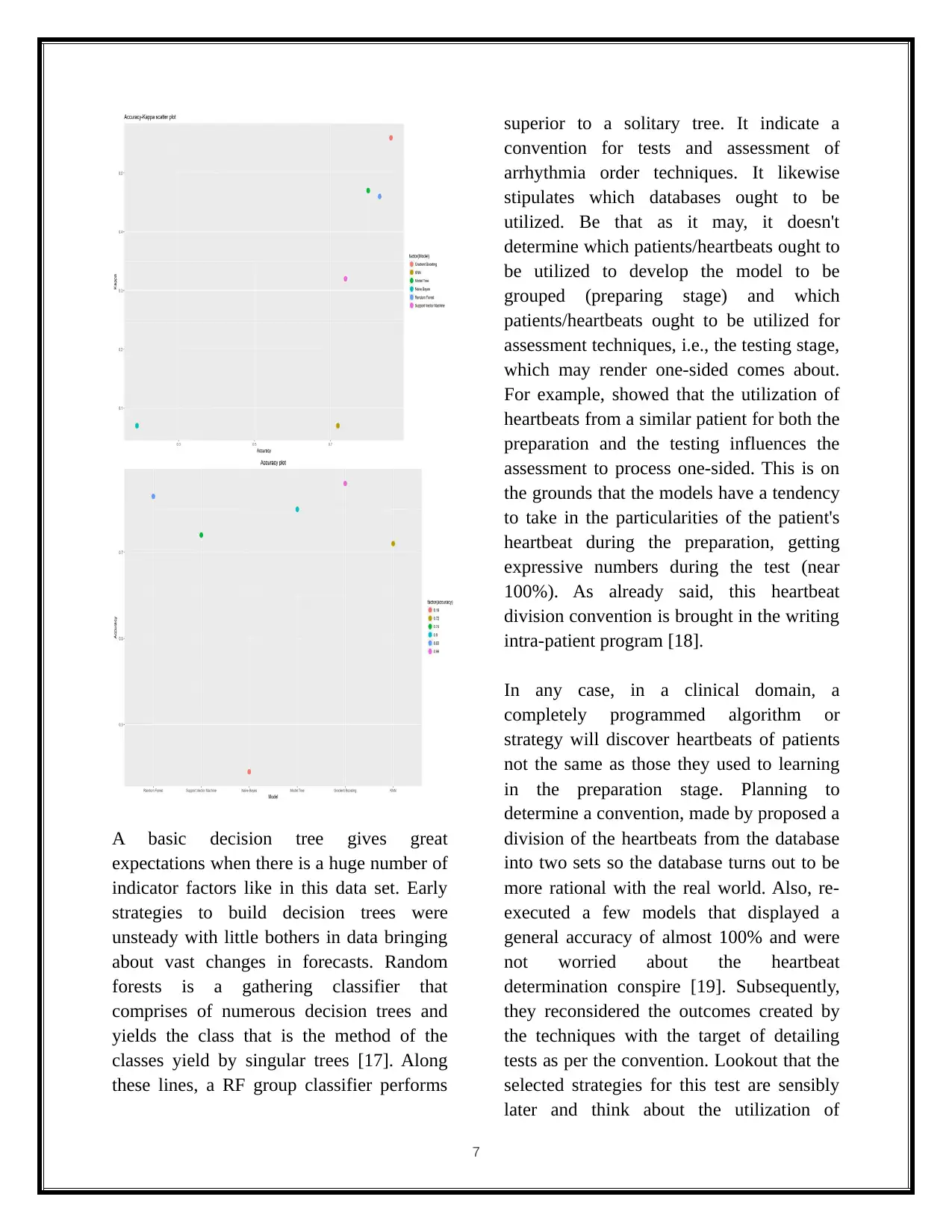

to 70%. The below images are displays the

accuracy based on algorithm.
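The one-class decision rule described above can be written as f(x) = sign(Σ_i α_i K(s_i, x) − ρ). The sketch below only evaluates that rule: the support vectors s_i, coefficients α_i, offset ρ and RBF width are made-up values standing in for a trained model (in practice they would come from fitting a one-class SVM, for example scikit-learn's `OneClassSVM`). It does not reproduce the study's relabelling step.

```python
import math


def rbf(s, x, gamma=0.5):
    """Gaussian kernel K(s, x) = exp(-gamma * ||s - x||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(s, x)))


def one_class_decision(x, support_vectors, alphas, rho, kernel=rbf):
    """Return +1 if x lies in the learned high-density region, else -1.

    Evaluates f(x) = sign(sum_i alpha_i * K(s_i, x) - rho), the decision
    function of a one-class SVM; points exactly on the boundary count as +1.
    """
    score = sum(a * kernel(s, x) for a, s in zip(alphas, support_vectors)) - rho
    return 1 if score >= 0 else -1


# Hypothetical trained parameters (made up for illustration only).
support_vectors = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
alphas = [1 / 3, 1 / 3, 1 / 3]
rho = 0.3

print(one_class_decision([0.2, 0.2], support_vectors, alphas, rho))  # 1
print(one_class_decision([5.0, 5.0], support_vectors, alphas, rho))  # -1
```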


A basic decision tree gives good predictions when there is a large number of predictor variables, as in this data set. Early methods for building decision trees were unstable, with small perturbations in the data causing large changes in the predictions. A random forest is an ensemble classifier that consists of many decision trees and outputs the class that is the mode of the classes output by the individual trees [17]. Consequently, an RF ensemble classifier performs better than a single tree.

It specifies a protocol for testing and evaluating arrhythmia classification methods, and it also stipulates which databases should be used. However, it does not specify which patients/heartbeats should be used to build the classification model (the training stage) and which patients/heartbeats should be used to evaluate the methods (the testing stage), which may yield biased results.
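The random forest output rule cited above, taking the mode of the individual trees' predictions, is short to express. In this sketch the "trees" are hypothetical hand-written decision stumps used only to exercise the voting; a real forest would grow each tree on a bootstrap sample using random feature subsets.

```python
from collections import Counter


def forest_vote(tree_predictions):
    """Return the mode of the per-tree class predictions.

    Counter.most_common orders equal counts by first occurrence, so ties go
    to the label seen first.
    """
    return Counter(tree_predictions).most_common(1)[0][0]


def predict_forest(trees, x):
    """Ask every tree for a class label and return the majority class."""
    return forest_vote([tree(x) for tree in trees])


# Hypothetical "trees": decision stumps, for illustration only.
trees = [
    lambda x: 1 if x[0] < 0.5 else 5,
    lambda x: 1 if x[1] < 0.3 else 5,
    lambda x: 1 if x[0] + x[1] < 1.0 else 10,
]
print(predict_forest(trees, [0.7, 0.1]))  # 1  (votes: 5, 1, 1)
print(predict_forest(trees, [0.7, 0.6]))  # 5  (votes: 5, 5, 10)
```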

For example, it has been shown that using heartbeats from the same patient for both training and testing biases the evaluation. This is because the models tend to learn the particularities of the patient's heartbeat during training, producing impressive figures during testing (close to 100%). As mentioned earlier, this heartbeat division protocol is known in the literature as the intra-patient scheme [18].

However, in a clinical setting, a fully automatic algorithm or method will encounter heartbeats from patients other than those it learned from in the training stage. To establish a protocol, a division of the heartbeats in the database into two sets was proposed, so that the evaluation is more consistent with the real world. In addition, several models that had reported an overall accuracy of almost 100% without regard to the heartbeat selection scheme were re-implemented [19]. The results produced by these methods were then re-evaluated with the aim of reporting tests according to the protocol. Note that the methods selected for this test are reasonably recent and consider the use of
different classifiers and different types of feature extraction. Analysing the values, it can be seen that the results obtained by the same classification method using a random selection scheme are significantly better than the values obtained in tests that follow the protocol. To perform a fair evaluation of ECG-based heartbeat classification methods, heartbeats of the same patient should not be present in both the training and testing sets, since that is not a realistic scenario. Otherwise, the classifiers will learn particularities of the patients in the training set, and consequently the evaluation of a method on a testing set that uses heartbeats of a patient whose heartbeats are also present in the training set is biased, even if the individual heartbeats of that patient are different [20].
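The inter-patient requirement can be enforced by splitting on patients rather than on individual heartbeats. This is a minimal sketch assuming each heartbeat record carries a patient identifier (the `beat_patients` list is hypothetical); the 30% test share mirrors the split used earlier and is an assumption here.

```python
import random


def inter_patient_split(patient_ids, test_fraction=0.3, seed=0):
    """Return (train_idx, test_idx) with no patient in both sets.

    Patients, not individual heartbeats, are shuffled and assigned to the
    test set; every heartbeat then follows its patient.
    """
    patients = sorted(set(patient_ids))
    rng = random.Random(seed)
    rng.shuffle(patients)
    n_test = max(1, round(len(patients) * test_fraction))
    test_patients = set(patients[:n_test])
    train_idx = [i for i, p in enumerate(patient_ids) if p not in test_patients]
    test_idx = [i for i, p in enumerate(patient_ids) if p in test_patients]
    return train_idx, test_idx


# Hypothetical patient label for each of ten heartbeats.
beat_patients = ["p1", "p1", "p2", "p3", "p3", "p3", "p4", "p5", "p5", "p6"]
train_idx, test_idx = inter_patient_split(beat_patients)
print(sorted({beat_patients[i] for i in test_idx}))  # the two held-out patients
```

Because whole patients are held out, the test-set size in heartbeats varies with which patients are drawn; the fraction applies to patients, not beats.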

The overall generalization accuracy was 72.3%. Hence we used a serial classifier consisting of RF and a linear-kernel SVM, which gave a generalization error of 22.6%, i.e. an accuracy of 77.4%. This is a marginal improvement in generalization error. In this study, the ability of the random forest classifier to predict minority classes was increased by training the algorithm on simple random sampled data. Moreover, this sampling scheme did not reduce the classifier's performance in predicting the majority classes. Therefore, a resampling procedure may be considered for datasets with uneven class distributions, to improve the predictive ability of classifiers for classes represented by a small number of instances.

One of the main advantages of the proposed algorithm compared with the ECG-based approaches in the literature is that it is based entirely on the HRV (heart rate variability) signal, which can be extracted from the underlying ECG signal with high accuracy even from noisy and/or complicated recordings [21]. By contrast, most ECG-based methods use morphological features of the ECG, which are strongly affected by noise. Finally, owing to the short processing time and relatively high accuracy of the proposed method, it can be used as a real-time arrhythmia classification system.
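One reading of "training the algorithm with simple random sampled data" is random oversampling: each minority class is topped up by drawing its own rows with replacement until it matches the largest class. The sketch below assumes that reading and that target size; the study does not state the exact scheme, and `random_oversample` is a hypothetical helper. Majority-class rows are left untouched.

```python
import random
from collections import Counter


def random_oversample(rows, labels, seed=0):
    """Balance classes by simple random sampling with replacement.

    Each class is topped up to the size of the largest class by drawing
    extra copies of its own rows uniformly at random; the original rows
    are kept, in order, at the front of the output.
    """
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_rows, out_labels = list(rows), list(labels)
    for cls, n in counts.items():
        members = [r for r, lab in zip(rows, labels) if lab == cls]
        for _ in range(target - n):
            out_rows.append(rng.choice(members))
            out_labels.append(cls)
    return out_rows, out_labels


rows = [[i] for i in range(8)]
labels = [1, 1, 1, 1, 1, 5, 5, 10]
new_rows, new_labels = random_oversample(rows, labels)
print(sorted(Counter(new_labels).items()))  # [(1, 5), (5, 5), (10, 5)]
```

As with bootstrapping, oversampling should be applied to the training portion only, after the split; otherwise copies of the same row can end up on both sides.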

5. Conclusions and Future Work

The aims of this project have been met: the methods learnt in the course module were leveraged to carry out a data mining study on the provided data set. The objective has also been met: the presence and absence of cardiac arrhythmia are distinguished, and each case is classified into one of the 16 groups. The literature review provides background for understanding the different methods and their uses and effects in various studies. The methodology used is the CRISP-DM procedure. The observed heartbeat classification accuracy across the classes is 77.3%. The methods discussed include KNN, Naïve Bayes, SVM, Gradient Boosting, Model Tree and Random Forest.

Future work will focus on improving the accuracy of heartbeat classification by using an online detection method instead of the other available methods, along with improvements in time and budget. Moreover, we believe that performance can be improved further, so future work will also focus on performance.


Therefore, the purpose of reducing the differences between the cardiologists' classification and the program's classification has been achieved.

References

[1] S. Kantilal Sawan, "Failure prediction of California Solar Initiative projects", 2018.

[2] H. Khamis, K. Cheruiyo and S. Kimani, "Application of k-Nearest Neighbour Classification in Medical Data Mining", International Journal of Information and Communication Technology Research, vol. 4, no. 4, 2014.

[3] P. Janardhanan, H. L. and F. Sabika, "Effectiveness of Support Vector Machines in Medical Data Mining", Journal of Communications Software and Systems, vol. 11, no. 1, p. 25, 2015.

[4] R. Caruana and A. Niculescu-Mizil, "An Empirical Comparison of Supervised Learning Algorithms", 23rd International Conference on Machine Learning, Pittsburgh, 2006.

[5] E. Frank, Y. Wang, S. Inglis, G. Holmes and I. Witten, 2018.

[6] "Developing Predictive Analytics Solutions Using Agile/DevOps Techniques", DMI, 2018. [Online]. Available: https://dminc.com/blog/developing-predictive-analytics-solutions-using-agiledevops-techniques/. [Accessed: 13-Aug-2018].

[7] "IBM Knowledge Center", Ibm.com, 2018. [Online]. Available: https://www.ibm.com/support/knowledgecenter/en/SSEPGG_9.7.0/com.ibm.im.overview.doc/c_naive_bayes_classification.html. [Accessed: 13-Aug-2018].

[8] "Exercise", Saedsayad.com, 2018. [Online]. Available: http://www.saedsayad.com/knn_exercise.htm. [Accessed: 13-Aug-2018].

[9] "Exercise", Saedsayad.com, 2018. [Online]. Available: http://www.saedsayad.com/knn_exercise.htm. [Accessed: 13-Aug-2018].

[10] T. Srivastava, "Introduction to KNN, K-Nearest Neighbors: Simplified", Analytics Vidhya, 2018. [Online]. Available: https://www.analyticsvidhya.com/blog/2018/03/introduction-k-neighbours-algorithm-clustering/. [Accessed: 13-Aug-2018].

[11] S. Yang, J. Wu, Y. Du, Y. He and X. Chen, "Ensemble Learning for Short-Term Traffic Prediction Based on Gradient Boosting Machine", Journal of Sensors, vol. 2017, pp. 1-15, 2017.

[12] "The Random Forest Algorithm – Towards Data Science", Towards Data Science, 2018. [Online]. Available: https://towardsdatascience.com/the-random-forest-algorithm-d457d499ffcd. [Accessed: 11-Aug-2018].

[13] "How Random Forest Algorithm Works in Machine Learning", Medium, 2018. [Online]. Available: https://medium.com/@Synced/how-random-forest-algorithm-works-in-machine-learning-3c0fe15b6674. [Accessed: 11-Aug-2018].

[14] "How Random Forest Algorithm Works in Machine Learning", Medium, 2018. [Online]. Available: https://medium.com/@Synced/how-random-forest-algorithm-works-in-machine-learning-3c0fe15b6674. [Accessed: 11-Aug-2018].

[15] J. Brownlee, "Support Vector Machines for Machine Learning", Machine Learning Mastery, 2018. [Online]. Available: https://machinelearningmastery.com/support-vector-machines-for-machine-learning/. [Accessed: 11-Aug-2018].

[16] "Chapter 2 : SVM (Support Vector Machine) — Theory – Machine Learning 101 – Medium", Medium, 2018. [Online]. Available: https://medium.com/machine-learning-101/chapter-2-svm-support-vector-machine-theory-f0812effc72. [Accessed: 11-Aug-2018].

[17] "Understanding Support Vector Machine algorithm from examples (along with code)", Analytics Vidhya, 2018. [Online]. Available: https://www.analyticsvidhya.com/blog/2017/09/understaing-support-vector-machine-example-code/. [Accessed: 11-Aug-2018].

[18] T. Soman and P. O. Bobbie, "Classification of Arrhythmia Using Machine Learning Techniques", 2018.

[19] F. Melgani and Y. Bazi, "Classification of Electrocardiogram Signals With Support Vector Machines and Particle Swarm Optimization", IEEE Transactions on Information Technology in Biomedicine, vol. 12, no. 5, pp. 667-677, 2008.

[20] M. Daliri, "Feature selection using binary particle swarm optimization and support vector machines for medical diagnosis", Biomedizinische Technik/Biomedical Engineering, vol. 57, no. 5, 2012.

[21] V. Gupta, S. Srinivasan and S. S Kudli, "Prediction and Classification of Cardiac Arrhythmia", 2018.
