Cloud Computing: Confusion Matrix Report for AI Classification Models

Added on 2023/04/08

AI Summary

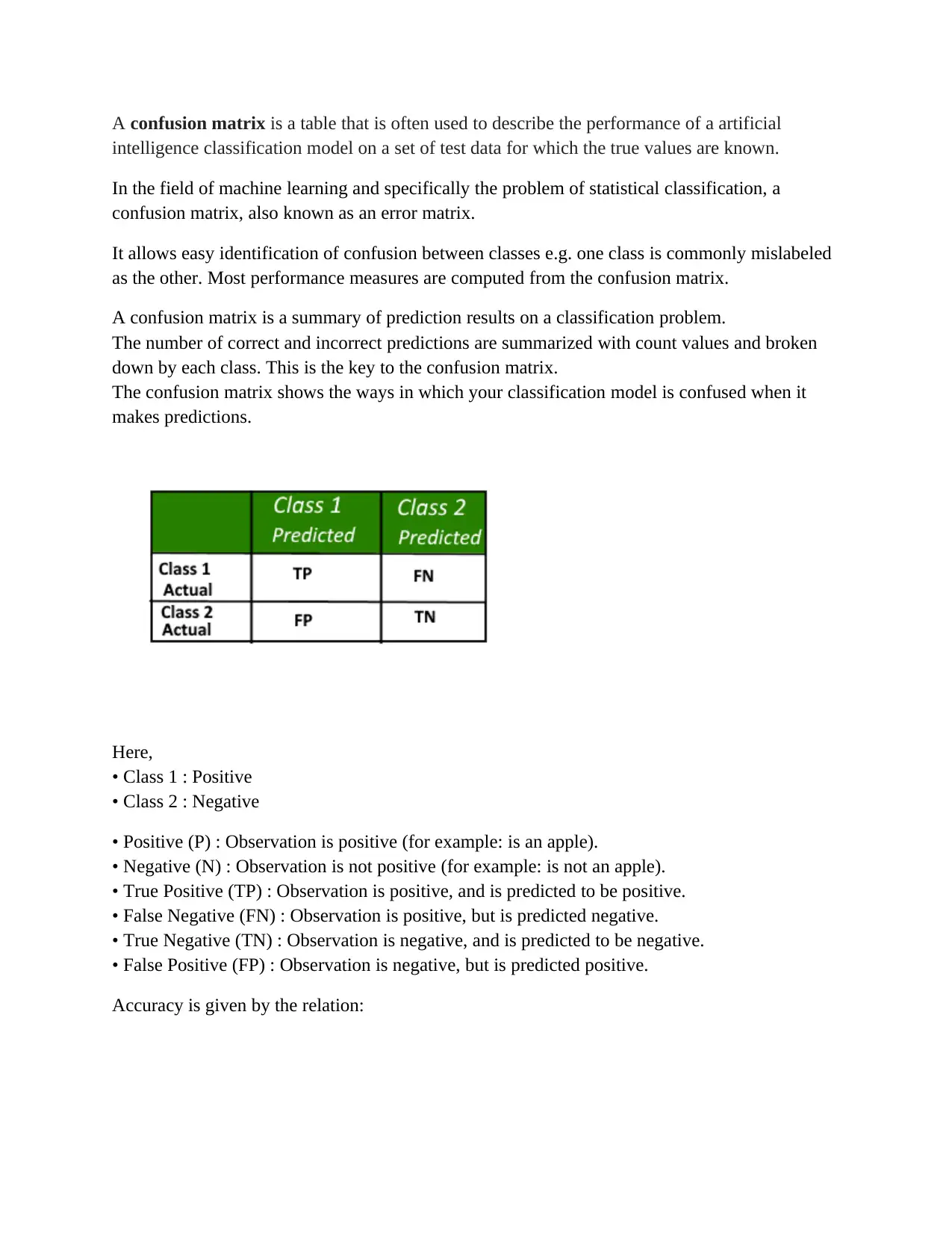

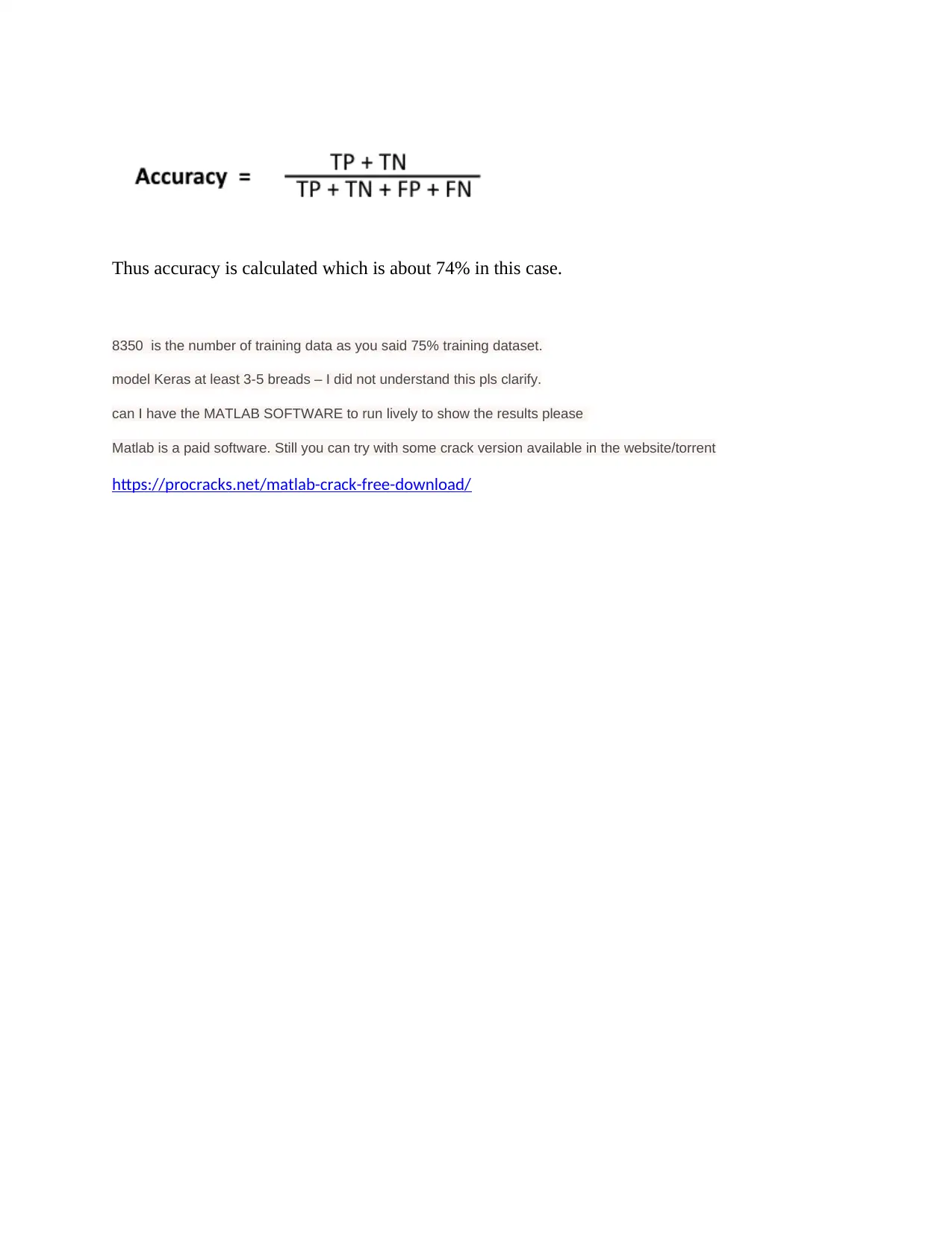

This report examines the confusion matrix, a standard tool for evaluating the performance of AI classification models. It explains the four components of the matrix (true positives, false positives, false negatives, and true negatives) and how these counts are used to calculate accuracy. The report cites an accuracy of 74%, based on a 75% training split and a dataset of 8,350 data points. It also mentions the use of Keras and MATLAB, noting that MATLAB is commercial (paid) software. Finally, it discusses how the confusion matrix applies in the context of cloud computing and machine learning, and why it matters for assessing and improving classification models.
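For a binary classifier, accuracy is the share of correct predictions among all predictions: accuracy = (TP + TN) / (TP + TN + FP + FN). The sketch below shows how the four confusion-matrix cells are counted and combined. It is not the report's own code (the summary mentions Keras and MATLAB); it uses plain Python so it runs without either tool. It assumes labels 1 (positive) and 0 (negative), and the function names `confusion_counts` and `accuracy` and the sample labels are hypothetical, chosen for illustration only.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count (TP, FP, FN, TN) for a binary classifier.

    Assumption: any label other than `positive` is treated as negative.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = fp = fn = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1  # predicted positive, actually positive
            else:
                fp += 1  # predicted positive, actually negative
        else:
            if actual == positive:
                fn += 1  # predicted negative, actually positive
            else:
                tn += 1  # predicted negative, actually negative
    return tp, fp, fn, tn


def accuracy(tp, fp, fn, tn):
    """Fraction of correct predictions: (TP + TN) / total."""
    total = tp + fp + fn + tn
    if total == 0:
        raise ValueError("cannot compute accuracy with no samples")
    return (tp + tn) / total


# Hypothetical labels for illustration only (1 = positive, 0 = negative).
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

tp, fp, fn, tn = confusion_counts(y_true, y_pred)

# Print the 2x2 matrix: rows are actual classes, columns are predicted classes.
print("           pred +  pred -")
print(f"actual +   {tp:>6}  {fn:>6}")
print(f"actual -   {fp:>6}  {tn:>6}")
print(f"accuracy = {accuracy(tp, fp, fn, tn):.2f}")  # accuracy = 0.75
```

With these sample labels there are 3 true positives, 1 false positive, 1 false negative, and 3 true negatives, so accuracy is 6/8 = 0.75. If scikit-learn is available, `sklearn.metrics.confusion_matrix` returns the same counts as a 2×2 array ordered `[[TN, FP], [FN, TP]]` for labels `[0, 1]`.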