Southeast University: Intelligent Agent for Safe Driving with CNN


This project details the design and implementation of an intelligent agent aimed at enhancing driving safety by monitoring driver behavior and detecting potential distractions, particularly those related to mobile phone use. The project leverages Convolutional Neural Networks (CNN) to analyze driver postures and predict actions, with the goal of preventing accidents. The agent's design includes a prototype, pseudocode algorithm, and code implementation, demonstrating how CNN can be used to differentiate various driving postures, such as normal driving, responding to calls, smoking, and eating. The system incorporates various components, including percepts, actions, goals, and an environment, to effectively monitor and respond to driver actions. The project also discusses the legal implications of mobile phone use while driving and explores search-based techniques, like decision trees, to make informed decisions. The CNN model is trained and fine-tuned using a distributed filtering method, achieving high accuracy in identifying driver postures even under various road and lighting conditions. The project concludes by emphasizing the importance of such systems in reducing accidents and promoting safer driving habits.


INTELLIGENT AGENT

ARTIFICIAL INTELLIGENCE

5/31/2019


Abstract

Driver inattention has long been recognised as one of the primary contributing factors in traffic accidents. This study presents a novel system that applies a convolutional neural network (CNN) to automatically learn and predict predefined driving postures. The essential idea is to help drivers drive safely. In contrast to older techniques, a CNN can automatically learn discriminative features directly from raw images. In the authors' research, a CNN model is first pre-trained by an unsupervised feature learning method known as distributed filtering and later fine-tuned for classification. The approach is demonstrated on the Southeast University driving posture dataset, which comprises videos covering four driving postures: normal driving, responding to a mobile phone call, smoking and eating. Compared with other well-known approaches that use different image descriptors and classification methods, the authors' scheme achieves the best performance, with an overall accuracy of 99.78%. To gauge generalization performance and effectiveness in more realistic conditions, the approach is also evaluated on two other specially designed datasets that take into account poor illumination and different road conditions, reaching overall accuracies of 99.3% and 95.77%, respectively.


Contents

INTRODUCTION

Driving

Cell Phone Distraction to Driving

Statistical Evidences

Driving Laws

DESIGN

Prototype Design

Intelligent Agent

Steps

Pseudocode Algorithm

Code Implementation Using the CNN Algorithm

CNN Algorithm

Purpose and Functionality of the Code

Components (Environment, Perceptron’s Actions, Functions, Attributes)

Agent Type

Percepts

Actions

Goals

Environment

Search Based Technique Used

Decision Tree

CONCLUSION

REFERENCES

INTRODUCTION

Life is more precious than anything else in the universe. The risks that threaten it must therefore be minimized as far as possible, by adapting lifestyle and behaviour accordingly.

Driving

Driving is the controlled operation and movement of a motor vehicle, such as a bus, truck, car or motorcycle. Driving should be examined in relation to the safety of life, and the best driving methods and habits must be determined in order to minimize accidents.


Accidents can result from the following safety issues while driving.

1. Texting and talking on the phone while driving

2. Sleep-deprived driving

3. Speeding

4. Driving under the influence of drugs

5. Reckless driving

6. Distracted driving

7. Street racing

All seven of these factors have a negative influence on driveability, which is the smooth delivery of power.

Cell Phone Distraction to Driving

At any given moment, about 660,000 drivers are using hand-held phones while driving. As a result, the rate of driving distraction caused by cell phones is alarmingly high.

Statistical Evidences

According to National Safety Council reports, cell phone use while driving results in more than 1.5 million crashes every year.

Texting while driving causes up to 390,000 injuries from accidents every year.

1 in 4 car accidents in the United States is caused by texting while driving.

Texting while driving is 6 times more likely to cause an accident than driving while drunk.

Answering a text takes the driver’s attention away for 5 seconds, which at 55 mph is enough time to travel the length of a football field.

Of all cell phone related tasks, texting is by far the most dangerous.

74% of drivers support a ban on hand-held cell phone use.

94% of drivers support a ban on texting while driving.
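The 5-second figure in the list above can be checked with simple arithmetic. The sketch below converts 55 mph to feet per second and multiplies by the time the driver's eyes are off the road. The function name `distance_travelled_ft` is an illustrative assumption, and the comparison value of 360 ft is the length of an American football field including both end zones.

```python
FEET_PER_MILE = 5280
SECONDS_PER_HOUR = 3600


def distance_travelled_ft(speed_mph: float, seconds: float) -> float:
    """Distance in feet covered at a constant speed over a time interval."""
    feet_per_second = speed_mph * FEET_PER_MILE / SECONDS_PER_HOUR
    return feet_per_second * seconds


# Distance covered while the driver reads a text for 5 seconds at 55 mph.
blind_distance = distance_travelled_ft(55, 5)
print(f"{blind_distance:.0f} ft")  # prints "403 ft"
```

At roughly 403 ft, the distance exceeds the 360 ft length of the field, which is consistent with the claim.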

Driving Laws

To minimize accidents, driving laws have been defined and imposed in almost all countries. Every driver on the road is subject to the laws of the jurisdiction in which he or she is driving.

The Australian government has imposed a law on using a mobile phone while driving, which states the following.

“A driver of a vehicle can only touch a mobile phone to receive and terminate a phone call if

the phone is secured in a mounting affixed to the vehicle. If the phone is not secured in a

mounting, it can only be used to receive or terminate a phone call without touching it (e.g.

using voice activation, a Bluetooth hands-free car kit, ear piece or headset)”.

The law also indicates that…


It is illegal for the driver of a vehicle to create, send or look at a text message, video

message, email or similar communication, even when the phone is secured in a mounting or

can be operated without touching it.

GPS may be used by a driver whilst driving if no touch of the keypad or screen is required.

Unlike NSW, in WA the same rules apply to all drivers, including L and P plate drivers.

… according to safety commission, 2019

These laws are the same across all states and territories of Australia. The driver must not use a mobile phone whether the vehicle is moving or stationary, unless the vehicle is parked. A driver may sometimes be exempt from this law because of another law that applies in the same jurisdiction. The law does not apply when the cell phone is securely mounted, or when the driver uses a hands-free device. In some locations, learner and provisional drivers are banned from using a mobile phone at all while they are in control of the vehicle.

Hence, there is a need to design a new detection system that can monitor the driver's activities, especially mobile phone use. The driver's activities must be monitored closely and the appropriate actions taken in response. This intelligent system should alert the driver with warning messages when he or she attempts to operate the phone in restricted ways. The same agent should also issue a penalty after giving the driver a warning message.

This system can help the driver to drive without operating the phone, except to connect or disconnect a call while the phone is mounted on a stand near the driver's seat. Other operations, such as texting or looking at instant messages and video messages, are considered unlawful.

DESIGN

Prototype Design

A prototype is an early model, release or sample of a new product, built to test a concept or process, or to serve as something to learn from or replicate. A prototype supports the evaluation of a new design and helps users and system analysts to improve its precision (Blackwell & Manar, 2015).

Intelligent Agent

The intelligent agent is designed to allow only two operations: connecting and disconnecting calls. The agent receives inputs, processes them and produces an output. It can obtain its inputs in different ways and carry out each of its output operations in different ways.

A vehicle driver may touch the cell phone for only two operations: accepting and ending calls. The intelligent agent first verifies whether the handset is secured in a mounting attached to the vehicle. Mounting the handset matters because it removes the need to hold the phone, since the driver's hands are always busy with the steering wheel and gears. Answering and speaking on the phone


can be done using a headset, Bluetooth, or any other means that does not require holding the cell phone.

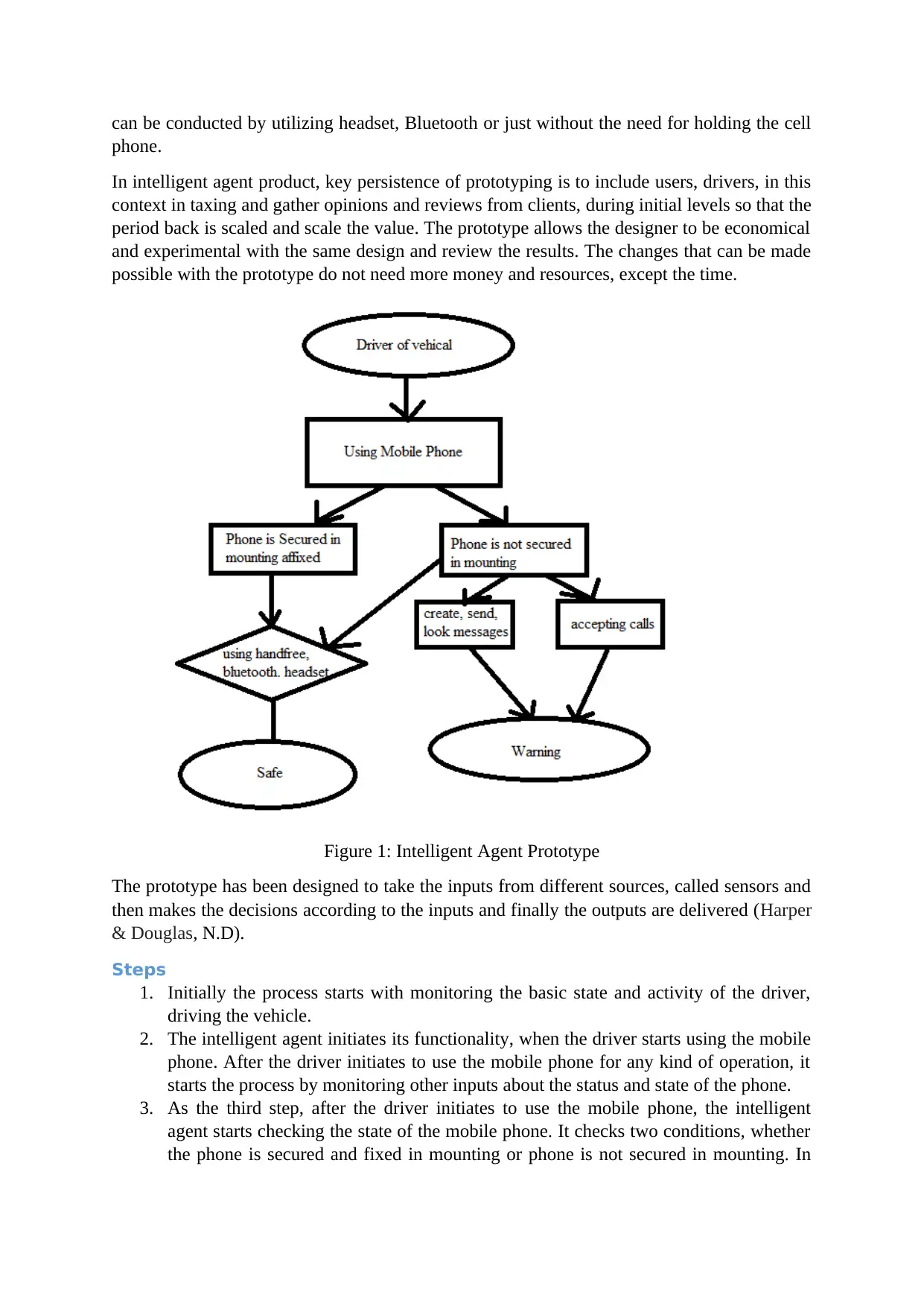

For the intelligent agent product, the key purpose of prototyping is to involve users (drivers, in this context) in testing and to gather their opinions and feedback at an early stage, so that rework time is reduced and value is increased. The prototype allows the designer to experiment economically with the same design and review the results. Changes made at the prototype stage cost little in money and resources, only time.

Figure 1: Intelligent Agent Prototype

The prototype is designed to take inputs from different sources, called sensors, make decisions according to those inputs, and finally deliver the outputs (Harper & Douglas, N.D).

Steps

1. The process starts by monitoring the basic state and activity of the driver while driving the vehicle.

2. The intelligent agent begins its work when the driver starts using the mobile phone. Once the driver starts using the phone for any kind of operation, the agent begins monitoring further inputs about the status and state of the phone.

3. As the third step, the intelligent agent checks the state of the mobile phone. It checks which of two conditions holds: the phone is secured in a mounting, or it is not. In


either case, the intelligent agent follows a different process, depending on which condition is met.

4. As the fourth step, if the phone is secured in a mounting, the agent checks whether the driver is using a hands-free kit, Bluetooth or a headset, and passes the result to the next stage. If the phone is not secured in a mounting, it makes the same check and likewise passes its result to the next stage. If the phone is not secured in a mounting and the driver is not using a hands-free kit, Bluetooth or a headset, the agent determines which of two actions the driver is performing: accepting a call, or creating, sending or looking at messages.

5. As the fifth and final step, the agent outputs its result as ‘safe’; otherwise, it sends a ‘warning message’.

The entire functionality of the intelligent agent is performed in these five steps.
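The steps above can be read as a small decision procedure. The following is a minimal sketch of one possible reading, not the project's actual implementation. The names `PhoneState` and `assess` and the field names are hypothetical. The rule that messaging always triggers a warning is taken from the law quoted earlier in this report.

```python
from dataclasses import dataclass


@dataclass
class PhoneState:
    """Hypothetical bundle of the agent's percepts about the phone."""
    in_use: bool      # driver is interacting with the phone
    mounted: bool     # phone is secured in a mounting fixed to the vehicle
    hands_free: bool  # Bluetooth kit, headset or voice activation in use
    action: str       # "call" (accept or end a call) or "message"


def assess(state: PhoneState) -> str:
    """Return 'safe' or 'warning message' for the current percepts."""
    # Steps 1-2: nothing to check until the driver starts using the phone.
    if not state.in_use:
        return "safe"
    # Creating, sending or reading messages is never permitted while driving.
    if state.action == "message":
        return "warning message"
    # Steps 3-4: a call is acceptable if the phone is mounted or hands-free.
    if state.mounted or state.hands_free:
        return "safe"
    # Step 5: every other combination produces a warning.
    return "warning message"


print(assess(PhoneState(in_use=True, mounted=False, hands_free=False, action="call")))
# prints "warning message"
```

Keeping the percepts in one record makes it easy to add further conditions, such as whether the vehicle is parked, without changing the function signature.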

PSEUDOCODE ALGORITHM

Driver of a vehicle;
Using mobile phone;
if (during driving) {
    Phone is not secured in mounting
    while (Warning)
}

function driver-Agent([location, status]) returns an action
    if status = Using mobile phone during driving then return Warning
    else if location = A then return Right
    else if location = B then return Left

# Python version of the reflex agent above; sensors is a dict of percepts.
def driver_agent(sensors):
    if sensors['status'] == 'Using mobile phone during driving':
        return 'Warning'


    elif sensors['location'] == 'A':
        return 'Right'
    elif sensors['location'] == 'B':
        return 'Left'

Agent Programs

function DRIVER(percept) returns action
    static: driver, the driver driving a vehicle
    memory ← UPDATE-MEMORY(memory, percept)
    action ← CHOOSE-BEST-ACTION(memory)
    memory ← UPDATE-MEMORY(memory, action)
    return action
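The agent program above can be sketched in Python as follows. This is an illustrative reading under stated assumptions: the class name `DriverAgent`, the list-based memory, and the policy inside `choose_best_action` (which reuses the status and location rules from the earlier pseudocode, plus a hypothetical `'NoOp'` fallback) are not part of the original design.

```python
class DriverAgent:
    """Agent that records percepts and actions in memory before acting."""

    def __init__(self):
        # Assumed representation: a chronological list of percepts and actions.
        self.memory = []

    def update_memory(self, item):
        """UPDATE-MEMORY: append the latest percept or action record."""
        self.memory.append(item)

    def choose_best_action(self):
        """CHOOSE-BEST-ACTION: decide from the most recent percept in memory."""
        percept = self.memory[-1]
        if percept.get("status") == "Using mobile phone during driving":
            return "Warning"
        if percept.get("location") == "A":
            return "Right"
        if percept.get("location") == "B":
            return "Left"
        return "NoOp"  # hypothetical fallback when no rule matches

    def step(self, percept):
        """One cycle of the agent program: perceive, decide, remember."""
        self.update_memory(percept)
        action = self.choose_best_action()
        self.update_memory({"action": action})
        return action


agent = DriverAgent()
print(agent.step({"status": "Using mobile phone during driving", "location": "A"}))
# prints "Warning"
```

Because each cycle stores both the percept and the chosen action, a later policy could look further back in `memory`, for example to escalate from a warning to a penalty after repeated phone use.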

The pseudocode algorithm for the intelligent agent performs its designed functions in the following ways.

The driver needs to know where the vehicle is, whether anything is on the road ahead, and the speed at which the vehicle is travelling. The driver has to focus on driving in order to keep good control of the vehicle, and should ensure that the mobile phone is secured in the mounting before even starting the vehicle. The driver should only press or touch a button to answer a call and to end it, and these actions are accepted only when the driver uses Bluetooth, a hands-free kit, a headset or similar to take the call.

The algorithm ensures that each step of the prototype design is implemented using the specified programming language.

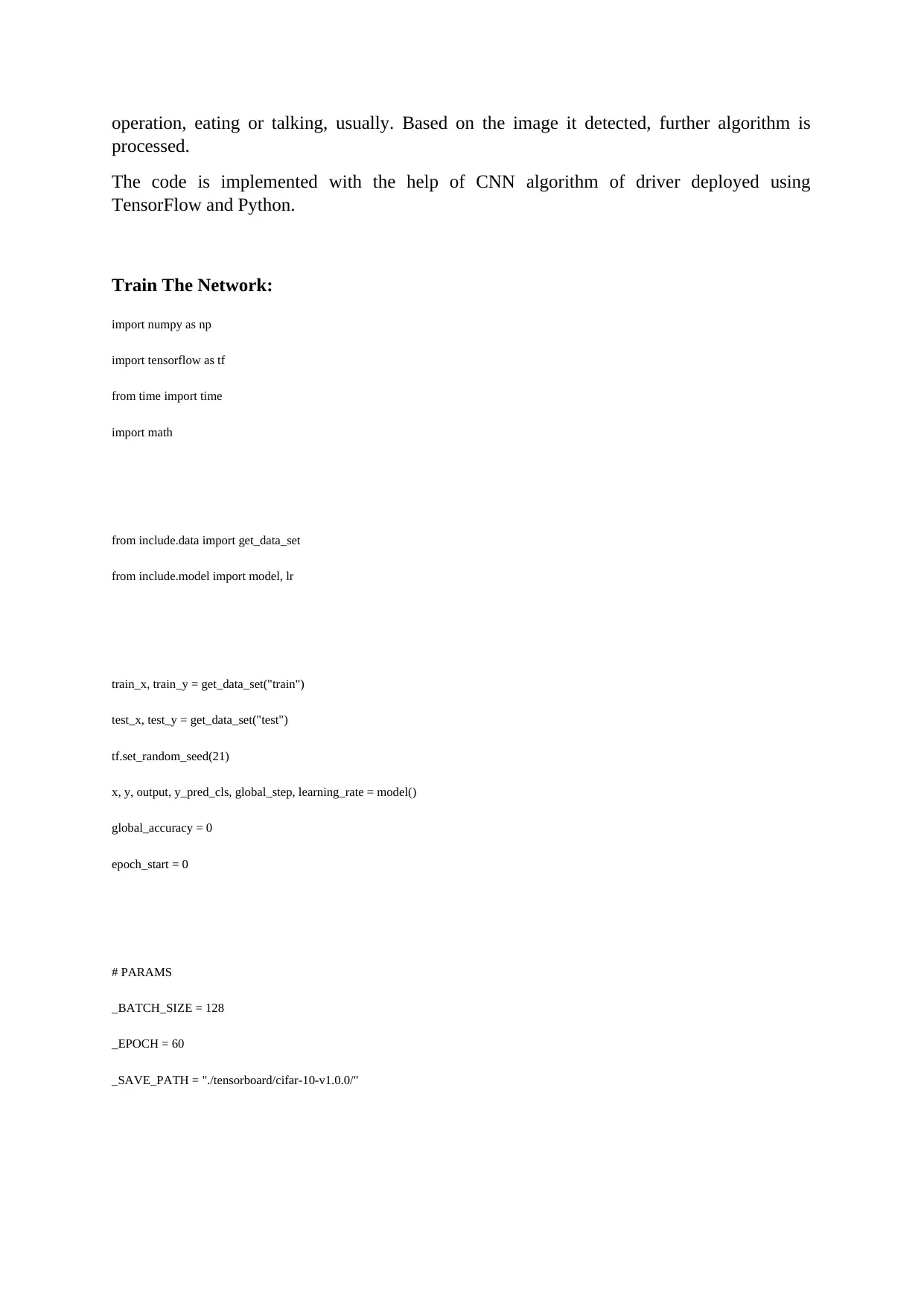

CODE IMPLEMENTATION USING THE CNN ALGORITHM

CNN Algorithm

A convolutional neural network (CNN) is a class of deep neural network that is applied to the analysis of visual imagery. CNNs are regularized versions of multilayer perceptrons.

Compared with other image classification algorithms, CNNs require relatively little pre-processing. Another major advantage is that a CNN does not depend on human effort or prior knowledge for feature design. These algorithms have various applications, including image classification, image and video recognition, natural language processing, recommender systems and medical image analysis.
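To make the core operation concrete, the sketch below implements a single convolution followed by a ReLU activation in plain Python. It is a toy illustration only, not the network used in this project: the 4x4 image and the vertical-edge kernel are made-up values, and in a trained CNN the kernel weights would be learned from data rather than chosen by hand.

```python
def conv2d(image, kernel):
    """Valid 2D cross-correlation, the operation CNN layers call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Replace negative responses with zero."""
    return [[max(0, v) for v in row] for row in feature_map]


# Toy 4x4 grayscale image: dark left half, bright right half.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hand-picked kernel that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = relu(conv2d(image, kernel))
print(feature_map)  # [[0, 18, 0], [0, 18, 0], [0, 18, 0]]
```

The feature map is non-zero only in the column where the edge lies, which is the sense in which a convolutional layer extracts features directly from raw pixels.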

Here the CNN algorithm has been adapted and applied to classify images of the driver and identify what kind of operation the driver is performing. It analyses the image and interprets the driver's activity. The activity of the driver can be driving, mobile


operation, eating, or talking. The rest of the algorithm then proceeds based on the detected activity.
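The last step of such a classifier, turning the network's raw output scores into one of the activities named above, can be sketched as follows. The class order in `ACTIVITIES`, the helper names and the example scores are assumptions made for illustration; the project's own label encoding is not shown in this section.

```python
import math

# Hypothetical class order; the real label encoding is not given in the text.
ACTIVITIES = ["driving", "mobile operation", "eating", "talking"]


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify_activity(logits):
    """Return the most probable activity and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return ACTIVITIES[best], probs[best]


# Made-up scores for one image, one score per activity.
label, confidence = classify_activity([0.2, 3.1, -1.0, 0.5])
print(label, round(confidence, 3))
```

Here the second score is the largest, so the image is labelled as mobile operation. The agent could use the returned probability to decide whether the prediction is reliable enough to act on.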

The CNN for classifying the driver's activity is implemented in Python using TensorFlow.

Train The Network:

import numpy as np
import tensorflow as tf
from time import time
import math

# Project-local helper modules (their source is not shown in this section).
from include.data import get_data_set
from include.model import model, lr

train_x, train_y = get_data_set("train")
test_x, test_y = get_data_set("test")

# Fix the graph-level random seed (TensorFlow 1.x API).
tf.set_random_seed(21)

x, y, output, y_pred_cls, global_step, learning_rate = model()
global_accuracy = 0
epoch_start = 0

# PARAMS
_BATCH_SIZE = 128
_EPOCH = 60
_SAVE_PATH = "./tensorboard/cifar-10-v1.0.0/"

processed.

The code is implemented with the help of CNN algorithm of driver deployed using

TensorFlow and Python.

Train The Network:

import numpy as np

import tensorflow as tf

from time import time

import math

from include.data import get_data_set

from include.model import model, lr

train_x, train_y = get_data_set("train")

test_x, test_y = get_data_set("test")

tf.set_random_seed(21)

x, y, output, y_pred_cls, global_step, learning_rate = model()

global_accuracy = 0

epoch_start = 0

# PARAMS

_BATCH_SIZE = 128

_EPOCH = 60

_SAVE_PATH = "./tensorboard/cifar-10-v1.0.0/"

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

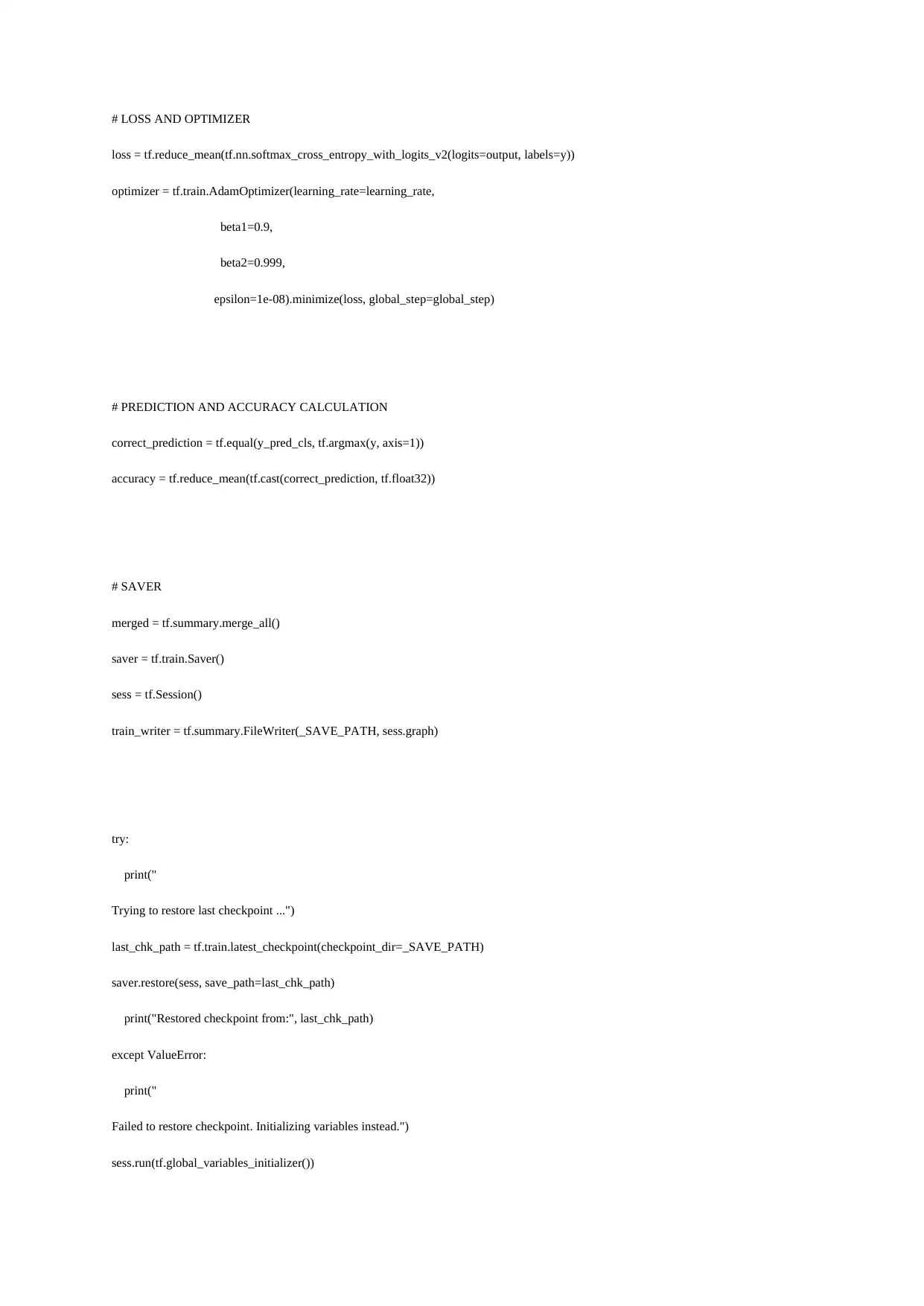

# LOSS AND OPTIMIZER
loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits_v2(logits=output, labels=y))
optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate,
                                   beta1=0.9,
                                   beta2=0.999,
                                   epsilon=1e-08).minimize(loss, global_step=global_step)

# PREDICTION AND ACCURACY CALCULATION
correct_prediction = tf.equal(y_pred_cls, tf.argmax(y, axis=1))
accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

# SAVER
merged = tf.summary.merge_all()
saver = tf.train.Saver()
sess = tf.Session()
train_writer = tf.summary.FileWriter(_SAVE_PATH, sess.graph)

try:
    print("\nTrying to restore last checkpoint ...")
    last_chk_path = tf.train.latest_checkpoint(checkpoint_dir=_SAVE_PATH)
    saver.restore(sess, save_path=last_chk_path)
    print("Restored checkpoint from:", last_chk_path)
except ValueError:
    print("\nFailed to restore checkpoint. Initializing variables instead.")
    sess.run(tf.global_variables_initializer())

loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits_v2(logits=output, labels=y))

optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate,

beta1=0.9,

beta2=0.999,

epsilon=1e-08).minimize(loss, global_step=global_step)

# PREDICTION AND ACCURACY CALCULATION

correct_prediction = tf.equal(y_pred_cls, tf.argmax(y, axis=1))

accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

# SAVER

merged = tf.summary.merge_all()

saver = tf.train.Saver()

sess = tf.Session()

train_writer = tf.summary.FileWriter(_SAVE_PATH, sess.graph)

try:

print("

Trying to restore last checkpoint ...")

last_chk_path = tf.train.latest_checkpoint(checkpoint_dir=_SAVE_PATH)

saver.restore(sess, save_path=last_chk_path)

print("Restored checkpoint from:", last_chk_path)

except ValueError:

print("

Failed to restore checkpoint. Initializing variables instead.")

sess.run(tf.global_variables_initializer())

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

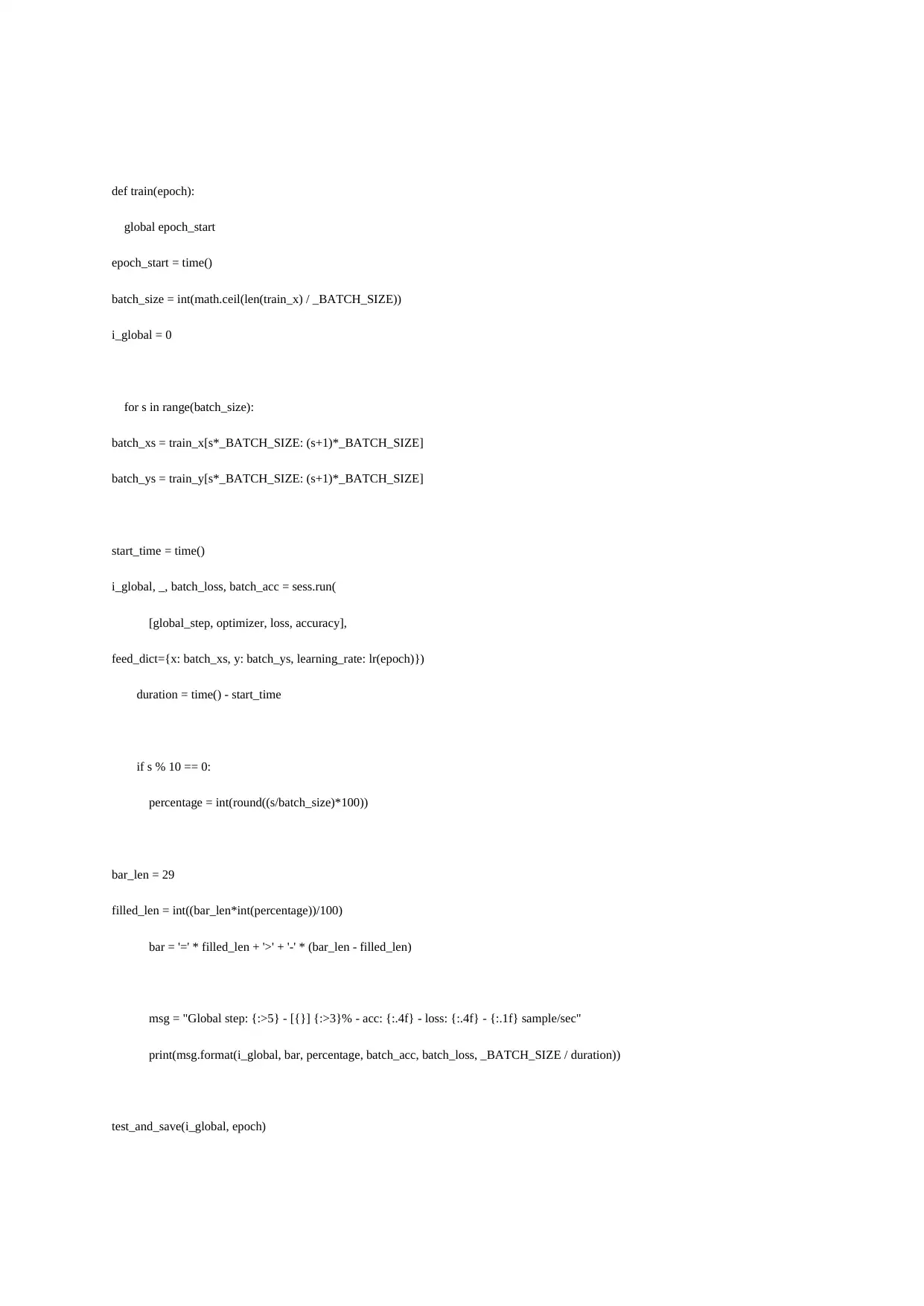

def train(epoch):
    global epoch_start
    epoch_start = time()
    # Number of mini-batches needed to cover the training set once.
    batch_size = int(math.ceil(len(train_x) / _BATCH_SIZE))
    i_global = 0

    for s in range(batch_size):
        batch_xs = train_x[s*_BATCH_SIZE: (s+1)*_BATCH_SIZE]
        batch_ys = train_y[s*_BATCH_SIZE: (s+1)*_BATCH_SIZE]

        start_time = time()
        i_global, _, batch_loss, batch_acc = sess.run(
            [global_step, optimizer, loss, accuracy],
            feed_dict={x: batch_xs, y: batch_ys, learning_rate: lr(epoch)})
        duration = time() - start_time

        # Print a progress bar every 10 mini-batches.
        if s % 10 == 0:
            percentage = int(round((s/batch_size)*100))

            bar_len = 29
            filled_len = int((bar_len*int(percentage))/100)
            bar = '=' * filled_len + '>' + '-' * (bar_len - filled_len)

            msg = "Global step: {:>5} - [{}] {:>3}% - acc: {:.4f} - loss: {:.4f} - {:.1f} sample/sec"
            print(msg.format(i_global, bar, percentage, batch_acc, batch_loss, _BATCH_SIZE / duration))

    # Evaluate on the test set once the epoch is complete.
    test_and_save(i_global, epoch)

global epoch_start

epoch_start = time()

batch_size = int(math.ceil(len(train_x) / _BATCH_SIZE))

i_global = 0

for s in range(batch_size):

batch_xs = train_x[s*_BATCH_SIZE: (s+1)*_BATCH_SIZE]

batch_ys = train_y[s*_BATCH_SIZE: (s+1)*_BATCH_SIZE]

start_time = time()

i_global, _, batch_loss, batch_acc = sess.run(

[global_step, optimizer, loss, accuracy],

feed_dict={x: batch_xs, y: batch_ys, learning_rate: lr(epoch)})

duration = time() - start_time

if s % 10 == 0:

percentage = int(round((s/batch_size)*100))

bar_len = 29

filled_len = int((bar_len*int(percentage))/100)

bar = '=' * filled_len + '>' + '-' * (bar_len - filled_len)

msg = "Global step: {:>5} - [{}] {:>3}% - acc: {:.4f} - loss: {:.4f} - {:.1f} sample/sec"

print(msg.format(i_global, bar, percentage, batch_acc, batch_loss, _BATCH_SIZE / duration))

test_and_save(i_global, epoch)

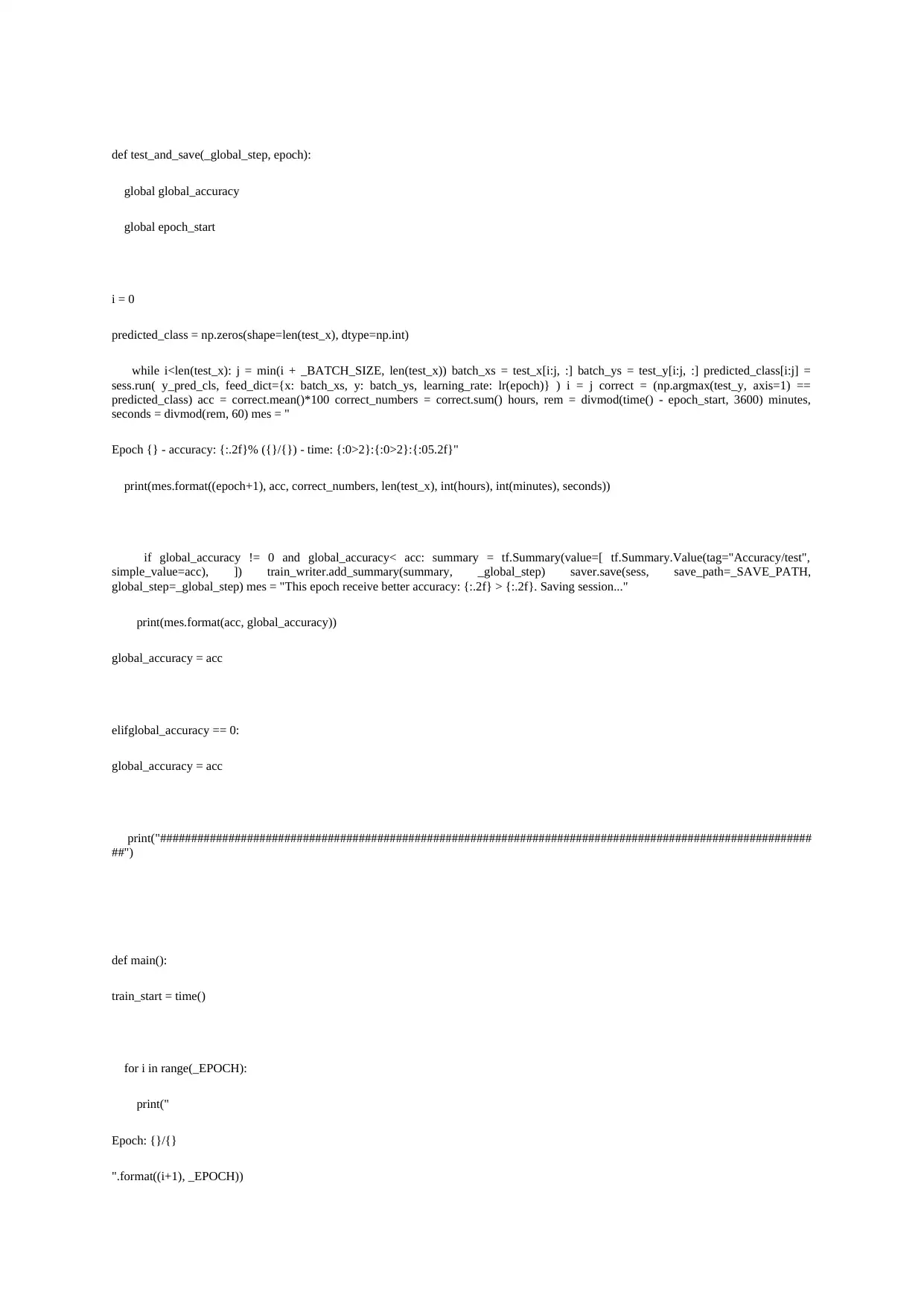

def test_and_save(_global_step, epoch):
    global global_accuracy
    global epoch_start

    # Predict the test set in mini-batches.
    i = 0
    predicted_class = np.zeros(shape=len(test_x), dtype=int)
    while i < len(test_x):
        j = min(i + _BATCH_SIZE, len(test_x))
        batch_xs = test_x[i:j, :]
        batch_ys = test_y[i:j, :]
        predicted_class[i:j] = sess.run(
            y_pred_cls,
            feed_dict={x: batch_xs, y: batch_ys, learning_rate: lr(epoch)}
        )
        i = j

    correct = (np.argmax(test_y, axis=1) == predicted_class)
    acc = correct.mean()*100
    correct_numbers = correct.sum()

    hours, rem = divmod(time() - epoch_start, 3600)
    minutes, seconds = divmod(rem, 60)
    mes = "\nEpoch {} - accuracy: {:.2f}% ({}/{}) - time: {:0>2}:{:0>2}:{:05.2f}"
    print(mes.format((epoch+1), acc, correct_numbers, len(test_x), int(hours), int(minutes), seconds))

    # Save a checkpoint only when the test accuracy improves.
    if global_accuracy != 0 and global_accuracy < acc:
        summary = tf.Summary(value=[
            tf.Summary.Value(tag="Accuracy/test", simple_value=acc),
        ])
        train_writer.add_summary(summary, _global_step)

        saver.save(sess, save_path=_SAVE_PATH, global_step=_global_step)

        mes = "This epoch receive better accuracy: {:.2f} > {:.2f}. Saving session..."
        print(mes.format(acc, global_accuracy))
        global_accuracy = acc
    elif global_accuracy == 0:
        global_accuracy = acc

    print("###########################################################################################################")


def main():
    train_start = time()

    for i in range(_EPOCH):
        print("\nEpoch: {}/{}\n".format((i+1), _EPOCH))
