CS 301: Algorithm Analysis - Big O, Big Theta, and Big Omega

Added on 2022/10/10

Homework Assignment

AI Summary

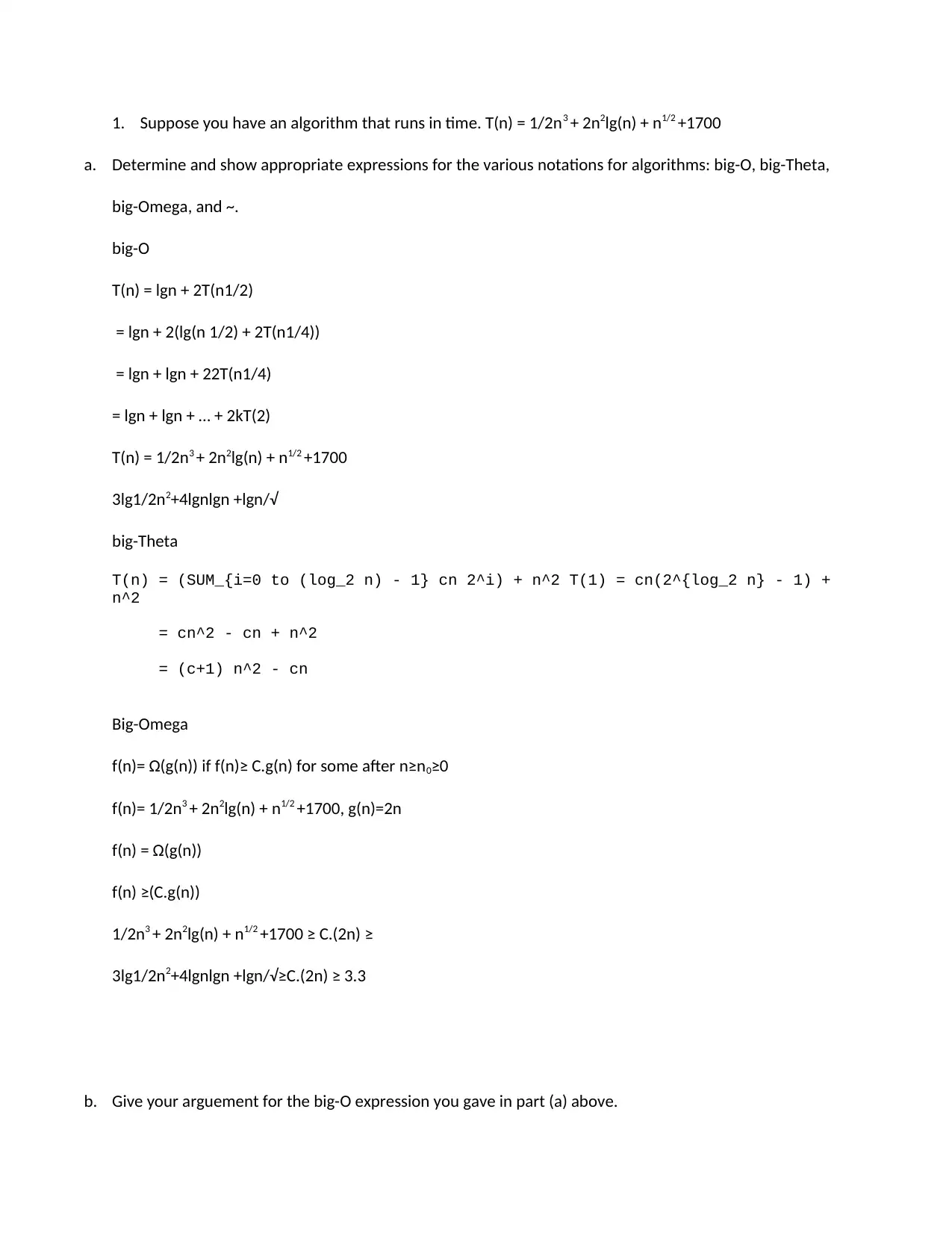

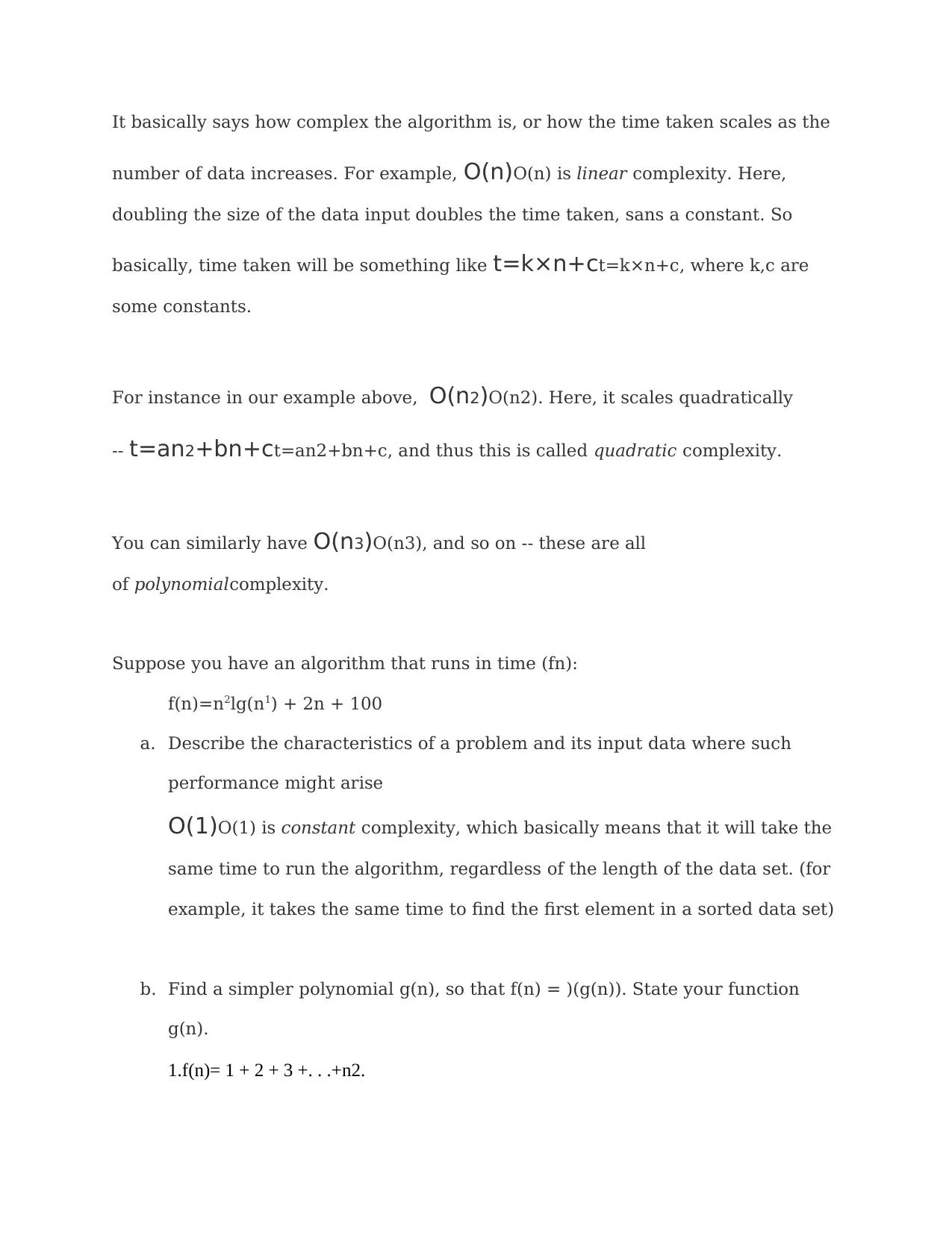

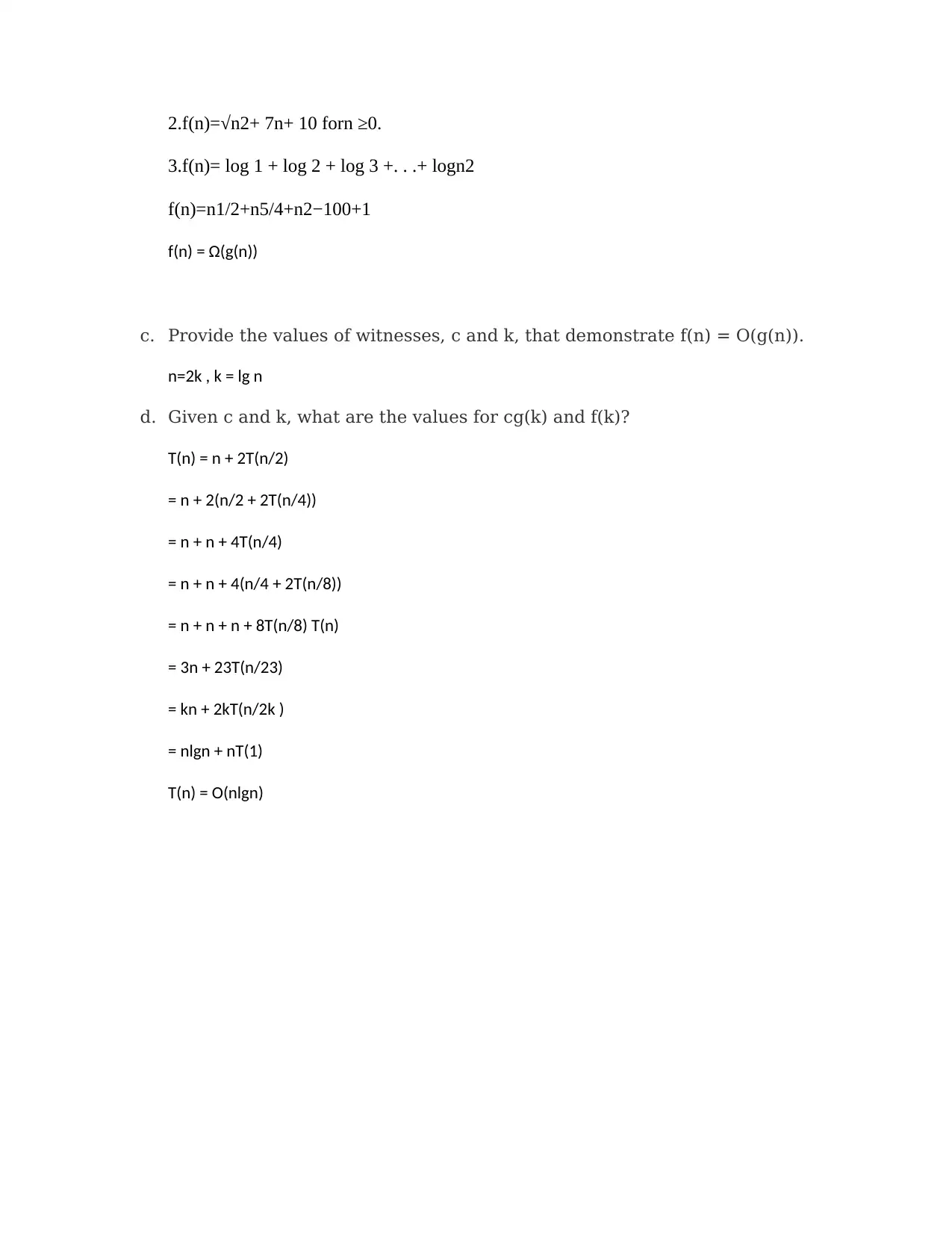

This assignment solution analyzes algorithm performance using asymptotic notation. It uses Big O, Big Theta, and Big Omega to express time complexity, and gives an expression in each notation for a given algorithm, supported by arguments and examples. It also describes the characteristics of the problems and input data for which each level of performance arises, and simplifies polynomial functions using Big O notation. Finally, it uses witnesses to demonstrate the Big O relationship between functions and analyzes the time complexity of recursive algorithms. Together, these exercises show how to evaluate and compare the efficiency of algorithms.
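The idea of witnesses mentioned in the summary can be illustrated with a small sketch. Under the standard definition, f(x) is O(g(x)) if there are constants C and k (the witnesses) such that |f(x)| <= C * |g(x)| for all x >= k. The functions `f` and `g`, the witness values, and the helper `witnesses_hold` below are hypothetical and chosen only for illustration; they are not taken from the assignment. The check covers a finite range of integers, so it can refute a bad pair of witnesses but does not prove a good one.

```python
def witnesses_hold(f, g, c, k, upto=10_000):
    """Return True if |f(x)| <= c * |g(x)| for every integer x in [k, upto].

    This is a finite spot check, not a proof: passing it is only
    evidence that (c, k) are valid witnesses for f(x) = O(g(x)).
    """
    return all(abs(f(x)) <= c * abs(g(x)) for x in range(k, upto + 1))


# Hypothetical example polynomial (an assumption, not from the assignment).
def f(x):
    return 3 * x**2 + 5 * x + 7


# Candidate bounding function: the highest-order term without its coefficient.
def g(x):
    return x**2


# For x >= 1: 3x^2 + 5x + 7 <= 3x^2 + 5x^2 + 7x^2 = 15x^2, so C = 15, k = 1 work.
print(witnesses_hold(f, g, c=15, k=1))  # True

# C = 3 is too small: 3x^2 + 5x + 7 > 3x^2 for every x >= 1.
print(witnesses_hold(f, g, c=3, k=1))   # False
```

The inequality in the comment is what actually establishes the bound. It also shows why a polynomial simplifies to its highest-order term in Big O notation: each lower-order term is bounded by a multiple of x^2 once x >= 1.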
