The Application of Computer Vision in Facial Filler Injection Analysis

VerifiedAdded on 2023/01/19

|47

|13253

|64

Report

AI Summary

This report delves into the application of computer vision in the context of facial filler injections, exploring how AI can enhance these procedures. The study begins with an introduction to facial fillers and the challenges computers face in image analysis compared to human perception. The literature review covers face detection techniques, including texture descriptors and deep learning methods, facial feature extraction, and wrinkle detection algorithms like Hessian Line Tracking. The methodological approach outlines the use of the FERET database, MTCNN for face detection, image cropping, k-means clustering for data labeling, and the Inception model with transfer learning for wrinkle prediction. The implementation strategy details model formulation, data preparation, and feature engineering. Evaluation includes proposed metrics and experimental strategies. The results show an 85.3% accuracy using the Inception model in predicting wrinkles. The discussion summarizes the findings, answers research questions, and addresses limitations, proposing recommendations for future research, particularly in the context of beauty treatments and aesthetic medicine.

Analysing the Role of

Computer Vision in Facial Filler

Injections

Computer Vision in Facial Filler

Injections

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

Abstract

Analysing the Role of Computer Vision in Facial Filler Injections

The report will highlight the Role of Computer Vision in Facial Filler Injections and in

particular facial detection, facial feature extraction, and wrinkle detection. After a systematic

review of the past literature and research, the study will use mixed research methodology to

deduce conclusions. Experiments on the use of computer visions on image acquisition, image

processing and analysis will be done. From the results of the literature review, although much

has been written regarding these three areas, there is still a dearth in research regarding how

computer vision can be deployed in facial filler injections. Such is the case that although

researchers have come up with operators to detect human faces on an image, existing studies

have not been able to meet the challenges, for instance those posed by illumination, old age, or

artefacts resulting from repeated patches. To address this research gaps, the study will carry

out experiments in three major areas including image acquisition, processing, and analysis

to ascertain whether computer vision methods can influence the detection of areas to

apply facial filler injections which can be included in various image based applications related

to beauty treatments and aesthetic medicine.

Analysing the Role of Computer Vision in Facial Filler Injections

The report will highlight the Role of Computer Vision in Facial Filler Injections and in

particular facial detection, facial feature extraction, and wrinkle detection. After a systematic

review of the past literature and research, the study will use mixed research methodology to

deduce conclusions. Experiments on the use of computer visions on image acquisition, image

processing and analysis will be done. From the results of the literature review, although much

has been written regarding these three areas, there is still a dearth in research regarding how

computer vision can be deployed in facial filler injections. Such is the case that although

researchers have come up with operators to detect human faces on an image, existing studies

have not been able to meet the challenges, for instance those posed by illumination, old age, or

artefacts resulting from repeated patches. To address this research gaps, the study will carry

out experiments in three major areas including image acquisition, processing, and analysis

to ascertain whether computer vision methods can influence the detection of areas to

apply facial filler injections which can be included in various image based applications related

to beauty treatments and aesthetic medicine.

Table of Contents

Abstract...........................................................................................................................2

Introduction.....................................................................................................................4

Literature Review............................................................................................................6

Introduction..................................................................................................................6

Face Detection.............................................................................................................7

Texture descriptor....................................................................................................7

Viola-Jones face detector.........................................................................................8

Deep learning-based method....................................................................................9

Facial Feature Extraction...........................................................................................11

Cascaded regression-based method.......................................................................11

Deep learning-based methods................................................................................13

Comprehensive survey...........................................................................................15

Wrinkle Detection......................................................................................................18

Hessian Line Tracking...........................................................................................18

Transient wrinkles detector....................................................................................18

Gabor filters and image morphology.....................................................................19

Texture orientation fields.......................................................................................19

Conclusion.................................................................................................................22

Methodological Approach.............................................................................................23

Implementation strategy................................................................................................26

Evaluation......................................................................................................................32

Discussion.....................................................................................................................34

References.....................................................................................................................41

Abstract...........................................................................................................................2

Introduction.....................................................................................................................4

Literature Review............................................................................................................6

Introduction..................................................................................................................6

Face Detection.............................................................................................................7

Texture descriptor....................................................................................................7

Viola-Jones face detector.........................................................................................8

Deep learning-based method....................................................................................9

Facial Feature Extraction...........................................................................................11

Cascaded regression-based method.......................................................................11

Deep learning-based methods................................................................................13

Comprehensive survey...........................................................................................15

Wrinkle Detection......................................................................................................18

Hessian Line Tracking...........................................................................................18

Transient wrinkles detector....................................................................................18

Gabor filters and image morphology.....................................................................19

Texture orientation fields.......................................................................................19

Conclusion.................................................................................................................22

Methodological Approach.............................................................................................23

Implementation strategy................................................................................................26

Evaluation......................................................................................................................32

Discussion.....................................................................................................................34

References.....................................................................................................................41

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

Chapter One

Introduction

Filler injections are a modern cosmetic procedure and have been widely embraced by

women and men alike because of their wonderful ability to create fuller cheeks, lips,

and other facial features. Filler injections are also used to reduce the effects of

wrinkles around the mouth, eyes, and eyebrows and to hide any scars that may be

causing an individual to feel self-conscious and unattractive.

We as human beings can effortlessly see what the image represents. As an example,

we can easily see that the image contains a number of objects, and we can detect faces

on an image as well distinguish between the different features of the face. When it

comes to computer systems, on the other hand, has difficulties. Computers cannot

easily see whether the image contains objects or not. Also, cannot easily detect human

faces and facial features.

The aim of this thesis is to explore the contributions of the existing work presented on

the implementation of computer vision in the analysis of facial features and investigate

the role of computer vision in facial filler injections.

Research questions

What are the best methods that enable computers to detect a face on an image?

What are the computer vision methods to discover the location of different facial

features such as mouth, eyes?

Can pattern recognition methods be used to detect wrinkles or fine lines?

Introduction

Filler injections are a modern cosmetic procedure and have been widely embraced by

women and men alike because of their wonderful ability to create fuller cheeks, lips,

and other facial features. Filler injections are also used to reduce the effects of

wrinkles around the mouth, eyes, and eyebrows and to hide any scars that may be

causing an individual to feel self-conscious and unattractive.

We as human beings can effortlessly see what the image represents. As an example,

we can easily see that the image contains a number of objects, and we can detect faces

on an image as well distinguish between the different features of the face. When it

comes to computer systems, on the other hand, has difficulties. Computers cannot

easily see whether the image contains objects or not. Also, cannot easily detect human

faces and facial features.

The aim of this thesis is to explore the contributions of the existing work presented on

the implementation of computer vision in the analysis of facial features and investigate

the role of computer vision in facial filler injections.

Research questions

What are the best methods that enable computers to detect a face on an image?

What are the computer vision methods to discover the location of different facial

features such as mouth, eyes?

Can pattern recognition methods be used to detect wrinkles or fine lines?

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

Data will be used for investigations

The Facial Recognition Technology (FERET) database.

Experiments expected to run

1. Image acquisition.

2. Image processing.

3. Image analysis.

Output of the thesis

Computer vision method influence the detection of areas to apply facial filler

injections.

The Facial Recognition Technology (FERET) database.

Experiments expected to run

1. Image acquisition.

2. Image processing.

3. Image analysis.

Output of the thesis

Computer vision method influence the detection of areas to apply facial filler

injections.

Chapter Two

Literature Review

Introduction

Modern medical practice has embraced facial filler injections as part of the

innumerable cosmetic procedures that characterize the current age of medicine. These

procedures are not only popular among women, but men as well, meaning that their

acceptability will continue to increase in the world across different demographics. The

reason why facial-filler injections have grown in popularity draws from the fact that

they carry the ability to help people to create fuller cheeks, lips, in addition to other

related facial features. Filler injections, at the same time also diminish the effects of

wrinkles around the eyes, mouth, and eyebrows not to mention the fact that they also

help people to hide scars. More importantly is the fact that they play an integral role in

helping people to stop being self-conscious about their appearance while increasing

their overall confidence.

This literature review is divided into three major parts including facial

detection, facial feature extraction, and wrinkle detection. The first part of the

literature review explores studies on facial detection and covers specific areas, such as

face description with Local Binary Patterns (LBP). Medical practice requires the input

of the actual face into a computer in order to correctly analyse it for optimization with

regard to filler injections. The review will seek to analyse the extent to which existing

operators have delivered significant capabilities that have gone a long way towards

helping medical practitioners with facial detection. The second part of the review will

explore facial feature extraction. After an image is input into a computer, there is a

need for an operative that can be used for extracting facial features for analysis and

subsequent optimization in order to generate desired results for facial filler injections.

The review covers studies for instance which make contributions to research including

proposing a novel approach for image alignment whose basis is on ensemble of

regression trees with the capacity to carry out shape invariant selection of features. The

last section on detection of wrinkles is necessary in light of difficulties encountered

related to wrinkle variability that require complex algorithms for detecting the said

wrinkles. Other problems have to do with transient wrinkles that appear from facial

expressions and require a different approach from permanent wrinkles of old age. The

review covers different aspects in wrinkle detection including Hessian Line Tracking

Literature Review

Introduction

Modern medical practice has embraced facial filler injections as part of the

innumerable cosmetic procedures that characterize the current age of medicine. These

procedures are not only popular among women, but men as well, meaning that their

acceptability will continue to increase in the world across different demographics. The

reason why facial-filler injections have grown in popularity draws from the fact that

they carry the ability to help people to create fuller cheeks, lips, in addition to other

related facial features. Filler injections, at the same time also diminish the effects of

wrinkles around the eyes, mouth, and eyebrows not to mention the fact that they also

help people to hide scars. More importantly is the fact that they play an integral role in

helping people to stop being self-conscious about their appearance while increasing

their overall confidence.

This literature review is divided into three major parts including facial

detection, facial feature extraction, and wrinkle detection. The first part of the

literature review explores studies on facial detection and covers specific areas, such as

face description with Local Binary Patterns (LBP). Medical practice requires the input

of the actual face into a computer in order to correctly analyse it for optimization with

regard to filler injections. The review will seek to analyse the extent to which existing

operators have delivered significant capabilities that have gone a long way towards

helping medical practitioners with facial detection. The second part of the review will

explore facial feature extraction. After an image is input into a computer, there is a

need for an operative that can be used for extracting facial features for analysis and

subsequent optimization in order to generate desired results for facial filler injections.

The review covers studies for instance which make contributions to research including

proposing a novel approach for image alignment whose basis is on ensemble of

regression trees with the capacity to carry out shape invariant selection of features. The

last section on detection of wrinkles is necessary in light of difficulties encountered

related to wrinkle variability that require complex algorithms for detecting the said

wrinkles. Other problems have to do with transient wrinkles that appear from facial

expressions and require a different approach from permanent wrinkles of old age. The

review covers different aspects in wrinkle detection including Hessian Line Tracking

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

as well as fast deterministic algorithms based on image morphology as well as Gabor

filters with the aim of improving localization results. The reason why the review is

divided into three major parts is to allow for a thorough exploration of the concepts

related to filler injections and establish gaps that remain regarding how computer

vision can help to improve the process.

Face Detection

Texture descriptor. In the first study, researchers presented a novel and efficient

facial image representation based on the texture features of LBP (Ahonen, Hadid, &

Pietikainen, 2006). The study primarily divided the facial image into smaller regions

and proceeded to compute a description of each region using local binary patterns. In

the next stage, the researchers combined the descriptors into a spatially enhanced

histogram. The rationale was that the texture description of a single region described

the appearance of the region while the combination of all region descriptions encoded

the global geometry of the face. Facial description approaches that employ the LBP

approach are one of the most preferred texture descriptors whose use also cuts across

numerous applications. Preference for the LBP based approach draws from evidence in

which it is highly discriminative while also generating a number of merits. Among the

advantages that the LBP-based face description approach generates is invariance to

monotonic gray-level changes as well as computational efficiency (Ahonen et al.,

2006). The reason why these two factors are particularly important is because they

make the approach suitable for image analysis tasks that tend to be increasingly

demanding. The rationale behind researchers’ preference for LBP-based facial

description similarly draws from the fact that faces can be seen as composition of

micro-patterns whereby an operator, such as LBP is better positioned in describing

them. In the beginning, the LBP operator was primarily designed as a tool for texture

description. With the LBP, every pixel of an image derives a label. This is achieved by

thresholding the 3×3-neighborhood of each pixel with the midpoint pixel-value – the

operator considers the result as a binary number (Ahonen et al., 2006). They (the

operator) then use the histogram of the labels as a texture descriptor. In their study,

Ahonen et al. (2006) assessed their approach on the face recognition problem using the

Colorado State University Face Identification Evaluation System. The images in the

experiment were acquired from the FERET database. The FERET database comprises

of 14,051 grayscale images in total which as Ahonen et al. report (2006) represent

filters with the aim of improving localization results. The reason why the review is

divided into three major parts is to allow for a thorough exploration of the concepts

related to filler injections and establish gaps that remain regarding how computer

vision can help to improve the process.

Face Detection

Texture descriptor. In the first study, researchers presented a novel and efficient

facial image representation based on the texture features of LBP (Ahonen, Hadid, &

Pietikainen, 2006). The study primarily divided the facial image into smaller regions

and proceeded to compute a description of each region using local binary patterns. In

the next stage, the researchers combined the descriptors into a spatially enhanced

histogram. The rationale was that the texture description of a single region described

the appearance of the region while the combination of all region descriptions encoded

the global geometry of the face. Facial description approaches that employ the LBP

approach are one of the most preferred texture descriptors whose use also cuts across

numerous applications. Preference for the LBP based approach draws from evidence in

which it is highly discriminative while also generating a number of merits. Among the

advantages that the LBP-based face description approach generates is invariance to

monotonic gray-level changes as well as computational efficiency (Ahonen et al.,

2006). The reason why these two factors are particularly important is because they

make the approach suitable for image analysis tasks that tend to be increasingly

demanding. The rationale behind researchers’ preference for LBP-based facial

description similarly draws from the fact that faces can be seen as composition of

micro-patterns whereby an operator, such as LBP is better positioned in describing

them. In the beginning, the LBP operator was primarily designed as a tool for texture

description. With the LBP, every pixel of an image derives a label. This is achieved by

thresholding the 3×3-neighborhood of each pixel with the midpoint pixel-value – the

operator considers the result as a binary number (Ahonen et al., 2006). They (the

operator) then use the histogram of the labels as a texture descriptor. In their study,

Ahonen et al. (2006) assessed their approach on the face recognition problem using the

Colorado State University Face Identification Evaluation System. The images in the

experiment were acquired from the FERET database. The FERET database comprises

of 14,051 grayscale images in total which as Ahonen et al. report (2006) represent

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

1,199 individuals. The images in the database vary with regard to facial expressions,

lighting, as well as pose angle among many other attributes. The study however only

employed frontal face images that were further divided into five clusters. For control

algorithms, the researchers employed Elastic Bunch Graph Matching (EBGM),

Bayesian Intra/Extra-personal Classifier, and Principal Component Analysis (PCA). In

order to ensure that the experiment could be reproduced, the researchers utilized

publicly available Colorado State University face identification evaluation system. The

system deploys the FERET face images while also following the FERET test

procedure for semi-automatic face-recognition algorithms – however, the authors

applied slight adjustments in order to fit the model to their experiment. This is the first

study to apply the LBP operator to represent facial images – the LBP was initially

applied in image retrieval and texture classification. Moreover, the developed model in

this experiment is not only limited to face detection, representation, and facial

expression recognition. Such is the case because the model can be applied to other

recognition and object detection tasks (Ahonen et al., 2006).

Viola-Jones face detector. In the second study, researchers described a machine

learning approach for detecting objects visually with the capacity to process images

extremely rapidly while also achieving high rates of detection (Viola & Jones, 2001).

The paper primarily fused new algorithms as well as insights to develop a framework

for robust and extremely fast object detection. There were three major contributions in

the proposed approach that the authors outline. Firstly, the experiment demonstrates a

new image representation referred to as integral image which is ideal because of the

innate capacity for fast feature evaluation - this capability was in part inspired by

previous research by Papageorgiou et al. (1998), quoted in Viola and Jones, (2001).

However, the detection system in the new experiment did not work directly with image

intensities. Like was the case with the previous authors, the new study employed a set

of features that echoed Haar Basis functions (although these are supplemented by more

complex related filters). So as to compute these features at a high rate and at many

scales, the study introduced the integral image representation for images. Significant to

note is the fact that the integral image can be computed from an image with only a

handful of operations per pixel. Once the image is computed, the researchers could

then compute any of the Haar-like features at any location or scale in constant time

(Viola & Jones, 2001). Secondly, the study also contributes to related body of research

with a method or framework for constructing a classifier which is achieved through

lighting, as well as pose angle among many other attributes. The study however only

employed frontal face images that were further divided into five clusters. For control

algorithms, the researchers employed Elastic Bunch Graph Matching (EBGM),

Bayesian Intra/Extra-personal Classifier, and Principal Component Analysis (PCA). In

order to ensure that the experiment could be reproduced, the researchers utilized

publicly available Colorado State University face identification evaluation system. The

system deploys the FERET face images while also following the FERET test

procedure for semi-automatic face-recognition algorithms – however, the authors

applied slight adjustments in order to fit the model to their experiment. This is the first

study to apply the LBP operator to represent facial images – the LBP was initially

applied in image retrieval and texture classification. Moreover, the developed model in

this experiment is not only limited to face detection, representation, and facial

expression recognition. Such is the case because the model can be applied to other

recognition and object detection tasks (Ahonen et al., 2006).

Viola-Jones face detector. In the second study, researchers described a machine

learning approach for detecting objects visually with the capacity to process images

extremely rapidly while also achieving high rates of detection (Viola & Jones, 2001).

The paper primarily fused new algorithms as well as insights to develop a framework

for robust and extremely fast object detection. There were three major contributions in

the proposed approach that the authors outline. Firstly, the experiment demonstrates a

new image representation referred to as integral image which is ideal because of the

innate capacity for fast feature evaluation - this capability was in part inspired by

previous research by Papageorgiou et al. (1998), quoted in Viola and Jones, (2001).

However, the detection system in the new experiment did not work directly with image

intensities. Like was the case with the previous authors, the new study employed a set

of features that echoed Haar Basis functions (although these are supplemented by more

complex related filters). So as to compute these features at a high rate and at many

scales, the study introduced the integral image representation for images. Significant to

note is the fact that the integral image can be computed from an image with only a

handful of operations per pixel. Once the image is computed, the researchers could

then compute any of the Haar-like features at any location or scale in constant time

(Viola & Jones, 2001). Secondly, the study also contributes to related body of research

with a method or framework for constructing a classifier which is achieved through

using AdaBoost1 in the selection of a small number of critical features. It is important

to note that within any given image sub-window, the total number of Harr-like features

is usually high – higher than the total number of pixels (Viola & Jones, 2001).

Subsequently, in order to account for fast classification with an operator that is not

easily overwhelmed by the sheer scale of tasks, there is a need to ensure that the

learning process excludes a large majority of available features, while focusing on a

small set of features. The selection of features was inspired by the previous study of

Tieu and Viola (2000) whereby it was achieved by a simple modification of the

AdaBoost procedure. In the modification, the weaker learner is restrained in order to

ensure that each weak classifier that the operator returns relies only on one feature. For

this matter, each phase of the boosting process, responsible for selecting a new weak

classifier, is perceived as a feature selection process. The third and last major

contribution of Viola and Jones (2001), study is related to the method of successfully

fusing more complex classifiers in a cascade structure – this is critical because it

dramatically increases the detector by focusing attention on regions of an image that

appear to be promising. The rationale behind focus of attention models, as the authors

explain, draws from the fact that in many instances, it is usually possible for an

experiment to rapidly determine where in an image an object might occur (Viola &

Jones, 2001). For this matter the experiment reserved more complex processing effort

for such regions on an image. The key measure of this model is the rate of false

negatives registered in the attentional process. As such, the researchers selected

majority of object instances by the attentional filter.

Deep learning-based method. In the third study, researchers proposed a deep

cascaded multitask framework that made use of the inherent correlation between

alignment and detection to boost up their performance (Zhang, Zhang, Li, & Qiao,

2016). Although face detection and alignment are increasingly useful in many face

applications for instance facial expression analysis and face recognition, there are a

number of challenges that real world applications of such tasks face. These challenges

are attributed to large visual variations such as extreme lightings, large pose variations,

and occlusions (Zhang et al., 2016). The study sought to build on the cascade face

detector by Viola and Jones in which the researchers utilized AdaBoost and Haar-Like

features for training cascaded classifiers – this approach is reported to achieve good

1 According to Viola and Jones (2001) the “AdaBoost learning algorithm was used to boost the

classification of performance of a simple (sometimes called weak) learning algorithm” (p.I-513).

to note that within any given image sub-window, the total number of Harr-like features

is usually high – higher than the total number of pixels (Viola & Jones, 2001).

Subsequently, in order to account for fast classification with an operator that is not

easily overwhelmed by the sheer scale of tasks, there is a need to ensure that the

learning process excludes a large majority of available features, while focusing on a

small set of features. The selection of features was inspired by the previous study of

Tieu and Viola (2000) whereby it was achieved by a simple modification of the

AdaBoost procedure. In the modification, the weaker learner is restrained in order to

ensure that each weak classifier that the operator returns relies only on one feature. For

this matter, each phase of the boosting process, responsible for selecting a new weak

classifier, is perceived as a feature selection process. The third and last major

contribution of Viola and Jones (2001), study is related to the method of successfully

fusing more complex classifiers in a cascade structure – this is critical because it

dramatically increases the detector by focusing attention on regions of an image that

appear to be promising. The rationale behind focus of attention models, as the authors

explain, draws from the fact that in many instances, it is usually possible for an

experiment to rapidly determine where in an image an object might occur (Viola &

Jones, 2001). For this matter the experiment reserved more complex processing effort

for such regions on an image. The key measure of this model is the rate of false

negatives registered in the attentional process. As such, the researchers selected

majority of object instances by the attentional filter.

Deep learning-based method. In the third study, researchers proposed a deep

cascaded multitask framework that made use of the inherent correlation between

alignment and detection to boost up their performance (Zhang, Zhang, Li, & Qiao,

2016). Although face detection and alignment are increasingly useful in many face

applications for instance facial expression analysis and face recognition, there are a

number of challenges that real world applications of such tasks face. These challenges

are attributed to large visual variations such as extreme lightings, large pose variations,

and occlusions (Zhang et al., 2016). The study sought to build on the cascade face

detector by Viola and Jones in which the researchers utilized AdaBoost and Haar-Like

features for training cascaded classifiers – this approach is reported to achieve good

1 According to Viola and Jones (2001) the “AdaBoost learning algorithm was used to boost the

classification of performance of a simple (sometimes called weak) learning algorithm” (p.I-513).

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

performance with real-time efficiency. The study nevertheless had to overcome the

obstacle in which Viola and Jones’s detector degraded significantly when it came to

real-world applications with larger visual variations of human faces. The study as

such, proposed new convolutional neural networks-based (CNNs) framework for joint

face detection as well as alignment with a carefully designed lightweight CNN

architecture ideal for performance in real time. Although, initial CNNS achieved

remarkable results in relation to various computer vision functions, for instance face

recognition and image classification, due to the complex structure and somewhat

blackbox nature of CNNs, the operator was ruled out as increasingly expensive for real

life practical application. In the experiments approach, the researchers resize an image

to different scales with the aim of developing an image pyramid. The result is then

used as input for a three-stage cascaded framework. In the first stage, the researchers

exploited a fully convolutional network they referred to as a proposal network with the

aim of obtaining the sample facial windows as well as their bounding box regression

vectors. The samples or candidates were then calibrated on the basis of estimated

bounding box regression vectors. Lastly, the researchers employed a non-maximum

suppression (NMS) to fuse two highly overlapped candidates (Zhang et al., 2016). In

the second stage of the experiment, the study fed all the candidates to yet another CNN

they referred to as the refine-network (R-network). This further rejected several false

candidates, while also calibrating the images with bounding box regression, and

conducting NMS. The third and last stage was the same as the second one. However,

in the final phase of the experiment, the objective was to identify regions of the face in

a more supervise manner. Particularly, the network was controlled so as to output five

facial landmarks. The experimental results of the study demonstrated that the methods

that the researchers employed consistently outperformed existing state of the art

approaches with regard to various challenging benchmarks such as, annotated facial

landmarks in the wild (AFLW) (for face alignment), face detection dataset and

benchmark (FDDB) and WIDER FACE for face detection. Particularly, the three

major contributions of these studies with regard to improvement of performance

include online hard sample strategy, carefully designed cascaded CNNs architecture,

and joint face-alignment learning (Ahonen et al., 2006).

obstacle in which Viola and Jones’s detector degraded significantly when it came to

real-world applications with larger visual variations of human faces. The study as

such, proposed new convolutional neural networks-based (CNNs) framework for joint

face detection as well as alignment with a carefully designed lightweight CNN

architecture ideal for performance in real time. Although, initial CNNS achieved

remarkable results in relation to various computer vision functions, for instance face

recognition and image classification, due to the complex structure and somewhat

blackbox nature of CNNs, the operator was ruled out as increasingly expensive for real

life practical application. In the experiments approach, the researchers resize an image

to different scales with the aim of developing an image pyramid. The result is then

used as input for a three-stage cascaded framework. In the first stage, the researchers

exploited a fully convolutional network they referred to as a proposal network with the

aim of obtaining the sample facial windows as well as their bounding box regression

vectors. The samples or candidates were then calibrated on the basis of estimated

bounding box regression vectors. Lastly, the researchers employed a non-maximum

suppression (NMS) to fuse two highly overlapped candidates (Zhang et al., 2016). In

the second stage of the experiment, the study fed all the candidates to yet another CNN

they referred to as the refine-network (R-network). This further rejected several false

candidates, while also calibrating the images with bounding box regression, and

conducting NMS. The third and last stage was the same as the second one. However,

in the final phase of the experiment, the objective was to identify regions of the face in

a more supervise manner. Particularly, the network was controlled so as to output five

facial landmarks. The experimental results of the study demonstrated that the methods

that the researchers employed consistently outperformed existing state of the art

approaches with regard to various challenging benchmarks such as, annotated facial

landmarks in the wild (AFLW) (for face alignment), face detection dataset and

benchmark (FDDB) and WIDER FACE for face detection. Particularly, the three

major contributions of these studies with regard to improvement of performance

include online hard sample strategy, carefully designed cascaded CNNs architecture,

and joint face-alignment learning (Ahonen et al., 2006).

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

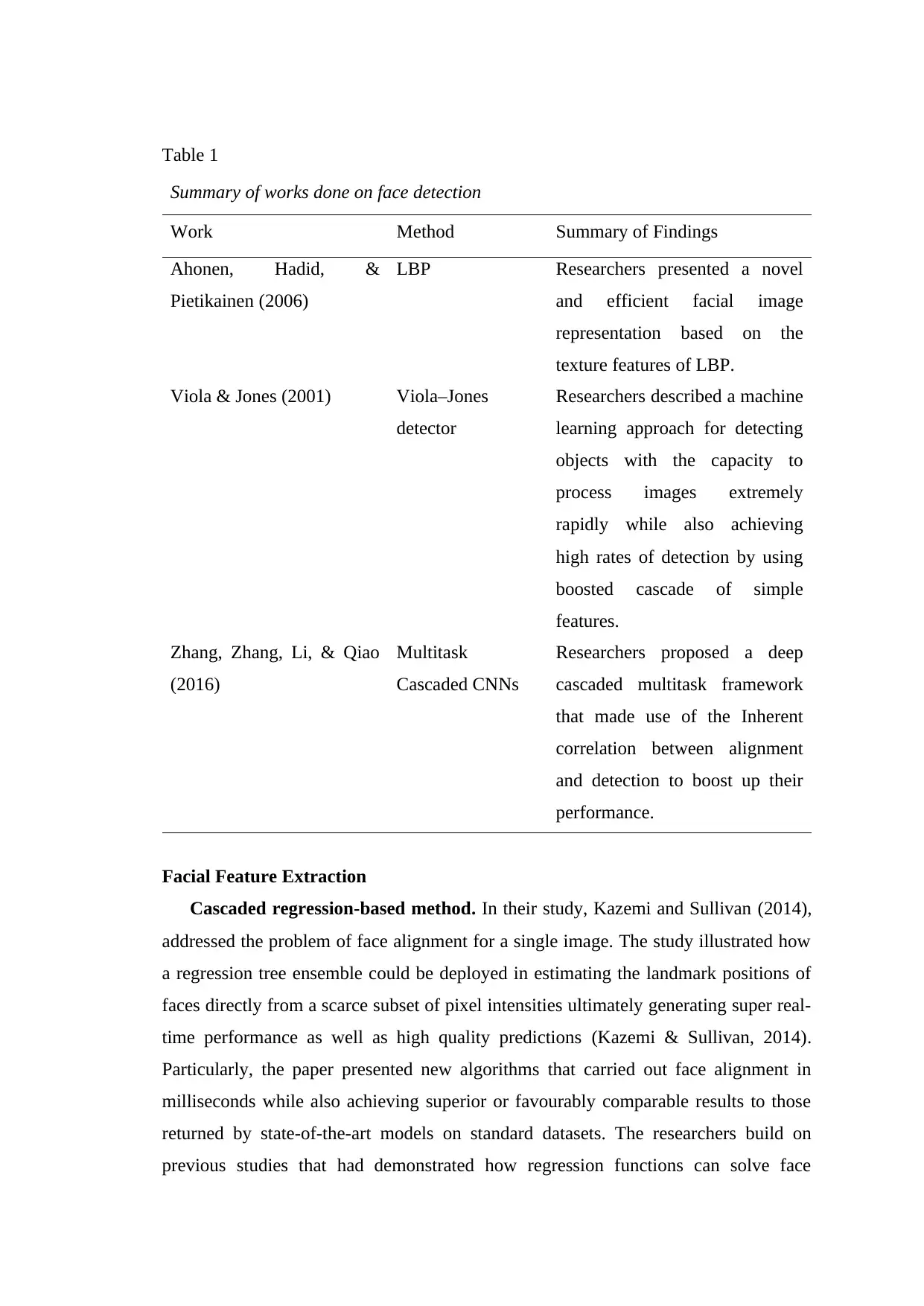

Table 1

Summary of works done on face detection

Work Method Summary of Findings

Ahonen, Hadid, &

Pietikainen (2006)

LBP Researchers presented a novel

and efficient facial image

representation based on the

texture features of LBP.

Viola & Jones (2001) Viola–Jones

detector

Researchers described a machine

learning approach for detecting

objects with the capacity to

process images extremely

rapidly while also achieving

high rates of detection by using

boosted cascade of simple

features.

Zhang, Zhang, Li, & Qiao

(2016)

Multitask

Cascaded CNNs

Researchers proposed a deep

cascaded multitask framework

that made use of the Inherent

correlation between alignment

and detection to boost up their

performance.

Facial Feature Extraction

Cascaded regression-based method. In their study, Kazemi and Sullivan (2014),

addressed the problem of face alignment for a single image. The study illustrated how

a regression tree ensemble could be deployed in estimating the landmark positions of

faces directly from a scarce subset of pixel intensities ultimately generating super real-

time performance as well as high quality predictions (Kazemi & Sullivan, 2014).

Particularly, the paper presented new algorithms that carried out face alignment in

milliseconds while also achieving superior or favourably comparable results to those

returned by state-of-the-art models on standard datasets. The researchers build on

previous studies that had demonstrated how regression functions can solve face

Summary of works done on face detection

Work Method Summary of Findings

Ahonen, Hadid, &

Pietikainen (2006)

LBP Researchers presented a novel

and efficient facial image

representation based on the

texture features of LBP.

Viola & Jones (2001) Viola–Jones

detector

Researchers described a machine

learning approach for detecting

objects with the capacity to

process images extremely

rapidly while also achieving

high rates of detection by using

boosted cascade of simple

features.

Zhang, Zhang, Li, & Qiao

(2016)

Multitask

Cascaded CNNs

Researchers proposed a deep

cascaded multitask framework

that made use of the Inherent

correlation between alignment

and detection to boost up their

performance.

Facial Feature Extraction

Cascaded regression-based method. In their study, Kazemi and Sullivan (2014),

addressed the problem of face alignment for a single image. The study illustrated how

a regression tree ensemble could be deployed in estimating the landmark positions of

faces directly from a scarce subset of pixel intensities ultimately generating super real-

time performance as well as high quality predictions (Kazemi & Sullivan, 2014).

Particularly, the paper presented new algorithms that carried out face alignment in

milliseconds while also achieving superior or favourably comparable results to those

returned by state-of-the-art models on standard datasets. The researchers build on

previous studies that had demonstrated how regression functions can solve face

alignment. However, in this study, each regression function that the authors performed

in the cascade efficiently estimated the shape from an initial estimate as well as the

intensity of a scarce set of pixels that they had indexed in relation to the said initial

estimate (Kazemi & Sullivan, 2014). This study adds to a large body of existing

investigations on facial alignment. Particularly, the researchers integrate two major

elements present in several essential algorithms into their learnt regression functions.

The first element revolves around how pixel intensities are indexed in relation to the

current estimate of the shape or image. The features that the study extracts for vector

representation of a face image can vary significantly as a result of both nuisance

factors such as adjustments in illumination as well as shape deformation (Kazemi &

Sullivan, 2014). The result is that it makes the process of accurately estimating shapes

using such features increasingly complex. Subsequently, there arises a dilemma in

which while for successful experimentation, there is a need for reliable features to

accurately predict the shape of the face, on the other hand, there is also a need for an

accurate estimate of the shape for extraction of reliable features (Kazemi & Sullivan,

2014). The study, just like previous studies, as such employed a cascade (an iterative

approach) as a cure for the challenge. As opposed to regressing the shape parameters

based on extracted features from the global coordinate system of the image, the

researchers opted to transform the image to a normalized coordinate system on the

basis of a current estimate of the shape – the features were then extracted to predict an

update vector for the shape parameters. The process was carried out several times until

the experiment registered image convergence. With the second element, the

experiment considered the manner in which the researchers could overcome the

difficulty of the problem of inference prediction. This problem arose because at the

time of testing, the researchers have to estimate the shape using an alignment

algorithm – the algorithm is ideally a high dimensional vector that best agrees with the

shape of the data and the experiment’s model of shape. Successful algorithms, as the

researchers explain, resolve this problem by assuming that the estimated shape has to

lie in a linear subspace. The experiment could, for instance discover this image by

locating the principle components of the training shapes. From this rationale, the

researchers considerably decreased the number of possible shapes considered in the

course of inference and hence helped to steer clear of local optima. At the same time,

the assumption was in line with previous studies in which a defined class of regressors

are guaranteed to generate predictions that kept in a linear subspace described by the

in the cascade efficiently estimated the shape from an initial estimate as well as the

intensity of a scarce set of pixels that they had indexed in relation to the said initial

estimate (Kazemi & Sullivan, 2014). This study adds to a large body of existing

investigations on facial alignment. Particularly, the researchers integrate two major

elements present in several essential algorithms into their learnt regression functions.

The first element revolves around how pixel intensities are indexed in relation to the

current estimate of the shape or image. The features that the study extracts for vector

representation of a face image can vary significantly as a result of both nuisance

factors such as adjustments in illumination as well as shape deformation (Kazemi &

Sullivan, 2014). The result is that it makes the process of accurately estimating shapes

using such features increasingly complex. Subsequently, there arises a dilemma in

which while for successful experimentation, there is a need for reliable features to

accurately predict the shape of the face, on the other hand, there is also a need for an

accurate estimate of the shape for extraction of reliable features (Kazemi & Sullivan,

2014). The study, just like previous studies, as such employed a cascade (an iterative

approach) as a cure for the challenge. As opposed to regressing the shape parameters

based on extracted features from the global coordinate system of the image, the

researchers opted to transform the image to a normalized coordinate system on the

basis of a current estimate of the shape – the features were then extracted to predict an

update vector for the shape parameters. The process was carried out several times until

the experiment registered image convergence. With the second element, the

experiment considered the manner in which the researchers could overcome the

difficulty of the problem of inference prediction. This problem arose because at the

time of testing, the researchers have to estimate the shape using an alignment

algorithm – the algorithm is ideally a high dimensional vector that best agrees with the

shape of the data and the experiment’s model of shape. Successful algorithms, as the

researchers explain, resolve this problem by assuming that the estimated shape has to

lie in a linear subspace. The experiment could, for instance discover this image by

locating the principle components of the training shapes. From this rationale, the

researchers considerably decreased the number of possible shapes considered in the

course of inference and hence helped to steer clear of local optima. At the same time,

the assumption was in line with previous studies in which a defined class of regressors

are guaranteed to generate predictions that kept in a linear subspace described by the

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

1 out of 47

Your All-in-One AI-Powered Toolkit for Academic Success.

+13062052269

info@desklib.com

Available 24*7 on WhatsApp / Email

![[object Object]](/_next/static/media/star-bottom.7253800d.svg)

Unlock your academic potential

Copyright © 2020–2025 A2Z Services. All Rights Reserved. Developed and managed by ZUCOL.