Assessment and Evaluation in Education: Learning Tasks Analysis

Added on 2021/11/16

Homework Assignment

AI Summary

This assignment delves into the critical aspects of assessment and evaluation within the educational context. It begins by defining key terms like 'test,' 'testing,' 'assessment,' 'measurement,' and 'evaluation,' clarifying their distinct roles and purposes. The paper then explores the purposes of measurement and evaluation, including student placement, classification, certification, and improving instruction. It differentiates between placement and summative evaluation types. Furthermore, the assignment examines the functions of tests in education, such as encouraging learning, gauging student knowledge, and identifying individual challenges. It also discusses measurement scales and various levels of educational objectives, differentiating between aims and objectives. Specific objectives at the instructional level are discussed, along with the importance of feedback and the affective and psychomotor domains. The affective domain, encompassing emotional responses, is explored through its levels and characteristics. The psychomotor domain, focusing on motor skills, is also examined, including its six levels. The assignment offers a comprehensive understanding of the various facets of assessment and evaluation, providing a valuable resource for students.

Assessment and Evaluation in Education

REFLECTION PAPERS:

The Learning Tasks

Worksheets No. 1

Explain the terms test and testing.

A test can be used as a measuring instrument or as a teaching tool. It is a type of measuring instrument widely used to obtain data about a person. After a week of classes or lessons, for example, the teacher will usually give you a test. That test is the tool the teacher uses to collect the data on which you will be evaluated. It is an educationally significant instrument, such as an inventory, a questionnaire, an opinionnaire, or a scale, that an individual completes so that the changes or gains made can be evaluated.

Testing, on the other hand, is the process of administering a test. In other words, testing is the process of having you take a test in order to obtain a quantitative representation of your cognitive or non-cognitive traits. In short, the instrument is the test, and the process of administering it is testing.

Define the term assessment.

Assessment is a method of gathering data on student achievement during the educational process. It is intended to help the teacher determine which topics or skills students are having difficulty with, so that action can be taken to improve learning while the course is still in session.

Assessment is a systematic basis for drawing inferences about students' learning and development... it is a way of identifying, selecting, preparing, collecting, assessing, analyzing, and applying information to enhance students' learning and development.

It is a general term for the systematic determination of outcomes or characteristics using some kind of assessment device. It is a standard procedure for establishing the degree to which a trait or attribute is present in a person or object.

Clarify the terms measurement and evaluation

Measurement is a broad term for the process of using a measuring instrument to determine outcomes or characteristics systematically. It is a systematic way of obtaining the quantified degree to which a trait or attribute is present in a person or object. In other words, it is the systematic assignment of numerical values or figures to a trait or attribute of a person or an object. In schools, such instruments can be used to obtain numerical values for learning ability, aptitude, achievement, and so on. A paper-and-pencil test is one such instrument. Measurement therefore means that the values of the attribute are translated into numbers.

Evaluation is the process of using the outcomes of assessment in the classroom. Teachers use this information to examine the relationship between what was taught in the lesson and what was learned. They examine the information gathered to see what pupils already know and understand, and how far they have progressed.

List the purposes of measurement and evaluation

The main purposes of measurement and evaluation are:

Placement: putting students in the right learning sequence, and classifying or streaming them by ability or subject.

Selection: choosing students for various courses (general, professional, technical, commercial, and so on).

Certification: verifying that a student has met a certain standard of performance.

Stimulating learning: motivating the student or teacher, providing feedback, suggesting suitable practice, and so on.

Improving instruction: helping to evaluate teaching arrangements.

Research.

Guidance and counseling.

Modifying the curriculum.

Selecting pupils for employment.

Modifying teaching methods.

Promoting pupils.

Informing parents about their children's progress.

Awarding scholarships and merit awards, and admitting and retaining students in educational institutions.

Explain the types of Evaluation

Placement evaluation is used to place pupils in the most appropriate group or class. Formative evaluation is designed to help both the student and the teacher identify areas where the student has failed to learn, so that the failure can be corrected. Diagnostic evaluation usually follows up on formative evaluation, after you as a teacher have used formative evaluation to discover some of your students' weaknesses. Summative evaluation takes place at the end of a course of teaching to determine how well the objectives were met.

Explain the functions of tests in education

In education, tests serve a variety of functions, some of which are listed below.

Motivating students to study. As teachers, it is our responsibility to encourage our pupils to keep studying, which is why tests are given regularly. Students put a great deal of time and effort into preparing for their weekly, end-of-term, or end-of-year promotion examinations. Without these tests, some students would be reluctant to make time for private study, while others would pay less attention in class, however lively and interesting the lesson.

Determining how much students have learned. A test can show how well students have understood the material covered. If you teach a topic and give a test at the end, and many of your pupils score well, that shows they understood the topic. If they score poorly, however, the effort has been wasted and you will need to do more teaching. The test results help you decide whether to move on to the next topic or repeat the current one.

Diagnosing the specific difficulties of each student. Tests should be designed and given to pupils in order to identify their real difficulties. This is done to determine what kind of remedial action should be taken. The diagnostic use of tests is the identification of weaknesses and strengths on the part
of students. It contributes to the worthwhile task of giving remedial help to pupils, individually or in groups.

Identifying students' special abilities. Each of us has a unique ability or talent. Aptitude tests and achievement tests can be used to assess how far that talent has been developed.

Explain the measurement scales

The measurement scale determines which statistical analyses are appropriate and what type of information the numbers provide. Each of the four scales (nominal, ordinal, interval, and ratio) conveys information in a distinct way. Measurement is the process of assigning numbers to persons and objects in a meaningful way, and understanding the scales of measurement is crucial to interpreting the numbers assigned.
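As a rough illustration of how the scale limits what the numbers can tell us, the sketch below pairs each of the four scales (nominal, ordinal, interval, ratio) with one summary that is meaningful at that level. The class data and variable names are invented for the example and do not come from the worksheet; only Python's standard `statistics` module is used.

```python
from statistics import mean, median, mode

# Hypothetical class data, invented purely for illustration.
house = ["red", "blue", "red", "green", "red"]  # nominal: labels only
class_rank = [1, 2, 3, 4, 5]                    # ordinal: order matters, gaps unknown
temp_c = [20.0, 22.0, 24.0, 26.0, 28.0]         # interval: equal gaps, no true zero
books_read = [2, 4, 5, 6, 8]                    # ratio: equal gaps and a true zero

# Each scale supports the summaries of the scales before it, plus one more.
most_common_house = mode(house)                    # nominal: counting, the mode
middle_rank = median(class_rank)                   # ordinal: median, percentiles
average_temp = mean(temp_c)                        # interval: mean, differences
books_ratio = max(books_read) / min(books_read)    # ratio: "how many times as much"

print(most_common_house, middle_rank, average_temp, books_ratio)
```

A mean of the house labels or a ratio of two Celsius temperatures would not be meaningful, which is why the scale has to be identified before the numbers are interpreted.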

Worksheets No. 2

List the different levels of educational objectives.

Educational objectives can be stated at several levels: the national level, the institutional level, and the instructional level.

National level: here we have only broad policy statements of what education should achieve for the nation.

Institutional level: the aims are logically derived from, and related to, both those at the national level and those at the instructional level. These institutional objectives are usually specified by an act.

Instructional level: the objectives at the national and institutional levels are all broad goals and aims. At the instructional level, educational objectives are stated in the form in which they are to operate in the classroom.

Give the differences between aims and objectives.

An aim is the overall goal that a person or organization is striving to accomplish; it is what you hope to achieve.

An objective is something that a person or entity seeks to accomplish by persistently working toward it.

Write specific objectives at the instructional level

At the instructional level, educational objectives are stated in the form in which they are to operate in the classroom. They are also known as behavioral objectives or learning outcomes, and they are defined in terms of the desired learning outcomes. These objectives state what the teaching is supposed to accomplish, what the learner is supposed to learn from the instruction, how the learner is expected to behave after receiving it, and what the learner must do to show that the expected learning has taken place.

Instructional objectives are therefore stated in behavioral terms, using action verbs that describe the behavior the learner will exhibit to demonstrate learning. They are a form of instructional goal, and they are learner-centered rather than teacher-centered.

Explain the importance of feedback in instructional situation.

The importance of instructional objectives in the teaching system cannot be overstated. The feedback obtained from assessing instructional objectives is used to determine how well the national educational objectives have been met for the type of institution in question, and how suitable those objectives are. Ultimately, the findings may lead to the revision of objectives at any or all of the levels. They may result in changes to the institution's curriculum. At the instructional level, they may lead to changes in teaching methods or in the provision of instructional materials.

Apart from feedback, instructional objectives matter because the teacher's plans for what to teach and how to teach it are based on the objectives to be achieved. The evaluation of students' learning outcomes tells the teacher whether or not the objectives have been met. In this way, instructional objectives give meaning and direction to the educational process.

Explain the meaning of affective domain

Affective objectives are those that emphasize a feeling tone, an emotion, or a degree of acceptance or rejection. They range from simple attention to selected stimuli to complex but internally consistent qualities of character and conscience.

Describe the levels of affective domain

This domain has five levels: receiving, responding, valuing, organization, and characterization. The levels are arranged hierarchically, starting with simple feelings or motivations and progressing to more complex ones.

Receiving. This is the lowest level of learning outcomes in the affective domain. It means attending: the learner's willingness to pay attention to a particular stimulus, or sensitivity to the existence of a specific problem, event, condition, or situation. It has three sub-levels.

Awareness: the conscious recognition that certain problems, conditions, situations, events, or phenomena exist.

Willingness: the next level, at which the learner acknowledges the object, event, or problem rather than ignoring or avoiding it.

Controlled or selected attention: the learner's choice to pay attention to a particular situation, problem, event, or phenomenon.

Responding. Here the learner responds to the event by participating.

Acquiescence in responding: simple obedience or compliance.

Willingness to respond: the learner's readiness to respond to a particular situation.

Satisfaction in response: the learner enjoys reacting to the situation when pleased with the outcome.

State objectives in the affective domain

Behavior in the affective domain is generally covert. Educational objectives here range from simple attention to complex and internally consistent qualities of character and conscience.

Mention the characteristic features of affective domain.

The affective domain is the emotional side of learning. It includes our internal responses to things, such as feelings, values, appreciation, enthusiasm, motivation, and attitudes.

Explain the psychomotor domain of instructional objectives.

The psychomotor domain is concerned with motor abilities or skills. Its instructional objectives therefore place greater emphasis on performance skills. Muscular activity belongs to the psychomotor domain, which deals with actions that require the use of the limbs (for example, the hands) or the entire body. Humans are born with some of these abilities, which should develop naturally.

Discuss the six levels of psychomotor domain

1.1 Reflex Movements. At the lowest level of the psychomotor domain are the reflex movements, which every normal human being should be able to make. These movements are all natural, except in abnormal cases, which may call for therapy programs.

1.2 Basic Fundamental Movements. Like reflex movements, these are basic movements that come naturally. Educators have little or nothing to do with them, except in abnormal cases where special educators step in to assist. There are three sub-categories at this level: locomotor, non-locomotor, and manipulative movements.

1.3 Perceptual Abilities. These are an individual's abilities to notice and discriminate between things using the senses. Such individuals recognize and compare objects by physically tasting, smelling, seeing, hearing, and touching them. You can work out which sensory organs are involved in each of these activities.

1.4 Physical Abilities. These abilities fall within the area of health and physical education.

1.5 Skilled Movements. This is a higher ability than the physical abilities. Once you have acquired the physical abilities, you apply them in various combinations to make or create things. You can combine manipulative skill, endurance, and flexibility in writing and drawing, or combine neuromuscular movements with flexibility in drawing. An individual can combine strength, endurance, flexibility, and manipulative movements in a single activity.

1.6 Non-discursive Communication. This is the highest level, and it demands a combination of all the lower levels to reach a high degree of expertise.

Give examples of the activities needed in each level.

Reflex Movements. Except in abnormal cases, educators are not concerned with these movements. Can you name a few examples? Blinking the eyes, dodging a blow or an object thrown at you, jumping up when in danger, swallowing, and a child urinating or passing stool may have come to mind.

Basic Fundamental Movements.

Locomotor movements: movements of the body from place to place, such as crawling, walking, leaping, and jumping.

Non-locomotor movements: body movements that do not involve moving from one place to another. These include muscular movements and wriggling of the trunk, head, or any other part of the body, as well as turning and twisting.

Manipulative movements: the use of the hands or limbs to move or control things.

Perceptual Abilities. Drawing, painting, cutting, pasting, and folding.

Physical Abilities. Some gardening activities, such as raking and pushing a lawn mower.

Skilled Movements. Skills such as drumming, typing, or playing the organ or keyboard require a combination of manipulative movements, some perceptual abilities, and flexibility.

1.1 Reflex Movements. At the lowest level of the psychomotor domain is the reflex movements

which every normal human being should be able to make. The movements are all natural, except

where the case is abnormal, in which case it may demand therapy programs.

1.2 Basic Fundamental Movements. Like the case of reflex movements, these are basic movements

which are natural. Educators have little or nothing to do with them, except in an abnormal case

where special educators step in to assist. There are three sub-categories at this stage. These are:

1.3 Individuals' ability to notice and discriminate things using their senses is referred to as perceptual

capacities. By physically tasting, smelling, seeing, hearing, and touching objects, such people

recognize and compare them. You can figure out which sensory organs are involved in these

actions.

1.4 Physical abilities. These abilities fall in the area of health and physical education

1.5 Skilled Movements. This is a higher ability than the physical abilities. Once you have acquired

the physical abilities, you now apply various types of these physical abilities in making or

creating things. You can combine skills in manipulative, endurance and flexibility in writing and

drawing. You can combine the neuromuscular movements together with flexibility to help you in

drawing. An individual can combine strength, endurance, flexibility and manipulative

movements in activities.

1.6 Non-discursive Communication. This is the highest level which demands a combination of all the

lower levels to reach a high degree of expertise.

Give examples of the activities needed in each level.

Reflex Movements. Apart from unusual circumstances, educators are unconcerned with these

movements. Let us now consider some examples. Could you name a few of them? The twinkling

of eyes, trying to avoid a blow or something thrown at you, jumping up when in danger,

ingesting items, urinating or stooling by a child, and so on may have come to mind.

Basic Fundamental Movements. These fall into three sub-categories:

Locomotor movements: movements of the body from place to place, such as crawling, walking, leaping, and jumping.

Non-locomotor movements: body movements that do not involve moving from one place to another. These include muscular movements; wriggling of the trunk, head, or any other part of the body; and turning or twisting the body.

Manipulative movements: the use of the hands or limbs to move or control things.

Perceptual Abilities. Drawing, painting, cutting, pasting, and folding.

Physical Abilities. Some gardening activities, such as raking and pushing a lawn mower.

Skilled Movements. Skills such as drumming, typing, or playing the organ or keyboard in music require a combination of manipulative movements, some perceptual abilities, and flexibility.

Worksheets No. 3

List the different types of items used in classroom tests.

Multiple-choice tests: These are among the most widely used tests in any classroom. They evaluate the student's grasp of both difficult and simple ideas by requiring the student to select the correct response to a given question.

Matching tests: These are another common form of classroom test. They assess the student's understanding of the relationship between paired items, such as events and dates or events and places.

True-false tests: These require the student to judge whether a single statement is correct. They offer a quick way to check a student's understanding.

Short-answer tests: These consist of questions whose responses can be written in two to three lines. They are intended to elicit a brief but complete answer on a specific issue or concept.

Problem tests: These are commonly set in subjects such as mathematics and science. They require a variety of calculations based on the student's conceptual framework and understanding. A common rule of thumb for timing is to give students ten minutes for a problem that the teacher can solve in two minutes.

Oral tests: Oral exams are an effective way to evaluate a student's conceptual framework and learning. Written tests may not give a complete picture of these, whereas listening to a student explain notions and ideas makes the student's understanding more evident.

Identify the sequence of planning a classroom test.

Planning a classroom test that is both practical and effective in sampling the instructional objectives and the subject matter covered requires careful thought. The following sequence can help you organize a classroom test:

Determine the purpose of the test;

Describe the instructional objectives and content to be measured;

Determine the relative emphasis to be given to each learning outcome;

Select the most appropriate item formats (essay or objective);

Develop the test blueprint to guide the test construction;

Prepare test items that are relevant to the learning outcomes specified in the test plan;

Decide on the pattern of scoring and the interpretation of results;

Decide on the length and duration of the test; and

Assemble the items into a test, prepare directions, and administer the test.

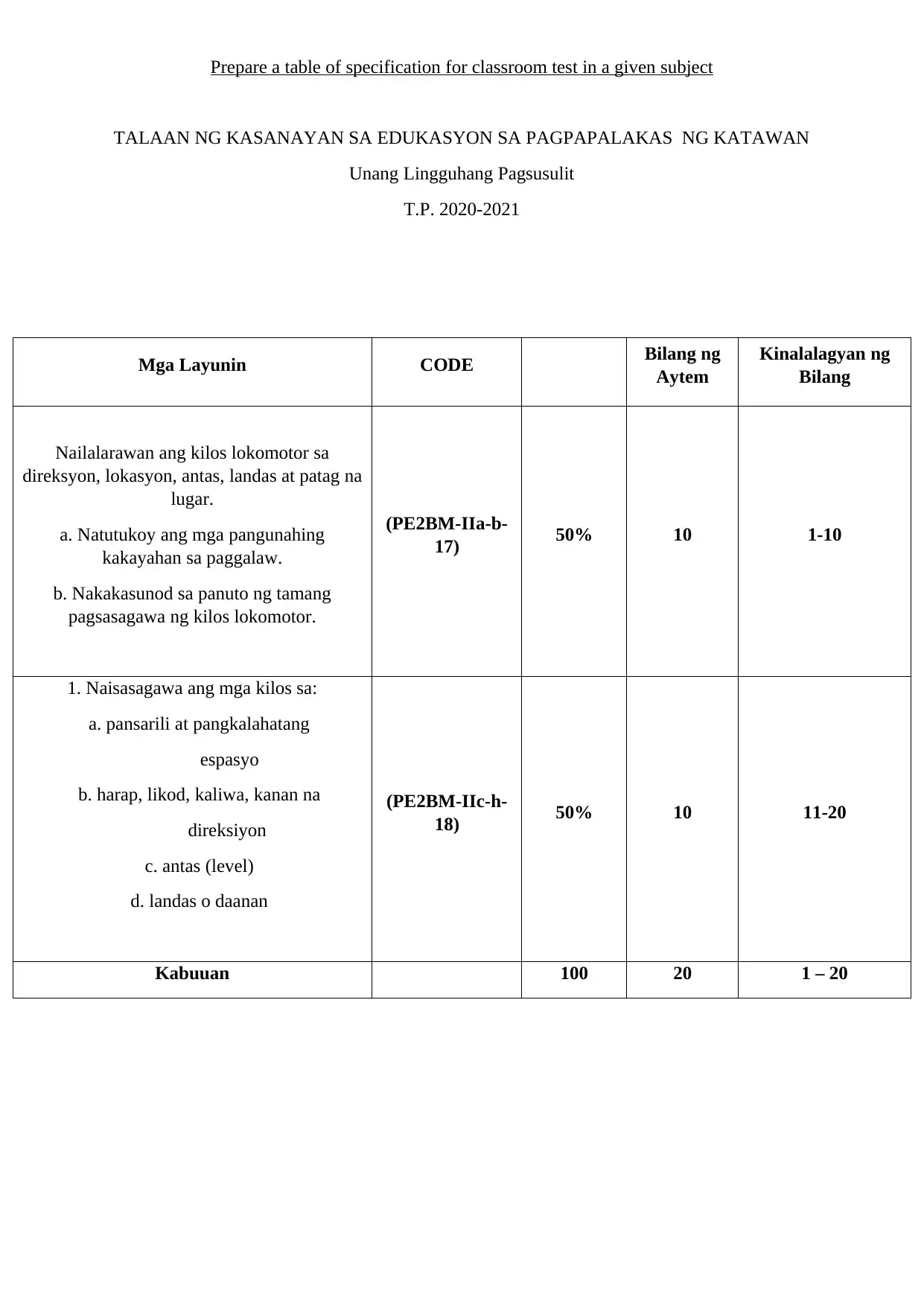

Prepare a table of specification for a classroom test in a given subject.

TABLE OF SPECIFICATION IN PHYSICAL EDUCATION

First Weekly Test

School Year 2020-2021

| Objectives | Code | Percentage | Number of Items | Item Placement |
| --- | --- | --- | --- | --- |
| Describes locomotor movements in terms of direction, location, level, pathway, and plane. a. Identifies the basic movement skills. b. Follows instructions for the correct performance of locomotor movements. | PE2BM-IIa-b-17 | 50% | 10 | 1-10 |
| Performs movements in: a. personal and general space; b. forward, backward, left, and right directions; c. levels; d. pathways. | PE2BM-IIc-h-18 | 50% | 10 | 11-20 |
| Total | | 100% | 20 | 1-20 |
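The arithmetic behind a table of specification like the one above can be sketched in a few lines of Python. This is only an illustrative sketch: the `Objective` class and the `allocate_items` function are hypothetical names introduced here, and the simple rounding used may not add up to the total for weights that do not divide evenly, so the result should be checked by hand in such cases.

```python
from dataclasses import dataclass


@dataclass
class Objective:
    code: str              # competency code, as listed in the table
    weight_percent: float  # share of the test given to this objective


def allocate_items(objectives, total_items):
    """Return (code, n_items, first_item, last_item) for each objective."""
    if round(sum(o.weight_percent for o in objectives), 6) != 100:
        raise ValueError("weights must sum to 100 percent")
    rows = []
    next_item = 1
    for obj in objectives:
        # Number of items is the objective's share of the total test length.
        n_items = round(total_items * obj.weight_percent / 100)
        rows.append((obj.code, n_items, next_item, next_item + n_items - 1))
        next_item += n_items
    return rows


rows = allocate_items(
    [Objective("PE2BM-IIa-b-17", 50), Objective("PE2BM-IIc-h-18", 50)],
    total_items=20,
)
for code, n_items, first, last in rows:
    print(f"{code}: {n_items} items, numbers {first}-{last}")
```

With the two 50% weights and 20 items from the table, this gives 10 items per objective, placed at numbers 1-10 and 11-20.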

Carry out item moderation processes

After the test items have been created, they are moderated by an expert or a panel of experts before being used in a school-wide or class-wide test, such as an end-of-term exam. The subject head should read through the items before sending them to external moderators (assessors).

The subject head should make informed suggestions and changes in the areas of need identified. Before selecting the most appropriate items to send to external assessors (subject specialists and assessment experts) for final feedback prior to use, the subject head may find it necessary to enlist others in the department who are knowledgeable in the discipline to carry out a similar review (subject experts' validation).

The marking scheme, as well as the marks assigned to the various areas of the content covered, should be submitted with the test items to the external assessors.

If this procedure is followed properly, the final items (those that pass the moderation phase) should have face, construct, and content validity as measuring instruments.

Assemble moderated test items for use.

After moderation, the essay and objective test items that passed are prepared and assembled for use.

Describe the different types of objective questions.

Objective test items are divided first into two groups, supply test items and selection test items. These two are then sub-divided as follows:

Supply test items: short-answer and completion items.

Selection test items: arrangement, true-false, matching, and multiple-choice items.

Describe the different types of essay questions.

Essay questions are of two types: extended (free) response and restricted response.

Extended or free response. The questions are phrased so that the scope of the student's response is not restricted. To answer, the student must arrange and organize his thoughts, express his ideas freely, exactly, and clearly in his own words and writing, and discuss the question in depth, bringing in the various facets of his understanding of the problem raised.

Restricted response. The questions in this category are structured so that the student's options are constrained and the scope of the response is specified and confined. The responses given are, to some extent, controlled. Some examples follow.

Give three advantages and two disadvantages of essay tests.

State four uses of tests in education.

Explain five factors which influence the choice of building site.

Mention five rules for preventing accidents in a workshop.

State five technical drawing instruments and their uses.

Describe four sources of energy.

Define helix and give two applications of a helix.

Compare the characteristics of objective and essay tests.

Short-answer essay, extended-response essay, problem-solving, and accomplishment test items are subjective, while multiple-choice, true-false, matching, and completion items are objective. Students must devote more effort to preparation for essay tests than for objective tests.

Explain the meaning of test administration.

Test administration is the process of presenting the task that examinees must complete so that the degree of learning achieved during the teaching-learning process can be determined. This process is as important as the test preparation.

This is because when a test is administered poorly, the quality and reliability of the results suffer dramatically. During the administration of the test, all examinees must be given a fair opportunity to demonstrate their mastery of the learning outcomes being assessed.

State the steps involved in test administration.

The following guidelines and steps are aimed at ensuring quality in test administration.

Collect the question papers from the custodian in time to start the test at the stipulated time.

Ensure compliance with the stipulated seating arrangements to prevent collusion between or among the students.

Ensure orderly and proper distribution of question papers to the students.

Do not talk unnecessarily before the test.

Do not waste students' time at the beginning of the test with unnecessary remarks, instructions, or threats that may cause test anxiety.

Remind the students before they start of the need to avoid malpractice, and make it clear that cheating will be penalized.

Stick to the instructions regarding the conduct of the test and avoid giving hints to students who ask about particular items.

Make corrections or clarifications to the students whenever necessary, but keep interruptions during the test to a minimum.

Identify the need for civility and credibility in test administration.

Credibility and civility are two characteristics of evaluation that are crucial in today's ever-changing educational communities. Credibility refers to the value that the eventual recipients and users of evaluation results place on those results, whether in terms of the grades obtained, the certificates issued, or the issuing institution. Civility, on the other hand, asks whether the people being assessed are in a position to give their best, without hindrances or encumbrances, in the qualities being evaluated, and whether the exercise is seen as integral to the learning process or external to it.

State the factors to be considered for credible and civil test administration.

Instructions: The instructions to the test administrator should explain how the test is to be administered and what arrangements are to be made so that the test is conducted properly and the scripts and other materials are handled properly. For effective compliance, the administrator's instructions should be unambiguous.

Test duration: The time allowed for completing the test is crucial in test administration and should be clearly stated for both test administrators and students.

Test environment: The test environment should be learner-friendly, with acceptable physical conditions such as sufficient work space, decent and comfortable writing desks, proper lighting, good ventilation, moderate temperature, amenities within reasonable distance, and the quiet required for optimal concentration.

Other necessary conditions: The questions and the question paper should be reader-friendly, printed in bold characters, and neat, decent, clear, and appealing.
