A Comprehensive Report on Big Data Analysis for Business Support

Added on 2023/06/07

Report

AI Summary

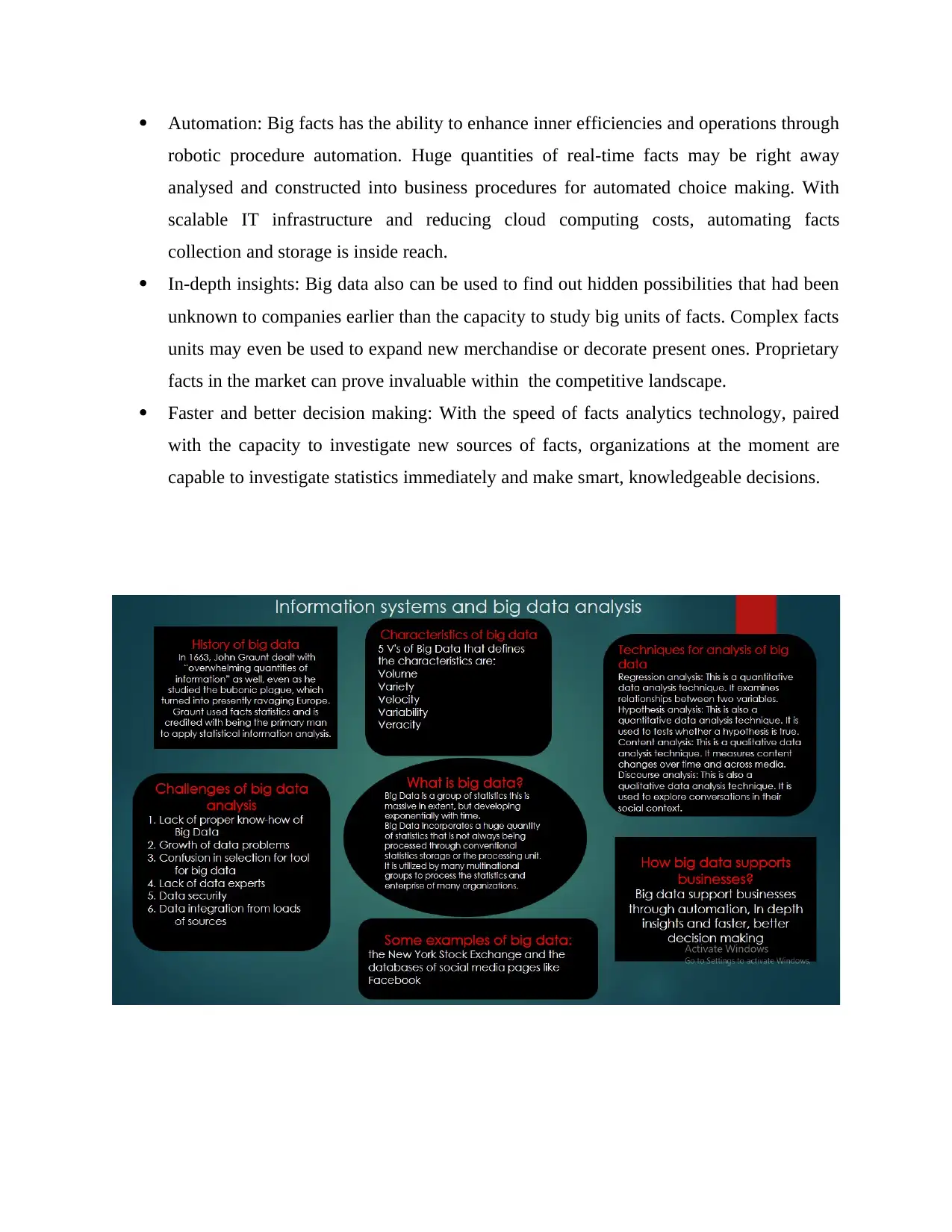

This report provides a comprehensive overview of big data analysis. It begins with a historical perspective, defining big data and outlining its core characteristics, including volume, variety, velocity, variability, and veracity. The report then delves into the challenges associated with big data analysis, such as the lack of expertise, data storage issues, and security concerns. It explores various techniques currently used to analyze big data, including regression analysis, hypothesis analysis, content analysis, and discourse analysis. Furthermore, the report examines how big data technology supports businesses through automation, in-depth insights, and improved decision-making processes. The document also includes a list of references.

Information systems and big data analysis

Table of Contents

History of Big data

What is big data?

Characteristics of big data

Challenges of big data analysis

Techniques that are currently available to analyse big data

How big data technology could support business, with examples

REFERENCES

History of Big data

Some data management pundits have described Big Data, with a bit of a snicker, as “huge, overwhelming, and uncontrollable amounts of information.” In 1663, John Graunt also dealt with “overwhelming amounts of information” while studying the bubonic plague, which was then ravaging Europe. Graunt used statistics and is credited with being the first person to apply statistical data analysis. In the early 1800s, the field of statistics expanded to include collecting and analysing data. The evolution of Big Data rests on a number of such preliminary steps. Looking back to 1663 is not necessary to explain the growth of data volumes today, but the point remains that “Big Data” is a relative term that depends on who is discussing it. Big Data to Amazon or Google is very different from Big Data to a medium-sized organization (Al-Abassi and Parizi, 2020).

What is big data?

Big Data is a collection of data that is huge in volume and still growing exponentially with time. Its size and complexity are so great that no conventional data management tool can store or process it efficiently. In other words, big data is still data, only at a massive scale, and much of it lies beyond the reach of conventional data storage and processing systems. Many multinational companies use it to process the data and business of many organizations. The data flow could exceed 150 exabytes per day before replication (Bi and Wang, 2021).

Some examples of big data are as follows. The New York Stock Exchange generates approximately one terabyte of new trade data per day. Statistics suggest that more than 500 terabytes of new data are ingested into Facebook's databases every day, mainly in the form of photo and video uploads, message exchanges, and comments.
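
To put the quoted figures on one scale, the short Python sketch below converts them to terabytes and compares them. It assumes decimal (SI) units, where one exabyte equals one million terabytes; the dictionary keys and the `ratio` helper are illustrative names, not part of any cited source.

```python
# Daily data volumes quoted in the text, expressed in terabytes (TB).
# Assumption: decimal (SI) units, so 1 exabyte (EB) = 1,000,000 TB.
TB_PER_EB = 1_000_000

daily_volume_tb = {
    "New York Stock Exchange": 1,
    "Facebook": 500,
    "150 EB/day data flow": 150 * TB_PER_EB,
}

def ratio(larger: str, smaller: str) -> float:
    """How many times larger one quoted daily volume is than another."""
    return daily_volume_tb[larger] / daily_volume_tb[smaller]

for name, tb in daily_volume_tb.items():
    print(f"{name}: {tb:,} TB per day")
print("150 EB/day vs Facebook:", ratio("150 EB/day data flow", "Facebook"))
```

On these figures, the 150 exabyte flow is several hundred thousand times the Facebook volume, which illustrates why "big" is a relative term.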

Characteristics of big data

The five V's that define the characteristics of Big Data are:

Volume – The name Big Data itself refers to a size that is enormous. The size of the data plays a crucial role in determining the value that can be extracted from it, and whether a particular data set can be considered Big Data at all depends on its volume. Hence, volume is one characteristic that must be considered when dealing with Big Data solutions.

Variety – The next aspect of Big Data is its variety (Hausladen and Schosser, 2020). Variety refers to the heterogeneous sources and nature of data, both structured and unstructured. In earlier days, spreadsheets and databases were the only sources of data considered by most applications. Nowadays, data in the form of emails, photos, videos, tracking devices, PDFs, audio, etc. is also considered in analysis applications. This variety of unstructured data poses certain problems for storing, mining and analysing data.

Velocity – Velocity refers to the speed at which data is generated. How fast the data is generated and processed to meet demand determines the real potential in the data. Big Data velocity deals with the speed at which data flows in from sources such as business processes, application logs, networks, social media sites, sensors and mobile devices. The flow of data is massive and continuous.

Variability – This refers to the inconsistency that the data can show at times, which hampers the process of handling and managing the data effectively.

Veracity – Veracity refers to how reliable the data is. There are many ways to filter or translate the data (Kovacova and Lewis, 2021). Veracity also covers the ability to handle and manage data efficiently. Big Data is likewise important in business development; Facebook posts with hashtags are one example.

Challenges of big data analysis

Big Data is characterised by high dimensionality and large sample size. These two features raise three distinctive challenges: (i) high dimensionality brings noise accumulation, spurious correlations and incidental homogeneity; (ii) high dimensionality combined with large sample size creates problems such as heavy computational cost and algorithmic instability; (iii) the massive samples in Big Data are typically aggregated from multiple sources at different points in time using different technologies. This creates problems of heterogeneity, experimental variation and statistical bias, and requires more adaptive and robust procedures (Li and Luo, 2022).
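
The spurious correlation point in challenge (i) can be illustrated with a small simulation. The Python sketch below is only an illustration under stated assumptions: the target and all features are independent random noise, the sample size of 20 and the feature counts are arbitrary choices, and `pearson` and `max_abs_correlation` are hypothetical helper names.

```python
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

def max_abs_correlation(target, features):
    """Largest absolute correlation between the target and any feature."""
    return max(abs(pearson(target, f)) for f in features)

rng = random.Random(0)  # fixed seed so the run is repeatable
n_samples = 20          # assumed sample size, chosen for illustration
n_features = 1000       # assumed number of unrelated features

# Target and features are independent noise: no real relationship exists.
target = [rng.gauss(0, 1) for _ in range(n_samples)]
features = [[rng.gauss(0, 1) for _ in range(n_samples)]
            for _ in range(n_features)]

few = max_abs_correlation(target, features[:5])
many = max_abs_correlation(target, features)
print(f"best of 5 features:    {few:.2f}")
print(f"best of 1000 features: {many:.2f}")
```

Because the five features are a subset of the thousand, the second figure can never be smaller than the first. With this many unrelated features, at least one usually looks strongly correlated with the target purely by chance.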

Lack of proper understanding of Big Data: Companies fail in big data projects because of insufficient understanding. Employees may not know what data is, how it is stored and processed, why it matters, or where it comes from. Data professionals may know what is going on, but others may not have a clear picture. For example, if employees do not understand the importance of data storage, they may not keep backups of sensitive data or use databases properly for storage. As a result, when this important data is required, it cannot be retrieved easily.

Data growth issues: One of the most pressing challenges of Big Data is storing these huge sets of data properly. The amount of data stored in the data centres and databases of companies is increasing rapidly, and as these data sets grow exponentially over time, they become extremely difficult to handle (Mangla and Zhang, 2020). Most of the data is unstructured and comes from documents, videos, audio, text files and other sources, which means it cannot be found in databases.

Confusion over Big Data tool selection: Companies often get confused when selecting the best tool for Big Data analysis and storage. Questions about which technology to use trouble companies, and sometimes they are unable to find the answers. They end up making poor decisions and selecting inappropriate technology. As a result, money, time, effort and work hours are wasted.

Lack of data professionals: To run these modern big data technologies and tools, companies need skilled data professionals. These include data scientists, data analysts and data engineers who are experienced in working with the tools and making sense of huge data sets (Verma and Kumar, 2018).

Data security: Securing these huge sets of data is one of the daunting challenges of Big Data. Companies are often so busy understanding, storing and analysing their data sets that they push data security to later stages. This is not a smart move, as unprotected data repositories can become breeding grounds for malicious hackers.

Data integration from a variety of sources: Data in an organization comes from a variety of sources, such as social media pages, ERP applications, customer logs, financial reports, e-mails, presentations and reports created by employees. Combining all this data to prepare reports is a challenging task, and one that firms often neglect. Yet data integration is crucial for analysis, reporting and business intelligence, so it has to be done well.
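
As a rough illustration of the integration challenge, the Python sketch below combines records from two sources into one summary per customer. Everything in it is assumed for the example: the sample records, the field names and the `integrate` helper are hypothetical, and a real integration would also have to deal with mismatched identifiers and formats.

```python
from collections import defaultdict

# Hypothetical extracts from two systems; field names are illustrative only.
erp_orders = [
    {"customer_id": "C1", "order_total": 120.0},
    {"customer_id": "C2", "order_total": 75.5},
    {"customer_id": "C1", "order_total": 30.0},
]
support_emails = [
    {"customer_id": "C1", "subject": "Late delivery"},
    {"customer_id": "C3", "subject": "Invoice query"},
]

def integrate(orders, emails):
    """Combine both sources into one record per customer, keyed by customer_id."""
    combined = defaultdict(lambda: {"order_total": 0.0, "email_count": 0})
    for order in orders:
        combined[order["customer_id"]]["order_total"] += order["order_total"]
    for email in emails:
        combined[email["customer_id"]]["email_count"] += 1
    return dict(combined)

report = integrate(erp_orders, support_emails)
for customer_id in sorted(report):
    print(customer_id, report[customer_id])
```

Customers who appear in only one source still get a record, with the missing side left at zero, so gaps between systems stay visible in the combined report.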

Techniques that are currently available to analyse big data

Four commonly used data analysis techniques are as follows:

Regression analysis: This is a quantitative data analysis technique. It examines the relationship between a dependent variable and one or more independent variables.

Hypothesis analysis: This is also a quantitative data analysis technique. It is used to test whether the data support a hypothesis.

Content analysis: This is a qualitative data analysis technique. It measures changes in content over time and across media.

Discourse analysis: This is also a qualitative data analysis technique. It is used to explore conversations in their social context.
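
The two quantitative techniques can be sketched in a few lines of Python. This is a minimal illustration, not a full statistical workflow: it assumes simple linear regression with one predictor, uses a permutation test as one possible form of hypothesis test, and works on made-up numbers. The names `fit_line` and `permutation_test` are hypothetical.

```python
import random

def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def permutation_test(group_a, group_b, n_permutations=2000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Returns an approximate p-value: the share of random relabellings whose
    mean difference is at least as large as the observed one.
    """
    rng = random.Random(seed)
    observed = abs(sum(group_a) / len(group_a) - sum(group_b) / len(group_b))
    pooled = list(group_a) + list(group_b)
    size_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        a, b = pooled[:size_a], pooled[size_a:]
        diff = abs(sum(a) / len(a) - sum(b) / len(b))
        if diff >= observed:
            extreme += 1
    # Add one to numerator and denominator so the estimate is never exactly 0.
    return (extreme + 1) / (n_permutations + 1)

# Made-up illustration data: advertising spend vs. sales.
spend = [1, 2, 3, 4, 5]
sales = [3, 5, 7, 9, 11]  # constructed so that sales = 2 * spend + 1
slope, intercept = fit_line(spend, sales)
print(f"fitted line: sales = {slope:.1f} * spend + {intercept:.1f}")

# Made-up illustration data: a metric measured in two groups of stores.
p_value = permutation_test([10, 11, 12, 13, 14], [1, 2, 3, 4, 5])
print(f"p-value: {p_value:.4f}")
```

A small p-value suggests the difference between the groups is unlikely to be due to chance alone; it does not prove the hypothesis true.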

How big data technology could support business, with examples

Understanding and using big data is a crucial competitive advantage for leading companies. The more data companies can gather from existing infrastructure and customers, the greater their opportunity to discover hidden insights that their competitors do not have access to. Big data can reveal an abundance of new growth opportunities, from internal insights to front-facing customer interactions. Three major business opportunities are automation, in-depth insights, and data-driven decision making.

Automation: Big data has the potential to improve internal efficiencies and operations through robotic process automation. Huge amounts of real-time data can be analysed immediately and built into business processes for automated decision making. With scalable IT infrastructure and falling cloud computing costs, automating data collection and storage is within reach.

In-depth insights: Big data can also be used to discover hidden opportunities that were unknown to companies before they had the ability to analyse large data sets. Complex data sets can even be used to develop new products or enhance existing ones. Proprietary data can prove invaluable in a competitive market.

Faster and better decision making: With the speed of data analytics technology, paired with the ability to analyse new sources of data, businesses are now able to analyse information immediately and make smart, informed decisions.
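
One way to picture automated decision making on incoming data is a simple rule applied to a stream of readings. The Python sketch below is a hypothetical example only: the stock readings, the window of three readings, the threshold of 20 and the `reorder_decisions` function are all assumptions made for illustration.

```python
from collections import deque

def reorder_decisions(stock_levels, window=3, threshold=20.0):
    """Yield (reading, rolling_mean, reorder) for each incoming stock reading.

    A reorder is flagged automatically when the mean of the last `window`
    readings falls below `threshold`.
    """
    recent = deque(maxlen=window)  # keeps only the most recent readings
    for level in stock_levels:
        recent.append(level)
        rolling_mean = sum(recent) / len(recent)
        yield level, rolling_mean, rolling_mean < threshold

# Made-up stream of stock readings arriving one at a time.
readings = [40, 30, 26, 18, 12, 35]
decisions = list(reorder_decisions(readings))
for level, mean, reorder in decisions:
    print(f"stock={level:>3}  rolling mean={mean:5.1f}  reorder={reorder}")
```

Using a rolling mean rather than a single reading is a design choice that keeps one unusual value from triggering an order on its own.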

REFERENCES

Books and Journals

Al-Abassi, A. and Parizi, R.M., 2020. Industrial big data analytics: challenges and opportunities. Handbook of big data privacy. pp.37-61.

Bi, Z. and Wang, L., 2021. Internet of things (IoT) and big data analytics (BDA) for digital manufacturing (DM). International Journal of Production Research. pp.1-18.

Hausladen, I. and Schosser, M., 2020. Towards a maturity model for big data analytics in airline network planning. Journal of Air Transport Management, 82. p.101721.

Kovacova, M. and Lewis, E., 2021. Smart factory performance, cognitive automation, and industrial big data analytics in sustainable manufacturing internet of things. Journal of Self-Governance & Management Economics, 9(3).

Li, L. and Luo, X.R., 2022. Evaluating the impact of big data analytics usage on the decision-making quality of organizations. Technological Forecasting and Social Change, 175. p.121355.

Mangla and Zhang, Z.J., 2020. Mediating effect of big data analytics on project performance of small and medium enterprises. Journal of Enterprise Information Management.

Verma, S. and Kumar, S., 2018. An extension of the technology acceptance model in the big data analytics system implementation environment. Information Processing & Management, 54(5). pp.791-806.
