Project Proposal: Big Data Modeling for Data Stream Applications

Added on 2023/05/29


AI Summary

This project proposal addresses the challenges of modeling big data in data stream applications, where traditional methods are insufficient due to the vast and unpredictable nature of data. The introduction highlights the importance of effective data modeling for organizing and storing massive datasets, ensuring improved performance, reduced costs, and enhanced output quality. The research background emphasizes the growth of data from internet usage and smart devices, and the limitations of traditional data modeling approaches. The research identifies the problems of unpredictable growth rates and the need for elastic data interfaces. The proposed solution introduces a feature-based modeling approach, detailing the main features of big data applications, including infrastructure, data storage, services, preprocessing, and processing/interface groups. The conclusion reinforces the effectiveness of the feature model-based approach. References to relevant literature are included, and the proposal aims to enhance understanding of data modeling techniques for students and researchers.

BIG DATA MODELLING, PROJECT PROPOSAL 1

BIG DATA MODELLING, PROJECT PROPOSAL

Student Name

Institution Affiliation

Facilitator

Course

Date

Table of Contents

1.0 Introduction

2.0 Research background

3.0 Research problems

4.0 Research objectives

5.0 Proposed modelling technique

6.0 Conclusion

7.0 References

1.0 Introduction

Just as books in a library must be classified and arranged systematically on shelves so that they can be found easily, the massive data handled in big data applications needs an effective way to be kept in order. This process of organizing and storing big data is called “data modelling”. In its most basic definition, a data model is a method for organizing and storing data, much as the Dewey Decimal System organizes books in a library. Effective data modelling in big data applications helps arrange data according to its services and usage (De Martino et al., 1994, p.650). This improves performance, because a well-designed data model speeds up querying and reduces input/output load. It also lowers the cost of handling big data, because an effective data model significantly reduces data redundancy. Lastly, effective data modelling improves the quality of big data application outputs through consistent statistics and fewer computing errors (LaValle et al., 2011, p.21).

2.0 Research background

The rapid growth of internet usage, smart devices and other forms of information technology in the current digital era has led to an equally rapid growth in data. This trend brings its own challenges, because traditional approaches to data classification, organization and storage were designed to accommodate small amounts of data (Lee, 2005, p.950). For instance, the huge amounts of data handled by data stream applications cannot be stored and fully managed in a system, which raises the need for better ways to handle data in such applications. Additionally, unlike traditional data, the arrival rate of a data stream is unpredictable, and traditional data modelling techniques have not been able to handle this. Consequently, most traditional data modelling processes do not suit big data applications, both because of the large amounts of data these applications handle and because they deal with real-time data (LaValle et al., 2011, p.20).
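The constraint described above, a stream that cannot be stored in full, can be illustrated with a bounded sliding window. The following is a minimal sketch and not part of the proposal itself: the function name `windowed_mean`, the window size and the sample readings are all assumptions chosen for illustration.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, Tuple


def windowed_mean(readings: Iterable[float], window_size: int) -> Iterator[Tuple[int, float]]:
    """Yield (items_seen, mean of the most recent `window_size` readings)."""
    # A deque with maxlen keeps memory bounded: the oldest item is dropped
    # automatically once the window is full.
    window: Deque[float] = deque(maxlen=window_size)
    running_sum = 0.0
    for seen, value in enumerate(readings, start=1):
        if len(window) == window.maxlen:
            running_sum -= window[0]  # this value is about to be evicted
        window.append(value)
        running_sum += value
        yield seen, running_sum / len(window)


# Hypothetical sensor readings; a real stream would be unbounded.
stream = [10.0, 12.0, 11.0, 50.0, 13.0]
results = list(windowed_mean(stream, window_size=3))
```

Only the last `window_size` readings are held in memory at any time, so the cost does not grow with the length of the stream or with bursts in its arrival rate.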

3.0 Research problems

Traditional data records were stable and grew predictably, which made them relatively easy to model using techniques such as UML. In contrast, the exponential growth of big data has proved unpredictable because of its myriad sources and forms. Because changes in data sources and forms are not easily predictable, modelling big data will require elastic and open data interfaces (Viceconti, Hunter and Hose, 2015, p.1210). Traditional data modelling techniques were not designed for data that lacks this stability and predictability, so most of the available data modelling approaches are unsuitable for big data modelling.

Also, in the traditional data realm, relational database schemas covered most of the relationships and data links that business organizations required for information support. This has changed with big data technology, which sometimes uses no database at all or uses NoSQL databases that do not require predefined database schemas (Lee, 2005, p.948). Big data models will therefore require system components that cover corporate governance and security, business information requirements, physical data storage, integration and open interfaces for all data types, and the ability to handle different types of data. Traditional data modelling techniques have proved incapable of providing this functionality.
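To make the contrast with fixed relational schemas concrete, the sketch below stores records from different sources without declaring their fields in advance and discovers the structure from the data. It is only an illustration under stated assumptions: the source names, field names and the helper `field_inventory` are hypothetical and do not come from the proposal.

```python
from typing import Any, Dict, Iterable, List, Set

# Hypothetical records from different sources; field names are illustrative only.
records: List[Dict[str, Any]] = [
    {"source": "sensor", "id": 1, "temperature_c": 21.5},
    {"source": "web_log", "id": 2, "url": "/home", "status": 200},
    {"source": "sensor", "id": 3, "temperature_c": 19.0, "humidity": 0.4},
]


def field_inventory(items: Iterable[Dict[str, Any]]) -> Dict[str, Set[str]]:
    """Return, for each source, the set of field names actually observed."""
    inventory: Dict[str, Set[str]] = {}
    for item in items:
        inventory.setdefault(item["source"], set()).update(item.keys())
    return inventory


inventory = field_inventory(records)
```

A new field such as `humidity` appears in the inventory as soon as a record carries it, with no schema change, which is the kind of elasticity the section calls for.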

4.0 Research objectives

The aim of this research is to develop an effective data modelling technique for big data applications. Traditional data modelling approaches have proved ineffective because big data applications handle huge amounts of data that cannot be stored entirely in a system, and the rate at which data arrives in those applications is unpredictable.

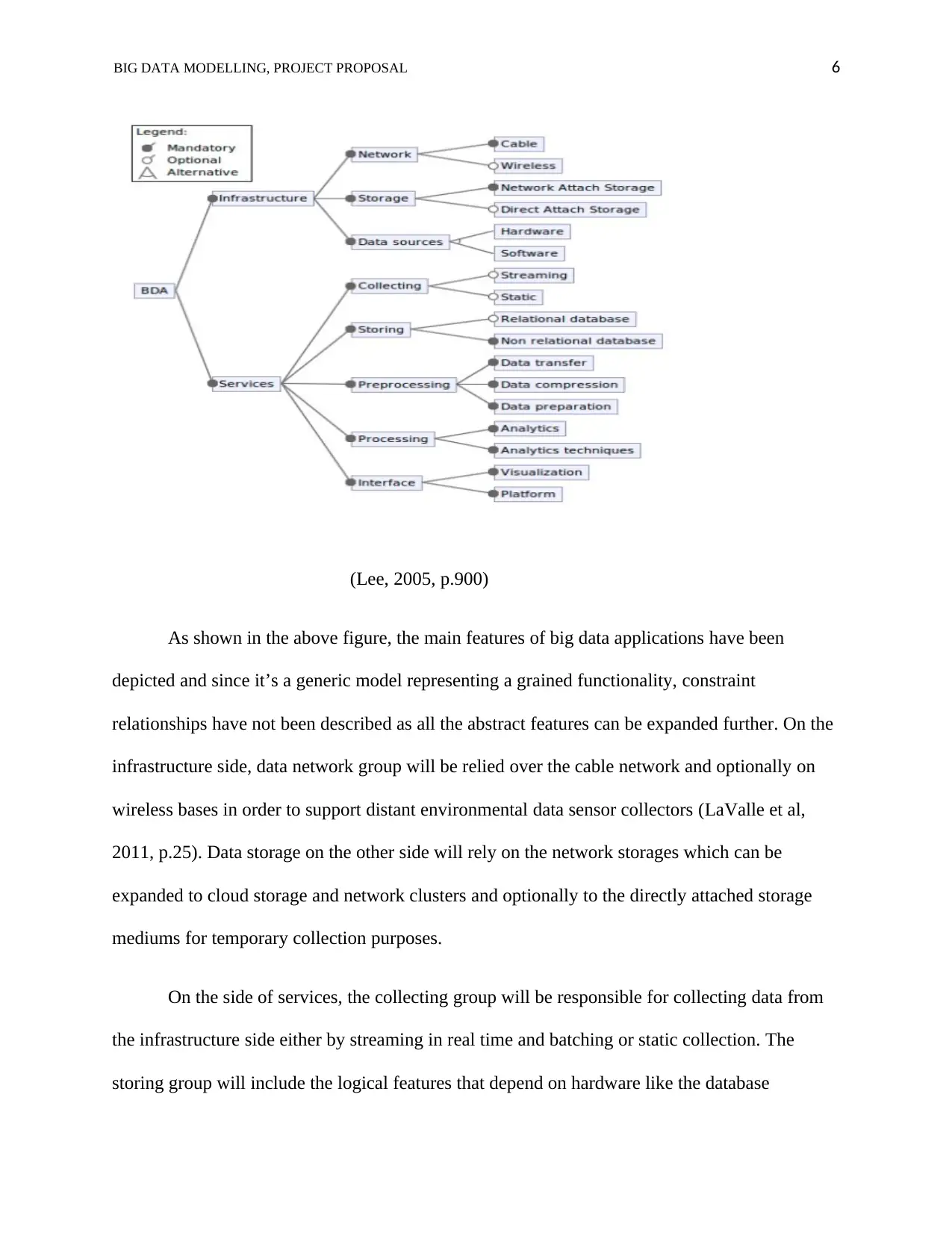

5.0 Proposed modelling technique

Because big data applications handle huge amounts of data that cannot be stored entirely in a system, and because the rate at which data arrives in those applications is unpredictable, this research proposes a modelling approach for big data applications that differs from the database-centred approach used in the traditional data realm (De Martino et al., 1994, p.646). Specifically, the research proposes a feature-based modelling approach, as shown in the accompanying diagram.

(Lee, 2005, p.900)

The figure above depicts the main features of big data applications. Because it is a generic model representing coarse-grained functionality, constraint relationships are not described, and all the abstract features can be expanded further. On the infrastructure side, the data network group relies on a cable network and, optionally, on wireless links to support remote environmental data sensor collectors (LaValle et al., 2011, p.25). Data storage, in turn, relies on network storage, which can be expanded to cloud storage and network clusters, and optionally on directly attached storage media for temporary collection purposes.

On the services side, the collecting group is responsible for collecting data from the infrastructure side by real-time streaming, batching or static collection. The storing group includes the logical features that depend on hardware, such as database structures and storing techniques. The preprocessing group covers data transfer, extraction, compression, distribution, transformation and validation operations. It is the group equipped with the most critical features, because it validates the input data before it is analyzed (LaValle et al., 2011, p.22).
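The validation role of the preprocessing group can be sketched as a filter that separates usable records from rejected ones before analysis. This is a minimal illustration, assuming a hypothetical record layout with `sensor_id` and `value` fields; the proposal does not specify concrete validation rules.

```python
from typing import Any, Dict, Iterable, List, Tuple

# Assumed for illustration: every record must carry these two fields.
REQUIRED_FIELDS = ("sensor_id", "value")


def validate(record: Dict[str, Any]) -> bool:
    """A record is valid if the required fields exist and the value is numeric."""
    if any(name not in record for name in REQUIRED_FIELDS):
        return False
    value = record["value"]
    # bool is a subclass of int in Python, so exclude it explicitly.
    return isinstance(value, (int, float)) and not isinstance(value, bool)


def preprocess(records: Iterable[Dict[str, Any]]) -> Tuple[List[Dict[str, Any]], List[Dict[str, Any]]]:
    """Split incoming records into (accepted, rejected) lists."""
    accepted: List[Dict[str, Any]] = []
    rejected: List[Dict[str, Any]] = []
    for record in records:
        (accepted if validate(record) else rejected).append(record)
    return accepted, rejected


sample = [
    {"sensor_id": "a1", "value": 20.5},
    {"sensor_id": "a2"},                  # missing value
    {"sensor_id": "a3", "value": "n/a"},  # non-numeric value
]
accepted, rejected = preprocess(sample)
```

Keeping rejected records in their own list, rather than discarding them, lets later stages inspect why input from a source failed validation.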

Lastly, the processing and interface groups are responsible for data analysis: the processing group derives knowledge from the raw data, and the interface group then visualizes it. At these two stages, knowledge derived through data analytics is presented to end users in an understandable manner, and end users have a chance to interact with the platform.
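The feature groups described in this section can be written down as a small feature tree. The sketch below is one possible reading of the text, not the proposal's actual diagram: the `Feature` class is hypothetical, the wireless network and directly attached storage are marked optional as the text states, and treating every other feature as mandatory is an assumption.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Feature:
    name: str
    optional: bool = False
    children: List["Feature"] = field(default_factory=list)

    def mandatory_names(self) -> List[str]:
        """Names of features reachable through mandatory features only."""
        if self.optional:
            return []
        names = [self.name]
        for child in self.children:
            names.extend(child.mandatory_names())
        return names


# Grouping follows the section's description; mandatory/optional flags other
# than the two optional features named in the text are assumptions.
model = Feature("big data application", children=[
    Feature("infrastructure", children=[
        Feature("data network", children=[
            Feature("cable network"),
            Feature("wireless network", optional=True),
        ]),
        Feature("data storage", children=[
            Feature("network storage"),
            Feature("direct-attached storage", optional=True),
        ]),
    ]),
    Feature("services", children=[
        Feature("collecting"),
        Feature("storing"),
        Feature("preprocessing"),
        Feature("processing"),
        Feature("interface"),
    ]),
])

core = model.mandatory_names()
```

Because each abstract feature is just a node with children, any of them can be expanded further, for example by adding cloud storage and network clusters under network storage, without changing the rest of the tree.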

6.0 Conclusion

In this paper, a feature model-based approach has been proposed as an effective approach to modelling big data applications. This follows the observation that traditional approaches to data modelling will not be effective: unlike traditional data records, whose growth rate was predictable, big data applications handle huge amounts of data that cannot be stored entirely in a system, and the rate of data growth is unpredictable.

7.0 References

De Martino, T., Falcidieno, B., Giannini, F., Hassinger, S. and Ovtcharova, J., 1994. Feature-based modelling by integrating design and recognition approaches. Computer-Aided Design, 26(8), pp.646-653.

LaValle, S., Lesser, E., Shockley, R., Hopkins, M.S. and Kruschwitz, N., 2011. Big data, analytics and the path from insights to value. MIT Sloan Management Review, 52(2), p.21.

Lee, S.H., 2005. A CAD–CAE integration approach using feature-based multi-resolution and multi-abstraction modelling techniques. Computer-Aided Design, 37(9), pp.941-955.

Viceconti, M., Hunter, P.J. and Hose, R.D., 2015. Big data, big knowledge: big data for personalized healthcare. IEEE J. Biomedical and Health Informatics, 19(4), pp.1209-1215.
