AlexNet-DNN for Breast Cancer Detection and Classification Project

Added on 2020/11/23

AI Summary

This project proposes a robust and efficient Computer-Aided Diagnosis (CAD) system for breast cancer detection and classification using a Deep Neural Network (DNN) based on the AlexNet architecture. The system incorporates pre-processing, an enhanced adaptive learning-based Gaussian Mixture Model (GMM), connected component analysis for region of interest localization, and AlexNet-DNN for feature extraction. Feature selection is performed using Principal Component Analysis (PCA) and Linear Discriminant Analysis (LDA). The model is evaluated on the BreakHis dataset, demonstrating superior performance compared to other state-of-the-art techniques, achieving high accuracy rates. The study highlights the effectiveness of AlexNet-DNN features combined with LDA for dimensional reduction and SVM-based classification, making it suitable for real-world applications. The research emphasizes the importance of early cancer identification and diagnosis using advanced computing techniques.

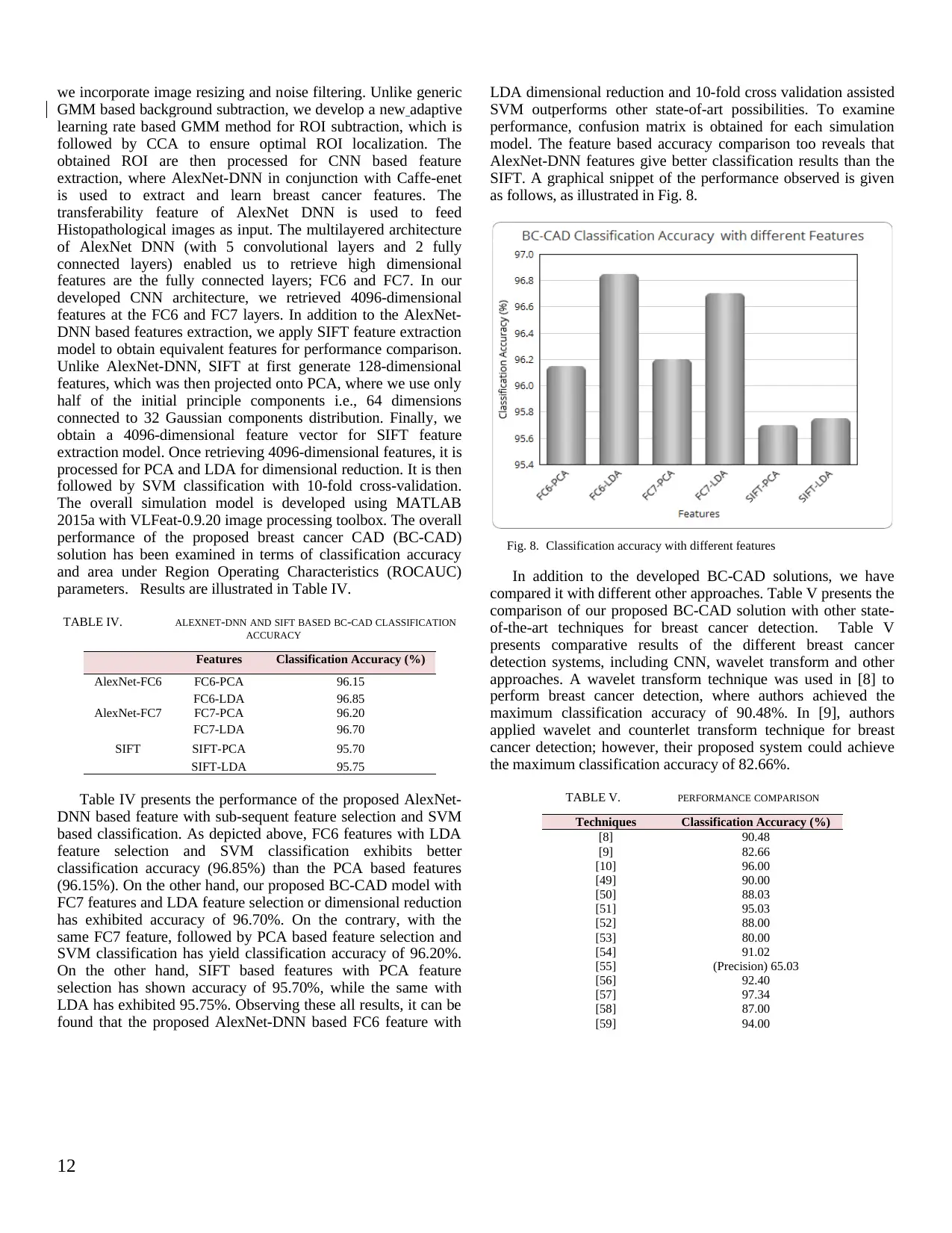

A Robust Deep Neural Network Based Breast Cancer Detection and Classification

Abstract— The exponential rise in breast cancer cases across the globe has pushed academia and industry to develop more efficient and robust Breast Cancer Computer-Aided Diagnosis (BC-CAD) systems for breast cancer detection. A number of techniques have been developed with a focus on case-centric (i.e., data-type-centric) segmentation, feature extraction and classification of breast cancer histopathological images. However, rising complexity and accuracy requirements often demand a more robust solution. Recently, the Convolutional Neural Network (CNN) has emerged as one of the most efficient techniques for medical data analysis and various image classification problems. In this paper, a highly robust and efficient BC-CAD solution is proposed. The proposed system incorporates pre-processing, an enhanced adaptive learning based Gaussian Mixture Model (GMM), connected component analysis based region of interest localization, and AlexNet-DNN based feature extraction. Principal Component Analysis (PCA) and Linear Discriminant Analysis (LDA) are used for feature selection by way of dimensional reduction. One advantage of the proposed method is that none of the current approaches employs these dimensional reduction algorithms with SVM to perform breast cancer detection and classification. The overall results indicate that AlexNet-DNN features taken at the fully connected layer FC6, in conjunction with LDA dimensional reduction and SVM-based classification, outperform other state-of-the-art techniques for breast cancer detection. The proposed BC-CAD system has been evaluated on the real-world BreaKHis dataset, which has significant diversity and complexity, and we therefore suggest it for use in other real-world applications. The proposed method achieved 96.15 for AlexNet-FC6 and 96.20 for AlexNet-FC7 in terms of the evaluation measures.

Keywords—Breast Cancer Detection; Computer Aided Diagnosis; Convolutional Neural Network; AlexNet-DNN; Linear Discriminant Analysis.

INTRODUCTION

Cancer is a type of disease involving the development and growth of abnormal cells that invade or spread to other parts of the human body. In the last few decades, cancer has emerged as one of the deadliest diseases, claiming a large number of lives. There are different types of cancer, such as breast cancer, blood cancer, and melanoma or skin cancer. In the last few years, breast cancer has emerged as the second most common cancer type in women after skin cancer. The literature reveals that almost 50% of breast cancer cases are found in developing countries, while approximately 58% of deaths take place in less developed countries. It is estimated that over 508,000 women worldwide died in 2011 due to breast cancer [1]. Various case studies [1] have revealed that earlier cancer identification and diagnosis can play a vital role in successful treatment. A similar survey [2] also indicated a rapid rise in deaths caused by breast cancer in 2012, a figure that continues to increase at a fast pace [2]. The study revealed that the number of deaths can reach up to 27 million by 2030 [3]. The exponential rise in breast cancer has pushed academia and industry to develop vision-based approaches for earlier breast cancer detection and diagnosis. The rise of advanced computing techniques, vision-based computation and decision processes has opened a new dimension with immense potential to meet major demands. Undeniably, such development offers hope for better human life, health and decision making. In fact, the development of science and technology generally aims to make human life secure, healthy and productive. On the other hand, the rapidly rising population across the world requires optimal healthcare solutions. The traditional manual diagnosis processes are either too limited or too unproductive to meet these demands and enable an optimal healthcare solution. This has motivated academia and industry to pursue more efficient computer-aided diagnosis (CAD) solutions for earlier diagnosis. The development of histopathological analysis and molecular biology has made vision-based breast cancer CAD systems more efficient [4]. Unlike traditional approaches based on manual microscopic analysis, a vision-based CAD system can be of paramount significance for breast cancer detection and classification [5].

In the last few years, efforts have been made to exploit X-ray mammography and ultrasound techniques to assist breast cancer detection and diagnosis; however, mammography usually exhibits poor sensitivity to very small cancers. In addition, it is of limited use for augmented or dense breasts [60]. Similarly, poor specificity and the complexity of the whole-breast imaging process make the ultrasound method limited and ineffective. As an enhanced solution, authors proposed Magnetic Resonance Imaging (MRI) systems, which possess better soft-tissue resolution and enhanced sensitivity for breast cancer detection [6]. MRI avoids any ionizing radiation and hence it can be suitable for patients with implants. The key usefulness of the MRI method is its ability to quantify tumor volume and to detect multi-focal and multi-centric breast cancer [7]. Vision-based CAD systems have been


found to deliver faster and more accurate breast cancer detection to assist early diagnosis. Looking at CAD systems in depth, their efficacy primarily depends on the efficiency of the Region of Interest (ROI) segmentation, feature extraction, feature mapping and classification techniques. A number of techniques, such as the wavelet transform [8][9][10] and the Gabor transform [11], have been applied for feature extraction, while classification is done using a standard support vector machine (SVM) or other artificial intelligence approaches [9][12][13]. However, most traditional approaches are limited in their feature extraction and classification efficiency for lesions of different shape, size, and orientation density. Low information availability also limits the efficacy of traditional feature extraction and cancer classification models. In addition, there is a clear need for efficient approaches to deal with high-dimensional features, feature selection and classification or prediction. Considering large unannotated data (i.e., breast cancer image data), the use of a CNN as a feature extraction tool can yield a better breast cancer CAD (BC-CAD) solution.

In this paper, a highly robust and efficient CNN-based Deep Neural Network (DNN) model is developed for breast cancer detection and classification. To enable an optimal solution, the proposed model encompasses various enhancements, including pre-processing, ROI identification, Caffe-enet-AlexNet DNN based feature extraction, PCA- and LDA-based feature selection, and SVM-based classification. To examine the effectiveness of the proposed DNN model, a parallel BC-CAD model using Scale-Invariant Feature Transform (SIFT) feature extraction has also been developed. In addition, to perform breast cancer prediction, a two-class classification model is developed; the overall results affirm that AlexNet-DNN with LDA feature selection outperforms other state-of-the-art techniques for BC-CAD.
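The role that LDA plays ahead of the two-class classifier can be illustrated with a minimal sketch. It computes the two-class Fisher discriminant direction on toy 2-D feature vectors and projects each sample onto a single axis. Everything here is an assumption made for illustration: the feature values are invented stand-ins for the much higher-dimensional AlexNet FC6/FC7 features, the function names are hypothetical, and a simple midpoint threshold is used in place of the SVM described in the paper.

```python
def mean_vector(points):
    """Column-wise mean of a list of equal-length feature tuples."""
    n = len(points)
    return [sum(col) / n for col in zip(*points)]


def fisher_lda_direction(class0, class1):
    """Return (w, m0, m1) where w = Sw^-1 (m1 - m0) for 2-D features."""
    m0 = mean_vector(class0)
    m1 = mean_vector(class1)
    # Within-class scatter matrix Sw (2x2), accumulated over both classes.
    s = [[0.0, 0.0], [0.0, 0.0]]
    for points, mean in ((class0, m0), (class1, m1)):
        for p in points:
            d = [p[0] - mean[0], p[1] - mean[1]]
            for i in range(2):
                for j in range(2):
                    s[i][j] += d[i] * d[j]
    det = s[0][0] * s[1][1] - s[0][1] * s[1][0]
    if det == 0:
        raise ValueError("within-class scatter matrix is singular")
    # Closed-form inverse of the 2x2 scatter matrix.
    inv = [[s[1][1] / det, -s[0][1] / det],
           [-s[1][0] / det, s[0][0] / det]]
    diff = [m1[0] - m0[0], m1[1] - m0[1]]
    w = [inv[0][0] * diff[0] + inv[0][1] * diff[1],
         inv[1][0] * diff[0] + inv[1][1] * diff[1]]
    return w, m0, m1


def project(w, x):
    """Reduce a 2-D feature vector to one discriminant score."""
    return w[0] * x[0] + w[1] * x[1]


def make_classifier(class0, class1):
    """Build a two-class decision rule on the LDA axis (threshold, not SVM)."""
    w, m0, m1 = fisher_lda_direction(class0, class1)
    threshold = (project(w, m0) + project(w, m1)) / 2
    return lambda x: 1 if project(w, x) > threshold else 0


# Toy features (assumed values): label 0 = benign, label 1 = malignant.
benign = [(1.0, 2.0), (2.0, 1.0), (2.0, 3.0), (3.0, 2.0)]
malignant = [(6.0, 5.0), (7.0, 6.0), (6.0, 7.0), (5.0, 6.0)]

classify = make_classifier(benign, malignant)
direction, _, _ = fisher_lda_direction(benign, malignant)
```

For two classes, LDA yields a single discriminant axis, so each sample reduces to one score regardless of the original feature dimension. That compact score is what makes a downstream classifier cheap to train.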

The remainder of the manuscript is organized as follows: Section two presents the related work, followed by the discussion of the proposed AlexNet-DNN based breast cancer detection and classification in Section three. Section four presents the results and discussion, and the overall conclusion is presented in Section five.

RELATED WORK

This section briefly reviews some of the key literature pertaining to breast cancer detection and related technologies.

Rehman et al. [12] derived a diverse-feature-based breast cancer detection model, in which they applied phylogenetic trees, various statistical features and local binary patterns to generate distinctive and discriminative features for classification. The authors applied a radial basis function based SVM for two-class classification: cancerous and non-cancerous. Pramanik et al. [8] assessed breast thermograms to perform cancer detection, where they first applied an Initial Feature point Image (IFI) to perform feature extraction for each segmented breast thermogram. They applied the discrete wavelet transform (DWT) for feature extraction, followed by feature classification using a feed-forward Artificial Neural Network (ANN). A similar effort was made in [9], where the authors applied wavelet and contourlet transforms for feature extraction and an SVM classifier for mammogram classification. Yousefi et al. [11] used a multi-channel Gabor wavelet filter for feature extraction over mammograms, followed by two-class classification using a Bayesian classifier. Caorsi et al. [14] proposed an ANN-based radar data processing model for breast cancer detection, emphasizing not only the detection of the cancerous tumour but also its geometric features, such as depth and width. A similar effort was made in [15], where the authors proposed a data-driven matched field model to assist microwave breast cancer detection. George et al. [16] developed a robust and intelligent breast cancer detection and classification model using cytological images. The authors applied different classifiers, including a back-propagation based multilayer perceptron, a Probabilistic Neural Network (PNN), SVM and learning vector quantization, to perform breast cancer classification. Considering mitosis as a cancer signifier, Tashk et al. [17] developed an automatic BC-CAD model, in which they first applied 2-D anisotropic diffusion for noise removal and image morphological processing. To obtain pixel-wise features from the ROI (i.e., the mitosis region), the authors derived a statistical feature extraction model. In addition, to alleviate the misclassification of mitosis and non-mitosis objects, they developed an object-wise Completed Local Binary Pattern (CLBP) that enabled efficient textural feature extraction. The predominant novelty of the CLBP model is its robustness against positional variation, color changes, etc. The authors applied SVM to perform feature classification. A space processing model was developed in [18] that enabled a time-reversal imaging approach to breast cancer detection. To perform malignant lesion detection, the authors applied an FDTD breast model comprising dense breast tissues with differing fibro-glandular tissue composition. To achieve better accuracy, Naeemabadi et al. [13] applied LS and SMO techniques rather than a traditional SVM for classification. Li et al. [19] developed an MFSVM-FKNN ensemble classifier for breast cancer detection, applying the concept of a mixture membership function. A fuzzy classifier based breast cancer detection and classification model was proposed in [20]. A bio-inspired immunological scheme was proposed for mammographic mass classification that distinguishes malignant tumors from benign ones. Gaike et al. [21] first focused on retrieving higher-order (particularly third-order) features, which were later clustered to perform malignant breast cancer detection. Ali et al. [22] developed an automatic segmentation model using the concept of the data acquisition protocol parameter over the image statistics of the DMR-IR database. A similar effort was made in [23], where the authors developed a two-phase BC-CAD system. They first applied Neutrosophic Sets (NS) and an optimized Fast Fuzzy C-Mean (F-FCM) algorithm for ROI segmentation, followed by two-class classification, i.e., normal and abnormal tissue.

Ismahan et al. [24] used a mathematical morphology concept to detect masses in digitized mammograms. Texture analysis based ROI detection for thermal imaging based BC-CAD was performed in [25]. Considering the significance of feature extraction for mammogram classification, Sanae et al. [10] exploited comprehensive statistical block-based features extracted from the sub-bands of the discrete wavelet transform. Once the extracted features were mapped, SVM was applied to perform classification. Unlike traditional approaches, Maken et al. [26] examined the efficacy of the Multiple Instance Learning (MIL) algorithm for mammogram classification, developing MIL using tile-based spatio-temporal features. Rosa et al. [27] and Hatipoglu et al. [28] sought to exploit MIL in conjunction with SVM for mammogram classification. Undeniably, MIL approaches have performed better, particularly for unannotated data; however, the likelihood of better performance with deep features cannot be ignored.

To enable more efficient feature extraction and accurate performance, DNNs have gained significant attention. CNNs have gained widespread recognition for extracting features from complex image data, such as mammograms, MRI medical data or even histopathological datasets. CNN techniques, which are biologically inspired by the organization of the human visual cortex, are robust owing to their ability to learn features invariant to translation, rotation and shifting. Spanhol et al. [29] applied a CNN approach to classify breast cancer histopathological images. Their model trained the CNN on patches generated through varied approaches. The authors obtained the best classification accuracy of 89.6% for images with 40x magnification, using patches of 64x64 pixels. Hatipoglu et al. [28] used a CNN to classify cellular and non-cellular structures in breast cancer histopathological images. The authors applied a neural network to perform classification, achieving a best classification accuracy of 86.88%. Recently, Wang et al. [30] used the GoogLeNet CNN model to perform histopathological image classification, achieving a patch classification accuracy of 98.4%. To detect invasive ductal carcinoma tissue in histological images for BC-CAD, Cruz-Roa et al. [31] applied a CNN feature extraction approach. Ertosun et al. [32] developed a BC-CAD model using deep learning with three distinct CNN models to localize masses in mammography images. To enhance the accuracy of segmentation or ROI identification, the authors incorporated additional techniques such as cropping, translation, rotation, flipping and scaling. Arevalo et al. [33] applied CNN-based feature extraction followed by SVM-based classification for a BC-CAD solution. The authors reported a Receiver Operating Characteristic (ROC) figure of 86%. A similar work was performed in [34], where the authors achieved a classification accuracy of 96.7%. Russakovsky et al. [35] and Zuiderveld et al. [40] trained CNNs over ImageNet to perform breast cancer classification. Abdel-Zaher et al. [36] and Wang et al. [41] performed breast cancer classification by developing a classifier from the weights of a previously trained Deep Belief Network (DBN). They applied a Levenberg-Marquardt learning based ANN to perform classification. Across these studies, it has become clear that, in addition to ROI identification and feature extraction efficiency, selecting optimal features is equally significant for efficient performance. To achieve this, dimensional reduction and feature selection measures are of utmost significance. With these objectives, Olfati et al. [37] applied a Genetic Algorithm (GA) to enhance PCA by selecting optimal principal components (GA-SPCA). They applied PCA for dimension reduction, GA for feature selection [38] and SVM for classification, which helped achieve better performance.
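The accuracy figures quoted in this section, and the sensitivity and specificity concerns raised for mammography and ultrasound in the introduction, all derive from the same two-class confusion counts. The sketch below shows how the three measures relate. It is an illustration only: the label vectors are invented, the function name is hypothetical, and label 1 is assumed to mean malignant.

```python
def binary_metrics(y_true, y_pred):
    """Compute accuracy, sensitivity and specificity for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # malignant, caught
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # benign, cleared
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # benign, flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # malignant, missed
    total = len(pairs)
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        # Sensitivity: share of malignant cases that are detected.
        "sensitivity": tp / (tp + fn) if (tp + fn) else 0.0,
        # Specificity: share of benign cases that are correctly cleared.
        "specificity": tn / (tn + fp) if (tn + fp) else 0.0,
    }


# Invented example labels: 1 = malignant, 0 = benign.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 0, 1]
metrics = binary_metrics(y_true, y_pred)
```

Reporting sensitivity and specificity alongside accuracy matters for a diagnosis aid, because a missed malignant case and a false alarm carry very different costs.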

The developed system is highly effective because it draws on advanced technology, but it carries a risk: minor technical issues may create major problems. Moreover, it is suggested that Computerized Tomography (CT) and Positron Emission Tomography (PET) scans could be used to determine the actual state of breast cancer appropriately.

PROPOSED METHODOLOGY

This section primarily discusses the proposed research work and implementation model to achieve the intended BC-CAD solution. This solution provides accurate breast cancer detection to assist early diagnosis in a more efficient way. Our contribution is a highly robust and efficient CNN-based Deep Neural Network (DNN) model developed for breast cancer detection and classification. In the current research work, a novel deep learning based breast cancer detection and classification algorithm is developed. The predominant emphasis is on developing a novel and robust automated CAD system for Breast Cancer (BC) detection and classification. Our research method takes a multi-dimensional approach to increasing the performance of the proposed BC-CAD system, with enhancements made to the major functional processes, including pre-processing, ROI detection and segmentation, feature extraction, feature selection and classification. In addition, a standard breast cancer dataset named BreaKHis [39], which consists of histopathological image data for breast cancer, is considered for our study. To ensure better efficiency, enriching the input data or medical image quality is often a better solution. With this objective, the input histopathological images are processed for noise removal and resizing. Once suitable input images are obtained, they are further processed for ROI segmentation, where an enhanced GMM approach is applied. Unlike the generic GMM algorithm, we have derived an adaptive learning based Gaussian approach that enables swift and accurate ROI identification. This can play a vital role in accurate and significant feature retrieval for an optimal BC-CAD solution.
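The abstract pairs the GMM segmentation with connected component analysis for ROI localization. A minimal sketch of that second step is given here, under stated assumptions: the binary mask is hard-coded rather than produced by a GMM, 4-connectivity is assumed, the largest component is taken as the ROI, and the function name is hypothetical. It is not the authors' implementation.

```python
from collections import deque


def largest_component_bbox(mask):
    """Return (top, left, bottom, right) of the largest 4-connected
    foreground region in a binary mask, or None if there is none."""
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    best_size, best_bbox = 0, None
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill of one connected component.
            queue = deque([(r, c)])
            seen[r][c] = True
            size = 0
            top, left, bottom, right = r, c, r, c
            while queue:
                y, x = queue.popleft()
                size += 1
                top, bottom = min(top, y), max(bottom, y)
                left, right = min(left, x), max(right, x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if size > best_size:
                best_size, best_bbox = size, (top, left, bottom, right)
    return best_bbox


# Assumed foreground mask (1 = candidate tissue pixel, 0 = background).
mask = [
    [0, 1, 0, 0, 0],
    [0, 0, 0, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 0, 1, 0],
]
roi = largest_component_bbox(mask)
```

The returned bounding box can be used to crop the input image before feature extraction, so that isolated noise pixels such as the single pixel in the first row do not contribute features.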

Notably, the BreaKHis dataset [39] exhibits key features such as marked cellular atypia, mitosis, disruption of basement membranes, metastasis, etc. These features have been applied to perform two-class classification. Realizing the undeniable fact that inaccurate ROI localization and insignificant pixel conjuncture might lead to inaccurate

classification, we apply Connected Component Analysis (CCA) to the segmented region. CCA eliminates image components that appear to be connected with the target ROI but are insignificant for BC-CAD classification. The CCA-processed segmented ROI is then passed to feature extraction, followed by feature selection and classification, to achieve the anticipated BC-CAD solution.

Unlike existing feature extraction models such as the Gabor filter [11], the Wavelet Transform [8][9][10], MIL [26-28] or even classical CNNs [28-33][35], our research derives an enhanced CNN model, named AlexNet-DNN, to extract significant features from the Histopathological images. Since high-dimensional features often provide more significant information for precise classification or analysis, AlexNet-DNN is used to extract high-dimensional features with 4096 dimensions. The proposed AlexNet-DNN model comprises five convolutional layers followed by three Fully Connected (FC) layers (FC6, FC7 and FC8). The features extracted at the higher layers provide more significant information, which helps in achieving accurate BC-CAD performance.

Many classical approaches ignore the probability of over-fitting and its effect on accuracy. We therefore apply a supplementary model called Caffe-enet, which assists AlexNet-DNN in overcoming existing limitations and enables AlexNet-DNN to run on general-purpose computers without sophisticated Graphical Processing Units (GPUs). Using Caffe-enet in conjunction with AlexNet-DNN plays a vital role in assuring efficient feature extraction even with large-scale unannotated data, which makes the approach suitable for major breast cancer detection applications. Specifically, because much breast cancer data is unannotated, performing DNN learning and subsequent classification is a mammoth task. To alleviate such issues, the proposed model applies the multilayered AlexNet-DNN architecture, in which features can be retrieved at each layer for further mapping and classification. To achieve more efficient results, however, we consider the 4096-dimensional features extracted at the Fully Connected layers FC6 and FC7 of the AlexNet-DNN. The developed DNN model and its implementation for BC-CAD are discussed in detail in the next section.

In addition to the proposed AlexNet-DNN based BC-CAD solution, we have implemented a parallel feature extraction model based on the Scale Invariant Feature Transform (SIFT). The FC6 and FC7 features are 4096-dimensional and hence amount to a large volume of data to process. To enhance computational efficiency, we apply PCA and LDA to perform dimension reduction or feature selection. After dimensional reduction, the selected features are passed to a polynomial kernel based classifier that performs two-class classification: Malignant and Benign. To further strengthen the reliability of the proposed work, 10-fold cross validation is applied when estimating the classification accuracy of the BC-CAD solution.
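The 10-fold cross validation mentioned above can be illustrated with a minimal sketch. The paper does not state how the folds are drawn, so the stratified, round-robin assignment below, the function name `stratified_k_fold` and the fixed seed are all assumptions made for illustration; the class counts are the benign and malignant totals reported for BreaKHis.

```python
import random
from collections import defaultdict


def stratified_k_fold(labels, k=10, seed=0):
    """Yield (train_idx, test_idx) pairs that keep class proportions per fold.

    Stratification is an assumption; the paper only states "10-fold".
    """
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for pos, idx in enumerate(indices):
            folds[pos % k].append(idx)  # deal samples round-robin into folds

    for i in range(k):
        test_idx = sorted(folds[i])
        train_idx = sorted(j for f in folds[:i] + folds[i + 1:] for j in f)
        yield train_idx, test_idx


# Class counts as reported for the dataset: 2,480 benign and 5,429 malignant.
labels = ["benign"] * 2480 + ["malignant"] * 5429
splits = list(stratified_k_fold(labels, k=10))
```

Each of the ten splits holds out roughly one tenth of the images for testing; in a full pipeline the classifier would be trained on `train_idx` and scored on `test_idx`, and the ten scores averaged.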

The overall research methodology and implementation schematic are presented in the next section.

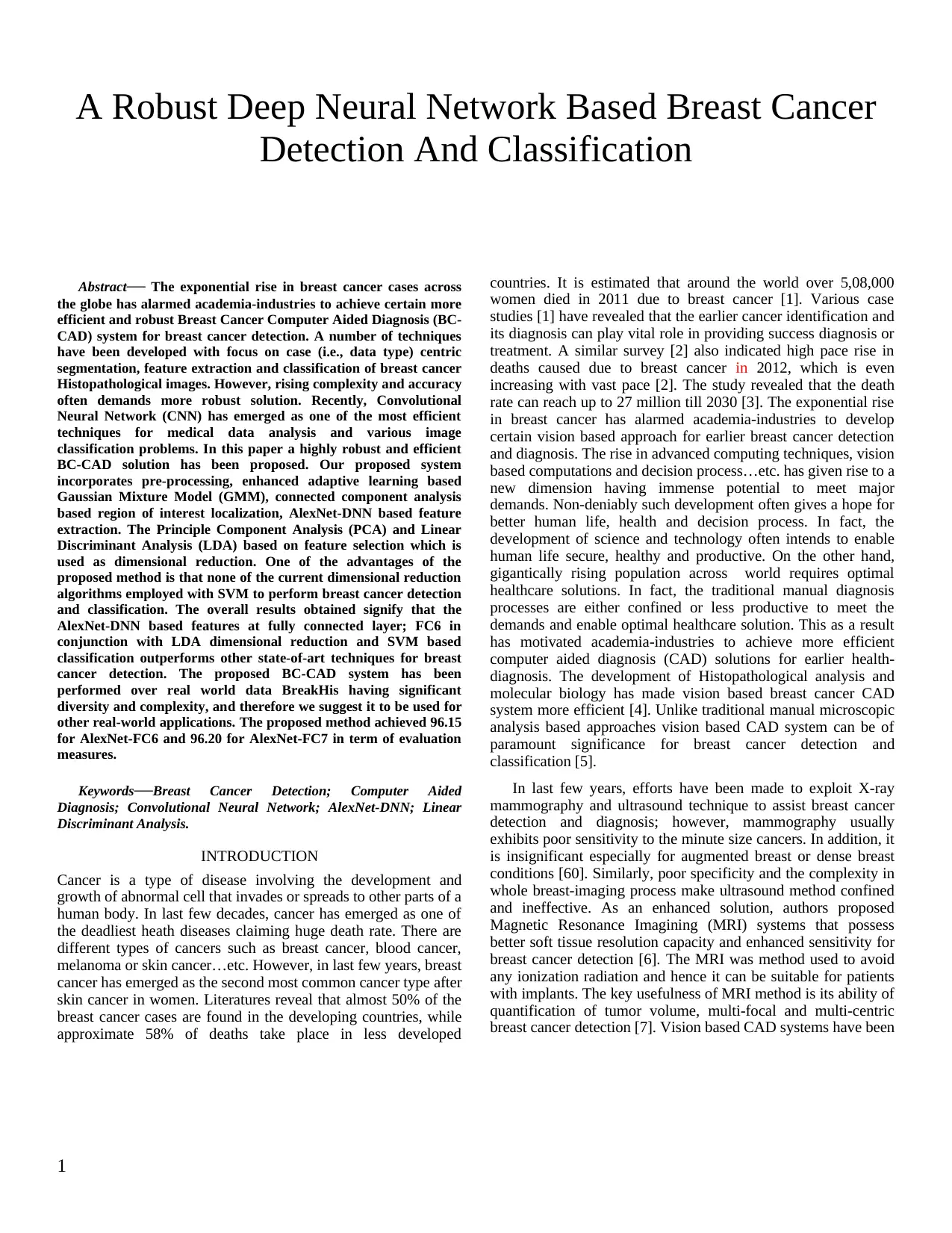

PROPOSED SYSTEM DESIGN

This section presents the proposed research work and its implementation schematic in detail. Before discussing the proposed BC-CAD solution, the key terminology must be introduced. Table I presents the nomenclature of the abbreviated terms.

Fig. 1. Proposed BC-CAD System. Blocks shown: BC Data; Pre/Post Processing; Breast Cancer Region Segmentation; SIFT; Caffe-AlexNet DNN (FC6, FC7); and the resulting feature sets AlexNet-FC6-LDA, AlexNet-FC6-PCA, AlexNet-FC7-LDA, AlexNet-FC7-PCA, SIFT-FV-PCA and SIFT-FV-LDA.

The proposed research model, presented in Fig. 2, comprises the following steps:

a. Breast Cancer Histopathological Image Data Collection

b. Pre-processing

c. ROI Detection and Localization

d. Feature Extraction

e. Dimensional Reduction or Feature Selection, and

f. BC-CAD Two-Class Classification.

Each step is discussed in detail below.

1. Breast Cancer Histopathological Image Data Collection

In this study, we use the standard Breast Cancer Histopathological Image Classification (BreaKHis) dataset [39], which contains a total of 7,909 Histopathological (i.e., microscopic) images of breast tumor tissue collected from 82 patients. The BreaKHis dataset was built in association with the P&D Laboratory - Pathological Anatomy and Cytopathology, Parana, Brazil (http://www.prevencaoediagnose.com.br). Unlike generic single-size or single-feature datasets, BreaKHis images are acquired at varied
magnification factors: 40X, 100X, 200X, and 400X. This reflects the diversity of the data under consideration, and a BC-CAD model that performs well on such data is more likely to be applicable to different data types. The BreaKHis dataset contains 2,480 benign and 5,429 malignant Histopathological images of 700X460 pixels, 3-channel RGB, with 8-bit depth in each channel. The images fall into two distinct groups: benign tumors and malignant tumors. Histologically, benign refers to a lesion that does not meet any criterion of malignancy, such as marked cellular atypia, mitosis, etc. Benign tumors are usually regarded as “innocent”: they grow gradually and often remain confined to a definite shape and size. A malignant tumor, on the other hand, is a cancer: a lesion that invades and destroys neighboring structures and can even spread to distant sites, which may eventually cause death. The BreaKHis samples were obtained by means of partial mastectomy or excisional biopsy [39].

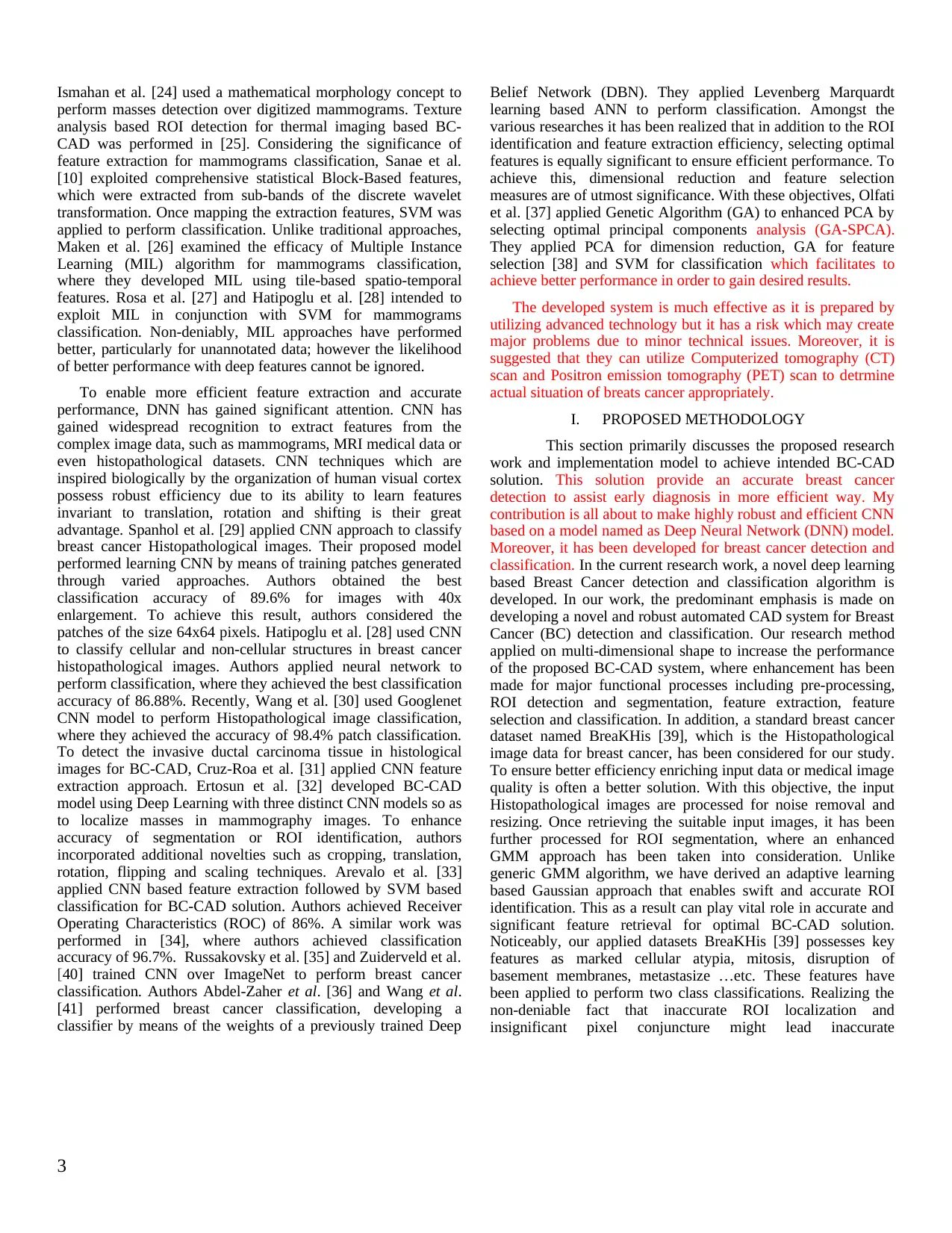

Fig. 2. Proposed AlexNet-DNN based BC-CAD solution. Blocks shown: Breast Cancer Data (BreaKHis); Pre-processing and Anisotropic Diffusion Based Noise Removal; Breast Cancer Segmentation; Connected Components Analysis; ROI Localization; Breast Cancer (Mass, Tumour, Calcification) Detection; AlexNet-DNN Based Feature Extraction; PCA/LDA Based Feature Selection and Dimensional Reduction; Feature Vector Preparation; 10-Fold Cross Validation Based SVM for BCD Diagnosis Prediction (Malignant / Benign); Performance Analysis. The blocks are grouped into Breast Cancer Detection and Segmentation, and Breast Cancer Prediction and Classification.

The distribution of the data under study is given in Table II.

TABLE II. STRUCTURE OF BREAKHIS 1.0

| Magnification | Benign | Malignant | Total |
|---|---|---|---|
| 40X | 625 | 1370 | 1995 |
| 100X | 644 | 1437 | 2081 |
| 200X | 623 | 1390 | 2013 |
| 400X | 588 | 1232 | 1820 |
| Total of Images | 2480 | 5429 | 7909 |
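The totals in Table II can be cross-checked with a few lines of arithmetic, which also makes the class imbalance explicit. In this sketch the 40X benign count is taken as 625, the value that agrees with both the printed 40X row total (1995) and the benign column total (2480); the dictionary name `TABLE_II` is introduced here for illustration only.

```python
# (benign, malignant, printed total) per magnification factor.
# Assumption: the 40X benign count is 625, the value consistent with the
# printed row total of 1995 and the benign column total of 2480.
TABLE_II = {
    "40X": (625, 1370, 1995),
    "100X": (644, 1437, 2081),
    "200X": (623, 1390, 2013),
    "400X": (588, 1232, 1820),
}

# Every row must add up to its printed total.
for magnification, (benign, malignant, total) in TABLE_II.items():
    assert benign + malignant == total, magnification

benign_total = sum(row[0] for row in TABLE_II.values())
malignant_total = sum(row[1] for row in TABLE_II.values())
malignant_share = malignant_total / (benign_total + malignant_total)
print(benign_total, malignant_total, f"{malignant_share:.1%}")
```

Malignant images make up a little over two thirds of the dataset, which is worth keeping in mind when reading an overall accuracy figure for the two-class problem.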

Both benign and malignant breast tumors can be sorted into distinct types according to how the tumoral cells look under the microscope. Different types of breast tumor can have distinct prognoses and treatment implications. Our dataset comprises the following distinct types of tumors. Table III lists the benign and
malignant tumor types in the dataset; breast tumors overall may or may not be cancerous.

TABLE III. TUMOR TYPES (DISTRIBUTION) IN BREAKHIS 1.0

| Benign Tumors | Malignant Tumors |
|---|---|
| fibroadenoma | ductal carcinoma |
| phyllodes tumor | lobular carcinoma |
| tubular adenoma | mucinous carcinoma |
| adenosis | papillary carcinoma |

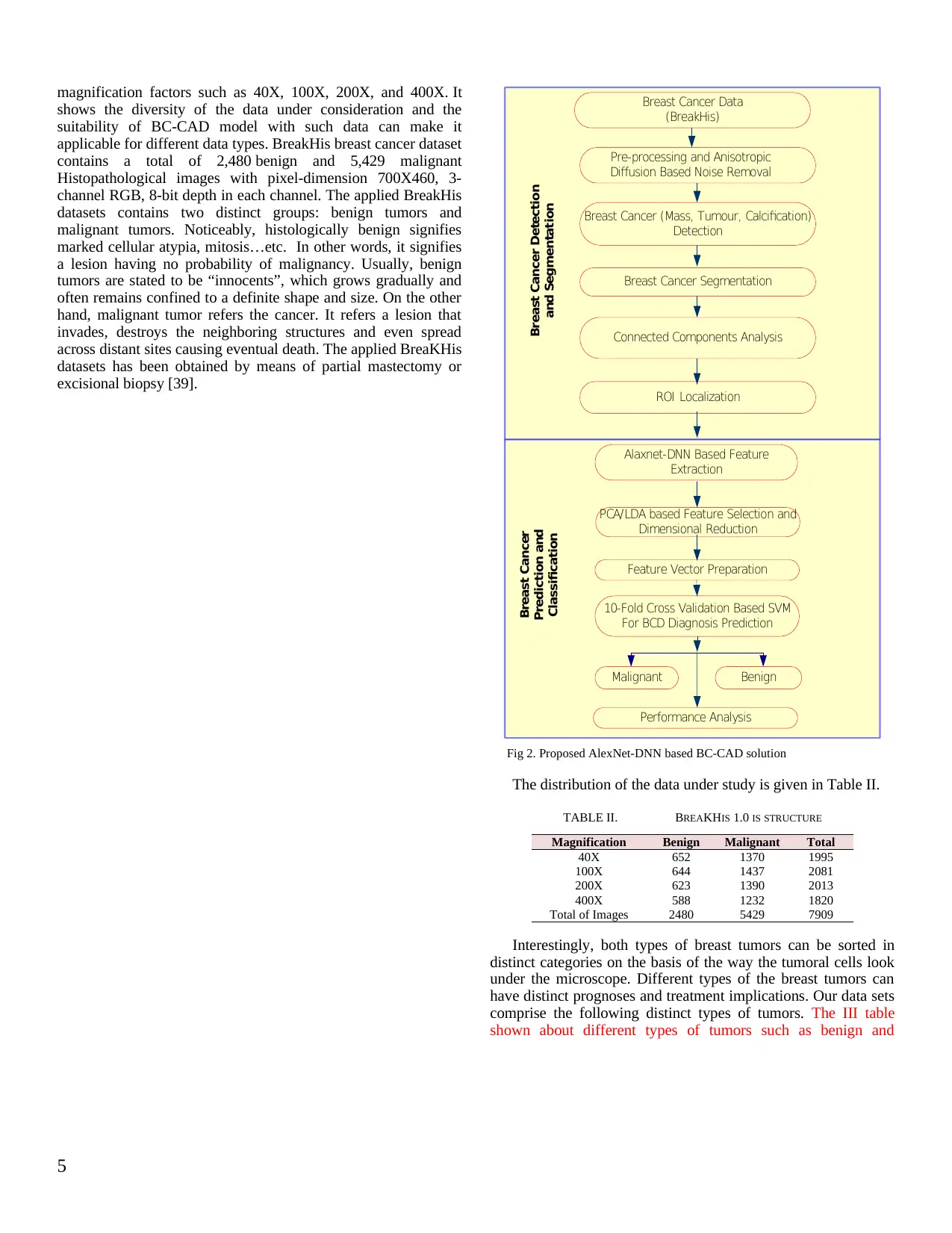

In the dataset, each Histopathological image filename encodes significant information about the image: the biopsy method, the type of tumor, the patient information (Patient ID), and the magnification factor. For illustration, the filename SOB_B_TA-14-4659-40-001.png denotes a benign tumor of the tubular adenoma type, obtained by the SOB biopsy method at a magnification factor of 40X. Sample images are shown in Fig. 3.
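The filename convention described above lends itself to a small parser. The sketch below is based only on the single example given in the text, so the exact field layout, the regular expression, the code "M" for malignant and the helper name `parse_breakhis_name` are assumptions rather than a specification of the dataset.

```python
import re

# Field layout inferred from the one example in the text (an assumption):
#   <biopsy>_<class>_<type>-<patient id>-<magnification>-<sequence>.png
NAME_PATTERN = re.compile(
    r"^(?P<biopsy>[A-Z]+)_(?P<tumor_class>[A-Z])_(?P<tumor_type>[A-Z]+)"
    r"-(?P<patient_id>\d+-[0-9A-Z]+)"
    r"-(?P<magnification>\d+)-(?P<sequence>\d+)\.png$"
)

# Only "B" and "TA" appear in the text; "M" is an assumed code for malignant.
TUMOR_CLASSES = {"B": "benign", "M": "malignant"}
TUMOR_TYPES = {"TA": "tubular adenoma"}


def parse_breakhis_name(filename):
    """Split a BreaKHis-style filename into its labelled fields."""
    match = NAME_PATTERN.match(filename)
    if match is None:
        raise ValueError(f"unexpected filename layout: {filename!r}")
    fields = match.groupdict()
    # Unknown codes are passed through unchanged rather than guessed.
    fields["tumor_class"] = TUMOR_CLASSES.get(fields["tumor_class"], fields["tumor_class"])
    fields["tumor_type"] = TUMOR_TYPES.get(fields["tumor_type"], fields["tumor_type"])
    fields["magnification"] = int(fields["magnification"])
    return fields


info = parse_breakhis_name("SOB_B_TA-14-4659-40-001.png")
```

Parsing the label from the filename in this way gives the class (benign or malignant) and the magnification factor needed to group images for the two-class experiments.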

(a) (b)

(c) (d)

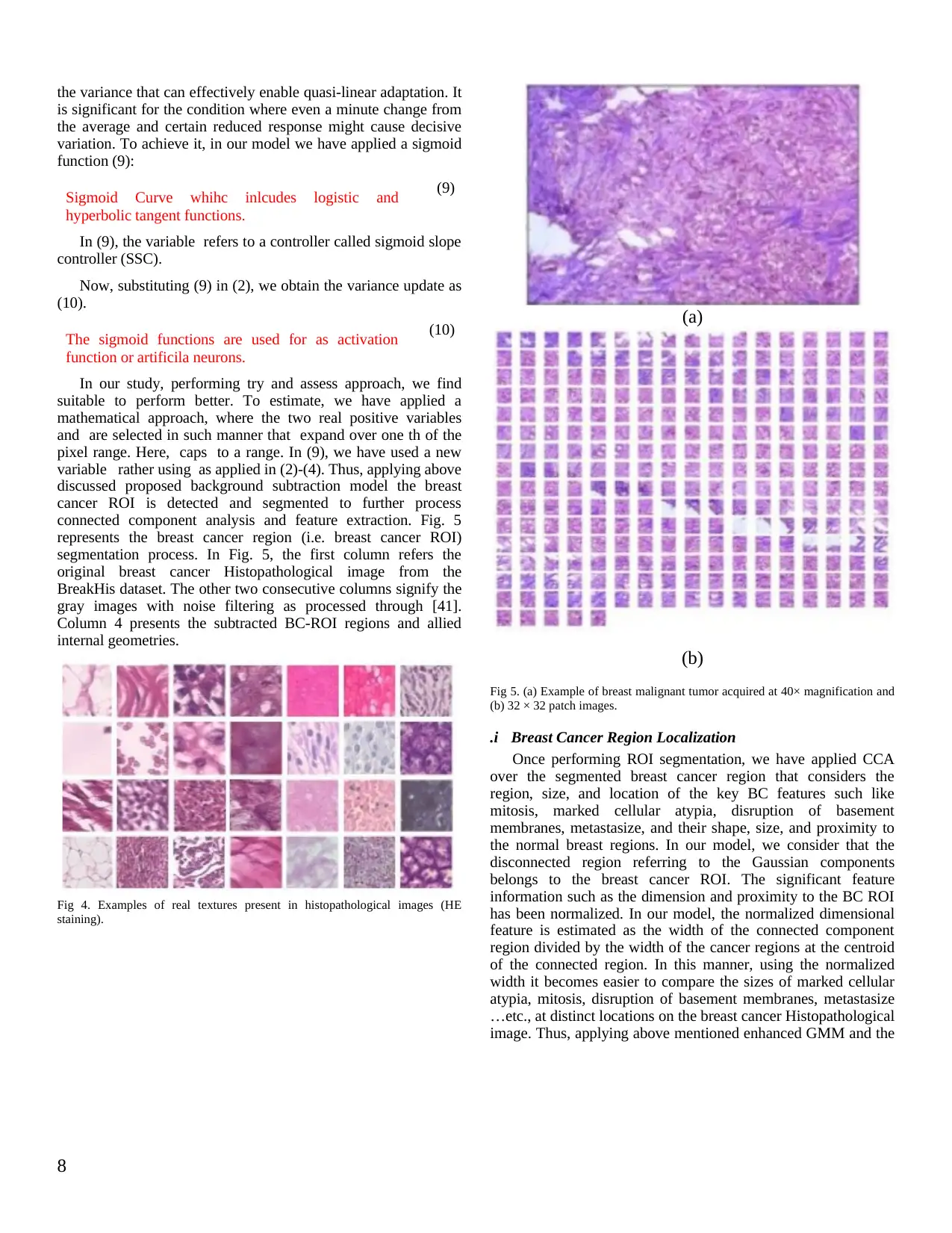

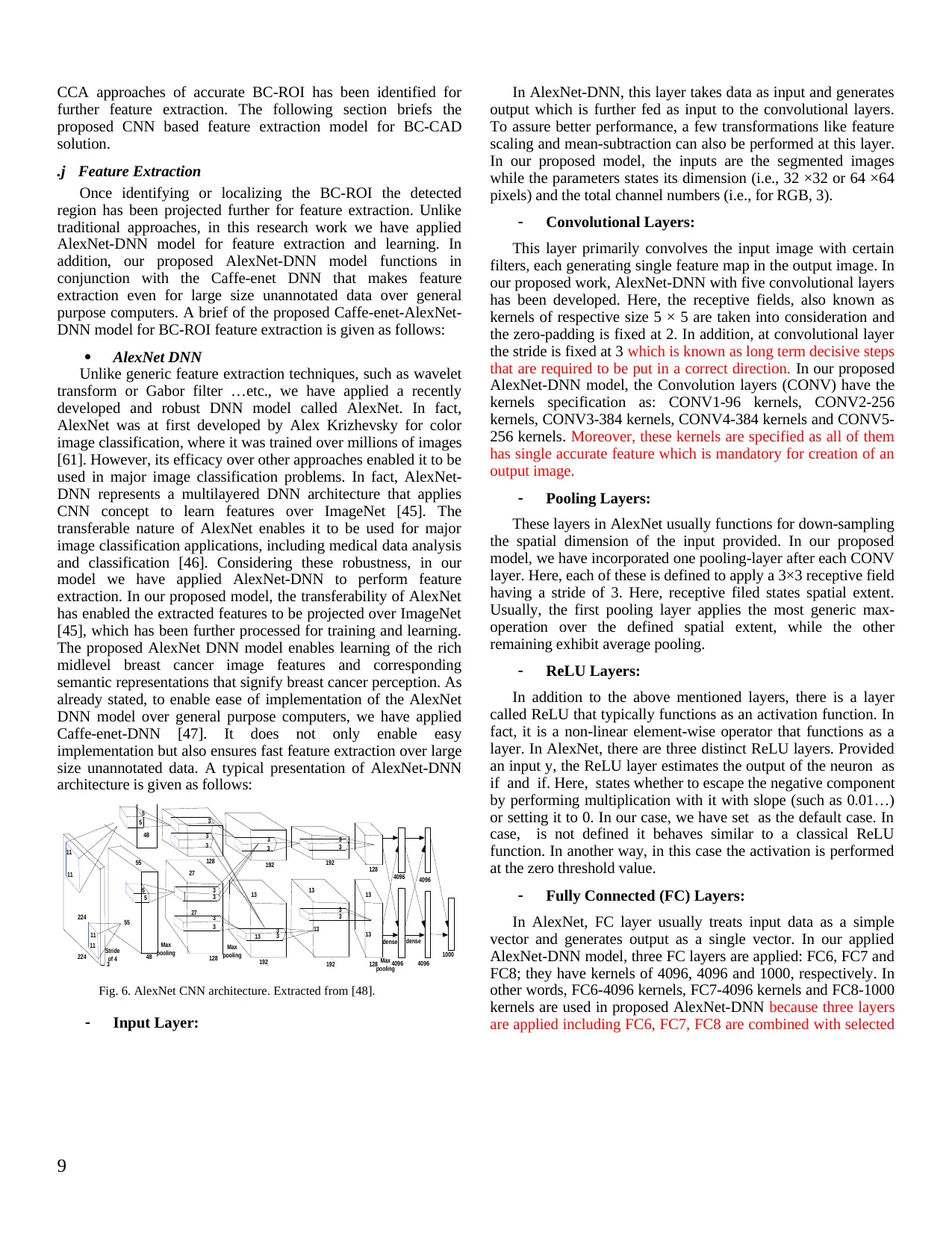

Fig 3. Snippet of the breast malignent cancer at different magnification

factors: (a) 40X, (b) 100X, (c) 200X, and (d) 400X [39].

2. Pre-processing

Undeniably, the quality of the data influences the final classification accuracy and efficiency, so pre-processing is performed to prepare suitable data for further processing. In our research model, pre-processing is especially important for the precise identification and characterization of nuclei and their vicinity. In breast cancer detection, features such as the shape and size of nuclei, glandular shape, etc. are expected to be precisely identifiable. Pre-processing is therefore carried out without suppressing the original image qualities required to estimate or identify texture representation and other significant features precisely [40]. Considering the interchangeable nature of pre-processing approaches, we perform pre-processing before candidate region detection and feature extraction [40-41], following the pre-processing instructions provided in [35][41]. Once pre-processing is complete, concept region identification (segmentation) is carried out using the approach described in the next subsection. As part of pre-processing, the input breast cancer Histopathological images are also resized to 224X224.
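The noise-removal and resizing steps of pre-processing could be sketched as follows. The system diagram names anisotropic diffusion for noise removal, but the paper gives no parameters, so the Perona-Malik form used here, the values of `kappa`, `step` and `iterations`, and the nearest-neighbour interpolation used for resizing are all assumptions; images are plain lists of rows so that the example stays self-contained.

```python
import math


def anisotropic_diffusion(image, iterations=5, kappa=20.0, step=0.2):
    """Perona-Malik style smoothing of a grayscale image (list of rows).

    kappa, step and iterations are illustrative values, not from the paper.
    """
    height, width = len(image), len(image[0])
    current = [[float(v) for v in row] for row in image]
    for _ in range(iterations):
        updated = [row[:] for row in current]
        for r in range(height):
            for c in range(width):
                flow = 0.0
                for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < height and 0 <= cc < width:
                        grad = current[rr][cc] - current[r][c]
                        # Conduction is near 1 in flat areas and near 0 across edges.
                        flow += math.exp(-(grad / kappa) ** 2) * grad
                updated[r][c] = current[r][c] + step * flow
        current = updated
    return current


def resize_nearest(image, out_height=224, out_width=224):
    """Resize an image (list of rows) by nearest-neighbour sampling."""
    in_height, in_width = len(image), len(image[0])
    return [
        [image[(r * in_height) // out_height][(c * in_width) // out_width]
         for c in range(out_width)]
        for r in range(out_height)
    ]
```

Small intensity fluctuations are smoothed while strong edges, such as nuclei boundaries, are left largely intact. Note that resizing a 700X460 image to 224X224 changes its aspect ratio.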

3. Concept Region Identification

This section discusses breast cancer region detection and segmentation. Unlike traditional segmentation approaches such as Otsu’s method [42], our model applies an enhanced GMM technique to segment the tumor region from the background, distinguishing it from other regions of the Histopathological image. GMM has exhibited better performance than other state-of-the-art techniques for background subtraction and ROI identification because it is a pixel-wise segmentation approach. In addition, its robustness makes it suitable for separating the ROI from the background region, after which features can be extracted from the detected ROI. The steps of the proposed GMM model for ROI detection are given as follows:

Adaptive-Learning-Based GMM for Background Subtraction

Typical Histopathological images exhibit highly complex color and textural features, which limits the ability of classical segmentation approaches, such as Otsu's method, static-threshold segmentation and even the generic GMM, to achieve optimal ROI detection. One of the predominant reasons is the static learning rate [43]. To deal with such limitations, we have developed an enhanced adaptive learning based GMM model for ROI detection and segmentation. Let $x_n$ be the pixel value at a certain instant $n$; GMM can be used to estimate the Probability Density Function (PDF) of $x_n$, which combines all of the component Gaussians. The PDF of the Gaussian mixture with $K$ components is estimated as:

$$f(x_n) = \sum_{k=1}^{K} w_k\, \eta(x_n; \mu_k, \Sigma_k) \tag{1}$$

where $w_k$ signifies the weight factor of the $k$-th component and $\eta(x_n; \mu_k, \Sigma_k)$ is the normalized Gaussian density with mean $\mu_k$, evaluated at the value of $x_n$ at the $n$th instant. In equation (1), the variable $\Sigma_k = \sigma_k^2 I$ represents the covariance matrix. This form hypothesizes that the pixel values of the color components (i.e., red, green, and blue) in the breast cancer image are independent and have equal variances. Although this is not strictly the case, it allows the distribution of recently observed pixel values in the Histopathological image to be defined in the Gaussian mixture form. In our model, the value of a new pixel is characterized by one of the predominant components of the mixture model, which is then used to update the model. Stauffer et al. [43] applied this concept to estimate the background, initializing these variables with zero. Once a match is detected, i.e. $|x_n - \mu_k| \le \tau\,\sigma_k$ for a component $k$ with $\tau$ as a threshold, the operating variables of the GMM model are obtained as:

varicables that are not observable known as hidden

units.

$$ w_{k,t} = (1-\alpha)\, w_{k,t-1} + \alpha\, M_{k,t} \tag{2} $$

$$ \mu_{k,t} = (1-\rho)\, \mu_{k,t-1} + \rho\, x_t \tag{3} $$

$$ \sigma_{k,t}^{2} = (1-\rho)\, \sigma_{k,t-1}^{2} + \rho\, (x_t - \mu_{k,t})^{T} (x_t - \mu_{k,t}) \tag{4} $$

where, as in [43], $M_{k,t}$ is 1 for the matched component and 0 otherwise. If no component matches, the component with the minimal weight is re-initialized, and the remaining weights are updated as:

$$ w_{k,t} = (1-\alpha)\, w_{k,t-1} \tag{5} $$

In expression (5), $\alpha$ refers to the learning rate. Here, $\rho$ is obtained as:

$$ \rho = \alpha\, \eta(x_t; \mu_{k,t-1}, \Sigma_{k,t-1}) \tag{6} $$
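The fixed-learning-rate update attributed to [43] in this passage can be sketched as follows. This is a minimal illustration for a single grayscale pixel, not the authors' implementation: the function names, the default values (`alpha`, `match_thresh`, `init_var`, `init_w`), the tie-breaking rule among matching components, and the explicit weight renormalization are all assumptions made for the example.

```python
import numpy as np


def gaussian_pdf(x, mu, var):
    """Density of a 1-D Gaussian with mean mu and variance var."""
    return np.exp(-0.5 * (x - mu) ** 2 / var) / np.sqrt(2.0 * np.pi * var)


def update_pixel_gmm(x, w, mu, var, alpha=0.01, match_thresh=2.5,
                     init_var=225.0, init_w=0.05):
    """One fixed-learning-rate mixture update for a single grayscale pixel.

    w, mu, var are 1-D float arrays of length K (weights, means, variances).
    Returns updated copies plus a flag telling whether any component matched.
    All default values are illustrative assumptions.
    """
    w, mu, var = w.copy(), mu.copy(), var.copy()

    # A component matches when x lies within match_thresh standard deviations.
    matches = np.abs(x - mu) / np.sqrt(var) < match_thresh
    matched = bool(matches.any())

    if matched:
        # Assumption: among matching components, prefer the largest w / sigma.
        cand = np.flatnonzero(matches)
        k = cand[np.argmax(w[cand] / np.sqrt(var[cand]))]

        # Weight update: M is 1 for the matched component, 0 otherwise.
        M = np.zeros_like(w)
        M[k] = 1.0
        w = (1.0 - alpha) * w + alpha * M

        # Second learning rate, then mean and variance of the matched component.
        rho = alpha * gaussian_pdf(x, mu[k], var[k])
        mu[k] = (1.0 - rho) * mu[k] + rho * x
        var[k] = (1.0 - rho) * var[k] + rho * (x - mu[k]) ** 2
    else:
        # No match: re-initialize the component with the smallest weight.
        k = int(np.argmin(w))
        mu[k], var[k], w[k] = x, init_var, init_w

    # Keep the weights summing to one.
    w = w / w.sum()
    return w, mu, var, matched
```

A call such as `update_pixel_gmm(102.0, np.array([0.6, 0.4]), np.array([100.0, 200.0]), np.array([25.0, 25.0]))` matches the first component, raises its weight slightly, and nudges its mean toward 102, while the second component's mean and variance are left unchanged.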

In our proposed GMM model, we normalize the weights $w_{k,t}$ so that their sum converges toward unity (i.e., 1). To obtain the background region, the authors of [43] sorted the Gaussians in decreasing order of $w_k/\sigma_k$ and applied a threshold $T$ to the cumulative sum of the weights. As a result, this yields a set of $B$ background distributions, where $B$ is obtained as:

$$ B = \arg\min_{b} \left( \sum_{k=1}^{b} w_{k} > T \right) \tag{7} $$

As its variance decreases, a Gaussian distribution usually gains more support, which makes background subtraction more reliable. Once the GMM parameters are estimated, sorting proceeds from the matched mixture distribution toward the most probable background distribution. This is sufficient because only the relative value of the matched model changes. In our applied model, the ordering keeps the most probable background distributions at the top, while the low-probability distributions move toward the bottom. In this manner, the leading distributions selected by (7) are considered the background. In (7), the threshold parameter $T$ signifies the minimum portion of the data that should be attributed to the background. With the minimal possible value of $T$, the background model is unimodal, and using only the most probable distribution enhances computational efficiency. In contrast, a high $T$ signifies a multimodal distribution, which is typically caused by repetitive background motion. In this paper, breast cancer Histopathological images with static features are taken as input, and hence the unimodal approach with a low $T$ ensures a computationally efficient process. In our approach, the Gaussian with the maximum weight and minimal variance refers to the background region. Thus, by applying the aforementioned approach, the background region can be identified, which in turn helps in segmenting the ROI.
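The background selection described above (rank the components, then keep the smallest leading set whose cumulative weight exceeds the threshold) can be sketched as below. This is only an illustration under stated assumptions: the function name `select_background`, the ranking key $w/\sigma$ taken from the formulation of [43], and the default threshold value are not specified by this paper.

```python
import numpy as np


def select_background(w, var, T=0.7):
    """Return indices of the components treated as background.

    Components are ranked by w / sigma in decreasing order, and the smallest
    leading set whose cumulative weight exceeds T is kept.
    The default T is an illustrative assumption.
    """
    order = np.argsort(-(w / np.sqrt(var)))      # most probable background first
    cum = np.cumsum(w[order])                    # cumulative weight along the ranking
    first_over = int(np.searchsorted(cum, T, side="right"))  # first index with cum > T
    B = min(first_over + 1, len(w))              # number of background components
    return order[:B]


w = np.array([0.2, 0.5, 0.3])
var = np.array([100.0, 4.0, 9.0])
low_T = select_background(w, var, T=0.3)    # unimodal: a single component is kept
high_T = select_background(w, var, T=0.7)   # multimodal: two components are kept
```

With a low threshold only the single most probable component survives, which corresponds to the unimodal, computationally cheaper case discussed in the text; raising the threshold admits further components.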

In most cases, the classical GMM is updated with a fixed learning rate [43]. For breast cancer detection, however, a static learning rate is questionable, particularly when texture varies strongly across the breast cancer Histopathological images. To deal with this limitation, the authors of [44] developed an adaptive learning rate based GMM for ROI segmentation over varying surface or texture conditions. Undeniably, there can be texture or surface conditions in which a pixel belongs neither to the breast tumor (i.e., the ROI) nor to the background, yet could be classified as either a fibroadenoma or a phyllodes tumor. This may in turn affect the accuracy of the proposed BC-CAD solution. Increasing the learning rate might cause high-rate pixel feature changes [44], which may consequently make breast tumor or ROI detection unreliable. The adaptive learning based GMM model [43] can perform ROI identification under varying background conditions; however, very minute and finely connected texture or surface features still limit the existing approach. For breast cancer Histopathological images in particular, textural or structural features such as cellular atypia, mitosis, disruption of basement membranes, and metastasis are highly intricate and minute, and they require a more efficient ROI identification model. An enhanced Gaussian mixture learning based GMM model was proposed in [44]. However, the authors could not address the saturation scenario, particularly during convergence. As an enhanced solution, this paper develops an adaptive learning based GMM model that detects the ROI precisely. In our proposed approach, initially, we have

decoupled the learning rate from the other model components. We have applied a new learning rate variable, called the adaptive learning rate, that updates the mean component using a probability parameter. This parameter states whether a pixel is a part of the $k$th Gaussian distribution or not. In our model, the adaptive learning rate parameter is estimated as follows:

(8)

where $K$ states the number of distributions. This can provide a fast and accurate Gaussian update that may help the system adapt to swift textural variations. As a result, it can enable optimal ROI detection for the targeted BC-CAD solution.

Now, substituting as in (3), it is observed that variance

updating might avoid Gaussian saturation. On the other hand, the

fast learning rate might cause degeneracy [44]. To deal with this

issue, we have developed a semi-parametric model to estimate
