Semester Project: Business Intelligence Report Analysis

Added on 2023/04/03

Report

AI Summary

This report details a Business Intelligence project undertaken using RapidMiner to analyze Australian weather data. The project is divided into three tasks. Task 1 focuses on exploratory data analysis, decision tree modeling, and logistic regression modeling to predict rainfall. Task 2 involves researching and designing a high-level data warehouse architecture, including its main components and ethical considerations. Task 3 entails creating a crime dataset for a scenario dashboard. The report includes detailed explanations of each step, including the use of RapidMiner for data preparation, model building, and validation, as well as a discussion of data warehouse design and security concerns. The project aims to apply business intelligence techniques to solve real-world problems, demonstrating the practical application of data mining and data warehousing principles.

University

Semester

Business Intelligence

Student ID

Student Name

Submission Date

Table of Contents

1. Project Description

2. Task 1 - Rapid Miner

2.1 Exploratory Data Analysis on Weather AUS Data

2.2 Decision Tree Model

2.3 Logistic Regression Model

2.4 Final Decision Tree and Final Logistic Regression Models’ Validation

Performance Vector

3. Task 2 - Research the Relevant Literature

3.1 Architecture Design of a High Level Data Warehouse

3.2 Proposed High Level Data Warehouse Architecture Design’s Main Components

3.3 Security, Privacy and the Ethical Concerns

4. Task 3 – Scenario Dashboard

4.1 Crime Category

4.2 Frequency of Occurrence

4.3 Frequency of Crimes

4.4 Geographical Presentation of Each Police Department Area

References

1. Project Description

We use RapidMiner for data preparation, data understanding, data mining, evaluation and analysis of the Australian weather data set. The project identifies real-world issues and applies business intelligence to solve complex organizational problems practically and creatively, within the organization's systems and business processes. The project is divided into three tasks.

Task 1 – RapidMiner is used to forecast the next day's weather from today's weather conditions, by analysing and evaluating the Australian climate data sets. The work follows the business understanding, data understanding, data preparation, modeling and evaluation phases of the CRISP-DM data mining process.

Task 2 – We study and evaluate a large amount of information collected from researchers, and use it to produce the required results for the data warehouse architecture and its related material.

Task 3 – A crime dataset is created for the dashboard.

These tasks are evaluated and discussed in detail in the following sections.

2. Task 1 - Rapid Miner

For the first task, we apply the data understanding, data preparation, modelling and evaluation phases of the CRISP-DM data mining process to predict the possibility of rainfall for the next day from the data collected on today's climatic conditions.

For this, the following steps are carried out (Ahmed Sherif, 2016):

Perform exploratory data analysis on the Australian weather data.

Prepare a Decision Tree model.

Prepare a Logistic Regression model.

Validate the final Decision Tree model and check its outcome.

Validate the final Logistic Regression model and check its outcome.

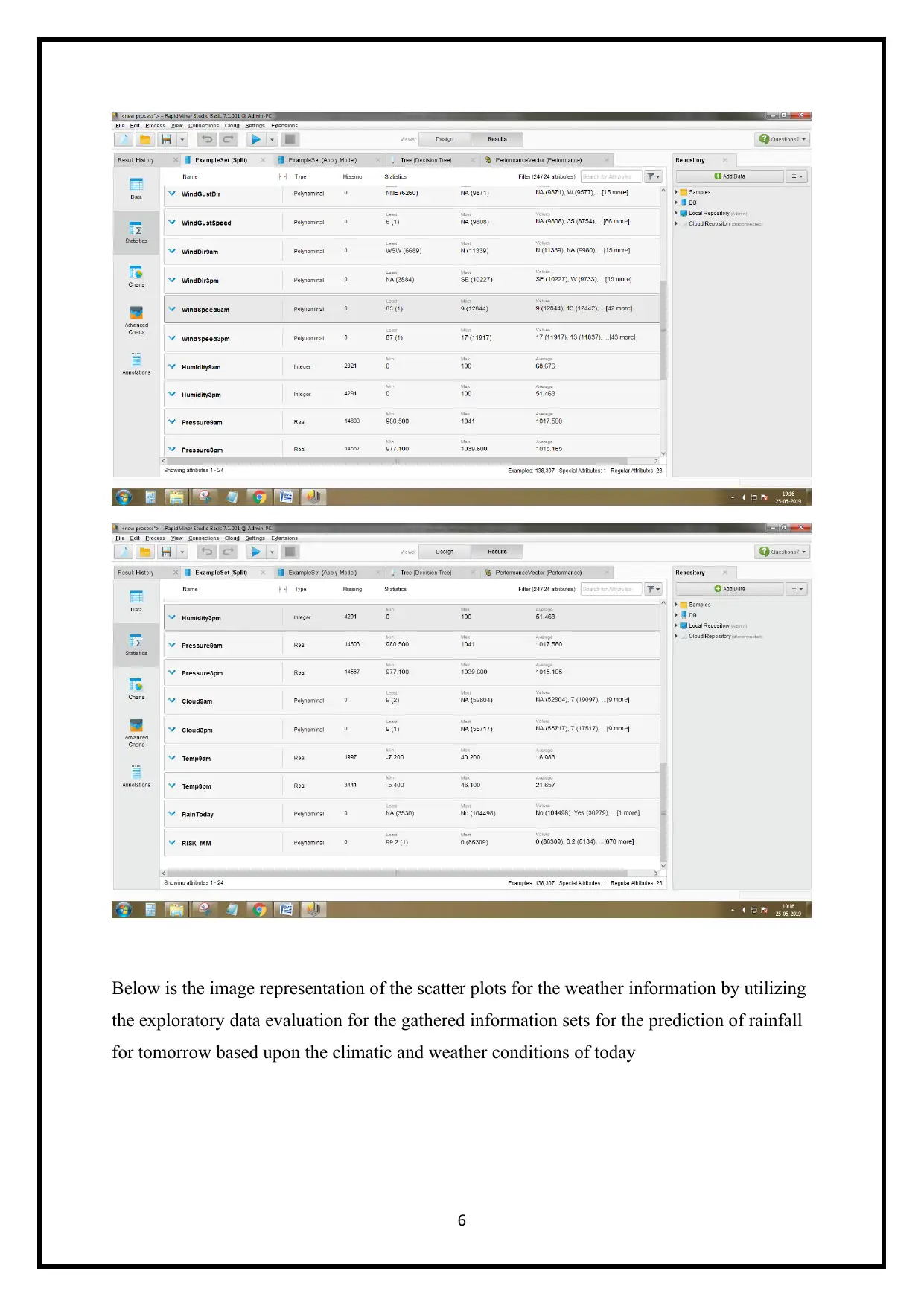

2.1 Exploratory Data Analysis on Weather AUS Data

Using RapidMiner, we evaluate the Australian weather data. We study every attribute in the data set that affects the outcome of the study, together with the inter-relationships between these attributes. The assessment of each attribute also depends on descriptive measures such as missing values, invalid data entries, minimum and maximum values, the most frequent value, standard deviation and more.
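To make these measures concrete, here is a minimal Python sketch of how they could be computed for a single attribute outside RapidMiner. It is only an illustration: the `describe` helper and the sample readings are hypothetical and are not taken from the report's data, and RapidMiner's own statistics view may define some measures differently.

```python
import statistics
from collections import Counter


def describe(values):
    """Summarise one attribute; None marks a missing value."""
    present = [v for v in values if v is not None]
    summary = {"count": len(values), "missing": len(values) - len(present)}
    if not present:
        # Nothing else can be computed for an entirely missing attribute.
        return summary
    summary["min"] = min(present)
    summary["max"] = max(present)
    summary["mode"] = Counter(present).most_common(1)[0][0]
    # The sample standard deviation needs at least two observations.
    summary["stdev"] = statistics.stdev(present) if len(present) > 1 else 0.0
    return summary


# Hypothetical MinTemp readings, for illustration only.
min_temp = [6.8, 7.4, None, 6.8, 12.9, 9.2]
stats = describe(min_temp)
print(stats)
```

Running the same helper over every numeric column gives a table comparable in spirit to a descriptive statistics view, which makes it easier to spot attributes with many missing values before modelling.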

We now look at these measures and see how they influence the analysis result.

The exploratory data analysis of the Australian weather data in RapidMiner proceeds in the steps below.

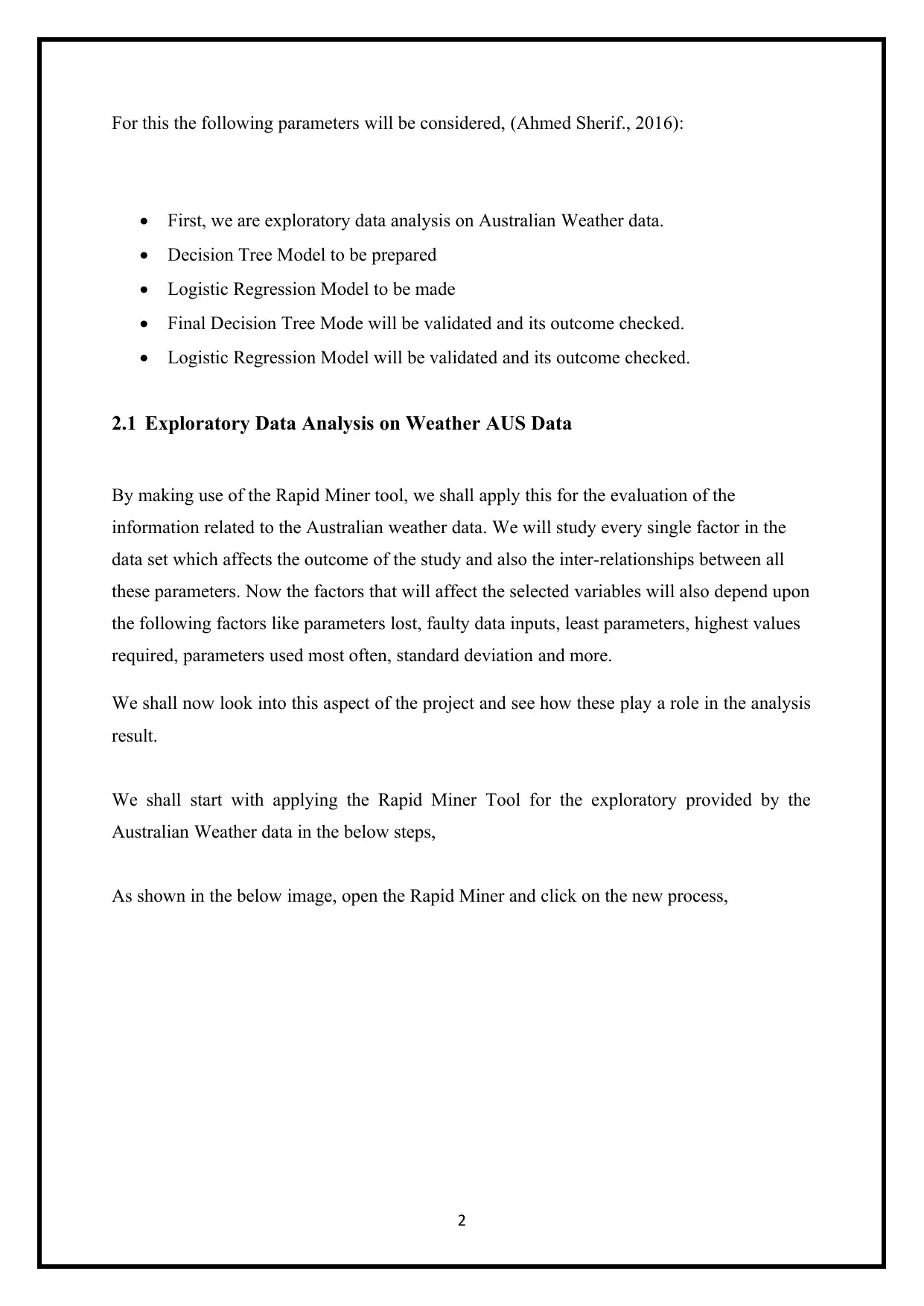

Open RapidMiner and click on New Process, as shown in the image below.

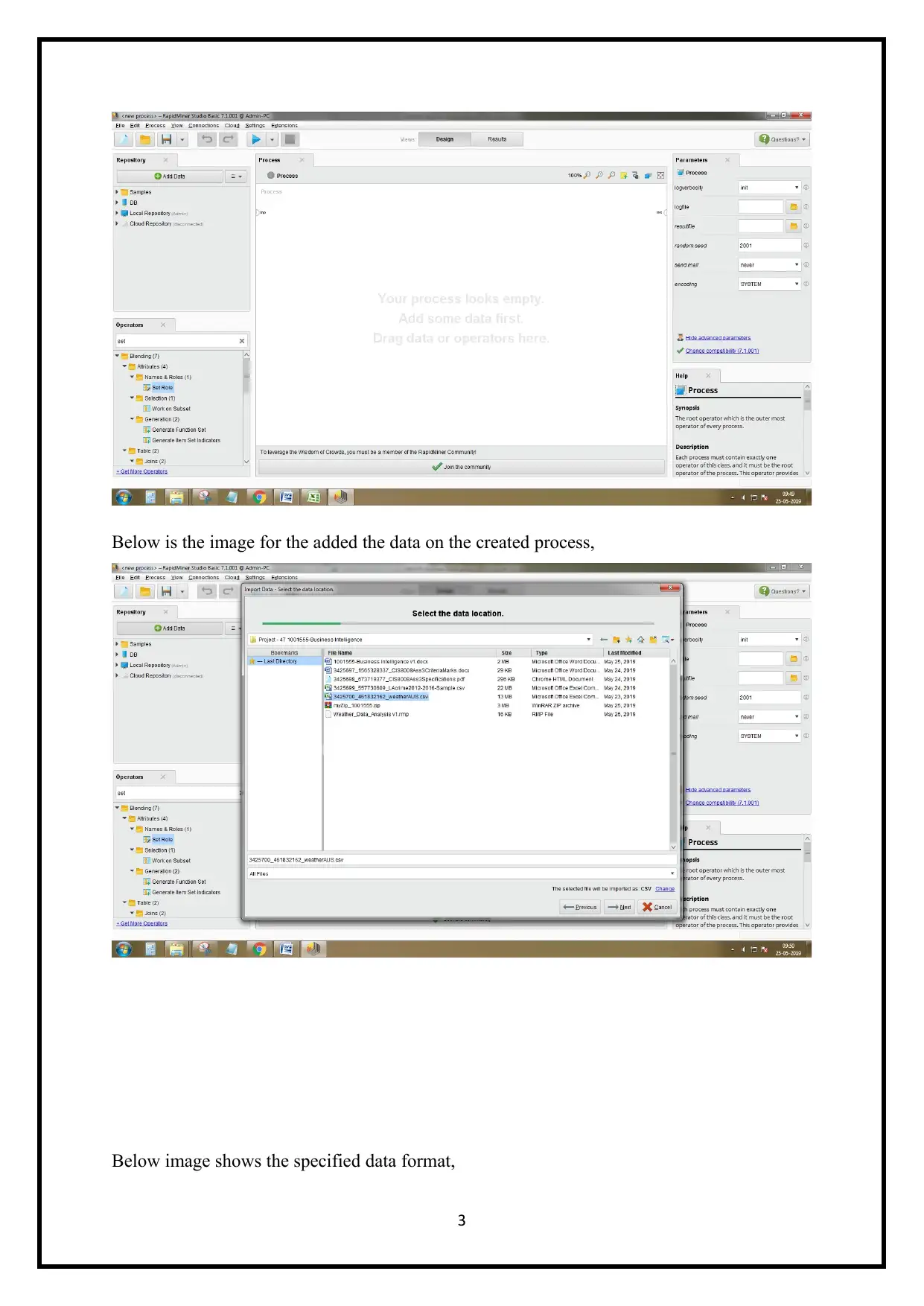

The image below shows the data added to the created process.

The image below shows the specified data format.

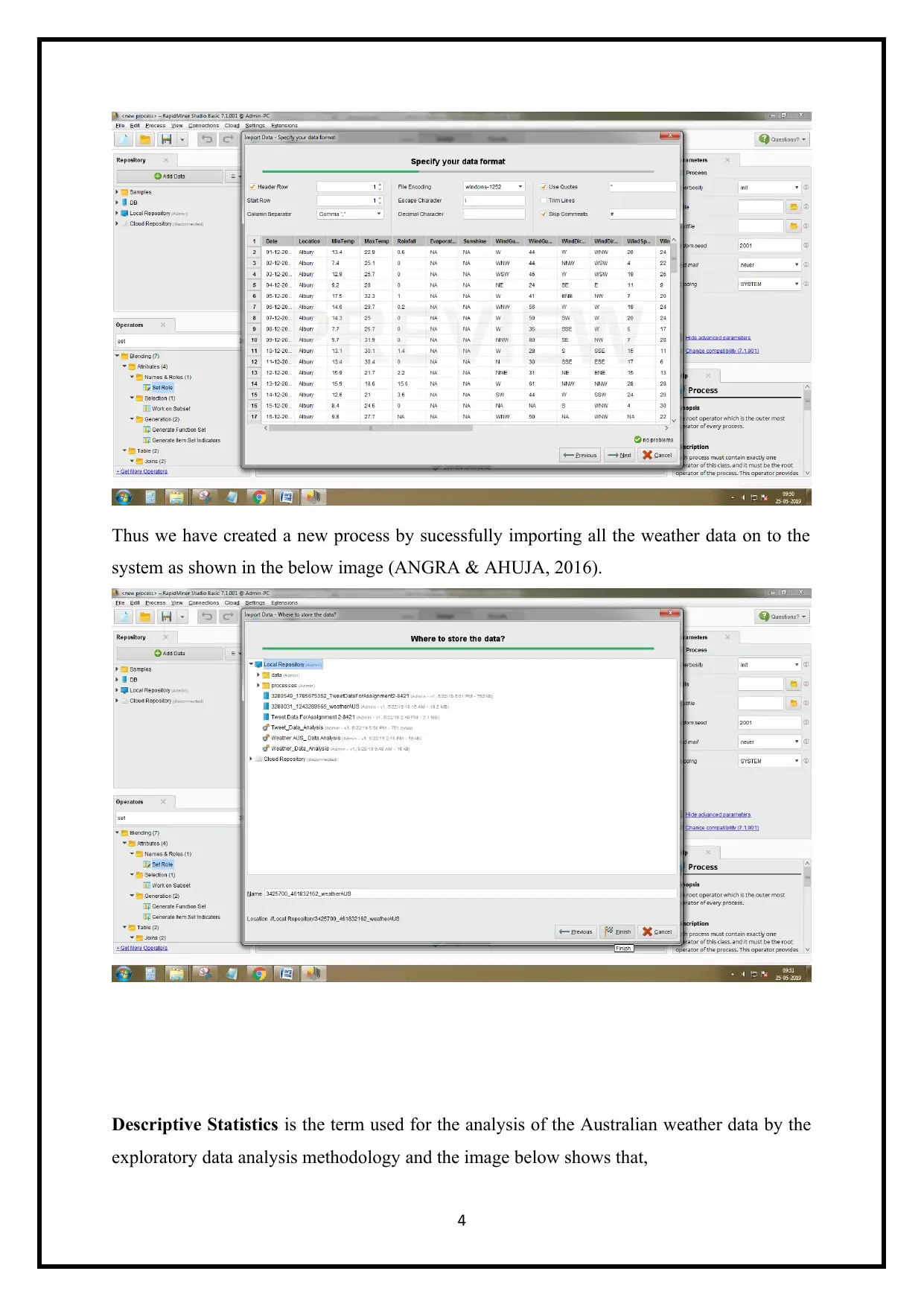

We have thus created a new process by successfully importing all the weather data into the system, as shown in the image below (ANGRA & AHUJA, 2016).

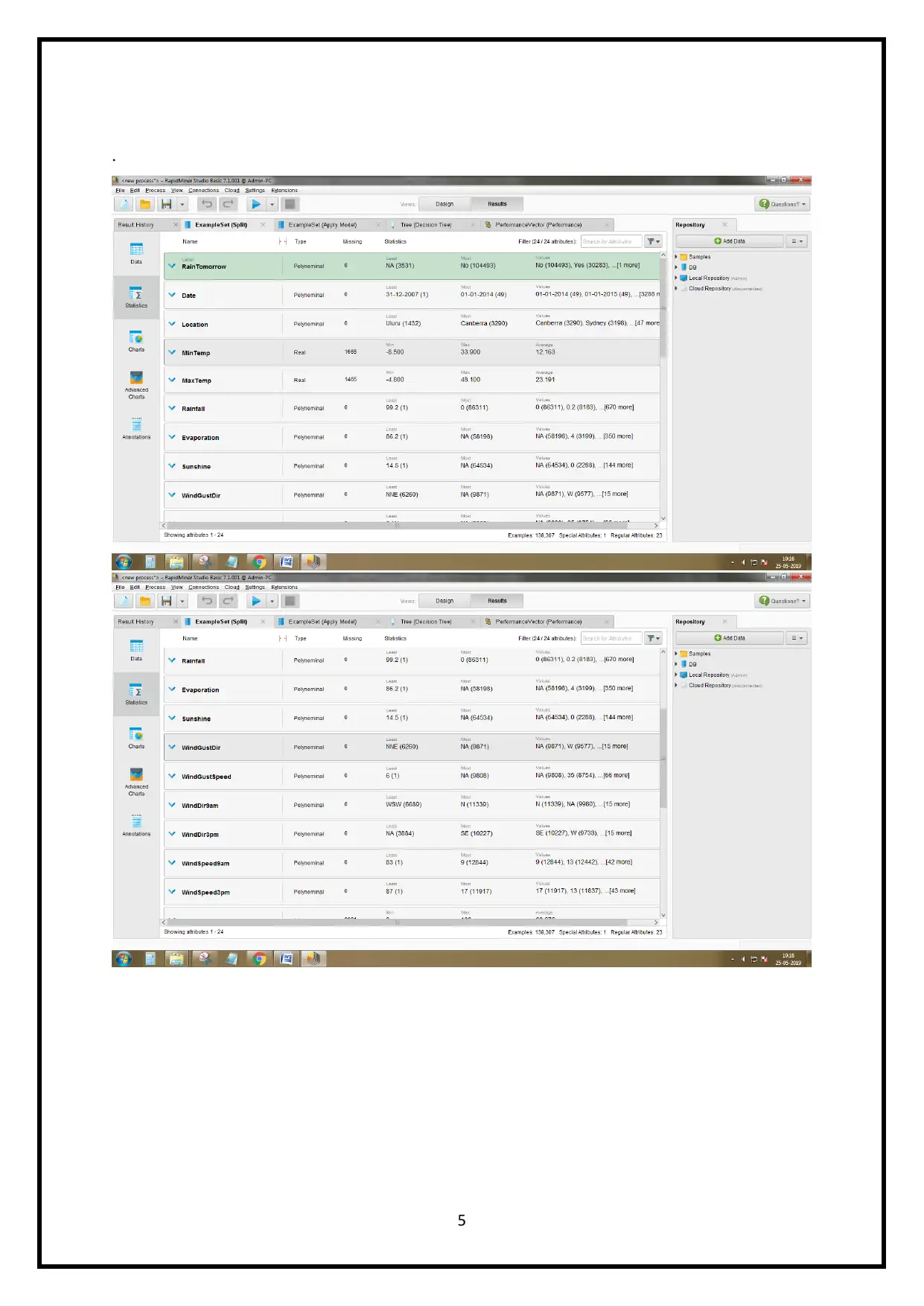
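For readers who want to reproduce the import step outside RapidMiner, the following is a minimal Python sketch, not the method used in this report. The column names MinTemp, MaxTemp, Humidity3pm, Pressure9am and RainToday appear in the decision tree description in section 2.2; `RainTomorrow` as the name of the label column, the sample rows, and the `load_rows` helper are assumptions made for illustration. Because that tree description lists NA both as a value of RainToday and as a class label, the sketch shows one possible choice: treating the token NA as a missing value at import time.

```python
import csv
import io

# Hypothetical three-row extract; the real file has many more rows and columns.
SAMPLE = """MinTemp,MaxTemp,Humidity3pm,Pressure9am,RainToday,RainTomorrow
13.4,22.9,22,1007.7,No,No
7.4,25.1,NA,1010.6,No,Yes
NA,18.6,30,NA,NA,NA
"""

# Columns parsed as numbers; every other column is kept as text.
NUMERIC = {"MinTemp", "MaxTemp", "Humidity3pm", "Pressure9am"}


def load_rows(handle, missing_token="NA"):
    """Read CSV rows, mapping the missing-value token to None and numbers to float."""
    rows = []
    for raw in csv.DictReader(handle):
        row = {}
        for name, text in raw.items():
            if text == missing_token:
                row[name] = None
            elif name in NUMERIC:
                row[name] = float(text)
            else:
                row[name] = text
        rows.append(row)
    return rows


rows = load_rows(io.StringIO(SAMPLE))
print(len(rows), rows[1])
```

With a real file, `io.StringIO(SAMPLE)` would be replaced by an opened file handle. Whether rows with a missing label should then be dropped before modelling is a separate decision that this sketch does not make.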

The exploratory data analysis produces descriptive statistics for the Australian weather data, as shown in the image below.

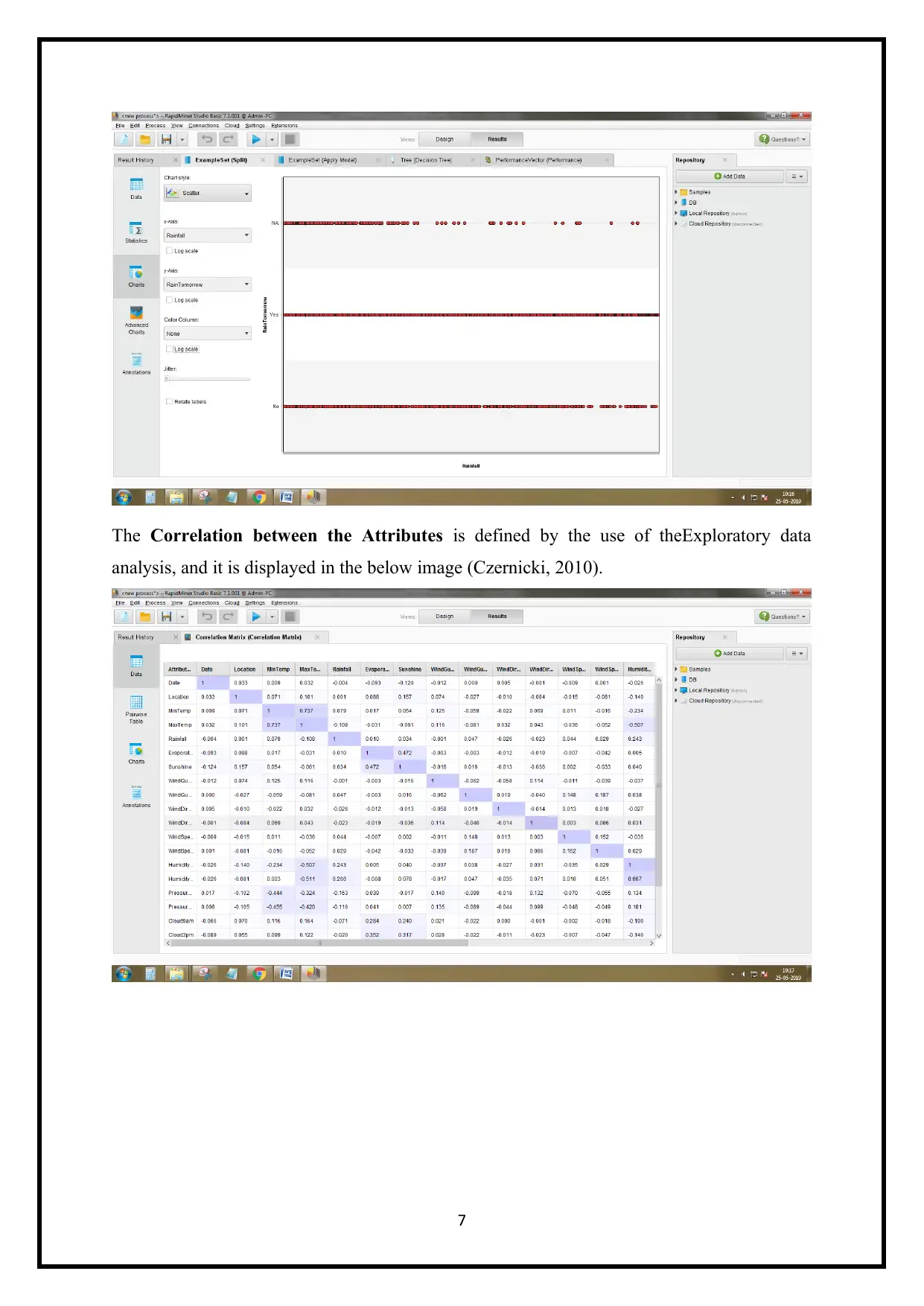

The image below shows scatter plots of the weather data, produced during the exploratory data analysis of the gathered data sets, for predicting tomorrow's rainfall from today's climatic and weather conditions.

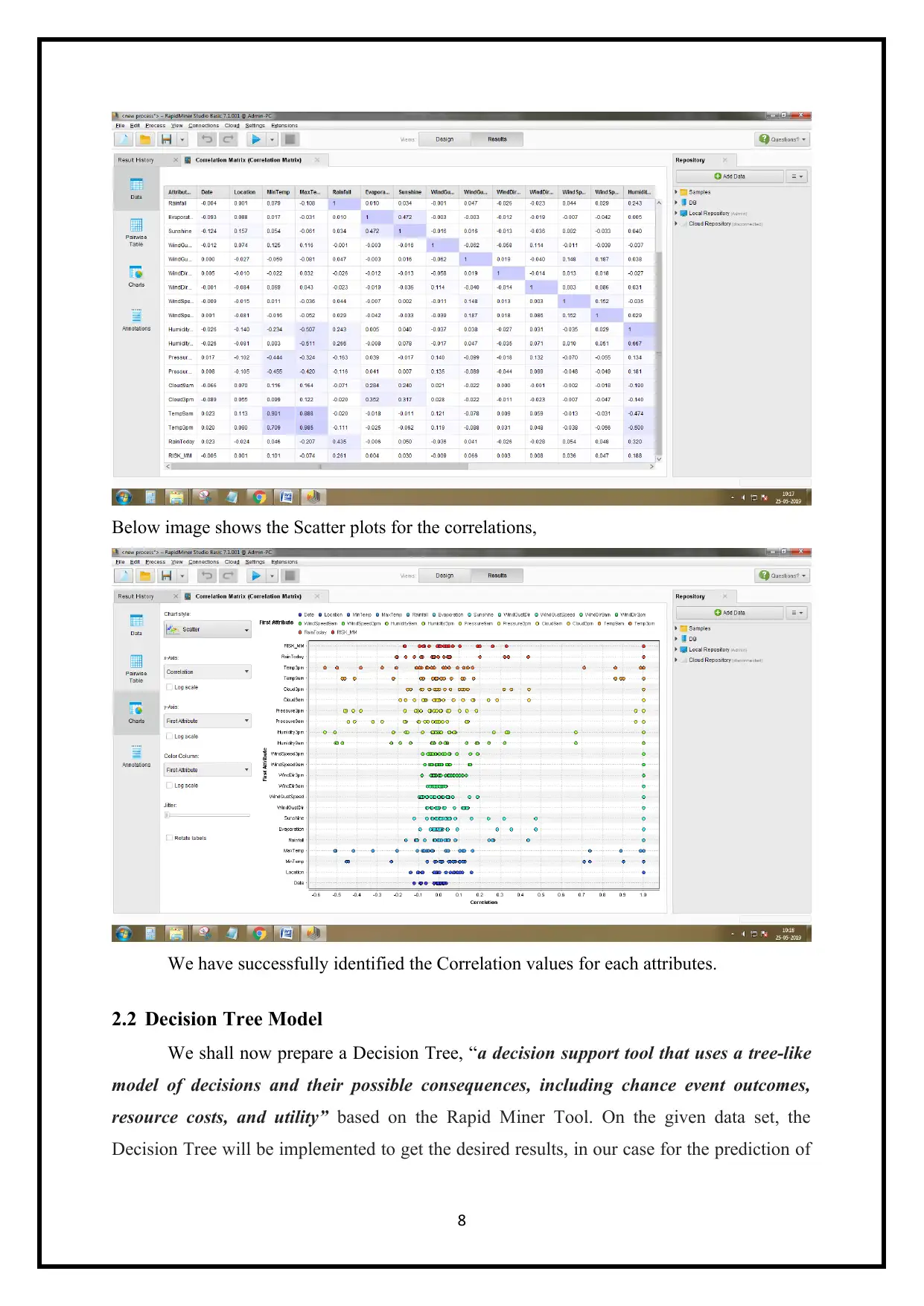

The correlation between the attributes is determined through exploratory data analysis, and it is displayed in the image below (Czernicki, 2010).

The image below shows the scatter plots for the correlations.

We have successfully identified the correlation values for each attribute.
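As a rough illustration of what a correlation value between two attributes represents, the sketch below computes the Pearson correlation coefficient in plain Python. This assumes Pearson correlation is the measure of interest; the report does not state which measure RapidMiner was configured to use. The `pearson` and `correlation_matrix` helpers and the sample readings are hypothetical.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length, at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant attribute")
    return cov / math.sqrt(var_x * var_y)


def correlation_matrix(columns):
    """Pairwise Pearson correlations for a dict of column name -> values."""
    names = list(columns)
    return {a: {b: pearson(columns[a], columns[b]) for b in names} for a in names}


# Hypothetical readings, for illustration only.
columns = {
    "MinTemp": [6.8, 7.4, 12.9, 9.2, 17.5],
    "MaxTemp": [18.6, 25.1, 25.7, 28.0, 32.3],
    "Humidity3pm": [60.0, 25.0, 30.0, 16.0, 33.0],
}
matrix = correlation_matrix(columns)
for name, row in matrix.items():
    print(name, {other: round(r, 3) for other, r in row.items()})
```

Values close to 1 or -1 indicate a strong linear relationship between two attributes, while values near 0 indicate little linear relationship. Rows with missing values would need to be dropped or imputed before calling `pearson`.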

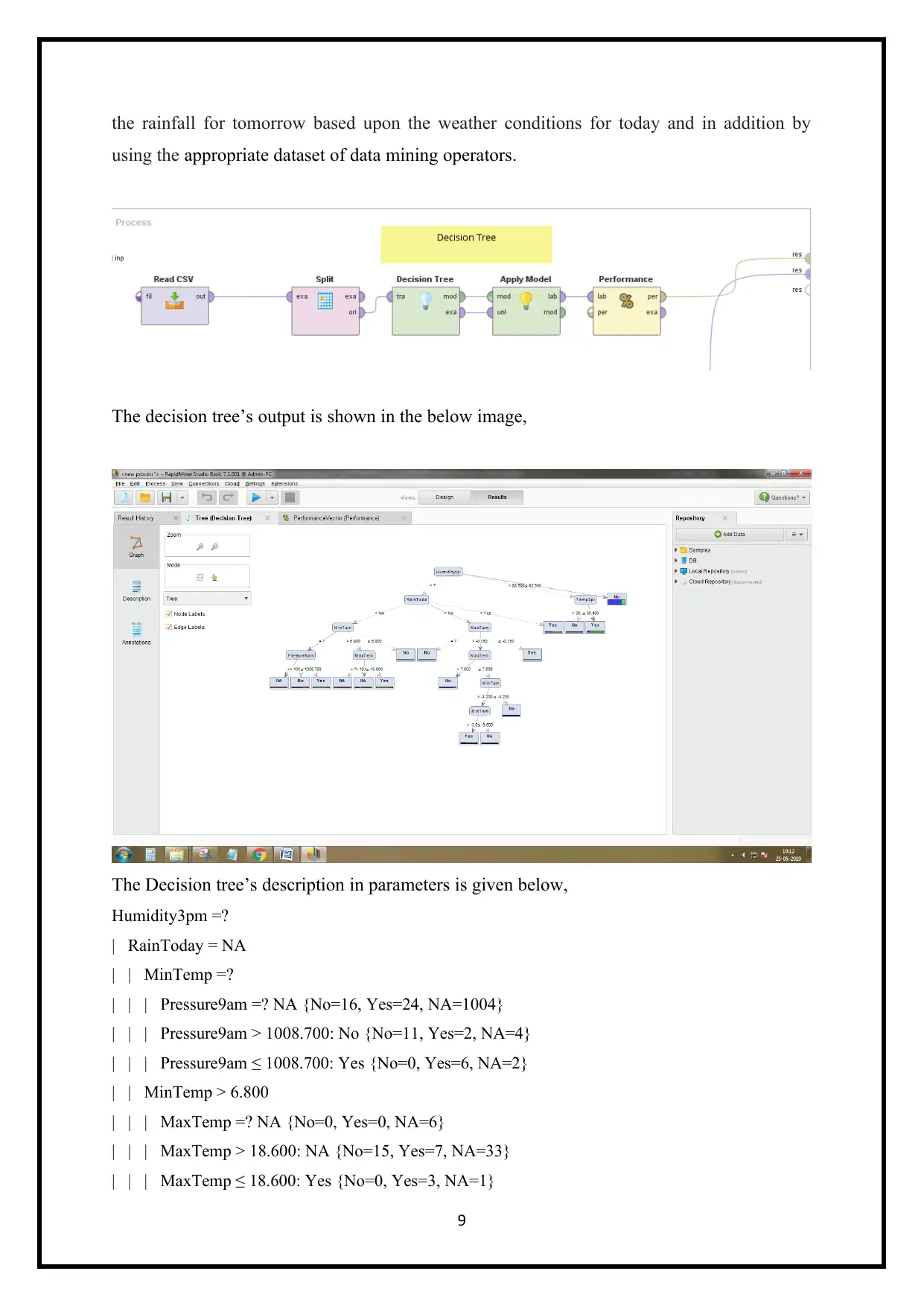

2.2 Decision Tree Model

We now prepare a Decision Tree, “a decision support tool that uses a tree-like model of decisions and their possible consequences, including chance event outcomes, resource costs, and utility”, using RapidMiner. The Decision Tree is applied to the given data set, with the appropriate data mining operators, to predict tomorrow's rainfall from today's weather conditions.

The decision tree’s output is shown in the image below.

The textual description of the decision tree is given below.

Humidity3pm = ?

| RainToday = NA

| | MinTemp = ?

| | | Pressure9am = ?: NA {No=16, Yes=24, NA=1004}

| | | Pressure9am > 1008.700: No {No=11, Yes=2, NA=4}

| | | Pressure9am ≤ 1008.700: Yes {No=0, Yes=6, NA=2}

| | MinTemp > 6.800

| | | MaxTemp = ?: NA {No=0, Yes=0, NA=6}

| | | MaxTemp > 18.600: NA {No=15, Yes=7, NA=33}

| | | MaxTemp ≤ 18.600: Yes {No=0, Yes=3, NA=1}
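Each leaf in the description above carries a predicted label followed by the class counts of the training examples that reached it. The following Python sketch reads a few of those leaf lines and reports, for each, the majority class and its share of the examples. The `parse_leaf` and `leaf_summary` helpers are hypothetical illustrations and are not part of RapidMiner.

```python
import re

# A leaf line ends with "<label> {<class>=<count>, ...}".
LEAF = re.compile(r"(?P<label>\w+)\s*\{(?P<counts>[^}]*)\}\s*$")


def parse_leaf(line):
    """Return (label, counts) for a leaf line, or None for an inner node."""
    match = LEAF.search(line)
    if match is None:
        return None
    counts = {}
    for pair in match.group("counts").split(","):
        name, value = pair.split("=")
        counts[name.strip()] = int(value)
    return match.group("label"), counts


def leaf_summary(line):
    """Majority class of a leaf and the share of examples it covers."""
    parsed = parse_leaf(line)
    if parsed is None:
        raise ValueError("not a leaf line: " + line)
    label, counts = parsed
    majority = max(counts, key=counts.get)
    share = counts[majority] / sum(counts.values())
    return {"label": label, "majority": majority, "share": share}


# Leaf lines copied from the tree description above.
lines = [
    "| | | Pressure9am > 1008.700: No {No=11, Yes=2, NA=4}",
    "| | | Pressure9am ≤ 1008.700: Yes {No=0, Yes=6, NA=2}",
    "| | | MaxTemp > 18.600: NA {No=15, Yes=7, NA=33}",
    "| | | MaxTemp ≤ 18.600: Yes {No=0, Yes=3, NA=1}",
]
for line in lines:
    print(leaf_summary(line))
```

In these four leaves the predicted label equals the majority class, which is the usual rule for labelling a leaf. The leaf labelled NA suggests that examples with a missing rainfall label were kept as a third class rather than filtered out, so it may be worth checking how NA was handled during data preparation.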
