BTEC Level 5 HND Computing - Cloud Computing Solutions Report


This report analyzes the fundamentals of cloud computing, its evolution, and various architectures, including client-server, peer-to-peer (P2P), and high-performance computing (HPC). It delves into the benefits and drawbacks of each architecture, emphasizing the importance of scalability and elasticity. The report presents a scenario for ATN, a Vietnamese toy company, and proposes a cloud computing solution to address their data management challenges. It covers architectural design, deployment models, service models, and technological choices, justifying the appropriateness of the chosen solution. The report aims to provide a comprehensive understanding of cloud computing concepts and their practical applications, using academic references and real-world examples to support its arguments. It also includes a discussion on why an organization should migrate to a cloud computing solution, offering insights into the tools and technologies involved in realizing a cloud computing framework.

ASSIGNMENT 1 BRIEF

Qualification BTEC Level 5 HND Diploma in Computing

Unit number Unit 16: Cloud computing

Assignment title Cloud Computing Solutions

Academic Year 2021 – 2022

Unit Tutor Ho Hai Van

Issue date Submission date

IV name and date

Submission Format:

Format: The submission is in the form of one document.

You must use Calibri font size 12, number the pages, and use multiple line spacing at 1.3. Margins must be: left 1.25 cm; right 1 cm; top 1 cm; bottom 1 cm. References must follow the Harvard referencing system.

Submission: Students must submit the assignment by the due date and in the way requested by the tutors. The submission will be a soft copy posted on http://cms.greenwich.edu.vn/

Note: The assignment must be your own work, not copied by or from another student or from books etc. If you use ideas, quotes or data (such as diagrams) from books, journals or other sources, you must reference your sources using the Harvard style. Make sure that you know how to reference properly and that you understand the guidelines on plagiarism. If you do not, you will fail.

Unit Learning Outcomes:

LO1 Demonstrate an understanding of the fundamentals of Cloud Computing and its architectures.

LO2 Evaluate the deployment models, service models and technological drivers of Cloud Computing and validate their use.

Assignment Brief and Guidance:

Scenario

ATN is a Vietnamese company that sells toys to teenagers in many provinces across Vietnam. The company has revenue of over 700,000 dollars per year. Currently, each shop has its own database that stores transactions for that shop only. Each shop has to send its sales data to the board of directors monthly, and the board needs a lot of time to summarize the data collected from all the shops. Besides that, the board cannot see stock information updated in real time.

The table of contents in your technical report should be as follows:

1. As a developer, explain to the board director the fundamentals of cloud computing and why it is so popular nowadays (about 2500 words).

2. Proposed solution (higher-level solution description, around 700 words) and an explanation of the appropriateness of the solution for the scenario (about 400 words with images and diagrams), which might include:

a. Architectural design (architectural diagram and description).

b. Detailed design:

i. Deployment model (discussion on why that model was chosen).

ii. Service model (discussion on why that model was chosen).

iii. Programming language/web server/database server chosen.

3. Summary.

General guidelines:

Do not only provide definitions; also provide examples.

Provide more of your own arguments instead of definitions.

Make use of academic references instead of web tutorials.

For a cloud architecture example, look at the bottom of this document.


Learning Outcomes and Assessment Criteria

| Learning outcome | Pass | Merit | Distinction |
|---|---|---|---|
| LO1 Demonstrate an understanding of the fundamentals of Cloud Computing and its architectures | P1 Analyse the evolution and fundamental concepts of Cloud Computing. P2 Design an appropriate architectural Cloud Computing framework for a given scenario. | M1 Discuss why an organisation should migrate to a Cloud Computing solution. | LO1 & 2: D1 Justify the tools chosen to realize a Cloud Computing solution. |
| LO2 Evaluate the deployment models, service models and technological drivers of Cloud Computing and validate their use | P3 Define an appropriate deployment model for a given scenario. P4 Compare the service models for choosing an adequate model for a given scenario. | M2 Demonstrate these deployment models with real world examples. | (D1 covers LO1 & 2) |

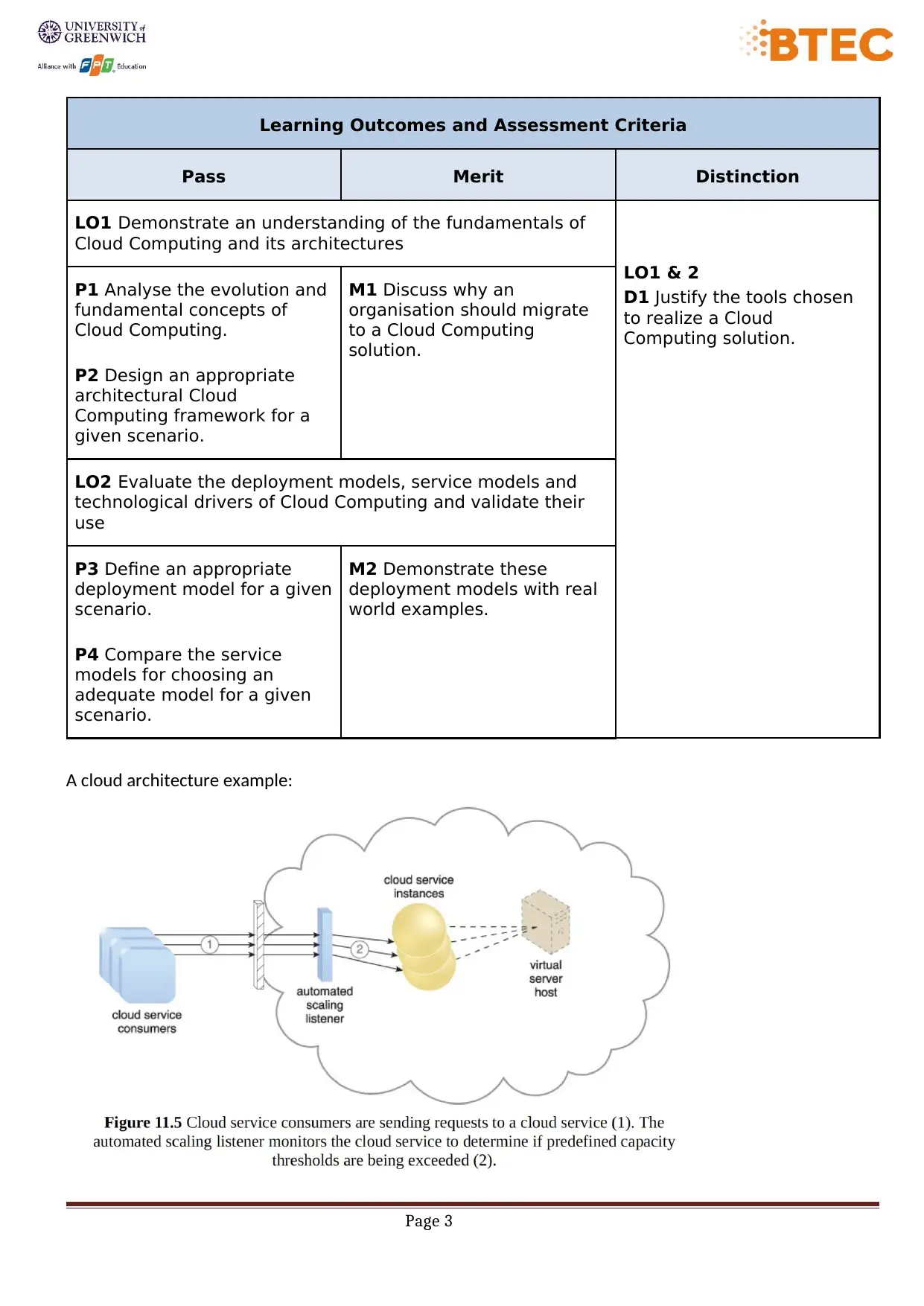

A cloud architecture example:


The dynamic scalability architecture can be applied to a range of IT resources, including virtual servers and cloud storage devices. Besides the core automated scaling listener and resource replication mechanisms, the following mechanisms can also be used in this form of cloud architecture:

• Cloud Usage Monitor – Specialized cloud usage monitors can track runtime usage in response to dynamic fluctuations caused by this architecture.

• Hypervisor – The hypervisor is invoked by a dynamic scalability system to create or remove virtual server instances, or to be scaled itself.

• Pay-Per-Use Monitor – The pay-per-use monitor is engaged to collect usage cost information in response to the scaling of IT resources.
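To make the interplay of these mechanisms more concrete, here is a minimal Python sketch of an automated scaling listener. Everything in it is hypothetical: the class name `AutomatedScalingListener`, the assumed capacity of 100 requests per second per virtual server, the instance limits and the 0.05 hourly price are illustrative assumptions, and the "hypervisor" is only a counter rather than a real provider API.

```python
from dataclasses import dataclass, field


@dataclass
class AutomatedScalingListener:
    """Hypothetical listener that watches load and scales virtual servers."""
    capacity_per_instance: int = 100      # assumed requests/second one virtual server handles
    min_instances: int = 1
    max_instances: int = 10
    price_per_instance_hour: float = 0.05  # assumed price used by the pay-per-use monitor
    instances: int = 1
    usage_log: list = field(default_factory=list)  # cloud usage monitor records (load, instances)

    def required_instances(self, load: int) -> int:
        # Ceiling division: just enough instances to cover the load, within the limits.
        needed = -(-load // self.capacity_per_instance)
        return max(self.min_instances, min(self.max_instances, needed))

    def on_load_sample(self, load: int) -> int:
        target = self.required_instances(load)
        if target != self.instances:
            # Stand-in for asking the hypervisor to create or remove virtual server instances.
            self.instances = target
        # Cloud usage monitor: record runtime usage after each scaling decision.
        self.usage_log.append((load, self.instances))
        return self.instances

    def pay_per_use_cost(self) -> float:
        # Pay-per-use monitor: assume each load sample represents one hour of runtime.
        return sum(n for _, n in self.usage_log) * self.price_per_instance_hour


listener = AutomatedScalingListener()
for load in [50, 250, 900, 120]:
    listener.on_load_sample(load)
print(listener.usage_log)           # [(50, 1), (250, 3), (900, 9), (120, 2)]
print(listener.pay_per_use_cost())  # 0.75
```

The sketch only captures the threshold logic; real cloud platforms expose scaling through their own services, and the cost model there is considerably more detailed.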


Answer

P1 Analyse the evolution and fundamental concepts of Cloud Computing.

The concept of computing in the "cloud" can be traced back to the origins of utility computing, which computer scientist John McCarthy publicly proposed in 1961: "If computers of the kind I have advocated become the computers of the future, then computing may someday be organized as a public utility just as the telephone system is a public utility...." (Erl, Mahmood and Puttini, 2014)

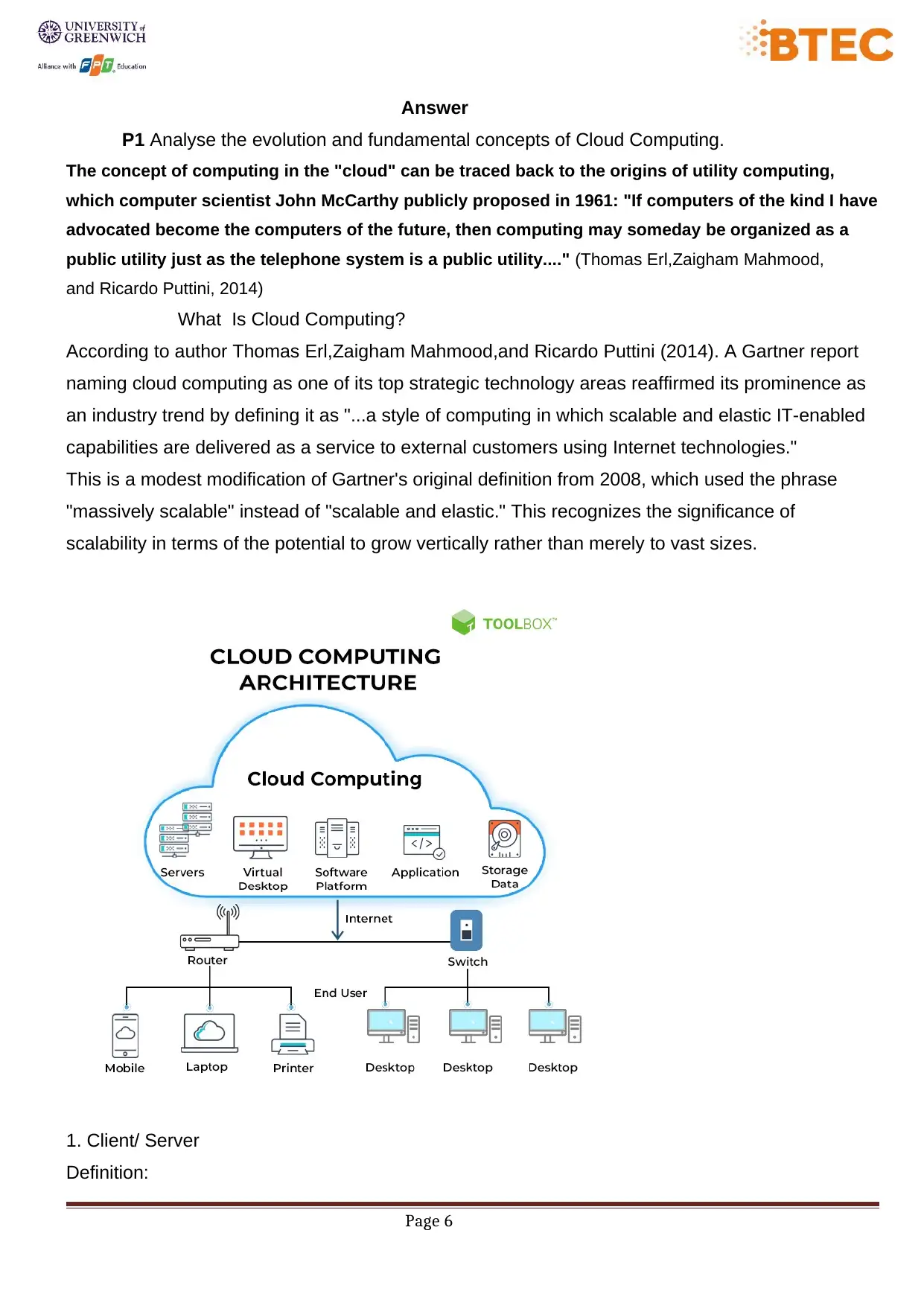

What Is Cloud Computing?

According to Erl, Mahmood and Puttini (2014), a Gartner report that named cloud computing as one of its top strategic technology areas reaffirmed its prominence as an industry trend by defining it as "...a style of computing in which scalable and elastic IT-enabled capabilities are delivered as a service to external customers using Internet technologies."

This is a modest modification of Gartner's original 2008 definition, which used the phrase "massively scalable" instead of "scalable and elastic." The change recognizes that scalability also means the ability to scale vertically, not merely to grow to vast sizes.

1. Client/Server

Definition:


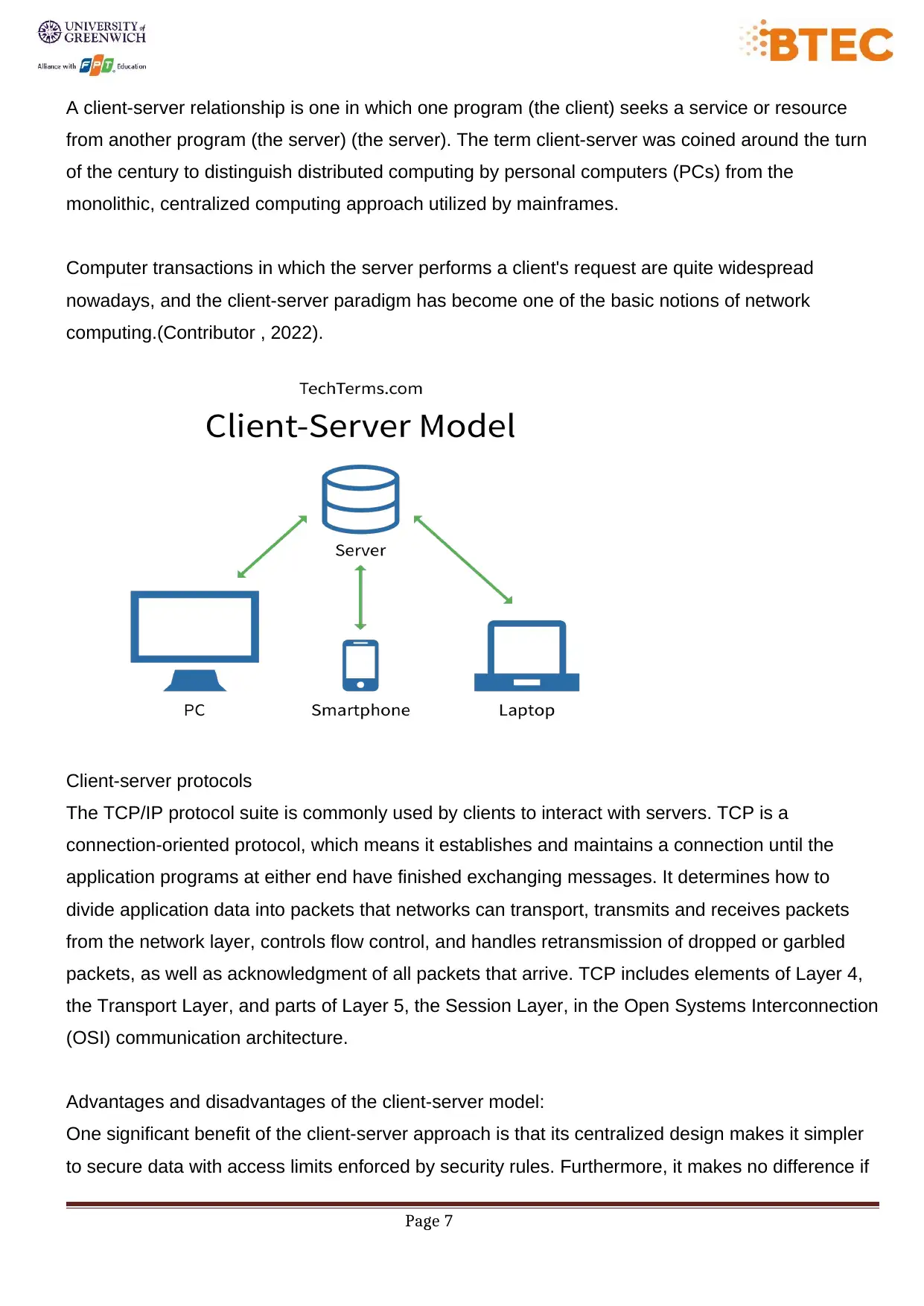

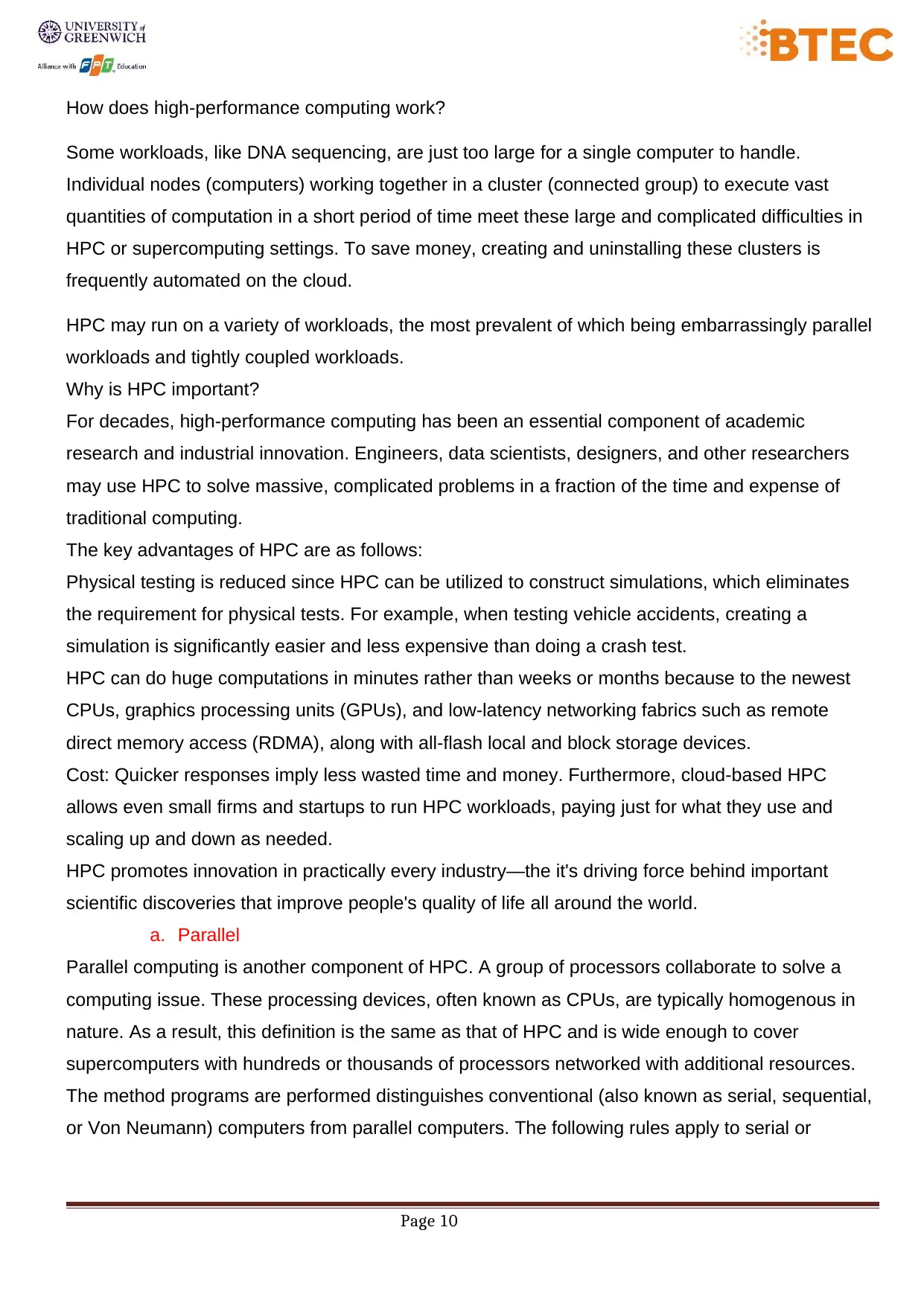

A client-server relationship is one in which one program (the client) requests a service or resource from another program (the server). The term client-server was coined to distinguish distributed computing by personal computers (PCs) from the monolithic, centralized computing model used by mainframes.

Transactions in which a server fulfils a client's request are now very common, and the client-server model has become one of the central ideas of network computing (Contributor, 2022).

Client-server protocols

Clients commonly use the TCP/IP protocol suite to communicate with servers. TCP is a connection-oriented protocol, which means it establishes and maintains a connection until the application programs at each end have finished exchanging messages. It determines how to divide application data into packets that networks can transport, sends packets to and receives them from the network layer, manages flow control, handles retransmission of dropped or garbled packets, and acknowledges all packets that arrive. In the Open Systems Interconnection (OSI) communication model, TCP covers parts of Layer 4, the Transport Layer, and parts of Layer 5, the Session Layer.
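The connection-oriented request-response exchange described above can be sketched with Python's standard `socket` module. This is a minimal illustration, assuming both client and server run on the local loopback interface; the function names and the `OK: ` reply format are invented for the example, and the operating system (not this code) performs TCP's handshaking, acknowledgement and retransmission.

```python
import socket
import threading


def run_server(server_sock: socket.socket) -> None:
    """Accept one client, read its whole request, send back a response, then close."""
    conn, _addr = server_sock.accept()      # TCP connection established by the OS
    with conn:
        chunks = []
        while True:
            data = conn.recv(1024)          # bytes arrive in order; lost segments are resent by TCP
            if not data:                    # client signalled the end of its request
                break
            chunks.append(data)
        conn.sendall(b"OK: " + b"".join(chunks))  # reply over the same connection


def request_response(message: bytes) -> bytes:
    """Client side: open a TCP connection, send a request, read the full reply."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server_sock:
        server_sock.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        server_sock.listen(1)
        port = server_sock.getsockname()[1]
        server = threading.Thread(target=run_server, args=(server_sock,))
        server.start()
        with socket.create_connection(("127.0.0.1", port)) as client:
            client.sendall(message)
            client.shutdown(socket.SHUT_WR)  # request complete; keep reading the reply
            reply = []
            while True:
                data = client.recv(1024)
                if not data:                 # server closed the connection
                    break
                reply.append(data)
        server.join()
    return b"".join(reply)


print(request_response(b"GET stock"))  # b'OK: GET stock'
```

Because the connection stays open until both sides have finished, the client can signal the end of its request with `shutdown` and still receive the server's reply on the same connection.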

Advantages and disadvantages of the client-server model:

One significant benefit of the client-server approach is that its centralized design makes it simpler to secure data with access limits enforced by security rules. Furthermore, it makes no difference whether the clients and the server run on the same operating system, because data is exchanged via platform-independent client-server protocols.

One significant downside of the client-server approach is that if too many clients request data from the server at the same time, the server may become overloaded. Too many requests can cause network congestion and may even result in a denial of service.
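One common way a server protects itself from this kind of overload is to cap its backlog and turn away excess requests rather than accepting everything. The sketch below is a hypothetical simulation using Python's `queue.Queue` with a fixed `maxsize`; the function name `submit_burst` and the capacity value are assumptions, and a real web server would typically answer the rejected requests with an error such as HTTP 503.

```python
import queue


def submit_burst(requests, capacity):
    """Simulate a burst of requests hitting a server with a bounded backlog."""
    inbox = queue.Queue(maxsize=capacity)  # hypothetical server backlog size
    accepted, rejected = [], []
    for request in requests:
        try:
            inbox.put_nowait(request)      # accepted into the backlog
            accepted.append(request)
        except queue.Full:
            rejected.append(request)       # e.g. answered with "503 Service Unavailable"
    return accepted, rejected


accepted, rejected = submit_burst(range(10), capacity=4)
print(len(accepted), len(rejected))  # 4 6
```

Rejecting early keeps the requests already accepted responsive, but the rejected clients still experience a denial of service, which is why this weakness motivates the scalable designs discussed later.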

Example

When a bank customer uses a web browser to access online banking services, the client sends a request to the bank's web server. The customer's login credentials may be kept in a database, in which case the web server acts as a client to the database server. An application server interprets the returned data using the bank's business logic and sends the result back to the web server. Finally, the web server returns the result to the client's web browser.

At each stage in this sequence of client-server message exchanges, one computer executes a request and returns data. This is the request-response communication pattern. When all of the requests have been fulfilled, the sequence is complete and the web browser displays the data to the customer.
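The chain of requests in the banking example can be sketched as a set of plain Python functions, one per tier, where each tier is a server to the tier before it and a client of the tier after it. This is a hypothetical in-memory model: the function names, the user `alice` and her balance are illustrative, and a real bank would use separate machines, network calls and salted password hashes instead of plain-text passwords.

```python
from typing import Optional

# Hypothetical stand-in for the bank's database (real systems store salted password hashes).
USERS_DB = {"alice": {"password": "s3cret", "balance": 1200.0}}


def database_server(query: dict) -> Optional[dict]:
    """Database tier: answers the web server, which acts as its client."""
    return USERS_DB.get(query["username"])


def application_server(record: Optional[dict], password: str) -> dict:
    """Application tier: applies the (hypothetical) business logic to the raw data."""
    if record is None or record["password"] != password:
        return {"status": 401, "body": "Login failed"}
    return {"status": 200, "body": f"Balance: {record['balance']:.2f}"}


def web_server(request: dict) -> dict:
    """Web tier: a server to the browser, but a client of the other tiers."""
    record = database_server({"username": request["username"]})
    return application_server(record, request["password"])


def browser(username: str, password: str) -> str:
    """Client tier: sends the request and displays whatever comes back."""
    response = web_server({"username": username, "password": password})
    return response["body"]


print(browser("alice", "s3cret"))  # Balance: 1200.00
print(browser("alice", "wrong"))   # Login failed
```

Each function call stands for one request-response exchange; the sequence is complete only when the innermost call has returned and the browser has its result.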

2. P2P

P2P computing or networking is a distributed application architecture that divides tasks or workloads among peers. Peers are network participants that are equally privileged and equally capable, and together they form a network of nodes.

Peers make a portion of their resources, such as processing power, disk storage, or network bandwidth, directly available to other network members, without the need for central coordination by servers or stable hosts. In contrast to the classic client-server model, where the consumption and provision of resources are split, peers are both suppliers and consumers of resources (Bandara, 2004).
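The dual role of a peer can be sketched in a few lines of Python: each hypothetical `Peer` object serves the file chunks it holds and downloads missing chunks from its neighbours, with no central server. The class, its methods and the chunked "hello" file are assumptions for illustration; real P2P systems add peer discovery, integrity checks and network transport.

```python
class Peer:
    """Hypothetical peer that both supplies and consumes file chunks."""

    def __init__(self, name, chunks=None):
        self.name = name
        self.chunks = dict(chunks or {})  # chunk index -> data this peer can share
        self.neighbours = []

    def connect(self, other):
        # Links are symmetric: neither side is a dedicated server.
        self.neighbours.append(other)
        other.neighbours.append(self)

    def serve(self, index):
        # Supplier role: hand out a chunk if this peer has it.
        return self.chunks.get(index)

    def download(self, total_chunks):
        # Consumer role: ask neighbours for every chunk still missing.
        for i in range(total_chunks):
            if i in self.chunks:
                continue
            for peer in self.neighbours:
                data = peer.serve(i)
                if data is not None:
                    self.chunks[i] = data
                    break
        return "".join(self.chunks[i] for i in range(total_chunks) if i in self.chunks)


a, b, c = Peer("A", {0: "he"}), Peer("B", {1: "ll"}), Peer("C", {2: "o"})
a.connect(b)
b.connect(c)
print(a.download(3))  # hell  (C, which holds chunk 2, is not A's neighbour)
print(b.download(3))  # hello (B reaches both A and C)
print(a.download(3))  # hello (B can now supply chunk 2 as well)
```

The last call shows the point made in the text: once B has downloaded the file, it becomes an additional supplier, so capacity grows with the number of peers.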


Advantage

The following are some of the benefits of peer-to-peer computing. Each computer in a peer-to-peer network administers itself, so the network is simple to set up and manage. In a client-server network, the server processes all client requests; peer-to-peer computing needs no such dedicated server, so the cost of a server is avoided.

A peer-to-peer network is also simple to grow: adding peers only increases the system's data-sharing capacity. None of the nodes in a peer-to-peer network relies on the others in order to function.

Disadvantage

The following are some drawbacks of peer-to-peer computing. Data backup is challenging because data is stored across several computer systems and there is no central server. It is also difficult to enforce network-wide security in a peer-to-peer network because each system is self-contained.

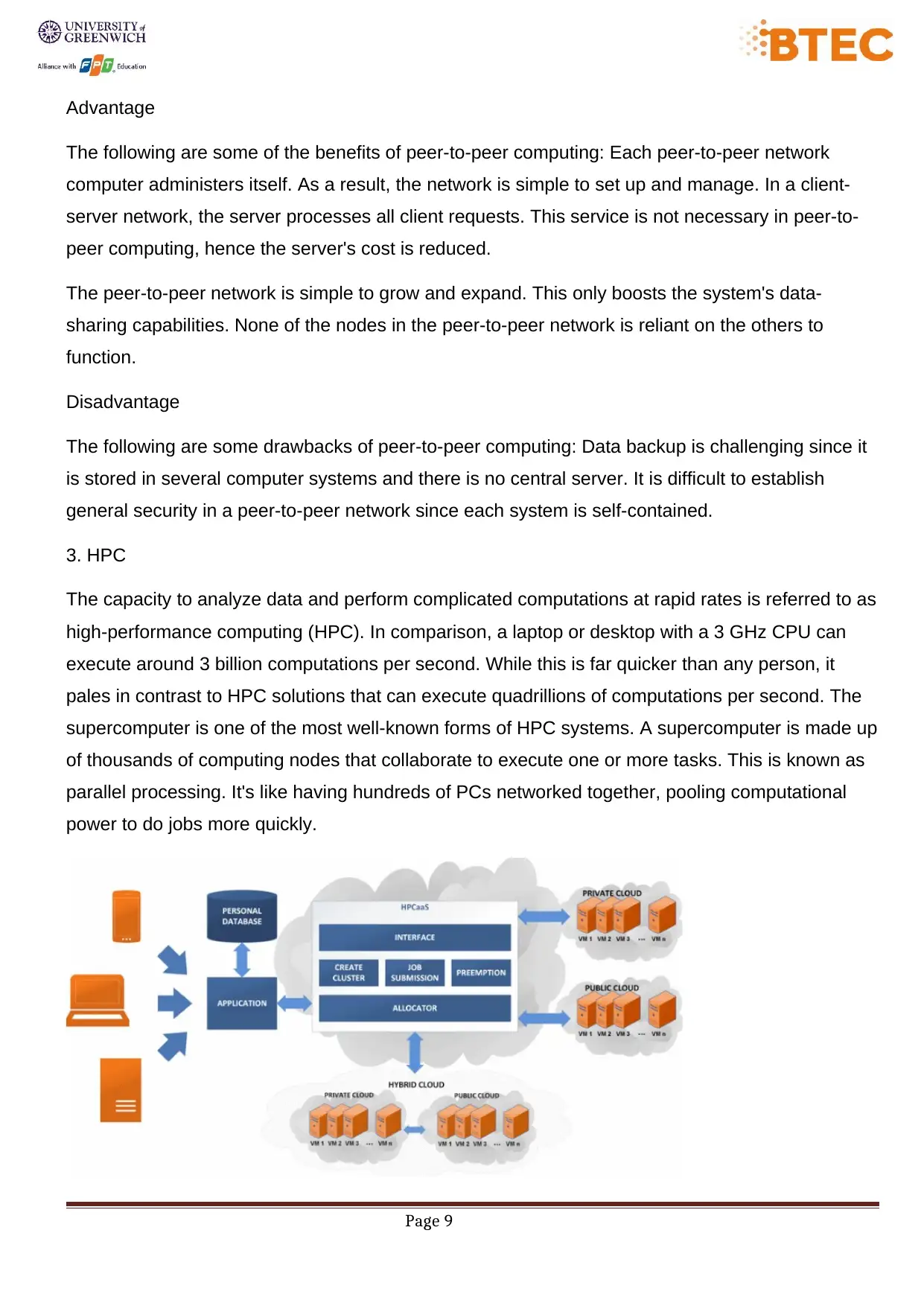

3. HPC

High-performance computing (HPC) is the capacity to process data and perform complex calculations at high speed. For comparison, a laptop or desktop with a 3 GHz CPU can perform roughly 3 billion calculations per second. While this is far quicker than any person, it pales in comparison with HPC solutions that can perform quadrillions of calculations per second. The supercomputer is one of the best-known forms of HPC system. A supercomputer is made up of thousands of compute nodes that collaborate to execute one or more tasks; this is known as parallel processing. It is comparable to networking many PCs together and pooling their computational power to complete jobs more quickly.
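A quick worked calculation shows the size of that gap. The sketch assumes a hypothetical job of 10^18 operations, treats the 3 GHz desktop as completing one operation per clock cycle (a simplification), takes one quadrillion (10^15) operations per second for the HPC system, and ignores communication and I/O overheads.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def seconds_to_finish(operations: float, ops_per_second: float) -> float:
    """Idealised run time, ignoring communication and I/O overheads."""
    return operations / ops_per_second


WORKLOAD = 1e18     # hypothetical job size: 10^18 operations
DESKTOP = 3e9       # ~3 billion operations per second (3 GHz, one op per cycle assumed)
HPC_SYSTEM = 1e15   # one quadrillion operations per second (assumed)

desktop_years = seconds_to_finish(WORKLOAD, DESKTOP) / SECONDS_PER_YEAR
hpc_minutes = seconds_to_finish(WORKLOAD, HPC_SYSTEM) / 60

print(f"Desktop: about {desktop_years:.1f} years")     # Desktop: about 10.6 years
print(f"HPC system: about {hpc_minutes:.1f} minutes")  # HPC system: about 16.7 minutes
```

Under these assumptions, a job that would occupy a single desktop for roughly a decade finishes in well under half an hour on the HPC system.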


How does high-performance computing work?

Some workloads, such as DNA sequencing, are simply too large for a single computer to handle. In HPC or supercomputing environments, these large and complex problems are tackled by individual nodes (computers) working together in a cluster (a connected group) to execute vast amounts of computation in a short period of time. To save money, creating and tearing down these clusters is frequently automated in the cloud.

HPC can run a variety of workloads, the most common of which are embarrassingly parallel workloads and tightly coupled workloads. Embarrassingly parallel workloads split into independent tasks that need little or no communication with one another, whereas in tightly coupled workloads the nodes must exchange data continually while they compute.
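An embarrassingly parallel workload can be sketched with Python's standard `concurrent.futures` module: a list of independent inputs is mapped over a pool of workers, and no task needs data from any other. The GC-content calculation and the short sample sequences are hypothetical illustrations. Threads stand in for HPC nodes here; in CPython they will not speed up CPU-bound work, so a real job would use processes or many machines, but the structure of the work is the same.

```python
from concurrent.futures import ThreadPoolExecutor


def gc_content(sequence: str) -> float:
    """Fraction of G and C bases; each call is independent of every other call."""
    if not sequence:
        return 0.0
    return sum(base in "GC" for base in sequence.upper()) / len(sequence)


def analyse_all(sequences, workers=4):
    # Embarrassingly parallel: tasks are farmed out with no communication between them.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(gc_content, sequences))  # results keep the input order


print(analyse_all(["GGCC", "ATAT", "GATC"]))  # [1.0, 0.0, 0.5]
```

A tightly coupled workload could not be written this way, because its tasks would have to exchange intermediate results while running.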

Why is HPC important?

For decades, high-performance computing has been an essential component of academic research and industrial innovation. Engineers, data scientists, designers, and other researchers can use HPC to solve massive, complex problems in a fraction of the time and at a fraction of the cost of traditional computing.

The key advantages of HPC are as follows:

Reduced physical testing: HPC can be used to build simulations, which reduces the need for physical tests. For example, when testing vehicle collisions, running a simulation is significantly easier and less expensive than performing a crash test.

Speed: Thanks to the newest CPUs, graphics processing units (GPUs), and low-latency networking fabrics such as remote direct memory access (RDMA), along with all-flash local and block storage devices, HPC can perform huge computations in minutes rather than weeks or months.

Cost: Quicker results mean less wasted time and money. Furthermore, cloud-based HPC allows even small firms and startups to run HPC workloads, paying only for what they use and scaling up and down as needed.

Innovation: HPC drives innovation in practically every industry; it is the driving force behind important scientific discoveries that improve people's quality of life around the world.

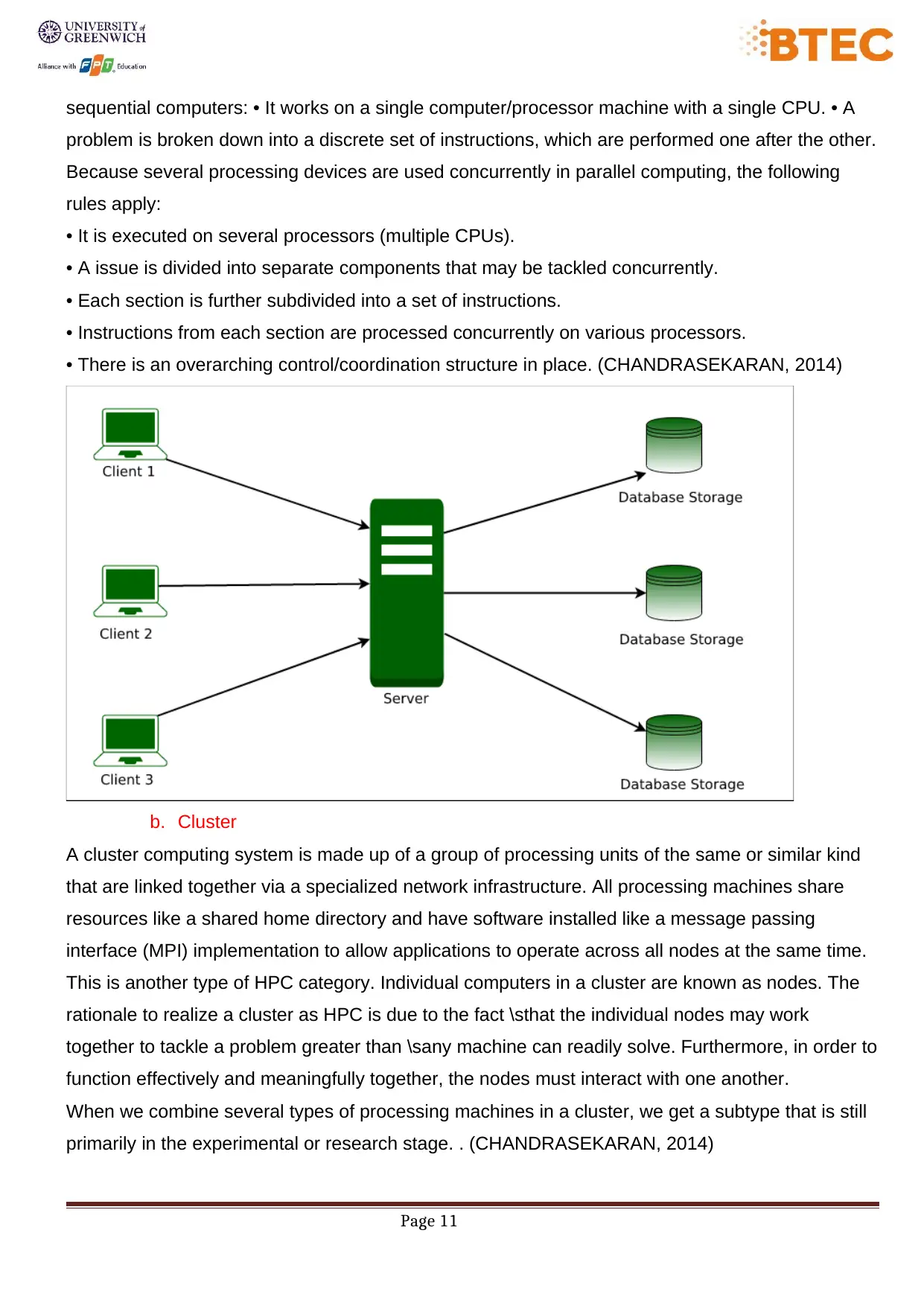

a. Parallel

Parallel computing is another component of HPC: a group of processors collaborate to solve a computing problem. These processing units, usually CPUs, are typically homogeneous. This definition therefore overlaps with that of HPC and is broad enough to cover supercomputers with hundreds or thousands of processors networked with additional resources.

The way programs are executed distinguishes conventional (also known as serial, sequential, or von Neumann) computers from parallel computers. The following rules apply to serial or sequential computers:

• The program runs on a single computer with a single CPU.

• A problem is broken down into a discrete series of instructions, which are executed one after the other.

Because several processing units are used concurrently in parallel computing, the following rules apply:

• The program is executed on several processors (multiple CPUs).

• A problem is divided into separate parts that can be tackled concurrently.

• Each part is further subdivided into a series of instructions.

• Instructions from each part are executed concurrently on different processors.

• An overarching control/coordination mechanism is in place. (Chandrasekaran, 2014)
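The parallel rules above (divide the problem, run the parts concurrently, then coordinate the results) can be sketched in Python for a simple sum of squares. This is a minimal illustration with hypothetical function names; threads stand in for the multiple processors, so it shows the decomposition and coordination steps rather than a real speed-up in CPython.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, parts):
    """Divide the problem into roughly equal, independent parts."""
    if not data:
        return []
    size = -(-len(data) // parts)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def partial_sum_of_squares(chunk):
    # Each part is itself a series of instructions executed on one worker.
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, workers=4):
    chunks = split(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))  # parts run concurrently
    # Coordination step: combine the partial results into the final answer.
    return sum(partials)


numbers = list(range(1, 101))
print(parallel_sum_of_squares(numbers))  # 338350
```

The serial version would be a single `sum(x * x for x in numbers)`; the parallel version gives the same answer but adds an explicit split step and a final combining step, which corresponds to the overarching coordination mechanism.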

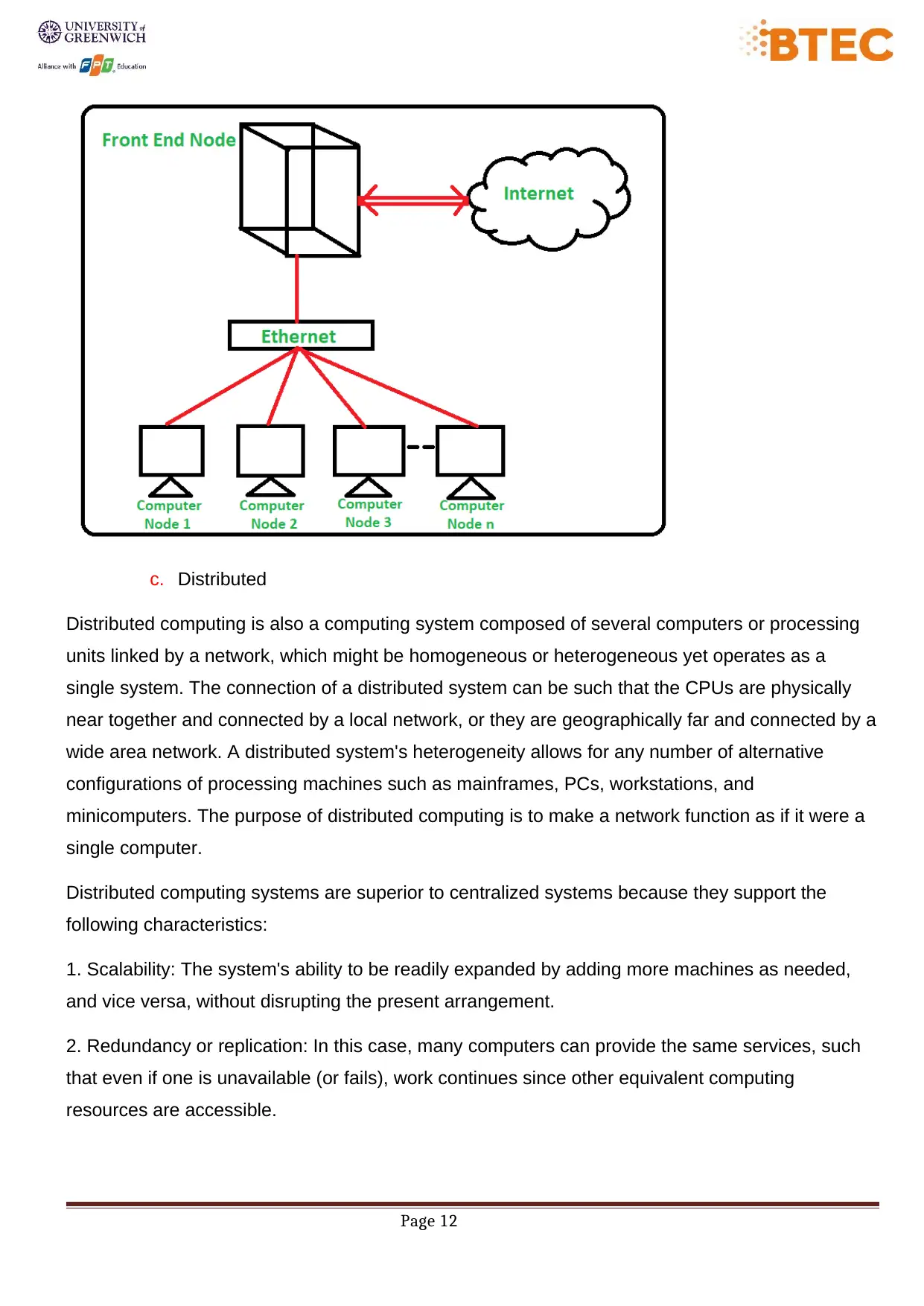

b. Cluster

A cluster computing system consists of a group of processing units of the same or similar kind that are linked together via a specialized network infrastructure. All the machines share resources such as a common home directory and have software installed, such as a Message Passing Interface (MPI) implementation, that allows applications to run across all nodes at the same time. This is another category of HPC. The individual computers in a cluster are known as nodes. A cluster qualifies as HPC because the individual nodes can work together to tackle a problem larger than any single machine can readily solve. Furthermore, to function effectively together, the nodes must communicate with one another.

When different types of processing machines are combined in a cluster, the result is a subtype that is still primarily at the experimental or research stage (Chandrasekaran, 2014).
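Real clusters usually exchange messages through an MPI library running on separate machines. As a dependency-free stand-in, the sketch below imitates MPI's scatter/compute/gather pattern with Python threads and `queue.Queue` objects playing the roles of nodes and network links. It is an analogy rather than MPI itself, and the function names `worker` and `run_cluster` are hypothetical.

```python
import queue
import threading


def worker(rank, inbox, outbox):
    """A simulated cluster node: receive a message, compute, send the result back."""
    chunk = inbox.get()              # like a blocking receive from the root node
    outbox.put((rank, sum(chunk)))   # like a send back to the root node


def run_cluster(data, nodes=3):
    inboxes = [queue.Queue() for _ in range(nodes)]
    results = queue.Queue()
    threads = [threading.Thread(target=worker, args=(r, inboxes[r], results))
               for r in range(nodes)]
    for t in threads:
        t.start()
    # Root "scatters" one slice of the data to each node.
    for r in range(nodes):
        inboxes[r].put(data[r::nodes])
    for t in threads:
        t.join()
    # Root "gathers" the partial results, ordered by node rank.
    partials = sorted(results.get() for _ in range(nodes))
    return sum(value for _, value in partials), partials


total, partials = run_cluster(list(range(10)), nodes=3)
print(partials)  # [(0, 18), (1, 12), (2, 15)]
print(total)     # 45
```

The explicit receive and send calls mirror why cluster nodes must communicate: no node sees the whole data set, so the answer only exists once the root has gathered every partial result.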


c. Distributed

Distributed computing is also a computing system composed of several computers or processing units linked by a network; the units may be homogeneous or heterogeneous, yet the system operates as a single whole. The machines in a distributed system may be physically close together and connected by a local area network, or geographically distant and connected by a wide area network. Because a distributed system can be heterogeneous, it allows any number of configurations of processing machines, such as mainframes, PCs, workstations, and minicomputers. The purpose of distributed computing is to make a network function as if it were a single computer.

Distributed computing systems have advantages over centralized systems because they support the following characteristics:

1. Scalability: the system can readily be expanded by adding more machines as needed, or reduced by removing them, without disrupting the existing arrangement.

2. Redundancy or replication: several computers can provide the same services, so that even if one is unavailable (or fails), work continues because other equivalent computing resources are accessible.
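The redundancy characteristic can be sketched as a client that reads from a list of replicas and fails over to the next copy when one is unreachable. The `Replica` class, the replica names and the stock figures are hypothetical illustrations loosely modelled on ATN's stock data; a real system would also need to keep the replicas synchronized.

```python
class Replica:
    """Hypothetical replica holding a copy of the same stock data."""

    def __init__(self, name, stock, available=True):
        self.name = name
        self.stock = stock
        self.available = available

    def get_stock(self, item):
        if not self.available:
            raise ConnectionError(f"{self.name} is down")
        return self.stock[item]


def read_with_failover(replicas, item):
    """Try each replica in turn; work continues while any copy is reachable."""
    errors = []
    for replica in replicas:
        try:
            return replica.name, replica.get_stock(item)
        except ConnectionError as exc:
            errors.append(str(exc))
    raise RuntimeError("all replicas unavailable: " + "; ".join(errors))


stock = {"robot": 42}
replicas = [Replica("hanoi-replica", stock, available=False), Replica("hcmc-replica", stock)]
print(read_with_failover(replicas, "robot"))  # ('hcmc-replica', 42)
```

Only when every replica is unreachable does the request fail, which is the behaviour the redundancy property describes.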
