Dissertation: Watermarking Techniques for Cloud Data Security Analysis

Added on 2023/01/19


This dissertation delves into the application and effectiveness of watermarking techniques in cloud computing to enhance data security. It begins with an introduction to cloud computing, outlining its framework, benefits, and the significance of data security. The literature review explores cloud computing frameworks, watermarking techniques and their advantages for businesses, and the security and privacy issues associated with cloud computing. The research methodology section details the approach used to investigate the effectiveness of watermarking. The results, synthesis, and findings chapters present an analysis of the research, discussing the benefits of watermarking, the effectiveness of various techniques, and the implications for cloud data security. The study concludes with recommendations for future research and practical applications of watermarking in cloud environments, emphasizing the importance of protecting data in the cloud. The study aims to contribute to the understanding of cloud computing security.

DISSERTATION

Application and Effectiveness of Watermarking Techniques in Cloud Computing to Enhance Cloud Data Security


TABLE OF CONTENTS

CHAPTER 1: INTRODUCTION
Background
Aim and Objectives
Rationale
Significance
Research specification
Gantt Chart
CHAPTER 2: LITERATURE REVIEW
2.1 Cloud computing framework
2.2 Watermarking techniques and their benefits for business
2.3 Security and data privacy issues related to cloud computing
CHAPTER 3: RESEARCH METHODOLOGY
CHAPTER 4: RESULTS AND SYNTHESIS
2.1 Cloud computing framework
2.2 Watermarking techniques and their benefits for business
2.3 Security and data privacy issues related to cloud computing
FINDINGS AND RESULTS
CONCLUSION
RECOMMENDATIONS
REFERENCES


CHAPTER 1: INTRODUCTION

Background

Cloud computing refers to the delivery of computing services over the internet. These services include servers, storage, databases, networking, software, analytics and intelligence, and they support faster innovation and flexible resources that can be scaled up or down as requirements change within a short span of time. Cloud services are typically built around a pay-as-you-go model, in which users pay only for the services they actually use, so that usage can be measured exactly.
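The pay-as-you-go model described above can be illustrated with a short sketch: the consumption of each resource is metered and multiplied by a unit rate, so a user who consumes nothing pays nothing. The resource names, the `RATES` table and the `monthly_bill` function are hypothetical assumptions for illustration, not any provider's actual pricing or API.

```python
from dataclasses import dataclass

# Hypothetical unit prices for illustration only; not any provider's real tariff.
RATES = {
    "compute_hours": 0.05,     # price per virtual-machine hour
    "storage_gb_month": 0.02,  # price per GB stored for one month
    "egress_gb": 0.09,         # price per GB transferred out of the cloud
}


@dataclass
class UsageRecord:
    resource: str    # key into RATES
    quantity: float  # metered amount actually consumed


def monthly_bill(records: list[UsageRecord]) -> float:
    """Charge only for metered usage: the sum of quantity x unit rate."""
    total = sum(RATES[r.resource] * r.quantity for r in records)
    return round(total, 2)


usage = [
    UsageRecord("compute_hours", 120),
    UsageRecord("storage_gb_month", 50),
    UsageRecord("egress_gb", 10),
]
bill = monthly_bill(usage)
```

Here `bill` is 7.9 (6.00 + 1.00 + 0.90); idle resources simply do not appear in the usage records and so add nothing to the charge.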

Cloud computing is the combination of hardware and software used to provide services over the internet. It is used to store and access data over the internet from any device that has internet access, and storing data in the cloud is generally considered safe and secure (A SURVEY ON WATERMARKING METHODS FOR SECURITY OF CLOUD DATA, 2016). Well-known examples of cloud computing include Google's Gmail and Facebook, where users can access their data from anywhere by logging in. Cloud computing offers greater data storage capacity, improved performance, low maintenance, improved data security, and easy backup and recovery of data.

Watermarking offers a further way to store data and files more securely in the cloud, and it is an effective and comparatively simple technique for doing so. Data security is a major concern today because digital data can easily be copied, altered or transmitted, so applying watermarking to stored data helps to protect it. Digital watermarking protects digital data such as text, images, audio and video. The technique was developed from steganography (To Propose A Novel Technique for Watermarking In Cloud Computing, 2015). It involves embedding information, the digital watermark, into a file: the watermark is combined with the raw file, and the result is stored as a digitally watermarked file.

Digital watermarking has many advantages for improving data security in cloud computing. For example, it provides copyright protection: important copyright information can be embedded as a watermark into a product, and if a copyright dispute arises, the watermark can serve as evidence on the owner's behalf (A Cloud-Based Watermarking Method for Health Data and Image Security, 2014).
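The embedding step described above can be sketched with least-significant-bit (LSB) substitution, one of the simplest spatial-domain watermarking techniques. This is an illustrative substitute, not the specific method of the cited works; it assumes the cover file is a sequence of 8-bit samples (for example grayscale pixel values), and the function names `embed` and `extract` are hypothetical.

```python
def _to_bits(data: bytes) -> list[int]:
    # Expand each byte into 8 bits, most-significant bit first.
    return [(byte >> (7 - i)) & 1 for byte in data for i in range(8)]


def embed(cover: bytes, watermark: bytes) -> bytes:
    """Return a copy of `cover` with `watermark` hidden in the LSB of each sample."""
    bits = _to_bits(watermark)
    if len(bits) > len(cover):
        raise ValueError("cover is too small to hold the watermark")
    marked = bytearray(cover)
    for i, bit in enumerate(bits):
        # Clear the lowest bit of the sample, then set it to the watermark bit.
        marked[i] = (marked[i] & 0xFE) | bit
    return bytes(marked)


def extract(marked: bytes, length: int) -> bytes:
    """Recover a `length`-byte watermark from the LSBs of `marked`."""
    out = bytearray()
    for start in range(0, length * 8, 8):
        byte = 0
        for offset in range(8):
            byte = (byte << 1) | (marked[start + offset] & 1)
        out.append(byte)
    return bytes(out)
```

For example, `extract(embed(cover, b"OWNER1"), 6)` returns `b"OWNER1"` for any cover of at least 48 samples. Because each sample changes by at most one intensity level, the mark is imperceptible in an image; LSB marks are, however, fragile under recompression or cropping, which is why robust schemes usually embed in a transform domain instead.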


Watermarking also provides authentication. When an image or other content is stored and an attacker tries to tamper with it, the attempt is unsuccessful because the digital signature embedded in the content protects it: any modification of the data can be detected, which gives strong authentication. The watermarking technique also provides imperceptibility, which means invisibility: an image may contain a watermark that a person cannot see, so the image looks like the original and an unauthorised person cannot identify the watermark (An Efficient Approach for Security of Cloud Using Watermarking Technique, 2013). The technique is considered very secure from a data-security point of view because the watermark is kept private to its owner. Other users cannot identify another user's watermark and therefore cannot retrieve that user's files from the cloud.
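The tamper-detection idea behind the authentication property can be sketched as follows. The text refers to a digital signature; this sketch instead uses an HMAC-SHA256 tag from Python's standard library, which is a symmetric message authentication code rather than a public-key signature. The key and content values are hypothetical.

```python
import hashlib
import hmac


def make_tag(secret_key: bytes, content: bytes) -> bytes:
    # The tag depends on every byte of the content and on the owner's secret key.
    return hmac.new(secret_key, content, hashlib.sha256).digest()


def verify(secret_key: bytes, content: bytes, tag: bytes) -> bool:
    # compare_digest compares in constant time, avoiding timing side channels.
    return hmac.compare_digest(make_tag(secret_key, content), tag)


key = b"owner-secret-key"           # hypothetical key known only to the owner
original = b"patient-scan-0042"     # hypothetical stored content
tag = make_tag(key, original)
```

Changing even one byte of the content yields a different tag, so `verify` returns `False` for tampered data: modification is detected rather than physically prevented. A public-key signature scheme (for example RSA or ECDSA) would additionally let third parties check authenticity without knowing the secret key.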

Aim and Objectives

Aim

“To describe the application and effectiveness of watermarking techniques in cloud computing to enhance cloud data security”

Objectives

To understand the conceptual framework of cloud computing

To describe the concept of watermarking techniques and their benefits for business

To investigate issues related to cloud computing and data security

Problem statement:

This study evaluates the effectiveness of watermarking techniques in cloud computing and how they can be used to reduce the risks to data stored in the cloud. Watermarking on its own is not completely secure, and there is still a risk of data theft: an attacker may be able to recover or access the data, and a watermark can sometimes be altered through relatively simple modifications. Such weaknesses are a major problem for cloud computing. There have also been many reported cases of data being passed to third parties in return for money, and data stored in the cloud can be used by the hosting company itself, which is a threat to users.

Research questions:

How can the conceptual framework of cloud computing be understood?

What is the concept of watermarking techniques, and what are their benefits for business?

How can issues related to cloud computing and data security be evaluated?


Statement of Purpose:

'This study aims to describe the applications and effectiveness of watermarking techniques in cloud computing to enhance cloud data security.'

The study describes the applications and effectiveness of watermarking techniques on the basis of the existing literature, and uses this evidence to evaluate how watermarking supports the security and services of cloud computing. The findings are evaluated against the study's objectives, and the study sets out the effectiveness of watermarking, its benefits and its use in cloud computing.

Rationale

The main reason for selecting the topic of the application and effectiveness of watermarking techniques in cloud computing to enhance cloud data security is the researcher's interest in it. The researcher has studied computer science and considers this an essential and very broad topic, which is why it was selected for this research. In addition, the researcher has previously worked on elements of cloud computing and therefore has sound knowledge of the area, and is well aware of data security issues; these have therefore been taken into consideration in the present investigation (Kumar and Gupta, 2018).

Significance

This topic is important because information about the effectiveness of watermarking techniques will help companies gain control over data security issues. In addition, the investigation will benefit other scholars by providing study material that they can use in future research (Rhazlane et al., 2017). Businesses have limited resources and aim to lower their running costs; cloud computing supports this aim, and this research examines its benefits in that context. The study focuses on the security methods used to protect cloud data and on the fundamental ways of evaluating those methods. It examines various watermarking methods for cloud computing, in particular digital watermarking as a means of improving the security and safety of cloud data. The findings will help researchers who wish to evaluate the security services offered on cloud computing platforms, and the additional security provided by watermarking, in their further research. They can also be used by organisations that work with cloud data to improve its security, and the research will be especially beneficial for small businesses by raising their awareness of data security.

Research specification

The researcher will adopt an interpretivist philosophy to investigate the application and effectiveness of watermarking techniques in cloud computing to enhance cloud data security. All details related to watermarking will be gathered from secondary sources, and the data will be analysed using books, journals and other relevant articles on watermarking. In addition, the researcher will take care of ethical aspects and will conduct the entire investigation in accordance with ethical guidelines (Uma and Sumathi, 2017). The researcher will ensure that the details used in the investigation are used appropriately, and relevant theories will therefore be taken into consideration. An inductive approach will be followed to answer the research questions on the effectiveness of watermarking techniques for data security.

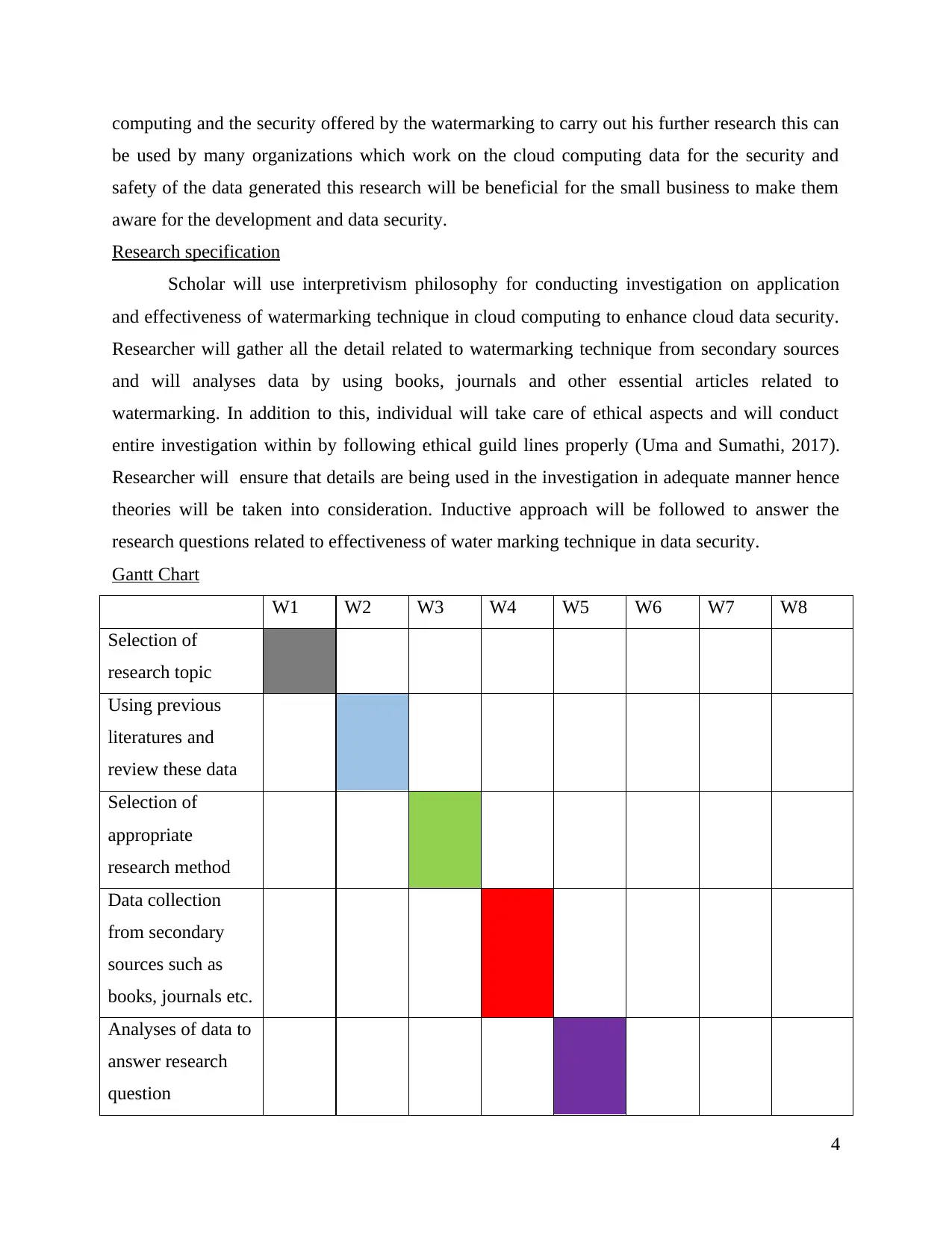

Gantt Chart

W1 W2 W3 W4 W5 W6 W7 W8

Selection of

research topic

Using previous

literatures and

review these data

Selection of

appropriate

research method

Data collection

from secondary

sources such as

books, journals etc.

Analyses of data to

answer research

question

4

be used by many organizations which work on the cloud computing data for the security and

safety of the data generated this research will be beneficial for the small business to make them

aware for the development and data security.

Research specification

Scholar will use interpretivism philosophy for conducting investigation on application

and effectiveness of watermarking technique in cloud computing to enhance cloud data security.

Researcher will gather all the detail related to watermarking technique from secondary sources

and will analyses data by using books, journals and other essential articles related to

watermarking. In addition to this, individual will take care of ethical aspects and will conduct

entire investigation within by following ethical guild lines properly (Uma and Sumathi, 2017).

Researcher will ensure that details are being used in the investigation in adequate manner hence

theories will be taken into consideration. Inductive approach will be followed to answer the

research questions related to effectiveness of water marking technique in data security.

Gantt Chart

W1 W2 W3 W4 W5 W6 W7 W8

Selection of

research topic

Using previous

literatures and

review these data

Selection of

appropriate

research method

Data collection

from secondary

sources such as

books, journals etc.

Analyses of data to

answer research

question

4

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

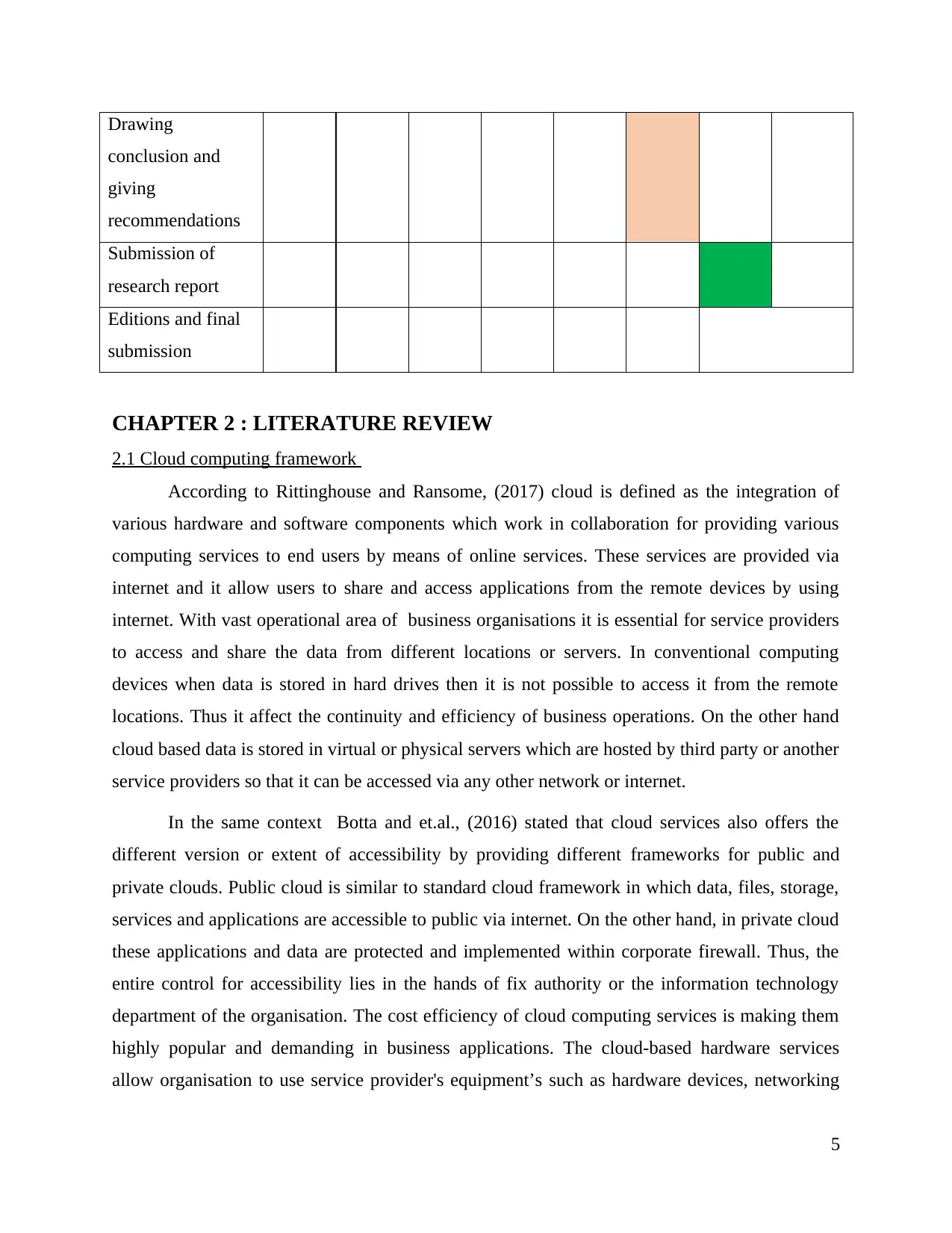

Drawing

conclusion and

giving

recommendations

Submission of

research report

Editions and final

submission

CHAPTER 2: LITERATURE REVIEW

2.1 Cloud computing framework

According to Rittinghouse and Ransome (2017), the cloud is an integration of hardware and software components that work together to provide computing services to end users online. These services are delivered over the internet and allow users to share and access applications from remote devices. Because business organisations operate across wide areas, service providers need to access and share data from different locations or servers. In conventional computing, data stored on a local hard drive cannot be accessed from remote locations, which affects the continuity and efficiency of business operations. Cloud-based data, on the other hand, is stored on virtual or physical servers hosted by a third-party service provider, so that it can be accessed over any network or the internet.

In the same context, Botta et al. (2016) stated that cloud services also offer different levels of accessibility by providing different frameworks for public and private clouds. A public cloud follows the standard cloud framework, in which data, files, storage, services and applications are accessible to the public over the internet. In a private cloud, by contrast, these applications and data are protected and deployed within the corporate firewall, so that entire control over access lies with a fixed authority or with the organisation's information technology department. The cost efficiency of cloud computing services is making them highly popular and in demand for business applications. Cloud-based hardware services allow an organisation to use the service provider's equipment, such as hardware devices, networking and storage components, and servers, which eliminates the need for large investments in equipment. Similarly, cloud software applications are hosted by the service provider and accessed over the network, so business organisations benefit substantially in terms of maintenance and deployment costs.
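The difference between public and private access described above can be sketched as a simple access decision. This is an illustration under stated assumptions: the corporate range `10.0.0.0/8`, the resource names and the `is_allowed` function are hypothetical, and real deployments enforce this boundary with firewalls and identity systems rather than a single function.

```python
import ipaddress

# Hypothetical internal address range standing in for "inside the corporate firewall".
CORPORATE_NETWORK = ipaddress.ip_network("10.0.0.0/8")

# Hypothetical resources and the cloud model each one is deployed in.
RESOURCES = {
    "product-catalogue": "public",   # reachable by anyone over the internet
    "payroll-database": "private",   # reachable only inside the corporate network
}


def is_allowed(resource: str, client_ip: str) -> bool:
    if RESOURCES[resource] == "public":
        return True
    # Private deployment: accept only clients whose address is inside the corporate range.
    return ipaddress.ip_address(client_ip) in CORPORATE_NETWORK
```

With these assumptions, an external client at `203.0.113.5` can reach the public catalogue but not the private payroll database, while an internal client at `10.1.2.3` can reach both.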

According to Almorsy, Grundy and Müller (2016), cloud services are safer and more accessible for storing and sharing data than other computing methods. As businesses expand across the world, organisations also need multiple copies of their data so that their interrelated global operations can be carried out without difficulty. Cloud services allow an organisation to store its data on servers situated in different geographical locations, which are also protected by backup power supplies. Cloud services are characterised by on-demand self-service, ubiquitous network access, rapid elasticity and pay-per-use. The storage and data-processing needs of organisations are served from a common, shared infrastructure in which no specific resources are permanently assigned to a particular user.
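Keeping copies of data in several geographical locations, as described above, can be sketched with a toy replicated store. The region names and the `ReplicatedStore` class are hypothetical; each region is modelled as an in-memory dictionary, whereas a real provider replicates over a network, often asynchronously.

```python
class ReplicatedStore:
    """Toy model: every object is copied to all regions; reads fail over."""

    def __init__(self, regions: list[str]) -> None:
        self.replicas: dict[str, dict[str, bytes]] = {r: {} for r in regions}
        self.available: set[str] = set(regions)

    def put(self, key: str, value: bytes) -> None:
        # Write the same object to every region so any location can serve it.
        for store in self.replicas.values():
            store[key] = value

    def get(self, key: str, preferred: str) -> bytes:
        # Try the preferred (e.g. nearest) region first, then fall back to the others.
        order = [preferred] + [r for r in self.replicas if r != preferred]
        for region in order:
            if region in self.available and key in self.replicas[region]:
                return self.replicas[region][key]
        raise KeyError(key)

    def mark_down(self, region: str) -> None:
        # Simulate an outage in one region.
        self.available.discard(region)
```

If the preferred region is marked down, `get` still returns the object from another region, which is the continuity benefit that geographically distributed copies provide.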

According to Gai et al. (2016), a cloud computing framework consists of a front-end platform, such as mobile devices and clients; cloud-based delivery; a network, such as an intranet, the internet or an intercloud; and a back-end platform, such as storage and servers. Various types of framework are available for cloud services, such as SaaS, DaaS, PaaS and IaaS.
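How the service models listed above divide responsibility between provider and customer can be sketched as a small lookup. This follows the commonly used layered description of IaaS, PaaS and SaaS; the layer names and cut-off points are a simplification assumed for illustration, and DaaS is omitted because it delivers data rather than a computing stack.

```python
# Simplified stack, from the bottom (physical) layer to the top (data) layer.
LAYERS = ["hardware", "virtualisation", "operating_system", "runtime", "application", "data"]

# Number of layers, counted from the bottom, that the provider manages (assumed simplification).
PROVIDER_MANAGED_UP_TO = {
    "IaaS": 2,  # provider: hardware and virtualisation
    "PaaS": 4,  # provider also manages the operating system and runtime
    "SaaS": 5,  # provider also runs the application; the customer owns its data
}


def responsibilities(model: str) -> dict[str, list[str]]:
    cut = PROVIDER_MANAGED_UP_TO[model]
    return {"provider": LAYERS[:cut], "customer": LAYERS[cut:]}
```

Under this simplification, `responsibilities("SaaS")["customer"]` is `["data"]`, which matches the SaaS description that follows: the provider installs and maintains the software, and the user only runs it and supplies data.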

Software as a service (SaaS): In this type of cloud service framework, software is installed and maintained by the service provider in the cloud, while users run the software over the internet or an intranet. Ali, Khan and Vasilakos (2015) stated that with SaaS, client machines do not require the installation of application-specific software because applications are executed in the cloud. This framework is scalable, so business organisations can easily manage their ever-increasing business data by loading the application on multiple servers. It usually involves annual or monthly usage charges.

Data as a service (DaaS): In DaaS, cloud data can be accessed through well-defined API layers. Data built on this cloud computing framework is provided to users on demand, irrespective of the organisational separation between consumer and service provider or their geographical distance. Collecting data, customer packages, software and EAI middleware increases the software burden on an organisation that has to manage a particular type of data. Along with this, the regular upgrade and maintenance cost as well as software update


with each change in data format. DaaS operates on the premise that data quality is maintained in a central place, which gives it several advantages, such as cost-effectiveness, agility and data quality. An important feature of this framework is that it lets users move quickly: service users can access the data easily without in-depth knowledge of the underlying data. It also permits necessary modifications to the data's location or structure according to the needs of service users. Since there is a single point of update, data access is managed and controlled through the data services, which in turn provide high-quality data.
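To make the single point of update more concrete, the following is a minimal Python sketch, assuming a hypothetical `CustomerDataService` that stands in for a DaaS API layer. Consumers only call its two methods; the private field names and storage could change (for example, moving to another location or schema) without affecting them, and validation happens once, at the update point. The class, its methods and the record fields are illustrative assumptions, not an API described by the cited authors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    customer_id: str
    name: str
    country: str  # two-letter country code (assumed format)


class CustomerDataService:
    """Hypothetical DaaS-style API layer over a private data store."""

    def __init__(self) -> None:
        # Private storage layout: field names or location can change
        # without affecting callers, who only ever see Customer objects.
        self._rows: dict[str, dict[str, str]] = {}

    def update_customer(self, customer: Customer) -> None:
        # Single point of update: every write is validated and
        # normalised here, once, for all consumers.
        name = customer.name.strip()
        if not name:
            raise ValueError("name must not be empty")
        if len(customer.country) != 2 or not customer.country.isalpha():
            raise ValueError("country must be a two-letter code")
        self._rows[customer.customer_id] = {
            "full_name": name,
            "country_code": customer.country.upper(),
        }

    def get_customer(self, customer_id: str) -> Customer:
        # Consumers need no knowledge of the underlying row format.
        row = self._rows[customer_id]
        return Customer(customer_id, row["full_name"], row["country_code"])
```

For example, calling `update_customer(Customer("c1", " Ada ", "gb"))` and then `get_customer("c1")` yields a record with the name trimmed and the country upper-cased, because normalisation lives in the service rather than in each consumer.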

Development as a Service (DaaS): According to Messerli et al. (2017), this framework is web-based and can be referred to as community-shared development tools. It is a stand-alone model in which integrated development tools are provided along with the runtime environment for developing applications.

Infrastructure as a Service (IaaS): In this framework, the provider's physical hardware underlies the service, while servers, storage, system management and networking are delivered to users virtually. The annual or monthly subscription that service users pay to run these virtual components removes the need for a local data centre, cooling and other hardware maintenance.

Platform as a Service (PaaS): This framework allows business organisations or users to use a database and application platform as a service. In a similar context, Xia et al. (2016) explained that PaaS provides a complete platform, covering application and interface development, storage and database development, which is delivered to users through a remotely hosted service platform. This framework therefore gives users the ability to develop enterprise-class services for local use and for on-demand services, free of charge or at considerably lower prices.

According to Yang et al. (2017), cloud computing technology consists of two key elements: cloud virtualisation and service-oriented architecture (SOA).

Cloud virtualisation: This is one of the most important features of a cloud system, as it allows cloud services to be delivered efficiently. When virtual computing resources are implemented in the cloud, they mimic the functions of physical computing resources. For instance, virtualisation provides flexible load-balancing management, allowing adjustment of


computational services according to demand. Another benefit of cloud virtualisation is that it allows businesses to achieve a high degree of scalability, reliability and availability in meeting their cloud technology and information technology needs. Cloud virtualisation also serves as a critical element of cloud services for failover support and disaster recovery.
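The load-balancing, elasticity and failover roles of virtualisation can be illustrated with a small Python sketch. It assumes a hypothetical pool of virtual machines, each with a fixed request capacity: a new request goes to the least-utilised healthy VM, unhealthy VMs are skipped (failover), and a new VM is provisioned when utilisation of the healthy pool exceeds an assumed 80% threshold (elasticity). The names, capacities and threshold are illustrative, and requests are never released in this simplified model; real cloud platforms use their own schedulers and autoscaling policies.

```python
from dataclasses import dataclass


@dataclass
class VirtualMachine:
    name: str
    capacity: int          # maximum concurrent requests (illustrative unit)
    active: int = 0        # requests currently being served
    healthy: bool = True   # False simulates a failed VM

    def utilisation(self) -> float:
        return self.active / self.capacity


class LoadBalancer:
    """Hypothetical least-utilised balancer with failover and scale-out."""

    def __init__(self, vms: list[VirtualMachine], vm_capacity: int = 10,
                 scale_out_threshold: float = 0.8) -> None:
        self.vms = list(vms)
        self.vm_capacity = vm_capacity
        self.scale_out_threshold = scale_out_threshold  # assumed policy value

    def _add_vm(self) -> VirtualMachine:
        # Elasticity: provision a new virtual machine on demand.
        vm = VirtualMachine(f"vm-{len(self.vms)}", self.vm_capacity)
        self.vms.append(vm)
        return vm

    def _pool_utilisation(self) -> float:
        healthy = [vm for vm in self.vms if vm.healthy]
        if not healthy:
            return 1.0
        return sum(vm.active for vm in healthy) / sum(vm.capacity for vm in healthy)

    def route(self) -> VirtualMachine:
        # Failover: unhealthy or full VMs are never chosen.
        candidates = [vm for vm in self.vms if vm.healthy and vm.active < vm.capacity]
        if not candidates:
            candidates = [self._add_vm()]
        # Load balancing: send the request to the least-utilised candidate.
        target = min(candidates, key=VirtualMachine.utilisation)
        target.active += 1
        # Scale out before the healthy pool saturates.
        if self._pool_utilisation() > self.scale_out_threshold:
            self._add_vm()
        return target
```

With `vm-0` marked unhealthy, the first nine requests go to `vm-1`; the ninth raises pool utilisation to 90%, so `vm-2` is provisioned and receives the tenth request.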

Service-oriented architecture (SOA): As per the view of Stergiou et al. (2018), SOA is vital for organisations because it gives them access to computing solutions that can be improved on demand or as the business environment changes. SOA encourages independent web-based services that interact with one another over the internet. It therefore offers real-time communication and flexibility, which makes cloud-based systems and services easy and quick to reconfigure. This architecture also shifts development, maintenance and deployment costs onto the web service providers, giving easy access to web services at low cost. Its support for centralised distribution and reuse of components makes SOA one of the most powerful components of cloud computing, and this characteristic also lowers the cost of software development and delivery.
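The loose coupling and component reuse described for SOA can be sketched in Python. This is a minimal in-process stand-in, assuming a hypothetical `ServiceRegistry` that maps service names to callables with a dictionary request/response contract; in a real SOA the services would be independent web services reached over the network. The invoice service reuses the currency service through the registry, so the currency service can be replaced without changing the invoice code. The EUR-based exchange rates are made-up illustrative values.

```python
from typing import Callable

# A service takes a request dictionary and returns a response dictionary.
Service = Callable[[dict], dict]


class ServiceRegistry:
    """Hypothetical in-process stand-in for an SOA service registry."""

    def __init__(self) -> None:
        self._services: dict[str, Service] = {}

    def register(self, name: str, service: Service) -> None:
        # Registering under an existing name replaces (reconfigures) that service.
        self._services[name] = service

    def call(self, name: str, request: dict) -> dict:
        # Callers depend only on the name and the request/response contract.
        return self._services[name](request)


def make_currency_service(rates: dict[str, float]) -> Service:
    def convert(request: dict) -> dict:
        return {"amount": round(request["amount"] * rates[request["to"]], 2)}
    return convert


def make_invoice_service(registry: ServiceRegistry) -> Service:
    def invoice(request: dict) -> dict:
        # Reuses the currency service instead of re-implementing conversion.
        converted = registry.call(
            "currency", {"amount": request["total_eur"], "to": request["currency"]}
        )
        return {"customer": request["customer"], "due": converted["amount"]}
    return invoice
```

Re-registering `"currency"` with different rates changes what the invoice service returns without any change to `make_invoice_service`, which is the kind of quick reconfiguration the paragraph attributes to SOA.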

Contrary to the above discussion, Marinescu (2017) stated that the suitability of cloud computing techniques is also critically affected by several challenges. Apart from data security and privacy concerns, the performance of cloud computing technology and frameworks is also influenced by bandwidth cost and performance. Although organisations can reduce costs by saving on the acquisition of new systems, database management and other maintenance tasks, bandwidth remains a concern. This issue is not significant in small applications, but it greatly affects performance in data-intensive applications. There must therefore be sufficient bandwidth to avoid application timeouts and latency, so that complex data and intensive services can be received and delivered. Another challenge in adopting a cloud system is integration with the existing infrastructure: the added benefits disappear when discrete cloud services within an organisation cannot achieve the outcomes that a well-integrated environment provides.
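The bandwidth point can be illustrated with simple arithmetic. Under the idealised assumption that a link delivers its full nominal rate (no protocol overhead, latency or contention), transfer time is the data size in bits divided by the bandwidth. The sketch below uses a hypothetical helper, `transfer_time_seconds`, to show why the same link is negligible for a small application but a bottleneck for a data-intensive one: over a 100 Mbit/s link, 10 MB moves in 0.8 seconds, whereas 1 TB takes 80,000 seconds, roughly 22 hours.

```python
def transfer_time_seconds(data_bytes: float, bandwidth_bits_per_s: float) -> float:
    """Idealised transfer time: bits to send divided by link rate.

    Assumes the full nominal bandwidth is available; real transfers are
    slower because of protocol overhead, latency and shared links.
    """
    if data_bytes < 0 or bandwidth_bits_per_s <= 0:
        raise ValueError("data size must be non-negative and bandwidth positive")
    return data_bytes * 8 / bandwidth_bits_per_s


LINK = 100e6  # 100 Mbit/s (illustrative)
for label, size in [("10 MB", 10e6), ("1 TB", 1e12)]:
    seconds = transfer_time_seconds(size, LINK)
    print(f"{label}: {seconds:,.1f} s ({seconds / 3600:.2f} h)")
```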

2.2 Watermarking techniques and their benefits for business

As per the view of Minerva M. Yeung (2016), watermarking is a technique used to hide digital data within a signal; the hidden data can then be used to establish ownership of the copyright in that signal. Watermarking is a tool used to hide


information in a carrier signal; the hidden information may, but does not need to, be related to the carrier signal. Digital watermarking is used to verify the authenticity or integrity of the host signal or to reflect the identity of its owner. In cloud computing, watermarking can therefore be used to verify the authenticity of the digital data held in the system. As with traditional watermarks, digital watermarks are used to establish the authenticity of host data distributed in digital markets, which can then serve as a reference for evaluating the rest of the data. A digital watermark is typically perceptible only under certain conditions, for example after applying an algorithm that confirms the authenticity of the data. If a watermark distorts the signal so that it becomes easily perceivable, it may be considered effective or ineffective depending on its purpose. Traditional watermarks were applied to visible media such as images and videos, but with the evolution of technology, digital watermarking has extended to various other objects and techniques for digitisation and data protection. Unlike metadata, which is added to the digital signal, a watermark is embedded within the signal, so the size of the signal remains fixed.
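The embedding and integrity-verification role of an invisible watermark can be sketched with a least-significant-bit (LSB) scheme, a common textbook technique chosen here for illustration rather than one taken from Yeung (2016). The sketch assumes an image given as a flat list of 8-bit grey-scale values and a secret key held by the owner; the function names are hypothetical. Watermark bits are derived from a keyed SHAKE-256 digest of the upper seven bits of every pixel and written into the LSBs, so each pixel changes by at most one grey level and the signal size is unchanged. Verification recomputes the digest: a change to any LSB is always detected, and a change to any higher bit alters the digest and is detected with overwhelming probability. Because it breaks under any modification, this is a fragile watermark.

```python
import hashlib


def _expected_bits(pixels: list[int], key: bytes) -> list[int]:
    # Keyed SHAKE-256 digest over the upper seven bits of every pixel; the
    # least significant bits are excluded because they carry the watermark.
    content = bytes(p & 0xFE for p in pixels)
    digest = hashlib.shake_256(key + content).digest((len(pixels) + 7) // 8)
    return [(digest[i // 8] >> (i % 8)) & 1 for i in range(len(pixels))]


def embed_fragile(pixels: list[int], key: bytes) -> list[int]:
    """Return a copy of 8-bit grey-scale pixels with the watermark in the LSBs."""
    if any(not 0 <= p <= 255 for p in pixels):
        raise ValueError("pixels must be 8-bit values (0-255)")
    bits = _expected_bits(pixels, key)
    # Each pixel changes by at most one grey level, so the mark is invisible.
    return [(p & 0xFE) | b for p, b in zip(pixels, bits)]


def verify_fragile(pixels: list[int], key: bytes) -> bool:
    """True if every LSB still matches the mark derived from the current content."""
    bits = _expected_bits(pixels, key)
    return all((p & 1) == b for p, b in zip(pixels, bits))
```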

The requirements for digital watermarking vary with the application in which it is used. A watermark must be robust and flexible enough to be carried by all kinds of carrier signal and to adapt to changes as easily and quickly as possible. Watermarking has grown out of a wide body of research and can serve as both a technical and a commercial tool for limiting unwanted publicity and controversy. The internet supports the development of social and electronic publishing and advertising, and the real-time information, product ordering and other digital processes involved need to be channelled and secured so that the tools used work smoothly and safely. Watermarking helps to ensure the safety and control of such data.
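One common way to quantify robustness is the bit error rate (BER) between the embedded watermark bits and the bits extracted after an attack, and one simple way to improve it is redundancy. The Python sketch below assumes the watermark bits have already been embedded and extracted by some scheme (not shown) and only illustrates the redundancy idea: each bit is repeated `r` times and recovered by majority vote, so isolated bit flips caused by noise no longer change the decoded watermark. The repetition code and the helper names are illustrative choices, not a technique prescribed by the source.

```python
def bit_error_rate(sent: list[int], received: list[int]) -> float:
    """Fraction of watermark bits that differ after an attack."""
    if len(sent) != len(received) or not sent:
        raise ValueError("sequences must be non-empty and of equal length")
    return sum(a != b for a, b in zip(sent, received)) / len(sent)


def spread(bits: list[int], r: int) -> list[int]:
    # Repeat every watermark bit r times before embedding.
    return [b for b in bits for _ in range(r)]


def despread(chips: list[int], r: int) -> list[int]:
    # Majority vote over each group of r repeated bits (r must be odd).
    if r % 2 == 0 or len(chips) % r:
        raise ValueError("r must be odd and divide the number of chips")
    return [int(sum(chips[i:i + r]) > r // 2) for i in range(0, len(chips), r)]
```

For a four-bit mark `[1, 0, 1, 1]` spread with `r = 3`, flipping one copy of the first bit and one copy of the second still decodes to the original mark (BER 0), whereas flipping the first bit of the unprotected mark gives a BER of 0.25.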

In contrast to the above discussion, Marwan, Kartit and Ouahmane (2018) argued that these abundant opportunities have also given rise to challenges, including issues related to socio-economic policies on the management of personal data. Watermarking can help to resolve these issues because it provides authentication and establishes the legal rights of the authority that is permitted to access the data. With the help of watermarking, this approach becomes safer and supports the development of socio-economic policies.


According to Nasrin M. Makbol and Bee Ee Kho (2018), Singular Value Decomposition (SVD) is an important mathematical tool that is useful in many operations. It can help businesses associated with online marketing, as it effectively preserves the structure of the data provided and so helps in maintaining that data. In SVD-based watermarking, it is also important to detect the False Positive Problem (FPP), in which a watermark that was never embedded is wrongly detected, so that the scheme remains robust and reliable. Satisfying robustness and imperceptibility requirements while preventing FPPs is a crucial practice in SVD-based watermarking, which can be used for copyright protection and for authenticating personal data that cannot be accessed without the administrator's permission. SVD-based algorithms that are prone to the false positive problem can be analysed to detect the flaws that cause it.
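To show where SVD enters a watermarking scheme, the following Python/NumPy sketch embeds a watermark sequence into the singular values of a host image block, a basic form of SVD-based watermarking. It is a non-blind scheme under stated assumptions: the owner keeps the original singular vectors and singular values as a secret key, the watermark length equals the number of singular values, and the strength `alpha = 0.05` is an arbitrary illustrative value. The change to the image has Frobenius norm equal to `alpha` times the watermark's norm, so a small `alpha` keeps the mark imperceptible. The sketch ignores rounding back to 8-bit pixels and does not reproduce the specific schemes, or the false-positive analysis, discussed by Makbol and Kho (2018); `embed_svd` and `extract_svd` are hypothetical names.

```python
import numpy as np


def embed_svd(host: np.ndarray, watermark: np.ndarray, alpha: float = 0.05):
    """Embed `watermark` by adding alpha * watermark to the host's singular values."""
    u, s, vt = np.linalg.svd(host.astype(float), full_matrices=False)
    if watermark.shape != s.shape:
        raise ValueError("watermark length must equal the number of singular values")
    marked = u @ np.diag(s + alpha * watermark) @ vt
    key = (u, s, vt)  # secret key kept by the owner, needed for extraction
    return marked, key


def extract_svd(image: np.ndarray, key, alpha: float = 0.05) -> np.ndarray:
    """Recover the watermark using the original SVD factors (non-blind)."""
    u, s, vt = key
    # Project onto the original singular vectors; for an unattacked marked image
    # the diagonal equals s + alpha * watermark up to floating-point error.
    s_marked = np.diag(u.T @ image.astype(float) @ vt.T)
    return (s_marked - s) / alpha
```

Extracting from the unmarked original with the same key returns values close to zero, since its projection reproduces the original singular values exactly.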

As per the view of Upasana Yadav (2017), digital watermarking is classified on the basis of the following criteria.

According to robustness:

Fragile watermarking: used for integrity protection; it is sensitive to change and raises an alert when the data or signal is modified. With fragile watermarking, it is easy to determine whether the original data has been changed.

Semi-fragile watermarking: tolerates some degree of change to the watermarked image, such as the addition of noise.

Robust watermarking: designed to withstand noise attacks and geometric or non-geometric attacks without the watermark being changed. Its aim is to ensure that the watermark is not destroyed and remains secure.

According to perceptibility:

Visible watermarking: the watermark is visible in the digital data; for example, channel logos are visible in the corner of the screen.

Invisible watermarking: secret information is inserted into digital content in a way that is not visible in the images or content. It can only be recovered through an extraction process.

According to the attached digital signal:

Image watermarking: the watermark is embedded into a digital image.
