KOI ICT201: Computer Organization and Architecture Deadlock Analysis

VerifiedAdded on 2022/09/27

|9

|1651

|25

Homework Assignment

AI Summary

This assignment delves into the critical aspects of computer organization and architecture, specifically focusing on the concept of deadlocks. It explores various strategies for handling deadlocks, including detection, prevention, and avoidance, detailing how these methods are applied in different contexts such as CPU, main memory, and file management systems. The assignment analyzes the banking algorithm to illustrate deadlock prevention and discusses key metrics like turnaround time and waiting time in the context of scheduling algorithms. Several scheduling algorithms, including First Come First Serve (FCFS), Shortest Job Next (SJN), Shortest Remaining Time (SRT), and Round Robin, are examined with their timelines and characteristics. Furthermore, the assignment includes an analysis of a directed resource graph to demonstrate deadlock situations. The document also provides references to relevant research papers.

Computer Architecture 1

Computer Organization and Architecture

By (Name)

The Name of the Class (Course)

Professor (Tutor)

The Name of the School (University)

The City and State where it is located

The Date

Computer Organization and Architecture

By (Name)

The Name of the Class (Course)

Professor (Tutor)

The Name of the School (University)

The City and State where it is located

The Date

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

Computer Architecture 2

Computer Organization and Architecture

1) Handling and managing deadlocks

In computing technology, deadlocks happen when two or more processes compete for

resources. This usually happens when a resource needed by one program is held by another

program, and that resource cannot be released if certain requirements have not been met.

Deadlock detection, prevention and avoidance are used to handle such situations so that

processes do not wait for too long or even starve. Deadlock detection aims at detecting a

deadlock by using resource schedulers that track all resources being held by executing processes

(Furmans, et al., 2019). When a deadlock situation is detected, all processes involved in it are

killed and resources pre-empted from some processes and control handed over to others. With

deadlock prevention, a system evaluates each process before allowing it to enter into execution.

A process that can cause deadlock is never allowed to run into execution. Deadlock avoidance

works by avoiding any instances of deadlock situations rather than taking any precautionary

measures. Wait graphs showing relationships between processes and resources are used in this

case.

The present day microprocessor and CPU technologies that allow for multitasking

strategies. In such environments, the central processing unit is a resource that is shared among

different transactions with hardware clocks designed to ensure that no single process consumes

the CPU completely. This is a prevention mechanism whose goal is to ensure that the CPU can

adequately serve two or more processes simultaneously requesting for its control. The choice of

this mechanism is influenced by the fact that CPU is a critical infrastructure that must not be

allowed to ‘run’ out or be exhausted in its entirety. Any prevention algorithm that relies on

Computer Organization and Architecture

1) Handling and managing deadlocks

In computing technology, deadlocks happen when two or more processes compete for

resources. This usually happens when a resource needed by one program is held by another

program, and that resource cannot be released if certain requirements have not been met.

Deadlock detection, prevention and avoidance are used to handle such situations so that

processes do not wait for too long or even starve. Deadlock detection aims at detecting a

deadlock by using resource schedulers that track all resources being held by executing processes

(Furmans, et al., 2019). When a deadlock situation is detected, all processes involved in it are

killed and resources pre-empted from some processes and control handed over to others. With

deadlock prevention, a system evaluates each process before allowing it to enter into execution.

A process that can cause deadlock is never allowed to run into execution. Deadlock avoidance

works by avoiding any instances of deadlock situations rather than taking any precautionary

measures. Wait graphs showing relationships between processes and resources are used in this

case.

The present day microprocessor and CPU technologies that allow for multitasking

strategies. In such environments, the central processing unit is a resource that is shared among

different transactions with hardware clocks designed to ensure that no single process consumes

the CPU completely. This is a prevention mechanism whose goal is to ensure that the CPU can

adequately serve two or more processes simultaneously requesting for its control. The choice of

this mechanism is influenced by the fact that CPU is a critical infrastructure that must not be

allowed to ‘run’ out or be exhausted in its entirety. Any prevention algorithm that relies on

Computer Architecture 3

timestamps can be effectively used to avoid deadlock in CPU and ensure that no process is

unnecessarily killed/terminated or otherwise starved.

Deadlock avoidance mechanism works best with main memory (Ding, et al., 2010). The

strategy here is to ensure that only one job is requesting for a given memory space, and any other

job is kept in a waiting queue. The OS uses flags to indicate when given memory space that is

shared by some given processes are is dedicated for writing or reading. Processes waiting for this

space inspect these flags trusting that the space will be cleared before attempting to commit their

own changes in the spaces. The choice of this strategy influenced by the fact that several

programs need memory space and circular waits must always be avoided. To keep memory in

correct state, deadlock avoidance dynamically examines resource allocation against resource

requirements for all waiting processes.

Deadlock detection algorithms are best suited strategies to handle deadlocks in storage

memory spaces. Operating systems maintain a busy status of a device causing other programs to

hold-and-wait for a non-busy status. These algorithms help in distinguishing between gadgets

that need resources, and in the determination of whether the requested resources are free or not

so as to prevent deadlock from occurring.

Deadlock avoidance is the best suited strategy to handle deadlocks in files and file

management systems. The operating systems in this situation keeps up a status signal for a file

that is to be inspected by procedures and programs before they attempt to write on them. Where

operating system is not being used to monitor the system, a lock up state (usually in a mutual

document environment) is used and must be perused at the application level to determine the

correct status of a file or a record.

timestamps can be effectively used to avoid deadlock in CPU and ensure that no process is

unnecessarily killed/terminated or otherwise starved.

Deadlock avoidance mechanism works best with main memory (Ding, et al., 2010). The

strategy here is to ensure that only one job is requesting for a given memory space, and any other

job is kept in a waiting queue. The OS uses flags to indicate when given memory space that is

shared by some given processes are is dedicated for writing or reading. Processes waiting for this

space inspect these flags trusting that the space will be cleared before attempting to commit their

own changes in the spaces. The choice of this strategy influenced by the fact that several

programs need memory space and circular waits must always be avoided. To keep memory in

correct state, deadlock avoidance dynamically examines resource allocation against resource

requirements for all waiting processes.

Deadlock detection algorithms are best suited strategies to handle deadlocks in storage

memory spaces. Operating systems maintain a busy status of a device causing other programs to

hold-and-wait for a non-busy status. These algorithms help in distinguishing between gadgets

that need resources, and in the determination of whether the requested resources are free or not

so as to prevent deadlock from occurring.

Deadlock avoidance is the best suited strategy to handle deadlocks in files and file

management systems. The operating systems in this situation keeps up a status signal for a file

that is to be inspected by procedures and programs before they attempt to write on them. Where

operating system is not being used to monitor the system, a lock up state (usually in a mutual

document environment) is used and must be perused at the application level to determine the

correct status of a file or a record.

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

Computer Architecture 4

2) Banking algorithm

a) The banking system described in this scenario is not deadlocked. Since the two

accounts are being simultaneously updated, the possibility of deadlock occurring is

successfully avoided when one becomes thorough about each step in the algorithm by

locking, updating and unlocking each account. The assumption being made here is that

the computing resources effectively function with the two accounts being in locked

state.

b) If the banking system has the capability of accomondating all the locked accounts, and

the thorough approach of locking, updating and unlocking, deadlock occurrences

would be successfully prevented regardless of processing order. As such, the

numbering policy implemented would not affect the outcome.

3) Waiting and turnaround time.

Turnaround time (TAT) is defined as the interval between initialization of a process and

its completion. It is also the sum of periods that are spent by a process while waiting to get

memory control or get into the ready queue, CPU execution as well as input/output execution

time. It is an important metric that is used to determine the efficiency of scheduling algorithms in

an operating system, which is expressed in terms of units of time that a specific program stays in

a given state for a specific algorithm. This value varies for different applications and

programming languages depending on algorithm c complexity. Waiting time on the other hand

refers to the amount of time a process that is ready for execution consumes while in the ready

queue.

Calculating turnaround and waiting times

4) Timelines for various scheduling algorithms

2) Banking algorithm

a) The banking system described in this scenario is not deadlocked. Since the two

accounts are being simultaneously updated, the possibility of deadlock occurring is

successfully avoided when one becomes thorough about each step in the algorithm by

locking, updating and unlocking each account. The assumption being made here is that

the computing resources effectively function with the two accounts being in locked

state.

b) If the banking system has the capability of accomondating all the locked accounts, and

the thorough approach of locking, updating and unlocking, deadlock occurrences

would be successfully prevented regardless of processing order. As such, the

numbering policy implemented would not affect the outcome.

3) Waiting and turnaround time.

Turnaround time (TAT) is defined as the interval between initialization of a process and

its completion. It is also the sum of periods that are spent by a process while waiting to get

memory control or get into the ready queue, CPU execution as well as input/output execution

time. It is an important metric that is used to determine the efficiency of scheduling algorithms in

an operating system, which is expressed in terms of units of time that a specific program stays in

a given state for a specific algorithm. This value varies for different applications and

programming languages depending on algorithm c complexity. Waiting time on the other hand

refers to the amount of time a process that is ready for execution consumes while in the ready

queue.

Calculating turnaround and waiting times

4) Timelines for various scheduling algorithms

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

Computer Architecture 5

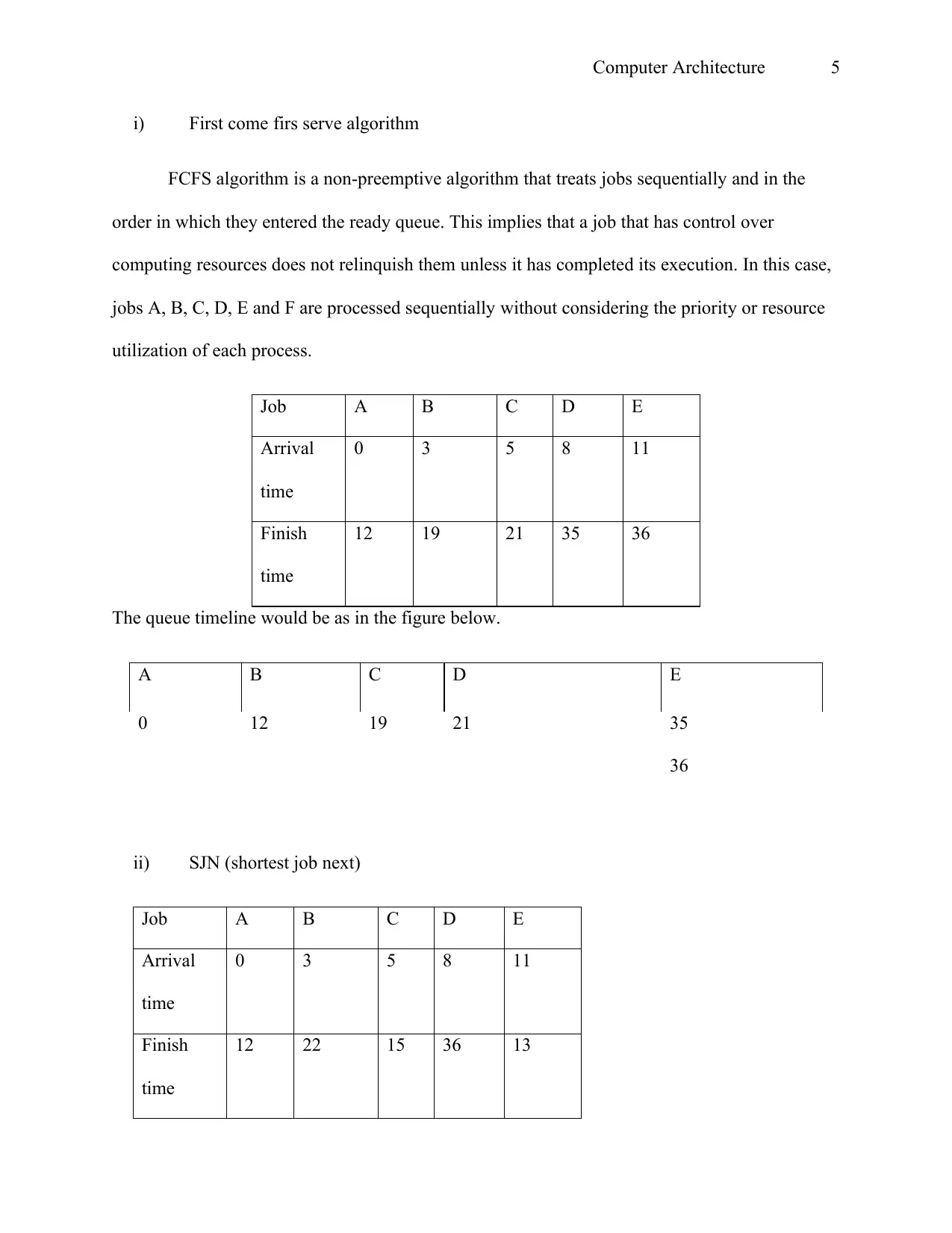

i) First come firs serve algorithm

FCFS algorithm is a non-preemptive algorithm that treats jobs sequentially and in the

order in which they entered the ready queue. This implies that a job that has control over

computing resources does not relinquish them unless it has completed its execution. In this case,

jobs A, B, C, D, E and F are processed sequentially without considering the priority or resource

utilization of each process.

Job A B C D E

Arrival

time

0 3 5 8 11

Finish

time

12 19 21 35 36

The queue timeline would be as in the figure below.

A B C D E

0 12 19 21 35

36

ii) SJN (shortest job next)

Job A B C D E

Arrival

time

0 3 5 8 11

Finish

time

12 22 15 36 13

i) First come firs serve algorithm

FCFS algorithm is a non-preemptive algorithm that treats jobs sequentially and in the

order in which they entered the ready queue. This implies that a job that has control over

computing resources does not relinquish them unless it has completed its execution. In this case,

jobs A, B, C, D, E and F are processed sequentially without considering the priority or resource

utilization of each process.

Job A B C D E

Arrival

time

0 3 5 8 11

Finish

time

12 19 21 35 36

The queue timeline would be as in the figure below.

A B C D E

0 12 19 21 35

36

ii) SJN (shortest job next)

Job A B C D E

Arrival

time

0 3 5 8 11

Finish

time

12 22 15 36 13

Computer Architecture 6

A E C B D

0 12 13 15 22

36

In this case, jobs are prioritized based on their length- or the number of CPU cycles

required for the job to completely execute. Job A, though longer than other takes the first priority

because it arrives before all other jobs. Once all jobs have arrived, the algorithm ‘reshuffles’

them and prioritizes them accordingly.

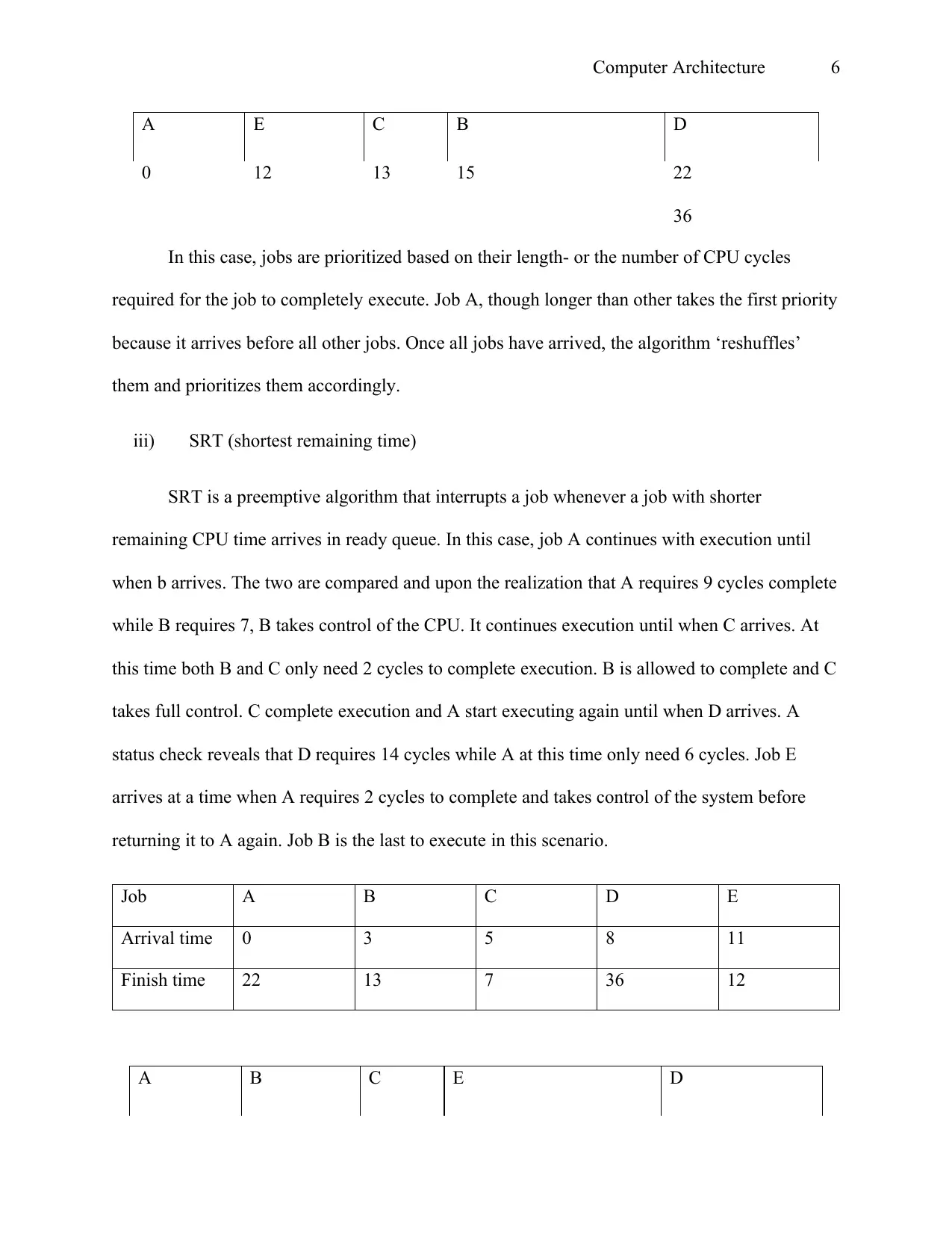

iii) SRT (shortest remaining time)

SRT is a preemptive algorithm that interrupts a job whenever a job with shorter

remaining CPU time arrives in ready queue. In this case, job A continues with execution until

when b arrives. The two are compared and upon the realization that A requires 9 cycles complete

while B requires 7, B takes control of the CPU. It continues execution until when C arrives. At

this time both B and C only need 2 cycles to complete execution. B is allowed to complete and C

takes full control. C complete execution and A start executing again until when D arrives. A

status check reveals that D requires 14 cycles while A at this time only need 6 cycles. Job E

arrives at a time when A requires 2 cycles to complete and takes control of the system before

returning it to A again. Job B is the last to execute in this scenario.

Job A B C D E

Arrival time 0 3 5 8 11

Finish time 22 13 7 36 12

A B C E D

A E C B D

0 12 13 15 22

36

In this case, jobs are prioritized based on their length- or the number of CPU cycles

required for the job to completely execute. Job A, though longer than other takes the first priority

because it arrives before all other jobs. Once all jobs have arrived, the algorithm ‘reshuffles’

them and prioritizes them accordingly.

iii) SRT (shortest remaining time)

SRT is a preemptive algorithm that interrupts a job whenever a job with shorter

remaining CPU time arrives in ready queue. In this case, job A continues with execution until

when b arrives. The two are compared and upon the realization that A requires 9 cycles complete

while B requires 7, B takes control of the CPU. It continues execution until when C arrives. At

this time both B and C only need 2 cycles to complete execution. B is allowed to complete and C

takes full control. C complete execution and A start executing again until when D arrives. A

status check reveals that D requires 14 cycles while A at this time only need 6 cycles. Job E

arrives at a time when A requires 2 cycles to complete and takes control of the system before

returning it to A again. Job B is the last to execute in this scenario.

Job A B C D E

Arrival time 0 3 5 8 11

Finish time 22 13 7 36 12

A B C E D

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

Computer Architecture 7

0 3 5 7 8 36

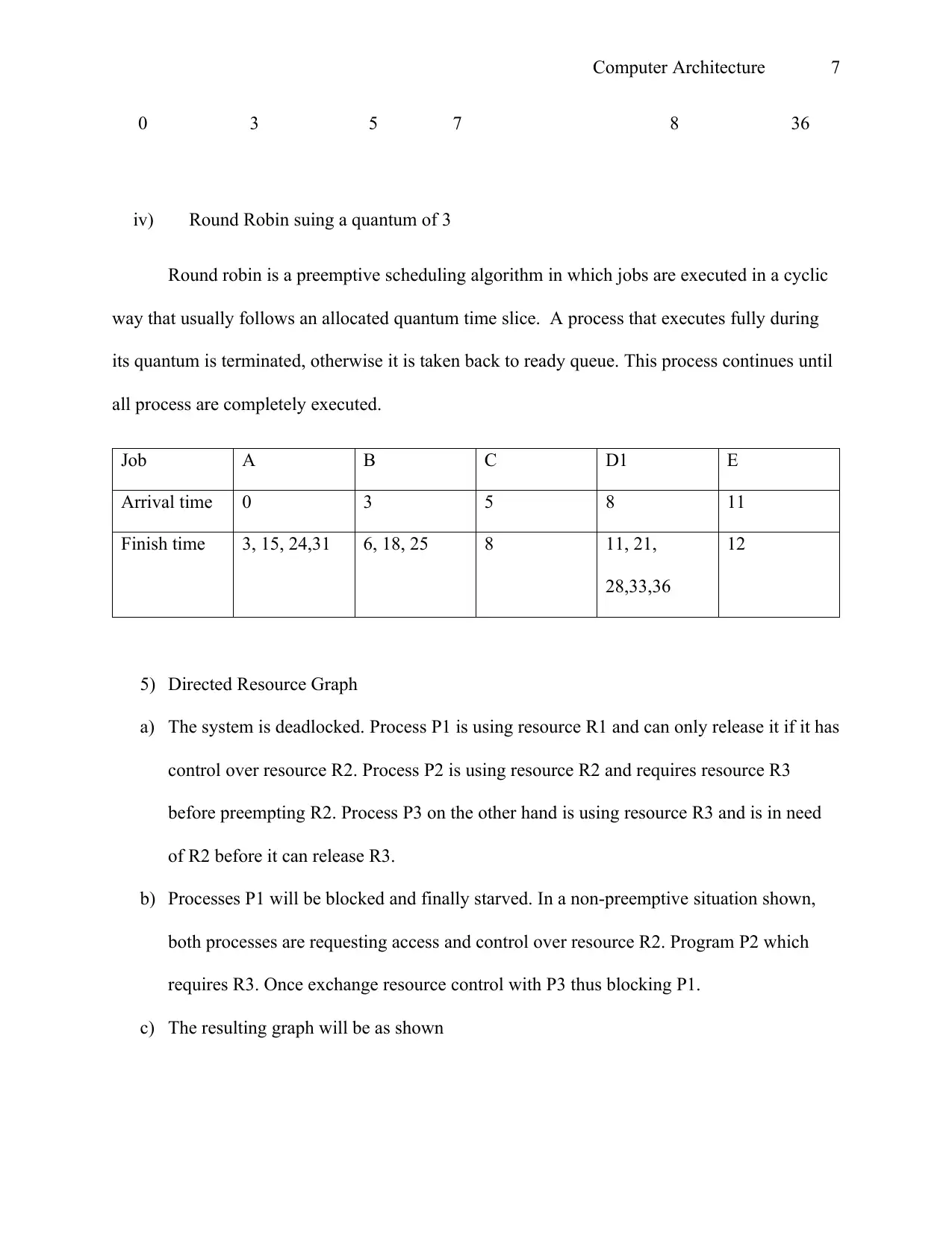

iv) Round Robin suing a quantum of 3

Round robin is a preemptive scheduling algorithm in which jobs are executed in a cyclic

way that usually follows an allocated quantum time slice. A process that executes fully during

its quantum is terminated, otherwise it is taken back to ready queue. This process continues until

all process are completely executed.

Job A B C D1 E

Arrival time 0 3 5 8 11

Finish time 3, 15, 24,31 6, 18, 25 8 11, 21,

28,33,36

12

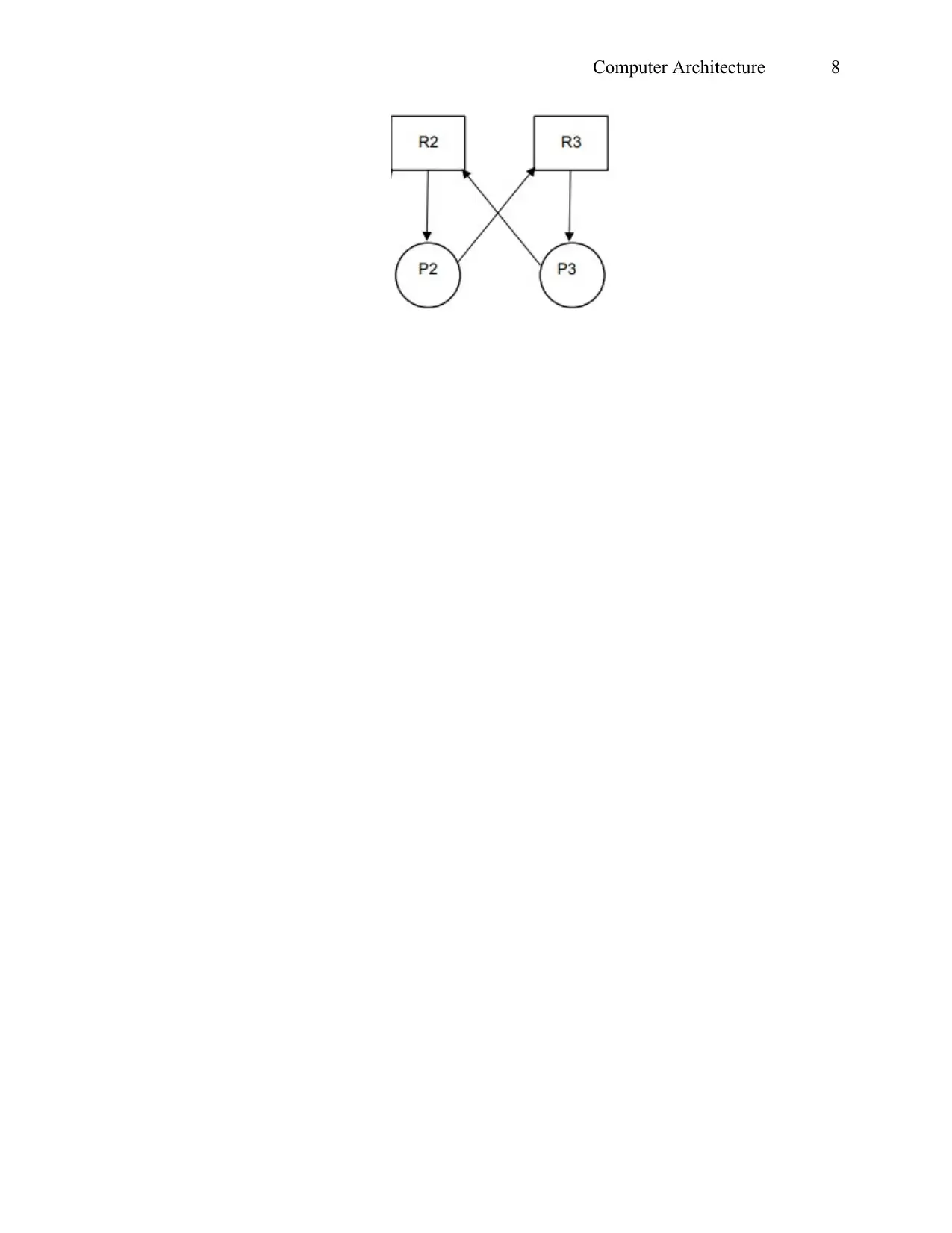

5) Directed Resource Graph

a) The system is deadlocked. Process P1 is using resource R1 and can only release it if it has

control over resource R2. Process P2 is using resource R2 and requires resource R3

before preempting R2. Process P3 on the other hand is using resource R3 and is in need

of R2 before it can release R3.

b) Processes P1 will be blocked and finally starved. In a non-preemptive situation shown,

both processes are requesting access and control over resource R2. Program P2 which

requires R3. Once exchange resource control with P3 thus blocking P1.

c) The resulting graph will be as shown

0 3 5 7 8 36

iv) Round Robin suing a quantum of 3

Round robin is a preemptive scheduling algorithm in which jobs are executed in a cyclic

way that usually follows an allocated quantum time slice. A process that executes fully during

its quantum is terminated, otherwise it is taken back to ready queue. This process continues until

all process are completely executed.

Job A B C D1 E

Arrival time 0 3 5 8 11

Finish time 3, 15, 24,31 6, 18, 25 8 11, 21,

28,33,36

12

5) Directed Resource Graph

a) The system is deadlocked. Process P1 is using resource R1 and can only release it if it has

control over resource R2. Process P2 is using resource R2 and requires resource R3

before preempting R2. Process P3 on the other hand is using resource R3 and is in need

of R2 before it can release R3.

b) Processes P1 will be blocked and finally starved. In a non-preemptive situation shown,

both processes are requesting access and control over resource R2. Program P2 which

requires R3. Once exchange resource control with P3 thus blocking P1.

c) The resulting graph will be as shown

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

Computer Architecture 8

Computer Architecture 9

Bibliography

Ding, J., Zhu, H., Zhu, H. & Li, Q., 2010. Formal modeling and verifications of deadlock

prevention solutions in web service oriented system. s.l., IEEE, pp. 335-343.

Furmans, K., Zäzilia, S. & Andreas, T., 2019. Future Technologies in Intralogistics and Material

Handling. In: Operations, Logistics and Supply Chain Management. Cham: Springer, pp. 545-

574.

Bibliography

Ding, J., Zhu, H., Zhu, H. & Li, Q., 2010. Formal modeling and verifications of deadlock

prevention solutions in web service oriented system. s.l., IEEE, pp. 335-343.

Furmans, K., Zäzilia, S. & Andreas, T., 2019. Future Technologies in Intralogistics and Material

Handling. In: Operations, Logistics and Supply Chain Management. Cham: Springer, pp. 545-

574.

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

1 out of 9

Related Documents

Your All-in-One AI-Powered Toolkit for Academic Success.

+13062052269

info@desklib.com

Available 24*7 on WhatsApp / Email

![[object Object]](/_next/static/media/star-bottom.7253800d.svg)

Unlock your academic potential

Copyright © 2020–2026 A2Z Services. All Rights Reserved. Developed and managed by ZUCOL.