Data Management: Abstraction, Normalization, Reconciliation Challenges

Added on 2022/09/30

Homework Assignment

AI Summary

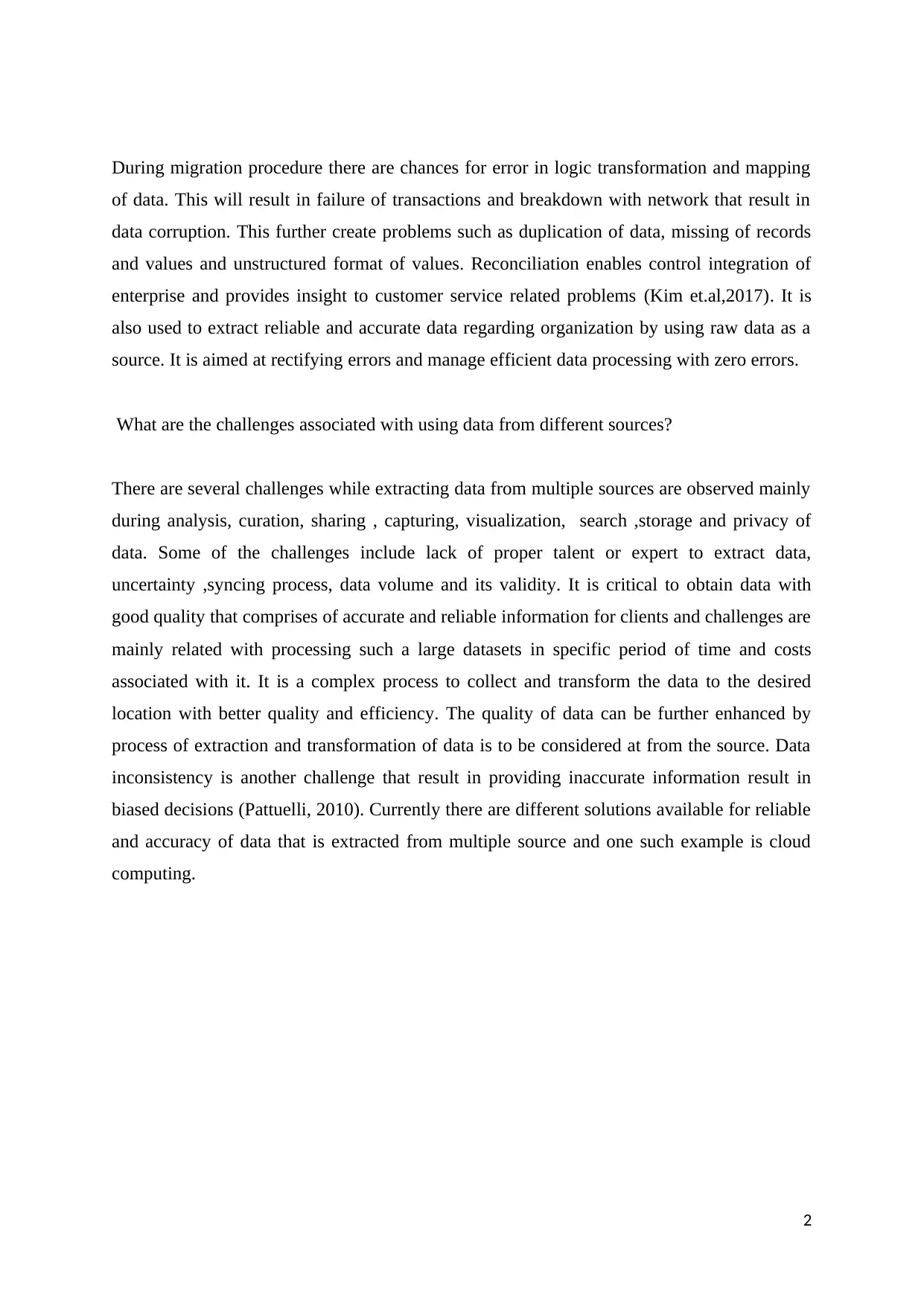

This assignment examines three processes: abstracting data from clinical records, normalizing data, and reconciling data. It explains how data is extracted from clinical records, converted into electronic health records, and structured for analysis, covering patient information, medications, and medical history. It then describes data normalization, which reduces redundancy and improves data consistency, and data reconciliation, which is used during data migration to verify data integrity and accuracy by detecting errors and inconsistencies. Finally, the assignment identifies the challenges of using data from multiple sources, such as a lack of expertise, large data volumes, inconsistent data, and the need to maintain data quality, and it highlights solutions such as cloud computing. The discussion is supported by cited references.
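To illustrate what record-level reconciliation after a migration can look like, here is a minimal Python sketch. It assumes each record is a dictionary with a unique identifier field; the function name `reconcile` and the field names `patient_id` and `medication` are hypothetical and do not come from the assignment. The sketch reports records missing from the target, unexpected extra records, and records whose field values differ.

```python
def reconcile(source, target, key="patient_id"):
    """Compare source records with migrated (target) records.

    Assumes `key` is unique within each list; if it is not, later rows
    silently overwrite earlier ones in the index below.
    """
    # Index both record sets by the (hypothetical) unique identifier.
    src = {row[key]: row for row in source}
    tgt = {row[key]: row for row in target}

    # Records that were lost or unexpectedly created during migration.
    missing = sorted(k for k in src if k not in tgt)
    extra = sorted(k for k in tgt if k not in src)

    # For records present on both sides, list the fields whose values differ.
    mismatched = {}
    for k in sorted(src.keys() & tgt.keys()):
        fields = sorted(
            f for f in src[k].keys() | tgt[k].keys()
            if src[k].get(f) != tgt[k].get(f)
        )
        if fields:
            mismatched[k] = fields

    return {"missing": missing, "extra": extra, "mismatched": mismatched}


if __name__ == "__main__":
    # Hypothetical sample data: record 2 is misspelled in the target,
    # record 3 was dropped, and record 4 appeared only in the target.
    source = [
        {"patient_id": 1, "medication": "metformin"},
        {"patient_id": 2, "medication": "lisinopril"},
        {"patient_id": 3, "medication": "atorvastatin"},
    ]
    target = [
        {"patient_id": 1, "medication": "metformin"},
        {"patient_id": 2, "medication": "lisinoprl"},
        {"patient_id": 4, "medication": "insulin"},
    ]
    print(reconcile(source, target))
    # {'missing': [3], 'extra': [4], 'mismatched': {2: ['medication']}}
```

Comparing records by key rather than only comparing row counts matters here: in the sample data both lists contain three rows, so a count check alone would report no problem even though one record was lost, one was added, and one was altered.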
