MN405 Data and Information Management Assignment 1 Solution - T1 2018

Added on 2021/05/31

Homework Assignment

AI Summary

This document presents a comprehensive solution to the MN405 Data and Information Management Assignment 1. It begins with database implementation, including the creation of tables and population of data, followed by SQL queries to retrieve and manipulate data. The solution then delves into relational database schema, identifying composite attributes and relationship cardinalities. Furthermore, the assignment explores big data concepts, providing an introduction to Hadoop and MapReduce, explaining their capabilities, limitations, and suitability for processing large datasets in a distributed environment. The document references several sources to support the presented information.

MN405 Data and Information Management

Assignment 1 (T1 2018)

Student ID:

Date:

Module Tutor:

Part B

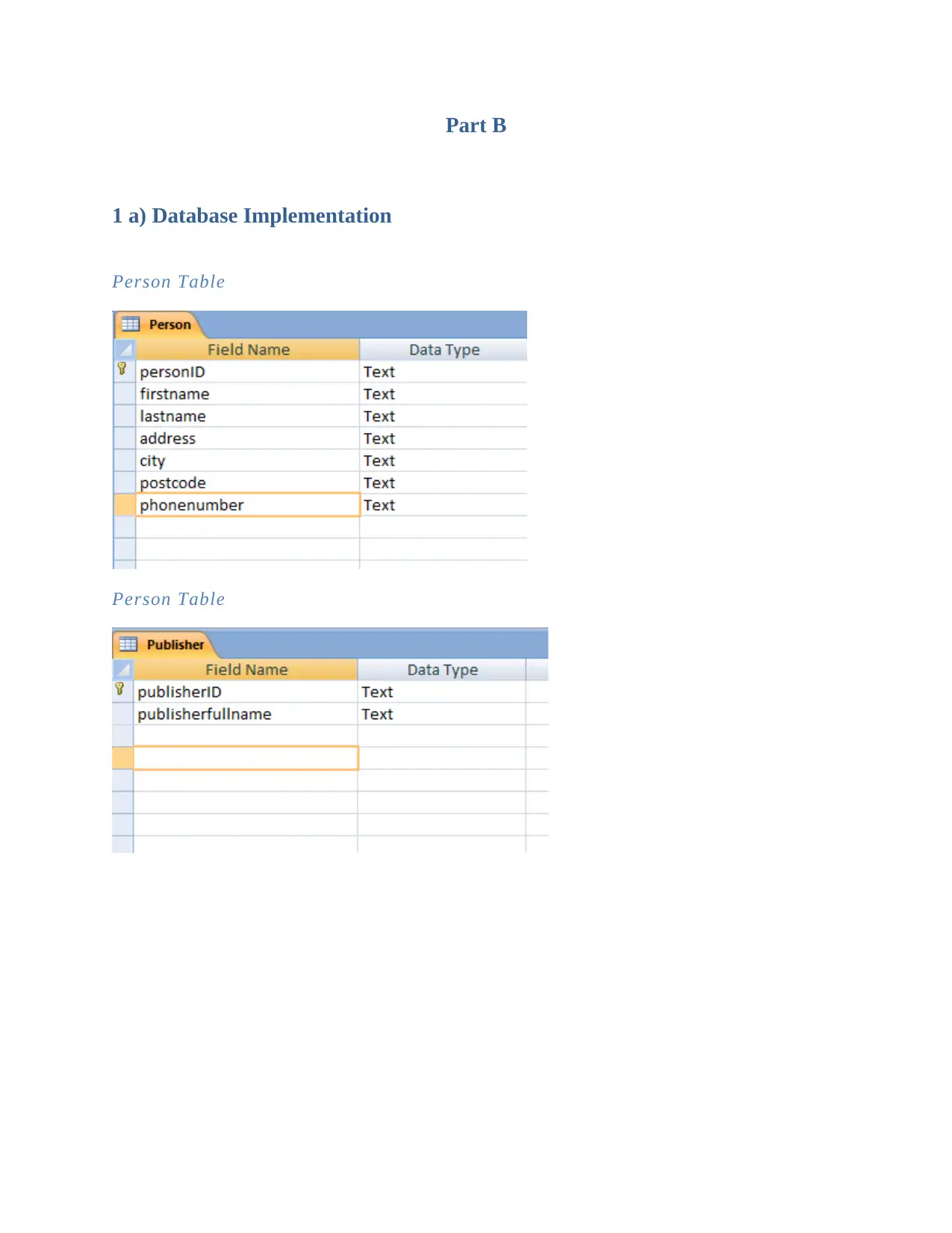

1 a) Database Implementation

Person Table

Book Table

Borrow Table

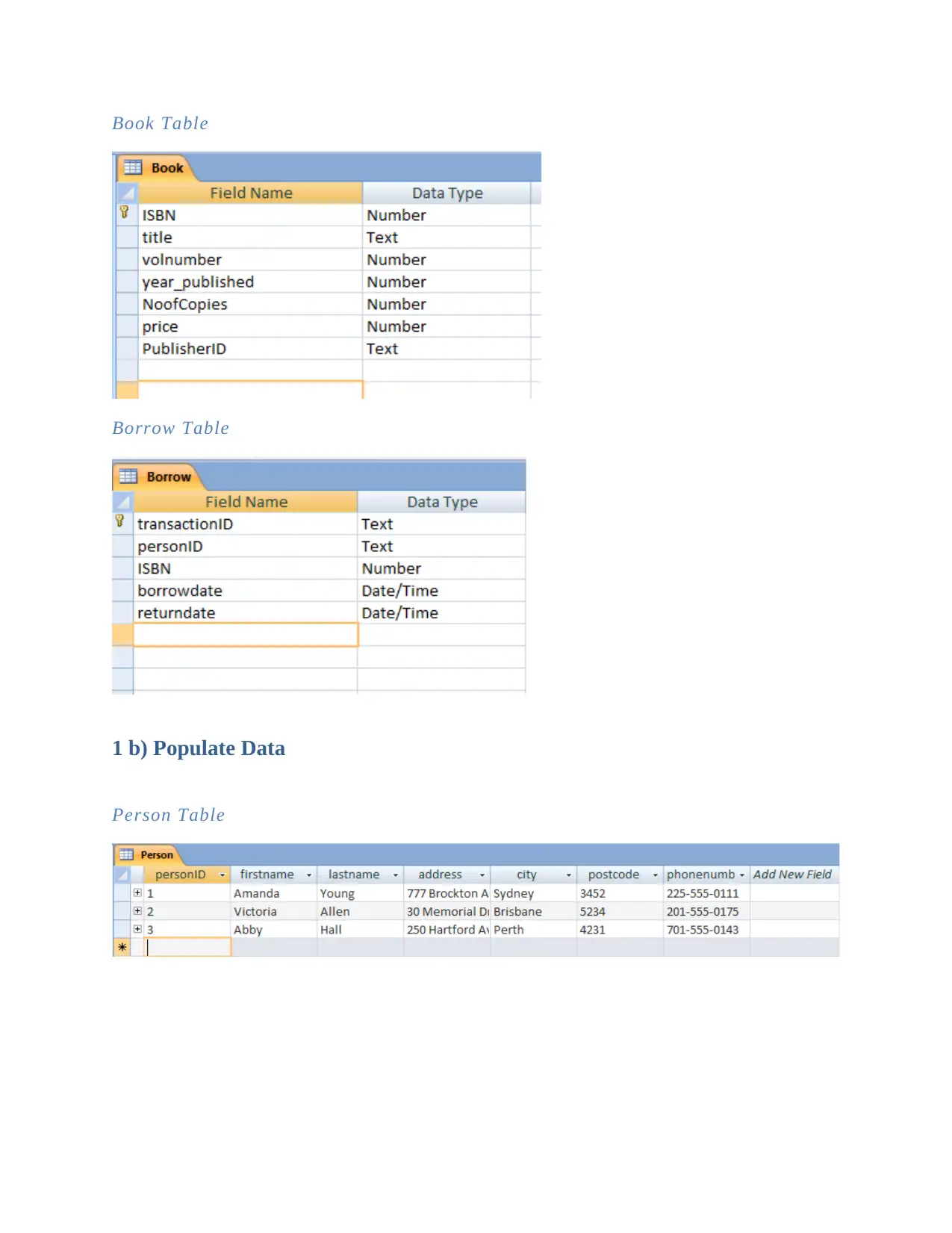

1 b) Populate Data

Person Table

Publisher Table

Book Table

Borrow Table

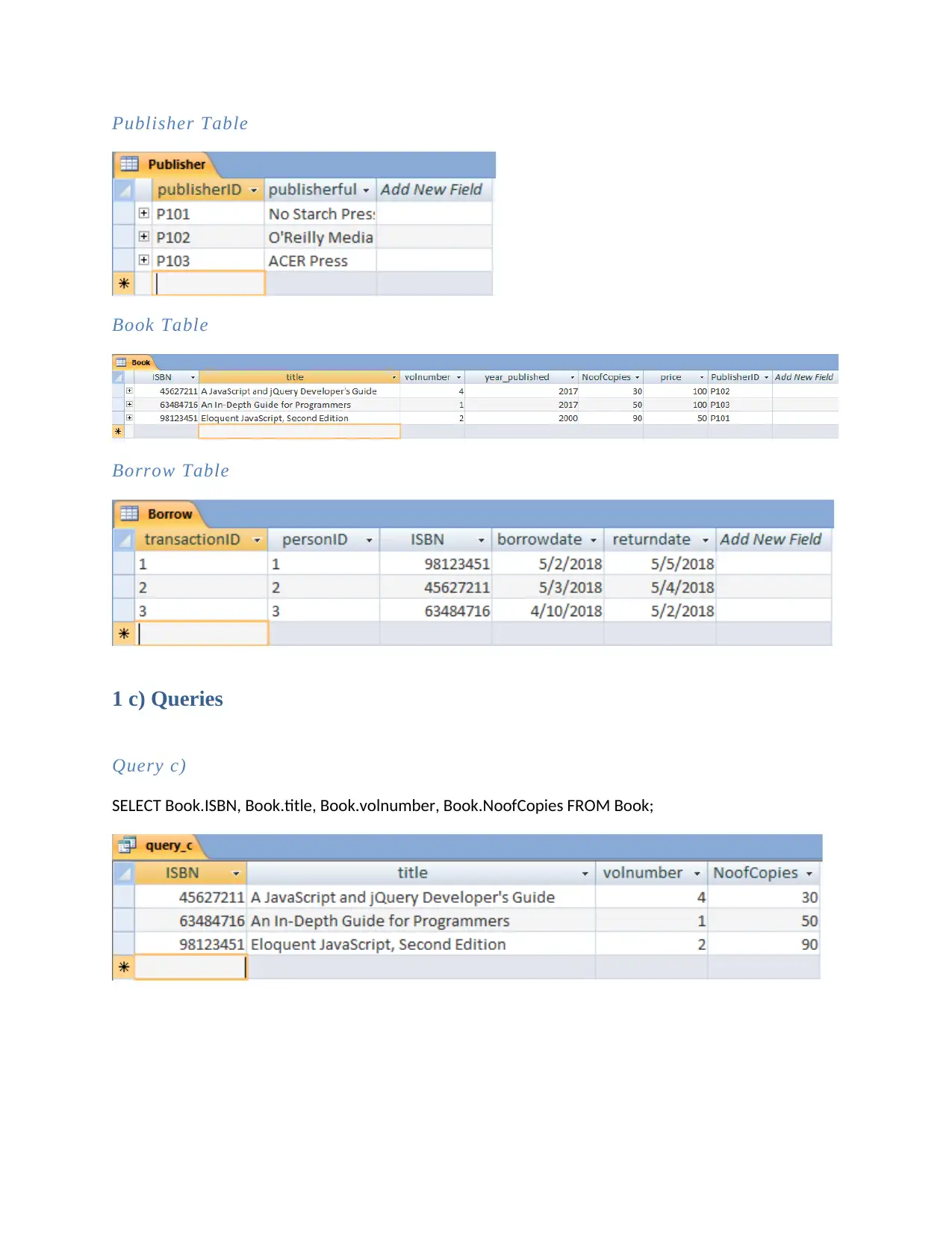

1 c) Queries

Query c)

SELECT Book.ISBN, Book.title, Book.volnumber, Book.NoofCopies FROM Book;

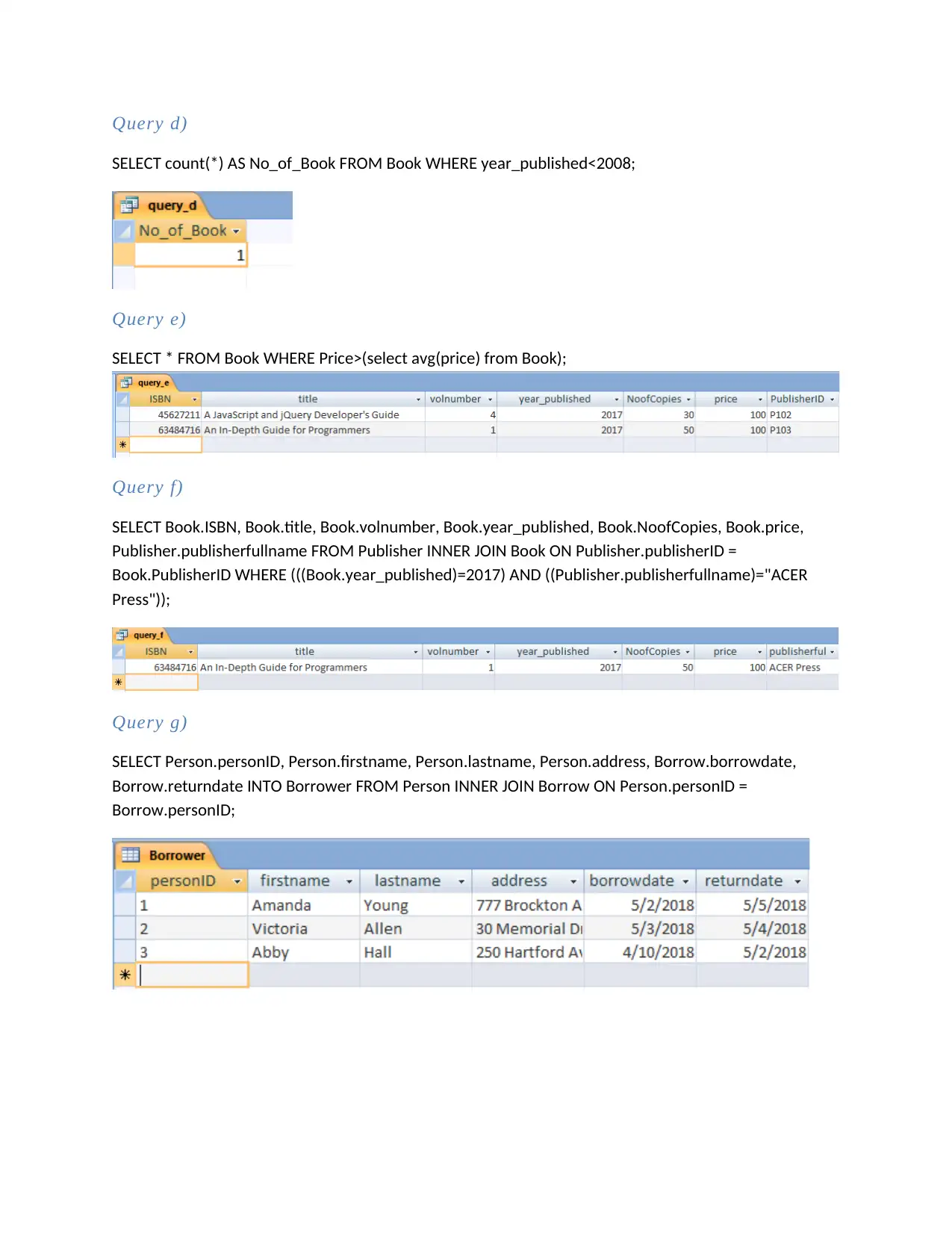

Query d)

SELECT count(*) AS No_of_Book FROM Book WHERE year_published<2008;

Query e)

SELECT * FROM Book WHERE Price>(select avg(price) from Book);
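Queries d) and e) can be tried outside Access with a small sketch like the one below. It uses Python's built-in `sqlite3` module as a stand-in database. The three sample rows are hypothetical, and only the column names are taken from the queries above.

```python
import sqlite3

# In-memory database with hypothetical sample rows (not the assignment's data).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE Book (ISBN TEXT PRIMARY KEY, title TEXT, "
    "year_published INTEGER, price REAL)"
)
conn.executemany(
    "INSERT INTO Book VALUES (?, ?, ?, ?)",
    [
        ("111", "Databases", 2005, 40.0),
        ("222", "Networks", 2007, 60.0),
        ("333", "Big Data", 2017, 80.0),
    ],
)

# Query d): count the books published before 2008.
books_before_2008 = conn.execute(
    "SELECT count(*) AS No_of_Book FROM Book WHERE year_published < 2008"
).fetchone()[0]

# Query e): books priced above the average price (the sample average is 60.0).
above_average = conn.execute(
    "SELECT title FROM Book WHERE price > (SELECT avg(price) FROM Book)"
).fetchall()

print(books_before_2008)
print(above_average)
```

The subquery in query e) is evaluated once for the whole table, so a book priced exactly at the average is not returned.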

Query f)

SELECT Book.ISBN, Book.title, Book.volnumber, Book.year_published, Book.NoofCopies, Book.price, Publisher.publisherfullname FROM Publisher INNER JOIN Book ON Publisher.publisherID = Book.PublisherID WHERE Book.year_published = 2017 AND Publisher.publisherfullname = "ACER Press";

Query g)

SELECT Person.personID, Person.firstname, Person.lastname, Person.address, Borrow.borrowdate, Borrow.returndate INTO Borrower FROM Person INNER JOIN Borrow ON Person.personID = Borrow.personID;
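The join in query f) and the table-creating query g) can be sketched in the same way. `SELECT ... INTO` is the Access form; SQLite does not support it, so the sketch swaps in the equivalent `CREATE TABLE ... AS SELECT`. All rows and the reduced column lists are hypothetical and serve only to illustrate the queries.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Publisher (publisherID INTEGER PRIMARY KEY, publisherfullname TEXT);
CREATE TABLE Book (ISBN TEXT PRIMARY KEY, title TEXT, year_published INTEGER,
                   PublisherID INTEGER REFERENCES Publisher(publisherID));
CREATE TABLE Person (personID INTEGER PRIMARY KEY, firstname TEXT, lastname TEXT);
CREATE TABLE Borrow (personID INTEGER REFERENCES Person(personID),
                     borrowdate TEXT, returndate TEXT);
-- Hypothetical sample data.
INSERT INTO Publisher VALUES (1, 'ACER Press'), (2, 'Other House');
INSERT INTO Book VALUES ('111', 'Old Title', 2010, 1),
                        ('222', 'New Title', 2017, 1),
                        ('333', 'Rival Title', 2017, 2);
INSERT INTO Person VALUES (1, 'Ann', 'Lee'), (2, 'Bob', 'Ray');
INSERT INTO Borrow VALUES (1, '2018-04-01', '2018-04-15');
""")

# Query f): books published in 2017 by ACER Press.
acer_2017 = conn.execute(
    "SELECT Book.title, Publisher.publisherfullname "
    "FROM Publisher INNER JOIN Book ON Publisher.publisherID = Book.PublisherID "
    "WHERE Book.year_published = 2017 "
    "AND Publisher.publisherfullname = 'ACER Press'"
).fetchall()

# Query g): SQLite equivalent of SELECT ... INTO Borrower.
conn.execute(
    "CREATE TABLE Borrower AS "
    "SELECT Person.personID, Person.firstname, Person.lastname, "
    "Borrow.borrowdate, Borrow.returndate "
    "FROM Person INNER JOIN Borrow ON Person.personID = Borrow.personID"
)
borrowers = conn.execute("SELECT personID, firstname FROM Borrower").fetchall()

print(acer_2017)
print(borrowers)
```

Because the join is an inner join, a person with no row in Borrow (Bob in the sample data) does not appear in the new Borrower table.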

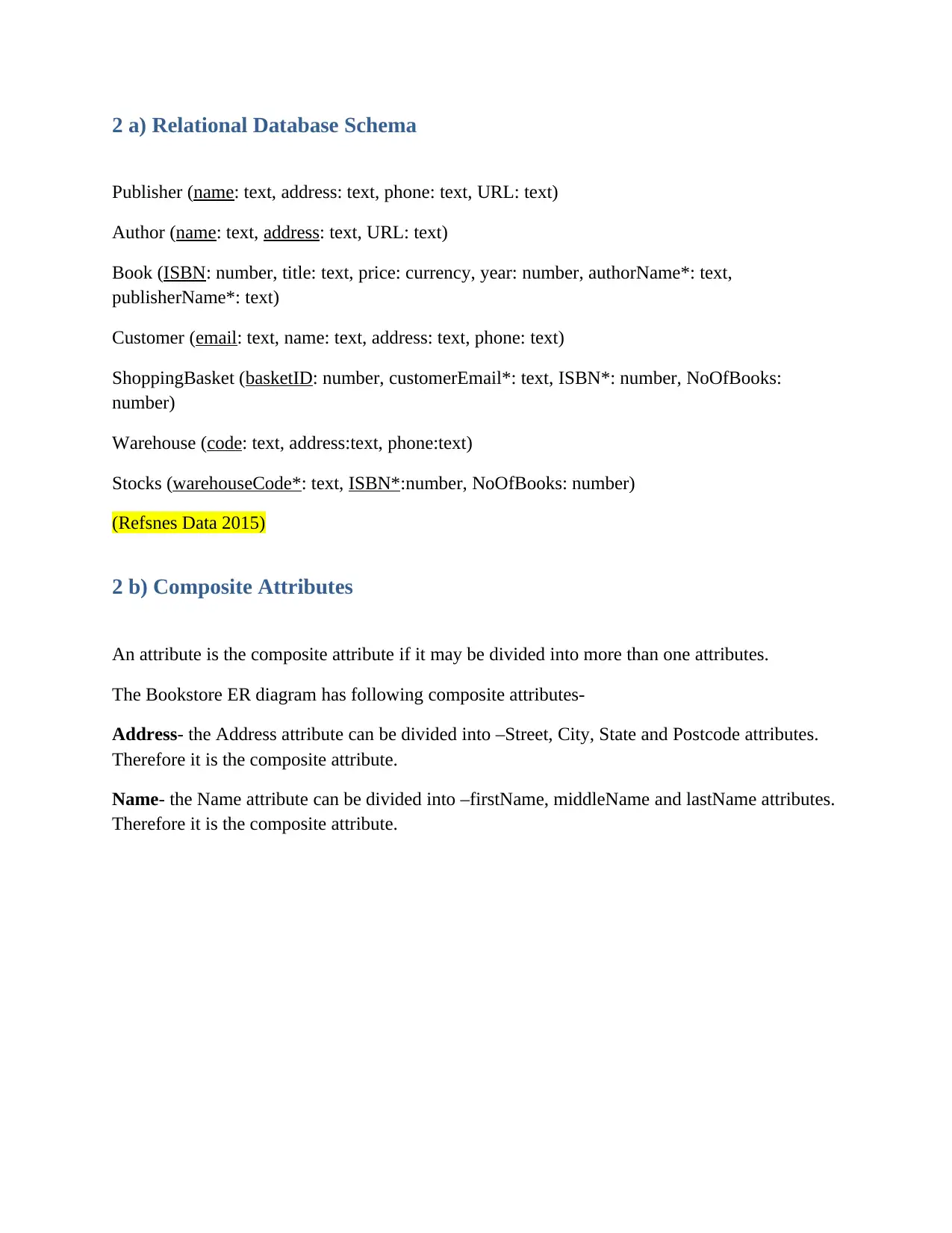

2 a) Relational Database Schema

Publisher (name: text, address: text, phone: text, URL: text)

Author (name: text, address: text, URL: text)

Book (ISBN: number, title: text, price: currency, year: number, authorName*: text, publisherName*: text)

Customer (email: text, name: text, address: text, phone: text)

ShoppingBasket (basketID: number, customerEmail*: text, ISBN*: number, NoOfBooks: number)

Warehouse (code: text, address: text, phone: text)

Stocks (warehouseCode*: text, ISBN*: number, NoOfBooks: number)

(Refsnes Data 2015)
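As a rough illustration, part of this schema could be declared as follows using Python's `sqlite3` module. The sketch assumes that an asterisk marks a foreign key and that `name` is the primary key of Publisher and Author, neither of which the schema states explicitly. The data types are SQLite approximations of text, number and currency, and the sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite enforces foreign keys only when this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
CREATE TABLE Publisher (name TEXT PRIMARY KEY, address TEXT, phone TEXT, URL TEXT);
CREATE TABLE Author (name TEXT PRIMARY KEY, address TEXT, URL TEXT);
CREATE TABLE Book (
    ISBN INTEGER PRIMARY KEY,
    title TEXT,
    price REAL,
    year INTEGER,
    authorName TEXT REFERENCES Author(name),        -- assumed foreign key
    publisherName TEXT REFERENCES Publisher(name)   -- assumed foreign key
);
""")

# Hypothetical rows: a book whose author and publisher both exist.
conn.execute("INSERT INTO Author VALUES ('A. Writer', '1 Main St', NULL)")
conn.execute("INSERT INTO Publisher VALUES ('ACER Press', NULL, NULL, NULL)")
conn.execute(
    "INSERT INTO Book VALUES (1001, 'Sample', 25.0, 2017, 'A. Writer', 'ACER Press')"
)

# A book that names an unknown author violates the foreign key and is rejected.
try:
    conn.execute(
        "INSERT INTO Book VALUES (1002, 'Orphan', 10.0, 2018, 'Nobody', 'ACER Press')"
    )
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True

print(orphan_rejected)
```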

2 b) Composite Attributes

An attribute is a composite attribute if it can be divided into two or more simpler attributes.

The Bookstore ER diagram has the following composite attributes:

Address: the Address attribute can be divided into Street, City, State and Postcode attributes. Therefore it is a composite attribute.

Name: the Name attribute can be divided into firstName, middleName and lastName attributes. Therefore it is a composite attribute.
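One way to picture a composite attribute is as a nested record. The sketch below models Address and Name as Python dataclasses inside a Customer record. The class names, field spellings and sample values are illustrative assumptions, not part of the ER diagram.

```python
from dataclasses import dataclass


@dataclass
class Address:
    # Components of the composite Address attribute.
    street: str
    city: str
    state: str
    postcode: str


@dataclass
class Name:
    # Components of the composite Name attribute.
    first_name: str
    middle_name: str
    last_name: str


@dataclass
class Customer:
    email: str          # simple (atomic) attribute
    name: Name          # composite attribute
    address: Address    # composite attribute


# Hypothetical customer used only to show access to a component.
customer = Customer(
    email="jo@example.com",
    name=Name("Jo", "K.", "Smith"),
    address=Address("1 High St", "Melbourne", "VIC", "3000"),
)
city = customer.address.city
print(city)
```

In a relational table the components would normally become separate columns, since a single column holds one atomic value.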

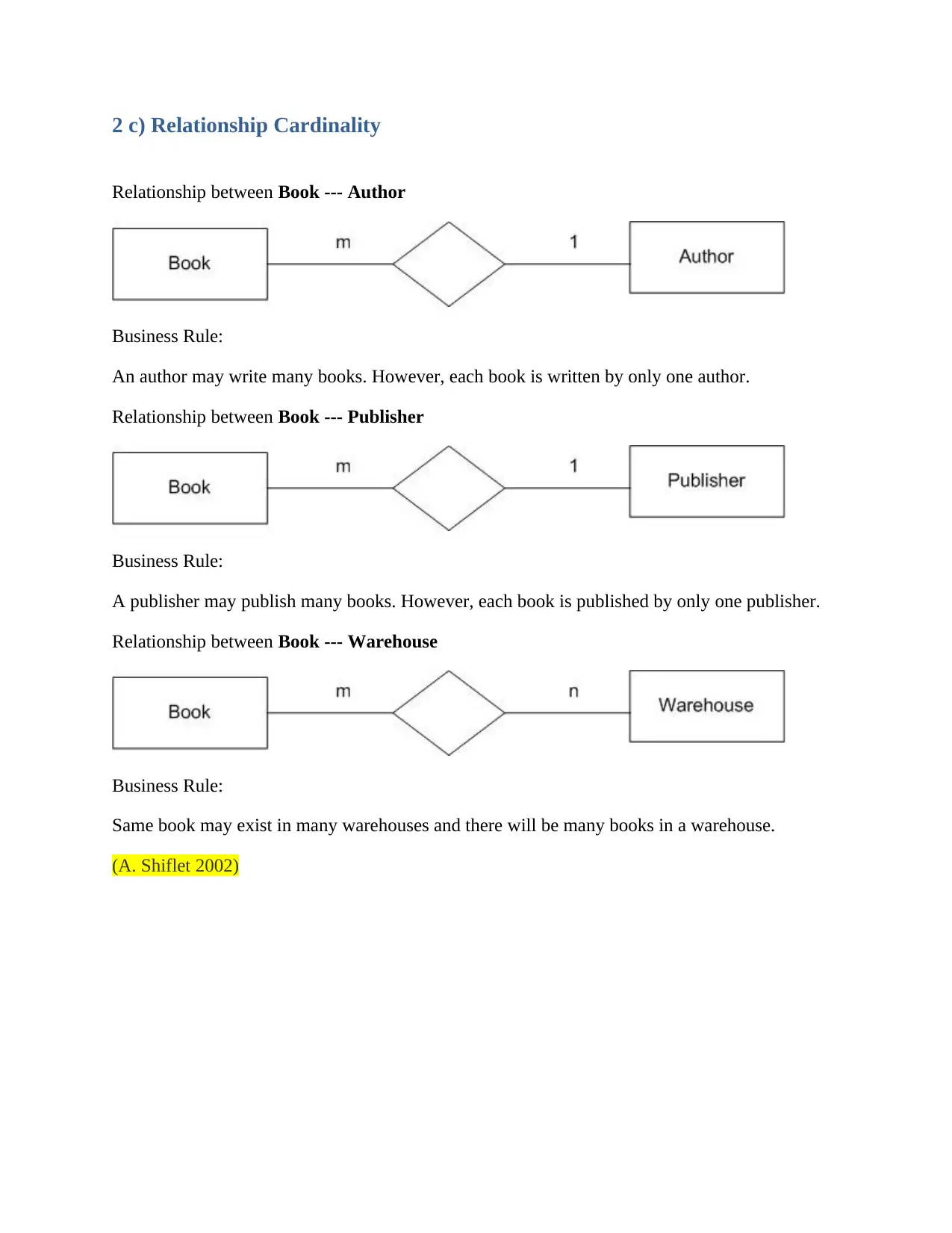

2 c) Relationship Cardinality

Relationship between Book --- Author

Business Rule:

An author may write many books. However, each book is written by only one author. This is a one-to-many relationship from Author to Book.

Relationship between Book --- Publisher

Business Rule:

A publisher may publish many books. However, each book is published by only one publisher. This is a one-to-many relationship from Publisher to Book.

Relationship between Book --- Warehouse

Business Rule:

The same book may be stocked in many warehouses, and a warehouse may stock many books. This is a many-to-many relationship.

(A. Shiflet 2002)
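The many-to-many rule between Book and Warehouse is usually implemented with a linking table, which is the role the Stocks relation plays in 2 a). The sketch below shows this with Python's `sqlite3` module. The composite primary key on Stocks and all sample rows are assumptions made for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Book (ISBN INTEGER PRIMARY KEY, title TEXT);
CREATE TABLE Warehouse (code TEXT PRIMARY KEY, address TEXT);
-- Linking table: one row per (warehouse, book) combination.
CREATE TABLE Stocks (
    warehouseCode TEXT REFERENCES Warehouse(code),
    ISBN INTEGER REFERENCES Book(ISBN),
    NoOfBooks INTEGER,
    PRIMARY KEY (warehouseCode, ISBN)   -- assumed composite key
);
-- Hypothetical sample data.
INSERT INTO Book VALUES (1001, 'Book A'), (1002, 'Book B');
INSERT INTO Warehouse VALUES ('W1', 'North'), ('W2', 'South');
INSERT INTO Stocks VALUES ('W1', 1001, 5), ('W2', 1001, 3), ('W1', 1002, 7);
""")

# One book held in many warehouses.
warehouses_for_book = [row[0] for row in conn.execute(
    "SELECT warehouseCode FROM Stocks WHERE ISBN = 1001 ORDER BY warehouseCode"
)]

# One warehouse holding many books.
books_in_w1 = [row[0] for row in conn.execute(
    "SELECT ISBN FROM Stocks WHERE warehouseCode = 'W1' ORDER BY ISBN"
)]

print(warehouses_for_book)
print(books_in_w1)
```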

3 a) Hadoop

Introduction

Hadoop is an open-source Apache framework used to store and process big data. It runs on clusters of computers in a distributed environment. Big data is not just a large volume of data; it is a complete subject area that includes many tools, frameworks and techniques.

Big data sets are very large, and processing them with a traditional RDBMS such as Oracle or SQL Server is very difficult. To solve this problem, Google introduced a programming model named ‘MapReduce’ for processing big data.

The ‘MapReduce’ algorithm divides a job into small tasks, assigns those tasks to different computers, collects the results from those computers and combines them to produce the final result for the user.

Hadoop uses the ‘MapReduce’ algorithm to process big data.
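The divide, distribute and combine idea can be sketched on a single machine. In the example below, threads from Python's standard library stand in for the cluster's computers, which is a simplification: Hadoop itself distributes work across separate machines. The helper `split` and the sample data are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, parts):
    """Divide a list into at most `parts` chunks of nearly equal size."""
    size = -(-len(data) // parts)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


numbers = list(range(1, 101))   # the "big" job: add up 1..100
chunks = split(numbers, 4)      # divide the job into small tasks

# Assign each small task to a worker (a thread stands in for a computer).
with ThreadPoolExecutor(max_workers=4) as pool:
    partial_sums = list(pool.map(sum, chunks))

# Collect the partial results and combine them into the final result.
total = sum(partial_sums)
print(partial_sums, total)
```

Each worker only sees its own chunk, so the tasks are independent and their results can be combined in any order.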

Capabilities

- Hadoop is written mainly in Java, so it runs on any platform that provides a Java runtime.

- Servers can be added to or removed from the cluster dynamically, and Hadoop continues to work without interruption.

- Hadoop does not depend on the hardware to detect failures; the Hadoop library detects and handles them itself.

- Hadoop automatically distributes the data and the work across the machines in the cluster, so that they are processed in parallel.

- Hadoop gives users high-throughput access to large data sets.

- It runs on inexpensive commodity hardware.

Limitations

- Hadoop is not suitable for small data sets.

- It supports only batch processing.

- Processing in Hadoop is slow because MapReduce writes intermediate data to disk between stages.

- It is not suitable for real-time data processing, because it is designed for batch processing of large data sets.

- It is not efficient for iterative processing.

Conclusion

Hadoop is a strong choice for big data processing. It is not suitable for small data sets, but it is well suited to large ones. It uses the MapReduce algorithm in a distributed environment on clusters of machines. (Tutorialspoint.com 2018)

3 b) MapReduce

Introduction

MapReduce is a software framework for writing applications that process very large amounts of data in parallel on large clusters.

MapReduce decomposes a job into smaller tasks and assigns them to computers that work in parallel. After the map tasks finish, the Reduce process takes the processed data from those computers, sorts it and produces the final output for the user. The Reduce process has three phases: Shuffle, Sort and Reduce. Hadoop uses the MapReduce algorithm.

MapReduce operates on <key, value> pairs. It takes a set of <key, value> pairs as input and produces a set of <key, value> pairs as output.
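The flow of <key, value> pairs can be illustrated with the classic word-count example. The sketch below is plain Python running on one machine, not the Hadoop API; the function names and input lines are made up for illustration.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a <word, 1> pair for every word in one line of input."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle_and_sort(pairs):
    """Shuffle and sort: group the values by key, then order the keys."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return sorted(groups.items())


def reduce_phase(key, values):
    """Reduce: combine all values for one key into a single <word, count> pair."""
    return key, sum(values)


lines = ["big data needs big clusters", "data moves to clusters"]

# Each line could be mapped on a different computer; here it is done in a loop.
mapped = [pair for line in lines for pair in map_phase(line)]
result = [reduce_phase(key, values) for key, values in shuffle_and_sort(mapped)]
print(result)
```

Both the input to the reduce step and its output are <key, value> pairs, which is what lets the framework route all values for one key to the same reducer.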

Capabilities

- MapReduce is highly scalable. It runs on Hadoop, which can handle large data sets across multiple servers.

- It is very cost-effective.

- It is very flexible. Companies can work with structured or unstructured data. It can read many types of data, and the result may be of a different type.

- Security is strong. MapReduce works with HBase security, which allows access only to authorized users.

- It is a simple programming model that works efficiently.

- It is resilient. Data sent to a node is also copied to other locations on the network as a backup. If data is lost, it can be recovered from those copies.

Limitations

- Not suitable for small data sets.

- Not suitable for real-time processing.

- Not suitable for graph processing.

- Slow processing speed.

- It supports only batch processing.

- Not efficient for iterative processing.

- MapReduce is not easy to use.

- MapReduce does not cache intermediate data in memory, which decreases performance.

(Apache Software Foundation 2017)

Conclusion

MapReduce is very popular nowadays because of its flexibility and efficiency. It can access and process large data sets very efficiently. It is also fault tolerant.

References

A. Shiflet, Three-Level Architecture, 2002. [Online]. Available: https://wofford-ecs.org/DataAndVisualization/ermodel/material.htm. [Accessed: May 7, 2018]

Refsnes Data, SQL Server Data Types for various DBs, 2015. [Online]. Available: http://www.w3schools.com/sql/sql_datatypes.asp. [Accessed: May 7, 2018]

Tutorialspoint.com, Hadoop Tutorial, 2018. [Online]. Available: https://www.tutorialspoint.com/hadoop/index.htm. [Accessed: May 7, 2018]

Apache Software Foundation, MapReduce Tutorial, 2017. [Online]. Available: https://hadoop.apache.org/docs/r2.8.0/hadoop-mapreduce-client/hadoop-mapreduce-client-core/MapReduceTutorial.html. [Accessed: May 7, 2018]
