SIT103 Data and Information Management Assignment Solution - 2018



Running head: DATA AND INFORMATION MANAGEMENT

Data and Information Management

Name of the Student

Name of the University

Author’s Note


Q1. (a) The four advantages of a DBMS are as follows:

Reducing Data Redundancy: A file-based data management system stores many separate files in different locations, often holding copies of the same data, which makes it difficult to find a particular file when it is needed. A database management system stores the data in a single place, so duplication is reduced and modifications can be made easily.

Sharing data: Users with proper authorization can easily share data through the database system (Pappas et al. 2014).

Data Integrity: The data held in the database is kept accurate and consistent.

Data Security: Only authorized users are allowed to access the database system.

(b) The four functions of a DBMS are as follows:

Data Definition: The DBMS provides facilities for defining the structure of the data, including changes to the record structure and to field types and sizes.

Data Manipulation: Once the data structure is defined, the data it holds can be modified, including by insertion and deletion (Mariotti et al. 2018).

Data Security and Integrity: The DBMS enforces constraints that protect the security and integrity of the data stored by an application.

Data Recovery and Concurrency: The DBMS can recover data after a system failure, and it controls concurrent access so that simultaneous users do not corrupt each other's updates.
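The four functions above can be illustrated with a minimal sketch using SQLite through Python's standard sqlite3 module. The table and column names are hypothetical. SQLite has no user accounts, so only the integrity half of "Data Security and Integrity" is shown (a CHECK constraint), and rolling back an uncommitted transaction stands in for recovery; crash recovery from the journal is not demonstrated.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Data definition: create a table, then modify its record structure.
conn.execute("""
    CREATE TABLE student (
        s_id INTEGER PRIMARY KEY,
        name TEXT NOT NULL,
        age  INTEGER CHECK (age > 0)
    )
""")
conn.execute("ALTER TABLE student ADD COLUMN email TEXT")

# Data manipulation: insert, update and delete rows.
conn.execute("INSERT INTO student (s_id, name, age) VALUES (101, 'Adam', 15)")
conn.execute("INSERT INTO student (s_id, name, age) VALUES (102, 'Eve', 16)")
conn.execute("UPDATE student SET email = 'adam@example.com' WHERE s_id = 101")
conn.execute("DELETE FROM student WHERE s_id = 102")
conn.commit()

# Integrity: the CHECK constraint rejects an invalid age.
try:
    conn.execute("INSERT INTO student (s_id, name, age) VALUES (103, 'Bob', -1)")
    integrity_ok = False
except sqlite3.IntegrityError:
    integrity_ok = True

# Recovery (simplified): an uncommitted change is undone by a rollback.
conn.execute("DELETE FROM student")
conn.rollback()

remaining = conn.execute("SELECT s_id, name, email FROM student").fetchall()
print(integrity_ok, remaining)  # True [(101, 'Adam', 'adam@example.com')]
```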

Q2. (a) Big data refers to data sets so large and complex that they are difficult to handle with traditional data-processing systems.

(b) The following are challenges that big data poses for database technology:


Huge data volume: Data volumes keep increasing because data arrives from many different sources, which makes database management increasingly complex.

Uncertainty of data management: A wide range of data management tools, including NoSQL frameworks, has emerged that differ from traditional relational DBMSs (Lazer et al. 2014). The variety of competing tools creates uncertainty about how data should be managed.

Getting data into the big data structure: Big data management involves processing and analyzing large amounts of data, so it is difficult to manage such volumes at once with conventional data management tools.

(c) The following are newer technologies used with big data:

Predictive analytics: This technology allows predictive models to be evaluated, optimized and deployed by analyzing big data sources, in order to improve business performance.

NoSQL databases: NoSQL databases are a newer class of DBMS that differ from traditional relational databases, typically by not requiring a fixed table schema (Wu et al. 2014).

Stream analytics: This technology filters, aggregates and enriches high-throughput data from sources in any data format.

Data Virtualization: This technology delivers data from several sources, including big data sources such as Hadoop and other distributed data stores.
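The filter, aggregate and enrich steps of stream analytics can be sketched as a chain of Python generators, so that each reading is processed as it arrives rather than after the whole data set is stored. This is a toy illustration only, not a stream analytics engine; the sensor readings, the valid range of 0 to 100, the window size and the SENSOR_SITES lookup table are all hypothetical.

```python
from collections import deque

# Hypothetical reference data used for enrichment.
SENSOR_SITES = {"s1": "Geelong", "s2": "Burwood"}


def filter_valid(events):
    """Filter: drop readings outside a plausible range (assumed 0 to 100)."""
    for sensor, value in events:
        if 0.0 <= value <= 100.0:
            yield sensor, value


def sliding_average(events, window=3):
    """Aggregate: running mean over the last `window` valid readings."""
    recent = deque(maxlen=window)
    for sensor, value in events:
        recent.append(value)
        yield sensor, value, sum(recent) / len(recent)


def enrich(events):
    """Enrich: attach the site name from the reference table."""
    for sensor, value, avg in events:
        yield {"sensor": sensor, "site": SENSOR_SITES.get(sensor, "unknown"),
               "value": value, "avg": avg}


# Each reading flows through all three stages one at a time.
stream = [("s1", 10.0), ("s2", 250.0), ("s1", 20.0), ("s2", 30.0), ("s3", 40.0)]
results = list(enrich(sliding_average(filter_valid(stream))))
for r in results:
    print(r)
```

The out-of-range reading from s2 is dropped by the filter, and s3 is enriched with "unknown" because it has no entry in the lookup table.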

Q3. (a) (i) A transaction is an action, or a series of actions, performed by a single user or application program to read or update the contents of the database (Suh, Snodgrass and Currim 2017).

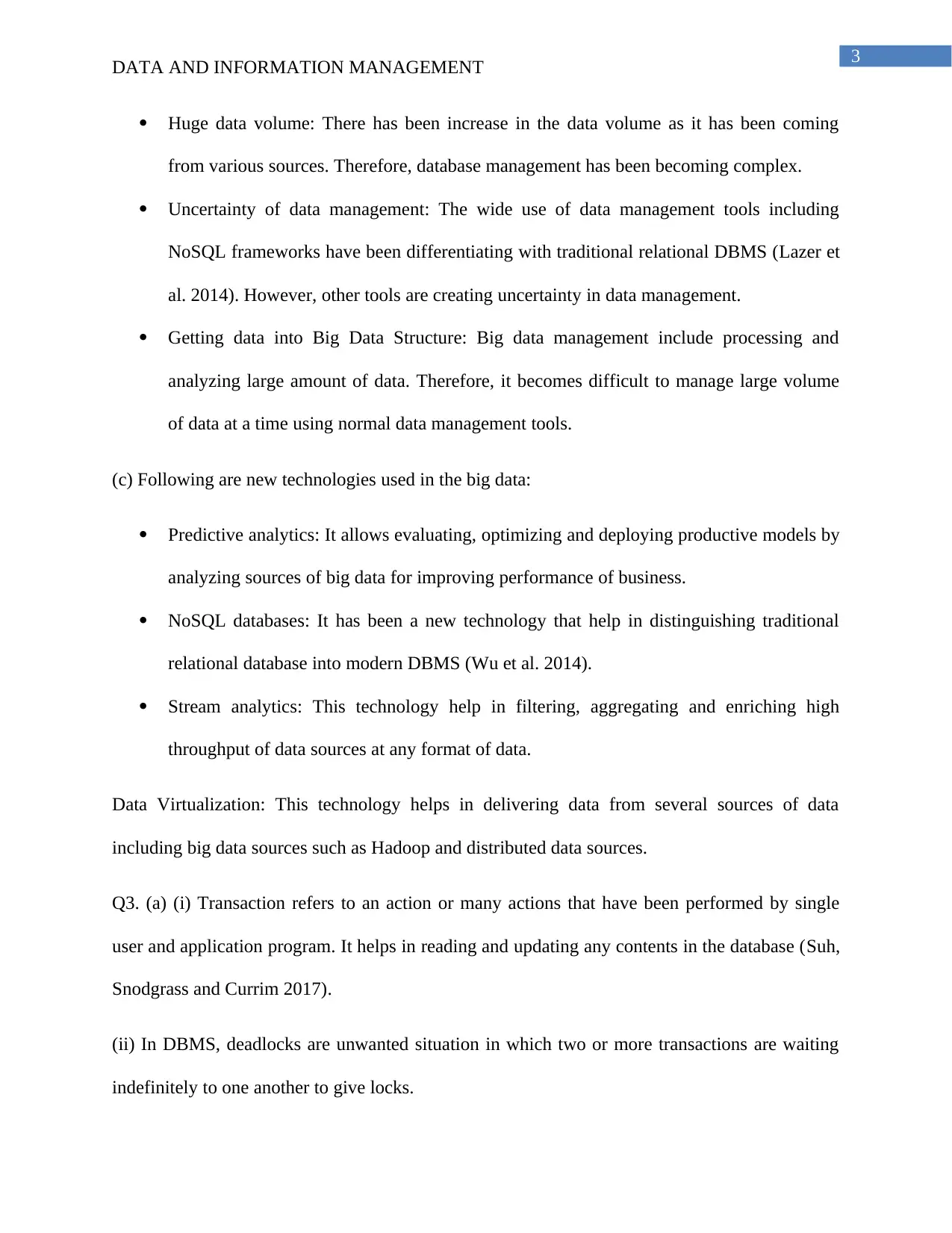

(ii) In a DBMS, a deadlock is an undesirable situation in which two or more transactions wait indefinitely for one another to release locks.


Figure 1: Deadlocks in DBMS

(Source: Created by author)
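One common way for a DBMS to detect the situation in Figure 1 is a wait-for graph, in which an edge from T1 to T2 means that T1 is waiting for a lock held by T2; a deadlock exists exactly when the graph contains a cycle, and the DBMS can then abort one transaction in the cycle. The following is a minimal sketch of that cycle check using depth-first search. The dictionary representation and the function name find_deadlock are assumptions for illustration, not how any particular DBMS implements detection.

```python
def find_deadlock(wait_for):
    """Return the transactions forming a cycle in the wait-for graph, or None.

    wait_for maps each transaction to the transactions whose locks it is waiting for.
    """
    WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on current path, finished
    colour = {t: WHITE for t in wait_for}
    stack = []

    def visit(t):
        colour[t] = GREY
        stack.append(t)
        for u in wait_for.get(t, ()):
            state = colour.get(u, WHITE)
            if state == GREY:  # back edge: u is already on the current path
                return stack[stack.index(u):]
            if state == WHITE:
                cycle = visit(u)
                if cycle:
                    return cycle
        stack.pop()
        colour[t] = BLACK
        return None

    for t in list(wait_for):
        if colour[t] == WHITE:
            cycle = visit(t)
            if cycle:
                return cycle
    return None


# Two transactions each waiting for a lock the other holds.
print(find_deadlock({"T1": ["T2"], "T2": ["T1"]}))            # ['T1', 'T2']
# T1 and T3 wait for T2, but T2 waits for nobody: no deadlock.
print(find_deadlock({"T1": ["T2"], "T2": [], "T3": ["T2"]}))  # None
```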

(b) The properties of a transaction are as follows:

Atomicity: Each transaction is treated as a single unit: either all of its operations are executed or none are.

Consistency: The database must be in a consistent state after every transaction; no transaction may have adverse effects on the data stored in the database.

Isolation: Transactions that run concurrently must not interfere with one another; each transaction executes as though it were the only one running in the DBMS.

Durability: Once a transaction has committed, its updates must persist in the database even if the system fails or restarts.
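Atomicity and consistency can be seen in a small sketch of a funds transfer, written with Python's standard sqlite3 module. The account table, its CHECK constraint and the transfer function are hypothetical. The credit is applied before the debit on purpose: when the debit violates the constraint, the already-applied credit is rolled back too, so the transaction leaves no partial effect.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE account (id TEXT PRIMARY KEY, balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.execute("INSERT INTO account VALUES ('A', 100), ('B', 50)")
conn.commit()


def transfer(conn, src, dst, amount):
    """Move `amount` from src to dst as one transaction; return True if committed."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.execute("UPDATE account SET balance = balance + ? WHERE id = ?", (amount, dst))
            conn.execute("UPDATE account SET balance = balance - ? WHERE id = ?", (amount, src))
        return True
    except sqlite3.IntegrityError:
        return False


ok1 = transfer(conn, "A", "B", 30)   # succeeds
ok2 = transfer(conn, "A", "B", 500)  # debit violates the CHECK, so the credit is undone as well
balances = dict(conn.execute("SELECT id, balance FROM account ORDER BY id"))
print(ok1, ok2, balances)  # True False {'A': 70, 'B': 80}
```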

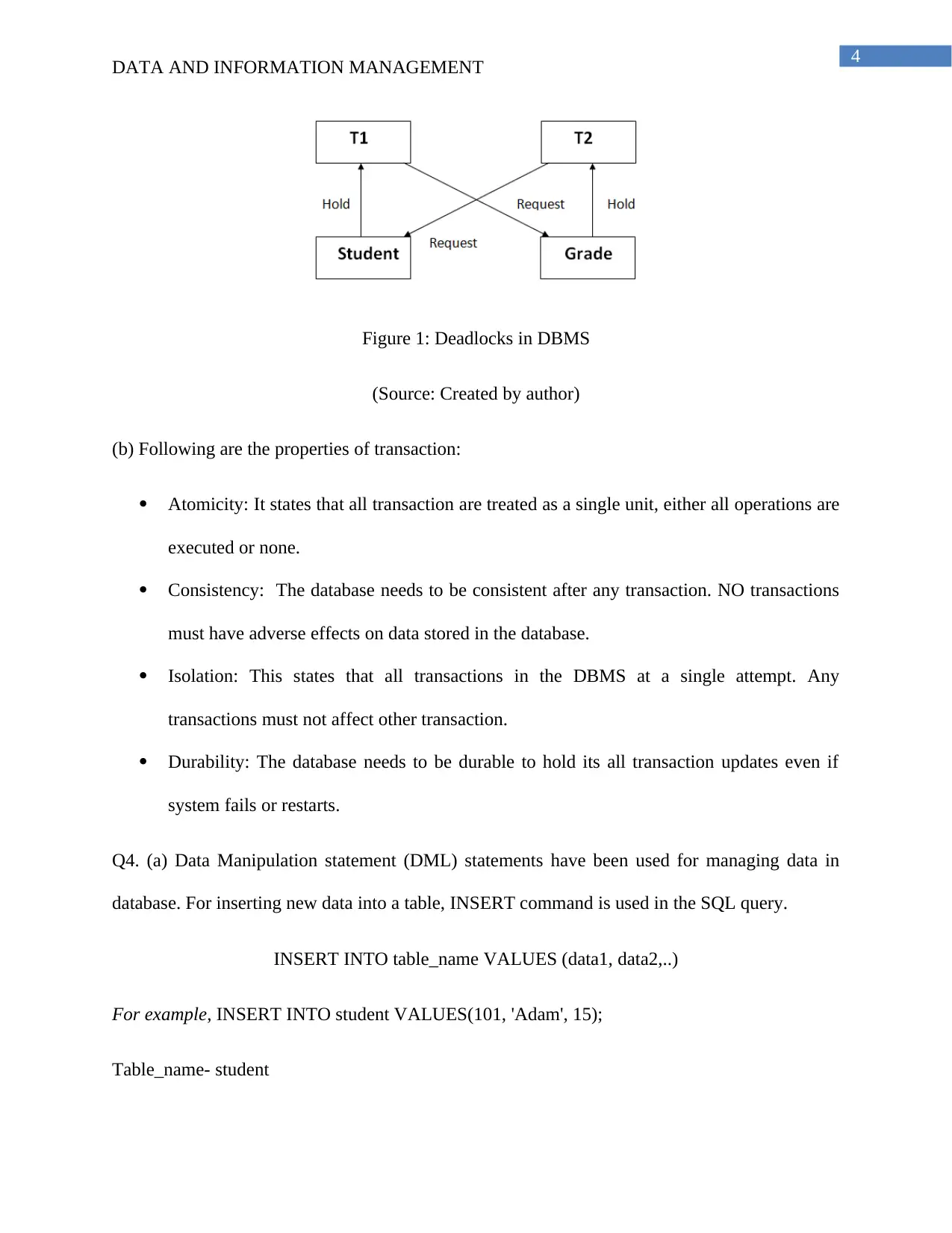

Q4. (a) Data Manipulation Language (DML) statements are used to manage the data in a database. The INSERT command is used to add new data to a table:

INSERT INTO table_name VALUES (data1, data2, ...);

For example: INSERT INTO student VALUES (101, 'Adam', 15);

This adds the following row to the table student:


s_id   Name   age
101    Adam   15
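The INSERT example above can be run against SQLite through Python's standard sqlite3 module. This sketch assumes a student table with the three columns shown; the second row (102, 'Beth', 16) is hypothetical. It also shows a safer variant that names the columns explicitly and passes the values as parameters, so the statement does not depend on the table's column order and values are never spliced into the SQL text.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE student (s_id INTEGER PRIMARY KEY, name TEXT, age INTEGER)")

# Form used in the text: values supplied in the table's column order.
conn.execute("INSERT INTO student VALUES (101, 'Adam', 15)")

# Safer form: name the columns and bind the values as parameters.
conn.execute("INSERT INTO student (s_id, name, age) VALUES (?, ?, ?)", (102, "Beth", 16))
conn.commit()

rows = conn.execute("SELECT s_id, name, age FROM student ORDER BY s_id").fetchall()
print(rows)  # [(101, 'Adam', 15), (102, 'Beth', 16)]
```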

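(b) The UPDATE statement changes existing data in a table. Its general form is:

UPDATE tableName
SET column1 = value1, column2 = value2, ...
WHERE filterColumn = filterValue;

For example:

BEGIN TRANSACTION;

UPDATE HumanResources.Department
SET Name = 'Information Technology'
WHERE DepartmentID = 11;

SELECT DepartmentID, Name, GroupName
FROM HumanResources.Department;

ROLLBACK;

These statements rename the department whose DepartmentID is 11 to 'Information Technology' and then display the table. The final ROLLBACK undoes the update, so the stored data is left unchanged; the BEGIN TRANSACTION is needed so that the ROLLBACK has an open transaction to undo.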
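The same update-then-rollback pattern can be tried in SQLite with Python's standard sqlite3 module. This is a sketch under assumptions: the schema-qualified HumanResources.Department is replaced by a plain, hypothetical department table with made-up sample rows. The rowcount shows how many rows the WHERE clause matched, which is a useful check that an UPDATE touched only the intended row.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical stand-in for HumanResources.Department.
conn.execute("CREATE TABLE department (department_id INTEGER PRIMARY KEY, name TEXT, group_name TEXT)")
conn.executemany(
    "INSERT INTO department VALUES (?, ?, ?)",
    [(11, "IT Services", "Administration"), (12, "Finance", "Administration")],
)
conn.commit()

# Python's sqlite3 opens a transaction implicitly before the UPDATE.
cur = conn.execute(
    "UPDATE department SET name = ? WHERE department_id = ?",
    ("Information Technology", 11),
)
updated = cur.rowcount  # rows matched by the WHERE clause
during = conn.execute("SELECT name FROM department WHERE department_id = 11").fetchone()[0]

conn.rollback()  # undo the uncommitted UPDATE, like the ROLLBACK in the example
after = conn.execute("SELECT name FROM department WHERE department_id = 11").fetchone()[0]
print(updated, during, after)  # 1 Information Technology IT Services
```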

(c) The SELECT command retrieves data from a database table. It is used to retrieve the data needed from an operational database. For example:

SELECT * FROM employee;

Here, * denotes all columns, so this query retrieves every row and column of the employee table.
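Beyond SELECT *, a query usually names the columns it needs and filters rows with WHERE. The following sketch runs both forms in SQLite through Python's standard sqlite3 module; the employee columns (emp_id, name, dept, salary) and the rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employee (emp_id INTEGER PRIMARY KEY, name TEXT, dept TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employee VALUES (?, ?, ?, ?)",
    [(1, "Ana", "IT", 70000), (2, "Ben", "HR", 55000), (3, "Cho", "IT", 82000)],
)

# Every column of every row, as in the example in the text.
all_rows = conn.execute("SELECT * FROM employee").fetchall()

# Only the needed columns and rows, highest salary first.
it_staff = conn.execute(
    "SELECT name, salary FROM employee WHERE dept = ? ORDER BY salary DESC", ("IT",)
).fetchall()

print(len(all_rows))  # 3
print(it_staff)       # [('Cho', 82000), ('Ana', 70000)]
```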


Q5. Gathering business rules: A business rule is a statement that imposes a constraint on some aspect of the database, such as the elements of a field specification. In database design, business rules determine which data may be stored in a given row of a table (Rawassizadeh et al. 2017), and the data must be useful in helping the business grow in the market. For example, a SHIP DATE cannot be earlier than the ORDER DATE for any given order.

This business rule is imposed on the Range of Values element of the field specification for the SHIP DATE field. It ensures that the SHIP DATE is meaningful in the context of a sales order.
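One way to enforce the SHIP DATE rule inside the database is a CHECK constraint, sketched here in SQLite through Python's standard sqlite3 module. The sales_order table and its columns are hypothetical. Dates are assumed to be stored as ISO 8601 text ('YYYY-MM-DD'), which compares correctly as plain strings, and a NULL ship date is allowed for orders that have not shipped yet.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE sales_order (
        order_id   INTEGER PRIMARY KEY,
        order_date TEXT NOT NULL,  -- ISO 8601 'YYYY-MM-DD'
        ship_date  TEXT,           -- NULL until the order ships
        CHECK (ship_date IS NULL OR ship_date >= order_date)
    )
""")


def try_insert(order_id, order_date, ship_date):
    """Insert one order and report whether the business rule accepted it."""
    try:
        with conn:
            conn.execute("INSERT INTO sales_order VALUES (?, ?, ?)",
                         (order_id, order_date, ship_date))
        return "accepted"
    except sqlite3.IntegrityError:
        return "rejected"


results = [
    try_insert(1, "2018-05-01", "2018-05-03"),  # ships after ordering
    try_insert(2, "2018-05-01", "2018-04-28"),  # ships before ordering: violates the rule
    try_insert(3, "2018-05-01", None),          # not shipped yet
]
print(results)  # ['accepted', 'rejected', 'accepted']
```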

E-R modelling: An entity-relationship model (ERM) is a theoretical and conceptual model that represents entities and the relationships between them. In software development, it is a database modeling technique that produces an abstract diagram of a relational database's design.

Relationship modelling: The relational model is an approach to database management that represents and manages data using a structure and language consistent with first-order predicate logic (Suh, Snodgrass and Currim 2017).

Normalization: Normalization is a technique for organizing tables so as to reduce data redundancy and undesirable dependencies (Singhal, Buckley and Mitra 2017). It divides larger tables into smaller ones and links them through relationships.
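Normalization and the link between the resulting tables can be sketched in SQLite with Python's standard sqlite3 module. The order_flat, customer and orders tables and their rows are hypothetical. In the flat table the customer's name and city are repeated on every order; splitting them into a customer table linked by a foreign key removes that repetition, and joining the two tables reproduces the original rows, so no information is lost. SQLite enforces foreign keys only after the PRAGMA shown.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces REFERENCES only when enabled
conn.executescript("""
    -- Unnormalized: customer name and city repeat on every order row.
    CREATE TABLE order_flat (
        order_id INTEGER PRIMARY KEY, customer_id INTEGER,
        customer_name TEXT, city TEXT, item TEXT
    );
    INSERT INTO order_flat VALUES
        (1, 7, 'Adam', 'Geelong', 'Pen'),
        (2, 7, 'Adam', 'Geelong', 'Ink'),
        (3, 8, 'Beth', 'Burwood', 'Pad');

    -- Decomposition: customer facts move to their own table, linked by a foreign key.
    CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, customer_name TEXT, city TEXT);
    CREATE TABLE orders (
        order_id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customer(customer_id),
        item TEXT
    );
    INSERT INTO customer SELECT DISTINCT customer_id, customer_name, city FROM order_flat;
    INSERT INTO orders SELECT order_id, customer_id, item FROM order_flat;
""")

# Joining through the relationship rebuilds the original rows.
rejoined = conn.execute("""
    SELECT o.order_id, o.customer_id, c.customer_name, c.city, o.item
    FROM orders AS o JOIN customer AS c ON c.customer_id = o.customer_id
    ORDER BY o.order_id
""").fetchall()
original = conn.execute("SELECT * FROM order_flat ORDER BY order_id").fetchall()
n_customers = conn.execute("SELECT COUNT(*) FROM customer").fetchone()[0]
print(n_customers, rejoined == original)  # 2 True
```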


References

Lazer, D., Kennedy, R., King, G. and Vespignani, A., 2014. The parable of Google Flu: traps in big data analysis. Science, 343(6176), pp.1203-1205.

Mariotti, M., Gervasi, O., Vella, F., Cuzzocrea, A. and Costantini, A., 2018. Strategies and systems towards grids and clouds integration: a DBMS-based solution. Future Generation Computer Systems, 88, pp.718-729.

Pappas, V., Krell, F., Vo, B., Kolesnikov, V., Malkin, T., Choi, S.G., George, W., Keromytis, A. and Bellovin, S., 2014, May. Blind Seer: a scalable private DBMS. In 2014 IEEE Symposium on Security and Privacy (SP) (pp. 359-374). IEEE.

Rawassizadeh, R., Dobbins, C., Nourizadeh, M., Ghamchili, Z. and Pazzani, M., 2017, March. A natural language query interface for searching personal information on smartwatches. In 2017 IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops) (pp. 679-684). IEEE.

Singhal, A., Buckley, C. and Mitra, M., 2017, August. Pivoted document length normalization. In ACM SIGIR Forum (Vol. 51, No. 2, pp. 176-184). ACM.

Suh, Y.K., Snodgrass, R.T. and Currim, S., 2017. An empirical study of transaction throughput thrashing across multiple relational DBMSes. Information Systems, 66, pp.119-136.

Wu, X., Zhu, X., Wu, G.Q. and Ding, W., 2014. Data mining with big data. IEEE Transactions on Knowledge and Data Engineering, 26(1), pp.97-107.
