Data Mining Assignment: Association Rules, Clustering Analysis Report

Added on 2020/03/16


AI Summary

This data mining assignment applies association rules and clustering techniques (hierarchical and K-means clustering) using XL Miner. The solution first examines association rules, identifies rule redundancy, and evaluates the impact of the minimum confidence level. It then turns to hierarchical clustering, explaining why data normalisation matters and labelling the clusters by customer characteristics such as flight transactions, balance and bonus transactions: "Middle Class Flyers", "High Networth Flyers" and "Infrequent Flyers". The hierarchical result is then compared with K-means clustering, highlighting differences in the clustering pattern and labelling the clusters accordingly. The assignment stresses the importance of matching clusters and of understanding the key attributes for customer segmentation.

Data Mining

Student Id and Name

[Pick the date]


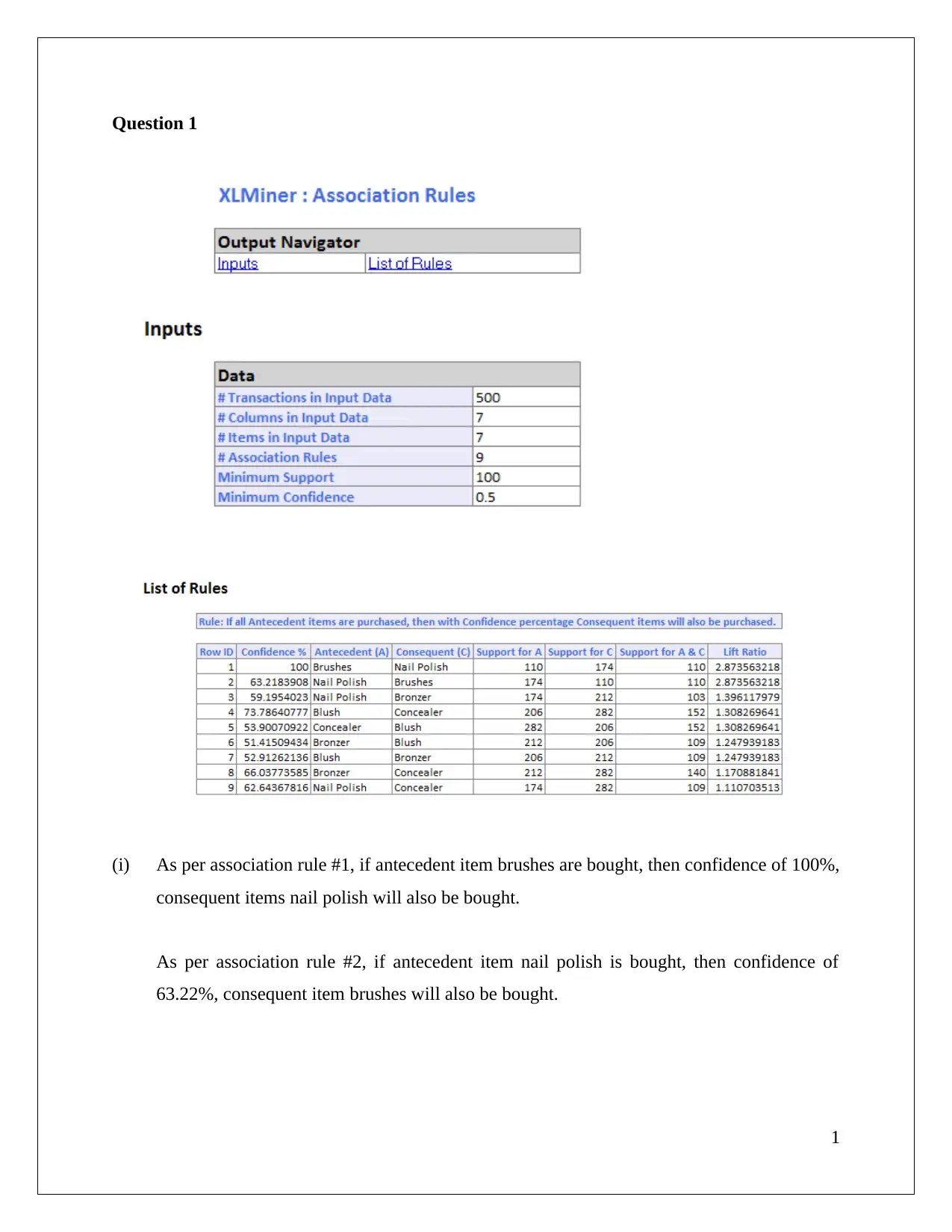

Question 1

(i) Association rule #1: if the antecedent item, brushes, is bought, then the consequent item, nail polish, is also bought, with a confidence of 100%.

Association rule #2: if the antecedent item, nail polish, is bought, then the consequent item, brushes, is also bought, with a confidence of 63.22%.


Association rule #3: if the antecedent item, nail polish, is bought, then the consequent item, bronzer, is also bought, with a confidence of 59.20%.
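The confidence figures above follow the standard definitions: confidence(A → C) = support(A ∪ C) / support(A), and lift(A → C) = confidence(A → C) / support(C). The Python sketch below computes these metrics for a small, hypothetical set of baskets (not the assignment data); the function names `support`, `confidence` and `lift` are our own and are not XL Miner's.

```python
# Hypothetical toy baskets (not the assignment's data), used only to show
# how support, confidence and lift are computed for a rule A -> C.
transactions = [
    {"brushes", "nail polish", "bronzer"},
    {"brushes", "nail polish"},
    {"nail polish", "bronzer"},
    {"nail polish"},
    {"bronzer"},
]


def support(itemset, baskets):
    """Fraction of baskets that contain every item in `itemset`."""
    return sum(1 for basket in baskets if itemset <= basket) / len(baskets)


def confidence(antecedent, consequent, baskets):
    """support(A ∪ C) / support(A): how often C appears when A does."""
    return support(antecedent | consequent, baskets) / support(antecedent, baskets)


def lift(antecedent, consequent, baskets):
    """Confidence relative to the consequent's baseline support."""
    return confidence(antecedent, consequent, baskets) / support(consequent, baskets)


if __name__ == "__main__":
    brushes, polish = {"brushes"}, {"nail polish"}
    print(f"brushes -> nail polish: confidence {confidence(brushes, polish, transactions):.2%}")
    print(f"nail polish -> brushes: confidence {confidence(polish, brushes, transactions):.2%}")
    print(f"lift (either direction): {lift(brushes, polish, transactions):.2f}")
```

In this toy data every basket containing brushes also contains nail polish, so the first rule has 100% confidence while its reverse has only 50%.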

(ii) Association rules often suffer from rule redundancy. A rule is redundant when its support can be predicted with reasonable accuracy from a preceding rule. In the given output, rule 2 is redundant: it involves the same itemset {brushes, nail polish} as rule 1, only with antecedent and consequent reversed, so it has the same support and lift ratio as rule 1. Hence, it would be fruitful to eliminate rule 2 from the output (Zaki, 2000).
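Why the reversed rule adds nothing new follows from the definitions: both rules are built on the same itemset, so support(A ∪ C) is identical, and lift = support(A ∪ C) / (support(A) × support(C)) is symmetric in A and C. A minimal Python sketch of a redundancy check based on this observation follows, assuming a rule is flagged when an earlier rule covers exactly the same itemset. It is a simple heuristic for illustration only, not the closed-itemset method of Zaki (2000), and `flag_repeated_itemsets` is a hypothetical helper.

```python
# Rules as (antecedent, consequent) pairs, in the order they appear in the output.
# Rule 2 is rule 1 with antecedent and consequent swapped.
rules = [
    (frozenset({"brushes"}), frozenset({"nail polish"})),  # rule 1
    (frozenset({"nail polish"}), frozenset({"brushes"})),  # rule 2
    (frozenset({"nail polish"}), frozenset({"bronzer"})),  # rule 3
]


def flag_repeated_itemsets(rule_list):
    """Return 0-based positions of rules whose itemset (A ∪ C) was already
    covered by an earlier rule; such rules share its support and lift."""
    seen = set()
    repeated = []
    for position, (antecedent, consequent) in enumerate(rule_list):
        itemset = antecedent | consequent
        if itemset in seen:
            repeated.append(position)
        else:
            seen.add(itemset)
    return repeated


if __name__ == "__main__":
    # Position 1 is rule 2 in the document's 1-based numbering.
    print(flag_repeated_itemsets(rules))
```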

The utility of the given association rules can be assessed on two criteria: the lift ratio and the confidence level. The lift ratio captures the significance of an association rule, and the rules are listed in descending order of their lift ratios. Rules that score high on at least one of these parameters are usually considered useful for identifying patterns and other useful information (Liebowitz, 2015).
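The screening described above, ranking by lift ratio and keeping rules that are high on at least one of lift or confidence, can be sketched in Python as follows. The rules, their metric values and both thresholds are hypothetical and chosen only to exercise the filter.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    name: str
    confidence: float  # as a fraction, e.g. 0.8 for 80%
    lift: float


def useful_rules(rules, min_lift, min_confidence):
    """Sort rules by lift (descending) and keep those that are high on
    at least one of the two criteria."""
    ranked = sorted(rules, key=lambda r: r.lift, reverse=True)
    return [r for r in ranked if r.lift >= min_lift or r.confidence >= min_confidence]


# Hypothetical rules and thresholds, for illustration only.
candidate_rules = [
    Rule("rule A", confidence=0.90, lift=1.1),
    Rule("rule B", confidence=0.40, lift=2.5),
    Rule("rule C", confidence=0.35, lift=1.0),
]

if __name__ == "__main__":
    for rule in useful_rules(candidate_rules, min_lift=1.5, min_confidence=0.8):
        print(rule.name, rule.confidence, rule.lift)
```

Here rule B survives on lift alone and rule A on confidence alone, while rule C is dropped because it is high on neither.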


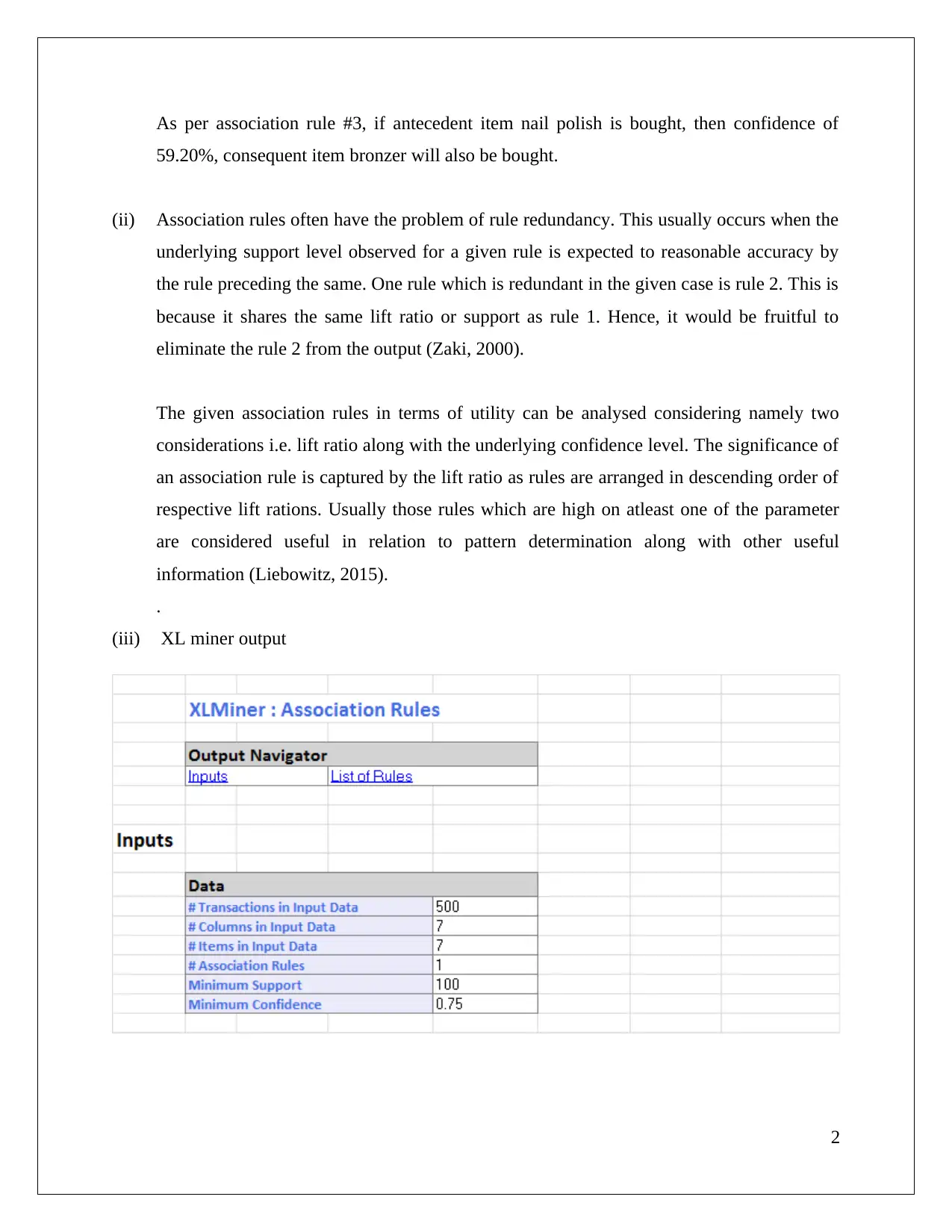

(iii) XL Miner output


The XL Miner output shows the impact of raising the minimum confidence level: the number of rules displayed has dwindled to only one, because the other rules fail to satisfy the minimum confidence criterion. It is therefore important not to set this threshold too high; otherwise, rules that enjoy high support would be missed (Ana, 2014).
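The effect of the minimum confidence level can be reproduced with the three confidence values reported in part (i). In this Python sketch the thresholds of 50%, 60% and 70% are illustrative assumptions, since the exact setting used in the XL Miner run is not stated; `rules_above` is a hypothetical helper.

```python
# Confidence values (%) of the three rules reported in Question 1 (i).
rule_confidence = {
    "rule 1: brushes -> nail polish": 100.00,
    "rule 2: nail polish -> brushes": 63.22,
    "rule 3: nail polish -> bronzer": 59.20,
}


def rules_above(min_confidence_pct, confidences):
    """Keep only the rules whose confidence (%) meets the minimum threshold."""
    return [name for name, conf in confidences.items() if conf >= min_confidence_pct]


if __name__ == "__main__":
    # Illustrative thresholds; the assignment's actual setting is not given.
    for threshold in (50, 60, 70):
        print(threshold, rules_above(threshold, rule_confidence))
```

With these values, a 70% threshold leaves only rule #1, while a 60% threshold still keeps rules #1 and #2.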

Question 2

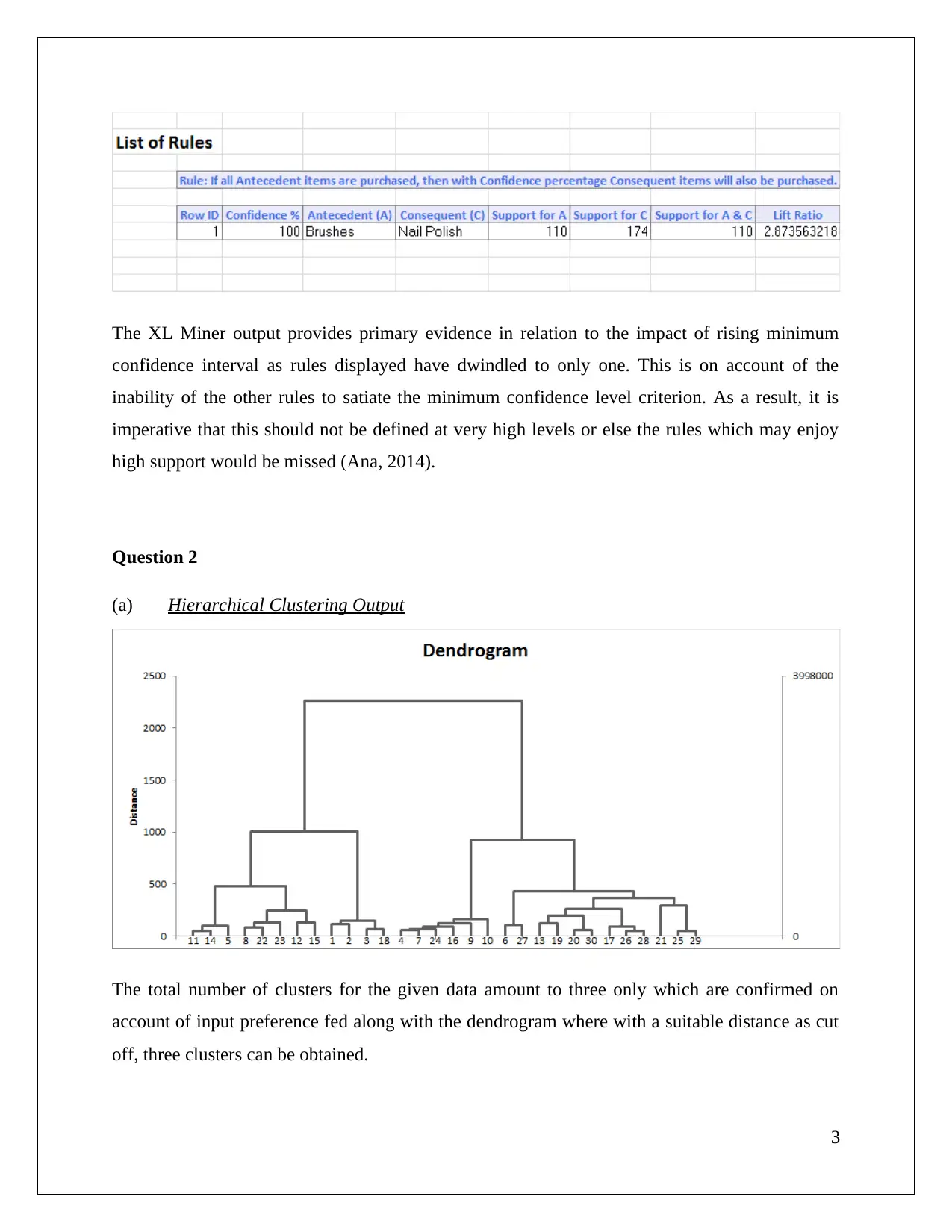

(a) Hierarchical Clustering Output

The data yield three clusters in total. This is confirmed both by the number of clusters specified as input and by the dendrogram, which gives three clusters when it is cut at a suitable distance.
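Cutting a dendrogram at a chosen distance amounts to merging clusters bottom-up and stopping once the next merge would exceed that distance. The pure-Python sketch below assumes Euclidean distance, average linkage and a small set of already-normalised, hypothetical points; the linkage method used in the assignment's XL Miner run is not stated. Because average-linkage merge heights never decrease, stopping at the first merge above the cutoff gives the same clusters as the cut.

```python
import math
from itertools import combinations


def euclidean(p, q):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def average_linkage(c1, c2, points):
    """Mean pairwise distance between the members of two clusters."""
    total = sum(euclidean(points[i], points[j]) for i in c1 for j in c2)
    return total / (len(c1) * len(c2))


def cut_dendrogram(points, cutoff):
    """Merge the closest pair of clusters until the closest pair is farther
    apart than `cutoff`. Returns clusters as sorted lists of point indices."""
    clusters = [[i] for i in range(len(points))]
    while len(clusters) > 1:
        pairs = combinations(range(len(clusters)), 2)
        i, j = min(pairs, key=lambda ij: average_linkage(clusters[ij[0]], clusters[ij[1]], points))
        if average_linkage(clusters[i], clusters[j], points) > cutoff:
            break  # every remaining merge would sit above the cut line
        merged = sorted(clusters[i] + clusters[j])
        clusters = [c for k, c in enumerate(clusters) if k not in (i, j)] + [merged]
    return clusters


# Hypothetical, already-normalised two-attribute points.
points = [
    (0.0, 0.0), (0.1, 0.2), (0.2, 0.1),  # group near the origin
    (5.0, 5.0), (5.1, 5.2),              # group near (5, 5)
    (10.0, 0.0), (10.2, 0.1),            # group near (10, 0)
]

if __name__ == "__main__":
    print(cut_dendrogram(points, cutoff=2.0))
```

With a cutoff of 2.0 the seven points fall into three clusters; a large enough cutoff merges everything into one.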


(b) Normalising the data before hierarchical clustering is essential to neutralise the effect of differing scales. Without normalisation, the distance computation is dominated by the variables measured on larger scales, which produces misleading distances, hampers correct cluster formation and compromises the accuracy of the whole measure. Hence, it makes sense to proceed with normalised data only (Shmueli et al., 2016).
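The scale problem can be seen in a small example. In this Python sketch the customer records, given as (balance, flight transactions), are hypothetical, and z-score standardisation is assumed as the normalisation method. Before standardisation the nearest neighbour of the first customer is decided almost entirely by balance; afterwards the difference in flight transactions carries comparable weight.

```python
import math
from statistics import mean, pstdev


def zscore_columns(rows):
    """Standardise each column to mean 0 and (population) standard deviation 1."""
    columns = list(zip(*rows))
    stats = [(mean(col), pstdev(col)) for col in columns]
    return [
        tuple((value - mu) / sd if sd else 0.0 for value, (mu, sd) in zip(row, stats))
        for row in rows
    ]


def euclidean(p, q):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def nearest(index, rows):
    """Index of the row closest (Euclidean) to rows[index]."""
    others = [j for j in range(len(rows)) if j != index]
    return min(others, key=lambda j: euclidean(rows[index], rows[j]))


# Hypothetical customers: (balance, flight transactions).
customers = [
    (50_000, 2),
    (52_000, 15),  # similar balance, very different flight activity
    (40_000, 2),   # same flight activity, balance 10,000 lower
]

if __name__ == "__main__":
    print("raw nearest to customer 0:", nearest(0, customers))
    print("normalised nearest to customer 0:", nearest(0, zscore_columns(customers)))
```

Here the nearest neighbour of customer 0 switches from customer 1 to customer 2 once both attributes are on the same scale.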

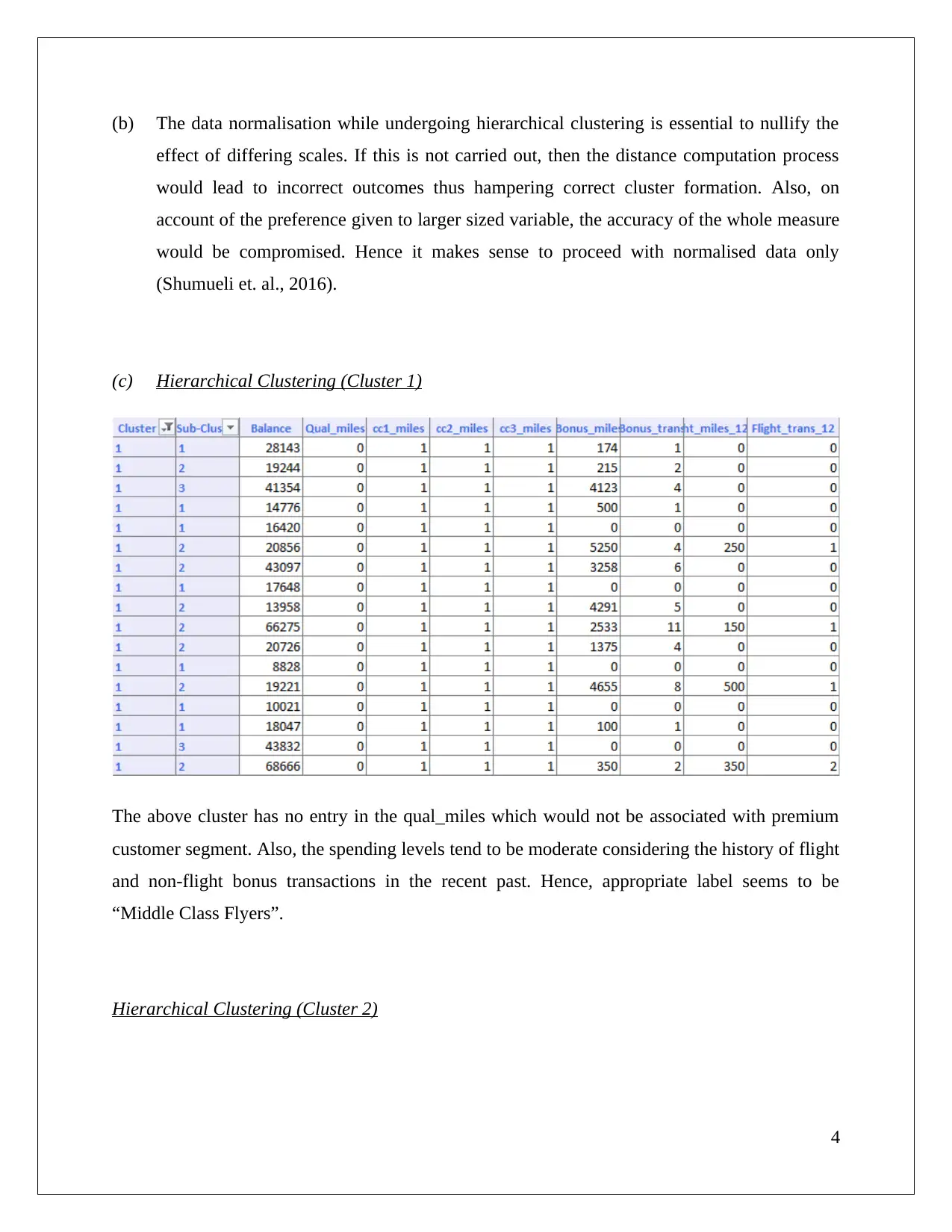

(c) Hierarchical Clustering (Cluster 1)

This cluster has no entries for Qual_miles, so it would not be associated with the premium customer segment. Its spending levels also tend to be moderate, judging by its recent history of flight and non-flight bonus transactions. Hence, the appropriate label seems to be “Middle Class Flyers”.

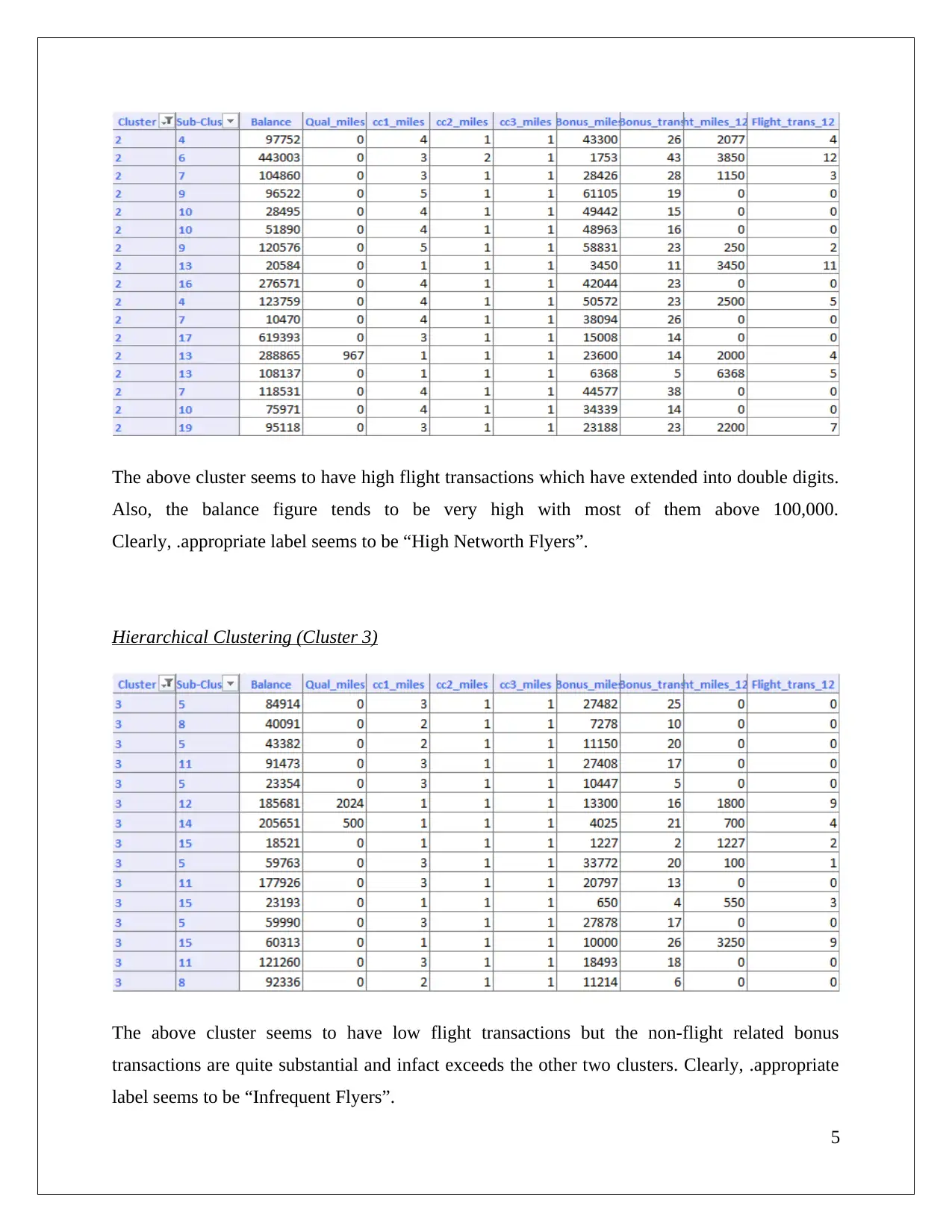

Hierarchical Clustering (Cluster 2)


This cluster has high flight transactions, running into double digits. Its balance figures are also very high, with most above 100,000. The appropriate label therefore seems to be “High Networth Flyers”.

Hierarchical Clustering (Cluster 3)

This cluster has low flight transactions, but its non-flight bonus transactions are substantial and in fact exceed those of the other two clusters. The appropriate label therefore seems to be “Infrequent Flyers”.
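Labels such as these are usually assigned by comparing the average of each attribute across clusters. The Python sketch below computes such per-cluster profiles; the field names (`balance`, `qual_miles`, `bonus_trans`, `flight_trans`), the records and the cluster assignments are all hypothetical stand-ins for the XL Miner output.

```python
from collections import defaultdict
from statistics import mean


def cluster_profiles(records, labels):
    """Average every attribute within each cluster.

    `records` is a list of dicts sharing the same numeric keys, and
    `labels[i]` is the cluster number assigned to records[i].
    """
    grouped = defaultdict(list)
    for record, label in zip(records, labels):
        grouped[label].append(record)
    return {
        label: {key: mean([r[key] for r in members]) for key in members[0]}
        for label, members in sorted(grouped.items())
    }


# Hypothetical customer records and cluster assignments.
records = [
    {"balance": 30_000, "qual_miles": 0, "bonus_trans": 8, "flight_trans": 1},
    {"balance": 40_000, "qual_miles": 0, "bonus_trans": 10, "flight_trans": 2},
    {"balance": 180_000, "qual_miles": 500, "bonus_trans": 20, "flight_trans": 14},
    {"balance": 220_000, "qual_miles": 900, "bonus_trans": 22, "flight_trans": 18},
]
labels = [1, 1, 2, 2]

if __name__ == "__main__":
    for cluster, profile in cluster_profiles(records, labels).items():
        print(cluster, profile)
```

Reading the averaged rows side by side (for example, a cluster with zero average qual_miles versus one with high balance and double-digit flight transactions) is what supports a descriptive label.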


(d) K Means Clustering Output

To compare the clustering patterns produced by the two techniques, it is critical to first match the clusters obtained by each.

In the K Means clustering output, Cluster 1 has the following key attributes:

The flight transactions are substantial, exceeding 15 per annum.

The average balance miles are very high, exceeding 200,000.

The Qual_miles value is exceedingly high, at more than 800.

Accordingly, this cluster would be labelled “High Networth Flyers”. This reading does not match the hierarchical clustering result, in which the high-net-worth customers were captured by Cluster 2. Hence, the two techniques show an apparent difference in clustering pattern (Ragsdale, 2014).
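Cluster numbers are arbitrary labels, so matching the clusters, as part (d) stresses, usually means pairing each K Means cluster with the hierarchical cluster that shares the most records. The Python sketch below does this by brute force over label permutations, which is practical for three clusters. It assumes both methods were run on the same records in the same order with the same number of clusters, and the label lists are hypothetical.

```python
from collections import Counter
from itertools import permutations


def match_clusters(labels_a, labels_b):
    """Find the relabelling of clustering B that agrees most with clustering A.

    Returns (mapping from B's cluster ids to A's ids, number of agreeing records).
    Brute force over k! permutations, so only suitable for small k.
    """
    ids_a = sorted(set(labels_a))
    ids_b = sorted(set(labels_b))
    overlap = Counter(zip(labels_b, labels_a))  # (b_id, a_id) -> shared records
    best_mapping, best_agree = None, -1
    for perm in permutations(ids_a):
        mapping = dict(zip(ids_b, perm))
        agree = sum(overlap[(b, a)] for b, a in mapping.items())
        if agree > best_agree:
            best_mapping, best_agree = mapping, agree
    return best_mapping, best_agree


# Hypothetical cluster assignments for the same eight customers.
hierarchical_labels = [1, 1, 1, 2, 2, 3, 3, 3]
kmeans_labels = [2, 2, 2, 1, 1, 3, 3, 1]

if __name__ == "__main__":
    mapping, agree = match_clusters(hierarchical_labels, kmeans_labels)
    print("K Means -> hierarchical:", mapping, "agreeing records:", agree)
```

Here K Means cluster 2 is paired with hierarchical cluster 1 and K Means cluster 1 with hierarchical cluster 2, with 7 of the 8 records agreeing; the agreement count then indicates how far the two patterns actually differ.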

(e) Target along with Offers


References

Ana, A. (2014). Integration of Data Mining in Business Intelligence System (4th ed.). Sydney: IGI Global.

Liebowitz, J. (2015). Business Analytics: An Introduction (2nd ed.). New York: CRC Press.

Ragsdale, C. (2014). Spreadsheet Modeling and Decision Analysis: A Practical Introduction to Business Analytics (7th ed.). London: Cengage Learning.

Shmueli, G., Bruce, P. C., Yahav, I., Patel, N. R., & Lichtendahl, K. C., Jr. (2016). Data Mining for Business Analytics: Concepts, Techniques and Applications (2nd ed.). London: John Wiley & Sons.

Zaki, M. J. (2000). Generating non-redundant association rules. In: Proceedings of the ACM SIGKDD, pp. 34–43.
