Data Science Assignment on Statistical Analysis and R Programming

Added on 2022/08/27

Homework Assignment

AI Summary

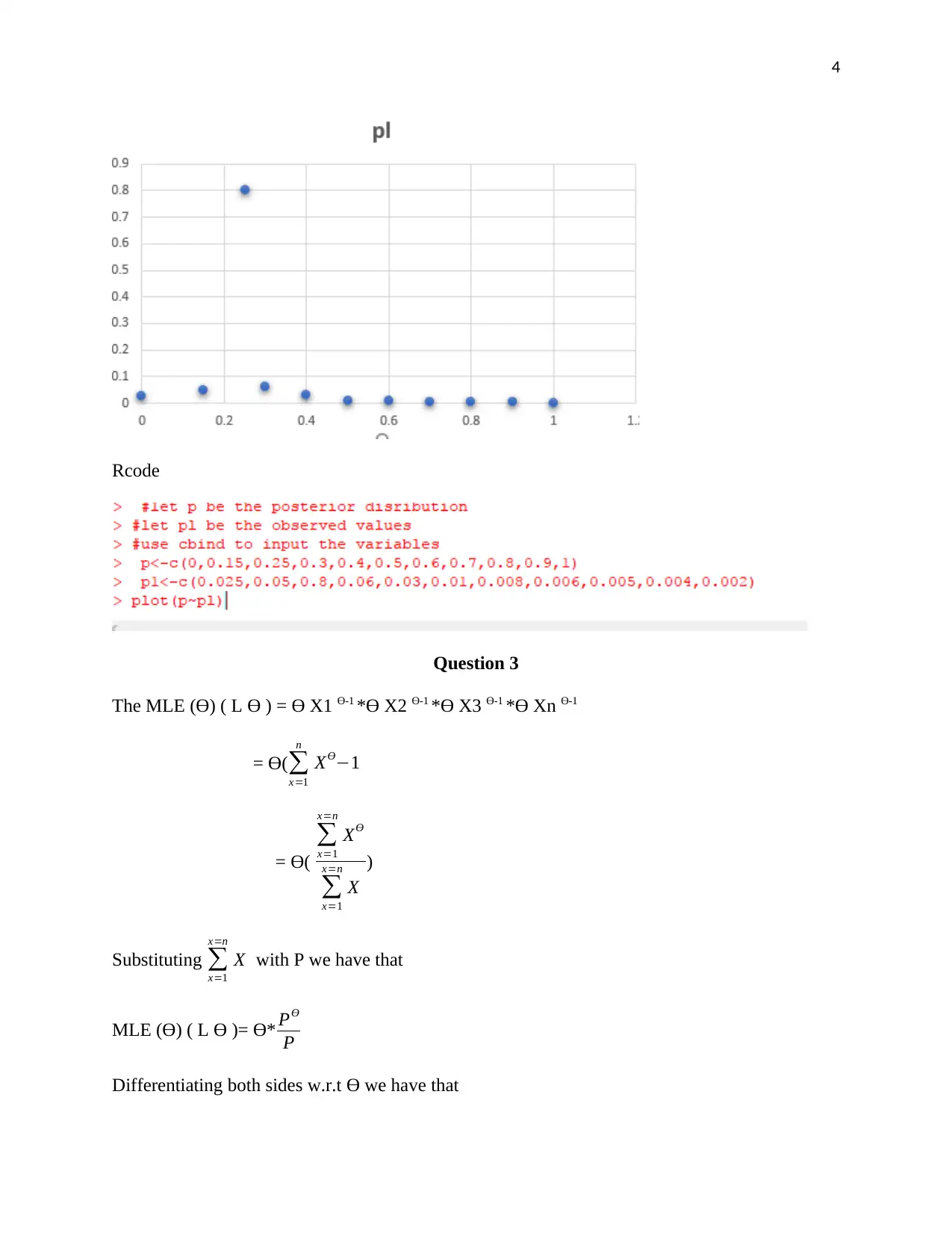

This data science assignment solution applies several statistical concepts in R. It begins with the distribution of a random variable and derives the distribution of a new variable defined from it. It then considers the expected value of a binomial distribution and gives R code to calculate it. Next, it covers posterior distributions, estimation, and plotting. It then turns to maximum likelihood estimation (MLE), deriving the MLE for a given function and analyzing how the variance changes as the values increase. Finally, it covers the Poisson distribution, deriving the Poisson MLE with the necessary formulas and steps. The solution cites the references it uses.
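
The summary does not reproduce the assignment's R code or its numbers. The following is therefore only a minimal sketch, written in Python rather than R, of two standard results it mentions. The first is the binomial expectation E[X] = np, checked by summing k P(X = k) over all outcomes. The second is the Poisson MLE: setting the derivative of the log-likelihood to zero gives the sample mean. The parameters n = 10 and p = 0.3, the count data, and all function names are hypothetical and chosen only for illustration.

```python
import math


def binomial_pmf(k, n, p):
    # P(X = k) for X ~ Binomial(n, p)
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)


def binomial_mean(n, p):
    # E[X] = sum over k of k * P(X = k); analytically this equals n * p
    return sum(k * binomial_pmf(k, n, p) for k in range(n + 1))


def poisson_log_likelihood(lam, sample):
    # log L(lam) = sum(x_i) * log(lam) - n * lam - sum(log(x_i!))
    return sum(x * math.log(lam) - lam - math.lgamma(x + 1) for x in sample)


def poisson_mle(sample):
    # d/dlam log L = sum(x_i) / lam - n = 0  =>  lam_hat = sample mean
    return sum(sample) / len(sample)


if __name__ == "__main__":
    n, p = 10, 0.3  # hypothetical parameters
    print(binomial_mean(n, p), n * p)

    counts = [2, 4, 3, 0, 1]  # hypothetical count data
    lam_hat = poisson_mle(counts)
    print(lam_hat)
    # The log-likelihood is concave, so nearby values of lam score lower
    print(poisson_log_likelihood(lam_hat, counts) > poisson_log_likelihood(lam_hat + 0.1, counts))
```

The summation in `binomial_mean` is deliberately brute force. It lets the closed form np be checked numerically instead of simply being assumed.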