Data Warehouse Design, Implementation, and Querying for GreenHomeHelp

VerifiedAdded on 2022/12/03

|11

|1392

|73

Report

AI Summary

This report presents the design and implementation of a data warehouse for GreenHomeHelp, developed on an Oracle database, with data extracted from an MS Access database. The report details the ETL process, including data extraction to a flat file, loading into a staging area using data loaders, and data cleaning to ensure consistency. The warehouse design focuses on answering key government questions, utilizing fact and dimension tables. The report includes SQL scripts for data loading, time dimension population, and fact table updates. Furthermore, it provides SQL queries to answer specific business questions, such as the number of sessions filled by type of temp cover, requests made by council, and canceled requests, along with a discussion of the challenges encountered during the implementation process. All SQL DDL scripts used in building the staging area and the complete data warehouse have been included in a zip file attached.

Introduction

Modern businesses are largely dependent on data and information insights. Governments around

the world are also adopting data and information driven policy making processes. To effectively

retrieve information from operational data, data warehouses are employed; as they ease and

streamline the retrieval process.

This report presents the design and implementation of a data warehouse for GreenHomeHelp.

The warehouse was developed on an Oracle database, with data extracted from the given MS

Access database. The data was first extracted from Access through exporting to a flat file, and

then imported into oracle staging area by use of a data loader. Cleaning and transformation then

followed, before the data was loaded into the data mart.

Design Overview

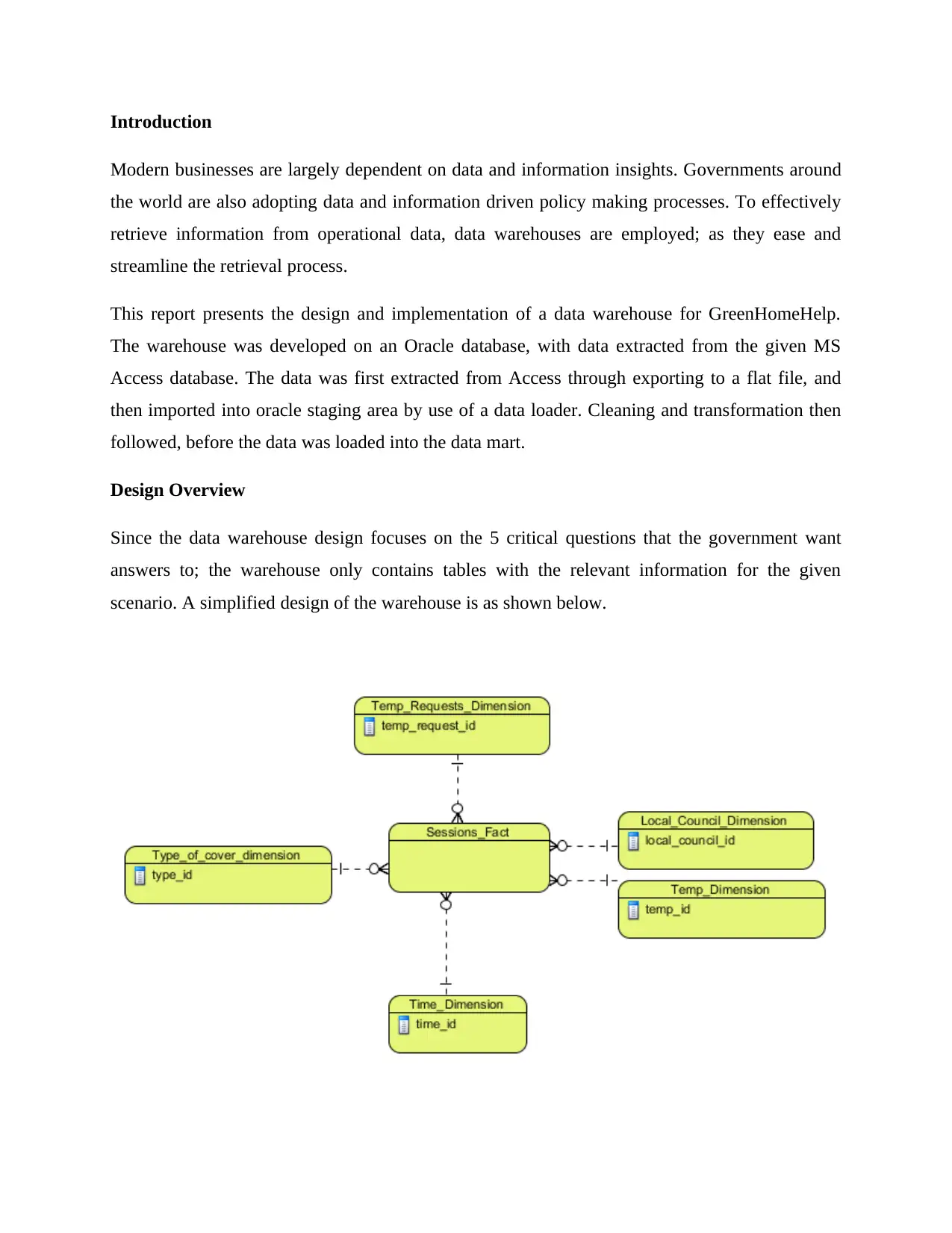

Since the data warehouse design focuses on the 5 critical questions that the government want

answers to; the warehouse only contains tables with the relevant information for the given

scenario. A simplified design of the warehouse is as shown below.

Modern businesses are largely dependent on data and information insights. Governments around

the world are also adopting data and information driven policy making processes. To effectively

retrieve information from operational data, data warehouses are employed; as they ease and

streamline the retrieval process.

This report presents the design and implementation of a data warehouse for GreenHomeHelp.

The warehouse was developed on an Oracle database, with data extracted from the given MS

Access database. The data was first extracted from Access through exporting to a flat file, and

then imported into oracle staging area by use of a data loader. Cleaning and transformation then

followed, before the data was loaded into the data mart.

Design Overview

Since the data warehouse design focuses on the 5 critical questions that the government want

answers to; the warehouse only contains tables with the relevant information for the given

scenario. A simplified design of the warehouse is as shown below.

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

ETL: Extraction, Transformation and Loading

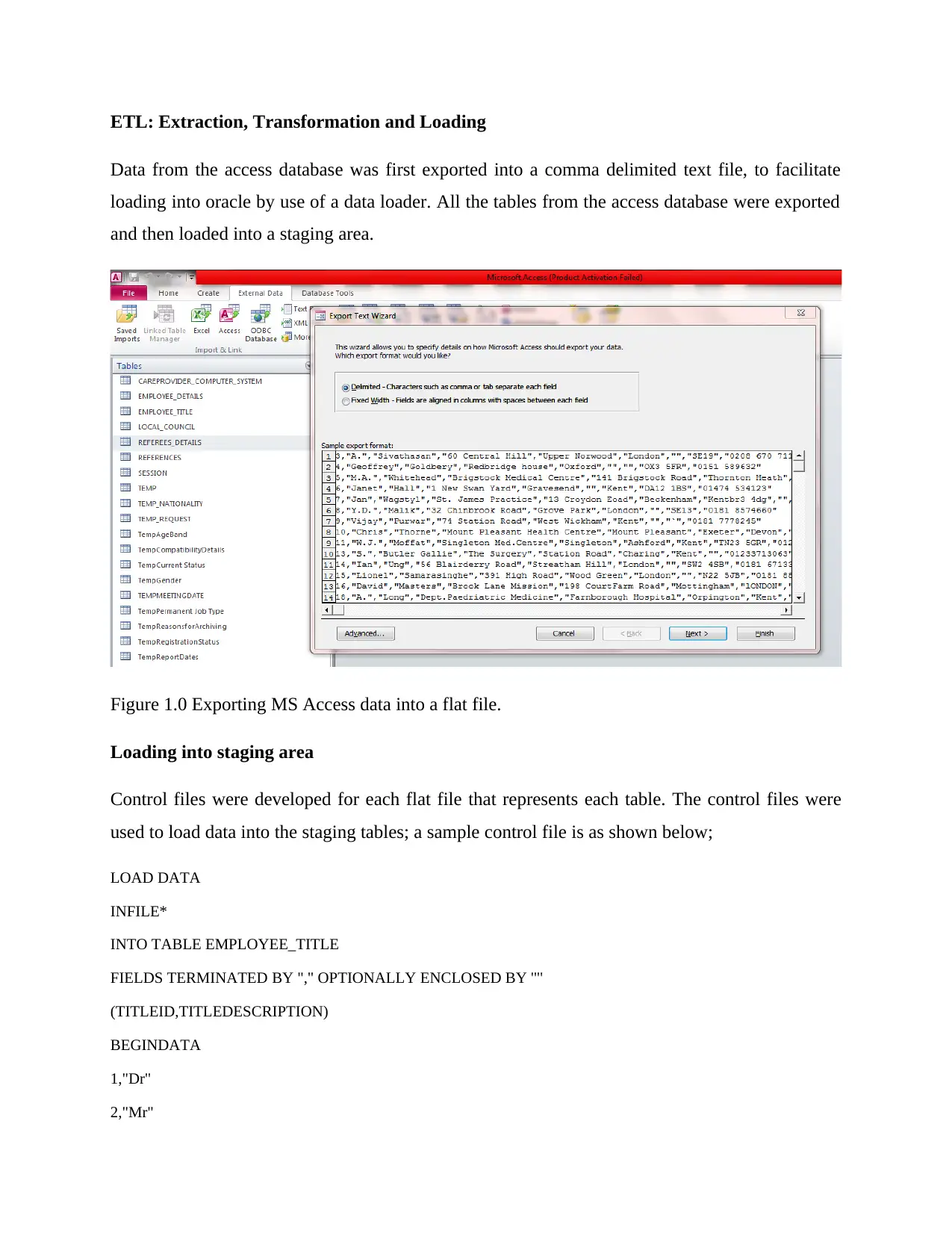

Data from the access database was first exported into a comma delimited text file, to facilitate

loading into oracle by use of a data loader. All the tables from the access database were exported

and then loaded into a staging area.

Figure 1.0 Exporting MS Access data into a flat file.

Loading into staging area

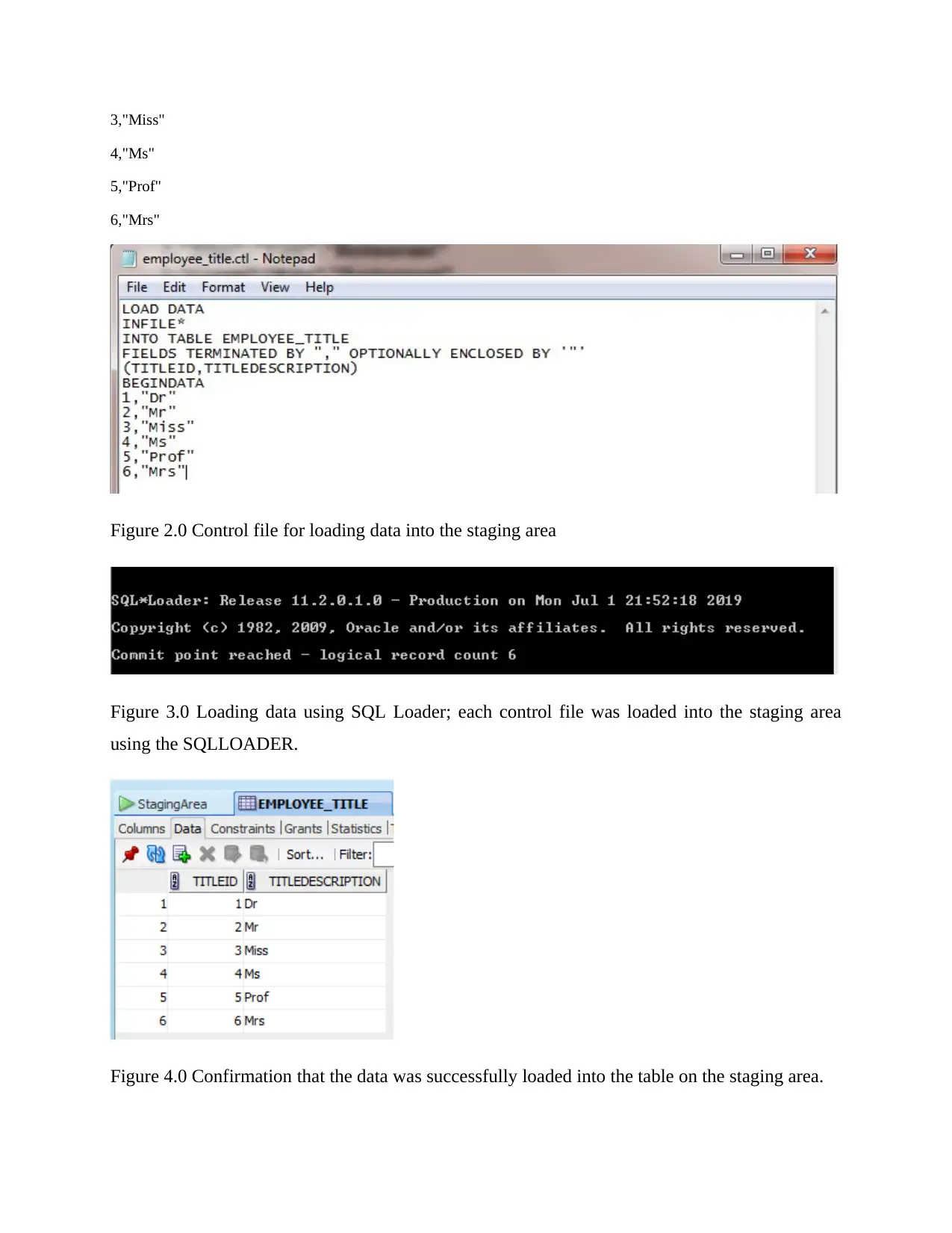

Control files were developed for each flat file that represents each table. The control files were

used to load data into the staging tables; a sample control file is as shown below;

LOAD DATA

INFILE*

INTO TABLE EMPLOYEE_TITLE

FIELDS TERMINATED BY "," OPTIONALLY ENCLOSED BY '"'

(TITLEID,TITLEDESCRIPTION)

BEGINDATA

1,"Dr"

2,"Mr"

Data from the access database was first exported into a comma delimited text file, to facilitate

loading into oracle by use of a data loader. All the tables from the access database were exported

and then loaded into a staging area.

Figure 1.0 Exporting MS Access data into a flat file.

Loading into staging area

Control files were developed for each flat file that represents each table. The control files were

used to load data into the staging tables; a sample control file is as shown below;

LOAD DATA

INFILE*

INTO TABLE EMPLOYEE_TITLE

FIELDS TERMINATED BY "," OPTIONALLY ENCLOSED BY '"'

(TITLEID,TITLEDESCRIPTION)

BEGINDATA

1,"Dr"

2,"Mr"

3,"Miss"

4,"Ms"

5,"Prof"

6,"Mrs"

Figure 2.0 Control file for loading data into the staging area

Figure 3.0 Loading data using SQL Loader; each control file was loaded into the staging area

using the SQLLOADER.

Figure 4.0 Confirmation that the data was successfully loaded into the table on the staging area.

4,"Ms"

5,"Prof"

6,"Mrs"

Figure 2.0 Control file for loading data into the staging area

Figure 3.0 Loading data using SQL Loader; each control file was loaded into the staging area

using the SQLLOADER.

Figure 4.0 Confirmation that the data was successfully loaded into the table on the staging area.

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

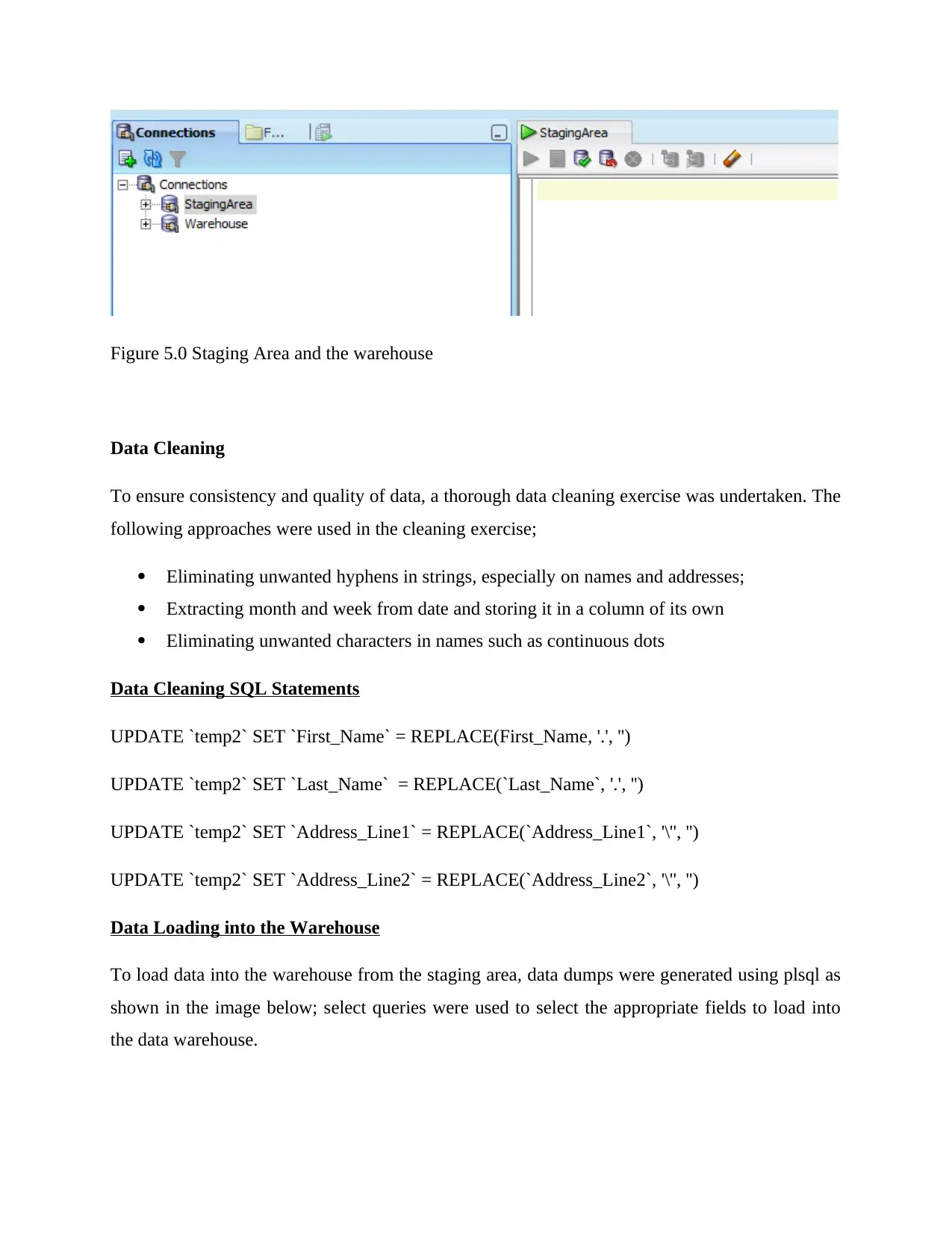

Figure 5.0 Staging Area and the warehouse

Data Cleaning

To ensure consistency and quality of data, a thorough data cleaning exercise was undertaken. The

following approaches were used in the cleaning exercise;

Eliminating unwanted hyphens in strings, especially on names and addresses;

Extracting month and week from date and storing it in a column of its own

Eliminating unwanted characters in names such as continuous dots

Data Cleaning SQL Statements

UPDATE `temp2` SET `First_Name` = REPLACE(First_Name, '.', '')

UPDATE `temp2` SET `Last_Name` = REPLACE(`Last_Name`, '.', '')

UPDATE `temp2` SET `Address_Line1` = REPLACE(`Address_Line1`, '\'', '')

UPDATE `temp2` SET `Address_Line2` = REPLACE(`Address_Line2`, '\'', '')

Data Loading into the Warehouse

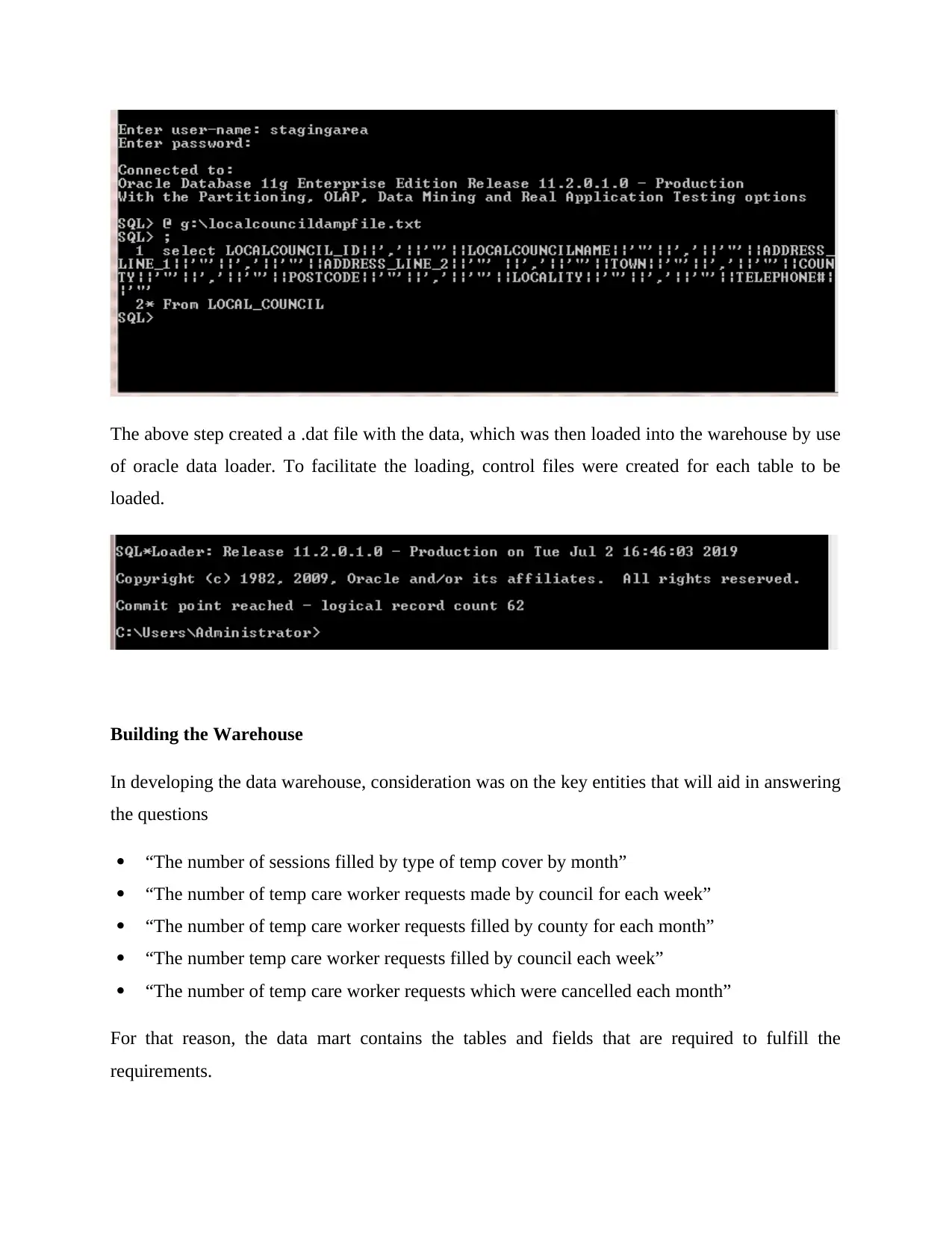

To load data into the warehouse from the staging area, data dumps were generated using plsql as

shown in the image below; select queries were used to select the appropriate fields to load into

the data warehouse.

Data Cleaning

To ensure consistency and quality of data, a thorough data cleaning exercise was undertaken. The

following approaches were used in the cleaning exercise;

Eliminating unwanted hyphens in strings, especially on names and addresses;

Extracting month and week from date and storing it in a column of its own

Eliminating unwanted characters in names such as continuous dots

Data Cleaning SQL Statements

UPDATE `temp2` SET `First_Name` = REPLACE(First_Name, '.', '')

UPDATE `temp2` SET `Last_Name` = REPLACE(`Last_Name`, '.', '')

UPDATE `temp2` SET `Address_Line1` = REPLACE(`Address_Line1`, '\'', '')

UPDATE `temp2` SET `Address_Line2` = REPLACE(`Address_Line2`, '\'', '')

Data Loading into the Warehouse

To load data into the warehouse from the staging area, data dumps were generated using plsql as

shown in the image below; select queries were used to select the appropriate fields to load into

the data warehouse.

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

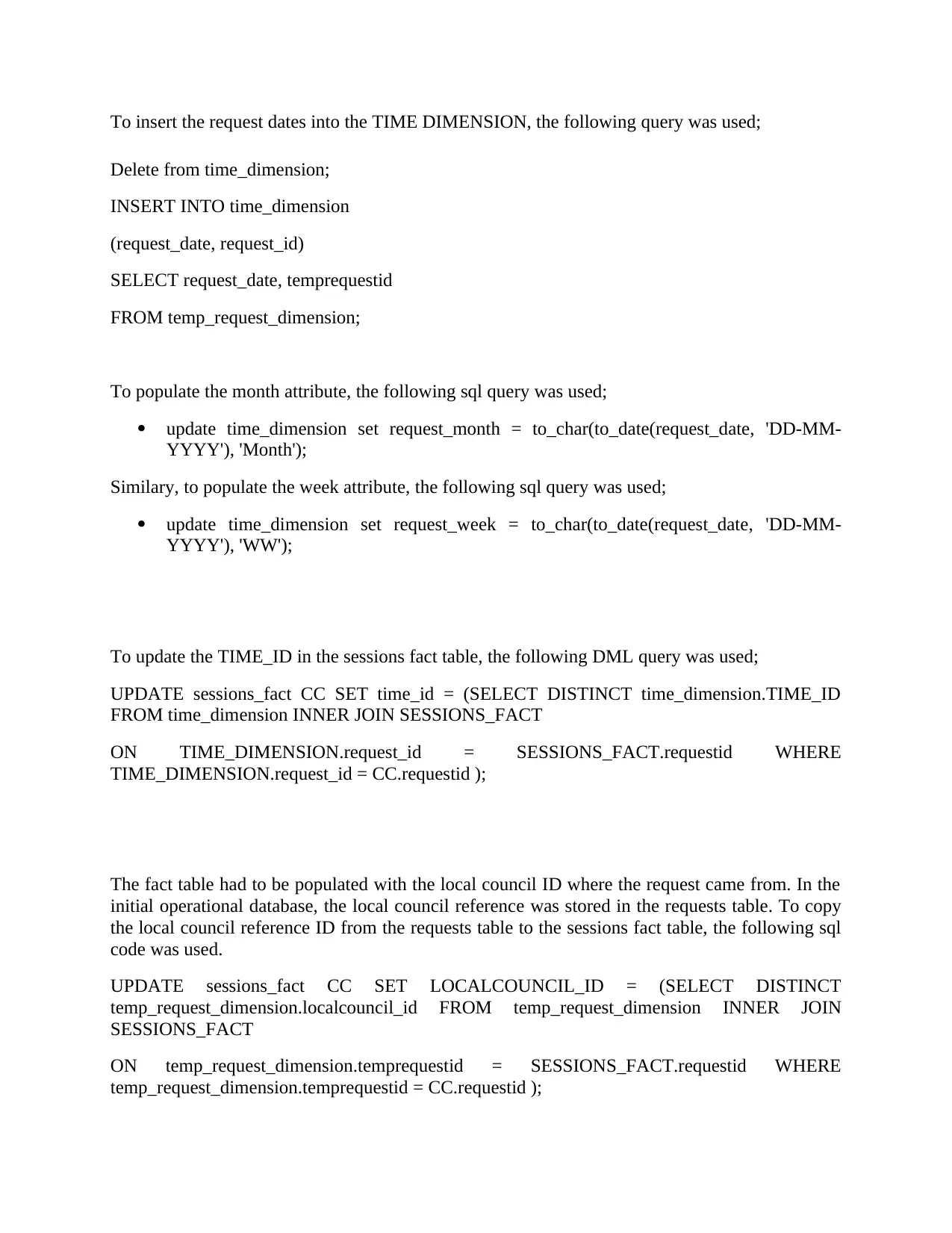

The above step created a .dat file with the data, which was then loaded into the warehouse by use

of oracle data loader. To facilitate the loading, control files were created for each table to be

loaded.

Building the Warehouse

In developing the data warehouse, consideration was on the key entities that will aid in answering

the questions

“The number of sessions filled by type of temp cover by month”

“The number of temp care worker requests made by council for each week”

“The number of temp care worker requests filled by county for each month”

“The number temp care worker requests filled by council each week”

“The number of temp care worker requests which were cancelled each month”

For that reason, the data mart contains the tables and fields that are required to fulfill the

requirements.

of oracle data loader. To facilitate the loading, control files were created for each table to be

loaded.

Building the Warehouse

In developing the data warehouse, consideration was on the key entities that will aid in answering

the questions

“The number of sessions filled by type of temp cover by month”

“The number of temp care worker requests made by council for each week”

“The number of temp care worker requests filled by county for each month”

“The number temp care worker requests filled by council each week”

“The number of temp care worker requests which were cancelled each month”

For that reason, the data mart contains the tables and fields that are required to fulfill the

requirements.

To insert the request dates into the TIME DIMENSION, the following query was used;

Delete from time_dimension;

INSERT INTO time_dimension

(request_date, request_id)

SELECT request_date, temprequestid

FROM temp_request_dimension;

To populate the month attribute, the following sql query was used;

update time_dimension set request_month = to_char(to_date(request_date, 'DD-MM-

YYYY'), 'Month');

Similary, to populate the week attribute, the following sql query was used;

update time_dimension set request_week = to_char(to_date(request_date, 'DD-MM-

YYYY'), 'WW');

To update the TIME_ID in the sessions fact table, the following DML query was used;

UPDATE sessions_fact CC SET time_id = (SELECT DISTINCT time_dimension.TIME_ID

FROM time_dimension INNER JOIN SESSIONS_FACT

ON TIME_DIMENSION.request_id = SESSIONS_FACT.requestid WHERE

TIME_DIMENSION.request_id = CC.requestid );

The fact table had to be populated with the local council ID where the request came from. In the

initial operational database, the local council reference was stored in the requests table. To copy

the local council reference ID from the requests table to the sessions fact table, the following sql

code was used.

UPDATE sessions_fact CC SET LOCALCOUNCIL_ID = (SELECT DISTINCT

temp_request_dimension.localcouncil_id FROM temp_request_dimension INNER JOIN

SESSIONS_FACT

ON temp_request_dimension.temprequestid = SESSIONS_FACT.requestid WHERE

temp_request_dimension.temprequestid = CC.requestid );

Delete from time_dimension;

INSERT INTO time_dimension

(request_date, request_id)

SELECT request_date, temprequestid

FROM temp_request_dimension;

To populate the month attribute, the following sql query was used;

update time_dimension set request_month = to_char(to_date(request_date, 'DD-MM-

YYYY'), 'Month');

Similary, to populate the week attribute, the following sql query was used;

update time_dimension set request_week = to_char(to_date(request_date, 'DD-MM-

YYYY'), 'WW');

To update the TIME_ID in the sessions fact table, the following DML query was used;

UPDATE sessions_fact CC SET time_id = (SELECT DISTINCT time_dimension.TIME_ID

FROM time_dimension INNER JOIN SESSIONS_FACT

ON TIME_DIMENSION.request_id = SESSIONS_FACT.requestid WHERE

TIME_DIMENSION.request_id = CC.requestid );

The fact table had to be populated with the local council ID where the request came from. In the

initial operational database, the local council reference was stored in the requests table. To copy

the local council reference ID from the requests table to the sessions fact table, the following sql

code was used.

UPDATE sessions_fact CC SET LOCALCOUNCIL_ID = (SELECT DISTINCT

temp_request_dimension.localcouncil_id FROM temp_request_dimension INNER JOIN

SESSIONS_FACT

ON temp_request_dimension.temprequestid = SESSIONS_FACT.requestid WHERE

temp_request_dimension.temprequestid = CC.requestid );

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

PL/SQL code listings for the entire system

All SQL DDL scripts used in building the staging area and the complete data warehouse

have been included in a zip file attached.

QUERIES

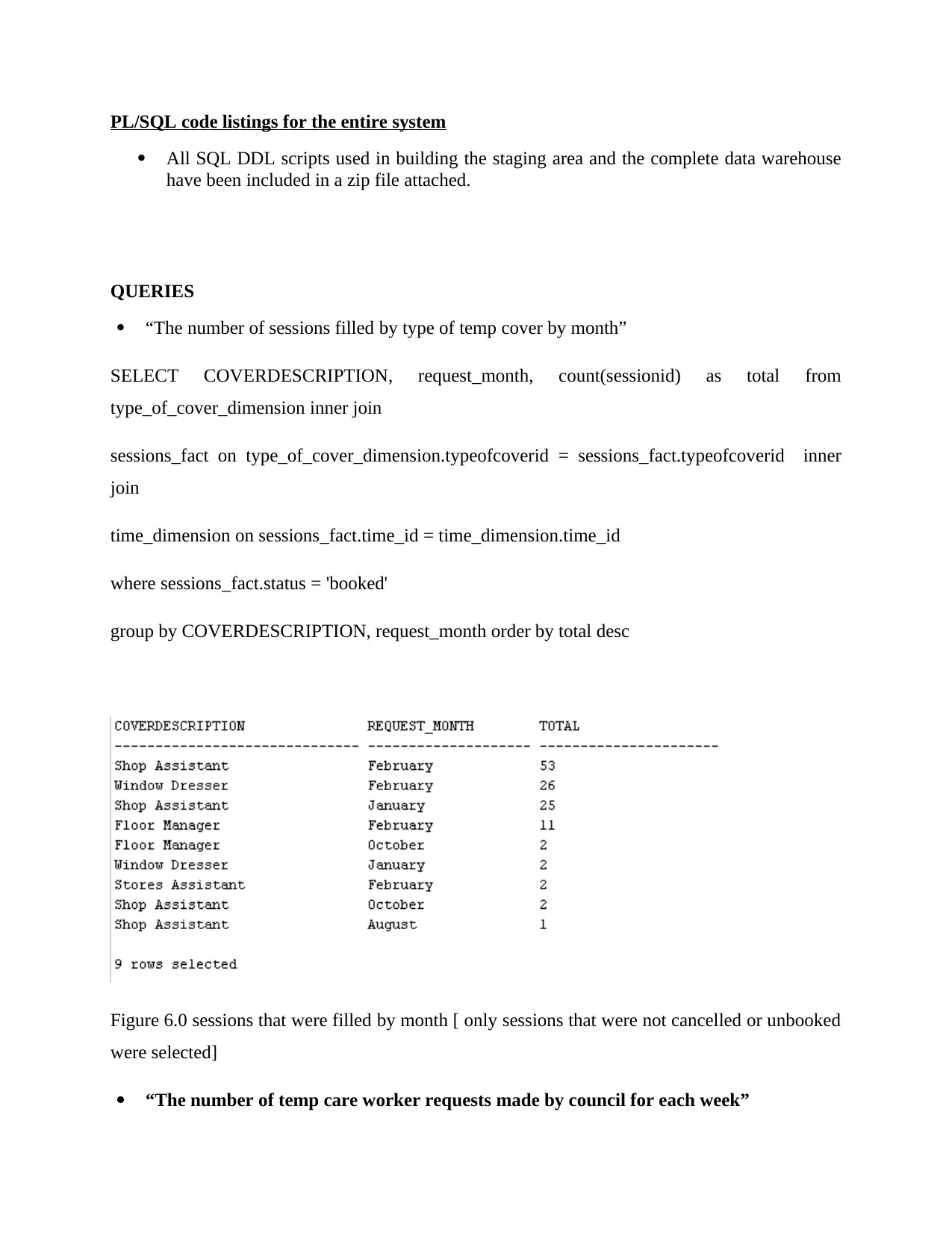

“The number of sessions filled by type of temp cover by month”

SELECT COVERDESCRIPTION, request_month, count(sessionid) as total from

type_of_cover_dimension inner join

sessions_fact on type_of_cover_dimension.typeofcoverid = sessions_fact.typeofcoverid inner

join

time_dimension on sessions_fact.time_id = time_dimension.time_id

where sessions_fact.status = 'booked'

group by COVERDESCRIPTION, request_month order by total desc

Figure 6.0 sessions that were filled by month [ only sessions that were not cancelled or unbooked

were selected]

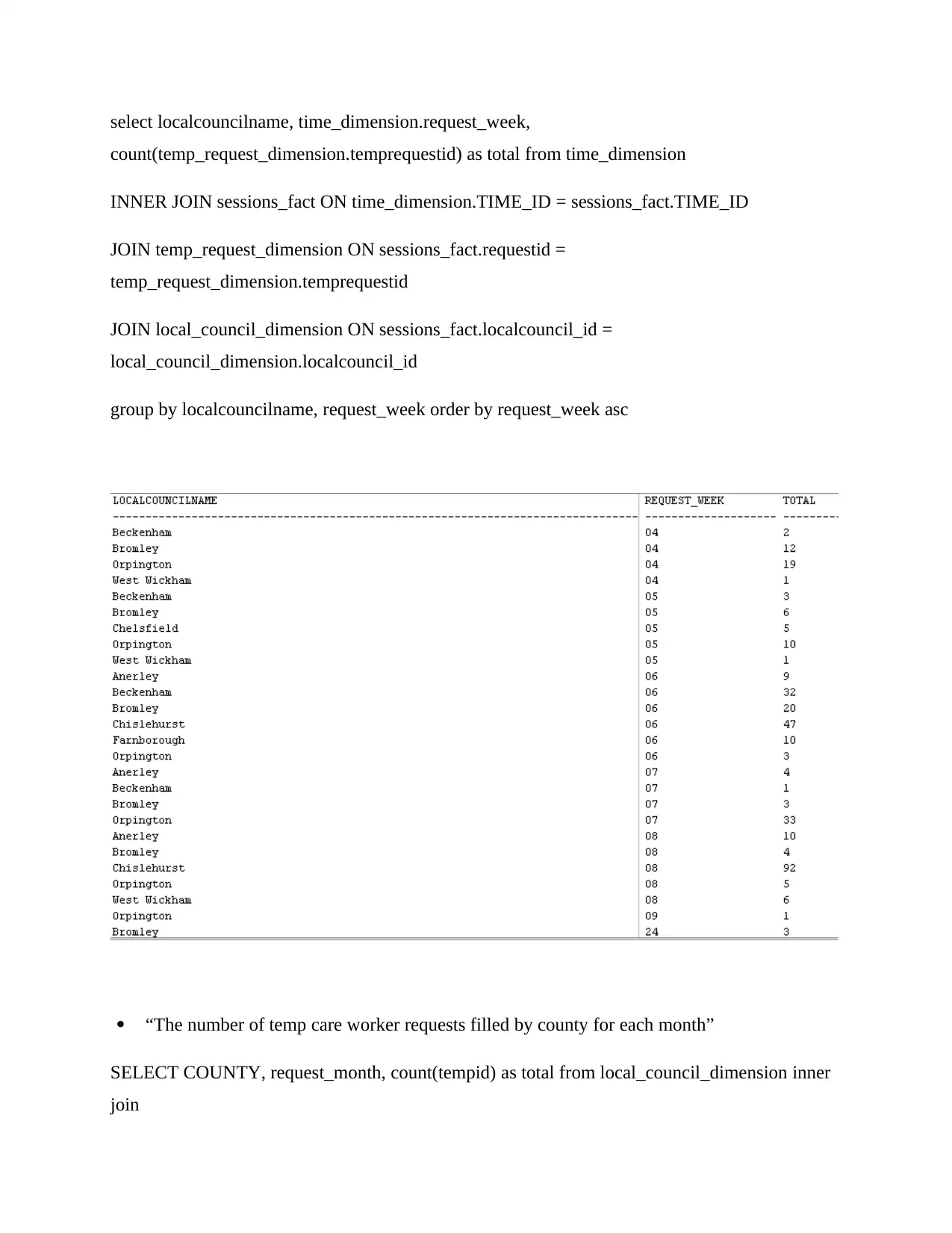

“The number of temp care worker requests made by council for each week”

All SQL DDL scripts used in building the staging area and the complete data warehouse

have been included in a zip file attached.

QUERIES

“The number of sessions filled by type of temp cover by month”

SELECT COVERDESCRIPTION, request_month, count(sessionid) as total from

type_of_cover_dimension inner join

sessions_fact on type_of_cover_dimension.typeofcoverid = sessions_fact.typeofcoverid inner

join

time_dimension on sessions_fact.time_id = time_dimension.time_id

where sessions_fact.status = 'booked'

group by COVERDESCRIPTION, request_month order by total desc

Figure 6.0 sessions that were filled by month [ only sessions that were not cancelled or unbooked

were selected]

“The number of temp care worker requests made by council for each week”

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

select localcouncilname, time_dimension.request_week,

count(temp_request_dimension.temprequestid) as total from time_dimension

INNER JOIN sessions_fact ON time_dimension.TIME_ID = sessions_fact.TIME_ID

JOIN temp_request_dimension ON sessions_fact.requestid =

temp_request_dimension.temprequestid

JOIN local_council_dimension ON sessions_fact.localcouncil_id =

local_council_dimension.localcouncil_id

group by localcouncilname, request_week order by request_week asc

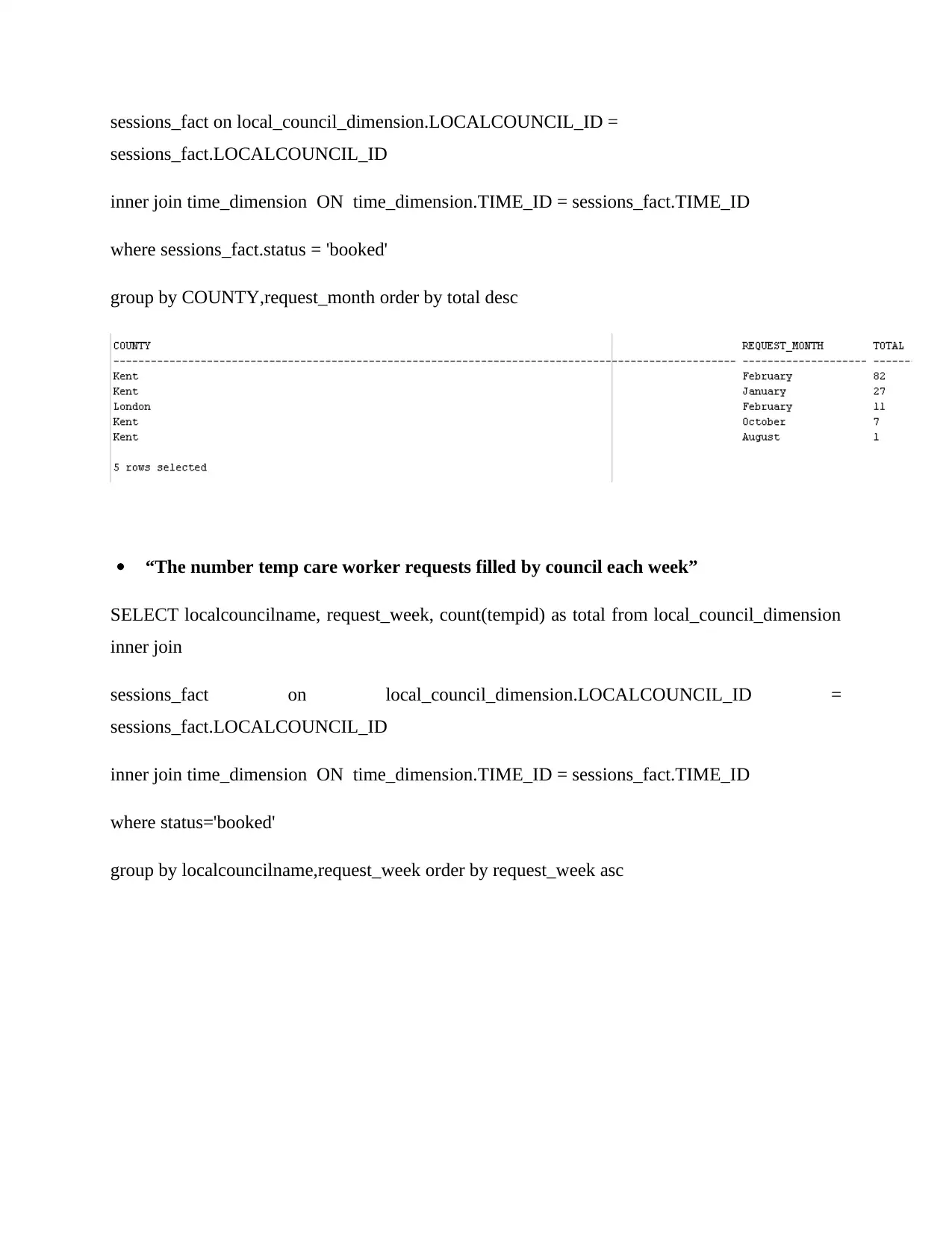

“The number of temp care worker requests filled by county for each month”

SELECT COUNTY, request_month, count(tempid) as total from local_council_dimension inner

join

count(temp_request_dimension.temprequestid) as total from time_dimension

INNER JOIN sessions_fact ON time_dimension.TIME_ID = sessions_fact.TIME_ID

JOIN temp_request_dimension ON sessions_fact.requestid =

temp_request_dimension.temprequestid

JOIN local_council_dimension ON sessions_fact.localcouncil_id =

local_council_dimension.localcouncil_id

group by localcouncilname, request_week order by request_week asc

“The number of temp care worker requests filled by county for each month”

SELECT COUNTY, request_month, count(tempid) as total from local_council_dimension inner

join

sessions_fact on local_council_dimension.LOCALCOUNCIL_ID =

sessions_fact.LOCALCOUNCIL_ID

inner join time_dimension ON time_dimension.TIME_ID = sessions_fact.TIME_ID

where sessions_fact.status = 'booked'

group by COUNTY,request_month order by total desc

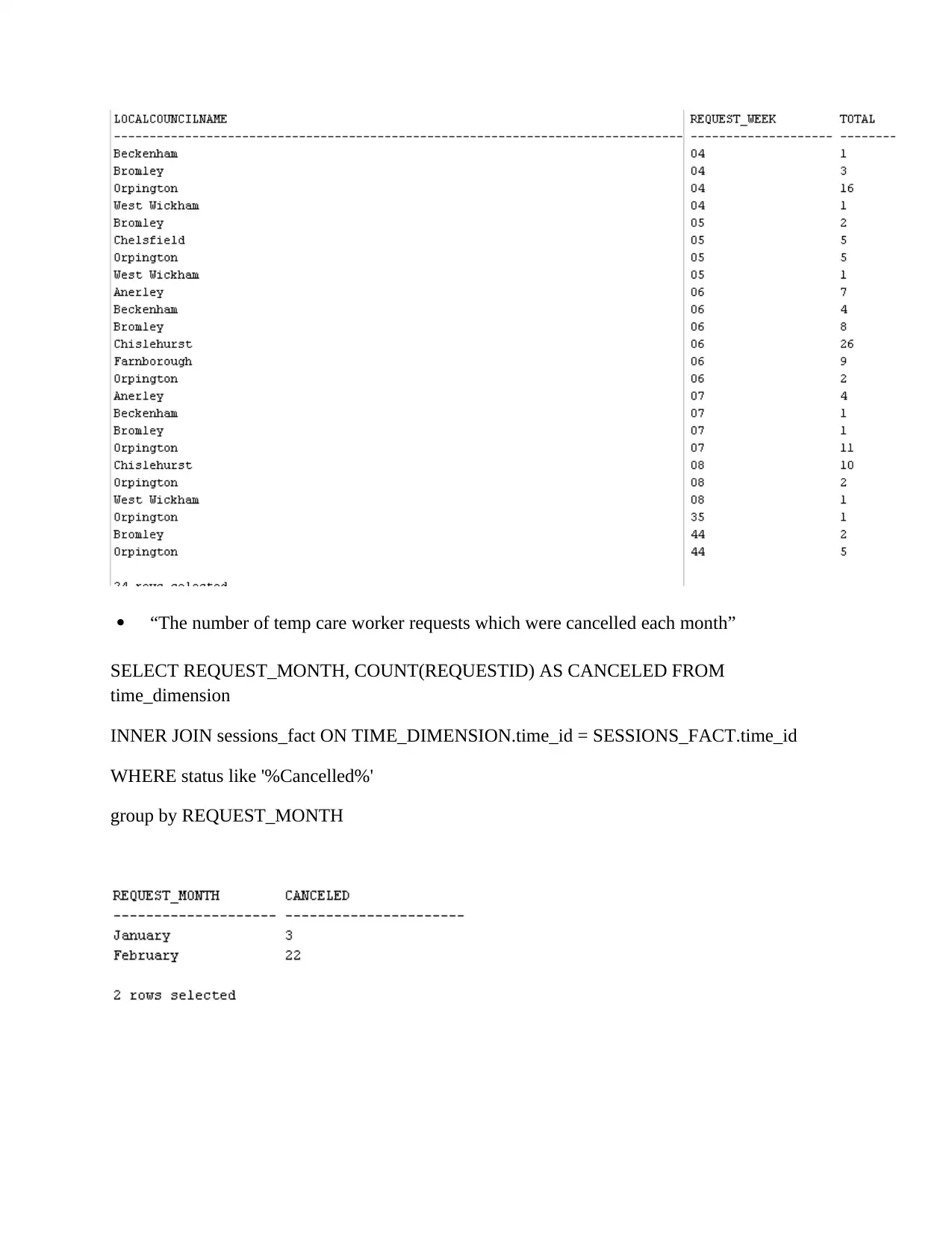

“The number temp care worker requests filled by council each week”

SELECT localcouncilname, request_week, count(tempid) as total from local_council_dimension

inner join

sessions_fact on local_council_dimension.LOCALCOUNCIL_ID =

sessions_fact.LOCALCOUNCIL_ID

inner join time_dimension ON time_dimension.TIME_ID = sessions_fact.TIME_ID

where status='booked'

group by localcouncilname,request_week order by request_week asc

sessions_fact.LOCALCOUNCIL_ID

inner join time_dimension ON time_dimension.TIME_ID = sessions_fact.TIME_ID

where sessions_fact.status = 'booked'

group by COUNTY,request_month order by total desc

“The number temp care worker requests filled by council each week”

SELECT localcouncilname, request_week, count(tempid) as total from local_council_dimension

inner join

sessions_fact on local_council_dimension.LOCALCOUNCIL_ID =

sessions_fact.LOCALCOUNCIL_ID

inner join time_dimension ON time_dimension.TIME_ID = sessions_fact.TIME_ID

where status='booked'

group by localcouncilname,request_week order by request_week asc

⊘ This is a preview!⊘

Do you want full access?

Subscribe today to unlock all pages.

Trusted by 1+ million students worldwide

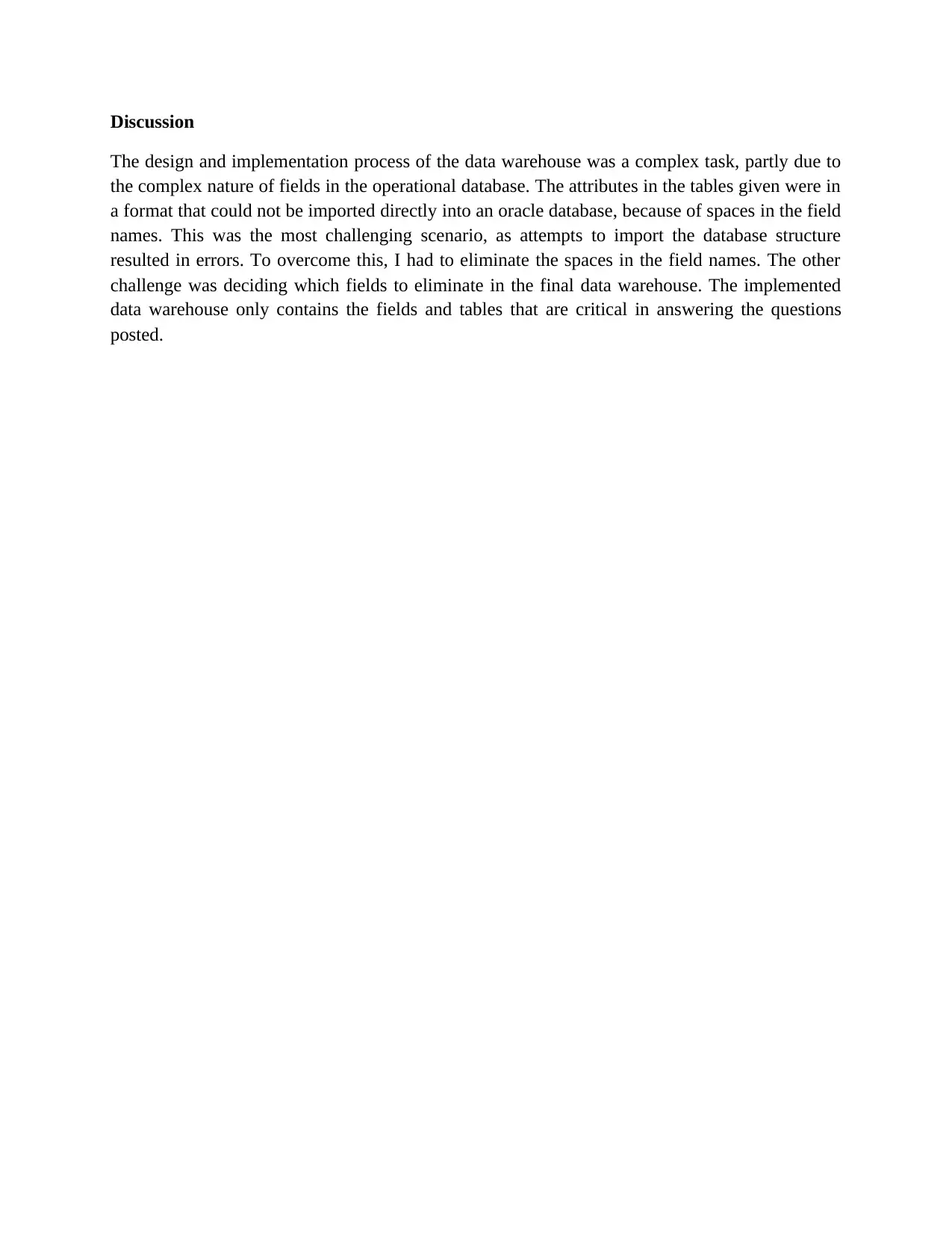

“The number of temp care worker requests which were cancelled each month”

SELECT REQUEST_MONTH, COUNT(REQUESTID) AS CANCELED FROM

time_dimension

INNER JOIN sessions_fact ON TIME_DIMENSION.time_id = SESSIONS_FACT.time_id

WHERE status like '%Cancelled%'

group by REQUEST_MONTH

SELECT REQUEST_MONTH, COUNT(REQUESTID) AS CANCELED FROM

time_dimension

INNER JOIN sessions_fact ON TIME_DIMENSION.time_id = SESSIONS_FACT.time_id

WHERE status like '%Cancelled%'

group by REQUEST_MONTH

Paraphrase This Document

Need a fresh take? Get an instant paraphrase of this document with our AI Paraphraser

Discussion

The design and implementation process of the data warehouse was a complex task, partly due to

the complex nature of fields in the operational database. The attributes in the tables given were in

a format that could not be imported directly into an oracle database, because of spaces in the field

names. This was the most challenging scenario, as attempts to import the database structure

resulted in errors. To overcome this, I had to eliminate the spaces in the field names. The other

challenge was deciding which fields to eliminate in the final data warehouse. The implemented

data warehouse only contains the fields and tables that are critical in answering the questions

posted.

The design and implementation process of the data warehouse was a complex task, partly due to

the complex nature of fields in the operational database. The attributes in the tables given were in

a format that could not be imported directly into an oracle database, because of spaces in the field

names. This was the most challenging scenario, as attempts to import the database structure

resulted in errors. To overcome this, I had to eliminate the spaces in the field names. The other

challenge was deciding which fields to eliminate in the final data warehouse. The implemented

data warehouse only contains the fields and tables that are critical in answering the questions

posted.

1 out of 11

Your All-in-One AI-Powered Toolkit for Academic Success.

+13062052269

info@desklib.com

Available 24*7 on WhatsApp / Email

![[object Object]](/_next/static/media/star-bottom.7253800d.svg)

Unlock your academic potential

Copyright © 2020–2026 A2Z Services. All Rights Reserved. Developed and managed by ZUCOL.